Integrating Ranger 2.1 with CDH 6.3.2

Introduction to Ranger

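Integrating Ranger with the CDH platform requires building Ranger from source in order to resolve compatibility issues. Ready-made tarballs can also be found online, but that approach is better suited to the community (Apache) distributions of the Hadoop components. The corresponding download address is: https://mirrors.tuna.tsinghua.edu.cn/apache/ranger/2.4.0/apache-ranger-2.4.0.tar.gz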

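At the time of writing, the latest Ranger release on GitHub is 2.4. This guide uses 2.1 instead, because newer versions come with too many pitfalls. Relevant links: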

- Official website: https://ranger.apache.org/

- Documentation wiki: https://cwiki.apache.org/confluence/display/RANGER/Running+Apache+Ranger+-++%5BFrom+source%5D+in+minutes

- Git repository: https://github.com/apache/ranger

Pre-build configuration

2.1 Build dependencies

1) JDK version: 1.8

2) Maven version: 3.6.2

- Download Maven

[root@hadoop1 packages]# wget https://archive.apache.org/dist/maven/maven-3/3.6.2/binaries/apache-maven-3.6.2-bin.tar.gz

- Install it

[root@hadoop1 packages]# tar zxvf apache-maven-3.6.2-bin.tar.gz -C /data

[root@hadoop1 data]# mv apache-maven-3.6.2/ maven

3) Install Git

[root@hadoop1 ~]# yum install git -y

2.2 Downloading the source and modifying the POM files

2.2.1 Downloading the source from GitHub

[root@hadoop1 ranger]# git clone --branch release-ranger-2.1.0 https://github.com/apache/ranger.git

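If cloning is slow, you can download the zip archive to your local machine and then upload it to the server. The rest of this guide assumes the Ranger 2.1 source tree is located at /data/packages/ranger/ranger-2.1.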

2.2.2 Modifying the POM file

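1) Add the following entry under <repositories> in the root pom.xml. Besides speeding up dependency resolution, the Cloudera repository is where the -cdh6.3.2 artifacts referenced below are published; they are not available from Maven Central.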

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

2) Set the component versions to the ones shipped with CDH:

<hadoop.version>3.0.0-cdh6.3.2</hadoop.version>

<hbase.version>2.1.0-cdh6.3.2</hbase.version>

<hive.version>2.1.1-cdh6.3.2</hive.version>

<kafka.version>2.2.1-cdh6.3.2</kafka.version>

<solr.version>7.4.0-cdh6.3.2</solr.version>

<zookeeper.version>3.4.5-cdh6.3.2</zookeeper.version>

The main changes are to Hadoop, Kafka, HBase and so on. Only change the components whose plugins you actually need.

3) Change the Elasticsearch version:

<elasticsearch.version>7.13.0</elasticsearch.version>

2.2.3 Hive version compatibility

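Apache Ranger 2.1.0 targets Hive 3.1.2, whereas CDH 6.3.2 ships Hive 2.1.1. The two are incompatible, and HiveServer2 fails to start with the Ranger 2.1.0 Hive plugin. The workaround is to use the hive-agent module from Ranger 1.2.0: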

- Download Apache Ranger 1.2.0

[root@hadoop1 ranger-1.2]# git clone --branch release-ranger-1.2.0 https://github.com/apache/ranger.git

- Delete the Hive plugin (hive-agent) from Apache Ranger 2.1.0

[root@hadoop1 ranger-1.2]# rm -rf /data/packages/ranger/ranger-2.1/hive-agent/

- Copy the Hive plugin (hive-agent) from Apache Ranger 1.2.0 into the Apache Ranger 2.1.0 directory

[root@hadoop1 ranger-1.2]# cp -r ranger/hive-agent/ /data/packages/ranger/ranger-2.1

- Replace hive-agent/pom.xml with the following POM

<?xml version="1.0" encoding="UTF-8"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<artifactId>ranger-hive-plugin</artifactId>

<name>Hive Security Plugin</name>

<description>Hive Security Plugins</description>

<packaging>jar</packaging>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties>

<parent>

<groupId>org.apache.ranger</groupId>

<artifactId>ranger</artifactId>

<version>2.1.0</version>

<relativePath>..</relativePath>

</parent>

<dependencies>

<dependency>

<groupId>commons-lang</groupId>

<artifactId>commons-lang</artifactId>

<version>${commons.lang.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-common</artifactId>

<version>2.1.1-cdh6.3.2</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-service</artifactId>

<version>2.1.1-cdh6.3.2</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>2.1.1-cdh6.3.2</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-metastore</artifactId>

<version>2.1.1-cdh6.3.2</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>2.1.1-cdh6.3.2</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>2.1.1-cdh6.3.2</version>

<classifier>standalone</classifier>

</dependency>

<dependency>

<groupId>org.apache.ranger</groupId>

<artifactId>ranger-plugins-common</artifactId>

<version>${project.version}</version>

</dependency>

<dependency>

<groupId>org.apache.ranger</groupId>

<artifactId>ranger-plugins-audit</artifactId>

<version>${project.version}</version>

</dependency>

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpcore</artifactId>

<version>${httpcomponents.httpcore.version}</version>

</dependency>

<dependency>

<groupId>org.apache.ranger</groupId>

<artifactId>ranger-plugins-common</artifactId>

<version>2.1.1-SNAPSHOT</version>

<scope>compile</scope>

</dependency>

</dependencies>

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

<build>

<testResources>

<testResource>

<directory>src/test/resources</directory>

<includes>

<include>**/*</include>

</includes>

<filtering>true</filtering>

</testResource>

</testResources>

</build>

</project>

This POM makes four main changes:

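- Replace the Hive dependency versions with 2.1.1-cdh6.3.2 (adjust as needed). Without this change the build defaults to Hive 3.1.2: although hive.version is already set to 2.1.1-cdh6.3.2 in the root Ranger pom, that setting did not take effect here.

- Add the repository definition after </dependencies>. The following configuration had already been added to the parent Ranger pom, but the artifacts were still not found without it: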

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

- Change the Ranger version in the parent section of the newly copied hive-agent/pom.xml to 2.1.0:

vim /data/packages/ranger/ranger-2.1/hive-agent/pom.xml

<parent>

<groupId>org.apache.ranger</groupId>

<artifactId>ranger</artifactId>

<version>2.1.0</version>

<relativePath>..</relativePath>

</parent>

- Add the ranger-plugins-common dependency

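Add the following dependency under <dependencies>; otherwise the code added to agents-common in section 2.3 cannot be found at compile time. Adjust the version to match the agents-common (ranger-plugins-common) module in your source tree.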

<dependency>

<groupId>org.apache.ranger</groupId>

<artifactId>ranger-plugins-common</artifactId>

<version>2.1.1-SNAPSHOT</version>

<scope>compile</scope>

</dependency>

2.2.4 Kylin plugin POM changes

1) Edit /data/packages/ranger/ranger-2.1/ranger-kylin-plugin-shim/pom.xml so that the kylin-server-base dependency reads:

<dependency>

<groupId>org.apache.kylin</groupId>

<artifactId>kylin-server-base</artifactId>

<version>${kylin.version}</version>

<scope>provided</scope>

<exclusions>

<exclusion>

<groupId>org.apache.kylin</groupId>

<artifactId>kylin-external-htrace</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.calcite</groupId>

<artifactId>calcite-core</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.calcite</groupId>

<artifactId>calcite-linq4j</artifactId>

</exclusion>

</exclusions>

</dependency>

2) Edit /data/packages/ranger/ranger-2.1/plugin-kylin/pom.xml so that the kylin-server-base dependency reads:

<dependency>

<groupId>org.apache.kylin</groupId>

<artifactId>kylin-server-base</artifactId>

<version>${kylin.version}</version>

<scope>provided</scope>

<exclusions>

<exclusion>

<groupId>org.apache.kylin</groupId>

<artifactId>kylin-external-htrace</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.calcite</groupId>

<artifactId>calcite-core</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.calcite</groupId>

<artifactId>calcite-linq4j</artifactId>

</exclusion>

</exclusions>

</dependency>

2.2.5 Modifying the distro POM

vim /data/packages/ranger/ranger-2.1/distro/pom.xml

<artifactId>maven-assembly-plugin</artifactId>

<!--<version>${assembly.plugin.version}</version>-->

<version>3.3.0</version>

2.3 Source changes for compatibility

2.3.1 Modifying the RangerDefaultAuditHandler.java class

vim /data/packages/ranger/ranger-2.1/agents-common/src/main/java/org/apache/ranger/plugin/audit/RangerDefaultAuditHandler.java

Add the following to the imports:

import org.apache.ranger.authorization.hadoop.config.RangerConfiguration;

Add the following field directly below the line public class RangerDefaultAuditHandler implements ...:

protected static final String RangerModuleName = RangerConfiguration.getInstance().get(RangerHadoopConstants.AUDITLOG_RANGER_MODULE_ACL_NAME_PROP , RangerHadoopConstants.DEFAULT_RANGER_MODULE_ACL_NAME);

2.3.2 Modifying the RangerConfiguration.java class

vim /data/packages/ranger/ranger-2.1/agents-common/src/main/java/org/apache/ranger/authorization/hadoop/config/RangerConfiguration.java

Add the following code directly below public class RangerConfiguration extends Configuration:

private static volatile RangerConfiguration config;

public static RangerConfiguration getInstance() {

RangerConfiguration result = config;

if (result == null) {

synchronized (RangerConfiguration.class) {

result = config;

if (result == null) {

config = result = new RangerConfiguration();

}

}

}

return result;

}
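The getInstance() method above is the double-checked locking idiom: the field is read once without a lock, and only if it is still null is the class lock taken and the field checked again before the instance is created. The volatile modifier is what makes this safe under the Java memory model (Java 5 and later). The minimal sketch below reproduces the idiom on a hypothetical LazyConfig class (not part of Ranger) and checks from several threads that only one instance is ever handed out.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical stand-in for RangerConfiguration: only the singleton logic is reproduced.
final class LazyConfig {
    // volatile prevents other threads from observing a partially constructed instance.
    private static volatile LazyConfig config;

    private LazyConfig() { }

    static LazyConfig getInstance() {
        LazyConfig result = config;              // single volatile read on the fast path
        if (result == null) {
            synchronized (LazyConfig.class) {
                result = config;                 // re-check while holding the lock
                if (result == null) {
                    config = result = new LazyConfig();
                }
            }
        }
        return result;
    }

    // Calls getInstance() concurrently and returns how many distinct instances were observed.
    static int distinctInstancesSeen(int threads) {
        Set<LazyConfig> seen = ConcurrentHashMap.newKeySet();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int i = 0; i < threads * 10; i++) {
            pool.submit(() -> seen.add(getInstance()));
        }
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seen.size();
    }

    public static void main(String[] args) {
        System.out.println(distinctInstancesSeen(8)); // prints 1
    }
}
```

Copying the field into the local variable result means the common path (instance already created) performs only one volatile read.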

2.3.3 Modifying the RangerElasticsearchPlugin.java class

vim /data/packages/ranger/ranger-2.1/ranger-elasticsearch-plugin-shim/src/main/java/org/apache/ranger/authorization/elasticsearch/plugin/RangerElasticsearchPlugin.java

Remove the @Override annotation above the createComponents method:

@Override

public Collection<Object> createComponents

Change it to:

public Collection<Object> createComponents
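@Override asks the compiler to verify that a method really overrides a superclass method; if the base class declares the method with a different parameter list, compilation fails with "method does not override or implement a method from a supertype". Presumably createComponents in the Elasticsearch 7.13.0 Plugin class no longer has the signature the shim was written against. The sketch below uses hypothetical BasePlugin and ShimPlugin classes to show the side effect of dropping the annotation: the method compiles, but it becomes an unrelated overload, so a caller using the base-class signature never reaches it.

```java
import java.util.Collection;
import java.util.Collections;

// Hypothetical base class whose createComponents takes an extra parameter,
// standing in for a plugin API whose signature changed between versions.
abstract class BasePlugin {
    Collection<Object> createComponents(String client, String clusterService, String extra) {
        return Collections.emptyList(); // default: no components
    }
}

// Hypothetical shim written against the older two-argument signature.
final class ShimPlugin extends BasePlugin {
    // Adding @Override here would be a compile error: BasePlugin has no
    // two-argument createComponents. Without it, this is simply a new overload.
    public Collection<Object> createComponents(String client, String clusterService) {
        return Collections.singletonList("ranger-component");
    }

    public static void main(String[] args) {
        BasePlugin plugin = new ShimPlugin();
        // A caller using the base-class signature gets the default, not the shim's components.
        System.out.println(plugin.createComponents("client", "cluster", "extra").size()); // prints 0
        System.out.println(new ShimPlugin().createComponents("client", "cluster").size()); // prints 1
    }
}
```

This only matters if you deploy the Elasticsearch plugin; if you do, check whether its components are actually created at runtime.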

2.3.5 Modifying the ServiceKafkaClient.java class

vim /data/packages/ranger/ranger-2.1/plugin-kafka/src/main/java/org/apache/ranger/services/kafka/client/ServiceKafkaClient.java

On line 38, delete:

import scala.Option;

On line 87, change:

ZooKeeperClient zookeeperClient = new ZooKeeperClient(zookeeperConnect, sessionTimeout, connectionTimeout,

1, Time.SYSTEM, "kafka.server", "SessionExpireListener", Option.empty());

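to the following, since the ZooKeeperClient constructor in Kafka 2.2.1 (the version shipped with CDH 6.3.2) does not take the trailing scala.Option argument: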

ZooKeeperClient zookeeperClient = new ZooKeeperClient(zookeeperConnect, sessionTimeout, connectionTimeout,

1, Time.SYSTEM, "kafka.server", "SessionExpireListener");

2.3.6 Implementing the getHivePolicyProvider method

vim /data/packages/ranger/ranger-2.1/hive-agent/src/main/java/org/apache/ranger/authorization/hive/authorizer/RangerHiveAuthorizer.java

Add the following method after the public boolean needTransform() method:

@Override

public HivePolicyProvider getHivePolicyProvider() throws HiveAuthzPluginException {

if (hivePlugin == null) {

throw new HiveAuthzPluginException();

}

RangerHivePolicyProvider policyProvider = new RangerHivePolicyProvider(hivePlugin, this);

return policyProvider;

}

2.4 CDH platform adaptation: configuration files

Problem:

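When CDH restarts a component service, it launches the service in its own process and dynamically generates a runtime configuration directory and configuration files for it. As a result, Ranger plugin configuration files deployed into the CDH installation directory are never read by the service.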

Solution:

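Add a copyConfigFile method to /data/packages/ranger/ranger-2.1/agents-common/src/main/java/org/apache/ranger/authorization/hadoop/config/RangerPluginConfig.java. When the plugin loads its configuration, this method copies the ranger-*.xml files found under the component's CDH installation directory into the process's runtime configuration directory (CONF_DIR):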

1) Replace all the import statements with the following:

import org.apache.commons.collections.CollectionUtils;

import org.apache.commons.io.FileUtils;

import org.apache.commons.io.filefilter.IOFileFilter;

import org.apache.commons.io.filefilter.RegexFileFilter;

import org.apache.commons.io.filefilter.TrueFileFilter;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.log4j.Logger;

import org.apache.ranger.authorization.utils.StringUtil;

import org.apache.ranger.plugin.policyengine.RangerPolicyEngineOptions;

import java.io.File;

import java.io.IOException;

import java.net.URL;

import java.util.*;

2) Add the following code below the private Set<String> superGroups field:

private void copyConfigFile(String serviceType) {

// Adapts Ranger plugins to CDH-managed components; non-CDH components (here: presto) return immediately

if (serviceType.equals("presto")) {

return;

}

// Log all environment variables (shows which CDH_*_HOME and CONF_DIR values this process sees)

for (Map.Entry<String, String> entry : System.getenv().entrySet()) {

LOG.info("env key: " + entry.getKey() + ", value: " + entry.getValue());

}

// Log all system properties

for (Map.Entry<Object, Object> entry : System.getProperties().entrySet()) {

LOG.info("system key: " + entry.getKey() + ", value: " + entry.getValue());

}

// CDH_<SERVICE>_HOME points to the component installation directory; HDFS uses CDH_HADOOP_HOME

String serviceHomeVar = "CDH_" + serviceType.toUpperCase() + "_HOME";

if ("CDH_HDFS_HOME".equals(serviceHomeVar)) {

serviceHomeVar = "CDH_HADOOP_HOME";

}

String serviceHome = System.getenv(serviceHomeVar);

// CONF_DIR is the runtime configuration directory that CDH generates for this process

String userDir = System.getenv("CONF_DIR");

LOG.info("-----Service Home: " + serviceHome);

LOG.info("-----User dir: " + userDir);

// new File(null) throws a NullPointerException, so skip the copy if either variable is missing

if (serviceHome == null || userDir == null) {

LOG.warn("Environment variable " + serviceHomeVar + " or CONF_DIR is not set; skipping ranger config copy.");

return;

}

File serviceHomeDir = new File(serviceHome);

File destDir = new File(userDir);

LOG.info("-----Dest dir: " + destDir);

// Recursively find ranger-*.xml files under the component installation directory

IOFileFilter regexFileFilter = new RegexFileFilter("ranger-.+xml");

Collection<File> configFileList = FileUtils.listFiles(serviceHomeDir, regexFileFilter, TrueFileFilter.INSTANCE);

boolean flag = true;

for (File rangerConfigFile : configFileList) {

try {

// Hive also needs xasecure-audit.xml from the same directory; copy it once, and only if it exists,
// so that a missing file does not prevent the ranger-*.xml files from being copied

if (serviceType.toUpperCase().equals("HIVE") && flag) {

File file = new File(rangerConfigFile.getParentFile(), "xasecure-audit.xml");

if (file.exists()) {

FileUtils.copyFileToDirectory(file, destDir);

flag = false;

LOG.info("-----Source dir: " + file.getPath());

}

}

FileUtils.copyFileToDirectory(rangerConfigFile, destDir);

} catch (IOException e) {

LOG.error("Copy ranger config file failed.", e);

}

}

}
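The lookup part of copyConfigFile can be tried outside Ranger. The following is a minimal JDK-only sketch: the class CdhConfigLocator and its methods are hypothetical, and java.nio.file.Files.walk stands in for commons-io FileUtils.listFiles. It derives the CDH_<SERVICE>_HOME variable name (including the HDFS to CDH_HADOOP_HOME special case), returns null rather than passing an unset variable to a File or Path constructor, and recursively collects files whose names fully match ranger-.+xml. That every CDH component exports such a variable is an assumption carried over from the method above; the environment logging at the top of copyConfigFile is the way to confirm it on your cluster.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.regex.Pattern;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper mirroring the lookup part of copyConfigFile using only the JDK (Java 8+).
final class CdhConfigLocator {
    // Same regex as new RegexFileFilter("ranger-.+xml"); it must match the whole file name.
    private static final Pattern RANGER_XML = Pattern.compile("ranger-.+xml");

    // "hive" -> "CDH_HIVE_HOME"; "hdfs" is special-cased to "CDH_HADOOP_HOME".
    static String homeEnvName(String serviceType) {
        String name = "CDH_" + serviceType.toUpperCase(Locale.ROOT) + "_HOME";
        return "CDH_HDFS_HOME".equals(name) ? "CDH_HADOOP_HOME" : name;
    }

    // Returns null when the variable is unset.
    static Path serviceHome(String serviceType, Map<String, String> env) {
        String value = env.get(homeEnvName(serviceType));
        return value == null ? null : Paths.get(value);
    }

    static boolean isRangerConfig(String fileName) {
        return RANGER_XML.matcher(fileName).matches();
    }

    // Recursive search for regular files, comparable to FileUtils.listFiles(dir, filter, TrueFileFilter.INSTANCE).
    static List<Path> findRangerConfigs(Path home) {
        try (Stream<Path> paths = Files.walk(home)) {
            return paths.filter(Files::isRegularFile)
                        .filter(p -> isRangerConfig(p.getFileName().toString()))
                        .sorted()
                        .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path home = serviceHome("hive", System.getenv());
        if (home == null) {
            System.out.println(homeEnvName("hive") + " is not set");
            return;
        }
        findRangerConfigs(home).forEach(System.out::println);
    }
}
```

Passing the environment in as a Map keeps the name resolution testable without touching the real process environment.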

3) Call copyConfigFile on the first line of the addResourcesForServiceType method:

private void addResourcesForServiceType(String serviceType) {

copyConfigFile(serviceType);

String auditCfg = "ranger-" + serviceType + "-audit.xml";

String securityCfg = "ranger-" + serviceType + "-security.xml";

String sslCfg = "ranger-policymgr-ssl.xml";

if (!addResourceIfReadable(auditCfg)) {

addAuditResource(serviceType);

}

if (!addResourceIfReadable(securityCfg)) {

addSecurityResource(serviceType);

}

if (!addResourceIfReadable(sslCfg)) {

addSslConfigResource(serviceType);

}

}
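Because copyConfigFile runs first, the files it copied into CONF_DIR can be picked up by the addResourceIfReadable calls that follow, assuming CONF_DIR is on the service's classpath. The sketch below lists the three file names addResourcesForServiceType looks for and probes whether each one is visible through the class loader. RangerResourceNames is hypothetical, and treating "readable" as "found by the class loader" is a simplification, not Ranger's actual addResourceIfReadable implementation.

```java
import java.net.URL;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: the file names addResourcesForServiceType tries, plus a classpath probe.
final class RangerResourceNames {
    static List<String> namesFor(String serviceType) {
        return Arrays.asList(
            "ranger-" + serviceType + "-audit.xml",
            "ranger-" + serviceType + "-security.xml",
            "ranger-policymgr-ssl.xml");
    }

    // Simplification: a resource counts as readable if the class loader can locate it.
    static boolean onClasspath(String name) {
        ClassLoader cl = Thread.currentThread().getContextClassLoader();
        if (cl == null) {
            cl = RangerResourceNames.class.getClassLoader();
        }
        URL url = cl.getResource(name);
        return url != null;
    }

    public static void main(String[] args) {
        String serviceType = args.length > 0 ? args[0] : "hive";
        for (String name : namesFor(serviceType)) {
            System.out.println(name + " -> " + (onClasspath(name) ? "found" : "missing"));
        }
    }
}
```

Running it with the service's classpath (for example with the CDH process directory added) is a quick way to see which of the three files the plugin would find.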

2.5 CDH platform adaptation: enable-agent.sh configuration

Problem:

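- After the HDFS and YARN plugins are installed, their jars are deployed to the share/hadoop/hdfs/lib subdirectory of the component installation directory. HDFS and YARN do not load these jars at runtime, so startup fails with ClassNotFoundException: Class org.apache.ranger.authorization.yarn.authorizer.RangerYarnAuthorizer not found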

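- After the Kafka plugin is installed, at startup it loads the Ranger plugin configuration files from the directory that contains the plugin jars. If they are not found there, it reports the error addResourceIfReadable(ranger-kafka-audit.xml): couldn't find resource file location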

Solution:

Modify the enable-agent.sh script in the agents-common module:

vim /data/packages/ranger/ranger-2.1/agents-common/scripts/enable-agent.sh

Change

HCOMPONENT_LIB_DIR=${HCOMPONENT_INSTALL_DIR}/share/hadoop/hdfs/lib

to:

HCOMPONENT_LIB_DIR=${HCOMPONENT_INSTALL_DIR}

Change

elif [ "${HCOMPONENT_NAME}" = "kafka" ]; then

HCOMPONENT_CONF_DIR=${HCOMPONENT_INSTALL_DIR}/config

to:

elif [ "${HCOMPONENT_NAME}" = "kafka" ]; then

HCOMPONENT_CONF_DIR=${PROJ_LIB_DIR}/ranger-kafka-plugin-impl

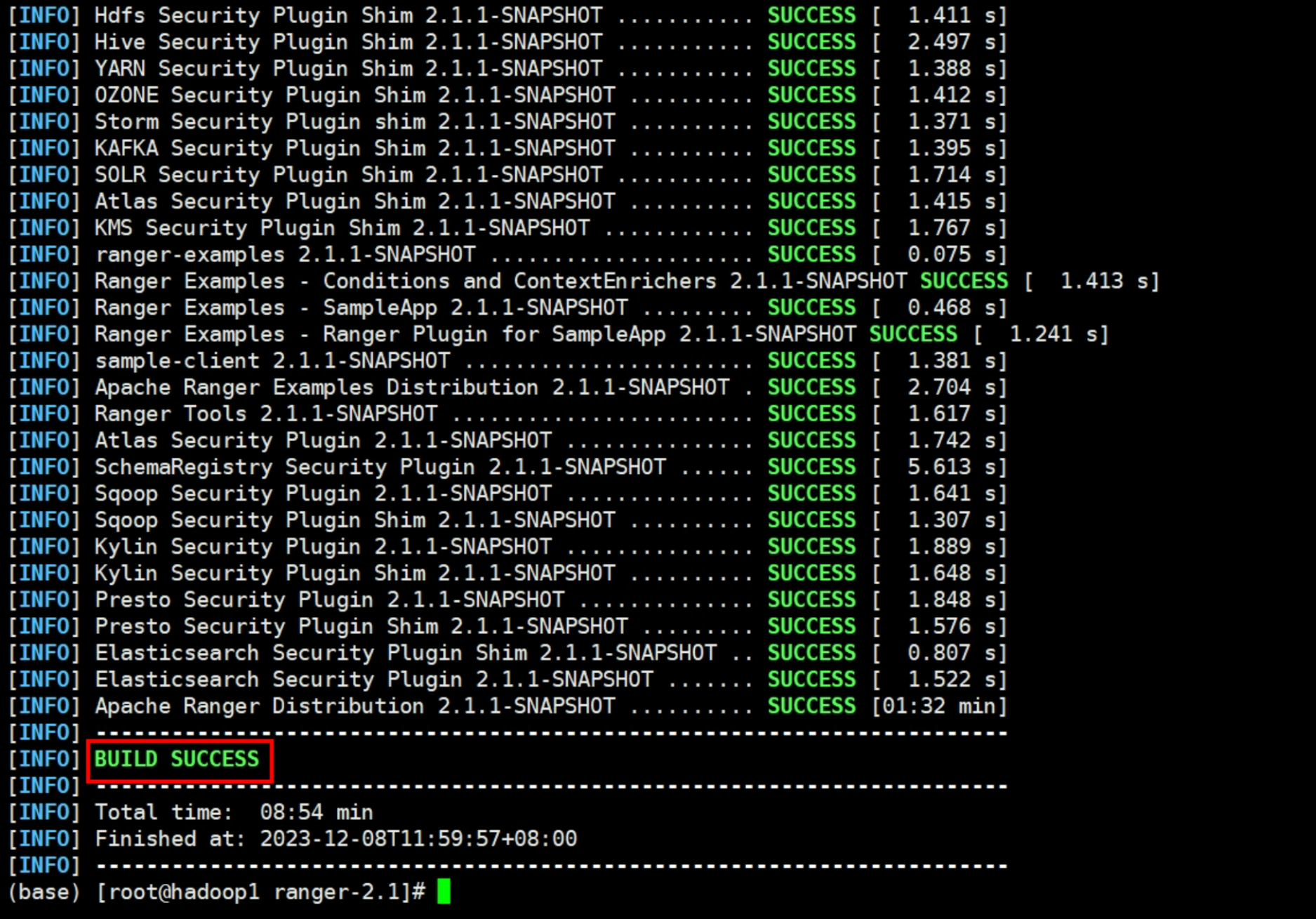

Building

[root@hadoop1 ranger-2.1]# /data/maven/bin/mvn clean package install -Dmaven.test.skip=true -X

or

[root@hadoop1 ranger-2.1]# /data/maven/bin/mvn clean compile package assembly:assembly install -DskipTests -Drat.skip=true

or

[root@hadoop1 ranger-2.1]# /data/maven/bin/mvn clean package install -Dpmd.skip=true -Dcheckstyle.skip=true -Dmaven.test.skip=true

Notes:

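The first command skips both compiling and running the tests (-Dmaven.test.skip=true); -X additionally turns on Maven debug output.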

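The second command compiles the tests but does not run them (-DskipTests), and -Drat.skip=true skips the Apache RAT license check.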

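The third command mainly suppresses code-style checks (-Dpmd.skip=true -Dcheckstyle.skip=true). If the modified source code or its comments do not follow the project's style rules, the build may fail with You have 1 PMD violation; this command works around that.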

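The last command is the one that finally built successfully here. The build ran into many pitfalls and took about two days to sort out; it is considerably more work than building Ranger for the community distributions.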

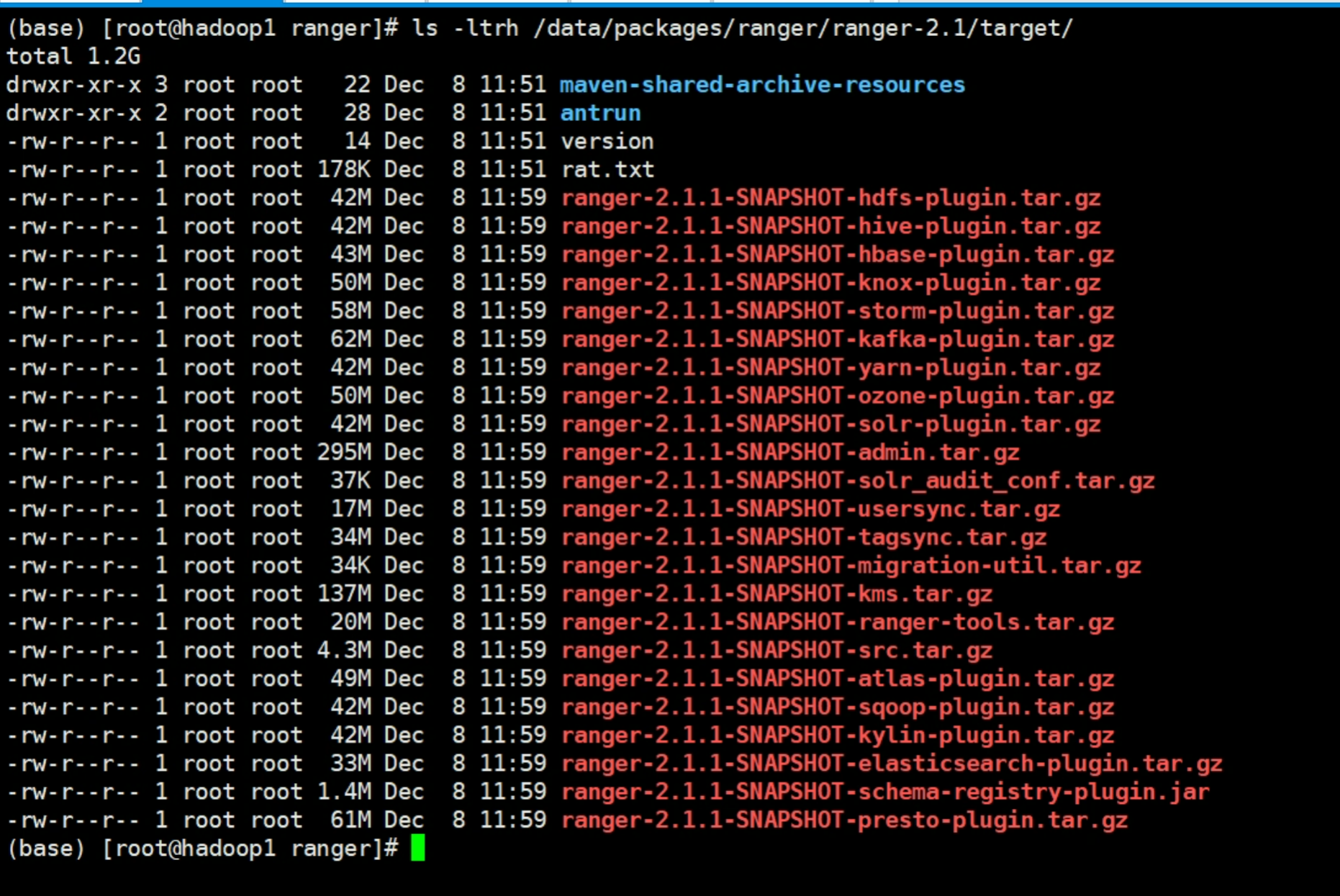

Inspecting the build artifacts

By default, the packages are written to the target directory of the project: