Notes on an error when importing data into HBase through Phoenix

Solution 1

The error is as follows:

2019-08-26 10:03:56,819 [hconnection-0x7b9e01aa-shared--pool11069-t114734] WARN org.apache.hadoop.hbase.ipc.CoprocessorRpcChannel - Call failed on IOException
org.apache.hadoop.hbase.exceptions.UnknownProtocolException: org.apache.hadoop.hbase.exceptions.UnknownProtocolException: No registered coprocessor service found for name ServerCachingService in region TABLE_RESULT,\x012019-05-12\x00037104581382,1566564239929.dcd3d414bc567586049d3c71aa74512d.
    at org.apache.hadoop.hbase.regionserver.HRegion.execService(HRegion.java:8062)
    at org.apache.hadoop.hbase.regionserver.RSRpcServices.execServiceOnRegion(RSRpcServices.java:2068)
    at org.apache.hadoop.hbase.regionserver.RSRpcServices.execService(RSRpcServices.java:2050)
    at org.apache.hadoop.hbase.protobuf.generated.ClientProtos$ClientService$2.callBlockingMethod(ClientProtos.java:34954)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2339)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:123)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:188)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:168)
    at sun.reflect.GeneratedConstructorAccessor81.newInstance(Unknown Source)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)
    at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:95)
    at org.apache.hadoop.hbase.protobuf.ProtobufUtil.getRemoteException(ProtobufUtil.java:332)
    at org.apache.hadoop.hbase.protobuf.ProtobufUtil.execService(ProtobufUtil.java:1694)
    at org.apache.hadoop.hbase.ipc.RegionCoprocessorRpcChannel$1.call(RegionCoprocessorRpcChannel.java:100)
    at org.apache.hadoop.hbase.ipc.RegionCoprocessorRpcChannel$1.call(RegionCoprocessorRpcChannel.java:90)
    at org.apache.hadoop.hbase.client.RpcRetryingCaller.callWithRetries(RpcRetryingCaller.java:137)
    at org.apache.hadoop.hbase.ipc.RegionCoprocessorRpcChannel.callExecService(RegionCoprocessorRpcChannel.java:103)
    at org.apache.hadoop.hbase.ipc.CoprocessorRpcChannel.callMethod(CoprocessorRpcChannel.java:56)
    at org.apache.phoenix.coprocessor.generated.ServerCachingProtos$ServerCachingService$Stub.addServerCache(ServerCachingProtos.java:8394)
    at org.apache.phoenix.cache.ServerCacheClient$1$1.call(ServerCacheClient.java:224)
    at org.apache.phoenix.cache.ServerCacheClient$1$1.call(ServerCacheClient.java:193)
    at org.apache.hadoop.hbase.client.HTable$15.call(HTable.java:1763)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    at java.lang.Thread.run(Thread.java:748)
Caused by: org.apache.hadoop.hbase.ipc.RemoteWithExtrasException(org.apache.hadoop.hbase.exceptions.UnknownProtocolException): org.apache.hadoop.hbase.exceptions.UnknownProtocolException: No registered coprocessor service found for name ServerCachingService in region TABLE_RESULT,\x012019-05-12\x00037104581382,1566564239929.dcd3d414bc567586049d3c71aa74512d.
    at org.apache.hadoop.hbase.regionserver.HRegion.execService(HRegion.java:8062)
    at org.apache.hadoop.hbase.regionserver.RSRpcServices.execServiceOnRegion(RSRpcServices.java:2068)
    at org.apache.hadoop.hbase.regionserver.RSRpcServices.execService(RSRpcServices.java:2050)
    at org.apache.hadoop.hbase.protobuf.generated.ClientProtos$ClientService$2.callBlockingMethod(ClientProtos.java:34954)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2339)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:123)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:188)

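Error analysis: the message says that no coprocessor service named ServerCachingService is registered on the region, so this is a coprocessor problem. Most likely the region or the table does not have the Phoenix coprocessors attached.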
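When the log is long, it can help to pull out just the service name and table from each occurrence of this message. The following is a minimal sketch in Python; the function name `missing_services` and the regular expression are my own and only assume the message wording shown in the log above, where the region name starts with the table name followed by a comma.

```python
import re

# Matches the HBase message from the log above. A region name has the form
# "<table>,<start key>,<timestamp>.<encoded name>.", so the table name is
# everything before the first comma.
PATTERN = re.compile(
    r"No registered coprocessor service found for name (?P<service>\S+) "
    r"in region\s+(?P<table>[^,\s]+),"
)


def missing_services(log_text):
    """Return the distinct (service, table) pairs reported in log_text, sorted."""
    pairs = {(m.group("service"), m.group("table")) for m in PATTERN.finditer(log_text)}
    return sorted(pairs)


sample = (
    "UnknownProtocolException: No registered coprocessor service found for name "
    "ServerCachingService in region "
    "TABLE_RESULT,\\x012019-05-12\\x00037104581382,1566564239929.dcd3d414bc567586049d3c71aa74512d."
)
print(missing_services(sample))
```

Each table in the result is a candidate for the check in the web UI described next.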

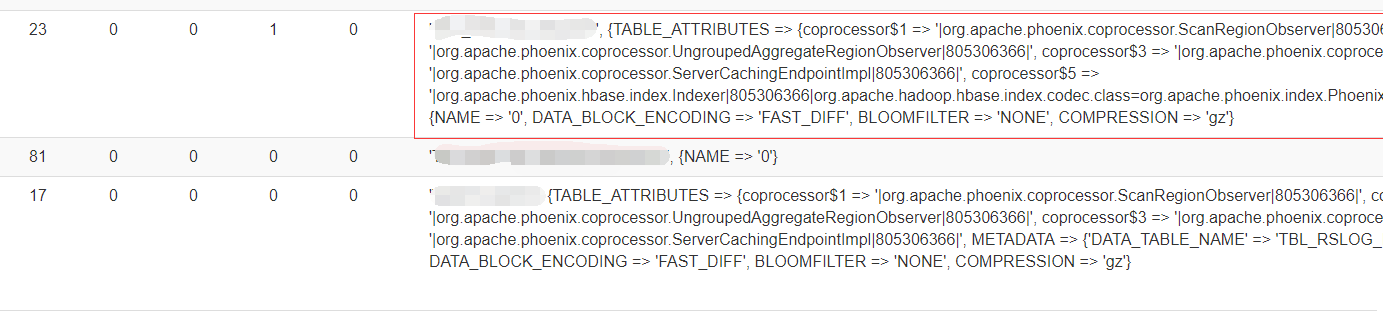

web界面查看表信息如下

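The web UI confirms that this table carries no coprocessor information. A table created through Phoenix normally comes with the Phoenix coprocessors attached, as in the part circled by the red box in the screenshot. The table just below the red box has none, so inserts through Phoenix fail with the coprocessor error. I suspect this has something to do with secondary indexes.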

Fix

Modify the table attributes

# hbase shell

##### Add the missing coprocessor attributes

hbase(main):005:0> alter 'TABLE_RESULT', { METHOD => 'table_att','coprocessor$1' => '|org.apache.phoenix.coprocessor.ScanRegionObserver|805306366|', 'coprocessor$2' => '|org.apache.phoenix.coprocessor.UngroupedAggregateRegionObserver|805306366|', 'coprocessor$3' => '|org.apache.phoenix.coprocessor.GroupedAggregateRegionObserver|805306366|', 'coprocessor$4' => '|org.apache.phoenix.coprocessor.ServerCachingEndpointImpl|805306366|', 'coprocessor$5' => '|org.apache.phoenix.hbase.index.Indexer|805306366|org.apache.hadoop.hbase.index.codec.class=org.apache.phoenix.index.PhoenixIndexCodec,index.builder=org.apache.phoenix.index.PhoenixIndexBuilder'}

After that, importing data through Phoenix works normally.
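Each `coprocessor$N` value in the `alter` command uses HBase's table attribute format `jar path|class name|priority|arguments`, with the jar path left empty when the class is already on the region server classpath. Typing the full statement by hand is error-prone, so here is a small Python sketch that assembles it. The helper names `coprocessor_spec` and `alter_statement` are hypothetical, and the priority `805306366` is simply the value used in the command above. The class list below comes from this post; it may differ for other Phoenix versions, so copying the attributes from the `describe` output of a healthy Phoenix table is probably the safer source.

```python
def coprocessor_spec(class_name, priority=805306366, jar_path="", args=None):
    """Format one attribute value as 'jar path|class name|priority|arguments'."""
    arg_text = ",".join(f"{key}={value}" for key, value in (args or {}).items())
    return f"{jar_path}|{class_name}|{priority}|{arg_text}"


def alter_statement(table, specs):
    """Build an hbase shell 'alter' statement that sets coprocessor$1..$N."""
    attrs = ", ".join(
        f"'coprocessor${i}' => '{spec}'" for i, spec in enumerate(specs, start=1)
    )
    return f"alter '{table}', {{ METHOD => 'table_att', {attrs} }}"


# Classes taken from the alter command in this post.
specs = [
    coprocessor_spec("org.apache.phoenix.coprocessor.ScanRegionObserver"),
    coprocessor_spec("org.apache.phoenix.coprocessor.UngroupedAggregateRegionObserver"),
    coprocessor_spec("org.apache.phoenix.coprocessor.GroupedAggregateRegionObserver"),
    coprocessor_spec("org.apache.phoenix.coprocessor.ServerCachingEndpointImpl"),
    coprocessor_spec(
        "org.apache.phoenix.hbase.index.Indexer",
        args={
            "org.apache.hadoop.hbase.index.codec.class": "org.apache.phoenix.index.PhoenixIndexCodec",
            "index.builder": "org.apache.phoenix.index.PhoenixIndexBuilder",
        },
    ),
]
print(alter_statement("TABLE_RESULT", specs))
```

The printed statement can be pasted into the hbase shell. The values are wrapped in single quotes on purpose, so that the shell does not try to interpolate `$1`, `$2` and so on.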

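Solution 2 (may be problematic)

I found some coprocessor-related fixes on the official site and on other blogs. They are listed here for reference only; they did not help in my case.

You can configure globally which coprocessors are loaded when HBase starts by adding properties like the following to the hbase-site.xml configuration file.

The following is borrowed from someone else's blog:

Note: some of the property values below are classes that those authors implemented themselves in Java. If you add this configuration to your HBase configuration file as-is, HBase reports at startup that it cannot find the coprocessor classes, and startup fails.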

<property>
  <name>hbase.coprocessor.master.classes</name>
  <value>coprocessor.MasterObserverExample</value>
</property>
<property>
  <name>hbase.coprocessor.regionserver.classes</name>
  <value>coprocessor.RegionServerObserverExample</value>
</property>
<property>
  <name>hbase.coprocessor.region.classes</name>
  <value>coprocessor.system.RegionObserverExample, coprocessor.AnotherCoprocessor</value>
</property>
<property>
  <name>hbase.coprocessor.user.region.classes</name>
  <value>coprocessor.user.RegionObserverExample</value>
</property>
<property>
  <name>hbase.coprocessor.wal.classes</name>
  <value>coprocessor.WALObserverExample, bar.foo.MyWALObserver</value>
</property>
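Since a class name that HBase cannot find is enough to break startup, it may be worth listing exactly which classes the configuration names before restarting, so each one can be checked against the jars on the classpath. A minimal sketch in Python follows; the function name `coprocessor_classes` is my own, and it assumes the usual hbase-site.xml layout of `<property>` elements with `<name>` and `<value>` children under one root element.

```python
import xml.etree.ElementTree as ET


def coprocessor_classes(site_xml_text):
    """Map each hbase.coprocessor.*.classes property to its list of class names."""
    root = ET.fromstring(site_xml_text)
    result = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name.startswith("hbase.coprocessor.") and name.endswith(".classes"):
            value = prop.findtext("value") or ""
            # Values are comma-separated; drop surrounding whitespace and empties.
            result[name] = [c.strip() for c in value.split(",") if c.strip()]
    return result


sample = """<configuration>
  <property>
    <name>hbase.coprocessor.region.classes</name>
    <value>coprocessor.system.RegionObserverExample, coprocessor.AnotherCoprocessor</value>
  </property>
  <property>
    <name>hbase.coprocessor.abortonerror</name>
    <value>false</value>
  </property>
</configuration>"""

# The abortonerror property is not a class list, so it is skipped.
for name, classes in coprocessor_classes(sample).items():
    print(name, classes)
```

To run it against a real file, read the file contents and pass the text to `coprocessor_classes`.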

Solution 3

Set the following parameter in the hbase-site.xml file:

<property>
  <name>hbase.coprocessor.abortonerror</name>
  <value>false</value>
</property>

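Then start the region server. With this setting, a region server no longer aborts when a coprocessor fails to load or throws an error; the error is ignored, which keeps the cluster available.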
