Training a Logical AND Gate and a Logical OR Gate

Assignment Requirements

Assignment Body

Code

import numpy as np

import matplotlib.pyplot as plt

# x1=0,0,1,1

# x2=0,1,0,1

# y =0,0,0,1

def ReadData_And():

X = np.array([0,0,1,1,0,1,0,1]).reshape(2,4)

Y = np.array([0,0,0,1]).reshape(1,4)

return X,Y

# x1=0,0,1,1

# x2=0,1,0,1

# y =0,1,1,1

def ReadData_Or():

X = np.array([0,0,1,1,0,1,0,1]).reshape(2,4)

Y = np.array([0,1,1,1]).reshape(1,4)

return X,Y

def Sigmoid(x):

s = 1/(1+np.exp(-x))

return s

def ForwardCalculation(w,b,x):

z = np.dot(w , x) + b

a = Sigmoid(z)

return a

def BackPropagation(x,y,z):

m = x.shape[1]

dZ = z - y

dB = dZ.sum(axis=1 , keepdims = True)/m

dW = np.dot(dZ , x.T)/m

return dW, dB

def UpdateWeights(w, b, dW, dB, eta):

w = w - eta*dW

b = b - eta*dB

return w,b

def CheckLoss(w, b, X, Y):

m = X.shape[1]

a = ForwardCalculation(w, b, X)

# Cross Entropy

n = np.sum(-(np.multiply(Y, np.log(a)) + np.multiply(1 - Y, np.log(1 - a))))

loss = n / m

return loss

# 初始化参数

def InitialWeights(num_input, num_output):

W = np.zeros((num_output, num_input))

B = np.zeros((num_output, 1))

return W, B

def ShowResult(W,B,X,Y):

w = -W[0,0]/W[0,1]

b = -B[0,0]/W[0,1]

x = np.array([0,1])

y = w * x + b

plt.plot(x,y)

# 画出原始样本值

for i in range(X.shape[1]):

if Y[0,i] == 0:

plt.scatter(X[0,i],X[1,i],marker="o",c='b',s=64)

else:

plt.scatter(X[0,i],X[1,i],marker="^",c='r',s=64)

plt.axis([-0.1,1.1,-0.1,1.1])

plt.show()

if __name__ == '__main__':

# initialize_data

eta = 0.5

eps = 2e-3

max_epoch = 10000

# calculate loss to decide the stop condition

loss = 5

# create mock up data

X, Y = ReadData_Or()

#w, b = np.random.random(),np.random.random()

w, b = InitialWeights(X.shape[0],Y.shape[0])

# count of samples

num_features = X.shape[0]

num_example = X.shape[1]

for epoch in range(max_epoch):

for i in range(num_example):

# get x and y value for one sample

x = X[:,i].reshape(num_features,1)

y = Y[:,i].reshape(1,1)

# get z from x,y

z = ForwardCalculation(w, b, x)

# calculate gradient of w and b

dW, dB = BackPropagation(x, y, z)

# update w,b

w, b = UpdateWeights(w, b, dW, dB, eta)

# calculate loss for this batch

loss = CheckLoss(w,b,X,Y)

if loss < eps:

break

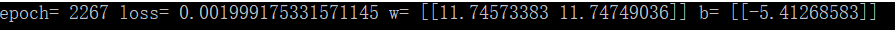

print("epoch=",epoch,"loss=",loss,"w=",w,"b=",b)

ShowResult(w,b,X,Y)

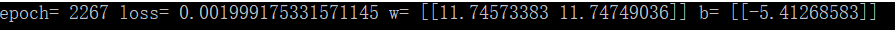

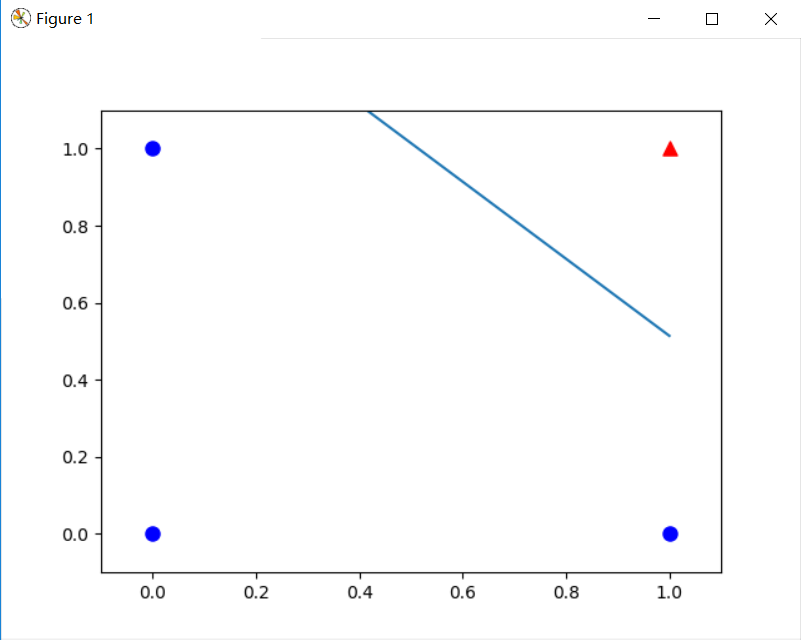
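`BackPropagation` sets `dZ` to the neuron's sigmoid output minus the label without showing where that comes from. For one sample with $z = wx + b$, $a = \sigma(z)$ and the cross-entropy loss that `CheckLoss` computes, the chain rule gives the following short derivation (same symbols as the code):

$$
\begin{aligned}
J &= -\left[\,y \ln a + (1-y)\ln(1-a)\,\right] \\
\frac{\partial J}{\partial a} &= -\frac{y}{a} + \frac{1-y}{1-a} = \frac{a-y}{a(1-a)} \\
\frac{\partial a}{\partial z} &= \sigma(z)\bigl(1-\sigma(z)\bigr) = a(1-a) \\
\frac{\partial J}{\partial z} &= \frac{\partial J}{\partial a}\cdot\frac{\partial a}{\partial z} = a - y \\
\frac{\partial J}{\partial w} &= (a-y)\,x^{T}, \qquad \frac{\partial J}{\partial b} = a - y
\end{aligned}
$$

Averaging over the $m$ columns of $X$ gives $dW = \frac{1}{m}(A-Y)X^{T}$ and $dB = \frac{1}{m}\sum_{i}(a_i - y_i)$, which is what `BackPropagation` computes for `dW` and `dB`. In the training loop $m = 1$, because one sample is passed in at a time.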

Output
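The straight line that `ShowResult` draws in the output plots is the neuron's decision boundary. The sigmoid output crosses 0.5 exactly where $z = 0$, so with $w_1$ = `W[0,0]`, $w_2$ = `W[0,1]` and $b$ = `B[0,0]` (assuming $w_2 \neq 0$):

$$
\sigma(z) = 0.5 \iff w_1 x_1 + w_2 x_2 + b = 0 \iff x_2 = -\frac{w_1}{w_2}\,x_1 - \frac{b}{w_2}
$$

The slope $-w_1/w_2$ and intercept $-b/w_2$ are the local `w` and `b` computed inside `ShowResult`; samples with $z > 0$ (output above 0.5) lie on one side of this line and are classified as 1.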

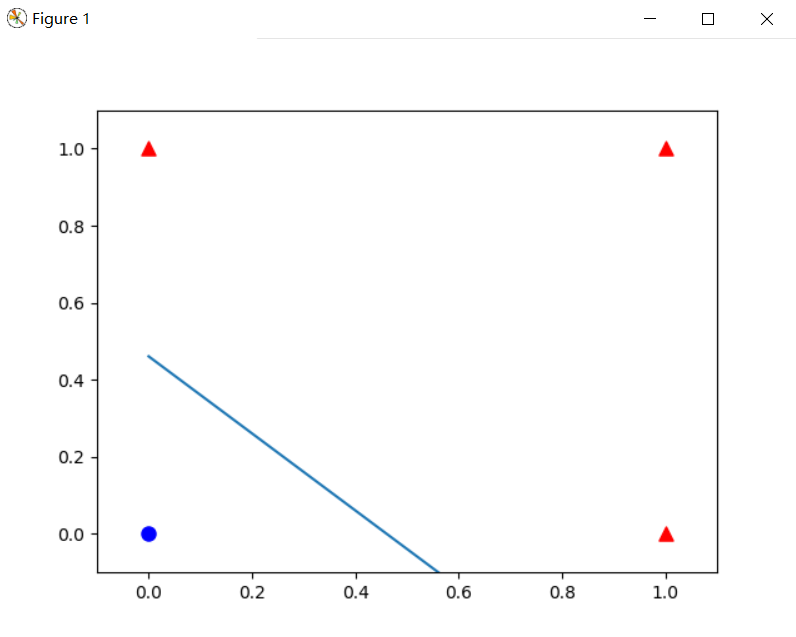

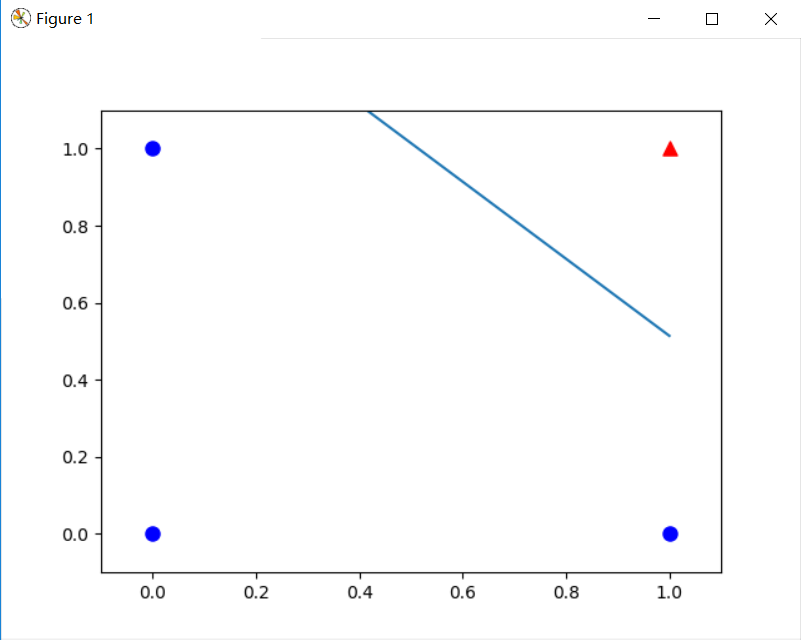

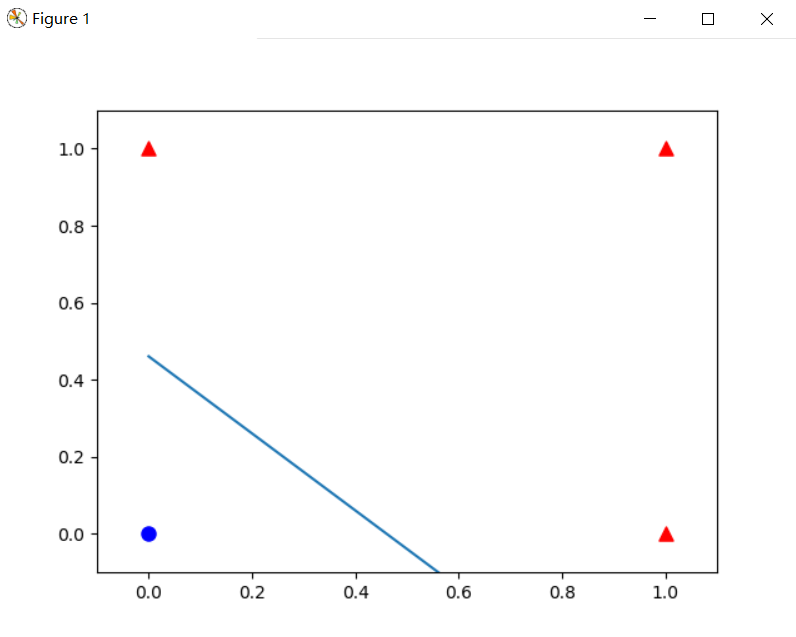

Logical AND gate

Logical OR gate
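The script above trains on one sample at a time (stochastic gradient descent) and has to be edited to switch between `ReadData_Or()` and `ReadData_And()`. As a minimal sketch under different assumptions, the version below uses full-batch gradient descent on all four samples per step and trains both gates in one run. `train_gate`, `gates` and the other names here are hypothetical, chosen for this example rather than taken from the assignment, and whether the loss drops below `eps` before `max_epoch` is not guaranteed.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_gate(X, Y, eta=0.5, max_epoch=10000, eps=2e-3):
    """Hypothetical helper: full-batch gradient descent for one sigmoid neuron.

    X has shape (2, m), Y has shape (1, m).
    Returns W (1, 2), B (1, 1), the final loss and the last epoch index.
    """
    m = X.shape[1]
    W = np.zeros((Y.shape[0], X.shape[0]))
    B = np.zeros((Y.shape[0], 1))
    loss = np.inf
    for epoch in range(max_epoch):
        A = sigmoid(W @ X + B)                        # forward pass on all m samples
        dZ = A - Y                                    # dJ/dz for sigmoid + cross-entropy
        W -= eta * (dZ @ X.T) / m                     # average gradient w.r.t. W
        B -= eta * dZ.sum(axis=1, keepdims=True) / m  # average gradient w.r.t. B
        A = sigmoid(W @ X + B)                        # re-evaluate after the update
        loss = -np.mean(Y * np.log(A) + (1 - Y) * np.log(1 - A))
        if loss < eps:                                # single loop, so one break suffices
            break
    return W, B, loss, epoch

X = np.array([[0, 0, 1, 1],
              [0, 1, 0, 1]])
gates = {"AND": np.array([[0, 0, 0, 1]]),
         "OR":  np.array([[0, 1, 1, 1]])}

results, losses = {}, {}
for name, Y in gates.items():
    W, B, loss, epoch = train_gate(X, Y)
    # threshold the sigmoid output at 0.5 to get a 0/1 prediction
    results[name] = (sigmoid(W @ X + B) >= 0.5).astype(int)
    losses[name] = float(loss)
    print(name, "epoch=", epoch, "loss=", losses[name], "pred=", results[name].ravel())
# The predictions should reproduce the truth tables: AND -> [0 0 0 1], OR -> [0 1 1 1]
```

Vectorizing over the batch removes the per-sample inner loop, so early stopping needs only one `break`; the trade-off is one parameter update per epoch instead of four.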