04. Flume + Kafka environment setup

1. Flume: download, install, test

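1.1 Download the archive from the official site, then upload it through Xshell from the Windows Server 2012 machine to the /opt/flume directory on CentOS, using the rz command.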

1.2 Extract to install: tar -zxvf apache-flume-1.8.0-bin.tar.gz

[root@spark01 flume]# ls

apache-flume-1.8.0-bin apache-flume-1.8.0-bin.tar.gz
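Step 1.2 can be wrapped in a small repeatable helper. This is a minimal sketch, assuming the archive name and /opt/flume layout shown above; the function name `extract_flume` is hypothetical and not part of Flume. It relies on the tarball unpacking into a directory named after the archive without `.tar.gz`, as the `ls` output above suggests.

```shell
#!/bin/sh
# Hypothetical helper (not part of Flume): unpack a Flume tarball into a
# target directory and confirm that the expected top-level directory exists.
extract_flume() {
    archive="$1"      # e.g. /opt/flume/apache-flume-1.8.0-bin.tar.gz
    target_dir="$2"   # e.g. /opt/flume

    [ -f "$archive" ] || { echo "archive not found: $archive" >&2; return 1; }
    mkdir -p "$target_dir" || return 1

    # -z gunzip, -x extract, -f archive file, -C extract into target_dir
    tar -zxf "$archive" -C "$target_dir" || return 1

    # Assumption: the archive unpacks into <archive name minus .tar.gz>
    unpacked="$target_dir/$(basename "$archive" .tar.gz)"
    [ -d "$unpacked" ] || { echo "expected directory missing: $unpacked" >&2; return 1; }
    echo "$unpacked"
}
```

Usage: `extract_flume /opt/flume/apache-flume-1.8.0-bin.tar.gz /opt/flume` prints the unpacked directory on success.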

1.3 Edit flume-env.sh in the conf directory

[root@spark01 flume]# cd apache-flume-1.8.0-bin

[root@spark01 apache-flume-1.8.0-bin]# ls

bin conf doap_Flume.rdf lib NOTICE RELEASE-NOTES

CHANGELOG DEVNOTES docs LICENSE README.md tools

[root@spark01 apache-flume-1.8.0-bin]# cd conf

[root@spark01 conf]# ls

flume-conf.properties.template flume-env.sh.template

flume-env.ps1.template log4j.properties

[root@spark01 conf]# cp flume-env.sh.template flume-env.sh

[root@spark01 conf]# ls

flume-conf.properties.template flume-env.sh log4j.properties

flume-env.ps1.template flume-env.sh.template

[root@spark01 conf]# vi flume-env.sh

Add the JAVA_HOME setting:

export JAVA_HOME=/opt/java/jdk1.8.0_191
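The same edit can be made without opening vi. The sketch below is one possible approach, not the only one; `set_java_home` is a hypothetical helper name. It removes any existing uncommented `export JAVA_HOME=` line and appends the new one, so running it twice does not duplicate the entry.

```shell
#!/bin/sh
# Hypothetical helper: make sure an env file exports JAVA_HOME exactly once.
set_java_home() {
    env_file="$1"    # e.g. /opt/flume/apache-flume-1.8.0-bin/conf/flume-env.sh
    java_home="$2"   # e.g. /opt/java/jdk1.8.0_191

    [ -f "$env_file" ] || { echo "file not found: $env_file" >&2; return 1; }

    # Keep every line except an existing uncommented JAVA_HOME export.
    # grep -v exits 1 when it prints nothing, which is not an error here.
    tmp_file="$env_file.tmp.$$"
    grep -v '^[[:space:]]*export[[:space:]]\{1,\}JAVA_HOME=' "$env_file" > "$tmp_file" || true
    echo "export JAVA_HOME=$java_home" >> "$tmp_file"

    # Write back in place so the original file's permissions are kept.
    cat "$tmp_file" > "$env_file"
    rm -f "$tmp_file"
}
```

Usage: `set_java_home flume-env.sh /opt/java/jdk1.8.0_191`, run from the conf directory.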

1.4 Edit /etc/profile

[root@spark01 conf]# cd /etc

[root@spark01 etc]# vi profile

Add:

export FLUME_HOME=/opt/flume/apache-flume-1.8.0-bin

export PATH=.:${JAVA_HOME}/bin:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:${SPARK_HOME}/bin:${SCALA_HOME}/bin:${FLUME_HOME}/bin:$PATH

[root@spark01 etc]# source /etc/profile
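After sourcing the profile, it can be worth confirming that the Flume bin directory really ended up on PATH. A minimal sketch, assuming the FLUME_HOME value set above; `path_contains` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a directory appears as a whole entry in a
# colon-separated PATH-style string (defaults to the current $PATH).
path_contains() {
    dir="$1"
    path_value="${2:-$PATH}"
    # Wrap both sides in colons so only complete entries match.
    case ":$path_value:" in
        *":$dir:"*) return 0 ;;
        *)          return 1 ;;
    esac
}

# Example check after `source /etc/profile`:
# path_contains "$FLUME_HOME/bin" && echo "flume-ng is on PATH"
```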

1.5 Verify the installation

[root@spark01 etc]# flume-ng version

Flume 1.8.0
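For a scripted check, the version number can be pulled out of that output. This is a sketch that assumes the output contains a line of the form `Flume <version>`, as shown above; `parse_flume_version` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `flume-ng version` output on stdin and print the
# version from the first line of the form "Flume <version>".
# Exits non-zero if no such line is found.
parse_flume_version() {
    awk '$1 == "Flume" && NF >= 2 { print $2; found = 1; exit }
         END { if (!found) exit 1 }'
}
```

Usage: `flume-ng version | parse_flume_version`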

1.6 Flume example test

Create a1.conf in the conf directory:

[root@spark01 conf]# vi a1.conf

[root@spark01 conf]# cat a1.conf

a1.sources = r1

a1.channels = c1

a1.sinks = k1

#define sources

a1.sources.r1.type = netcat

a1.sources.r1.bind = hadoop-senior.shinelon.com

a1.sources.r1.port = 44444

#define channels

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

#define sink

a1.sinks.k1.type = logger

a1.sinks.k1.maxBytesToLog = 2014

#bind sources and sinks to channel

a1.sources.r1.channels = c1

a1.sink.k1.channel = c1

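This first draft needs two changes: the source binds to the hostname hadoop-senior.shinelon.com, which the corrected file replaces with localhost, and the sink binding key is misspelled as a1.sink.k1.channel (it must be a1.sinks.k1.channel). Edit the file again to fix both:

[root@spark01 conf]# vi a1.conf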

[root@spark01 conf]# cat a1.conf

a1.sources = r1

a1.channels = c1

a1.sinks = k1

#define sources

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

#define channels

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

#define sink

a1.sinks.k1.type = logger

a1.sinks.k1.maxBytesToLog = 2014

#bind sources and sinks to channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
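The first draft of a1.conf used `a1.sink.k1.channel` where the corrected file uses `a1.sinks.k1.channel`, a typo that is easy to miss by eye. The sketch below is a rough sanity check, not a full validator: it only verifies that every name listed under `<agent>.sources` has a `.channels` key and every name under `<agent>.sinks` has a `.channel` key. `check_flume_wiring` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: check that every declared source and sink of an agent
# is bound to a channel in a Flume properties file.
check_flume_wiring() {
    conf_file="$1"   # e.g. a1.conf
    agent="$2"       # e.g. a1

    awk -v agent="$agent" '
        /^[[:space:]]*#/ { next }            # skip comment lines
        {
            eq = index($0, "=")
            if (eq == 0) next                # skip blank / non-property lines
            key = substr($0, 1, eq - 1)
            val = substr($0, eq + 1)
            gsub(/[[:space:]]/, "", key)
            props[key] = val
        }
        END {
            bad = 0
            n = split(props[agent ".sources"], src, " ")
            for (i = 1; i <= n; i++) {
                k = agent ".sources." src[i] ".channels"
                if (!(k in props)) { print "source " src[i] " has no channels property"; bad = 1 }
            }
            n = split(props[agent ".sinks"], snk, " ")
            for (i = 1; i <= n; i++) {
                k = agent ".sinks." snk[i] ".channel"
                if (!(k in props)) { print "sink " snk[i] " has no channel property"; bad = 1 }
            }
            exit bad
        }
    ' "$conf_file"
}
```

Usage: `check_flume_wiring a1.conf a1` prints nothing and exits 0 when the wiring is complete; on the first draft it would report that sink k1 has no channel property.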

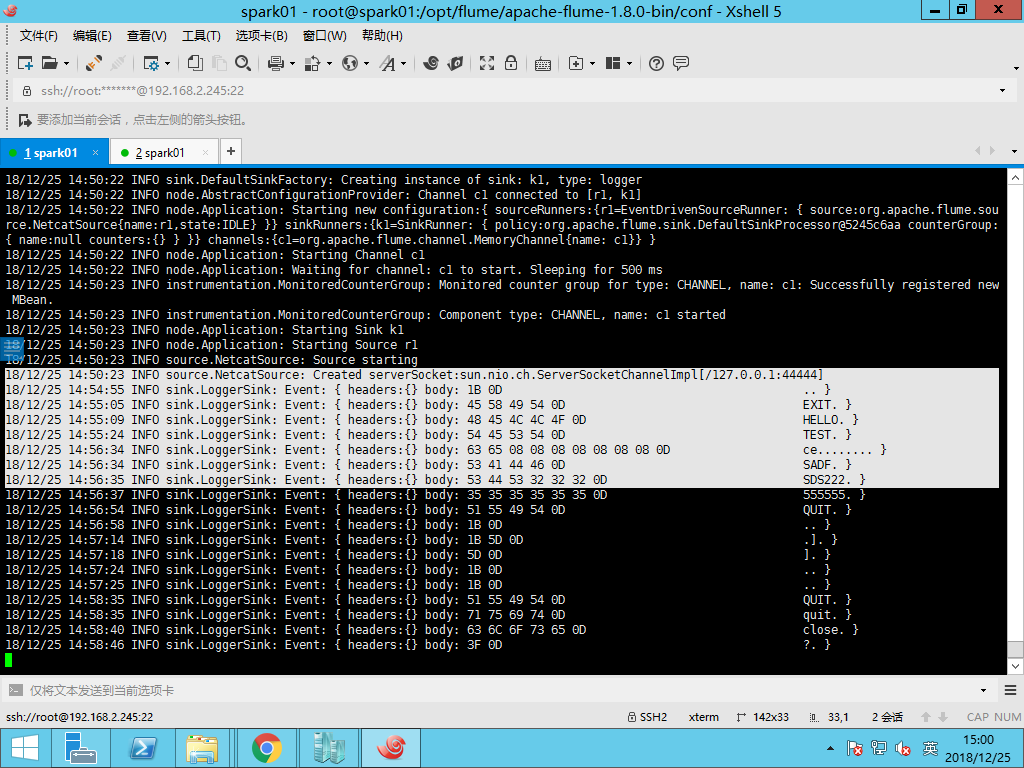

Test:

Start Flume:

[root@spark01 conf]# flume-ng agent --conf conf --name a1 --conf-file a1.conf -Dflume.root.logger=DEBUG,console
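The command above is run from inside the conf directory, so `--conf conf` and `--conf-file a1.conf` are relative to wherever the shell happens to be. A sketch of the same invocation built from absolute paths follows; it uses only the flags already shown above, and `flume_agent_cmd` is a hypothetical helper that prints the command rather than running it. It assumes the paths contain no spaces.

```shell
#!/bin/sh
# Hypothetical helper: print a flume-ng command line that uses absolute paths,
# so it does not depend on the directory it is run from.
flume_agent_cmd() {
    flume_home="$1"   # e.g. /opt/flume/apache-flume-1.8.0-bin
    agent="$2"        # agent name used as the key prefix in the config, e.g. a1
    conf_name="$3"    # config file inside $flume_home/conf, e.g. a1.conf

    # --conf       configuration directory (flume-env.sh, log4j.properties)
    # --name       agent name
    # --conf-file  agent definition (sources, channels, sinks)
    printf '%s agent --conf %s --name %s --conf-file %s -Dflume.root.logger=%s\n' \
        "$flume_home/bin/flume-ng" "$flume_home/conf" "$agent" \
        "$flume_home/conf/$conf_name" "DEBUG,console"
}
```

Usage: `flume_agent_cmd "$FLUME_HOME" a1 a1.conf` prints the command for inspection before it is run.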

Open a second console and connect: telnet localhost 44444

[root@spark01 ~]# telnet localhost 44444

Trying ::1...

telnet: connect to address ::1: Connection refused

Trying 127.0.0.1...

Connected to localhost.

Escape character is '^]'.

ce^H^H^H^H^H^H^H

OK

SADF

OK

SDS222

OK

555555

OK

Check the console where Flume is running: