A First Try at Scrapy

Goal: scrape the quote, author, and tags of each entry on http://quotes.toscrape.com/ and save them to MongoDB.

Environment: PyCharm

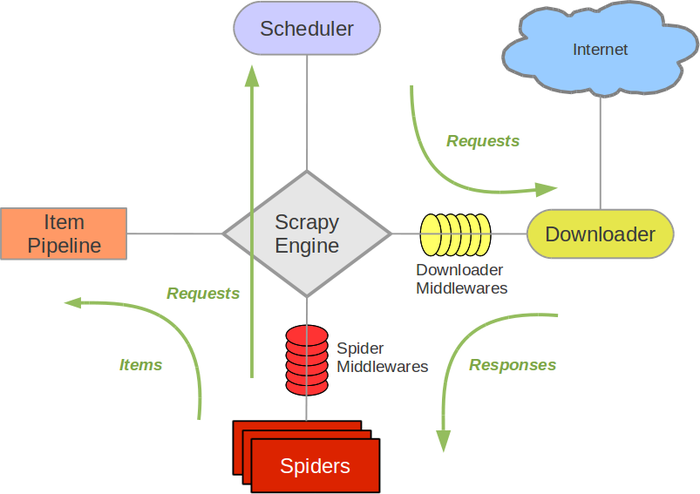

Scrapy flow diagram:

1. Create the project: scrapy startproject toscrapy

2. Create the spider

cd toscrapy

scrapy genspider quotes quotes.toscrape.com

3. Create the item

items.py

    import scrapy


    class QuotesItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        quote = scrapy.Field()
        author = scrapy.Field()
        tags = scrapy.Field()

4. Parse the response

quotes.py

    import scrapy

    from ..items import QuotesItem


    class QuotesSpider(scrapy.Spider):
        name = 'quotes'
        allowed_domains = ['quotes.toscrape.com']
        start_urls = ['http://quotes.toscrape.com/']

        def parse(self, response):
            # Each quote on the page sits in an element with class "quote".
            quotes = response.css('.quote')
            for quote in quotes:
                item = QuotesItem()
                item['quote'] = quote.css('.text::text').extract_first()
                item['author'] = quote.css('.author::text').extract_first()
                item['tags'] = quote.css('.tags .tag::text').extract()
                yield item

            # The last page has no "next" link, so only follow it when present.
            next_page = response.css('.next a::attr(href)').extract_first()
            if next_page is not None:
                url = response.urljoin(next_page)
                yield scrapy.Request(url=url, callback=self.parse)
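The pagination step works because the href of the "next" link is relative and has to be resolved against the URL of the current page, which is what response.urljoin does in the spider. A minimal standard-library sketch of that resolution follows. The helper name next_page_url and the example href /page/2/ are assumptions made for illustration, not something taken from the project.

```python
from typing import Optional
from urllib.parse import urljoin


def next_page_url(current_url: str, href: Optional[str]) -> Optional[str]:
    """Resolve a (possibly relative) 'next' href against the current page URL.

    Hypothetical helper: it mirrors the idea behind response.urljoin,
    and returns None when the page has no 'next' link.
    """
    if href is None:
        # No 'next' link was found, so there is nothing to follow.
        return None
    return urljoin(current_url, href)


if __name__ == "__main__":
    # Assumed href format; check the site's markup for the real value.
    print(next_page_url("http://quotes.toscrape.com/", "/page/2/"))
    print(next_page_url("http://quotes.toscrape.com/page/10/", None))
```

Returning None for a missing link makes the "stop crawling" case explicit instead of relying on Scrapy's duplicate filter to drop a repeated request.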

5. Data processing: pipelines

pipelines.py

    import pymongo


    class TextPipeline(object):
        """Truncate quotes longer than QUOTE_LIMIT characters."""

        def __init__(self, quote_limit):
            self.limit = quote_limit

        @classmethod
        def from_crawler(cls, crawler):
            return cls(
                quote_limit=crawler.settings.get('QUOTE_LIMIT'),
            )

        def process_item(self, item, spider):
            if len(item['quote']) > self.limit:
                item['quote'] = item['quote'][:self.limit].rstrip() + '...'
            return item


    class ToscrapyPipeline(object):
        """Store every item in a MongoDB collection."""

        collection_name = 'scrapy_items'

        def __init__(self, mongo_uri, mongo_db):
            self.mongo_uri = mongo_uri
            self.mongo_db = mongo_db

        @classmethod
        def from_crawler(cls, crawler):
            return cls(
                mongo_uri=crawler.settings.get('MONGO_URI'),
                mongo_db=crawler.settings.get('MONGO_DATABASE', 'items')
            )

        def open_spider(self, spider):
            # Open the connection once, when the spider starts.
            self.client = pymongo.MongoClient(self.mongo_uri)
            self.db = self.client[self.mongo_db]

        def close_spider(self, spider):
            self.client.close()

        def process_item(self, item, spider):
            self.db[self.collection_name].insert_one(dict(item))
            return item
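The truncation rule in TextPipeline can be tried on its own, without Scrapy or MongoDB. The sketch below pulls the same slicing logic into a plain function. The name truncate_quote and the sample strings are assumptions for illustration only.

```python
def truncate_quote(text: str, limit: int) -> str:
    """Apply the same rule as TextPipeline.process_item to a plain string.

    Hypothetical helper: text longer than `limit` characters is cut at
    `limit`, trailing whitespace is stripped, and '...' is appended.
    """
    if len(text) > limit:
        return text[:limit].rstrip() + '...'
    return text


if __name__ == "__main__":
    print(truncate_quote("short quote", 50))   # unchanged, under the limit
    print(truncate_quote("hello world", 6))    # cut at 6 characters, then stripped
```

Note that the stored string can be up to three characters longer than the limit, because the ellipsis is appended after the cut.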

Additional configuration required:

settings.py

    ITEM_PIPELINES = {
        'toscrapy.pipelines.ToscrapyPipeline': 400,
        'toscrapy.pipelines.TextPipeline': 300,
    }
    #...
    MONGO_URI = 'localhost'
    MONGO_DATABASE = 'toscrape'
    QUOTE_LIMIT = 50
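The numbers in ITEM_PIPELINES set the order in which items pass through the pipelines: lower values run first. With the values above, TextPipeline (300) truncates the quote before ToscrapyPipeline (400) writes it to MongoDB, even though it is listed second in the dict. The sketch below only imitates that ordering by sorting the dict. It does not reproduce Scrapy's internals, and the variable run_order is a name made up for this example.

```python
# Same mapping as in settings.py: pipeline path -> priority.
ITEM_PIPELINES = {
    'toscrapy.pipelines.ToscrapyPipeline': 400,
    'toscrapy.pipelines.TextPipeline': 300,
}

# Sort by priority value, lowest first, to see the order items flow through.
run_order = [
    path for path, priority in sorted(ITEM_PIPELINES.items(), key=lambda kv: kv[1])
]

if __name__ == "__main__":
    for position, path in enumerate(run_order, start=1):
        print(position, path)
```

If the two priorities were swapped, the full quote would be saved first and the truncation would happen too late to affect the stored document.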

Create a begin.py in the same directory as scrapy.cfg:

    from scrapy import cmdline

    cmdline.execute("scrapy crawl quotes".split())

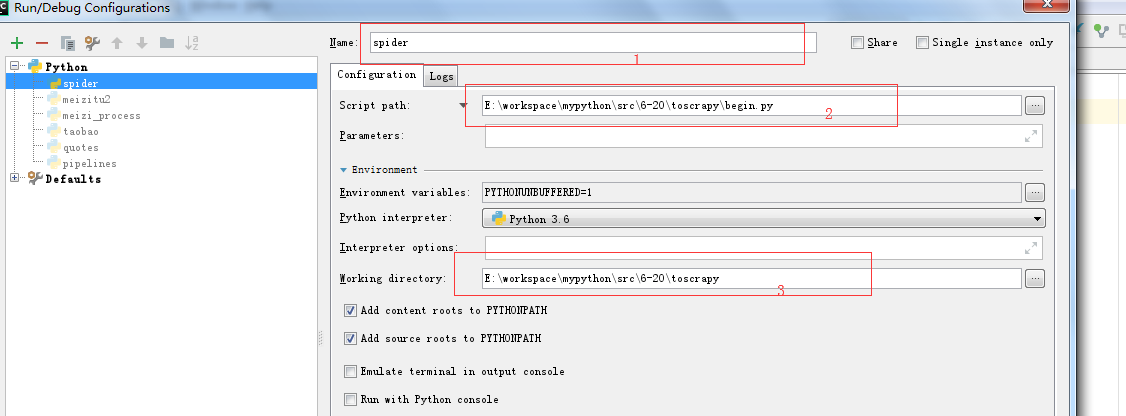

Configure PyCharm: