HDFS Permission Management

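Author: 尹正杰 (Yin Zhengjie)

Copyright notice: this is an original work. Reproduction is not permitted, and violations will be pursued under the law.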

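I. Overview of HDFS Permissions

HDFS file permissions are used for the authorization check performed on every attempt to access an HDFS file or directory. They closely resemble the permissions used in Linux or Unix file systems, but there are also significant differences between HDFS and Linux or other operating systems that follow the POSIX model.

In Linux, every file and directory has a user and a group. HDFS itself has no notion of users or groups of its own; it simply derives them from entities of the underlying operating system (for example, the users and groups created on the Linux host).

In a Kerberos-enabled cluster, the user's Kerberos credentials determine the identity of the client process. In the default simple security mode, the user's identity is determined by the host operating system.

As in a Linux file system, separate permissions can be assigned to the owner of a file or directory, to the members of its group, and to everyone else. The familiar r, w and x permissions apply: r (read a file or list the contents of a directory), w (write a file, or create or delete files and directories inside a directory) and x (access the children of a directory).

File modes can be set in octal notation (for example "755" or "644"). Note that in Linux x on a file means permission to execute it, whereas HDFS has no concept of executing a file.

You can also specify a umask for files and directories. The default umask is "022"; it can be changed in "${HADOOP_HOME}/etc/hadoop/core-site.xml" as follows.

    <property>
        <name>fs.permissions.umask-mode</name>
        <value>037</value>
        <description>The umask of the HDFS cluster. The default is 022, which gives new directories the mode "755" and new files the mode "644". In Linux x means permission to execute a file; HDFS has no such concept, although you can still set the x bit on a file.</description>
    </property>

Tip: the umask formula is "actual permission = maximum permission & (~umask)".

In this example the umask is "037", which is "000 011 111" in binary, so "(~umask)" is "111 100 000". A newly created directory therefore gets the mode "740" by default (a newly created file starts from "666" rather than "777", which gives "640"). The calculation for a directory is:

      111 111 111
    & ~(000 011 111)
    ----------------
      111 111 111
    & 111 100 000
    ----------------
      111 100 000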
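The umask arithmetic can be checked with a few lines of Python. This is only a sketch of the formula, not Hadoop code: the function names are made up for illustration, and it assumes that new directories start from mode 777 and new files from mode 666 before the umask is applied.

```python
FILE_BASE = 0o666  # assumed starting mode for new files (rw-rw-rw-)
DIR_BASE = 0o777   # assumed starting mode for new directories (rwxrwxrwx)


def apply_umask(umask: int, is_dir: bool) -> int:
    """Return base & ~umask, keeping only the nine permission bits."""
    base = DIR_BASE if is_dir else FILE_BASE
    return base & ~umask & 0o777


def as_octal(mode: int) -> str:
    """Format a mode as a three-digit octal string such as '740'."""
    return format(mode, "03o")


if __name__ == "__main__":
    for umask in (0o022, 0o037):
        print(
            "umask", as_octal(umask),
            "-> directory", as_octal(apply_umask(umask, is_dir=True)),
            "file", as_octal(apply_umask(umask, is_dir=False)),
        )
```

With the umask "037" used in this article, the sketch yields 740 for a directory and 640 for a file.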

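II. Configuration Parameters Related to HDFS Permissions

1>. Configuring HDFS permissions

HDFS permission checking is controlled by the "dfs.permissions.enabled" parameter in "${HADOOP_HOME}/etc/hadoop/hdfs-site.xml"; setting it to true enables the checks. As shown below, the parameter defaults to true, so permission checking is already switched on and nothing further needs to be done to enable it.

    <property>
        <name>dfs.permissions.enabled</name>
        <value>true</value>
        <description>If "true", permission checking is enabled in HDFS; if "false", permission checking is turned off. The default is "true".</description>
    </property>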
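To confirm what a configuration file actually sets, the property can be read programmatically. The sketch below uses Python's standard xml.etree module on an inline sample; the sample text, the helper names and the assumption that the file's root element is <configuration> are illustrative and not taken from this cluster.

```python
import xml.etree.ElementTree as ET

# Hypothetical stand-in for the contents of hdfs-site.xml.
SAMPLE_HDFS_SITE = """<configuration>
  <property>
    <name>dfs.permissions.enabled</name>
    <value>true</value>
  </property>
</configuration>
"""


def read_property(xml_text, name, default=None):
    """Return the <value> of the <property> whose <name> matches, else default."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            value = prop.findtext("value")
            return value.strip() if value is not None else default
    return default


def permissions_enabled(xml_text):
    """Fall back to "true", the default stated in the text, when unset."""
    value = read_property(xml_text, "dfs.permissions.enabled", "true")
    return value.lower() == "true"


if __name__ == "__main__":
    print(permissions_enabled(SAMPLE_HDFS_SITE))
```

In practice the text would come from reading "${HADOOP_HOME}/etc/hadoop/hdfs-site.xml" rather than from an inline string.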

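2>. Configuring the HDFS superuser

Unlike in a Linux file system, the host user root is not automatically the HDFS superuser. The superuser is the user that started the NameNode, typically an operating system account such as "root", "hdfs" or "hadoop", so whichever of these accounts started the NameNode is the HDFS superuser.

A superuser group can be configured with the "dfs.permissions.superusergroup" parameter in "${HADOOP_HOME}/etc/hadoop/hdfs-site.xml" (in the author's experience this parameter can effectively be set only once; see the description below).

    <property>
        <name>dfs.permissions.superusergroup</name>
        <value>admin</value>
        <description>
            The group that contains the HDFS superusers (the name is arbitrary; any user assigned to this group is an HDFS superuser). The default is "supergroup"; here I use a custom group name, "admin".
            Note that the group name can effectively be set only once: in the author's experience, changing this parameter after the NameNode has been formatted does not take effect, and the only way to force it is to reformat the NameNode. Fortunately, the owner and group of an individual file or directory can still be changed with "hdfs dfs -chown".
        </description>
    </property>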

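3>. How HDFS performs permission checks

A client's identity consists of the client (user) name and the list of groups it belongs to. When checking file permissions, HDFS applies the owner permissions if the user is the owner of the file or directory; otherwise, if the file's group is in the user's group list, it applies the group permissions; if neither matches, it applies the "other" permissions. If the applicable check fails, the client's request is denied.

As noted earlier, in the default simple mode the identity of the client process is determined by the operating system user name, whereas in Kerberos mode it is determined by the Kerberos credentials.

Once HDFS has established the user's identity for the mode the cluster runs in, it uses the "hadoop.security.group.mapping" property in "${HADOOP_HOME}/etc/hadoop/core-site.xml" to determine the list of groups the user belongs to. The user-to-group mapping is performed by the NameNode.

If the groups exist only on a corporate LDAP server and not on the Linux servers, an alternative group mapping service, "org.apache.hadoop.security.LdapGroupsMapping", must be configured in place of the default group mapping implementation.

Recommended reading: https://hadoop.apache.org/docs/r2.10.0/hadoop-project-dist/hadoop-common/GroupsMapping.html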
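The owner, group, other decision described above can be modelled in a few lines. This is a simplified sketch rather than the NameNode's implementation: the Inode class, the function name and the default superuser name "hdfs" are assumptions for illustration, and the superuser bypass is modelled as "the NameNode user or any member of the superuser group".

```python
from dataclasses import dataclass

READ, WRITE, EXECUTE = 4, 2, 1


@dataclass(frozen=True)
class Inode:
    owner: str
    group: str
    mode: int  # nine permission bits, e.g. 0o750


def is_allowed(user, groups, inode, requested,
               superuser="hdfs", supergroup="supergroup"):
    """Pick exactly one permission class for the caller and test it."""
    if user == superuser or supergroup in groups:
        return True  # superusers bypass the check entirely
    if user == inode.owner:
        bits = (inode.mode >> 6) & 7      # owner class
    elif inode.group in groups:
        bits = (inode.mode >> 3) & 7      # group class
    else:
        bits = inode.mode & 7             # other class
    return (bits & requested) == requested


if __name__ == "__main__":
    hosts = Inode(owner="java", group="bigdata", mode=0o750)
    print(is_allowed("java", {"bigdata"}, hosts, READ | WRITE))
    print(is_allowed("alice", {"bigdata"}, hosts, WRITE))
    print(is_allowed("bob", set(), hosts, READ))
```

Only one class is ever consulted: an owner whose owner bits deny access is refused even if the group or other bits would have allowed it.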

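III. Common Commands for Changing File Attributes in an HDFS Cluster

1>. The chmod command

The chmod command changes the permissions of files in an HDFS cluster. It is similar to the shell's chmod command, with a few exceptions (run "man chmod" and you will see that the Linux version supports more options).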

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -help chmod
    -chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH... :
      Changes permissions of a file. This works similar to the shell's chmod command
      with a few exceptions.

      -R           modifies the files recursively. This is the only option currently
                   supported.
      <MODE>       Mode is the same as mode used for the shell's command. The only
                   letters recognized are 'rwxXt', e.g. +t,a+r,g-w,+rwx,o=r.
      <OCTALMODE>  Mode specifed in 3 or 4 digits. If 4 digits, the first may be 1 or
                   0 to turn the sticky bit on or off, respectively. Unlike the
                   shell command, it is not possible to specify only part of the
                   mode, e.g. 754 is same as u=rwx,g=rx,o=r.

      If none of 'augo' is specified, 'a' is assumed and unlike the shell command, no
      umask is applied.

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwxr-----   - nn admin          0 2020-10-08 11:16 /yinzhengjie/conf
    -rw-r--r--   3 nn admin        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -chmod 700 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwxr-----   - nn admin          0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 nn admin        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls -R /yinzhengjie/
    drwx-wx--x   - nn admin          0 2020-10-08 11:16 /yinzhengjie/conf
    -rwxr-----   3 nn admin       3362 2020-10-08 11:16 /yinzhengjie/conf/hdfs.keytab
    -rwxr-----   3 nn admin       1346 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_cert
    -rwxr-----   3 nn admin       1834 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_key
    -rwxr-----   3 nn admin        115 2020-10-08 11:16 /yinzhengjie/conf/host-rack.txt
    -rwxr-----   3 nn admin       4181 2020-10-08 11:16 /yinzhengjie/conf/keystore
    -rwxr-----   3 nn admin        463 2020-10-08 11:16 /yinzhengjie/conf/toplogy.py
    -rwxr-----   3 nn admin       1016 2020-10-08 11:16 /yinzhengjie/conf/truststore
    -rwx------   3 nn admin        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -chmod -R 731 /yinzhengjie/conf

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls -R /yinzhengjie/
    drwx-wx--x   - nn admin          0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx-wx--x   3 nn admin       3362 2020-10-08 11:16 /yinzhengjie/conf/hdfs.keytab
    -rwx-wx--x   3 nn admin       1346 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_cert
    -rwx-wx--x   3 nn admin       1834 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_key
    -rwx-wx--x   3 nn admin        115 2020-10-08 11:16 /yinzhengjie/conf/host-rack.txt
    -rwx-wx--x   3 nn admin       4181 2020-10-08 11:16 /yinzhengjie/conf/keystore
    -rwx-wx--x   3 nn admin        463 2020-10-08 11:16 /yinzhengjie/conf/toplogy.py
    -rwx-wx--x   3 nn admin       1016 2020-10-08 11:16 /yinzhengjie/conf/truststore
    -rwx------   3 nn admin        436 2020-10-08 10:44 /yinzhengjie/hosts
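To see how an octal mode maps onto the permission string printed by "hdfs dfs -ls", the conversion can be sketched in Python. The function name is hypothetical, and the handling of a leading fourth digit (1 or 0 for the sticky bit, shown as a trailing t or T) follows the chmod help text and the usual ls convention rather than Hadoop source code.

```python
def mode_to_string(octal_mode: str, is_dir: bool = False) -> str:
    """Convert '754' or '1777' into an ls-style string such as '-rwxr-xr--'."""
    if len(octal_mode) not in (3, 4) or any(c not in "01234567" for c in octal_mode):
        raise ValueError(f"not a 3 or 4 digit octal mode: {octal_mode!r}")
    sticky = False
    if len(octal_mode) == 4:
        if octal_mode[0] not in "01":
            raise ValueError("the first of four digits may only be 0 or 1")
        sticky = octal_mode[0] == "1"
        octal_mode = octal_mode[1:]
    out = "d" if is_dir else "-"
    for digit in octal_mode:  # owner, group, other
        bits = int(digit)
        out += "r" if bits & 4 else "-"
        out += "w" if bits & 2 else "-"
        out += "x" if bits & 1 else "-"
    if sticky:
        # The sticky bit replaces the last character: t with x set, T without.
        out = out[:-1] + ("t" if out[-1] == "x" else "T")
    return out


if __name__ == "__main__":
    for mode, is_dir in (("700", False), ("731", True), ("754", False)):
        print(mode, mode_to_string(mode, is_dir))
```

The modes 700 and 731 used in the transcript above map to "-rwx------" for a file and "drwx-wx--x" for a directory.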

2>. The chown command

The chown command changes the owner and group of a file. It is similar to the Linux shell's chown command, with a few exceptions.

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -help chown
    -chown [-R] [OWNER][:[GROUP]] PATH... :
      Changes owner and group of a file. This is similar to the shell's chown command
      with a few exceptions.

      -R  modifies the files recursively. This is the only option currently
          supported.

      If only the owner or group is specified, then only the owner or group is
      modified. The owner and group names may only consist of digits, alphabet, and
      any of [-_./@a-zA-Z0-9]. The names are case sensitive.

      WARNING: Avoid using '.' to separate user name and group though Linux allows it.
      If user names have dots in them and you are using local file system, you might
      see surprising results since the shell command 'chown' is used for local files.

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - nn    admingroup          0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 nn    supergroup        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -chown jason /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - nn    admingroup          0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 jason supergroup        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -chown :admin /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - nn    admingroup          0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 jason admin             436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -chown java:bigdata /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - nn    admingroup          0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 java  bigdata           436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls -R /yinzhengjie/
    drwx-wx--x   - nn   admingroup          0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx-wx--x   3 nn   admingroup       3362 2020-10-08 11:16 /yinzhengjie/conf/hdfs.keytab
    -rwx-wx--x   3 nn   admingroup       1346 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_cert
    -rwx-wx--x   3 nn   admingroup       1834 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_key
    -rwx-wx--x   3 nn   admingroup        115 2020-10-08 11:16 /yinzhengjie/conf/host-rack.txt
    -rwx-wx--x   3 nn   admingroup       4181 2020-10-08 11:16 /yinzhengjie/conf/keystore
    -rwx-wx--x   3 nn   admingroup        463 2020-10-08 11:16 /yinzhengjie/conf/toplogy.py
    -rwx-wx--x   3 nn   admingroup       1016 2020-10-08 11:16 /yinzhengjie/conf/truststore
    -rwx------   3 java bigdata           436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -chown -R python:devops /yinzhengjie/conf

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls -R /yinzhengjie/
    drwx-wx--x   - python devops          0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx-wx--x   3 python devops       3362 2020-10-08 11:16 /yinzhengjie/conf/hdfs.keytab
    -rwx-wx--x   3 python devops       1346 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_cert
    -rwx-wx--x   3 python devops       1834 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_key
    -rwx-wx--x   3 python devops        115 2020-10-08 11:16 /yinzhengjie/conf/host-rack.txt
    -rwx-wx--x   3 python devops       4181 2020-10-08 11:16 /yinzhengjie/conf/keystore
    -rwx-wx--x   3 python devops        463 2020-10-08 11:16 /yinzhengjie/conf/toplogy.py
    -rwx-wx--x   3 python devops       1016 2020-10-08 11:16 /yinzhengjie/conf/truststore
    -rwx------   3 java   bigdata         436 2020-10-08 10:44 /yinzhengjie/hosts
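The "[OWNER][:[GROUP]]" argument used in the examples above can be taken apart with a small parser. This is an illustrative sketch only: the regular expression is built from the character set quoted in the help text, and the function name and exact matching rules are assumptions, not Hadoop's own parser.

```python
import re

# Characters the help text allows in owner and group names.
NAME = r"[-_./@a-zA-Z0-9]+"
SPEC = re.compile(rf"^({NAME})?(?::({NAME})?)?$")


def parse_chown_spec(spec: str):
    """Split 'owner', ':group' or 'owner:group' into (owner, group).

    A missing part is returned as None, meaning "leave unchanged".
    """
    match = SPEC.match(spec)
    if match is None or (match.group(1) is None and match.group(2) is None):
        raise ValueError(f"invalid chown spec: {spec!r}")
    return match.group(1), match.group(2)


if __name__ == "__main__":
    for spec in ("jason", ":admin", "java:bigdata", "python:devops"):
        print(spec, "->", parse_chown_spec(spec))
```

Because "." is a legal name character, a spec such as "java.bigdata" is read as a single owner name, which is why the help text warns against using a dot as the separator.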

3>. The chgrp command

The chgrp command is equivalent to "-chown ... :GROUP ...".

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -help chgrp
    -chgrp [-R] GROUP PATH... :
      This is equivalent to -chown ... :GROUP ...

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - nn admin               0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 nn admin             436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -chgrp supergroup /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - nn admin               0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 nn supergroup        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls -R /yinzhengjie/
    drwx-wx--x   - nn admin               0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx-wx--x   3 nn admin            3362 2020-10-08 11:16 /yinzhengjie/conf/hdfs.keytab
    -rwx-wx--x   3 nn admin            1346 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_cert
    -rwx-wx--x   3 nn admin            1834 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_key
    -rwx-wx--x   3 nn admin             115 2020-10-08 11:16 /yinzhengjie/conf/host-rack.txt
    -rwx-wx--x   3 nn admin            4181 2020-10-08 11:16 /yinzhengjie/conf/keystore
    -rwx-wx--x   3 nn admin             463 2020-10-08 11:16 /yinzhengjie/conf/toplogy.py
    -rwx-wx--x   3 nn admin            1016 2020-10-08 11:16 /yinzhengjie/conf/truststore
    -rwx------   3 nn supergroup        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -chgrp -R admingroup /yinzhengjie/conf

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls -R /yinzhengjie/
    drwx-wx--x   - nn admingroup          0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx-wx--x   3 nn admingroup       3362 2020-10-08 11:16 /yinzhengjie/conf/hdfs.keytab
    -rwx-wx--x   3 nn admingroup       1346 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_cert
    -rwx-wx--x   3 nn admingroup       1834 2020-10-08 11:16 /yinzhengjie/conf/hdfs_ca_key
    -rwx-wx--x   3 nn admingroup        115 2020-10-08 11:16 /yinzhengjie/conf/host-rack.txt
    -rwx-wx--x   3 nn admingroup       4181 2020-10-08 11:16 /yinzhengjie/conf/keystore
    -rwx-wx--x   3 nn admingroup        463 2020-10-08 11:16 /yinzhengjie/conf/toplogy.py
    -rwx-wx--x   3 nn admingroup       1016 2020-10-08 11:16 /yinzhengjie/conf/truststore
    -rwx------   3 nn supergroup        436 2020-10-08 10:44 /yinzhengjie/hosts

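IV. HDFS Access Control Lists

HDFS supports ACLs for setting finer-grained permissions for specific users and groups. ACLs are a good fit when you want to grant permissions at a fine granularity and handle complex file permission and access requirements.

By default, ACLs are disabled. To use HDFS ACLs, enable them on the NameNode by adding the following property to "${HADOOP_HOME}/etc/hadoop/hdfs-site.xml".

    <property>
        <name>dfs.namenode.acls.enabled</name>
        <value>true</value>
        <description>Set to true to enable support for HDFS ACLs (access control lists). By default, ACLs are disabled. When ACLs are disabled, the NameNode rejects all RPCs related to setting or getting ACLs.</description>
    </property>

Tip:

As the screenshot below shows, after changing "dfs.namenode.acls.enabled" to "true", remember to restart the HDFS cluster. Otherwise the NameNode does not enable ACLs, which means that no ACL entries can be set.

1>. Overview of the ACL commands

getfacl:

Displays the access control lists (ACLs) of files and directories. If a directory has a default ACL, getfacl displays the default ACL as well.

setfacl:

Sets the access control lists (ACLs) of files and directories.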

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -help getfacl
    -getfacl [-R] <path> :
      Displays the Access Control Lists (ACLs) of files and directories. If a
      directory has a default ACL, then getfacl also displays the default ACL.

      -R      List the ACLs of all files and directories recursively.
      <path>  File or directory to list.

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -help setfacl
    -setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>] :
      Sets Access Control Lists (ACLs) of files and directories.
      Options:

      -b          Remove all but the base ACL entries. The entries for user, group
                  and others are retained for compatibility with permission bits.
      -k          Remove the default ACL.
      -R          Apply operations to all files and directories recursively.
      -m          Modify ACL. New entries are added to the ACL, and existing entries
                  are retained.
      -x          Remove specified ACL entries. Other ACL entries are retained.
      --set       Fully replace the ACL, discarding all existing entries. The
                  <acl_spec> must include entries for user, group, and others for
                  compatibility with permission bits.
      <acl_spec>  Comma separated list of ACL entries.
      <path>      File or directory to modify.

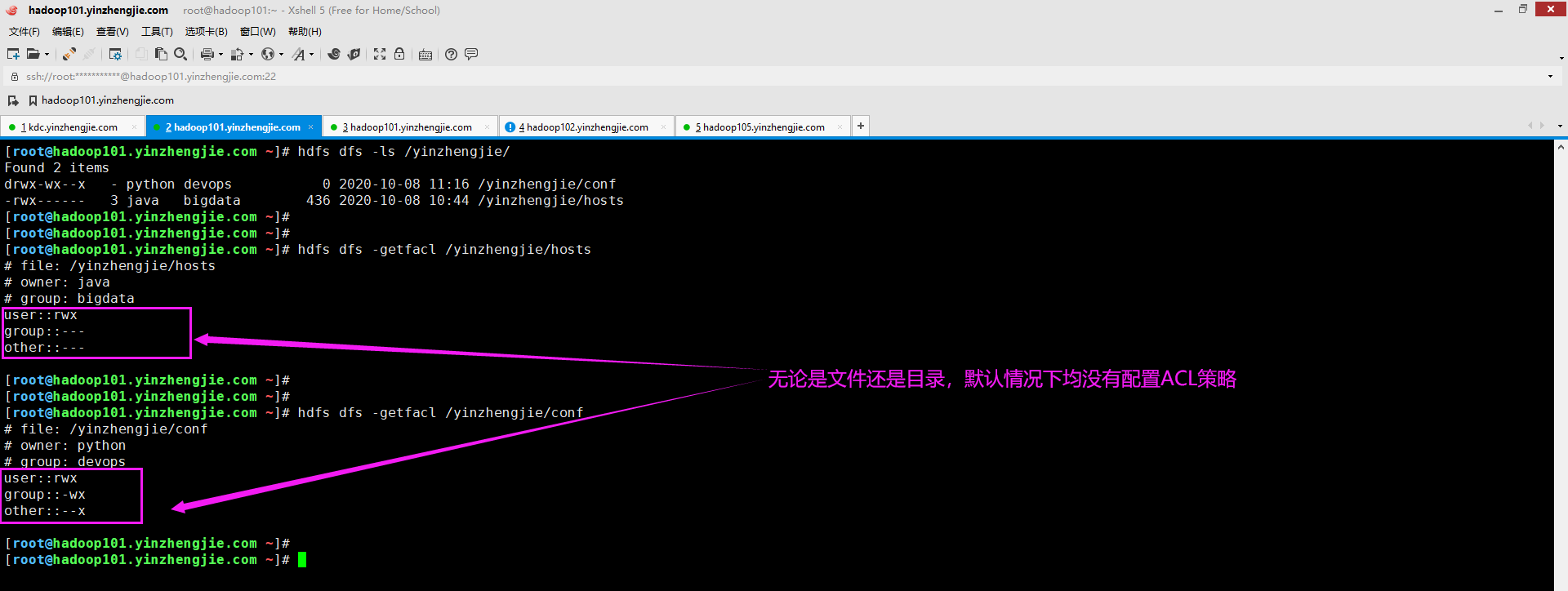

2>. Using getfacl to inspect the current ACL of a directory or file (if ACLs were never enabled before, there are no ACL entries by default)

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - python devops           0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 java   bigdata        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfacl /yinzhengjie/hosts
    # file: /yinzhengjie/hosts
    # owner: java
    # group: bigdata
    user::rwx
    group::---
    other::---

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfacl /yinzhengjie/conf
    # file: /yinzhengjie/conf
    # owner: python
    # group: devops
    user::rwx
    group::-wx
    other::--x
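For scripts that need to audit ACLs, the text printed by "-getfacl" is simple enough to parse. The sketch below is an assumption-laden illustration: the AclEntry type and parse_getfacl function are made-up names, and the format is inferred only from getfacl output like that shown in this article (header lines starting with "#", entries of the form scope:name:perms, an optional "default:" prefix, and an optional "#effective:" comment).

```python
from typing import NamedTuple


class AclEntry(NamedTuple):
    scope: str        # "user", "group", "mask" or "other"
    name: str         # empty for the unnamed (base) entries
    perms: str        # e.g. "rw-"
    is_default: bool  # True for "default:" entries on directories


SAMPLE = """\
# file: /yinzhengjie/hosts
# owner: java
# group: bigdata
user::rwx
user:jason:rw-  #effective:r--
group::---
group:admin:r-x
mask::r-x
other::---
"""


def parse_getfacl(text):
    """Return (header dict, list of AclEntry) for one file's getfacl output."""
    header, entries = {}, []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#"):
            key, _, value = line[1:].partition(":")
            header[key.strip()] = value.strip()
            continue
        spec = line.split("#", 1)[0].strip()  # drop any "#effective:" comment
        parts = spec.split(":")
        is_default = parts[0] == "default"
        if is_default:
            parts = parts[1:]
        if len(parts) != 3:
            raise ValueError(f"unrecognised ACL entry: {line!r}")
        entries.append(AclEntry(parts[0], parts[1], parts[2], is_default))
    return header, entries


if __name__ == "__main__":
    header, entries = parse_getfacl(SAMPLE)
    print(header["owner"], [e for e in entries if e.name])
```

Named entries (those with a non-empty name) are the ones added through setfacl; the unnamed user, group and other entries mirror the ordinary permission bits.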

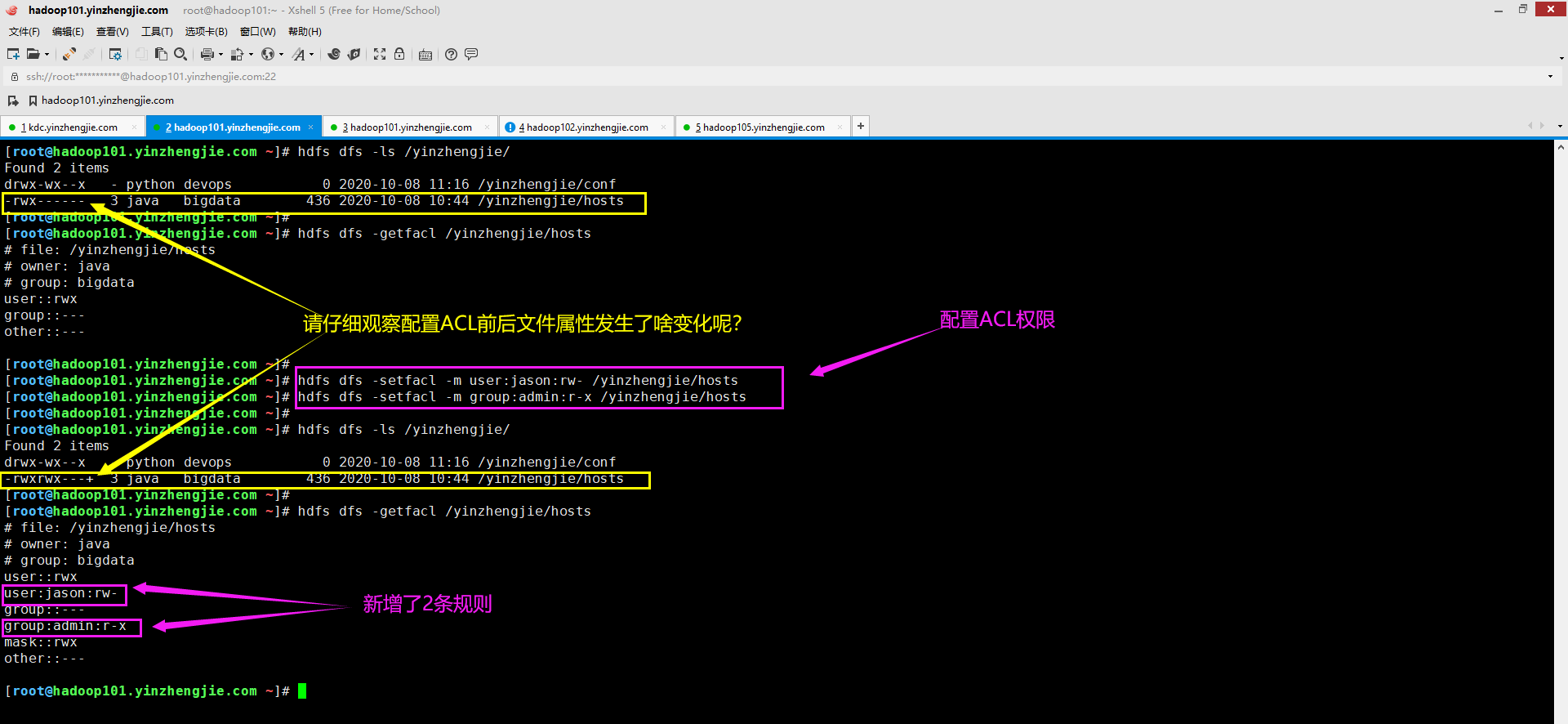

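3>. Using setfacl to set ACL entries on a directory or file

As the screenshot below shows, once an ACL has been set on a file, a plus sign ("+") is appended to the file's permission string to indicate that the file carries ACL entries. Does that look familiar? It works essentially the same way as ACLs on Linux.

Note that even though a user can be granted specific permissions through an ACL on a file or directory, those permissions must fall within the file's mask.

As the screenshot below shows, the "-getfacl" output contains a new entry named mask. Here its value is "rwx", which means that the permissions granted to the user (jason, "rw-") and the group (admin, "r-x") are not restricted and are fully effective on this file.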

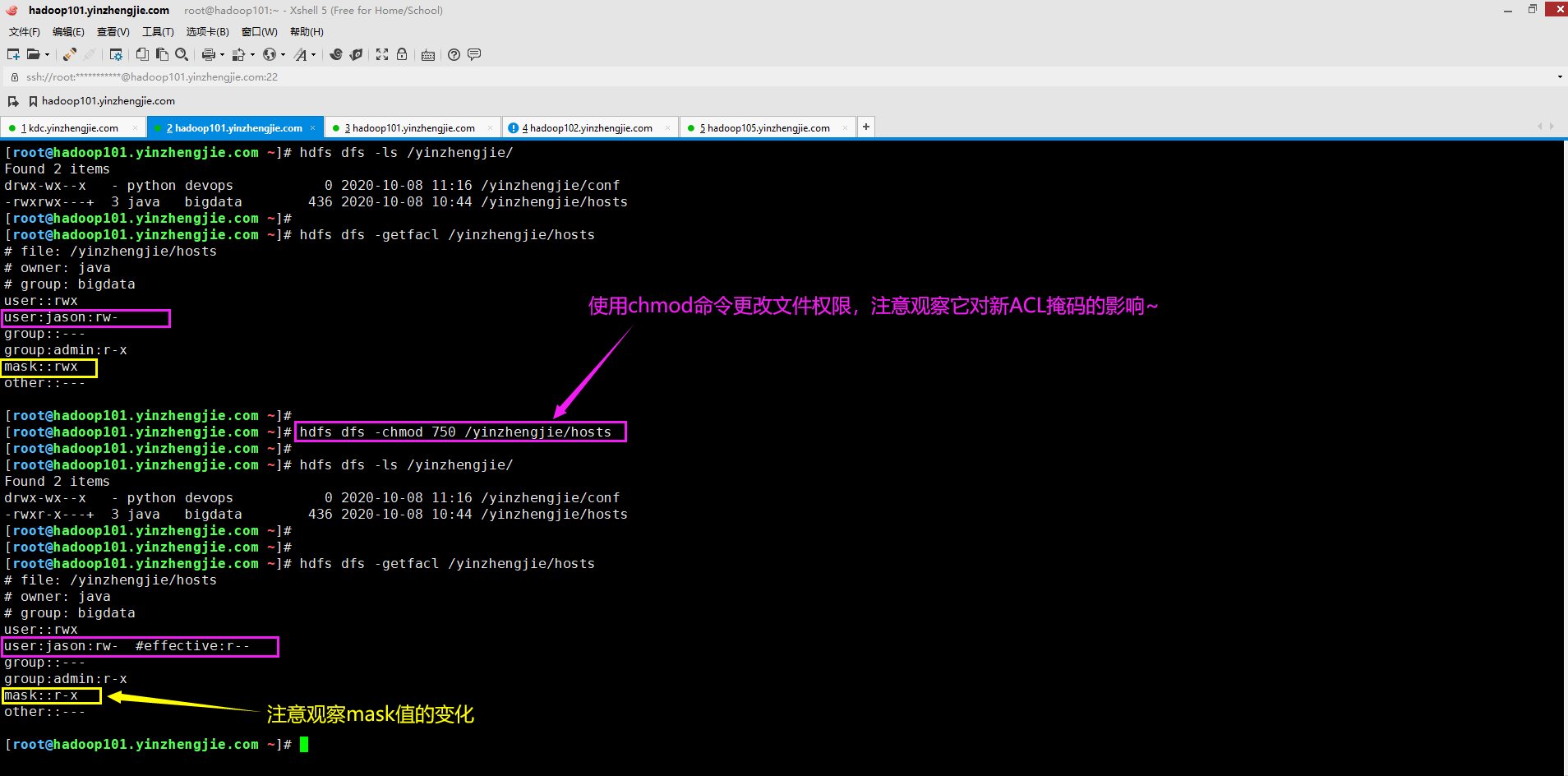

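The mask defines the maximum permissions that named users, named groups and the owning group can have on the file. If the mask is "r-x", then although the user (jason) has been granted "rw-", the effective permission is only "r--".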

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - python devops           0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 java   bigdata        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfacl /yinzhengjie/hosts
    # file: /yinzhengjie/hosts
    # owner: java
    # group: bigdata
    user::rwx
    group::---
    other::---

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -setfacl -m user:jason:rw- /yinzhengjie/hosts
    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -setfacl -m group:admin:r-x /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - python devops           0 2020-10-08 11:16 /yinzhengjie/conf
    -rwxrwx---+  3 java   bigdata        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfacl /yinzhengjie/hosts
    # file: /yinzhengjie/hosts
    # owner: java
    # group: bigdata
    user::rwx
    user:jason:rw-
    group::---
    group:admin:r-x
    mask::rwx
    other::---

4>. Changing file permissions with chmod and observing the effect on the ACL mask

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - python devops           0 2020-10-08 11:16 /yinzhengjie/conf
    -rwxrwx---+  3 java   bigdata        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfacl /yinzhengjie/hosts
    # file: /yinzhengjie/hosts
    # owner: java
    # group: bigdata
    user::rwx
    user:jason:rw-
    group::---
    group:admin:r-x
    mask::rwx
    other::---

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -chmod 750 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - python devops           0 2020-10-08 11:16 /yinzhengjie/conf
    -rwxr-x---+  3 java   bigdata        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfacl /yinzhengjie/hosts
    # file: /yinzhengjie/hosts
    # owner: java
    # group: bigdata
    user::rwx
    user:jason:rw-  #effective:r--
    group::---
    group:admin:r-x
    mask::r-x
    other::---
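The "#effective:r--" comment in the output above is simply the bitwise AND of the entry and the mask, and the new mask "r-x" comes from the group digit of the chmod mode. A minimal Python sketch of both rules follows; the helper names are hypothetical, and the rule that chmod's group digit becomes the mask on a file with an ACL is inferred from the transcript.

```python
def perm_bits(perms: str) -> int:
    """Turn 'rw-' into 6 (r=4, w=2, x=1)."""
    if len(perms) != 3:
        raise ValueError(f"expected three characters, got {perms!r}")
    return sum(bit for ch, bit in zip(perms, (4, 2, 1)) if ch != "-")


def perm_string(bits: int) -> str:
    """Turn 6 back into 'rw-'."""
    return "".join(ch if bits & bit else "-" for ch, bit in zip("rwx", (4, 2, 1)))


def effective(entry_perms: str, mask: str) -> str:
    """Effective permission of a named entry: entry AND mask."""
    return perm_string(perm_bits(entry_perms) & perm_bits(mask))


def mask_after_chmod(octal_mode: str) -> str:
    """On a file with an ACL, the group digit of the mode becomes the mask."""
    return perm_string(int(octal_mode[-2]))


if __name__ == "__main__":
    mask = mask_after_chmod("750")
    print("mask:", mask)
    print("user:jason:", effective("rw-", mask))
    print("group:admin:", effective("r-x", mask))
```

For "chmod 750" the sketch gives the mask "r-x", leaves group:admin at "r-x", and reduces user:jason from "rw-" to "r--".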

5>. Removing ACL entries

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfacl /yinzhengjie/hosts
    # file: /yinzhengjie/hosts
    # owner: java
    # group: bigdata
    user::rwx
    user:jason:rw-  #effective:r--
    group::---
    group:admin:r-x
    mask::r-x
    other::---

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -setfacl -b /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfacl /yinzhengjie/hosts
    # file: /yinzhengjie/hosts
    # owner: java
    # group: bigdata
    user::rwx
    group::---
    other::---

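V. HDFS Extended Attributes

HDFS lets users attach additional metadata to a file or directory. This metadata is known as HDFS extended attributes, and it allows applications to store extra information in the inode. For example, an extended attribute can let an application record a document's character encoding. Extended attributes can be regarded as an extension of the HDFS file system permissions.

As the screenshot below shows, a namespace must be specified when setting an extended attribute (XAttr); otherwise the operation fails. There are five namespace types with different access restrictions ("user", "trusted", "security", "system" and "raw"):

Client applications use only the "user" namespace; extended attributes in the "user" namespace are governed by the HDFS file permissions.

Three other namespaces, "system", "security" and "raw", are for internal use by HDFS and other systems.

The "trusted" namespace is reserved for HDFS superusers.

Extended attributes are enabled by default. The feature can be switched on or off by setting "dfs.namenode.xattrs.enabled" in "${HADOOP_HOME}/etc/hadoop/hdfs-site.xml" to "true" or "false".

    <property>
        <name>dfs.namenode.xattrs.enabled</name>
        <value>true</value>
        <description>Whether support for extended attributes is enabled on the NameNode. The default is true.</description>
    </property>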
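The namespace rule can be illustrated with a small validator that splits an attribute name such as "user.department" into its namespace and local name. This is a sketch only: the function name is hypothetical, the one-line summaries paraphrase the text above, and the check accepts just the lowercase spellings listed there.

```python
# The five namespaces named in the text, with who is expected to use them.
NAMESPACES = {
    "user": "client applications, governed by file permissions",
    "trusted": "HDFS superusers",
    "system": "internal use by HDFS and other systems",
    "security": "internal use by HDFS and other systems",
    "raw": "internal use by HDFS and other systems",
}


def split_xattr_name(full_name: str):
    """Split 'user.department' into ('user', 'department') or raise ValueError."""
    namespace, dot, local = full_name.partition(".")
    if not dot or not local:
        raise ValueError(f"{full_name!r} has no namespace prefix")
    if namespace not in NAMESPACES:
        raise ValueError(f"unknown namespace {namespace!r}")
    return namespace, local


if __name__ == "__main__":
    for name in ("user.department", "trusted.audit.tag", "department"):
        try:
            print(name, "->", split_xattr_name(name))
        except ValueError as err:
            print(name, "-> rejected:", err)
```

Only the first dot separates the namespace, so the local part may itself contain dots.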

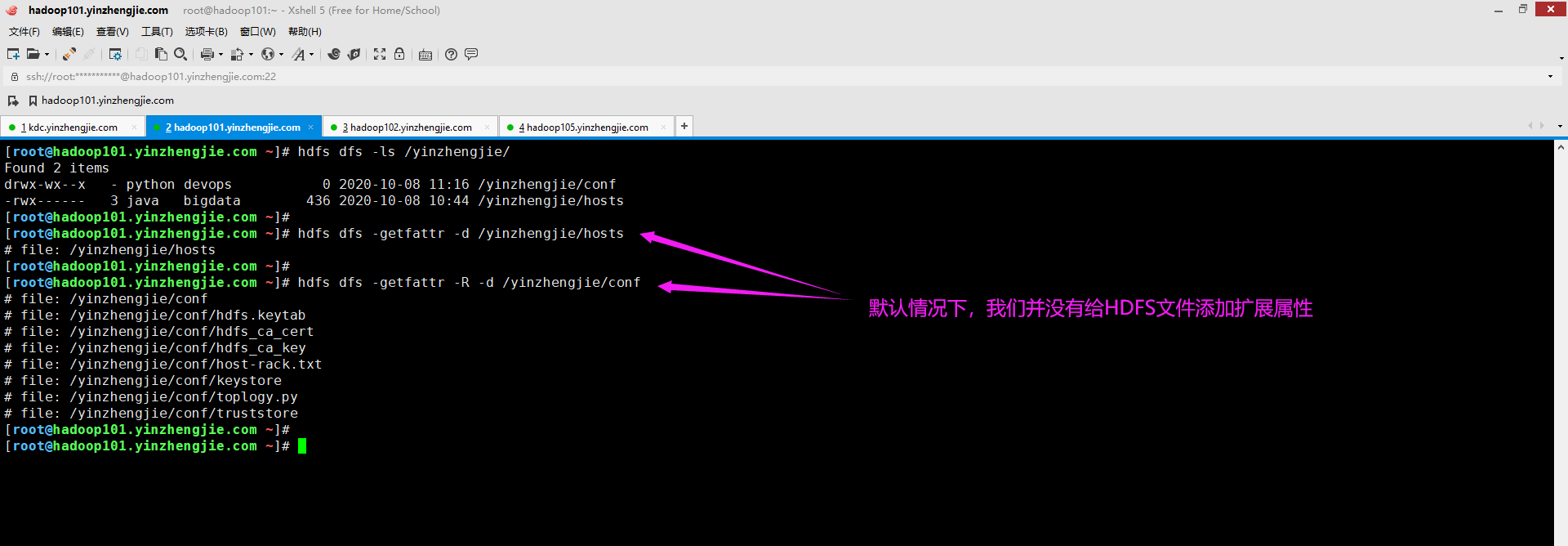

1>. Viewing the names and values of the extended attributes of a file or directory

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -help getfattr
    -getfattr [-R] {-n name | -d} [-e en] <path> :
      Displays the extended attribute names and values (if any) for a file or
      directory.

      -R             Recursively list the attributes for all files and directories.
      -n name        Dump the named extended attribute value.
      -d             Dump all extended attribute values associated with pathname.
      -e <encoding>  Encode values after retrieving them.Valid encodings are "text",
                     "hex", and "base64". Values encoded as text strings are enclosed
                     in double quotes ("), and values encoded as hexadecimal and
                     base64 are prefixed with 0x and 0s, respectively.
      <path>         The file or directory.

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - python devops           0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 java   bigdata        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfattr -d /yinzhengjie/hosts
    # file: /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfattr -R -d /yinzhengjie/conf
    # file: /yinzhengjie/conf
    # file: /yinzhengjie/conf/hdfs.keytab
    # file: /yinzhengjie/conf/hdfs_ca_cert
    # file: /yinzhengjie/conf/hdfs_ca_key
    # file: /yinzhengjie/conf/host-rack.txt
    # file: /yinzhengjie/conf/keystore
    # file: /yinzhengjie/conf/toplogy.py
    # file: /yinzhengjie/conf/truststore

2>. Associating an extended attribute name and value with a file or directory

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -help setfattr
    -setfattr {-n name [-v value] | -x name} <path> :
      Sets an extended attribute name and value for a file or directory.

      -n name   The extended attribute name.
      -v value  The extended attribute value. There are three different encoding
                methods for the value. If the argument is enclosed in double quotes,
                then the value is the string inside the quotes. If the argument is
                prefixed with 0x or 0X, then it is taken as a hexadecimal number. If
                the argument begins with 0s or 0S, then it is taken as a base64
                encoding.
      -x name   Remove the extended attribute.
      <path>    The file or directory.

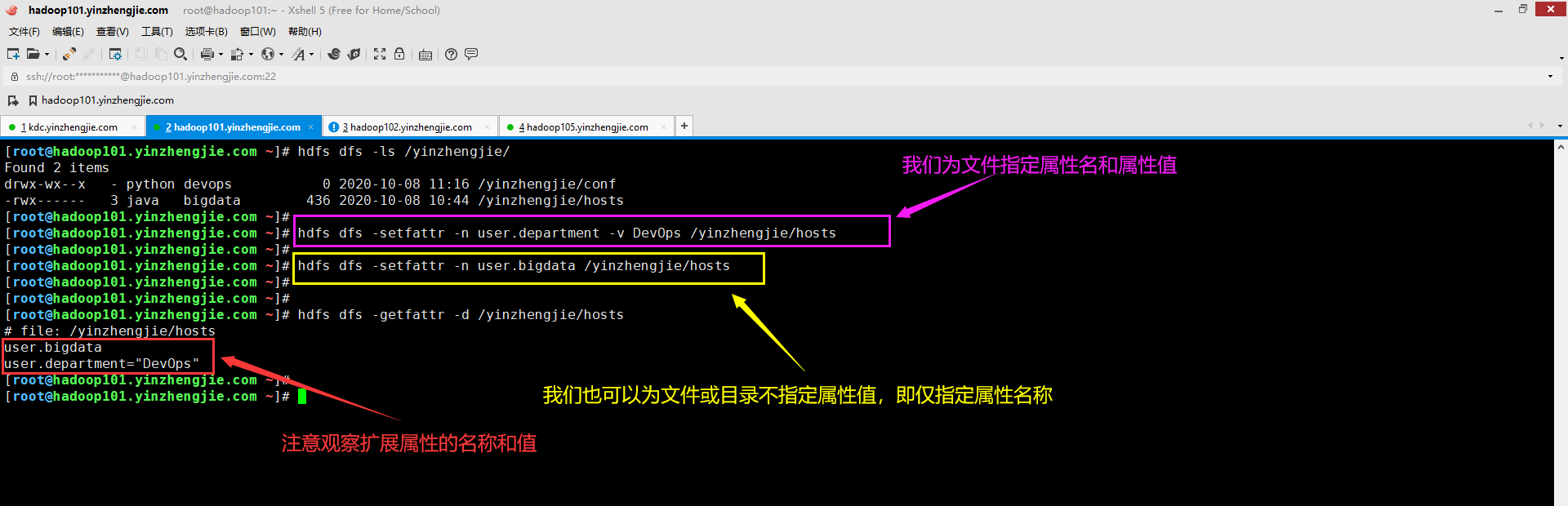

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - python devops           0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 java   bigdata        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -setfattr -n user.department -v DevOps /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -setfattr -n user.bigdata /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfattr -d /yinzhengjie/hosts
    # file: /yinzhengjie/hosts
    user.bigdata
    user.department="DevOps"
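The three value encodings described in the setfattr and getfattr help text (quoted text, a 0x hexadecimal prefix and a 0s base64 prefix) can be mimicked in Python to see what bytes a given argument stands for. The function names are made up for this sketch, and treating an unquoted, unprefixed argument as plain text is an assumption based on the "-v DevOps" example above.

```python
import base64


def decode_value(arg: str) -> bytes:
    """Interpret a '-v' argument using the three encodings from the help text."""
    if len(arg) >= 2 and arg[0] == '"' and arg[-1] == '"':
        return arg[1:-1].encode("utf-8")       # quoted text
    if arg[:2] in ("0x", "0X"):
        return bytes.fromhex(arg[2:])          # hexadecimal
    if arg[:2] in ("0s", "0S"):
        return base64.b64decode(arg[2:])       # base64
    return arg.encode("utf-8")                 # assumed: bare text


def encode_value(value: bytes, encoding: str = "text") -> str:
    """Render bytes the way '-getfattr -e <encoding>' is described to."""
    if encoding == "text":
        return '"' + value.decode("utf-8") + '"'
    if encoding == "hex":
        return "0x" + value.hex()
    if encoding == "base64":
        return "0s" + base64.b64encode(value).decode("ascii")
    raise ValueError(f"unknown encoding {encoding!r}")


if __name__ == "__main__":
    raw = decode_value("DevOps")
    for enc in ("text", "hex", "base64"):
        print(enc, encode_value(raw, enc))
```

All three renderings of "DevOps" decode back to the same bytes, which is why the choice of encoding only affects how a value is typed or displayed, not what is stored.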

3>. Removing extended attributes

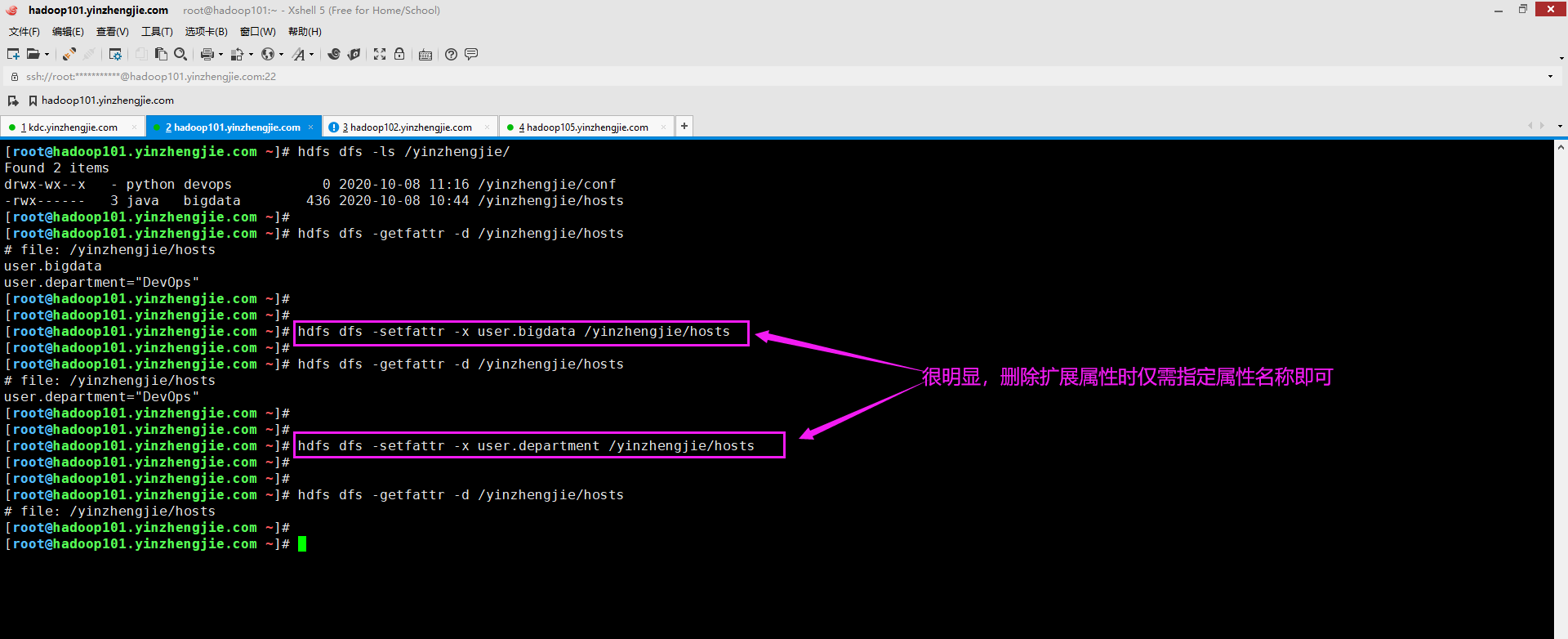

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -ls /yinzhengjie/
    Found 2 items
    drwx-wx--x   - python devops           0 2020-10-08 11:16 /yinzhengjie/conf
    -rwx------   3 java   bigdata        436 2020-10-08 10:44 /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfattr -d /yinzhengjie/hosts
    # file: /yinzhengjie/hosts
    user.bigdata
    user.department="DevOps"

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -setfattr -x user.bigdata /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfattr -d /yinzhengjie/hosts
    # file: /yinzhengjie/hosts
    user.department="DevOps"

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -setfattr -x user.department /yinzhengjie/hosts

    [root@hadoop101.yinzhengjie.com ~]# hdfs dfs -getfattr -d /yinzhengjie/hosts
    # file: /yinzhengjie/hosts
