Hadoop Cluster: Pseudo-Distributed Mode (Pseudo-Distributed Operation)


Author: Yin Zhengjie (尹正杰)

Copyright notice: original work. Reproduction is not permitted; violations will be pursued legally.

Hadoop can also run on a single node in pseudo-distributed mode, where each Hadoop daemon runs in its own Java process.

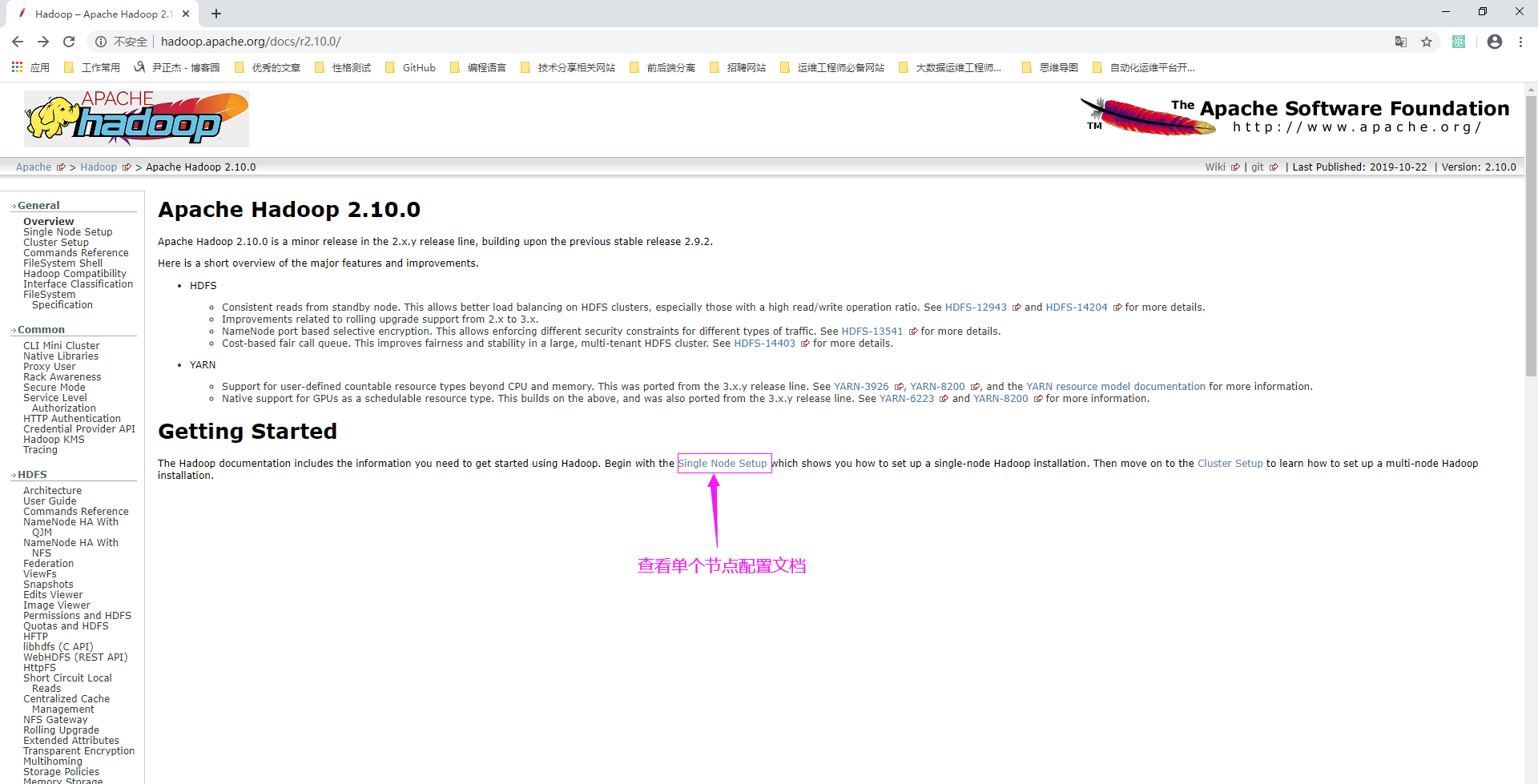

I. Consult the official documentation

1>. Read the official documentation for your Apache Hadoop version

Recommended reading: http://hadoop.apache.org/docs/r2.10.0/

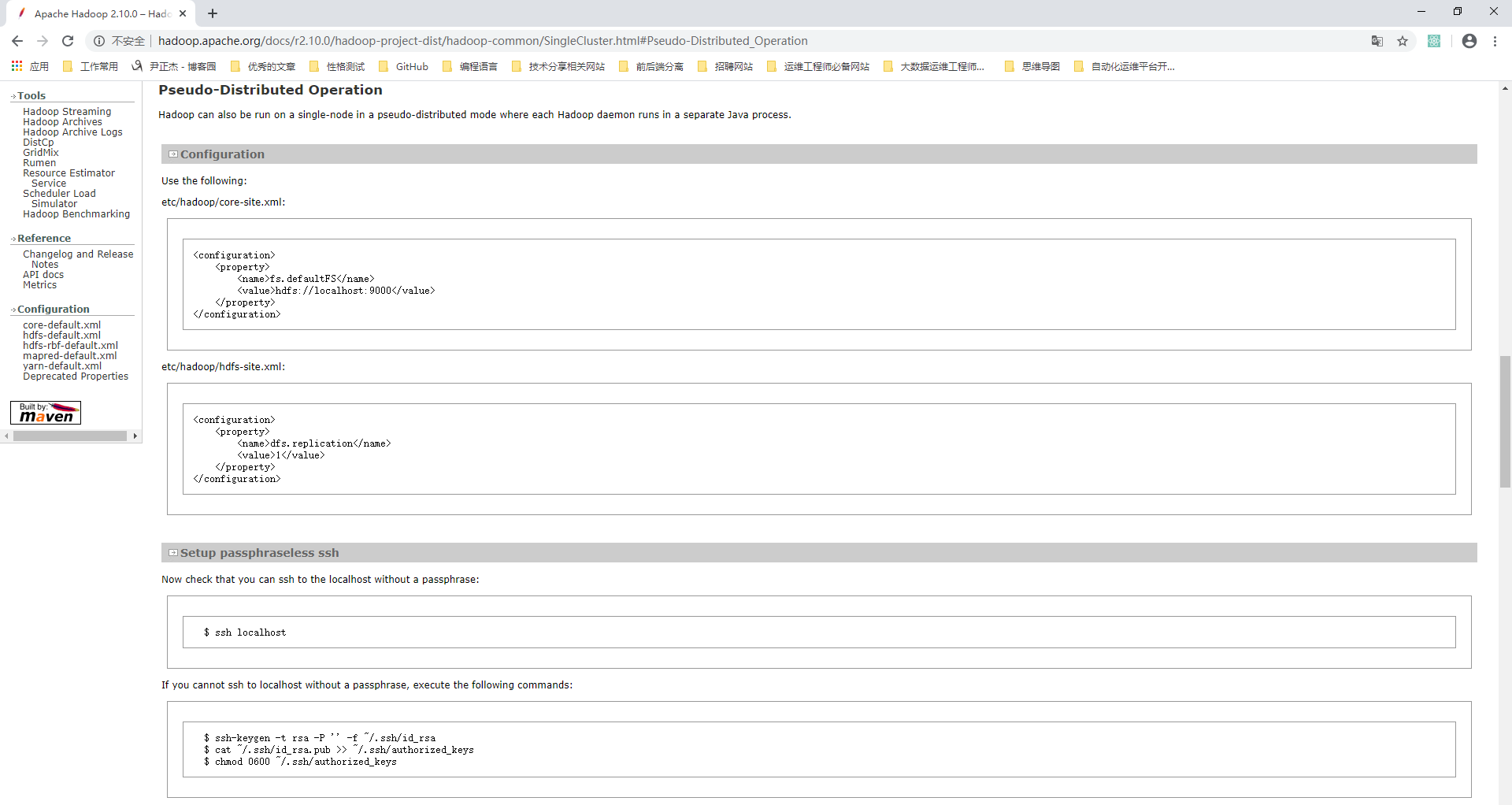

2>. Read the Hadoop guide to setting up a single-node cluster

Recommended reading: http://hadoop.apache.org/docs/r2.10.0/hadoop-project-dist/hadoop-common/SingleCluster.html#Pseudo-Distributed_Operation

3>. Hadoop configuration files

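Hadoop configuration files come in two kinds: default configuration files and custom (site) configuration files. You only need to touch a custom configuration file when you want to change a default value, by setting the corresponding property there.

Default configuration files (a reference copy of each ships under ${HADOOP_HOME}/share/doc; the copies Hadoop actually loads at runtime are packaged inside the corresponding Hadoop jars):

core-default.xml: stored under "${HADOOP_HOME}/share/doc/hadoop/hadoop-project-dist/hadoop-common/". Recommended reading: http://hadoop.apache.org/docs/r2.10.0/hadoop-project-dist/hadoop-common/core-default.xml

hdfs-default.xml: stored under "${HADOOP_HOME}/share/doc/hadoop/hadoop-project-dist/hadoop-hdfs/". Recommended reading: http://hadoop.apache.org/docs/r2.10.0/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml

yarn-default.xml: stored under "${HADOOP_HOME}/share/doc/hadoop/hadoop-yarn/hadoop-yarn-common/". Recommended reading: http://hadoop.apache.org/docs/r2.10.0/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

mapred-default.xml: stored under "${HADOOP_HOME}/share/doc/hadoop/hadoop-mapreduce-client/hadoop-mapreduce-client-core/". Recommended reading: http://hadoop.apache.org/docs/r2.10.0/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-default.xml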

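Custom configuration files: core-site.xml, hdfs-site.xml, yarn-site.xml and mapred-site.xml are stored by default in "$HADOOP_HOME/etc/hadoop". Adjust them to your project's needs; any value set there overrides the corresponding value in the default files above. Recommended reading (deprecated property names and their replacements): http://hadoop.apache.org/docs/r2.10.0/hadoop-project-dist/hadoop-common/DeprecatedProperties.html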

[root@hadoop101.yinzhengjie.org.cn ~]# ll ${HADOOP_HOME}/share/doc/hadoop/hadoop-project-dist/hadoop-common/core-default.xml

-rw-r--r-- 1 root root 104408 Mar 12 03:27 /yinzhengjie/softwares/hadoop-2.10.0/share/doc/hadoop/hadoop-project-dist/hadoop-common/core-default.xml

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# cat ${HADOOP_HOME}/share/doc/hadoop/hadoop-project-dist/hadoop-common/core-default.xml | wc -l

3103

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ll ${HADOOP_HOME}/share/doc/hadoop/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml

-rw-r--r-- 1 root root 160516 Mar 12 03:27 /yinzhengjie/softwares/hadoop-2.10.0/share/doc/hadoop/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# cat ${HADOOP_HOME}/share/doc/hadoop/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml | wc -l

4667

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ll ${HADOOP_HOME}/share/doc/hadoop/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

-rw-r--r-- 1 root root 132138 Mar 12 03:27 /yinzhengjie/softwares/hadoop-2.10.0/share/doc/hadoop/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# cat ${HADOOP_HOME}/share/doc/hadoop/hadoop-yarn/hadoop-yarn-common/yarn-default.xml | wc -l

3580

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ll ${HADOOP_HOME}/share/doc/hadoop/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-default.xml

-rw-r--r-- 1 root root 74650 Mar 12 03:27 /yinzhengjie/softwares/hadoop-2.10.0/share/doc/hadoop/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-default.xml

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# cat ${HADOOP_HOME}/share/doc/hadoop/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-default.xml | wc -l

2045

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]#
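The override rule (site value wins, otherwise the default applies) can be made concrete with a small lookup helper. This is a minimal bash sketch, not how Hadoop itself resolves configuration: the names `get_prop` and `effective_prop` are hypothetical, it assumes each `<property>` lists `<name>` before `<value>`, and it ignores `<final>`, variable expansion such as `${hadoop.tmp.dir}`, XInclude, and properties that are commented out. On a working installation, `hdfs getconf -confKey <key>` asks Hadoop itself for the effective value.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical helpers): resolve a Hadoop property so that a
# *-site.xml value overrides the *-default.xml value.
# Assumes <name> comes before <value> inside each <property>; ignores <final>,
# ${...} variable expansion, XInclude and commented-out properties.

# get_prop FILE KEY -> prints KEY's value from FILE (prints nothing if absent)
get_prop() {
  local file=$1 key=$2
  [ -r "$file" ] || return 1
  # Drop newlines, then start a new line at every "<", so each tag becomes a
  # line such as "name>fs.defaultFS" or "value>hdfs://host:9000".
  tr -d '\r\n' < "$file" | tr '<' '\n' | awk -v key="$key" '
    index($0, "name>") == 1 && substr($0, 6) == key { found = 1; next }
    found && index($0, "value>") == 1 {
      v = substr($0, 7)
      gsub(/^[ \t]+|[ \t]+$/, "", v)              # trim surrounding blanks
      print v
      exit
    }
    index($0, "/property>") == 1 { found = 0 }    # stay inside one <property>
  '
}

# effective_prop SITE_XML DEFAULT_XML KEY -> site value if set, else default
effective_prop() {
  local v
  v=$(get_prop "$1" "$3")
  if [ -n "$v" ]; then
    printf '%s\n' "$v"
  else
    get_prop "$2" "$3"
  fi
}
```

For example, `effective_prop "$HADOOP_HOME/etc/hadoop/hdfs-site.xml" "$HADOOP_HOME/share/doc/hadoop/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml" dfs.replication` should fall back to 3 from hdfs-default.xml until dfs.replication is set in hdfs-site.xml. An intentionally empty site value is treated as unset in this sketch.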

II. Hands-on: configuring HDFS in pseudo-distributed mode

1>. Edit core-site.xml

[root@hadoop101.yinzhengjie.org.cn ~]# vim /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/core-site.xml

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. See accompanying LICENSE file. -->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- RPC address of the HDFS NameNode -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop101.yinzhengjie.org.cn:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/yinzhengjie/softwares/hadoop-2.10.0/data/tmp</value>

<description>Directory where Hadoop stores the files it generates at runtime</description>

</property>

</configuration>

[root@hadoop101.yinzhengjie.org.cn ~]#

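What core-site.xml is for: it defines system-wide parameters such as the HDFS URL, Hadoop's temporary directory, and the settings used by rack-aware clusters. Values set here override the defaults in core-default.xml.

fs.defaultFS: declares the NameNode address, i.e. the default file system (HDFS) that clients talk to.

hadoop.tmp.dir: declares Hadoop's working directory; unless configured otherwise, the HDFS storage directories are created beneath it.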
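What the two settings above resolve to can be sketched in bash: the NameNode RPC endpoint comes from fs.defaultFS, and the NameNode and DataNode storage directories default to subdirectories of hadoop.tmp.dir (which is why dfs/name and dfs/data appear under data/tmp in the listings that follow). The function names are hypothetical, and 8020 is assumed as the fallback port because it is the Hadoop 2.x default NameNode RPC port.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): what the two core-site.xml settings imply.

# parse_default_fs URI -> "<host> <port>" of the NameNode RPC endpoint.
# Falls back to 8020 (assumed Hadoop 2.x default RPC port) when no port is given.
parse_default_fs() {
  local uri=$1 rest host port
  case $uri in
    hdfs://*) ;;
    *) echo "not an hdfs:// URI: $uri" >&2; return 1 ;;
  esac
  rest=${uri#hdfs://}   # hadoop101.yinzhengjie.org.cn:9000[/path]
  rest=${rest%%/*}      # drop any path component
  host=${rest%%:*}
  if [ "$host" = "$rest" ]; then port=8020; else port=${rest##*:}; fi
  printf '%s %s\n' "$host" "$port"
}

# default_hdfs_dirs TMP_DIR -> storage directories hdfs-default.xml derives from
# hadoop.tmp.dir when dfs.namenode.name.dir / dfs.datanode.data.dir are unset.
# (The real defaults are file:// URIs; the scheme is omitted here.)
default_hdfs_dirs() {
  local tmp=${1%/}
  printf 'dfs.namenode.name.dir=%s/dfs/name\n' "$tmp"
  printf 'dfs.datanode.data.dir=%s/dfs/data\n' "$tmp"
}

parse_default_fs hdfs://hadoop101.yinzhengjie.org.cn:9000
default_hdfs_dirs /yinzhengjie/softwares/hadoop-2.10.0/data/tmp
```

The port printed here is the one the NameNode later listens on (9000 in the `ss -ntl` output of step 4).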

2>. Edit hdfs-site.xml

[root@hadoop101.yinzhengjie.org.cn ~]# vim /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/hdfs-site.xml

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. See accompanying LICENSE file. -->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- Number of HDFS block replicas -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

[root@hadoop101.yinzhengjie.org.cn ~]#

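What hdfs-site.xml is for: HDFS settings such as the number of replicas per file, the block size, and whether permission checking is enforced. Values set here override the defaults in hdfs-default.xml.

dfs.replication: for availability and redundancy, HDFS keeps several copies (replicas) of each data block on different nodes, 3 by default. A pseudo-distributed setup has only one node, so a single replica is enough, and that is what dfs.replication sets here. This redundancy is implemented in software by HDFS itself.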

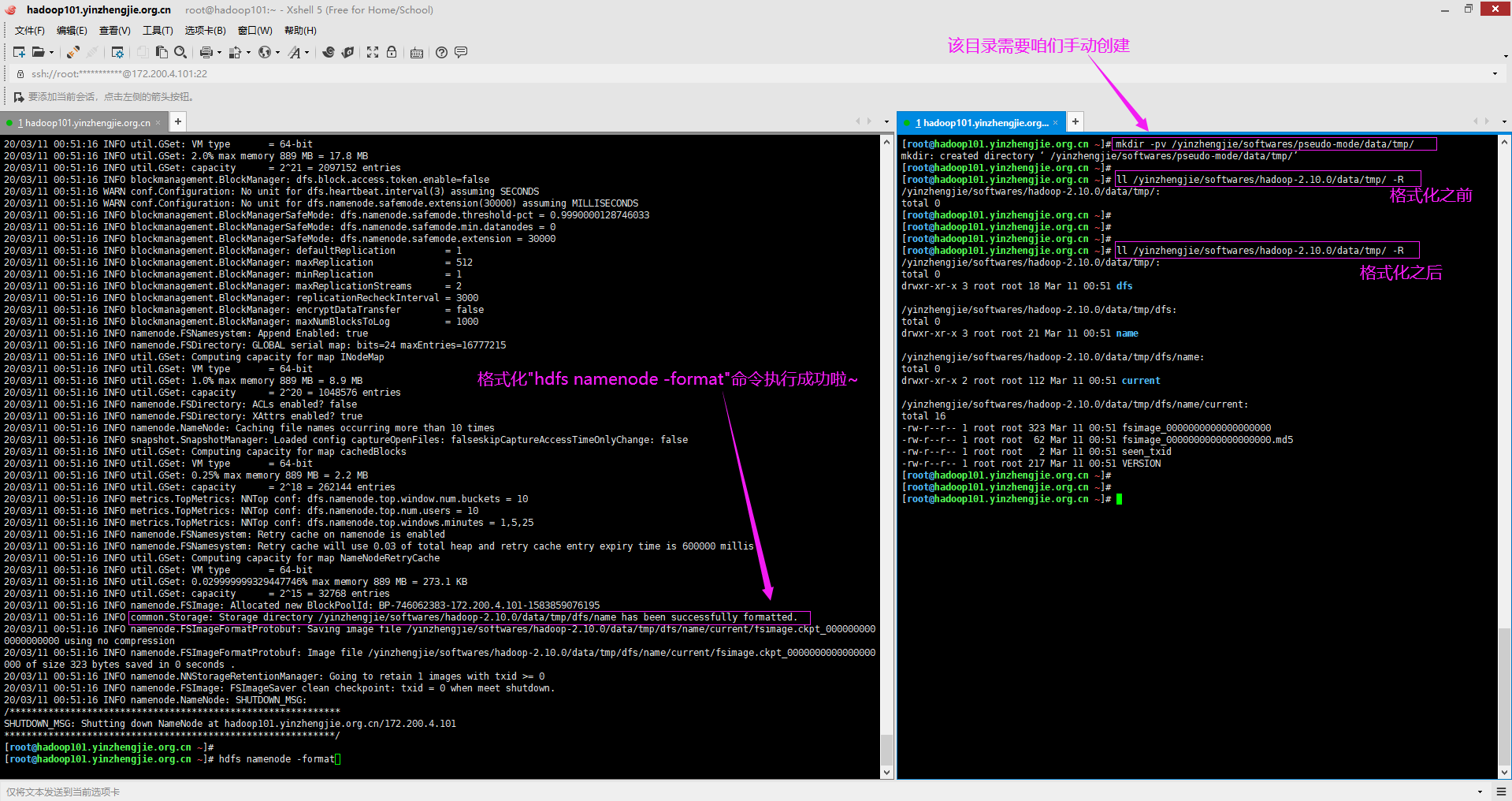
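A worked example of what dfs.replication, together with the block size, means for disk usage, as a minimal bash sketch. The helper name is hypothetical, the 128 MiB block size (134217728 bytes) is assumed to be the Hadoop 2.x dfs.blocksize default, and 6881 bytes is the size of the /messages file uploaded later in this walkthrough.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): storage implied by dfs.replication and dfs.blocksize.
# hdfs_footprint SIZE_BYTES REPLICATION [BLOCK_SIZE] -> "<blocks> <raw_bytes>"
hdfs_footprint() {
  local size=$1 repl=$2 bs=${3:-134217728}   # assumed 128 MiB Hadoop 2.x default
  local blocks=$(( (size + bs - 1) / bs ))     # ceil(size / block size)
  # The last block is not padded to the block size, so the raw bytes stored
  # across all DataNodes are size * replication (checksum .meta files ignored).
  printf '%s %s\n' "$blocks" "$(( size * repl ))"
}

hdfs_footprint 6881 1   # /messages with dfs.replication=1
hdfs_footprint 6881 3   # the same file with the default replication of 3
```

The design point is the second number: a small file does not occupy a whole 128 MiB block on disk, but every replica is a full copy of its data.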

3>. Format the NameNode

[root@hadoop101.yinzhengjie.org.cn ~]# mkdir -pv /yinzhengjie/softwares/pseudo-mode/data/tmp/ #Create the runtime data directory by hand; it should be the hadoop.tmp.dir path set in core-site.xml

mkdir: created directory ‘/yinzhengjie/softwares/pseudo-mode/data/tmp/’

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ll /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/ -R

/yinzhengjie/softwares/hadoop-2.10.0/data/tmp/:

total 0

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs namenode -format #Run the format command

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ll /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/ -R

/yinzhengjie/softwares/hadoop-2.10.0/data/tmp/:

total 0

drwxr-xr-x 3 root root 18 Mar 11 00:51 dfs

/yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs:

total 0

drwxr-xr-x 3 root root 21 Mar 11 00:51 name

/yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/name:

total 0

drwxr-xr-x 2 root root 112 Mar 11 00:51 current

/yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/name/current:

total 16

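-rw-r--r-- 1 root root 323 Mar 11 00:51 fsimage_0000000000000000000 #A persistent checkpoint of the HDFS file-system metadata, containing the serialized information of every directory and file inode in the file system

-rw-r--r-- 1 root root 62 Mar 11 00:51 fsimage_0000000000000000000.md5 #MD5 checksum of the corresponding image file

-rw-r--r-- 1 root root 2 Mar 11 00:51 seen_txid #Holds a single number: the last transaction ID the NameNode has recorded, which corresponds to the latest edits_ file

-rw-r--r-- 1 root root 217 Mar 11 00:51 VERSION #Describes the HDFS storage version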

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]#

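[root@hadoop101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/name/current/VERSION

#Wed Mar 11 00:51:16 CST 2020

namespaceID=1733780700

clusterID=CID-50dd1d9b-c90a-4928-a554-d2b4e777cfcb

cTime=1583859076195

storageType=NAME_NODE

blockpoolID=BP-746062383-172.200.4.101-1583859076195

layoutVersion=-63

[root@hadoop101.yinzhengjie.org.cn ~]#

Note:

namespaceID: unique identifier of the file-system namespace, created when the NameNode is first formatted.

clusterID: unique identifier assigned to the HDFS cluster as a whole. It matters for HDFS federation, where one cluster consists of several namespaces, each managed by its own NameNode.

cTime: creation time of the NameNode storage. In the output above it is the format time in milliseconds since the epoch (1583859076195, i.e. Wed Mar 11 00:51:16 CST 2020, matching the header line); after a file-system upgrade it is updated to a new timestamp.

storageType: indicates that this storage directory holds NameNode data structures.

blockpoolID: unique identifier of the block pool; a block pool contains all the blocks of the files in the namespace managed by one NameNode.

layoutVersion: a negative integer describing the version of HDFS's persistent data structures (the layout). It is unrelated to the Hadoop release number.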
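VERSION is a plain key=value (Java properties) file, so its fields are easy to read from the shell. A minimal bash sketch: `version_get` and `bp_parts` are hypothetical helpers, and `bp_parts` assumes the BP-<random>-<ip>-<milliseconds> shape of the blockpoolID shown above. It makes visible that the last part of the blockpoolID is the same timestamp as cTime.

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical helpers) for reading HDFS VERSION files.

# version_get FILE KEY -> value of KEY; comment lines starting with "#" never match
version_get() {
  awk -F= -v k="$2" '$1 == k { print substr($0, length(k) + 2); exit }' "$1"
}

# bp_parts BLOCKPOOL_ID -> "<random> <namenode_ip> <creation_ms>"
# Assumes the BP-<random>-<ip>-<milliseconds> shape seen in the output above.
bp_parts() {
  local bp=${1#BP-}       # 746062383-172.200.4.101-1583859076195
  local rand=${bp%%-*}    # 746062383
  local rest=${bp#*-}     # 172.200.4.101-1583859076195
  printf '%s %s %s\n' "$rand" "${rest%-*}" "${rest##*-}"
}

# Example with the paths from this walkthrough:
#   v=/yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/name/current/VERSION
#   version_get "$v" cTime                        # 1583859076195 in the output above
#   bp_parts "$(version_get "$v" blockpoolID)"    # 746062383 172.200.4.101 1583859076195
```

With GNU date, `date -d @$((1583859076195 / 1000))` turns the millisecond value into a readable time.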

4>. Start the NameNode

[root@hadoop101.yinzhengjie.org.cn ~]# jps

10701 Jps

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hadoop-daemon.sh start namenode

starting namenode, logging to /yinzhengjie/softwares/pseudo-mode/logs/hadoop-root-namenode-hadoop101.yinzhengjie.org.cn.out

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# jps

10808 Jps

10731 NameNode

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# tree /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/name/

/yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/name/

├── current

│   ├── edits_0000000000000000001-0000000000000000001 #Finalized (rolled) edit log segment

│   ├── edits_inprogress_0000000000000000002 #Edit log segment currently open for writing

│   ├── fsimage_0000000000000000000

│   ├── fsimage_0000000000000000000.md5

│   ├── seen_txid

│   └── VERSION

└── in_use.lock #Lock file: the NameNode locks its storage directory with it so that no other NameNode instance uses the same directory at the same time

1 directory, 7 files

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 172.200.4.101:9000 *:*

LISTEN 0 128 *:50070 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 128 :::22 :::*

[root@hadoop101.yinzhengjie.org.cn ~]#

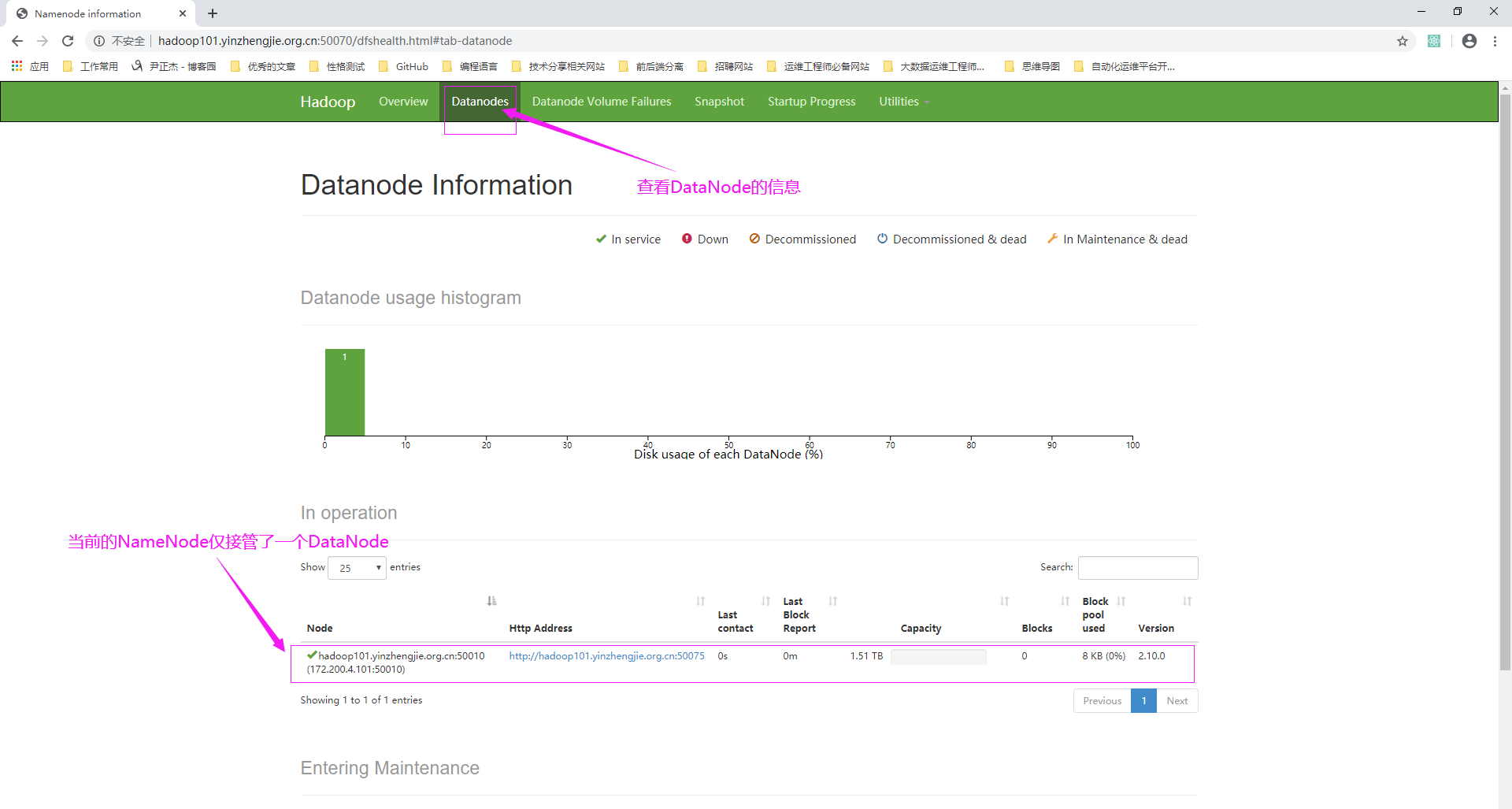
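The fsimage and edits files in the tree above are named after transaction IDs, zero-padded to 19 digits. A bash sketch (the helper names are hypothetical) that rebuilds those names: the image written at format time covers transaction 0, the finalized segment covers transactions 1 through 1, and the in-progress segment starts at transaction 2.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): NameNode metadata file names embed
# transaction IDs zero-padded to 19 digits.

fsimage_name()    { printf 'fsimage_%019d\n' "$1"; }           # checkpoint up to txid $1
edits_name()      { printf 'edits_%019d-%019d\n' "$1" "$2"; }   # finalized segment $1..$2
inprogress_name() { printf 'edits_inprogress_%019d\n' "$1"; }   # open segment starting at $1

fsimage_name 0      # image written by "hdfs namenode -format"
edits_name 1 1      # segment already rolled
inprogress_name 2   # segment the running NameNode is writing to
```

To look inside these files, Hadoop ships the offline viewers `hdfs oiv` (for fsimage files) and `hdfs oev` (for edits files).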

5>. Start the DataNode

[root@hadoop101.yinzhengjie.org.cn ~]# jps

10832 Jps

10731 NameNode

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hadoop-daemon.sh start datanode

starting datanode, logging to /yinzhengjie/softwares/pseudo-mode/logs/hadoop-root-datanode-hadoop101.yinzhengjie.org.cn.out

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# jps

10857 DataNode

10731 NameNode

10940 Jps

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ll /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/data/current/

total 4

drwx------ 4 root root 54 Mar 11 01:34 BP-746062383-172.200.4.101-1583859076195

-rw-r--r-- 1 root root 229 Mar 11 01:34 VERSION

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ll /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/data/current/BP-746062383-172.200.4.101-1583859076195/current/

total 4

drwxr-xr-x 2 root root 6 Mar 11 01:34 finalized

drwxr-xr-x 2 root root 6 Mar 11 01:34 rbw

-rw-r--r-- 1 root root 144 Mar 11 01:34 VERSION

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/data/current/VERSION

#Wed Mar 11 01:34:46 CST 2020

storageID=DS-3676d3ef-2dec-4e4e-b461-078884bf2251

clusterID=CID-50dd1d9b-c90a-4928-a554-d2b4e777cfcb

cTime=0

datanodeUuid=ac57c3e3-a212-45a7-a541-1792e84725fa

storageType=DATA_NODE

layoutVersion=-57

[root@hadoop101.yinzhengjie.org.cn ~]#

Note:

storageID: identifies one storage directory of the DataNode. A DataNode can be configured with several storage directories, and each one has its own storage ID.

clusterID: the cluster ID, globally unique; it is the same value as in the NameNode's VERSION file.

cTime: creation time of the DataNode storage. It is 0 for freshly formatted storage, as above, and is updated to a new timestamp after a file-system upgrade.

datanodeUuid: unique identifier of the DataNode.

storageType: the storage type (DATA_NODE here).

layoutVersion: a negative integer; it normally changes only when HDFS adds new features.

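[root@hadoop101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/data/current/BP-746062383-172.200.4.101-1583859076195/current/VERSION

#Wed Mar 11 01:34:46 CST 2020

namespaceID=1733780700

cTime=1583859076195

blockpoolID=BP-746062383-172.200.4.101-1583859076195

layoutVersion=-57

[root@hadoop101.yinzhengjie.org.cn ~]#

Note:

namespaceID: unique identifier of the file-system namespace, created when the NameNode is first formatted (the same value as in the NameNode's VERSION file).

cTime: creation time of the namespace storage; here it carries the NameNode's format time, 1583859076195 milliseconds since the epoch. It is updated to a new timestamp after a file-system upgrade.

blockpoolID: unique identifier of the block pool; a block pool contains all the blocks of the files in the namespace managed by one NameNode.

layoutVersion: a negative integer describing the version of HDFS's persistent data structures (the layout). It is unrelated to the Hadoop release number, and the DataNode uses its own numbering (-57 here, versus -63 for the NameNode).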

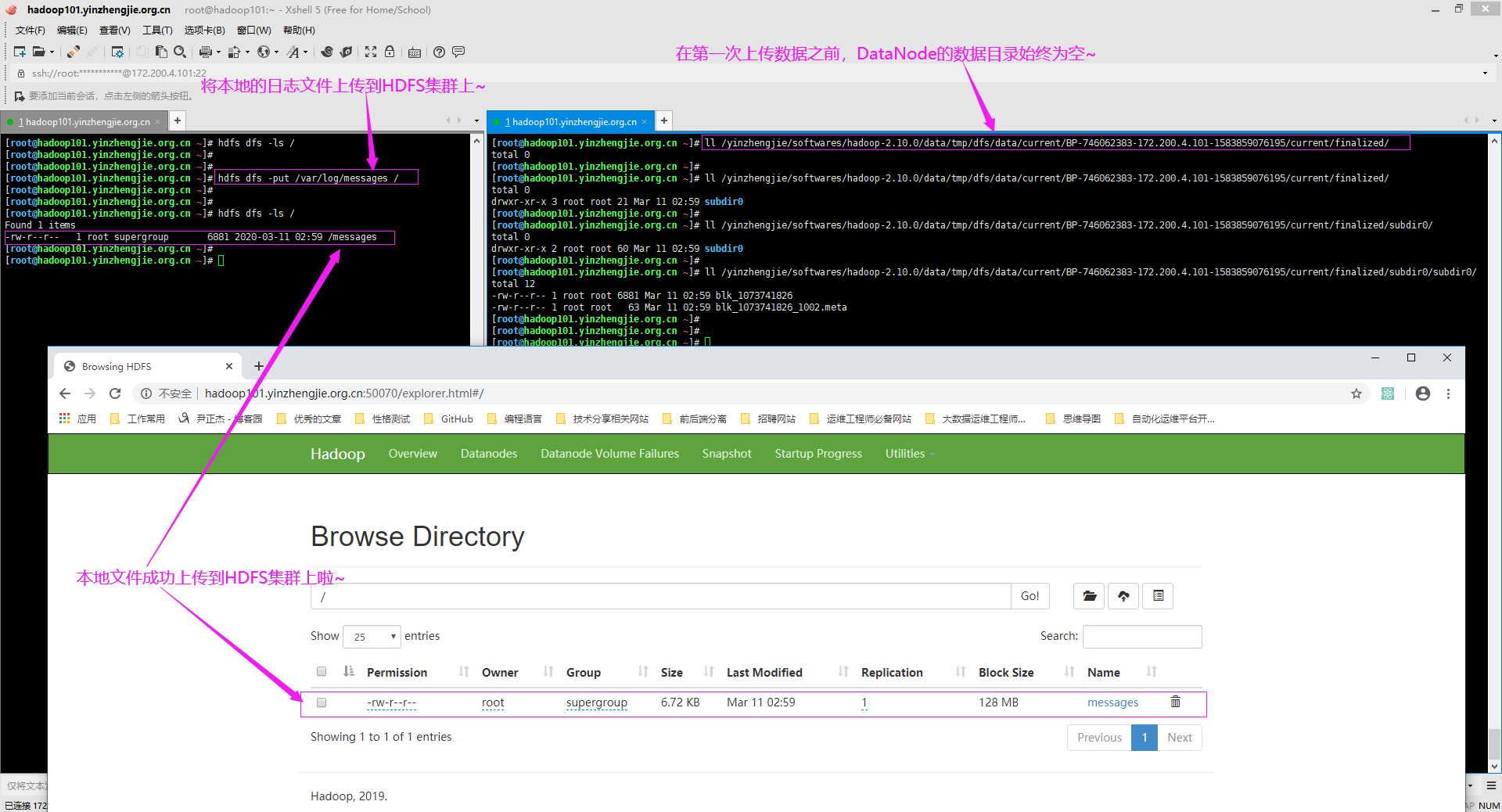

6>. Upload a local file to the HDFS cluster

[root@hadoop101.yinzhengjie.org.cn ~]# ll /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/data/current/BP-746062383-172.200.4.101-1583859076195/current/finalized/

total 0

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -put /var/log/messages /

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /

Found 1 items

-rw-r--r-- 1 root supergroup 6881 2020-03-11 02:59 /messages

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ll /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/data/current/BP-746062383-172.200.4.101-1583859076195/current/finalized/

total 0

drwxr-xr-x 3 root root 21 Mar 11 02:59 subdir0

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ll /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/data/current/BP-746062383-172.200.4.101-1583859076195/current/finalized/subdir0/

total 0

drwxr-xr-x 2 root root 60 Mar 11 02:59 subdir0

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ll /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/data/current/BP-746062383-172.200.4.101-1583859076195/current/finalized/subdir0/subdir0/

total 12

-rw-r--r-- 1 root root 6881 Mar 11 02:59 blk_1073741826

-rw-r--r-- 1 root root 63 Mar 11 02:59 blk_1073741826_1002.meta

[root@hadoop101.yinzhengjie.org.cn ~]#
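The two-level subdir0/subdir0 path is not arbitrary: with the block-ID-based DataNode layout, the directory is derived from bits of the block ID. A bash sketch, assuming the 32x32 variant of that layout (`(id >> 16) & 0x1F` for the first level, `(id >> 8) & 0x1F` for the second), which is what DataNode layoutVersion -57 in the VERSION file above is assumed to indicate; the helper names are hypothetical. The .meta file is named blk_<block ID>_<generation stamp>.meta.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers), assuming the 32x32 block-ID-based layout:
#   finalized/subdir<(id>>16)&31>/subdir<(id>>8)&31>/blk_<id>

# block_subdir BLOCK_ID -> directory of the block relative to .../current/finalized/
block_subdir() {
  local id=$1
  printf 'subdir%d/subdir%d\n' $(( (id >> 16) & 0x1F )) $(( (id >> 8) & 0x1F ))
}

# meta_parts FILE_NAME -> "<block_id> <generation_stamp>" for blk_<id>_<gs>.meta
meta_parts() {
  local n=${1##*/}   # strip any directory part
  n=${n#blk_}
  n=${n%.meta}
  printf '%s %s\n' "${n%_*}" "${n##*_}"
}

block_subdir 1073741826                # the /messages block from the listing above
meta_parts blk_1073741826_1002.meta
```

Spreading blocks over at most 32 x 32 directories keeps any single directory from growing very large on a DataNode that stores millions of blocks.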

7>. Note

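In production, format the NameNode only once. Formatting it more than once has the following consequences:

(1) Formatting a NameNode a second time wipes its metadata, which means all previously stored data is lost;

(2) After a re-format the NameNode starts normally, but the DataNode fails to start, because the DataNode's VERSION file still holds the clusterID from the previous format. Either edit the DataNode's clusterID by hand, or delete the DataNode's data and restart the DataNode.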
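The clusterID mismatch described in (2) can be checked before restarting anything. A minimal bash sketch (the function names are hypothetical) that compares the clusterID lines of the NameNode and DataNode VERSION files.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): compare the clusterID stored by the NameNode
# with the one the DataNode kept from the format it last joined.

cluster_id_of() {
  awk -F= '$1 == "clusterID" { print $2; exit }' "$1"
}

# check_cluster_id NN_VERSION DN_VERSION -> exit status 0 if both clusterIDs match
check_cluster_id() {
  local nn dn
  nn=$(cluster_id_of "$1")
  dn=$(cluster_id_of "$2")
  if [ -n "$nn" ] && [ "$nn" = "$dn" ]; then
    echo "OK: $nn"
    return 0
  fi
  echo "MISMATCH: namenode=${nn:-?} datanode=${dn:-?}" >&2
  return 1
}
```

With the paths used in this walkthrough: `check_cluster_id /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/name/current/VERSION /yinzhengjie/softwares/hadoop-2.10.0/data/tmp/dfs/data/current/VERSION`. A MISMATCH result points to the situation in (2).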

III. Hands-on: configuring YARN in pseudo-distributed mode

1>. Edit yarn-env.sh

[root@hadoop101.yinzhengjie.org.cn ~]# echo ${JAVA_HOME}

/yinzhengjie/softwares/jdk1.8.0_201

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# grep ^export /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/yarn-env.sh | grep JAVA_HOME

export JAVA_HOME=/yinzhengjie/softwares/jdk1.8.0_201

[root@hadoop101.yinzhengjie.org.cn ~]#

2>. Edit yarn-site.xml

[root@hadoop101.yinzhengjie.org.cn ~]# vim /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/yarn-site.xml

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/yarn-site.xml

<?xml version="1.0"?>

<!-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. See accompanying LICENSE file. -->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

<description>How reducers fetch map output (the MapReduce shuffle service)</description>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop101.yinzhengjie.org.cn</value>

<description>Hostname of the YARN ResourceManager</description>

</property>

</configuration>

[root@hadoop101.yinzhengjie.org.cn ~]#

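What yarn-site.xml is for: YARN settings, mainly for the ResourceManager, the NodeManagers and the scheduler. Values set here override the defaults in yarn-default.xml.

yarn.resourcemanager.hostname: hostname of the ResourceManager.

yarn.nodemanager.aux-services: auxiliary service run by each NodeManager; mapreduce_shuffle enables the MapReduce shuffle, through which reducers fetch map output.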

3>. Edit mapred-env.sh

[root@hadoop101.yinzhengjie.org.cn ~]# echo ${JAVA_HOME}

/yinzhengjie/softwares/jdk1.8.0_201

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# grep ^export /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-env.sh | grep JAVA_HOME

export JAVA_HOME=/yinzhengjie/softwares/jdk1.8.0_201

[root@hadoop101.yinzhengjie.org.cn ~]#
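The grep checks above can be turned into a reusable check that each env script points at a usable JDK. A bash sketch (the helper names are hypothetical): it takes the last active `export JAVA_HOME=` line in each script and verifies that `bin/java` under it is executable. It does not expand variables such as `${JAVA_HOME}` written literally into the file.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): verify the JAVA_HOME pinned in *-env.sh scripts.

# env_java_home FILE -> value of the last active "export JAVA_HOME=..." line
env_java_home() {
  sed -n 's/^[[:space:]]*export[[:space:]]\{1,\}JAVA_HOME=//p' "$1" | tail -n 1 | tr -d "\"'"
}

# check_java_home FILE... -> one status line per file; non-zero exit on any problem
check_java_home() {
  local f jh rc=0
  for f in "$@"; do
    jh=$(env_java_home "$f")
    if [ -z "$jh" ]; then
      echo "$f: no active 'export JAVA_HOME=' line"; rc=1
    elif [ ! -x "$jh/bin/java" ]; then
      echo "$f: $jh/bin/java is missing or not executable"; rc=1
    else
      echo "$f: OK ($jh)"
    fi
  done
  return $rc
}
```

Usage on this host: `check_java_home /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/yarn-env.sh /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-env.sh`.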

4>. Create mapred-site.xml

[root@hadoop101.yinzhengjie.org.cn ~]# cp /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-site.xml.template /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-site.xml

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# vim /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-site.xml

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.10.0/etc/hadoop/mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License. See accompanying LICENSE file. -->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

<description>Run MapReduce on YARN</description>

</property>

</configuration>

[root@hadoop101.yinzhengjie.org.cn ~]#

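What mapred-site.xml is for: MapReduce settings such as the default number of reduce tasks and the default lower and upper memory limits for tasks. Values set here override the defaults in mapred-default.xml.

mapreduce.framework.name: selects the runtime framework for MapReduce jobs. It accepts three values: local (run jobs locally; this is the default), classic (the first-generation Hadoop execution framework), and yarn (the second-generation framework). Here we use yarn, the current framework.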

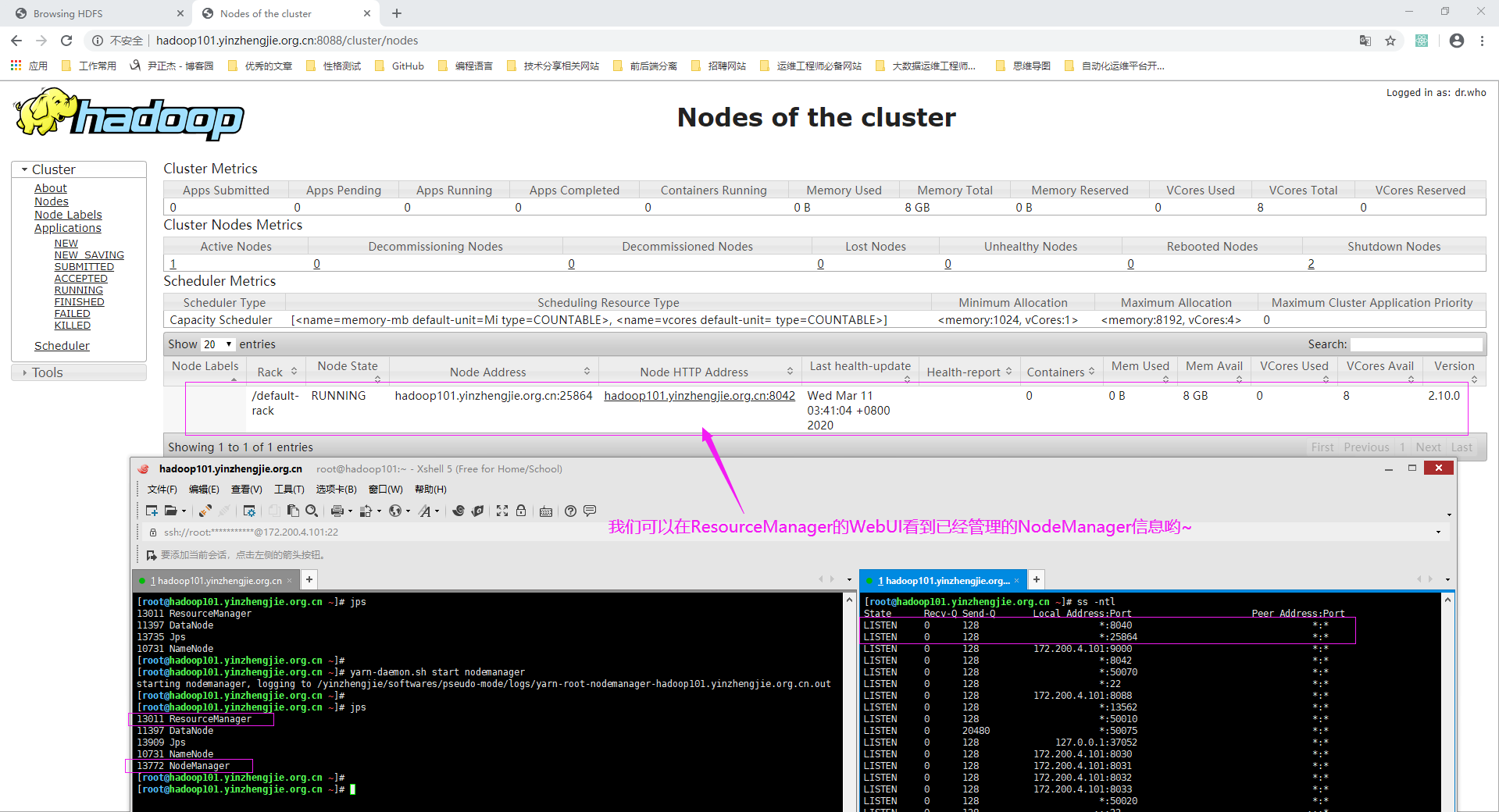

5>. Start the ResourceManager

[root@hadoop101.yinzhengjie.org.cn ~]# jps

12976 Jps

11397 DataNode

10731 NameNode

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /yinzhengjie/softwares/pseudo-mode/logs/yarn-root-resourcemanager-hadoop101.yinzhengjie.org.cn.out

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# jps

13011 ResourceManager

11397 DataNode

13240 Jps

10731 NameNode

[root@hadoop101.yinzhengjie.org.cn ~]#

6>. Start the NodeManager

[root@hadoop101.yinzhengjie.org.cn ~]# jps

13011 ResourceManager

11397 DataNode

13271 Jps

10731 NameNode

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# yarn-daemon.sh start nodemanager

starting nodemanager, logging to /yinzhengjie/softwares/pseudo-mode/logs/yarn-root-nodemanager-hadoop101.yinzhengjie.org.cn.out

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# jps

13442 Jps

13011 ResourceManager

11397 DataNode

13306 NodeManager

10731 NameNode

[root@hadoop101.yinzhengjie.org.cn ~]#

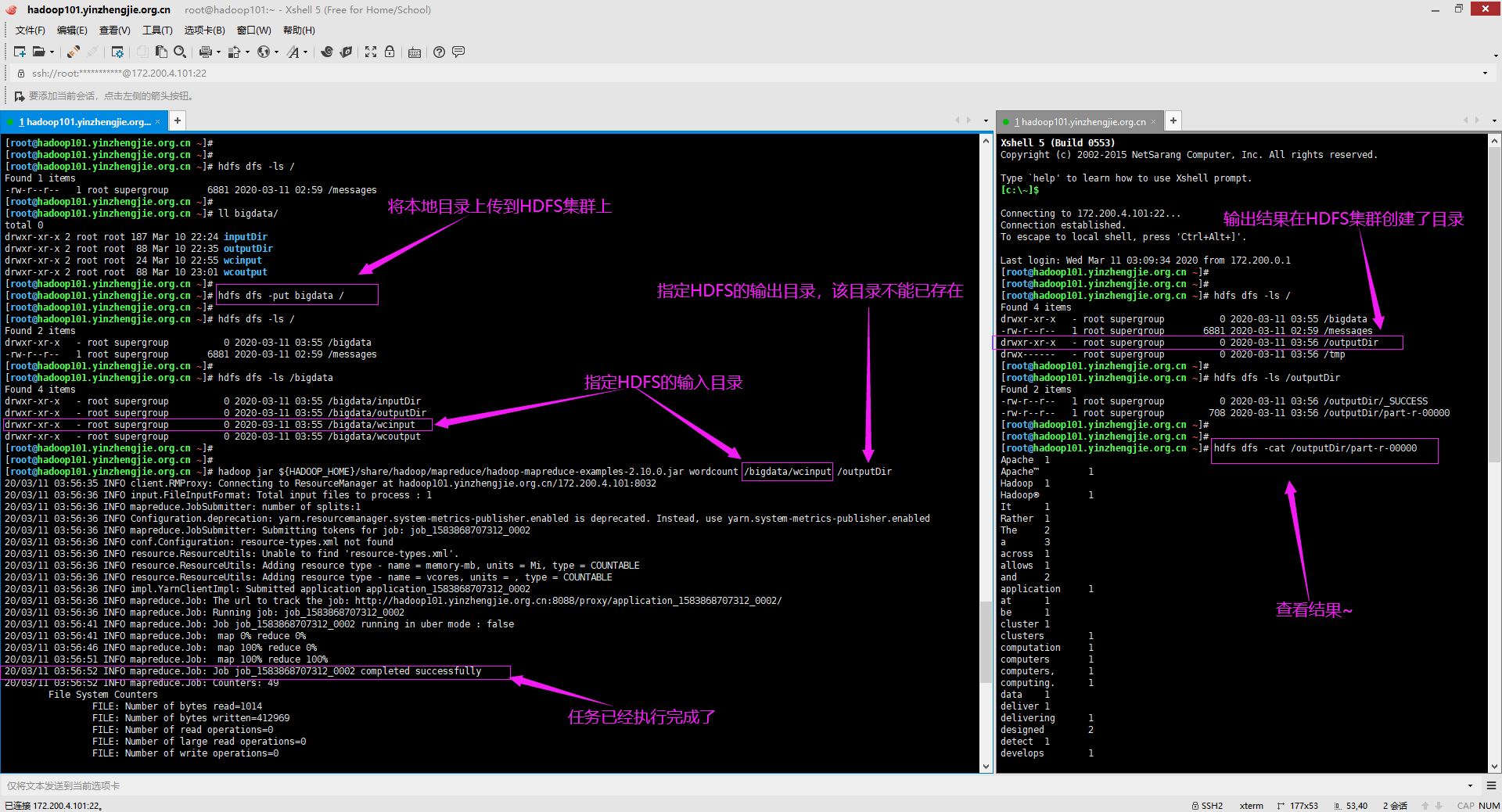
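At this point all four daemons of the pseudo-distributed cluster should appear in `jps`. A bash sketch (the function name is hypothetical) that reports which expected daemons are missing; it reads `jps` output passed as an argument, or runs `jps` itself when called without one.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): list the expected Hadoop daemons absent from jps.
# missing_daemons [JPS_OUTPUT] -> prints one missing daemon per line; exit 1 if any
missing_daemons() {
  local out d rc=0
  out=${1-$(jps)}   # only runs jps when no argument is given
  for d in NameNode DataNode ResourceManager NodeManager; do
    # jps prints "<pid> <MainClass>"; match the class name as a whole field
    if ! printf '%s\n' "$out" | awk -v d="$d" '$2 == d { f = 1 } END { exit !f }'; then
      echo "$d"
      rc=1
    fi
  done
  return $rc
}
```

Run after step 6 above, `missing_daemons` should print nothing and return 0; run before step 5, it would list ResourceManager and NodeManager.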

7>. Upload local test files to the HDFS cluster and run WordCount

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /

Found 1 items

-rw-r--r-- 1 root supergroup 6881 2020-03-11 02:59 /messages

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# ll bigdata/

total 0

drwxr-xr-x 2 root root 187 Mar 10 22:24 inputDir

drwxr-xr-x 2 root root 88 Mar 10 22:35 outputDir

drwxr-xr-x 2 root root 24 Mar 10 22:55 wcinput

drwxr-xr-x 2 root root 88 Mar 10 23:01 wcoutput

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -put bigdata /

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /

Found 2 items

drwxr-xr-x - root supergroup 0 2020-03-11 03:55 /bigdata

-rw-r--r-- 1 root supergroup 6881 2020-03-11 02:59 /messages

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /bigdata

Found 4 items

drwxr-xr-x - root supergroup 0 2020-03-11 03:55 /bigdata/inputDir

drwxr-xr-x - root supergroup 0 2020-03-11 03:55 /bigdata/outputDir

drwxr-xr-x - root supergroup 0 2020-03-11 03:55 /bigdata/wcinput

drwxr-xr-x - root supergroup 0 2020-03-11 03:55 /bigdata/wcoutput

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hadoop jar ${HADOOP_HOME}/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.10.0.jar wordcount /bigdata/wcinput /outputDir

20/03/11 03:56:35 INFO client.RMProxy: Connecting to ResourceManager at hadoop101.yinzhengjie.org.cn/172.200.4.101:8032

20/03/11 03:56:36 INFO input.FileInputFormat: Total input files to process : 1

20/03/11 03:56:36 INFO mapreduce.JobSubmitter: number of splits:1

20/03/11 03:56:36 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

20/03/11 03:56:36 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1583868707312_0002

20/03/11 03:56:36 INFO conf.Configuration: resource-types.xml not found

20/03/11 03:56:36 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

20/03/11 03:56:36 INFO resource.ResourceUtils: Adding resource type - name = memory-mb, units = Mi, type = COUNTABLE

20/03/11 03:56:36 INFO resource.ResourceUtils: Adding resource type - name = vcores, units = , type = COUNTABLE

20/03/11 03:56:36 INFO impl.YarnClientImpl: Submitted application application_1583868707312_0002

20/03/11 03:56:36 INFO mapreduce.Job: The url to track the job: http://hadoop101.yinzhengjie.org.cn:8088/proxy/application_1583868707312_0002/

20/03/11 03:56:36 INFO mapreduce.Job: Running job: job_1583868707312_0002

20/03/11 03:56:41 INFO mapreduce.Job: Job job_1583868707312_0002 running in uber mode : false

20/03/11 03:56:41 INFO mapreduce.Job: map 0% reduce 0%

20/03/11 03:56:46 INFO mapreduce.Job: map 100% reduce 0%

20/03/11 03:56:51 INFO mapreduce.Job: map 100% reduce 100%

20/03/11 03:56:52 INFO mapreduce.Job: Job job_1583868707312_0002 completed successfully

20/03/11 03:56:52 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=1014

FILE: Number of bytes written=412969

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=794

HDFS: Number of bytes written=708

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=2585

Total time spent by all reduces in occupied slots (ms)=2301

Total time spent by all map tasks (ms)=2585

Total time spent by all reduce tasks (ms)=2301

Total vcore-milliseconds taken by all map tasks=2585

Total vcore-milliseconds taken by all reduce tasks=2301

Total megabyte-milliseconds taken by all map tasks=2647040

Total megabyte-milliseconds taken by all reduce tasks=2356224

Map-Reduce Framework

Map input records=3

Map output records=99

Map output bytes=1057

Map output materialized bytes=1014

Input split bytes=132

Combine input records=99

Combine output records=75

Reduce input groups=75

Reduce shuffle bytes=1014

Reduce input records=75

Reduce output records=75

Spilled Records=150

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=206

CPU time spent (ms)=1320

Physical memory (bytes) snapshot=501587968

Virtual memory (bytes) snapshot=4330676224

Total committed heap usage (bytes)=295698432

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=662

File Output Format Counters

Bytes Written=708

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /

Found 4 items

drwxr-xr-x - root supergroup 0 2020-03-11 03:55 /bigdata

-rw-r--r-- 1 root supergroup 6881 2020-03-11 02:59 /messages

drwxr-xr-x - root supergroup 0 2020-03-11 03:56 /outputDir

drwx------ - root supergroup 0 2020-03-11 03:56 /tmp

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -ls /outputDir

Found 2 items

-rw-r--r-- 1 root supergroup 0 2020-03-11 03:56 /outputDir/_SUCCESS

-rw-r--r-- 1 root supergroup 708 2020-03-11 03:56 /outputDir/part-r-00000

[root@hadoop101.yinzhengjie.org.cn ~]#

[root@hadoop101.yinzhengjie.org.cn ~]# hdfs dfs -cat /outputDir/part-r-00000

Apache	1

Apache™	1

Hadoop	1

Hadoop®	1

It	1

Rather	1

The	2

a	3

across	1

allows	1

and	2

application	1

at	1

be	1

cluster	1

clusters	1

computation	1

computers	1

computers,	1

computing.	1

data	1

deliver	1

delivering	1

designed	2

detect	1

develops	1

distributed	2

each	2

failures	1

failures.	1

for	2

framework	1

from	1

handle	1

hardware	1

high-availability,	1

highly-available	1

is	3

itself	1

large	1

layer,	1

library	2

local	1

machines,	1

may	1

models.	1

of	6

offering	1

on	2

open-source	1

processing	1

programming	1

project	1

prone	1

reliable,	1

rely	1

scalable,	1

scale	1

servers	1

service	1

sets	1

simple	1

single	1

so	1

software	2

storage.	1

than	1

that	1

the	3

thousands	1

to	5

top	1

up	1

using	1

which	1

[root@hadoop101.yinzhengjie.org.cn ~]#

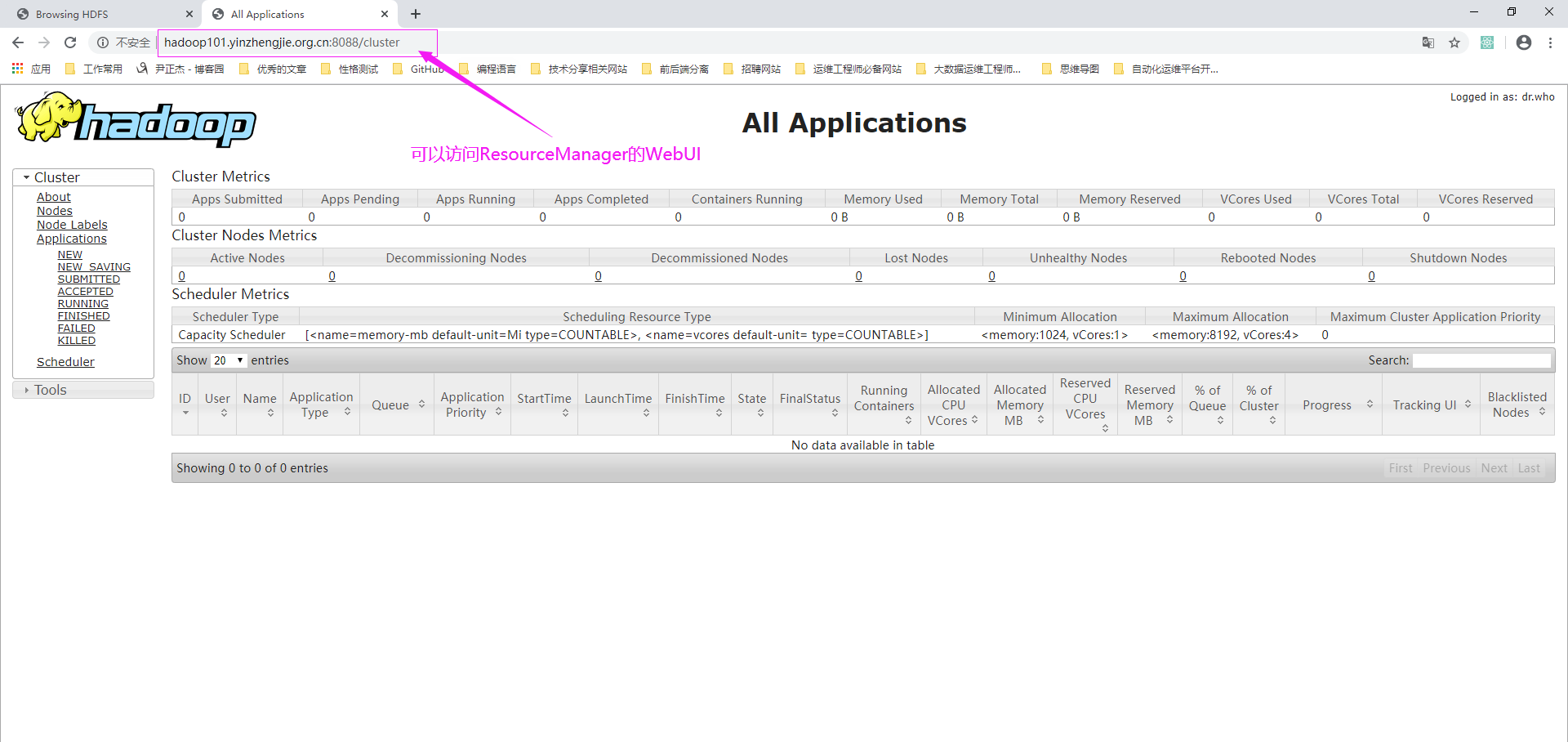

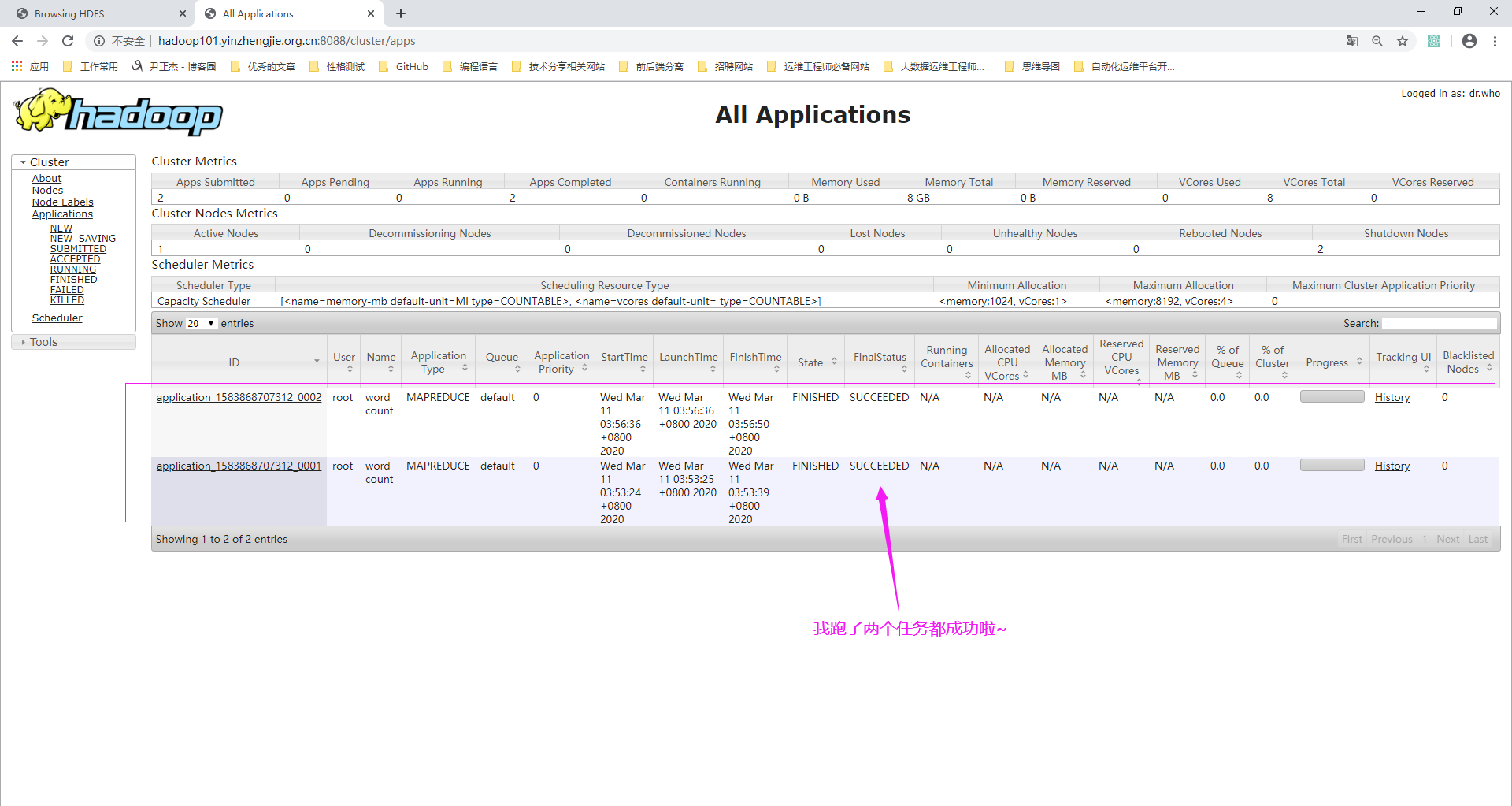
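To cross-check the job, the same count can be computed locally. A bash sketch (the function name is hypothetical): like the StringTokenizer used by the example WordCount, it splits on whitespace only, so punctuation stays attached ("computers" and "computers," are counted separately, as in the output above), and it sorts by raw byte order, which is how Hadoop orders Text keys (uppercase before lowercase).

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): local equivalent of the WordCount example.
# local_wordcount FILE... -> "word<TAB>count" lines in byte order
local_wordcount() {
  awk '{ for (i = 1; i <= NF; i++) count[$i]++ }               # split on blanks only
       END { for (w in count) printf "%s\t%d\n", w, count[w] }' "$@" |
    LC_ALL=C sort                                               # byte order, like Text keys
}
```

For example, `diff <(local_wordcount ~/bigdata/wcinput/*) <(hdfs dfs -cat /outputDir/part-r-00000)` should print nothing if both results agree.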

8>. View the Web UI

Open the ResourceManager web UI in a browser to see the MapReduce application:

http://hadoop101.yinzhengjie.org.cn:8088/cluster/apps
