Hadoop Basics: Configuring the History Server


Author: 尹正杰 (Yin Zhengjie)

Copyright notice: this is an original work. Reproduction is not permitted; violators will be held legally liable.

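Hadoop ships with a history server that lets you inspect the records of completed MapReduce jobs: how many map tasks and reduce tasks ran, when the job was submitted, when it started, when it finished, and so on. The history server is not started by default. We start it with the script bundled with Hadoop, mr-jobhistory-daemon.sh.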

I. Running a MapReduce program on YARN

1>. Start the cluster

Here xcall.sh is a helper script (not part of Hadoop) that runs the given command on every node. The line 命令执行成功 in its output means "command executed successfully".

    [yinzhengjie@s101 ~]$ xcall.sh jps
    ============= s101 jps ============
    3043 ResourceManager
    2507 NameNode
    3389 Jps
    2814 DFSZKFailoverController
    命令执行成功
    ============= s102 jps ============
    2417 DataNode
    2484 JournalNode
    2664 NodeManager
    2828 Jps
    2335 QuorumPeerMain
    命令执行成功
    ============= s103 jps ============
    2421 DataNode
    2488 JournalNode
    2666 NodeManager
    2333 QuorumPeerMain
    2830 Jps
    命令执行成功
    ============= s104 jps ============
    2657 NodeManager
    2818 Jps
    2328 QuorumPeerMain
    2410 DataNode
    2477 JournalNode
    命令执行成功
    ============= s105 jps ============
    2688 Jps
    2355 NameNode
    2424 DFSZKFailoverController
    命令执行成功
    [yinzhengjie@s101 ~]$
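As a quick sanity check, the jps listing above can be compared against the daemons a node is expected to run. The function below is only a sketch: missing_daemons is a hypothetical helper name, it reads jps output from standard input, and the expected daemon names are whatever you pass as arguments.

```shell
#!/usr/bin/env bash

# missing_daemons NAME...: read `jps` output on stdin and print every
# expected daemon name that does not appear in it.
# (Hypothetical helper, not part of Hadoop.)
missing_daemons() {
  local listing name
  listing="$(cat)"
  for name in "$@"; do
    # jps prints "<pid> <MainClass>", so compare the second column exactly
    if ! printf '%s\n' "$listing" |
        awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "$name"
    fi
  done
}
```

On s101, for example, running `jps | missing_daemons NameNode ResourceManager DFSZKFailoverController` should print nothing when all three daemons are up.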

2>. Run a MapReduce program on YARN

    [yinzhengjie@s101 ~]$ hadoop jar /soft/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount /yinzhengjie/data/ /yinzhengjie/data/output
    18/08/21 07:37:35 INFO client.RMProxy: Connecting to ResourceManager at s101/172.30.1.101:8032
    18/08/21 07:37:37 INFO input.FileInputFormat: Total input paths to process : 1
    18/08/21 07:37:37 INFO mapreduce.JobSubmitter: number of splits:1
    18/08/21 07:37:37 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1534851274873_0001
    18/08/21 07:37:37 INFO impl.YarnClientImpl: Submitted application application_1534851274873_0001
    18/08/21 07:37:37 INFO mapreduce.Job: The url to track the job: http://s101:8088/proxy/application_1534851274873_0001/
    18/08/21 07:37:37 INFO mapreduce.Job: Running job: job_1534851274873_0001
    18/08/21 07:37:55 INFO mapreduce.Job: Job job_1534851274873_0001 running in uber mode : false
    18/08/21 07:37:55 INFO mapreduce.Job: map 0% reduce 0%
    18/08/21 07:38:13 INFO mapreduce.Job: map 100% reduce 0%
    18/08/21 07:38:31 INFO mapreduce.Job: map 100% reduce 100%
    18/08/21 07:38:32 INFO mapreduce.Job: Job job_1534851274873_0001 completed successfully
    18/08/21 07:38:32 INFO mapreduce.Job: Counters: 49
        File System Counters
            FILE: Number of bytes read=4469
            FILE: Number of bytes written=249719
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=3925
            HDFS: Number of bytes written=3315
            HDFS: Number of read operations=6
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=2
        Job Counters
            Launched map tasks=1
            Launched reduce tasks=1
            Data-local map tasks=1
            Total time spent by all maps in occupied slots (ms)=15295
            Total time spent by all reduces in occupied slots (ms)=15161
            Total time spent by all map tasks (ms)=15295
            Total time spent by all reduce tasks (ms)=15161
            Total vcore-milliseconds taken by all map tasks=15295
            Total vcore-milliseconds taken by all reduce tasks=15161
            Total megabyte-milliseconds taken by all map tasks=15662080
            Total megabyte-milliseconds taken by all reduce tasks=15524864
        Map-Reduce Framework
            Map input records=104
            Map output records=497
            Map output bytes=5733
            Map output materialized bytes=4469
            Input split bytes=108
            Combine input records=497
            Combine output records=288
            Reduce input groups=288
            Reduce shuffle bytes=4469
            Reduce input records=288
            Reduce output records=288
            Spilled Records=576
            Shuffled Maps =1
            Failed Shuffles=0
            Merged Map outputs=1
            GC time elapsed (ms)=163
            CPU time spent (ms)=1430
            Physical memory (bytes) snapshot=439443456
            Virtual memory (bytes) snapshot=4216639488
            Total committed heap usage (bytes)=286785536
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=3817
        File Output Format Counters
            Bytes Written=3315
    [yinzhengjie@s101 ~]$
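The counters at the end of the job output carry the same figures the history server displays later, such as the number of map and reduce tasks. If the client output has been saved to a file, a single counter can be pulled out with a small filter. This is a sketch under assumptions: counter_value and the file name wordcount.log are hypothetical, and the filter expects one "name=value" counter per line, as the client prints them.

```shell
#!/usr/bin/env bash

# counter_value NAME: read saved job output on stdin and print the value of
# the first counter line of the form "<NAME>=<value>".
# (Hypothetical helper, not part of Hadoop.)
counter_value() {
  awk -v name="$1" '
    { line = $0; sub(/^[ \t]+/, "", line) }   # drop the indentation
    index(line, name "=") == 1 {              # the line starts with "NAME="
      print substr(line, length(name) + 2)    # everything after the "="
      exit
    }
  '
}
```

With the default logging setup the hadoop client writes this output to stderr, so it can be captured with `hadoop jar ... 2>&1 | tee wordcount.log`. Then `counter_value "Launched map tasks" < wordcount.log` prints the number of map tasks the job launched.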

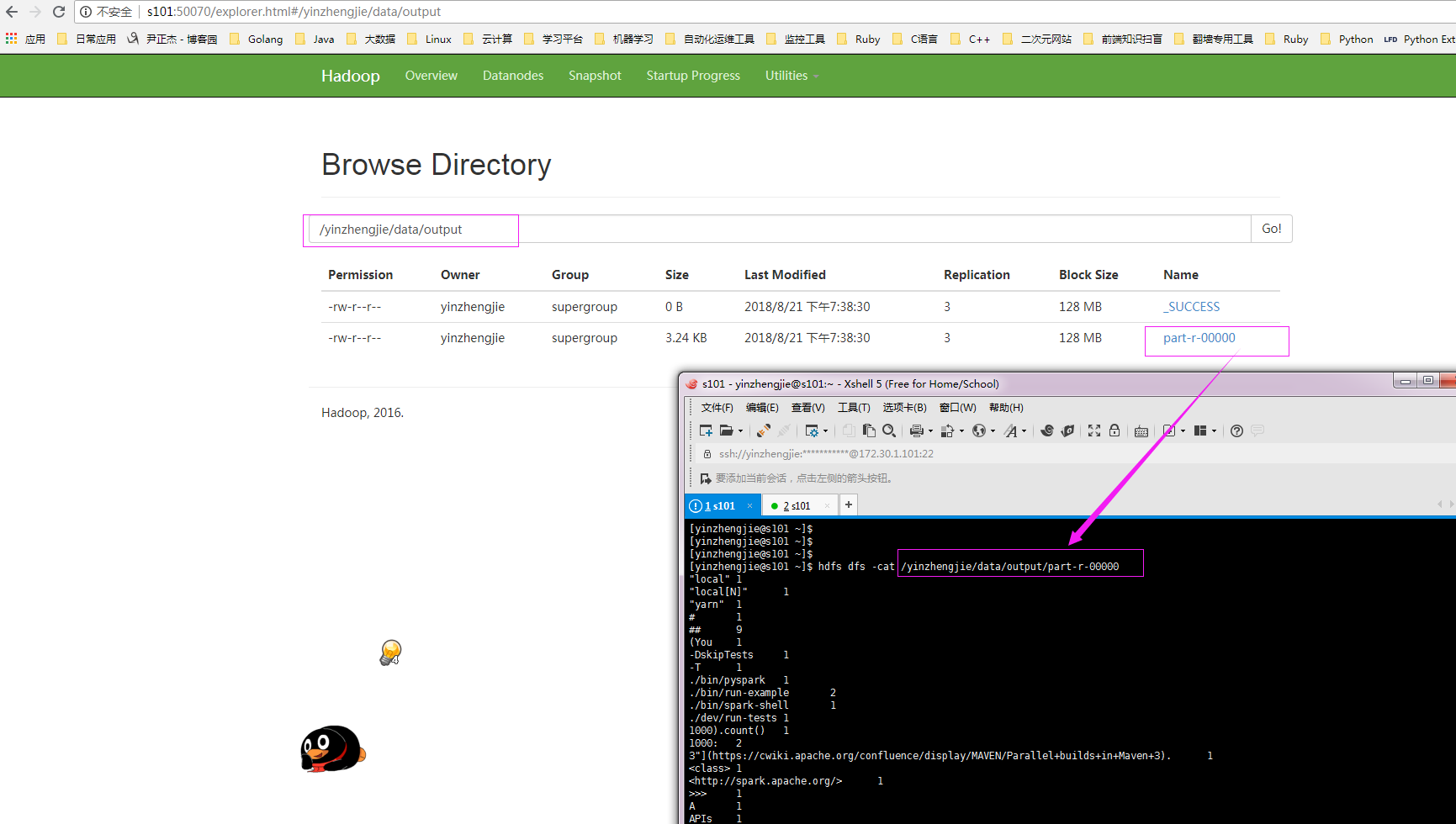

3>. Check in the HDFS web UI that the output data was written

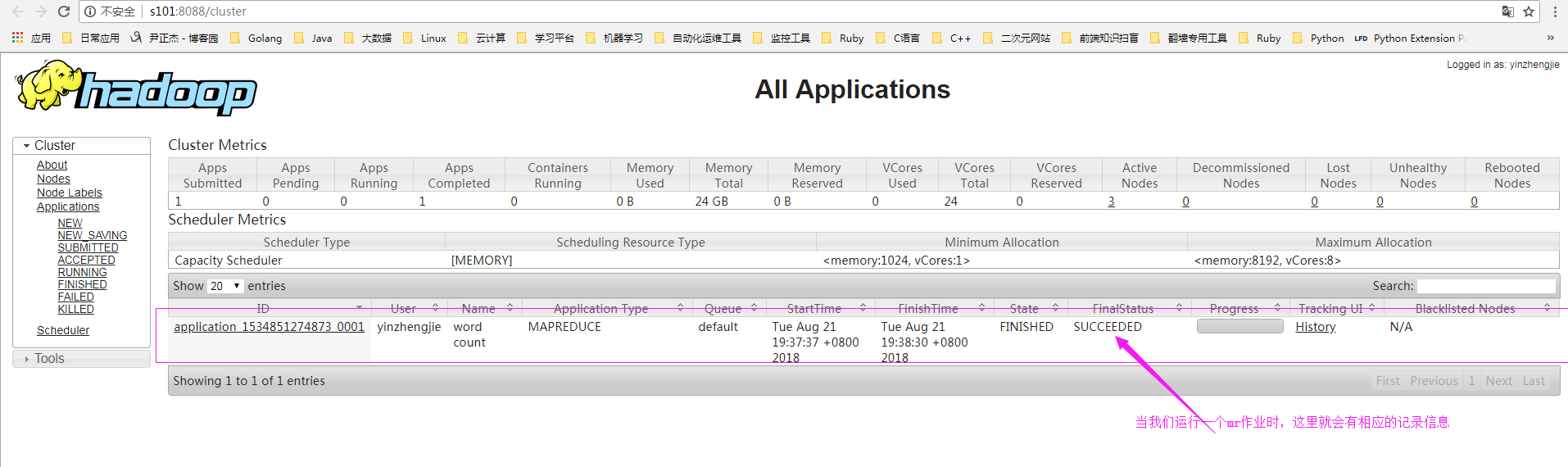

4>. View the job record in YARN

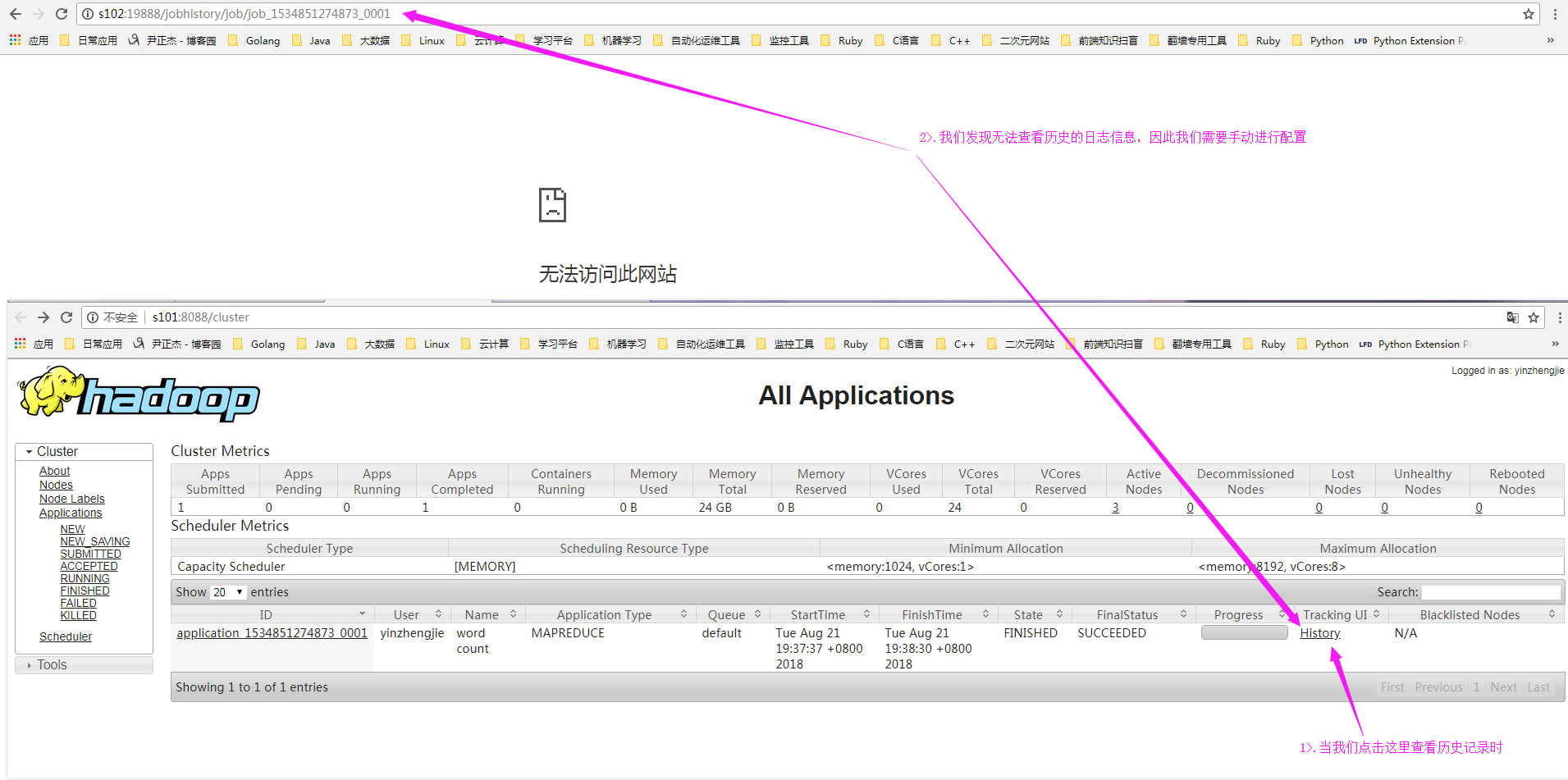

5>. Try to open the job history: the page cannot be reached, because the history server is not running yet

II. Configuring the history server for YARN

1>. Edit the "mapred-site.xml" configuration file

    [yinzhengjie@s101 ~]$ more /soft/hadoop/etc/hadoop/mapred-site.xml
    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
        <property>
            <name>mapreduce.framework.name</name>
            <value>yarn</value>
        </property>

        <property>
            <name>mapreduce.jobhistory.address</name>
            <value>s101:10020</value>
        </property>

        <property>
            <name>mapreduce.jobhistory.webapp.address</name>
            <value>s101:19888</value>
        </property>

        <property>
            <name>mapreduce.jobhistory.done-dir</name>
            <value>${yarn.app.mapreduce.am.staging-dir}/done</value>
        </property>

        <property>
            <name>mapreduce.jobhistory.intermediate-done-dir</name>
            <value>${yarn.app.mapreduce.am.staging-dir}/done_intermediate</value>
        </property>

        <property>
            <name>yarn.app.mapreduce.am.staging-dir</name>
            <value>/yinzhengjie/logs/hdfs/history</value>
        </property>

    </configuration>

    <!--
    mapred-site.xml:
        # MapReduce settings, such as the default number of reduce tasks and
        # the default upper and lower memory limits for tasks. Values set here
        # override the defaults in mapred-default.xml.

    mapreduce.framework.name:
        # The framework used to run MapReduce jobs. There are three options:
        # local, classic (the first-generation Hadoop runtime) and yarn (the
        # second-generation runtime). We use yarn, the current framework.

    mapreduce.jobhistory.address:
        # The host and port (IPC) of the job history server.

    mapreduce.jobhistory.webapp.address:
        # The host and port of the history server web UI.

    mapreduce.jobhistory.done-dir:
        # The directory that holds the records of completed jobs, managed by
        # the history server.

    mapreduce.jobhistory.intermediate-done-dir:
        # The directory where MapReduce jobs write their history files; the
        # history server later moves them into done-dir.

    yarn.app.mapreduce.am.staging-dir:
        # The staging directory used when submitting jobs. It holds
        # per-application files such as the job JAR.

    -->
    [yinzhengjie@s101 ~]$
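Hadoop expands ${property.name} references inside configuration values, which is how done-dir and intermediate-done-dir end up under the staging directory. To see which HDFS paths the settings above resolve to, the expansion can be imitated with a small script. This is a rough sketch, not Hadoop's own resolver: get_prop and resolve_prop are hypothetical helpers, they look at a single file only (no defaults from mapred-default.xml, no system properties), and they assume every name and value element sits on its own line in the file.

```shell
#!/usr/bin/env bash

# get_prop FILE NAME: print the <value> that follows <name>NAME</name>.
# Assumption: every <name> and <value> element sits on its own line.
get_prop() {
  awk -v want="$2" '
    /<name>/  { n = $0; sub(/.*<name>/, "", n);  sub(/<\/name>.*/, "", n) }
    /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                if (n == want) { print v; exit } }
  ' "$1"
}

# resolve_prop FILE NAME: print the value of NAME with every ${other.name}
# reference replaced by that property's value from the same file.
# Stops after 20 rounds so that a reference cycle cannot loop forever.
resolve_prop() {
  local file="$1" value rest ref head tail rounds=0
  value="$(get_prop "$file" "$2")"
  while [ "$rounds" -lt 20 ]; do
    case "$value" in
      *'${'*'}'*) ;;          # still contains a ${...} reference
      *) break ;;
    esac
    head="${value%%\$\{*}"    # text before the first "${"
    rest="${value#*\$\{}"     # text after the first "${"
    ref="${rest%%\}*}"        # referenced property name
    tail="${rest#*\}}"        # text after the closing "}"
    value="${head}$(get_prop "$file" "$ref")${tail}"
    rounds=$((rounds + 1))
  done
  printf '%s\n' "$value"
}
```

With the file shown above, `resolve_prop /soft/hadoop/etc/hadoop/mapred-site.xml mapreduce.jobhistory.done-dir` prints /yinzhengjie/logs/hdfs/history/done.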

2>. Start the history server

    [yinzhengjie@s101 ~]$ hdfs dfs -mkdir /yinzhengjie/logs/hdfs/history    # create the directory for the job history files (add -p if the parent directories do not exist yet)
    [yinzhengjie@s101 ~]$
    [yinzhengjie@s101 ~]$ mr-jobhistory-daemon.sh start historyserver    # start the history server
    starting historyserver, logging to /soft/hadoop-2.7.3/logs/mapred-yinzhengjie-historyserver-s101.out
    [yinzhengjie@s101 ~]$
    [yinzhengjie@s101 ~]$ jps
    3043 ResourceManager
    4009 JobHistoryServer    # note: this is the history server process
    2507 NameNode
    4045 Jps
    2814 DFSZKFailoverController
    [yinzhengjie@s101 ~]$

3>. Run the MapReduce program on YARN again

    [yinzhengjie@s101 ~]$ hdfs dfs -rm -R /yinzhengjie/data/output    # delete the previous output directory
    18/08/21 08:43:34 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 0 minutes, Emptier interval = 0 minutes.
    Deleted /yinzhengjie/data/output
    [yinzhengjie@s101 ~]$
    [yinzhengjie@s101 ~]$ hadoop jar /soft/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount /yinzhengjie/data/input /yinzhengjie/data/output
    18/08/21 08:44:58 INFO client.RMProxy: Connecting to ResourceManager at s101/172.30.1.101:8032
    18/08/21 08:44:58 INFO input.FileInputFormat: Total input paths to process : 1
    18/08/21 08:44:58 INFO mapreduce.JobSubmitter: number of splits:1
    18/08/21 08:44:58 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1534851274873_0002
    18/08/21 08:44:59 INFO impl.YarnClientImpl: Submitted application application_1534851274873_0002
    18/08/21 08:44:59 INFO mapreduce.Job: The url to track the job: http://s101:8088/proxy/application_1534851274873_0002/
    18/08/21 08:44:59 INFO mapreduce.Job: Running job: job_1534851274873_0002
    18/08/21 08:45:15 INFO mapreduce.Job: Job job_1534851274873_0002 running in uber mode : false
    18/08/21 08:45:15 INFO mapreduce.Job: map 0% reduce 0%
    18/08/21 08:45:30 INFO mapreduce.Job: map 100% reduce 0%
    18/08/21 08:45:45 INFO mapreduce.Job: map 100% reduce 100%
    18/08/21 08:45:45 INFO mapreduce.Job: Job job_1534851274873_0002 completed successfully
    18/08/21 08:45:46 INFO mapreduce.Job: Counters: 49
        File System Counters
            FILE: Number of bytes read=4469
            FILE: Number of bytes written=249693
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=3931
            HDFS: Number of bytes written=3315
            HDFS: Number of read operations=6
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=2
        Job Counters
            Launched map tasks=1
            Launched reduce tasks=1
            Data-local map tasks=1
            Total time spent by all maps in occupied slots (ms)=12763
            Total time spent by all reduces in occupied slots (ms)=12963
            Total time spent by all map tasks (ms)=12763
            Total time spent by all reduce tasks (ms)=12963
            Total vcore-milliseconds taken by all map tasks=12763
            Total vcore-milliseconds taken by all reduce tasks=12963
            Total megabyte-milliseconds taken by all map tasks=13069312
            Total megabyte-milliseconds taken by all reduce tasks=13274112
        Map-Reduce Framework
            Map input records=104
            Map output records=497
            Map output bytes=5733
            Map output materialized bytes=4469
            Input split bytes=114
            Combine input records=497
            Combine output records=288
            Reduce input groups=288
            Reduce shuffle bytes=4469
            Reduce input records=288
            Reduce output records=288
            Spilled Records=576
            Shuffled Maps =1
            Failed Shuffles=0
            Merged Map outputs=1
            GC time elapsed (ms)=139
            CPU time spent (ms)=1610
            Physical memory (bytes) snapshot=439873536
            Virtual memory (bytes) snapshot=4216696832
            Total committed heap usage (bytes)=281018368
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=3817
        File Output Format Counters
            Bytes Written=3315
    [yinzhengjie@s101 ~]$

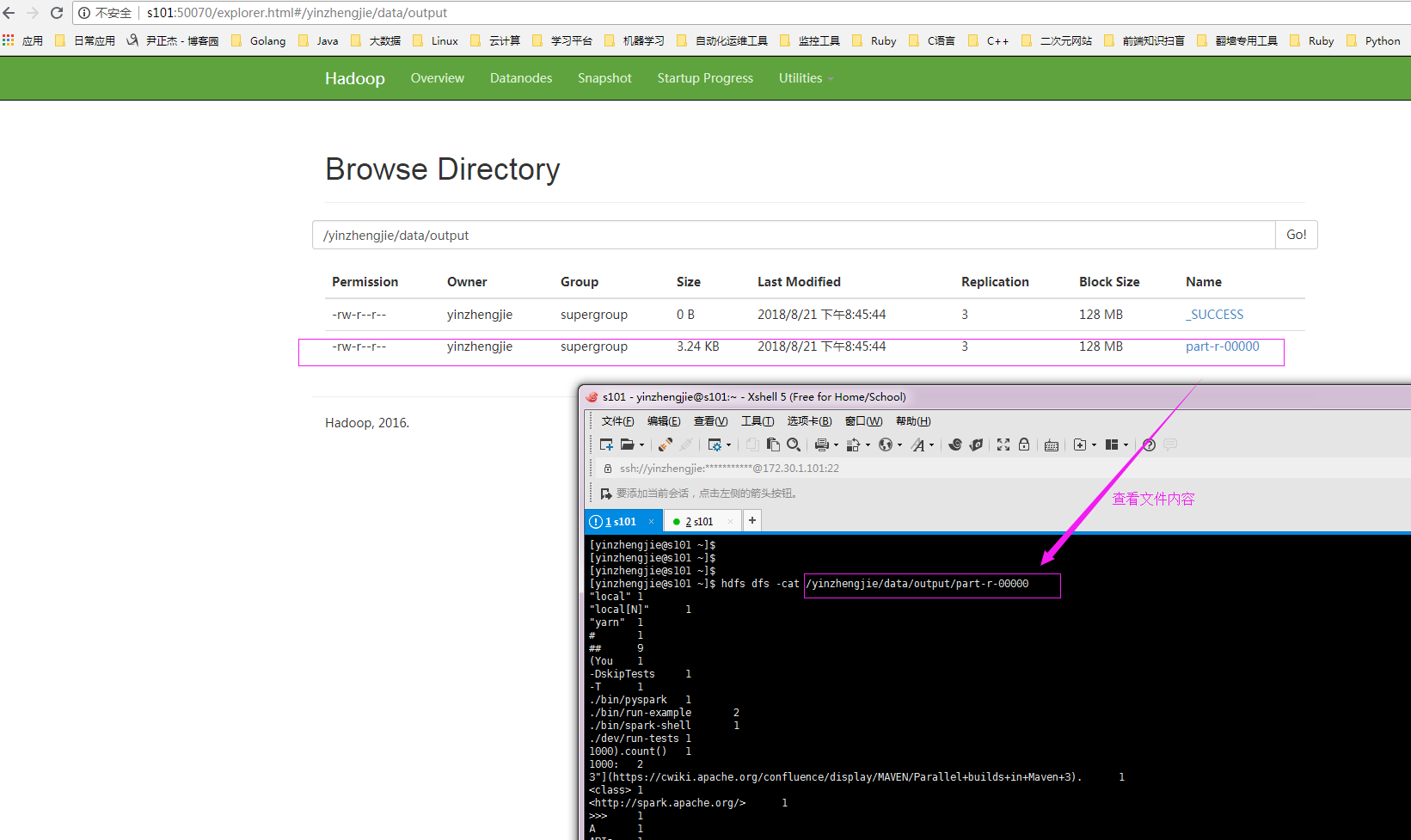

4>. Check in the HDFS web UI that the output data was written

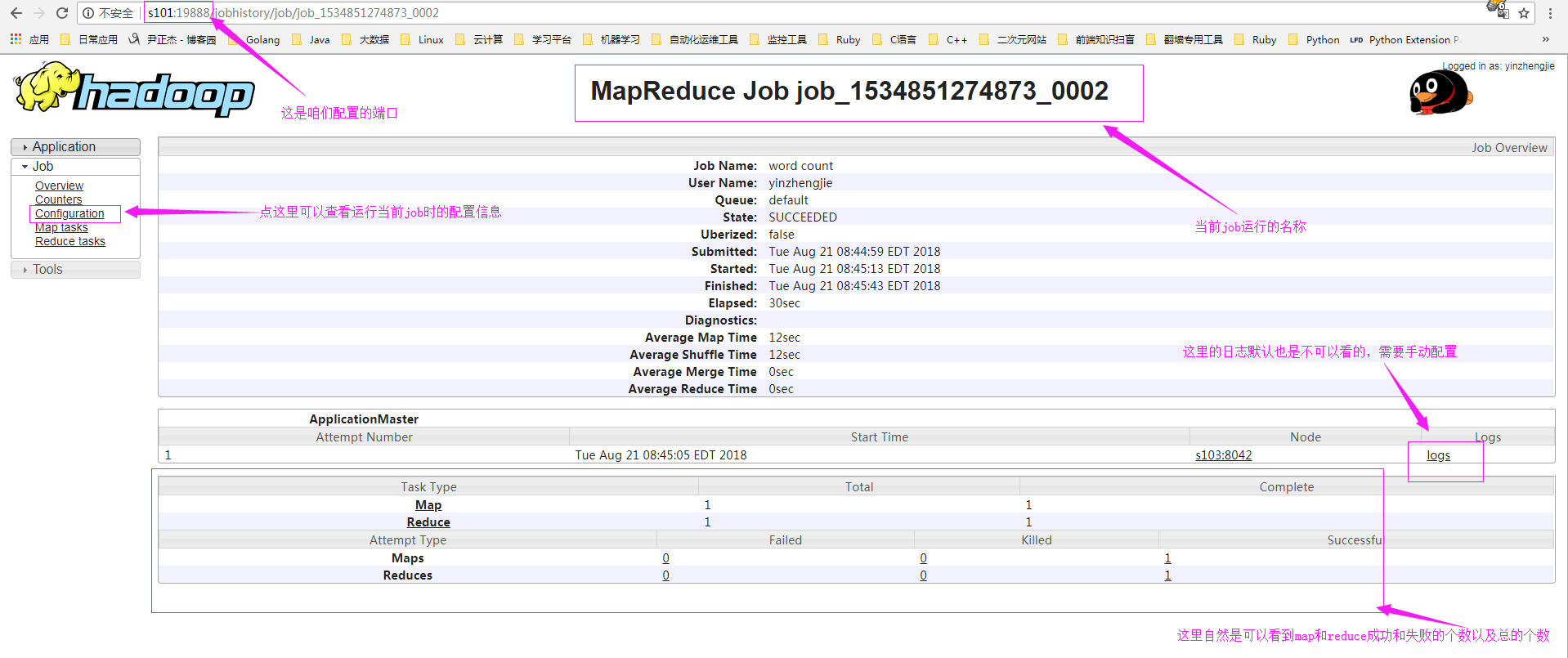

5>. View the finished job in the YARN web UI

6>. View the job history
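Once the history server is running, the page for a finished job is served from the address set in mapreduce.jobhistory.webapp.address (s101:19888 in this setup). The sketch below derives the job ID from the YARN application ID and prints the web UI URL and the REST URL for that job. job_history_urls is a hypothetical helper name, and the URL paths are the ones used by the Hadoop 2.x history server as far as I know, so check them against your version.

```shell
#!/usr/bin/env bash

# job_history_urls HOST:PORT APPLICATION_ID
# Print the history server web UI URL and REST URL for a MapReduce job.
# (Hypothetical helper, not part of Hadoop.)
job_history_urls() {
  local hostport="$1" app_id="$2" job_id
  case "$app_id" in
    application_*) ;;
    *) echo "not a YARN application ID: $app_id" >&2; return 1 ;;
  esac
  # The job ID is the application ID with the "application_" prefix
  # replaced by "job_", as the client log of the run above shows.
  job_id="job_${app_id#application_}"
  echo "http://${hostport}/jobhistory/job/${job_id}"
  echo "http://${hostport}/ws/v1/history/mapreduce/jobs/${job_id}"
}
```

For the second run above, call `job_history_urls s101:19888 application_1534851274873_0002`, then open the first URL in a browser or fetch the second one with curl.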

7>. Configure log aggregation

For details, see: https://www.cnblogs.com/yinzhengjie/p/9471921.html

This article comes from 博客园 (cnblogs), author: 尹正杰. When reposting, please cite the original link: https://www.cnblogs.com/yinzhengjie/p/9466159.html
