Hands-on: Configuring node_exporter Collector Blacklists/Whitelists and Collecting Custom Metrics

Author: 尹正杰 (Yin Zhengjie)

Copyright notice: this is an original work. Reposting is not permitted; violations will be pursued legally.

I. Configuring node_exporter collector blacklists and whitelists

1. Start node_exporter normally

1. Observe the startup log, which lists the collectors enabled by default

[root@prometheus-server31 ~]# /yinzhengjie/softwares/node_exporter-1.8.2.linux-amd64/node_exporter

...

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:111 level=info msg="Enabled collectors"

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=arp

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=bcache

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=bonding

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=btrfs

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=conntrack

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=cpu

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=cpufreq

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=diskstats

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=dmi

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=edac

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=entropy

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=fibrechannel

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=filefd

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=filesystem

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=hwmon

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=infiniband

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=ipvs

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=loadavg

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=mdadm

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=meminfo

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=netclass

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=netdev

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=netstat

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=nfs

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=nfsd

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=nvme

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=os

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=powersupplyclass

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=pressure

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=rapl

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=schedstat

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=selinux

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=sockstat

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=softnet

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=stat

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=tapestats

ts=2024-11-04T12:41:47.358Z caller=node_exporter.go:118 level=info collector=textfile

ts=2024-11-04T12:41:47.359Z caller=node_exporter.go:118 level=info collector=thermal_zone

ts=2024-11-04T12:41:47.359Z caller=node_exporter.go:118 level=info collector=time

ts=2024-11-04T12:41:47.359Z caller=node_exporter.go:118 level=info collector=timex

ts=2024-11-04T12:41:47.359Z caller=node_exporter.go:118 level=info collector=udp_queues

ts=2024-11-04T12:41:47.359Z caller=node_exporter.go:118 level=info collector=uname

ts=2024-11-04T12:41:47.359Z caller=node_exporter.go:118 level=info collector=vmstat

ts=2024-11-04T12:41:47.359Z caller=node_exporter.go:118 level=info collector=watchdog

ts=2024-11-04T12:41:47.359Z caller=node_exporter.go:118 level=info collector=xfs

ts=2024-11-04T12:41:47.359Z caller=node_exporter.go:118 level=info collector=zfs

ts=2024-11-04T12:42:53.184Z caller=tls_config.go:313 level=info msg="Listening on" address=[::]:9100

ts=2024-11-04T12:42:53.184Z caller=tls_config.go:316 level=info msg="TLS is disabled." http2=false address=[::]:9100

...

2. Check the CPU metrics

[root@prometheus-server31 ~]# curl -s 10.0.0.31:9100/metrics| grep node_cpu_secon

# HELP node_cpu_seconds_total Seconds the CPUs spent in each mode.

# TYPE node_cpu_seconds_total counter

node_cpu_seconds_total{cpu="0",mode="idle"} 856.22

node_cpu_seconds_total{cpu="0",mode="iowait"} 3.78

node_cpu_seconds_total{cpu="0",mode="irq"} 0

node_cpu_seconds_total{cpu="0",mode="nice"} 47.35

node_cpu_seconds_total{cpu="0",mode="softirq"} 2.47

node_cpu_seconds_total{cpu="0",mode="steal"} 0

node_cpu_seconds_total{cpu="0",mode="system"} 58.15

node_cpu_seconds_total{cpu="0",mode="user"} 39.17

node_cpu_seconds_total{cpu="1",mode="idle"} 903.87

node_cpu_seconds_total{cpu="1",mode="iowait"} 2.71

node_cpu_seconds_total{cpu="1",mode="irq"} 0

node_cpu_seconds_total{cpu="1",mode="nice"} 39.4

node_cpu_seconds_total{cpu="1",mode="softirq"} 1.57

node_cpu_seconds_total{cpu="1",mode="steal"} 0

node_cpu_seconds_total{cpu="1",mode="system"} 43.67

node_cpu_seconds_total{cpu="1",mode="user"} 34.72

[root@prometheus-server31 ~]#
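The values above are cumulative seconds since boot, so each CPU's share of time per mode is simply each value divided by that CPU's total. A minimal Python sketch, assuming the exposition text has already been fetched (for example with the curl command above) and using a simplified line parser that does not handle escaped quotes inside label values; `parse_samples` and `cpu_mode_share` are hypothetical helper names:

```python
import re
from collections import defaultdict

# Matches a sample line such as: node_cpu_seconds_total{cpu="0",mode="idle"} 856.22
# Simplified: assumes label values contain no escaped quotes or '}' characters.
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)'
)
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_samples(text, metric):
    """Yield (labels_dict, value) for every sample of `metric` in exposition text."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        m = SAMPLE_RE.match(line)
        if m and m.group("name") == metric:
            labels = dict(LABEL_RE.findall(m.group("labels") or ""))
            yield labels, float(m.group("value"))


def cpu_mode_share(text):
    """Return {cpu: {mode: fraction of that CPU's total seconds since boot}}."""
    per_cpu = defaultdict(dict)
    for labels, value in parse_samples(text, "node_cpu_seconds_total"):
        per_cpu[labels["cpu"]][labels["mode"]] = value
    shares = {}
    for cpu, modes in per_cpu.items():
        total = sum(modes.values())
        shares[cpu] = {mode: v / total for mode, v in modes.items()} if total else {}
    return shares
```

Because the counters are totals since boot, the result is a long-run average; for current utilization, Prometheus queries normally apply `rate()` over a time window instead.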

2. Configuring a collector blacklist

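1. Use "--no-collector.<module_name>" to disable a single collector (that is, to turn off one collector that is enabled by default). Note that cpu no longer appears in the list of enabled collectors below.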

[root@prometheus-server31 ~]# /yinzhengjie/softwares/node_exporter-1.8.2.linux-amd64/node_exporter --no-collector.cpu

...

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:111 level=info msg="Enabled collectors"

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=arp

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=bcache

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=bonding

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=btrfs

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=conntrack

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=cpufreq

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=diskstats

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=dmi

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=edac

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=entropy

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=fibrechannel

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=filefd

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=filesystem

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=hwmon

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=infiniband

ts=2024-11-04T12:44:04.105Z caller=node_exporter.go:118 level=info collector=ipvs

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=loadavg

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=mdadm

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=meminfo

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=netclass

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=netdev

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=netstat

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=nfs

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=nfsd

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=nvme

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=os

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=powersupplyclass

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=pressure

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=rapl

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=schedstat

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=selinux

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=sockstat

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=softnet

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=stat

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=tapestats

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=textfile

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=thermal_zone

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=time

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=timex

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=udp_queues

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=uname

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=vmstat

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=watchdog

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=xfs

ts=2024-11-04T12:44:04.106Z caller=node_exporter.go:118 level=info collector=zfs

ts=2024-11-04T12:44:04.107Z caller=tls_config.go:313 level=info msg="Listening on" address=[::]:9100

ts=2024-11-04T12:44:04.107Z caller=tls_config.go:316 level=info msg="TLS is disabled." http2=false address=[::]:9100

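2. Check the CPU metrics again: node_cpu_seconds_total is no longer exposed, because the cpu collector is disabled. (Other CPU-related collectors, such as cpufreq, are still enabled.)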

[root@prometheus-server31 ~]# curl -s 10.0.0.31:9100/metrics| grep node_cpu_seconds_total

[root@prometheus-server31 ~]#
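Comparing the two startup logs is a quick way to confirm which collector a flag removed. A minimal Python sketch, assuming the logfmt lines shown above (`... level=info collector=<name>`); other node_exporter releases may log in a different format. `enabled_collectors` and `removed_collectors` are hypothetical helper names:

```python
import re

# Matches the per-collector lines node_exporter 1.8.x logs at startup, e.g.
# ts=... caller=node_exporter.go:118 level=info collector=cpu
# It does not match the msg="Enabled collectors" header line.
COLLECTOR_RE = re.compile(r'\bcollector=(\S+)')


def enabled_collectors(log_text):
    """Return the set of collector names listed in a node_exporter startup log."""
    return set(COLLECTOR_RE.findall(log_text))


def removed_collectors(before_log, after_log):
    """Collectors present in the first log but missing from the second."""
    return enabled_collectors(before_log) - enabled_collectors(after_log)
```

Saving the default startup log and the "--no-collector.cpu" startup log to two strings and calling `removed_collectors(before, after)` should yield only the collectors you disabled.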

3. Configuring a collector whitelist

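1. Use "--collector.disable-defaults" to disable all default collectors, then enable only the ones you need with "--collector.<module_name>". Here only the cpu collector is enabled.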

[root@prometheus-server31 ~]# /yinzhengjie/softwares/node_exporter-1.8.2.linux-amd64/node_exporter --collector.disable-defaults --collector.cpu

...

ts=2024-11-04T14:12:57.565Z caller=node_exporter.go:111 level=info msg="Enabled collectors"

ts=2024-11-04T14:12:57.565Z caller=node_exporter.go:118 level=info collector=cpu

ts=2024-11-04T14:12:57.565Z caller=tls_config.go:313 level=info msg="Listening on" address=[::]:9100

ts=2024-11-04T14:12:57.565Z caller=tls_config.go:316 level=info msg="TLS is disabled." http2=false address=[::]:9100

...

2. Enable only the CPU and memory collectors

[root@prometheus-server31 ~]# /yinzhengjie/softwares/node_exporter-1.8.2.linux-amd64/node_exporter --collector.disable-defaults --collector.cpu --collector.meminfo

...

ts=2024-11-04T14:21:25.289Z caller=node_exporter.go:111 level=info msg="Enabled collectors"

ts=2024-11-04T14:21:25.289Z caller=node_exporter.go:118 level=info collector=cpu

ts=2024-11-04T14:21:25.289Z caller=node_exporter.go:118 level=info collector=meminfo

ts=2024-11-04T14:21:25.290Z caller=tls_config.go:313 level=info msg="Listening on" address=[::]:9100

ts=2024-11-04T14:21:25.290Z caller=tls_config.go:316 level=info msg="TLS is disabled." http2=false address=[::]:9100

...

3. Verify

[root@prometheus-server31 ~]# curl -s 10.0.0.31:9100/metrics| grep ^node

node_cpu_guest_seconds_total{cpu="0",mode="nice"} 0

...

node_memory_Active_anon_bytes 1.585152e+06

...

[root@prometheus-server31 ~]#
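When node_exporter is launched from a script, the blacklist and whitelist flags can be assembled programmatically. A minimal sketch; `node_exporter_args` is a hypothetical helper that uses only the flags demonstrated above (`--collector.disable-defaults`, `--collector.<name>`, `--no-collector.<name>`):

```python
def node_exporter_args(binary, enable=(), disable=(), only=False):
    """Build an argv list for node_exporter.

    only=True  -> whitelist mode: --collector.disable-defaults, then
                  --collector.<name> for each collector in `enable`.
    only=False -> keep the defaults, add --collector.<name> for extra
                  collectors and --no-collector.<name> for blacklisted ones.
    """
    args = [binary]
    if only:
        args.append("--collector.disable-defaults")
    args += [f"--collector.{name}" for name in enable]
    args += [f"--no-collector.{name}" for name in disable]
    return args
```

For example, `node_exporter_args("/yinzhengjie/softwares/node_exporter-1.8.2.linux-amd64/node_exporter", enable=["cpu", "meminfo"], only=True)` reproduces the whitelist command above; the list can be passed directly to `subprocess.run()`, which avoids shell-quoting issues.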

4. Why some collectors are disabled by default

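Some collectors are disabled by default because they are expensive to run. According to the node_exporter README, the reasons vary by collector and may include high cardinality, a runtime long enough to exceed the Prometheus scrape_interval or scrape_timeout, and significant resource demands on the host.

For details, see the official Prometheus documentation and the node_exporter README on GitHub.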

5. One-click node_exporter deployment script

Recommended reading:

https://www.cnblogs.com/yinzhengjie/p/18432546

II. node_exporter SDK metrics and configuring a local collection directory

1. Prometheus SDK metrics

1.1 "promhttp_" metrics describe the HTTP requests served on /metrics

[root@prometheus-server31 ~]# curl -s 10.0.0.31:9100/metrics| grep promhttp_

# HELP promhttp_metric_handler_errors_total Total number of internal errors encountered by the promhttp metric handler.

# TYPE promhttp_metric_handler_errors_total counter

promhttp_metric_handler_errors_total{cause="encoding"} 0

promhttp_metric_handler_errors_total{cause="gathering"} 0

# HELP promhttp_metric_handler_requests_in_flight Current number of scrapes being served.

# TYPE promhttp_metric_handler_requests_in_flight gauge

promhttp_metric_handler_requests_in_flight 1

# HELP promhttp_metric_handler_requests_total Total number of scrapes by HTTP status code.

# TYPE promhttp_metric_handler_requests_total counter

promhttp_metric_handler_requests_total{code="200"} 3

promhttp_metric_handler_requests_total{code="500"} 0

promhttp_metric_handler_requests_total{code="503"} 0

[root@prometheus-server31 ~]#

1.2 "go_" metrics describe the Go runtime

[root@prometheus-server31 ~]# curl -s 10.0.0.31:9100/metrics| grep go_

# HELP go_gc_duration_seconds A summary of the pause duration of garbage collection cycles.

# TYPE go_gc_duration_seconds summary

go_gc_duration_seconds{quantile="0"} 2.7899e-05

go_gc_duration_seconds{quantile="0.25"} 3.6908e-05

go_gc_duration_seconds{quantile="0.5"} 4.4417e-05

go_gc_duration_seconds{quantile="0.75"} 6.3601e-05

go_gc_duration_seconds{quantile="1"} 9.6055e-05

go_gc_duration_seconds_sum 0.000607092

go_gc_duration_seconds_count 12

# HELP go_goroutines Number of goroutines that currently exist.

# TYPE go_goroutines gauge

go_goroutines 9

# HELP go_info Information about the Go environment.

# TYPE go_info gauge

go_info{version="go1.22.5"} 1

# HELP go_memstats_alloc_bytes Number of bytes allocated and still in use.

# TYPE go_memstats_alloc_bytes gauge

go_memstats_alloc_bytes 2.42844e+06

# HELP go_memstats_alloc_bytes_total Total number of bytes allocated, even if freed.

# TYPE go_memstats_alloc_bytes_total counter

go_memstats_alloc_bytes_total 2.2699856e+07

# HELP go_memstats_buck_hash_sys_bytes Number of bytes used by the profiling bucket hash table.

# TYPE go_memstats_buck_hash_sys_bytes gauge

go_memstats_buck_hash_sys_bytes 1.456692e+06

# HELP go_memstats_frees_total Total number of frees.

# TYPE go_memstats_frees_total counter

go_memstats_frees_total 237643

# HELP go_memstats_gc_sys_bytes Number of bytes used for garbage collection system metadata.

# TYPE go_memstats_gc_sys_bytes gauge

go_memstats_gc_sys_bytes 3.13476e+06

# HELP go_memstats_heap_alloc_bytes Number of heap bytes allocated and still in use.

# TYPE go_memstats_heap_alloc_bytes gauge

go_memstats_heap_alloc_bytes 2.42844e+06

# HELP go_memstats_heap_idle_bytes Number of heap bytes waiting to be used.

# TYPE go_memstats_heap_idle_bytes gauge

go_memstats_heap_idle_bytes 4.128768e+06

# HELP go_memstats_heap_inuse_bytes Number of heap bytes that are in use.

# TYPE go_memstats_heap_inuse_bytes gauge

go_memstats_heap_inuse_bytes 3.817472e+06

# HELP go_memstats_heap_objects Number of allocated objects.

# TYPE go_memstats_heap_objects gauge

go_memstats_heap_objects 16168

# HELP go_memstats_heap_released_bytes Number of heap bytes released to OS.

# TYPE go_memstats_heap_released_bytes gauge

go_memstats_heap_released_bytes 3.383296e+06

# HELP go_memstats_heap_sys_bytes Number of heap bytes obtained from system.

# TYPE go_memstats_heap_sys_bytes gauge

go_memstats_heap_sys_bytes 7.94624e+06

# HELP go_memstats_last_gc_time_seconds Number of seconds since 1970 of last garbage collection.

# TYPE go_memstats_last_gc_time_seconds gauge

go_memstats_last_gc_time_seconds 1.7307314395576775e+09

# HELP go_memstats_lookups_total Total number of pointer lookups.

# TYPE go_memstats_lookups_total counter

go_memstats_lookups_total 0

# HELP go_memstats_mallocs_total Total number of mallocs.

# TYPE go_memstats_mallocs_total counter

go_memstats_mallocs_total 253811

# HELP go_memstats_mcache_inuse_bytes Number of bytes in use by mcache structures.

# TYPE go_memstats_mcache_inuse_bytes gauge

go_memstats_mcache_inuse_bytes 1200

# HELP go_memstats_mcache_sys_bytes Number of bytes used for mcache structures obtained from system.

# TYPE go_memstats_mcache_sys_bytes gauge

go_memstats_mcache_sys_bytes 15600

# HELP go_memstats_mspan_inuse_bytes Number of bytes in use by mspan structures.

# TYPE go_memstats_mspan_inuse_bytes gauge

go_memstats_mspan_inuse_bytes 65920

# HELP go_memstats_mspan_sys_bytes Number of bytes used for mspan structures obtained from system.

# TYPE go_memstats_mspan_sys_bytes gauge

go_memstats_mspan_sys_bytes 81600

# HELP go_memstats_next_gc_bytes Number of heap bytes when next garbage collection will take place.

# TYPE go_memstats_next_gc_bytes gauge

go_memstats_next_gc_bytes 4.454152e+06

# HELP go_memstats_other_sys_bytes Number of bytes used for other system allocations.

# TYPE go_memstats_other_sys_bytes gauge

go_memstats_other_sys_bytes 657916

# HELP go_memstats_stack_inuse_bytes Number of bytes in use by the stack allocator.

# TYPE go_memstats_stack_inuse_bytes gauge

go_memstats_stack_inuse_bytes 425984

# HELP go_memstats_stack_sys_bytes Number of bytes obtained from system for stack allocator.

# TYPE go_memstats_stack_sys_bytes gauge

go_memstats_stack_sys_bytes 425984

# HELP go_memstats_sys_bytes Number of bytes obtained from system.

# TYPE go_memstats_sys_bytes gauge

go_memstats_sys_bytes 1.3718792e+07

# HELP go_threads Number of OS threads created.

# TYPE go_threads gauge

go_threads 4

[root@prometheus-server31 ~]#

1.3 "process_" metrics describe the node_exporter process itself

[root@prometheus-server31 ~]# curl -s 10.0.0.31:9100/metrics| grep process_

# HELP process_cpu_seconds_total Total user and system CPU time spent in seconds.

# TYPE process_cpu_seconds_total counter

process_cpu_seconds_total 0.28

# HELP process_max_fds Maximum number of open file descriptors.

# TYPE process_max_fds gauge

process_max_fds 1.048576e+06

# HELP process_open_fds Number of open file descriptors.

# TYPE process_open_fds gauge

process_open_fds 10

# HELP process_resident_memory_bytes Resident memory size in bytes.

# TYPE process_resident_memory_bytes gauge

process_resident_memory_bytes 1.9300352e+07

# HELP process_start_time_seconds Start time of the process since unix epoch in seconds.

# TYPE process_start_time_seconds gauge

process_start_time_seconds 1.73073125521e+09

# HELP process_virtual_memory_bytes Virtual memory size in bytes.

# TYPE process_virtual_memory_bytes gauge

process_virtual_memory_bytes 1.270972416e+09

# HELP process_virtual_memory_max_bytes Maximum amount of virtual memory available in bytes.

# TYPE process_virtual_memory_max_bytes gauge

process_virtual_memory_max_bytes 1.8446744073709552e+19

[root@prometheus-server31 ~]#

2. Disabling the Go SDK metrics

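2.1 Start node_exporter with "--web.disable-exporter-metrics", which drops the exporter's own promhttp_*, go_* and process_* metrics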

[root@prometheus-server31 ~]# /yinzhengjie/softwares/node_exporter-1.8.2.linux-amd64/node_exporter --web.disable-exporter-metrics

2.2 Verify

[root@prometheus-server31 ~]# curl -s 10.0.0.31:9100/metrics| grep promhttp_

[root@prometheus-server31 ~]#

[root@prometheus-server31 ~]# curl -s 10.0.0.31:9100/metrics| grep go_

[root@prometheus-server31 ~]#

[root@prometheus-server31 ~]# curl -s 10.0.0.31:9100/metrics| grep process_

[root@prometheus-server31 ~]#

[root@prometheus-server31 ~]# curl -s 10.0.0.31:9100/metrics| grep prom_

[root@prometheus-server31 ~]#
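The same check can be done programmatically, for example to confirm that "--web.disable-exporter-metrics" took effect. A minimal sketch, assuming the scraped /metrics text is already in a string; `metric_names` and `exporter_self_metrics` are hypothetical helper names, and the prefix list is taken from the output shown in section 1:

```python
# Prefixes of the exporter's own instrumentation, as seen in the output above.
EXPORTER_PREFIXES = ("promhttp_", "go_", "process_")


def metric_names(text):
    """Return the set of metric names of the sample lines in exposition text."""
    names = set()
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            # The name ends at the first '{' (labels) or whitespace (value).
            names.add(line.split("{", 1)[0].split()[0])
    return names


def exporter_self_metrics(text):
    """Group the exporter's own metrics by prefix.

    All groups being empty means --web.disable-exporter-metrics took effect.
    """
    names = metric_names(text)
    return {p: sorted(n for n in names if n.startswith(p)) for p in EXPORTER_PREFIXES}
```

Unlike `grep go_`, which matches the pattern anywhere in a line, this only looks at the start of each metric name.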

3. Configuring a local collection directory (textfile collector)

3.1 Prepare a test file

1. Create the directory

[root@prometheus-server31 ~]# mkdir yinzhengjie-node-exporter

2. Create a .prom file

[root@prometheus-server31 ~]# cat > yinzhengjie-node-exporter/student.prom <<EOF
# HELP yinzhengjie_student student online count
# TYPE yinzhengjie_student gauge
yinzhengjie_student{school="oldboyedu",class="linux"} 4096
EOF

Tip:

The file content must follow the official text exposition format; see: https://prometheus.io/docs/instrumenting/exposition_formats/#text-format-example
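Files for the textfile collector are usually generated by scripts or cron jobs rather than by hand. The node_exporter README recommends writing them atomically (write a temporary file, then rename it into place) so the collector never reads a half-written file. A minimal Python sketch under those assumptions; `render_gauge` and `write_prom_atomically` are hypothetical helpers that handle only a single gauge family:

```python
import os
import tempfile


def escape_label_value(value):
    # Text format: backslash, double quote and line feed must be escaped in label values.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(name, help_text, samples):
    """Render one gauge family; `samples` is a list of (labels_dict, value)."""
    lines = [
        # In HELP text only backslash and line feed are escaped.
        f"# HELP {name} " + help_text.replace("\\", "\\\\").replace("\n", "\\n"),
        f"# TYPE {name} gauge",
    ]
    for labels, value in samples:
        label_str = ",".join(f'{k}="{escape_label_value(str(v))}"' for k, v in labels.items())
        lines.append(f"{name}{{{label_str}}} {value}" if label_str else f"{name} {value}")
    return "\n".join(lines) + "\n"


def write_prom_atomically(directory, filename, content):
    """Write to a temp file in the same directory, then rename it over the target."""
    # The ".tmp" suffix keeps the textfile collector (which reads *.prom) away from it.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            f.write(content)
        os.chmod(tmp_path, 0o644)  # mkstemp creates 0600; let a non-root exporter read it
        os.replace(tmp_path, os.path.join(directory, filename))
    except BaseException:
        os.unlink(tmp_path)
        raise
```

For the example in this section: `write_prom_atomically("yinzhengjie-node-exporter", "student.prom", render_gauge("yinzhengjie_student", "student online count", [({"school": "oldboyedu", "class": "linux"}, 4096)]))`. The rename is atomic only when the temporary file and the target are on the same filesystem, which is why the temporary file is created in the target directory.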

3.2 Start the service

[root@prometheus-server31 ~]# /yinzhengjie/softwares/node_exporter-1.8.2.linux-amd64/node_exporter --collector.textfile.directory=./yinzhengjie-node-exporter

3.3 Verify

[root@prometheus-server31 ~]# curl -s 10.0.0.31:9100/metrics| grep yinzhengjie

node_textfile_mtime_seconds{file="yinzhengjie-node-exporter/student.prom"} 1.730732383e+09

# HELP yinzhengjie_student student online count

# TYPE yinzhengjie_student gauge

yinzhengjie_student{class="linux",school="oldboyedu"} 4096

[root@prometheus-server31 ~]#

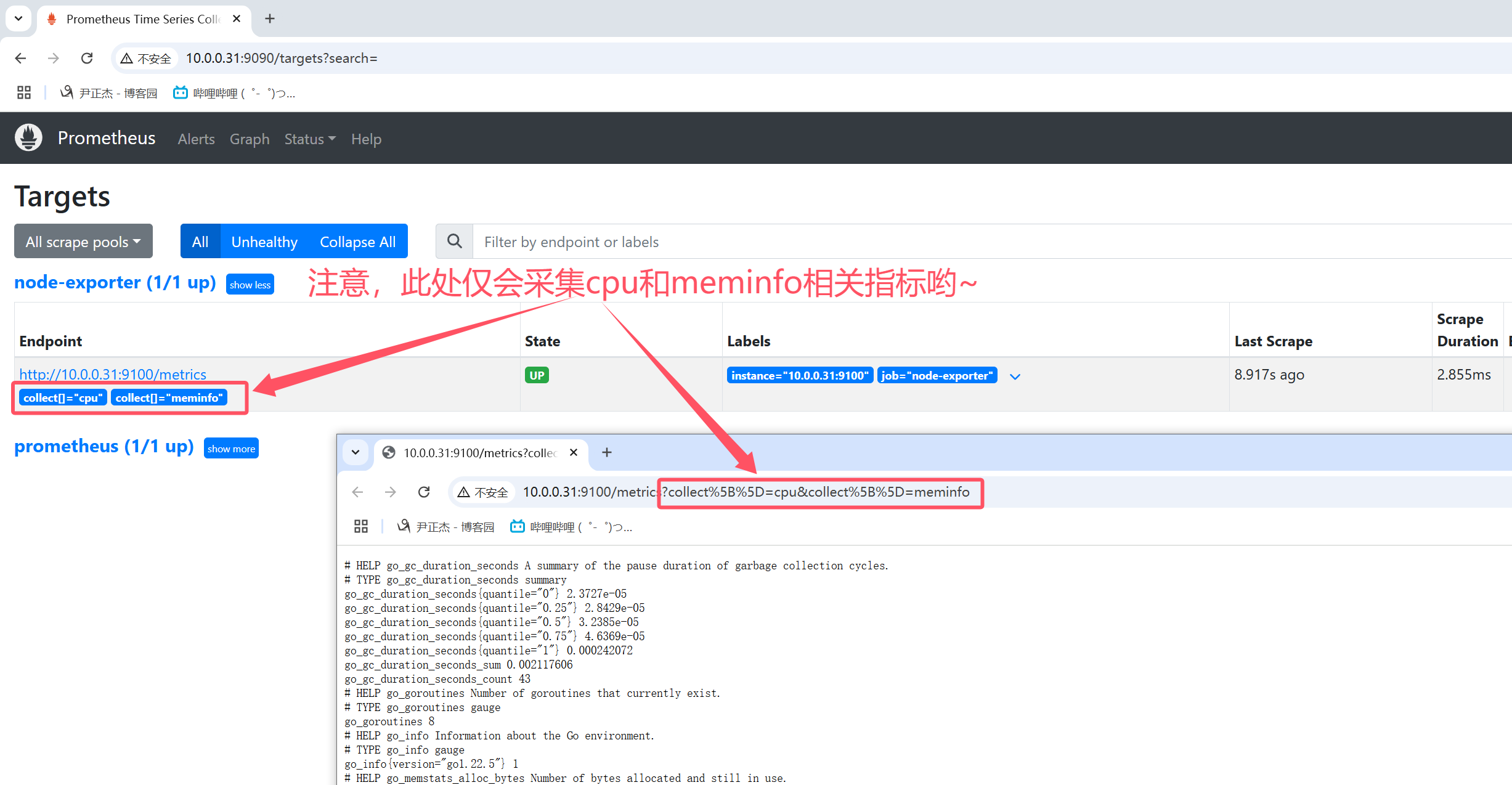

III. Collecting only specific metrics from node_exporter

1. Pass collect[] parameters when accessing the node_exporter page

1.1 View only the CPU metrics

http://10.0.0.31:9100/metrics?collect[]=cpu

1.2 View only the memory metrics

http://10.0.0.31:9100/metrics?collect[]=meminfo

1.3 View both the CPU and memory metrics

http://10.0.0.31:9100/metrics?collect[]=cpu&collect[]=meminfo

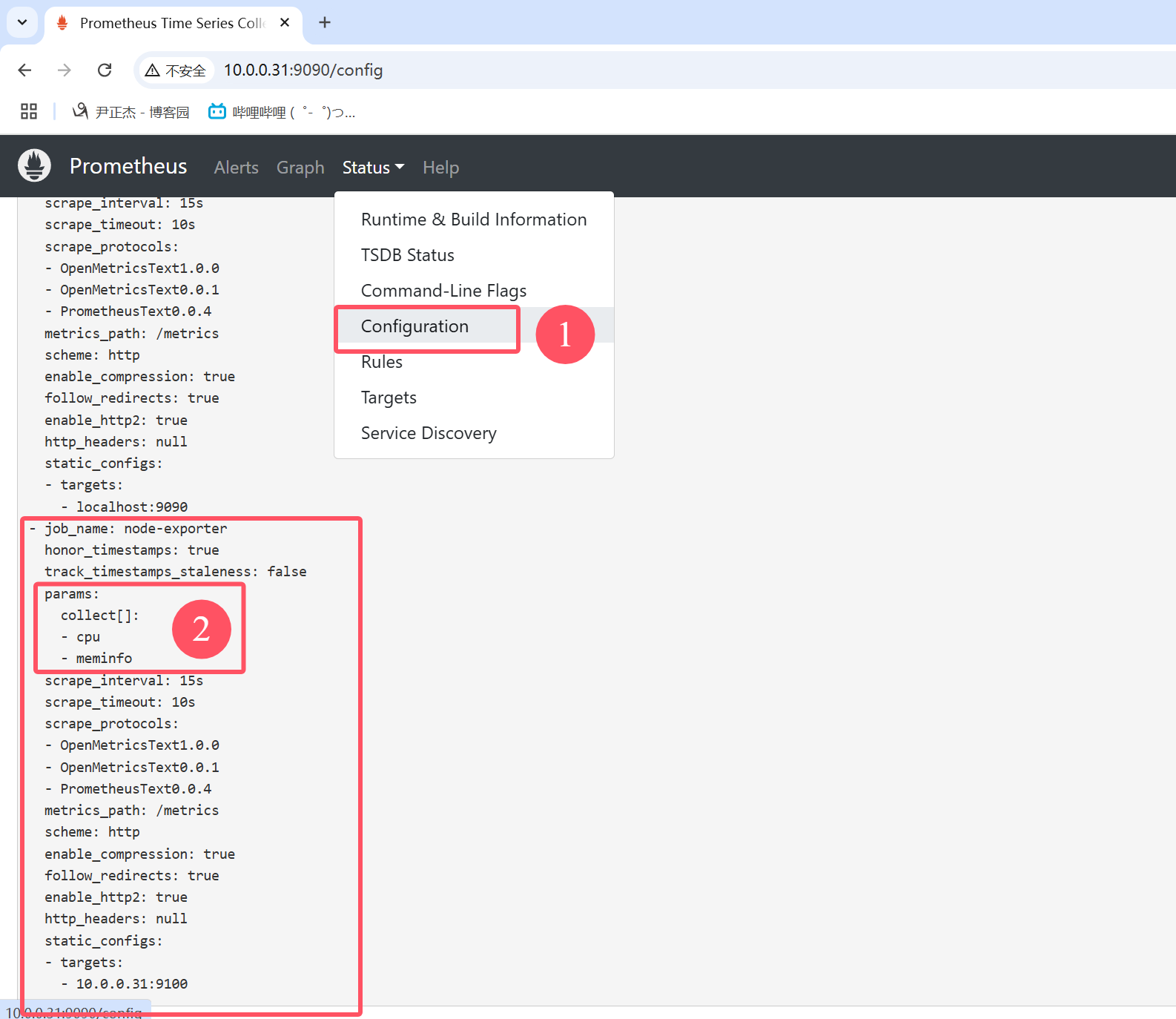
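When requesting these URLs from a shell, note that curl treats `[]` as a URL glob pattern, so either run `curl -g` or percent-encode the brackets. A minimal Python sketch that builds the encoded URL; `metrics_url` is a hypothetical helper, and the host and port are the ones used in this article:

```python
from urllib.parse import urlencode


def metrics_url(host, collectors, port=9100):
    """Build a /metrics URL that asks node_exporter for specific collectors only."""
    # One collect[] pair per collector; urlencode percent-encodes '[' and ']'.
    query = urlencode([("collect[]", c) for c in collectors])
    return f"http://{host}:{port}/metrics?{query}"
```

`metrics_url("10.0.0.31", ["cpu", "meminfo"])` returns `http://10.0.0.31:9100/metrics?collect%5B%5D=cpu&collect%5B%5D=meminfo`, which the server decodes back to two `collect[]` parameters.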

2. Having the Prometheus server collect only specific metrics

2.1 Modify the Prometheus configuration file

scrape_configs:
  - job_name: "node-exporter"
    # Collect only the "cpu" and "meminfo" collectors.
    params:
      collect[]:
        - cpu
        - meminfo
    static_configs:
      - targets: ["10.0.0.31:9100"]

2.2 Check the Prometheus configuration

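As shown in the figure above, once the configuration has been applied (after reloading or restarting Prometheus), the monitoring information on the Prometheus side includes the collection-related entries.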

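As shown in the figure below, you can view the complete configuration. Settings that were not written in the configuration file are filled in automatically by the server with their default values.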

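This article is from cnblogs, author: 尹正杰. When reposting, please credit the original link: https://www.cnblogs.com/yinzhengjie/p/18524275. Personal WeChat: "JasonYin2020" (when adding, please note where you came from and your purpose; paid consultation).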

