CephFS High-Availability Distributed File System Deployment Guide

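I. CephFS Overview

1. What is CephFS

CephFS is a ready-to-use file system. Loosely speaking, you can think of it as an NFS that is highly available, high-performance, and highly scalable.

CephFS splits what it stores into two parts, metadata and the actual file data, and each part is kept in its own storage pool.

Recommended reading: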

https://docs.ceph.com/en/nautilus/cephfs/

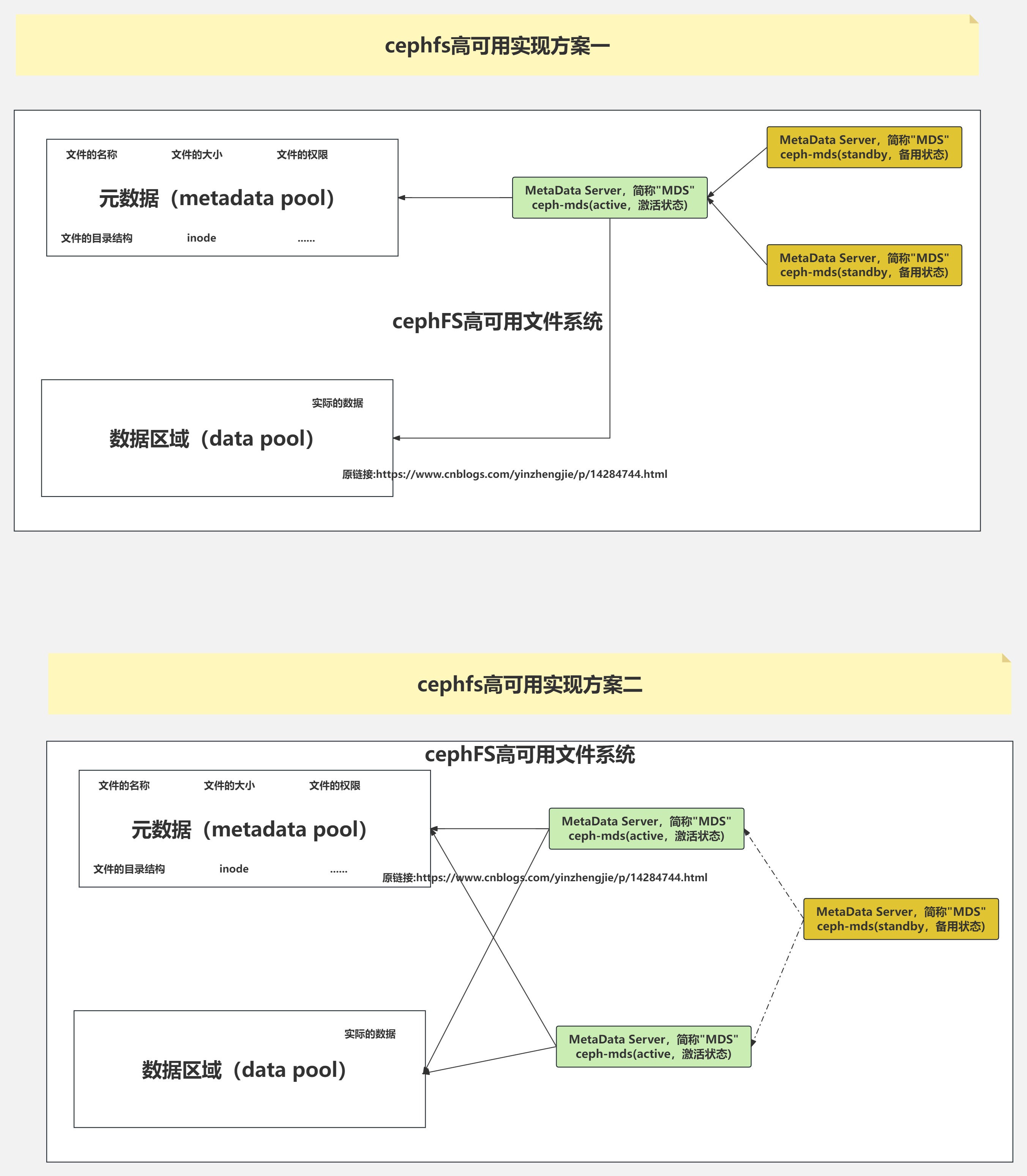

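2. CephFS architecture diagram

As shown in the figure above, this tutorial builds two highly available CephFS architectures: one active MDS with two standbys, and two active MDS daemons with one standby.

II. Deploying a Highly Available CephFS Cluster

1. Install ceph-mds from the ceph-deploy node

1. Confirm that the current environment has no MDS server yet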

[root@harbor250 ceph-cluster]# ceph -s

cluster:

id: 5821e29c-326d-434d-a5b6-c492527eeaad

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph141,ceph142,ceph143 (age 60m)

mgr: ceph142(active, since 61m), standbys: ceph141, ceph143

osd: 7 osds: 7 up (since 59m), 7 in (since 19h)

task status:

data:

pools: 3 pools, 96 pgs

objects: 60 objects, 100 MiB

usage: 7.8 GiB used, 1.9 TiB / 2.0 TiB avail

pgs: 96 active+clean

[root@harbor250 ceph-cluster]#

[root@harbor250 ceph-cluster]# pwd

/yinzhengjie/softwares/ceph-cluster

[root@harbor250 ceph-cluster]#

2. Install the MDS servers

[root@harbor250 ceph-cluster]# ceph-deploy --overwrite-conf mds create ceph141 ceph142 ceph143

...

[ceph141][INFO ] Running command: systemctl start ceph-mds@ceph141

[ceph141][INFO ] Running command: systemctl enable ceph.target

...

[ceph142][INFO ] Running command: systemctl start ceph-mds@ceph142

[ceph142][INFO ] Running command: systemctl enable ceph.target

...

[ceph143][INFO ] Running command: systemctl start ceph-mds@ceph143

[ceph143][INFO ] Running command: systemctl enable ceph.target

[root@harbor250 ceph-cluster]#

2. Check the cluster status

[root@ceph141 ~]# ceph -s # Watch the "mds" field.

cluster:

id: 5821e29c-326d-434d-a5b6-c492527eeaad

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph141,ceph142,ceph143 (age 64m)

mgr: ceph142(active, since 64m), standbys: ceph141, ceph143

mds: 3 up:standby # Note: all 3 MDS daemons are in standby mode because no file system exists yet, so nothing is being served.

osd: 7 osds: 7 up (since 63m), 7 in (since 19h)

data:

pools: 3 pools, 96 pgs

objects: 60 objects, 100 MiB

usage: 7.8 GiB used, 1.9 TiB / 2.0 TiB avail

pgs: 96 active+clean

[root@ceph141 ~]#

[root@ceph141 ~]# ceph mds stat # Show only the MDS status

3 up:standby

[root@ceph141 ~]#
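The one-line `ceph mds stat` output is convenient to check from a script. The following is a minimal sketch, assuming the Nautilus text format shown in this guide; the function name `parse_mds_stat` and the returned dictionary layout are illustrative choices, not part of Ceph.

```python
import re


def parse_mds_stat(line):
    """Parse one line of `ceph mds stat` text output (format as in this guide)."""
    state = {"fs": None, "active": {}, "standby": 0}

    # Optional prefix: "<fs-name>:<rank-count> {<rank>=<daemon>=<state>,...}"
    fs_match = re.match(r"\s*(\S+):(\d+)\s+\{([^}]*)\}", line)
    if fs_match:
        state["fs"] = fs_match.group(1)
        for entry in fs_match.group(3).split(","):
            if not entry.strip():
                continue
            rank, daemon, mds_state = entry.strip().split("=")
            if mds_state == "up:active":
                state["active"][int(rank)] = daemon

    # Optional suffix: "<n> up:standby"
    standby_match = re.search(r"(\d+)\s+up:standby\b", line)
    if standby_match:
        state["standby"] = int(standby_match.group(1))
    return state


print(parse_mds_stat("3 up:standby"))
```

With the output above, the result has no file system name, no active ranks, and a standby count of 3, which is the "installed but not serving" state this step is meant to confirm.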

3. Create the metadata pool and the data pool

[root@ceph141 ~]# ceph osd pool create yinzhengjie-cephfs-metadata 32 32

pool 'yinzhengjie-cephfs-metadata' created

[root@ceph141 ~]#

[root@ceph141 ~]# ceph osd pool create yinzhengjie-cephfs-data 128 128

pool 'yinzhengjie-cephfs-data' created

[root@ceph141 ~]#

[root@ceph141 ~]# ceph osd pool ls detail | grep cephfs

pool 5 'yinzhengjie-cephfs-metadata' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode warn last_change 634 flags hashpspool stripe_width 0

pool 6 'yinzhengjie-cephfs-data' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 128 pgp_num 128 autoscale_mode warn last_change 637 flags hashpspool stripe_width 0

[root@ceph141 ~]#
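To double-check the pool settings without reading the long lines by eye, the `ceph osd pool ls detail` output can be parsed. This is a rough sketch that assumes replicated pools printed in the format shown above; `parse_pool_line` and `total_pgs` are hypothetical helper names.

```python
import re

# Matches the fields of interest in one "pool ..." line for a replicated pool.
POOL_RE = re.compile(
    r"pool (?P<id>\d+) '(?P<name>[^']+)' replicated "
    r"size (?P<size>\d+) min_size (?P<min_size>\d+) "
    r".*?pg_num (?P<pg_num>\d+) pgp_num (?P<pgp_num>\d+)"
)


def parse_pool_line(line):
    """Return the pool fields as a dict, or None if the line does not match."""
    match = POOL_RE.search(line)
    if match is None:
        return None
    return {
        key: (value if key == "name" else int(value))
        for key, value in match.groupdict().items()
    }


def total_pgs(lines):
    """Sum pg_num over every line that describes a pool."""
    pools = (parse_pool_line(line) for line in lines)
    return sum(pool["pg_num"] for pool in pools if pool is not None)
```

For the two pools created here, `total_pgs` gives 32 + 128 = 160. Added to the 96 PGs that existed before, that accounts for the 256 PGs reported by `ceph -s` later in this guide.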

4. Initialize the CephFS file system

1 List the file systems that currently exist in the Ceph cluster

[root@ceph141 ~]# ceph fs ls

No filesystems enabled

[root@ceph141 ~]#

2 Create the CephFS instance

[root@ceph141 ~]# ceph fs new yinzhengjie-linux-cephfs yinzhengjie-cephfs-metadata yinzhengjie-cephfs-data

new fs with metadata pool 5 and data pool 6

[root@ceph141 ~]#

3 List the file systems in the Ceph cluster again

[root@ceph141 ~]# ceph fs ls

name: yinzhengjie-linux-cephfs, metadata pool: yinzhengjie-cephfs-metadata, data pools: [yinzhengjie-cephfs-data ]

[root@ceph141 ~]#
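A script can confirm that the new file system is bound to the intended pools by parsing `ceph fs ls`. The sketch below assumes the plain-text format shown above; `parse_fs_ls` is a hypothetical name, and an empty list stands for the "No filesystems enabled" case.

```python
import re

FS_LS_RE = re.compile(
    r"name: (?P<name>\S+), metadata pool: (?P<metadata>\S+), "
    r"data pools: \[(?P<data>[^\]]*)\]"
)


def parse_fs_ls(output):
    """Return one dict per file system found in `ceph fs ls` text output."""
    filesystems = []
    for line in output.splitlines():
        match = FS_LS_RE.search(line)
        if match:
            filesystems.append({
                "name": match.group("name"),
                "metadata_pool": match.group("metadata"),
                # Data pools are space-separated inside the brackets.
                "data_pools": match.group("data").split(),
            })
    return filesystems
```

Lines that do not describe a file system, such as the shell prompt or the "No filesystems enabled" message, are simply skipped.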

4 Check the status of the CephFS file system

[root@ceph141 ~]# ceph fs status yinzhengjie-linux-cephfs

yinzhengjie-linux-cephfs - 0 clients

======================

+------+--------+---------+---------------+-------+-------+

| Rank | State | MDS | Activity | dns | inos |

+------+--------+---------+---------------+-------+-------+

| 0 | active | ceph143 | Reqs: 0 /s | 10 | 13 |

+------+--------+---------+---------------+-------+-------+

+---------------------------+----------+-------+-------+

| Pool | type | used | avail |

+---------------------------+----------+-------+-------+

| yinzhengjie-cephfs-metadata | metadata | 1536k | 629G |

| yinzhengjie-cephfs-data | data | 0 | 629G |

+---------------------------+----------+-------+-------+

+-------------+

| Standby MDS |

+-------------+

| ceph142 |

| ceph141 |

+-------------+

MDS version: ceph version 14.2.22 (ca74598065096e6fcbd8433c8779a2be0c889351) nautilus (stable)

[root@ceph141 ~]#
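The `ceph fs status` output is a sequence of ASCII tables (ranks, pools, standby daemons). If it has to be consumed by a script, a generic table reader is enough. This is only a sketch that assumes the border/header/border/rows/border layout seen above; `parse_ascii_tables` is a hypothetical helper, not a Ceph API.

```python
def parse_ascii_tables(text):
    """Split text into tables of the form border, header, border, rows, border."""
    tables = []
    current = None
    borders = 0  # number of "+---+" lines seen for the table being read
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("+") and line.endswith("+"):
            borders += 1
            if borders == 3:  # third border closes the table
                if current is not None:
                    tables.append(current)
                current = None
                borders = 0
        elif line.startswith("|") and line.endswith("|"):
            cells = [cell.strip() for cell in line.strip("|").split("|")]
            if borders == 1:
                current = {"header": cells, "rows": []}
            elif borders == 2 and current is not None:
                current["rows"].append(cells)
    return tables
```

Applied to the output above, the first table yields the rank row for ceph143, and the last table lists ceph142 and ceph141 as standby daemons. Blank lines and the `====` separator are ignored.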

5 Check the cluster information again

[root@ceph141 ~]# ceph mds stat

yinzhengjie-linux-cephfs:1 {0=ceph143=up:active} 2 up:standby

[root@ceph141 ~]#

[root@ceph141 ~]# ceph -s

cluster:

id: 5821e29c-326d-434d-a5b6-c492527eeaad

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph141,ceph142,ceph143 (age 70m)

mgr: ceph142(active, since 70m), standbys: ceph141, ceph143

mds: yinzhengjie-linux-cephfs:1 {0=ceph143=up:active} 2 up:standby

osd: 7 osds: 7 up (since 69m), 7 in (since 19h)

data:

pools: 5 pools, 256 pgs

objects: 82 objects, 100 MiB

usage: 7.8 GiB used, 1.9 TiB / 2.0 TiB avail

pgs: 256 active+clean

[root@ceph141 ~]#

5. Verify MDS high availability

[root@ceph143 ~]# ceph mds stat

yinzhengjie-linux-cephfs:1 {0=ceph143=up:active} 2 up:standby

[root@ceph143 ~]#

[root@ceph143 ~]# systemctl stop ceph-mds@ceph143.service # Stopping the active MDS automatically triggers a failover to a standby.

[root@ceph143 ~]#

[root@ceph143 ~]# ceph mds stat

yinzhengjie-linux-cephfs:1 {0=ceph142=up:active} 1 up:standby

[root@ceph143 ~]#

[root@ceph143 ~]#

[root@ceph143 ~]# systemctl start ceph-mds@ceph143.service

[root@ceph143 ~]#

[root@ceph143 ~]# ceph mds stat # After the restart, ceph143 rejoins as a standby and does not take the active role back.

yinzhengjie-linux-cephfs:1 {0=ceph142=up:active} 2 up:standby

[root@ceph143 ~]#
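The manual check above (stop the active MDS, then look at `ceph mds stat` again) can be turned into a small polling loop. The sketch below is one possible shape, under the assumption that the caller supplies `get_active`, a function returning a `{rank: daemon}` mapping, for example built from parsed `ceph mds stat` output. The function name and its parameters are illustrative.

```python
import time


def wait_for_failover(get_active, rank, old_daemon,
                      timeout=60.0, interval=1.0, sleep=time.sleep):
    """Poll until `rank` is held by a daemon other than `old_daemon`.

    Returns the name of the new holder, or None if the timeout expires first.
    """
    deadline = time.monotonic() + timeout
    while True:
        holder = get_active().get(rank)
        if holder is not None and holder != old_daemon:
            return holder
        if time.monotonic() >= deadline:
            return None
        sleep(interval)


# Simulated sequence matching the transcript: ceph143 is active, the rank is
# briefly unassigned, then ceph142 takes over.
states = iter([{0: "ceph143"}, {}, {0: "ceph142"}])
print(wait_for_failover(lambda: next(states), 0, "ceph143", sleep=lambda s: None))
```

Passing `sleep` as a parameter keeps the helper easy to exercise without real delays. In the transcript, rank 0 moved from ceph143 to ceph142 and stayed there after ceph143 came back.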

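III. CephFS Two-Active, One-Standby Architecture

1. Deploy the CephFS two-active, one-standby environment

1. Check the MDS status of the Ceph cluster before making changes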

[root@ceph141 ~]# ceph mds stat

yinzhengjie-linux-cephfs:1 {0=ceph142=up:active} 2 up:standby

[root@ceph141 ~]#

2. Change max_mds (the default value is 1)

[root@ceph141 ~]# ceph fs get yinzhengjie-linux-cephfs | grep max_mds

max_mds 1

[root@ceph141 ~]#

[root@ceph141 ~]# ceph fs set yinzhengjie-linux-cephfs max_mds 2

[root@ceph141 ~]#

[root@ceph141 ~]# ceph fs get yinzhengjie-linux-cephfs | grep max_mds

max_mds 2

[root@ceph141 ~]#
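If this change is applied from automation rather than typed by hand, it helps to build the argument list in one place and validate the value first. The following is a minimal sketch: the helper names are hypothetical, the command itself is the same `ceph fs set <fs> max_mds <n>` used above, and the `run` parameter exists only so the call can be replaced in a dry run.

```python
import subprocess


def max_mds_command(fs_name, max_mds):
    """Build the argv for `ceph fs set <fs_name> max_mds <max_mds>`."""
    if not isinstance(max_mds, int) or max_mds < 1:
        raise ValueError("max_mds must be a positive integer")
    return ["ceph", "fs", "set", fs_name, "max_mds", str(max_mds)]


def set_max_mds(fs_name, max_mds, run=subprocess.run):
    """Run the command; raises CalledProcessError if ceph exits non-zero."""
    return run(max_mds_command(fs_name, max_mds), check=True)


# Dry run: print the command instead of executing it.
set_max_mds("yinzhengjie-linux-cephfs", 2, run=lambda argv, check: print(" ".join(argv)))
```

Calling `set_max_mds` without the `run` argument executes the real command, so it needs a host with the `ceph` CLI and admin credentials.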

3. Check the cluster status

[root@ceph141 ~]# ceph mds stat

yinzhengjie-linux-cephfs:2 {0=ceph142=up:active,1=ceph141=up:active} 1 up:standby

[root@ceph141 ~]#

[root@ceph141 ~]#

[root@ceph141 ~]# ceph fs status yinzhengjie-linux-cephfs

yinzhengjie-linux-cephfs - 0 clients

======================

+------+--------+---------+---------------+-------+-------+

| Rank | State | MDS | Activity | dns | inos |

+------+--------+---------+---------------+-------+-------+

| 0 | active | ceph142 | Reqs: 0 /s | 10 | 13 |

| 1 | active | ceph141 | Reqs: 0 /s | 10 | 13 |

+------+--------+---------+---------------+-------+-------+

+---------------------------+----------+-------+-------+

| Pool | type | used | avail |

+---------------------------+----------+-------+-------+

| yinzhengjie-cephfs-metadata | metadata | 2688k | 629G |

| yinzhengjie-cephfs-data | data | 0 | 629G |

+---------------------------+----------+-------+-------+

+-------------+

| Standby MDS |

+-------------+

| ceph143 |

+-------------+

MDS version: ceph version 14.2.22 (ca74598065096e6fcbd8433c8779a2be0c889351) nautilus (stable)

[root@ceph141 ~]#

2. Verify the two-active, one-standby architecture

1 Stop the service

[root@ceph142 ~]# ceph mds stat

yinzhengjie-linux-cephfs:2 {0=ceph142=up:active,1=ceph141=up:active} 1 up:standby

[root@ceph142 ~]#

[root@ceph142 ~]# systemctl stop ceph-mds@ceph142.service

[root@ceph142 ~]#

[root@ceph142 ~]# ceph mds stat

yinzhengjie-linux-cephfs:2 {0=ceph143=up:active,1=ceph141=up:active}

[root@ceph142 ~]#

2 Start the service

[root@ceph142 ~]# systemctl start ceph-mds@ceph142.service

[root@ceph142 ~]#

[root@ceph142 ~]# ceph mds stat

yinzhengjie-linux-cephfs:2 {0=ceph143=up:active,1=ceph141=up:active} 1 up:standby

[root@ceph142 ~]#

[root@ceph142 ~]#

3 Check the cluster status again

[root@ceph141 ~]# ceph fs status yinzhengjie-linux-cephfs

yinzhengjie-linux-cephfs - 0 clients

======================

+------+--------+---------+---------------+-------+-------+

| Rank | State | MDS | Activity | dns | inos |

+------+--------+---------+---------------+-------+-------+

| 0 | active | ceph143 | Reqs: 0 /s | 10 | 13 |

| 1 | active | ceph141 | Reqs: 0 /s | 10 | 13 |

+------+--------+---------+---------------+-------+-------+

+---------------------------+----------+-------+-------+

| Pool | type | used | avail |

+---------------------------+----------+-------+-------+

| yinzhengjie-cephfs-metadata | metadata | 2688k | 629G |

| yinzhengjie-cephfs-data | data | 0 | 629G |

+---------------------------+----------+-------+-------+

+-------------+

| Standby MDS |

+-------------+

| ceph142 |

+-------------+

MDS version: ceph version 14.2.22 (ca74598065096e6fcbd8433c8779a2be0c889351) nautilus (stable)

[root@ceph141 ~]#

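In summary, keep at least one standby MDS. If every MDS is configured as active, the file system works normally at first, but as soon as any one active MDS fails there is no standby left to take over its rank, and the CephFS cluster stops working.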
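The recommendation above comes down to simple arithmetic on the number of MDS daemons and `max_mds`. As a rough sketch (the function name and result keys are made up for illustration), a planning check could look like this:

```python
def mds_layout(daemons, max_mds):
    """Estimate active and standby counts for a given number of MDS daemons."""
    if daemons < 0 or max_mds < 1:
        raise ValueError("daemons must be >= 0 and max_mds must be >= 1")
    active = min(daemons, max_mds)
    standby = daemons - active
    return {
        "active": active,
        "standby": standby,
        # At least one standby is needed to replace a failed active MDS.
        "has_standby": standby >= 1,
    }


for max_mds in (1, 2, 3):
    print(max_mds, mds_layout(3, max_mds))
```

With the three daemons used in this guide, `max_mds` of 1 or 2 leaves a standby available, while `max_mds` of 3 leaves none, which is the configuration the summary warns against.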

This article is from cnblogs (博客园). Author: 尹正杰 (Yin Zhengjie). When reposting, please credit the original link: https://www.cnblogs.com/yinzhengjie/p/14284744.html
