Configuring HTTPS on a Hadoop Cluster: A Hands-On Walkthrough


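Author: Yin Zhengjie (尹正杰)

Copyright notice: This is an original work. Reproduction is not permitted, and violations will be pursued legally.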

I. Generate the private key and certificate and copy them to the Hadoop nodes

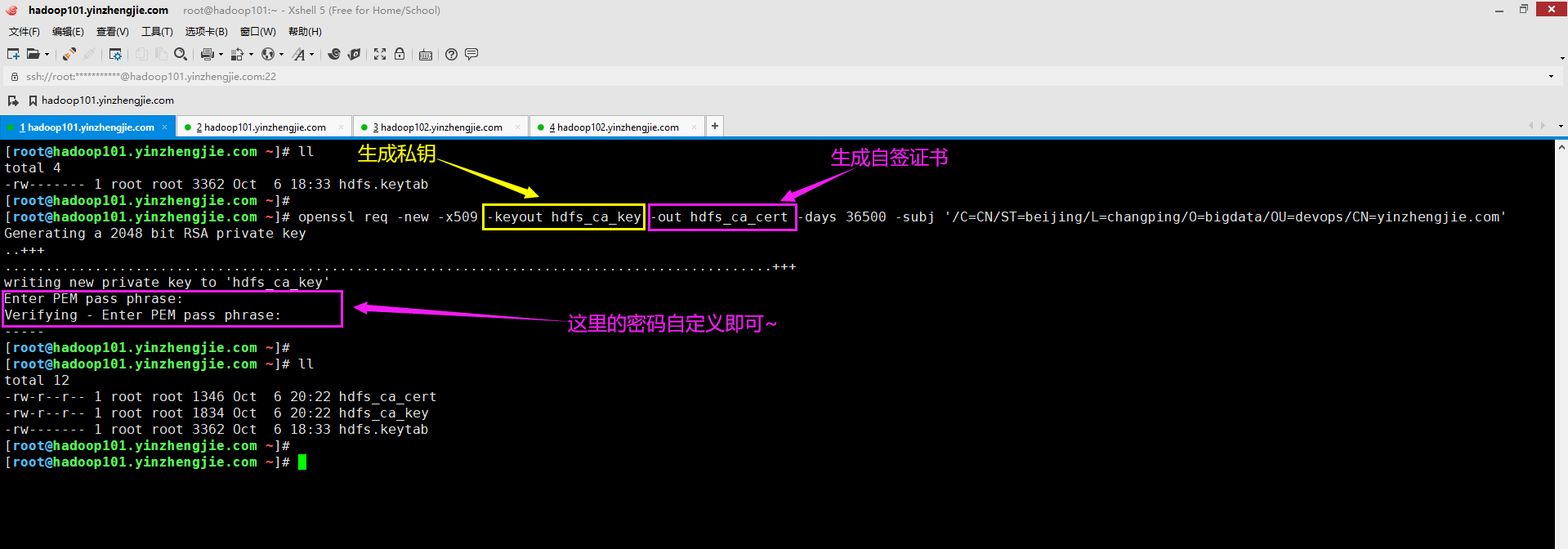

1>. Generate the private key and certificate

[root@hadoop101.yinzhengjie.com ~]# ll
total 4
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# openssl req -new -x509 -keyout hdfs_ca_key -out hdfs_ca_cert -days 36500 -subj '/C=CN/ST=beijing/L=changping/O=bigdata/OU=devops/CN=yinzhengjie.com'
Generating a 2048 bit RSA private key
..+++
..............................................................................................+++
writing new private key to 'hdfs_ca_key'
Enter PEM pass phrase:
Verifying - Enter PEM pass phrase:
-----
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# ll
total 12
-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
[root@hadoop101.yinzhengjie.com ~]#
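The same command can be run without interactive prompts, which helps when the step is scripted. The sketch below is one possible way to do that, not the author's method: `make_ca` and the `CA_PASS` environment variable are hypothetical names introduced here, and the `umask 077` is an added precaution so the key is not created world-readable (the listing above shows it with mode 0644).

```shell
#!/usr/bin/env bash
# Sketch: create the CA key and certificate without interactive prompts.
# make_ca and CA_PASS are hypothetical names, not part of the original post.
set -euo pipefail

make_ca() {
  local outdir="$1"
  (
    # New files created in this subshell are readable by the owner only.
    umask 077
    cd "$outdir"
    # -passout env:CA_PASS reads the key passphrase from the environment
    # instead of prompting for it.
    openssl req -new -x509 \
      -keyout hdfs_ca_key -out hdfs_ca_cert \
      -days 36500 \
      -passout env:CA_PASS \
      -subj '/C=CN/ST=beijing/L=changping/O=bigdata/OU=devops/CN=yinzhengjie.com'
    # Print the subject and validity window to confirm what was generated.
    openssl x509 -in hdfs_ca_cert -noout -subject -dates
  )
}

# Example (assumes CA_PASS has been exported beforehand):
# make_ca "$HOME"
```

Reading the passphrase from the environment keeps it out of the shell history, although it is still visible to processes that can inspect the environment.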

2>. Copy the private key and self-signed certificate to the Hadoop cluster nodes

[root@hadoop101.yinzhengjie.com ~]# ll
total 12
-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# ansible all -m copy -a "src=~/hdfs_ca_cert dest=${HADOOP_HOME}/etc/hadoop/conf"
hadoop104.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "a8f408afafd58c73986a8d2a305c72c524be1064", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs_ca_cert", "gid": 0, "group": "root", "md5sum": "1eccd159e93db3ecbd9f8a6f5da37516", "mode": "0644", "owner": "root", "size": 1346, "src": "/root/.ansible/tmp/ansible-tmp-1601987750.21-12128-10738301489038/source", "state": "file", "uid": 0 }
hadoop105.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "a8f408afafd58c73986a8d2a305c72c524be1064", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs_ca_cert", "gid": 0, "group": "root", "md5sum": "1eccd159e93db3ecbd9f8a6f5da37516", "mode": "0644", "owner": "root", "size": 1346, "src": "/root/.ansible/tmp/ansible-tmp-1601987750.25-12130-180011782497170/source", "state": "file", "uid": 0 }
hadoop102.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "a8f408afafd58c73986a8d2a305c72c524be1064", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs_ca_cert", "gid": 0, "group": "root", "md5sum": "1eccd159e93db3ecbd9f8a6f5da37516", "mode": "0644", "owner": "root", "size": 1346, "src": "/root/.ansible/tmp/ansible-tmp-1601987750.22-12125-24658832547026/source", "state": "file", "uid": 0 }
hadoop103.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "a8f408afafd58c73986a8d2a305c72c524be1064", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs_ca_cert", "gid": 0, "group": "root", "md5sum": "1eccd159e93db3ecbd9f8a6f5da37516", "mode": "0644", "owner": "root", "size": 1346, "src": "/root/.ansible/tmp/ansible-tmp-1601987750.18-12127-102283570422989/source", "state": "file", "uid": 0 }
hadoop101.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "a8f408afafd58c73986a8d2a305c72c524be1064", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs_ca_cert", "gid": 0, "group": "root", "md5sum": "1eccd159e93db3ecbd9f8a6f5da37516", "mode": "0644", "owner": "root", "size": 1346, "src": "/root/.ansible/tmp/ansible-tmp-1601987750.25-12129-1128583651866/source", "state": "file", "uid": 0 }
[root@hadoop101.yinzhengjie.com ~]#

[root@hadoop101.yinzhengjie.com ~]# ll
total 12
-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# ansible all -m copy -a "src=~/hdfs_ca_key dest=${HADOOP_HOME}/etc/hadoop/conf"
hadoop104.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "a4a65fd1fe2d89af140ac1acc36ec6b62f9b5806", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs_ca_key", "gid": 0, "group": "root", "md5sum": "6c00b94f93f9424895e5954fdf4be26e", "mode": "0644", "owner": "root", "size": 1834, "src": "/root/.ansible/tmp/ansible-tmp-1601987788.65-12397-87419418248032/source", "state": "file", "uid": 0 }
hadoop103.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "a4a65fd1fe2d89af140ac1acc36ec6b62f9b5806", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs_ca_key", "gid": 0, "group": "root", "md5sum": "6c00b94f93f9424895e5954fdf4be26e", "mode": "0644", "owner": "root", "size": 1834, "src": "/root/.ansible/tmp/ansible-tmp-1601987788.64-12396-52377313403452/source", "state": "file", "uid": 0 }
hadoop102.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "a4a65fd1fe2d89af140ac1acc36ec6b62f9b5806", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs_ca_key", "gid": 0, "group": "root", "md5sum": "6c00b94f93f9424895e5954fdf4be26e", "mode": "0644", "owner": "root", "size": 1834, "src": "/root/.ansible/tmp/ansible-tmp-1601987788.63-12394-76433468569818/source", "state": "file", "uid": 0 }
hadoop105.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "a4a65fd1fe2d89af140ac1acc36ec6b62f9b5806", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs_ca_key", "gid": 0, "group": "root", "md5sum": "6c00b94f93f9424895e5954fdf4be26e", "mode": "0644", "owner": "root", "size": 1834, "src": "/root/.ansible/tmp/ansible-tmp-1601987788.66-12400-246529012746559/source", "state": "file", "uid": 0 }
hadoop101.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "a4a65fd1fe2d89af140ac1acc36ec6b62f9b5806", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs_ca_key", "gid": 0, "group": "root", "md5sum": "6c00b94f93f9424895e5954fdf4be26e", "mode": "0644", "owner": "root", "size": 1834, "src": "/root/.ansible/tmp/ansible-tmp-1601987788.65-12399-1022475281042/source", "state": "file", "uid": 0 }
[root@hadoop101.yinzhengjie.com ~]#

[root@hadoop101.yinzhengjie.com ~]# ansible all -m shell -a "ls -l ${HADOOP_HOME}/etc/hadoop/conf"
hadoop104.yinzhengjie.com | CHANGED | rc=0 >>
total 12
-rw-r--r-- 1 root root 1346 Oct 6 20:35 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:36 hdfs_ca_key
-rw-r--r-- 1 root root 3362 Oct 6 18:39 hdfs.keytab
hadoop102.yinzhengjie.com | CHANGED | rc=0 >>
total 12
-rw-r--r-- 1 root root 1346 Oct 6 20:35 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:36 hdfs_ca_key
-rw-r--r-- 1 root root 3362 Oct 6 18:39 hdfs.keytab
hadoop101.yinzhengjie.com | CHANGED | rc=0 >>
total 20
-rw-r--r-- 1 root root 1346 Oct 6 20:35 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:36 hdfs_ca_key
-rw-r--r-- 1 root root 3362 Oct 6 18:39 hdfs.keytab
-rw-r--r-- 1 root root 115 Aug 13 18:55 host-rack.txt
-rwxr-xr-x 1 root root 463 Aug 13 18:54 toplogy.py
hadoop105.yinzhengjie.com | CHANGED | rc=0 >>
total 12
-rw-r--r-- 1 root root 1346 Oct 6 20:35 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:36 hdfs_ca_key
-rw-r--r-- 1 root root 3362 Oct 6 18:39 hdfs.keytab
hadoop103.yinzhengjie.com | CHANGED | rc=0 >>
total 12
-rw-r--r-- 1 root root 1346 Oct 6 20:35 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:36 hdfs_ca_key
-rw-r--r-- 1 root root 3362 Oct 6 18:39 hdfs.keytab
[root@hadoop101.yinzhengjie.com ~]#
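The "checksum" field that the Ansible copy module reports for each host is a SHA-1 digest of the file, so it can be compared against the local source file to confirm that every node received identical content. A minimal sketch, assuming GNU `sha1sum` is available; `matches_checksum` is a hypothetical helper name introduced for illustration.

```shell
#!/usr/bin/env bash
# Sketch: compare a local file's SHA-1 with the "checksum" value that
# Ansible's copy module prints for each host.
set -euo pipefail

matches_checksum() {
  local file="$1" expected="$2" actual
  # sha1sum prints "<hash>  <filename>"; keep only the hash.
  actual="$(sha1sum "$file" | awk '{print $1}')"
  [ "$actual" = "$expected" ]
}

# Example, using the checksum reported for hdfs_ca_cert in the output above:
# matches_checksum ~/hdfs_ca_cert a8f408afafd58c73986a8d2a305c72c524be1064 && echo "match"
```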

II. Generate the "keystore" and "truststore" files

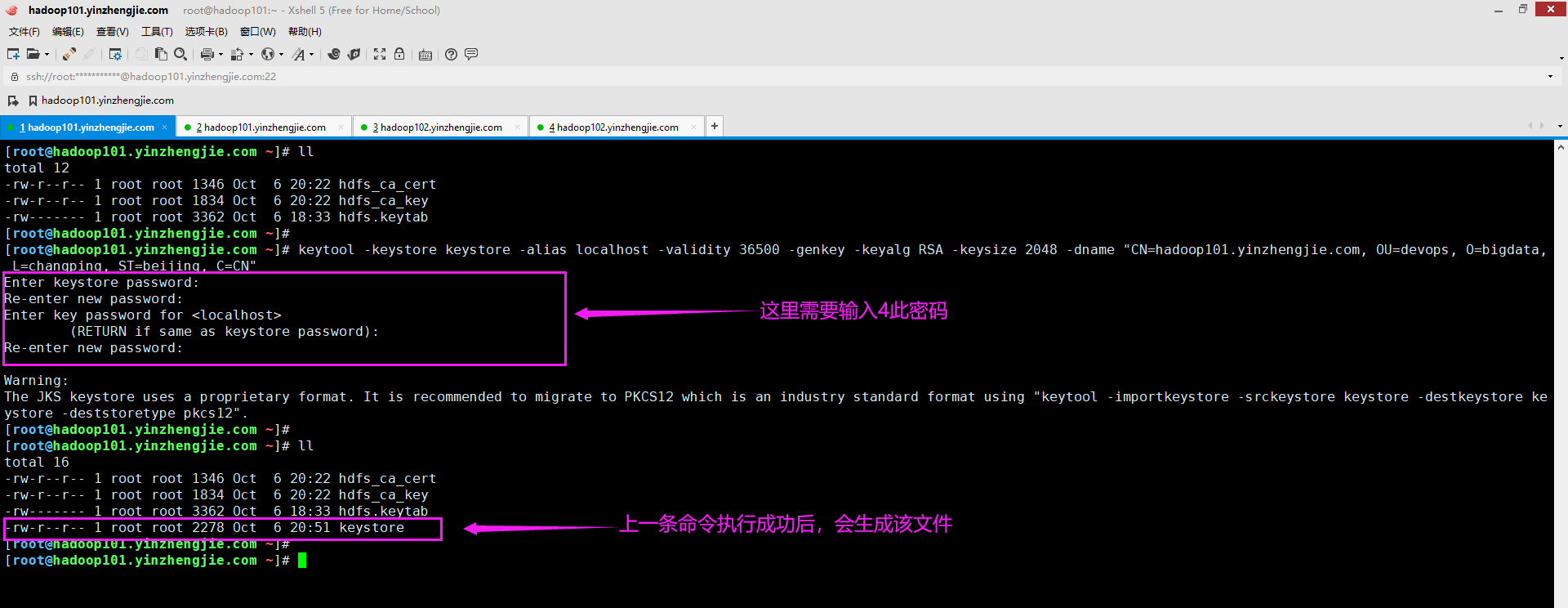

1>. Generate the keystore file

[root@hadoop101.yinzhengjie.com ~]# ll
total 12
-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# keytool -keystore keystore -alias localhost -validity 36500 -genkey -keyalg RSA -keysize 2048 -dname "CN=hadoop101.yinzhengjie.com, OU=devops, O=bigdata, L=changping, ST=beijing, C=CN"
Enter keystore password:
Re-enter new password:
Enter key password for <localhost>
(RETURN if same as keystore password):
Re-enter new password:
Warning:
The JKS keystore uses a proprietary format. It is recommended to migrate to PKCS12 which is an industry standard format using "keytool -importkeystore -srckeystore keystore -destkeystore keystore -deststoretype pkcs12".
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# ll
total 16
-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
-rw-r--r-- 1 root root 2278 Oct 6 20:51 keystore
[root@hadoop101.yinzhengjie.com ~]#

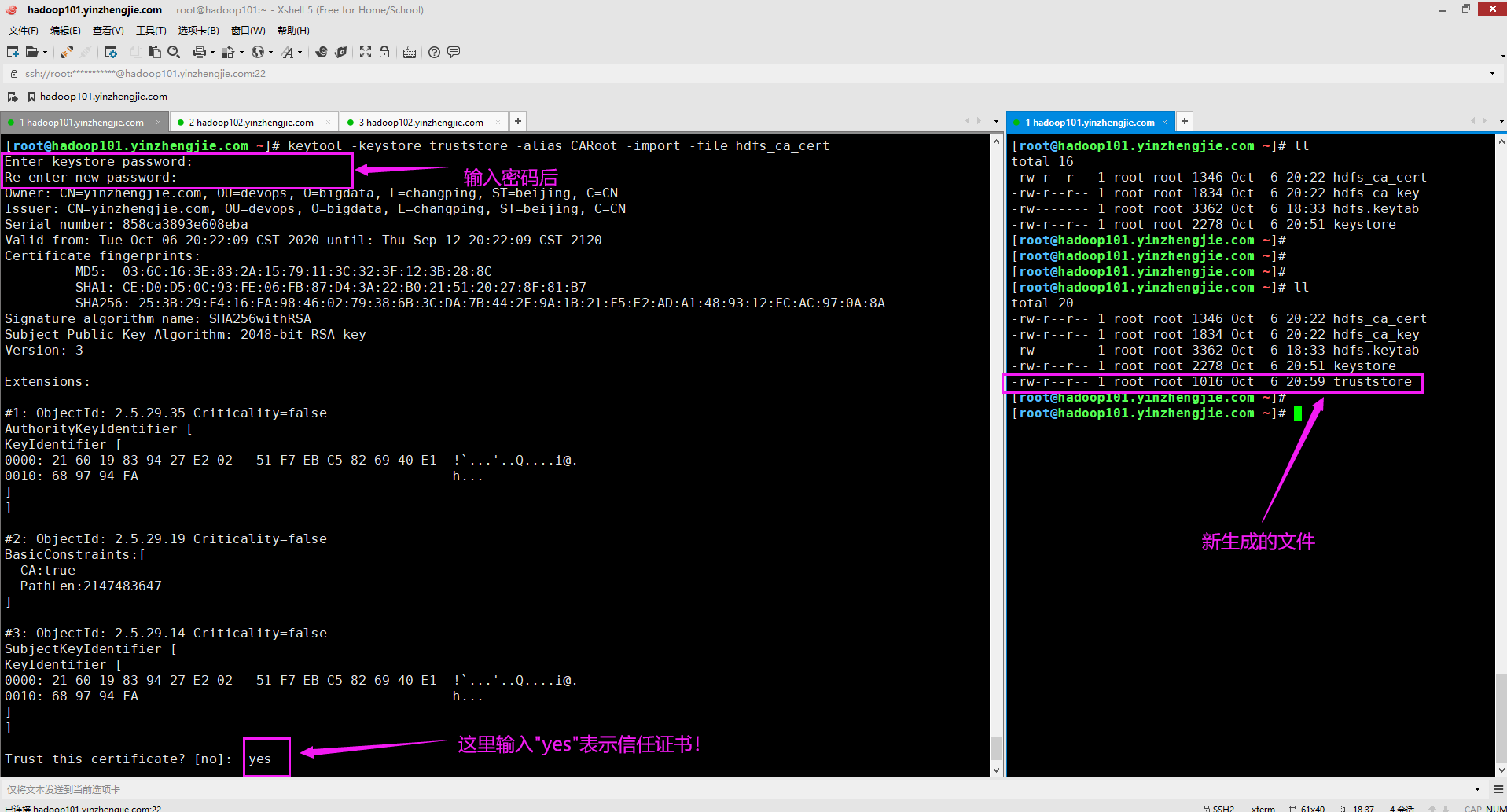

2>. Create the truststore file and import the CA certificate into it

[root@hadoop101.yinzhengjie.com ~]# ll
total 16
-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
-rw-r--r-- 1 root root 2278 Oct 6 20:51 keystore
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# keytool -keystore truststore -alias CARoot -import -file hdfs_ca_cert
Enter keystore password:
Re-enter new password:
Owner: CN=yinzhengjie.com, OU=devops, O=bigdata, L=changping, ST=beijing, C=CN
Issuer: CN=yinzhengjie.com, OU=devops, O=bigdata, L=changping, ST=beijing, C=CN
Serial number: 858ca3893e608eba
Valid from: Tue Oct 06 20:22:09 CST 2020 until: Thu Sep 12 20:22:09 CST 2120
Certificate fingerprints:
  MD5: 03:6C:16:3E:83:2A:15:79:11:3C:32:3F:12:3B:28:8C
  SHA1: CE:D0:D5:0C:93:FE:06:FB:87:D4:3A:22:B0:21:51:20:27:8F:81:B7
  SHA256: 25:3B:29:F4:16:FA:98:46:02:79:38:6B:3C:DA:7B:44:2F:9A:1B:21:F5:E2:AD:A1:48:93:12:FC:AC:97:0A:8A
Signature algorithm name: SHA256withRSA
Subject Public Key Algorithm: 2048-bit RSA key
Version: 3
Extensions:
#1: ObjectId: 2.5.29.35 Criticality=false
AuthorityKeyIdentifier [
KeyIdentifier [
0000: 21 60 19 83 94 27 E2 02 51 F7 EB C5 82 69 40 E1 !`...'..Q....i@.
0010: 68 97 94 FA h...
]
]
#2: ObjectId: 2.5.29.19 Criticality=false
BasicConstraints:[
  CA:true
  PathLen:2147483647
]
#3: ObjectId: 2.5.29.14 Criticality=false
SubjectKeyIdentifier [
KeyIdentifier [
0000: 21 60 19 83 94 27 E2 02 51 F7 EB C5 82 69 40 E1 !`...'..Q....i@.
0010: 68 97 94 FA h...
]
]
Trust this certificate? [no]: yes
Certificate was added to keystore
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# ll
total 20
-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
-rw-r--r-- 1 root root 2278 Oct 6 20:51 keystore
-rw-r--r-- 1 root root 1016 Oct 6 20:59 truststore
[root@hadoop101.yinzhengjie.com ~]#

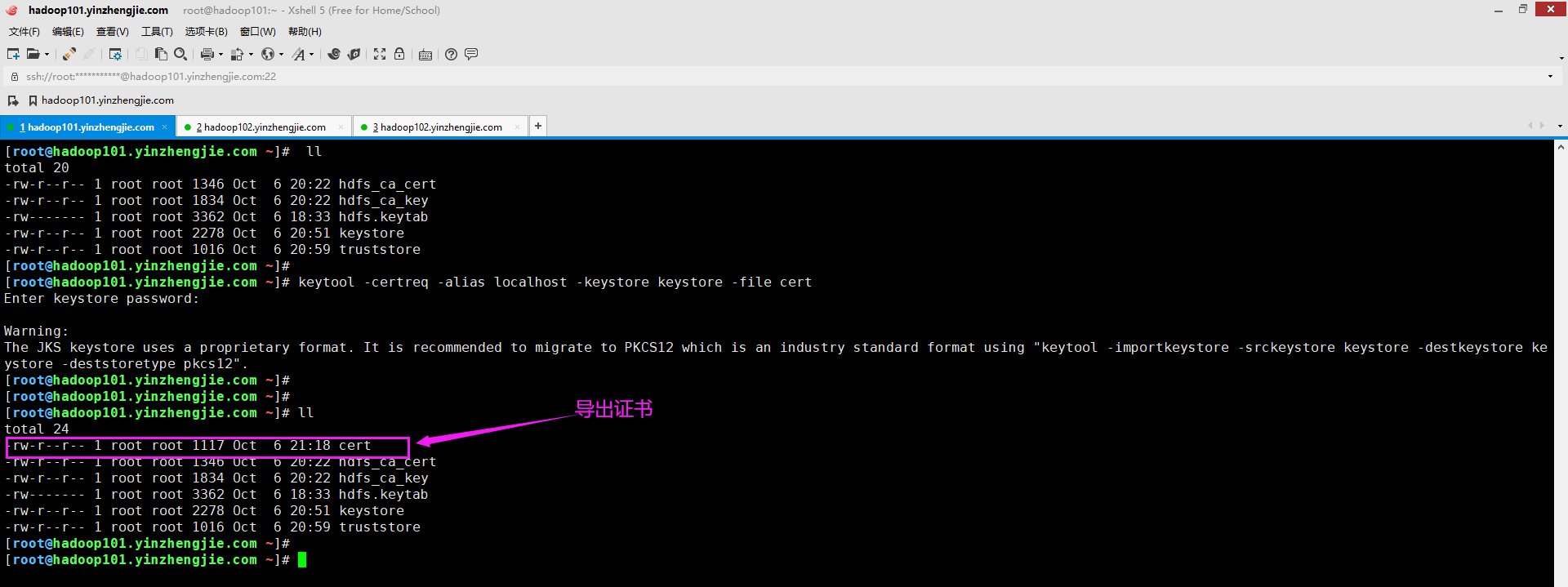

3>. Export a certificate signing request (the file named cert) from the keystore

[root@hadoop101.yinzhengjie.com ~]# ll
total 20
-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
-rw-r--r-- 1 root root 2278 Oct 6 20:51 keystore
-rw-r--r-- 1 root root 1016 Oct 6 20:59 truststore
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# keytool -certreq -alias localhost -keystore keystore -file cert
Enter keystore password:
Warning:
The JKS keystore uses a proprietary format. It is recommended to migrate to PKCS12 which is an industry standard format using "keytool -importkeystore -srckeystore keystore -destkeystore keystore -deststoretype pkcs12".
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# ll
total 24
-rw-r--r-- 1 root root 1117 Oct 6 21:18 cert
-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
-rw-r--r-- 1 root root 2278 Oct 6 20:51 keystore
-rw-r--r-- 1 root root 1016 Oct 6 20:59 truststore
[root@hadoop101.yinzhengjie.com ~]#

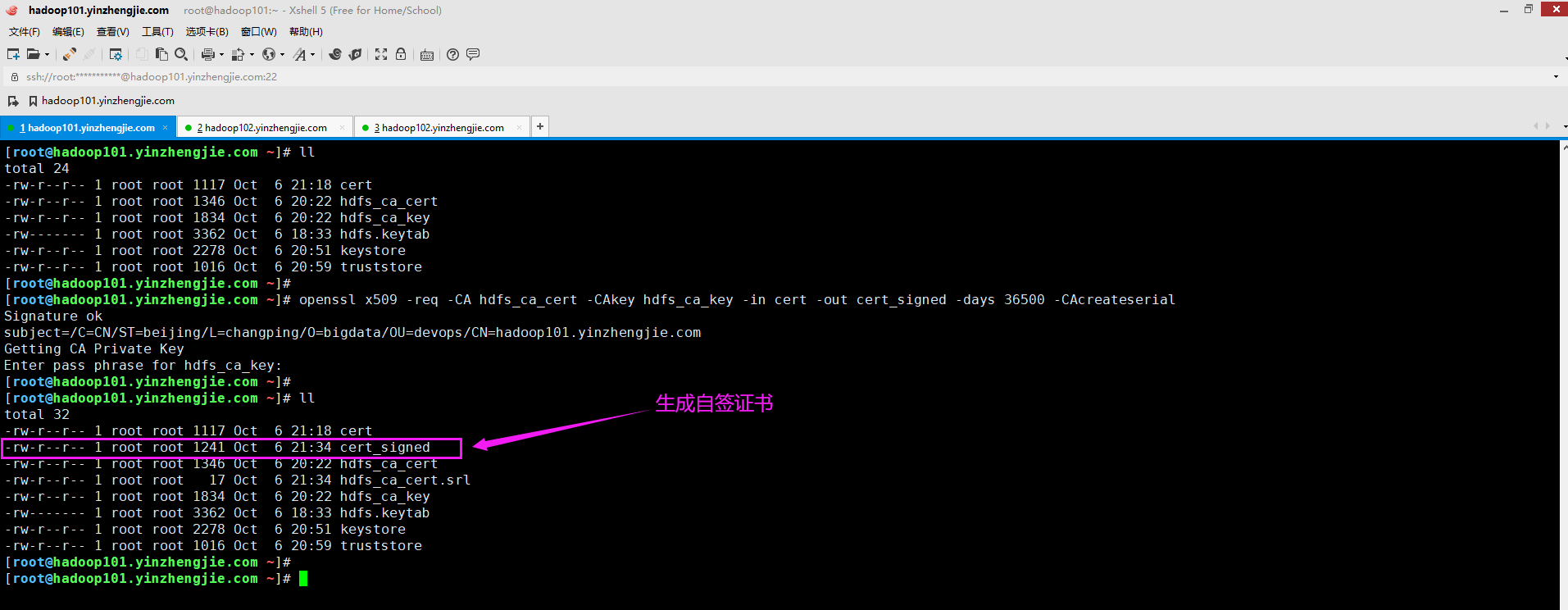

4>. Sign cert with the CA to produce the signed certificate (cert_signed)

[root@hadoop101.yinzhengjie.com ~]# ll
total 24
-rw-r--r-- 1 root root 1117 Oct 6 21:18 cert
-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert
-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
-rw-r--r-- 1 root root 2278 Oct 6 20:51 keystore
-rw-r--r-- 1 root root 1016 Oct 6 20:59 truststore
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# openssl x509 -req -CA hdfs_ca_cert -CAkey hdfs_ca_key -in cert -out cert_signed -days 36500 -CAcreateserial
Signature ok
subject=/C=CN/ST=beijing/L=changping/O=bigdata/OU=devops/CN=hadoop101.yinzhengjie.com
Getting CA Private Key
Enter pass phrase for hdfs_ca_key:
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# ll
total 32
-rw-r--r-- 1 root root 1117 Oct 6 21:18 cert
-rw-r--r-- 1 root root 1241 Oct 6 21:34 cert_signed
-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert
-rw-r--r-- 1 root root 17 Oct 6 21:34 hdfs_ca_cert.srl
-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key
-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
-rw-r--r-- 1 root root 2278 Oct 6 20:51 keystore
-rw-r--r-- 1 root root 1016 Oct 6 20:59 truststore
[root@hadoop101.yinzhengjie.com ~]#
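Before importing the signed certificate into the keystore, it may be worth confirming that it really chains back to the CA. The sketch below is an optional check added here, not a step from the original post; `verify_signed` is a hypothetical helper that wraps two standard openssl subcommands.

```shell
#!/usr/bin/env bash
# Sketch: check that a signed certificate validates against the CA certificate.
# verify_signed is a hypothetical helper name.
set -euo pipefail

verify_signed() {
  local ca_cert="$1" signed="$2"
  # Returns 0 only when the signature chain validates against the CA.
  openssl verify -CAfile "$ca_cert" "$signed"
  # Show who issued the certificate and for which subject.
  openssl x509 -in "$signed" -noout -issuer -subject
}

# Example, using the file names from this step:
# verify_signed hdfs_ca_cert cert_signed
```

If the check passes, the issuer line should name the CA (CN=yinzhengjie.com) and the subject line should carry the node's own FQDN.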

5>. Import the CA certificate into the keystore file

[root@hadoop101.yinzhengjie.com ~]# keytool -keystore keystore -alias CARoot -import -file hdfs_ca_cert
Enter keystore password:
Owner: CN=yinzhengjie.com, OU=devops, O=bigdata, L=changping, ST=beijing, C=CN
Issuer: CN=yinzhengjie.com, OU=devops, O=bigdata, L=changping, ST=beijing, C=CN
Serial number: 858ca3893e608eba
Valid from: Tue Oct 06 20:22:09 CST 2020 until: Thu Sep 12 20:22:09 CST 2120
Certificate fingerprints:
  MD5: 03:6C:16:3E:83:2A:15:79:11:3C:32:3F:12:3B:28:8C
  SHA1: CE:D0:D5:0C:93:FE:06:FB:87:D4:3A:22:B0:21:51:20:27:8F:81:B7
  SHA256: 25:3B:29:F4:16:FA:98:46:02:79:38:6B:3C:DA:7B:44:2F:9A:1B:21:F5:E2:AD:A1:48:93:12:FC:AC:97:0A:8A
Signature algorithm name: SHA256withRSA
Subject Public Key Algorithm: 2048-bit RSA key
Version: 3
Extensions:
#1: ObjectId: 2.5.29.35 Criticality=false
AuthorityKeyIdentifier [
KeyIdentifier [
0000: 21 60 19 83 94 27 E2 02 51 F7 EB C5 82 69 40 E1 !`...'..Q....i@.
0010: 68 97 94 FA h...
]
]
#2: ObjectId: 2.5.29.19 Criticality=false
BasicConstraints:[
  CA:true
  PathLen:2147483647
]
#3: ObjectId: 2.5.29.14 Criticality=false
SubjectKeyIdentifier [
KeyIdentifier [
0000: 21 60 19 83 94 27 E2 02 51 F7 EB C5 82 69 40 E1 !`...'..Q....i@.
0010: 68 97 94 FA h...
]
]
Trust this certificate? [no]: yes
Certificate was added to keystore
Warning:
The JKS keystore uses a proprietary format. It is recommended to migrate to PKCS12 which is an industry standard format using "keytool -importkeystore -srckeystore keystore -destkeystore keystore -deststoretype pkcs12".
[root@hadoop101.yinzhengjie.com ~]#

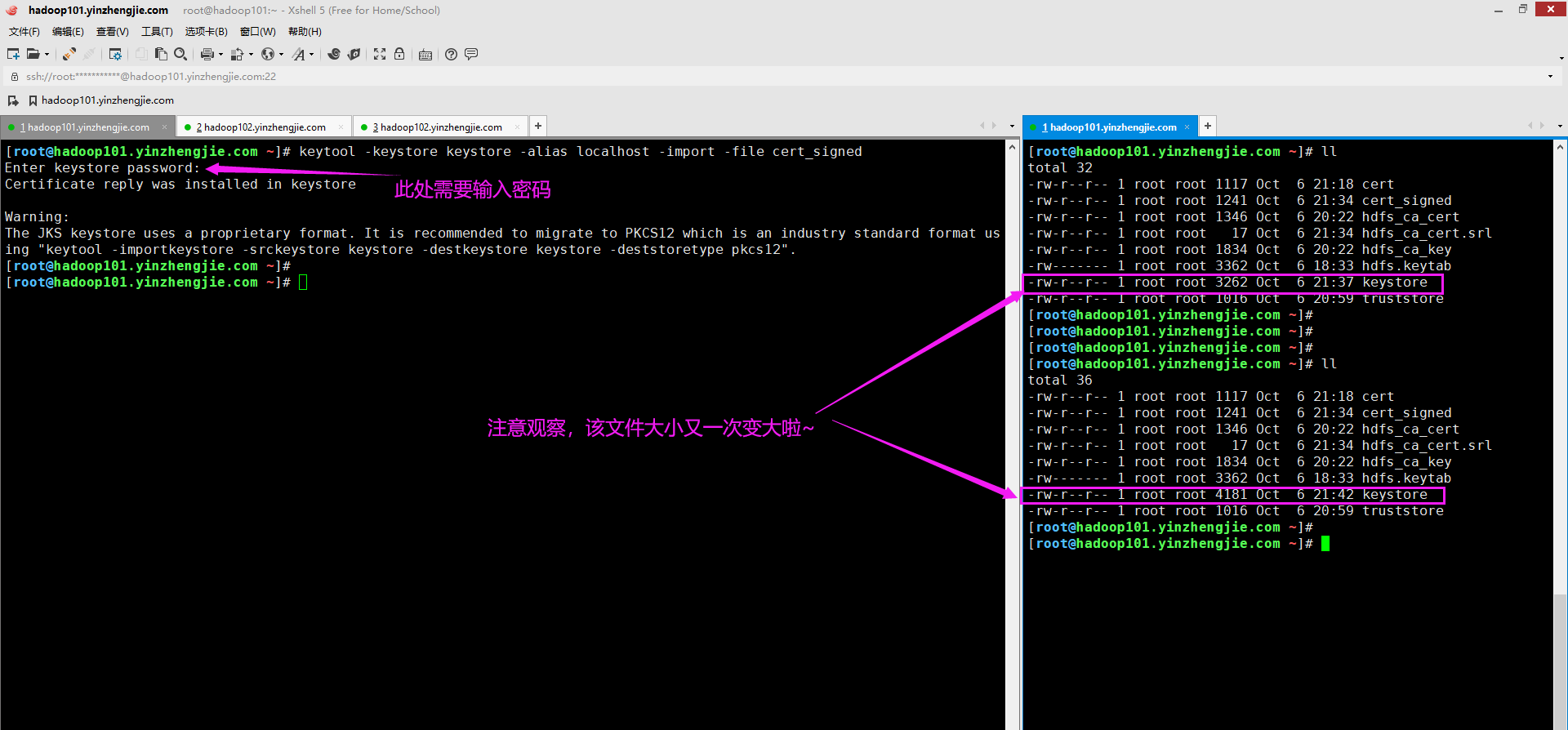

6>. Import the CA-signed certificate into the keystore file as well

[root@hadoop101.yinzhengjie.com ~]# keytool -keystore keystore -alias localhost -import -file cert_signed
Enter keystore password:
Certificate reply was installed in keystore
Warning:
The JKS keystore uses a proprietary format. It is recommended to migrate to PKCS12 which is an industry standard format using "keytool -importkeystore -srckeystore keystore -destkeystore keystore -deststoretype pkcs12".
[root@hadoop101.yinzhengjie.com ~]#

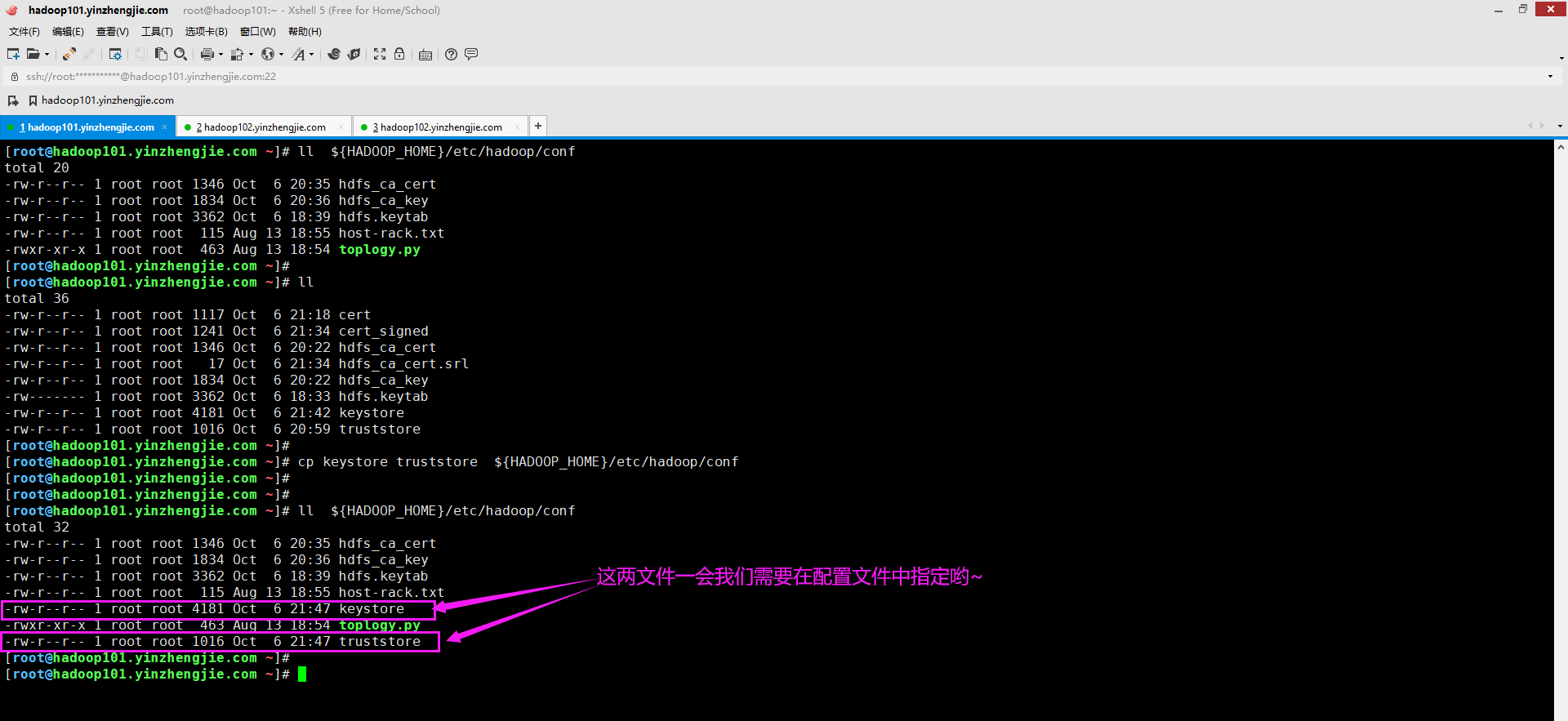

7>. Copy the keystore and truststore into a dedicated directory so they are easy to reference during configuration

[root@hadoop101.yinzhengjie.com ~]# ll ${HADOOP_HOME}/etc/hadoop/conf

total 20

-rw-r--r-- 1 root root 1346 Oct 6 20:35 hdfs_ca_cert

-rw-r--r-- 1 root root 1834 Oct 6 20:36 hdfs_ca_key

-rw-r--r-- 1 root root 3362 Oct 6 18:39 hdfs.keytab

-rw-r--r-- 1 root root 115 Aug 13 18:55 host-rack.txt

-rwxr-xr-x 1 root root 463 Aug 13 18:54 toplogy.py

[root@hadoop101.yinzhengjie.com ~]#

[root@hadoop101.yinzhengjie.com ~]# ll

total 36

-rw-r--r-- 1 root root 1117 Oct 6 21:18 cert

-rw-r--r-- 1 root root 1241 Oct 6 21:34 cert_signed

-rw-r--r-- 1 root root 1346 Oct 6 20:22 hdfs_ca_cert

-rw-r--r-- 1 root root 17 Oct 6 21:34 hdfs_ca_cert.srl

-rw-r--r-- 1 root root 1834 Oct 6 20:22 hdfs_ca_key

-rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab

-rw-r--r-- 1 root root 4181 Oct 6 21:42 keystore

-rw-r--r-- 1 root root 1016 Oct 6 20:59 truststore

[root@hadoop101.yinzhengjie.com ~]#

[root@hadoop101.yinzhengjie.com ~]# cp keystore truststore ${HADOOP_HOME}/etc/hadoop/conf

[root@hadoop101.yinzhengjie.com ~]#

[root@hadoop101.yinzhengjie.com ~]#

[root@hadoop101.yinzhengjie.com ~]# ll ${HADOOP_HOME}/etc/hadoop/conf

total 32

-rw-r--r-- 1 root root 1346 Oct 6 20:35 hdfs_ca_cert

-rw-r--r-- 1 root root 1834 Oct 6 20:36 hdfs_ca_key

-rw-r--r-- 1 root root 3362 Oct 6 18:39 hdfs.keytab

-rw-r--r-- 1 root root 115 Aug 13 18:55 host-rack.txt

-rw-r--r-- 1 root root 4181 Oct 6 21:47 keystore

-rwxr-xr-x 1 root root 463 Aug 13 18:54 toplogy.py

-rw-r--r-- 1 root root 1016 Oct 6 21:47 truststore

[root@hadoop101.yinzhengjie.com ~]#

[root@hadoop101.yinzhengjie.com ~]#

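8>. Repeat all of steps [1-7] on the other nodes in the cluster

Tip:

Pay close attention to each step above. The FQDN, for example, differs per node, because every node has its own hostname.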
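Because only the CN changes from node to node, the per-node work can be wrapped in a function that takes the FQDN as its argument. This is a rough sketch under stated assumptions rather than the author's procedure: `make_dname`, `build_stores`, `STORE_PASS` and `CA_PASS` are hypothetical names, the store and key passwords are assumed to be identical, and passwords given via `-storepass` are visible in the process list, so interactive prompts remain the safer choice on shared hosts.

```shell
#!/usr/bin/env bash
# Sketch: steps 1-6 of this section, parameterized by the node's FQDN.
# STORE_PASS and CA_PASS are hypothetical environment variables.
set -euo pipefail

# Build the distinguished name for one node; only the CN differs per host.
make_dname() {
  printf 'CN=%s, OU=devops, O=bigdata, L=changping, ST=beijing, C=CN' "$1"
}

# Run in a directory that already contains hdfs_ca_cert and hdfs_ca_key.
build_stores() {
  local fqdn="$1"
  # Step 1: key pair for this node.
  keytool -genkeypair -keystore keystore -alias localhost -validity 36500 \
    -keyalg RSA -keysize 2048 -dname "$(make_dname "$fqdn")" \
    -storepass "$STORE_PASS" -keypass "$STORE_PASS"
  # Step 2: truststore holding the CA certificate.
  keytool -importcert -noprompt -keystore truststore -alias CARoot \
    -file hdfs_ca_cert -storepass "$STORE_PASS"
  # Step 3: certificate signing request.
  keytool -certreq -alias localhost -keystore keystore -file cert \
    -storepass "$STORE_PASS"
  # Step 4: sign the request with the CA key.
  openssl x509 -req -CA hdfs_ca_cert -CAkey hdfs_ca_key -in cert \
    -out cert_signed -days 36500 -CAcreateserial -passin env:CA_PASS
  # Steps 5 and 6: CA certificate first, then the signed reply.
  keytool -importcert -noprompt -keystore keystore -alias CARoot \
    -file hdfs_ca_cert -storepass "$STORE_PASS"
  keytool -importcert -keystore keystore -alias localhost \
    -file cert_signed -storepass "$STORE_PASS"
}

# Example, run on hadoop102 itself:
# build_stores hadoop102.yinzhengjie.com
```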

III. Modify the "hdfs-site.xml" configuration file

1>. Edit the contents of "hdfs-site.xml"

[root@hadoop101.yinzhengjie.com ~]# vim /yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml
<configuration>
  ...
  <property>
    <name>dfs.http.policy</name>
    <value>HTTPS_ONLY</value>
    <description>Determines whether HDFS supports HTTPS (SSL). This configures the HTTP endpoints of the HDFS daemons. The default value is "HTTP_ONLY" (serve over HTTP only); the alternatives are "HTTPS_ONLY" (serve over HTTPS only) and "HTTP_AND_HTTPS" (serve over both HTTP and HTTPS).</description>
  </property>
  ...
</configuration>
[root@hadoop101.yinzhengjie.com ~]#

Tip:
On DataNode nodes, set "dfs.http.policy" to "HTTPS_ONLY"; on the NameNode and Secondary NameNode, set it to "HTTP_AND_HTTPS".
If you set the property to "HTTPS_ONLY" on every node of the HDFS cluster, the NameNode and Secondary NameNode processes still start successfully, but their Web UI instances fail to start (in other words, you can no longer reach port 50070 on the NameNode or port 50090 on the Secondary NameNode).
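The role-to-policy rule from the tip can be written down as a small lookup, which is handy when a script renders a different hdfs-site.xml per role. This is only an illustration of the tip; `policy_for_role` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: map an HDFS daemon role to the dfs.http.policy value recommended
# in the tip. policy_for_role is a hypothetical helper name.
set -euo pipefail

policy_for_role() {
  case "$1" in
    datanode)
      echo "HTTPS_ONLY" ;;
    namenode|secondarynamenode)
      echo "HTTP_AND_HTTPS" ;;
    *)
      echo "unknown role: $1" >&2
      return 1 ;;
  esac
}

# Example:
# policy_for_role datanode
# To see the value a node actually resolves from its configuration files:
# hdfs getconf -confKey dfs.http.policy
```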

2>. Sync the configuration to the other DataNode nodes in the cluster

[root@hadoop101.yinzhengjie.com ~]# ansible all -m copy -a "src=${HADOOP_HOME}/etc/hadoop/hdfs-site.xml dest=${HADOOP_HOME}/etc/hadoop/"
hadoop101.yinzhengjie.com | SUCCESS => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": false, "checksum": "0adc0d87b88313df17ba1deb2d68a359c8dc9be4", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml", "gid": 190, "group": "systemd-journal", "mode": "0644", "owner": "12334", "path": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml", "size": 11470, "state": "file", "uid": 12334 }
hadoop103.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "0adc0d87b88313df17ba1deb2d68a359c8dc9be4", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml", "gid": 0, "group": "root", "md5sum": "87d3fa9d1b93d03fd2b509508086def4", "mode": "0644", "owner": "root", "size": 11470, "src": "/root/.ansible/tmp/ansible-tmp-1602000461.64-14771-240997395125955/source", "state": "file", "uid": 0 }
hadoop105.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "0adc0d87b88313df17ba1deb2d68a359c8dc9be4", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml", "gid": 0, "group": "root", "md5sum": "87d3fa9d1b93d03fd2b509508086def4", "mode": "0644", "owner": "root", "size": 11470, "src": "/root/.ansible/tmp/ansible-tmp-1602000461.67-14775-126005848371918/source", "state": "file", "uid": 0 }
hadoop102.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "0adc0d87b88313df17ba1deb2d68a359c8dc9be4", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml", "gid": 0, "group": "root", "md5sum": "87d3fa9d1b93d03fd2b509508086def4", "mode": "0644", "owner": "root", "size": 11470, "src": "/root/.ansible/tmp/ansible-tmp-1602000461.62-14769-272249870401292/source", "state": "file", "uid": 0 }
hadoop104.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "0adc0d87b88313df17ba1deb2d68a359c8dc9be4", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml", "gid": 0, "group": "root", "md5sum": "87d3fa9d1b93d03fd2b509508086def4", "mode": "0644", "owner": "root", "size": 11470, "src": "/root/.ansible/tmp/ansible-tmp-1602000461.64-14772-148620330070479/source", "state": "file", "uid": 0 }
[root@hadoop101.yinzhengjie.com ~]#

IV. Configure the ssl-client.xml file

1>. Create ssl-client.xml from the template file

[root@hadoop101.yinzhengjie.com ~]# cp ${HADOOP_HOME}/etc/hadoop/ssl-client.xml.example ${HADOOP_HOME}/etc/hadoop/ssl-client.xml

2>. Edit the ssl-client.xml file

[root@hadoop101.yinzhengjie.com ~]# vim ${HADOOP_HOME}/etc/hadoop/ssl-client.xml
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# cat ${HADOOP_HOME}/etc/hadoop/ssl-client.xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed to the Apache Software Foundation (ASF) under one or more
  contributor license agreements. See the NOTICE file distributed with
  this work for additional information regarding copyright ownership.
  The ASF licenses this file to You under the Apache License, Version 2.0
  (the "License"); you may not use this file except in compliance with
  the License. You may obtain a copy of the License at

  http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License.
-->
<configuration>
  <property>
    <name>ssl.client.truststore.location</name>
    <value>/yinzhengjie/softwares/hadoop/etc/hadoop/conf/truststore</value>
    <description>Truststore to be used by clients like distcp. Must be specified. </description>
  </property>
  <property>
    <name>ssl.client.truststore.password</name>
    <value>yinzhengjie</value>
    <description>Optional. Default value is "". </description>
  </property>
  <property>
    <name>ssl.client.truststore.type</name>
    <value>jks</value>
    <description>Optional. The keystore file format, default value is "jks". </description>
  </property>
  <property>
    <name>ssl.client.truststore.reload.interval</name>
    <value>10000</value>
    <description>Truststore reload check interval, in milliseconds. Default value is 10000 (10 seconds). </description>
  </property>
  <property>
    <name>ssl.client.keystore.location</name>
    <value>/yinzhengjie/softwares/hadoop/etc/hadoop/conf/keystore</value>
    <description>Keystore to be used by clients like distcp. Must be specified. </description>
  </property>
  <property>
    <name>ssl.client.keystore.password</name>
    <value>yinzhengjie</value>
    <description>Optional. Default value is "". </description>
  </property>
  <property>
    <name>ssl.client.keystore.keypassword</name>
    <value>yinzhengjie</value>
    <description>Optional. Default value is "". </description>
  </property>
  <property>
    <name>ssl.client.keystore.type</name>
    <value>jks</value>
    <description>Optional. The keystore file format, default value is "jks". </description>
  </property>
</configuration>
[root@hadoop101.yinzhengjie.com ~]#

3>. Sync the ssl-client.xml file to the other nodes in the cluster

[root@hadoop101.yinzhengjie.com ~]# ansible all -m copy -a "src=${HADOOP_HOME}/etc/hadoop/ssl-client.xml dest=${HADOOP_HOME}/etc/hadoop/"
hadoop104.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "335bcafcd6baf119d58fdd5f6510bed58b9cf31c", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-client.xml", "gid": 0, "group": "root", "md5sum": "f3ab7ce75cb96fec12999a507d1f1032", "mode": "0644", "owner": "root", "size": 2459, "src": "/root/.ansible/tmp/ansible-tmp-1601998791.59-13171-228481578922367/source", "state": "file", "uid": 0 }
hadoop103.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "335bcafcd6baf119d58fdd5f6510bed58b9cf31c", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-client.xml", "gid": 0, "group": "root", "md5sum": "f3ab7ce75cb96fec12999a507d1f1032", "mode": "0644", "owner": "root", "size": 2459, "src": "/root/.ansible/tmp/ansible-tmp-1601998791.59-13170-263679465385553/source", "state": "file", "uid": 0 }
hadoop105.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "335bcafcd6baf119d58fdd5f6510bed58b9cf31c", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-client.xml", "gid": 0, "group": "root", "md5sum": "f3ab7ce75cb96fec12999a507d1f1032", "mode": "0644", "owner": "root", "size": 2459, "src": "/root/.ansible/tmp/ansible-tmp-1601998791.63-13174-270673831631234/source", "state": "file", "uid": 0 }
hadoop102.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "335bcafcd6baf119d58fdd5f6510bed58b9cf31c", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-client.xml", "gid": 0, "group": "root", "md5sum": "f3ab7ce75cb96fec12999a507d1f1032", "mode": "0644", "owner": "root", "size": 2459, "src": "/root/.ansible/tmp/ansible-tmp-1601998791.58-13168-216772060676884/source", "state": "file", "uid": 0 }
hadoop101.yinzhengjie.com | SUCCESS => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": false, "checksum": "335bcafcd6baf119d58fdd5f6510bed58b9cf31c", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-client.xml", "gid": 0, "group": "root", "mode": "0644", "owner": "root", "path": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-client.xml", "size": 2459, "state": "file", "uid": 0 }
[root@hadoop101.yinzhengjie.com ~]#

V. Configure the ssl-server.xml file

1>. Create ssl-server.xml from the template file

[root@hadoop101.yinzhengjie.com ~]# cp ${HADOOP_HOME}/etc/hadoop/ssl-server.xml.example ${HADOOP_HOME}/etc/hadoop/ssl-server.xml

2>. Edit the ssl-server.xml file

[root@hadoop101.yinzhengjie.com ~]# vim ${HADOOP_HOME}/etc/hadoop/ssl-server.xml
[root@hadoop101.yinzhengjie.com ~]#
[root@hadoop101.yinzhengjie.com ~]# cat ${HADOOP_HOME}/etc/hadoop/ssl-server.xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed to the Apache Software Foundation (ASF) under one or more
  contributor license agreements. See the NOTICE file distributed with
  this work for additional information regarding copyright ownership.
  The ASF licenses this file to You under the Apache License, Version 2.0
  (the "License"); you may not use this file except in compliance with
  the License. You may obtain a copy of the License at

  http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License.
-->
<configuration>
  <property>
    <name>ssl.server.truststore.location</name>
    <value>/yinzhengjie/softwares/hadoop/etc/hadoop/conf/truststore</value>
    <description>Truststore to be used by NN and DN. Must be specified. </description>
  </property>
  <property>
    <name>ssl.server.truststore.password</name>
    <value>yinzhengjie</value>
    <description>Optional. Default value is "". </description>
  </property>
  <property>
    <name>ssl.server.truststore.type</name>
    <value>jks</value>
    <description>Optional. The keystore file format, default value is "jks". </description>
  </property>
  <property>
    <name>ssl.server.truststore.reload.interval</name>
    <value>10000</value>
    <description>Truststore reload check interval, in milliseconds. Default value is 10000 (10 seconds). </description>
  </property>
  <property>
    <name>ssl.server.keystore.location</name>
    <value>/yinzhengjie/softwares/hadoop/etc/hadoop/conf/keystore</value>
    <description>Keystore to be used by NN and DN. Must be specified. </description>
  </property>
  <property>
    <name>ssl.server.keystore.password</name>
    <value>yinzhengjie</value>
    <description>Must be specified. </description>
  </property>
  <property>
    <name>ssl.server.keystore.keypassword</name>
    <value>yinzhengjie</value>
    <description>Must be specified. </description>
  </property>
  <property>
    <name>ssl.server.keystore.type</name>
    <value>jks</value>
    <description>Optional. The keystore file format, default value is "jks". </description>
  </property>
  <property>
    <name>ssl.server.exclude.cipher.list</name>
    <value>TLS_ECDHE_RSA_WITH_RC4_128_SHA,SSL_DHE_RSA_EXPORT_WITH_DES40_CBC_SHA,
    SSL_RSA_WITH_DES_CBC_SHA,SSL_DHE_RSA_WITH_DES_CBC_SHA,
    SSL_RSA_EXPORT_WITH_RC4_40_MD5,SSL_RSA_EXPORT_WITH_DES40_CBC_SHA,
    SSL_RSA_WITH_RC4_128_MD5</value>
    <description>Optional. The weak security cipher suites that you want excluded from SSL communication.</description>
  </property>
</configuration>
[root@hadoop101.yinzhengjie.com ~]#
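Both ssl-server.xml and ssl-client.xml point at store files by absolute path, and every node must have its own keystore at that path. The sketch below is one way to check this before restarting; it is an added sanity check, not part of the original procedure. `get_property` and `check_stores` are hypothetical helpers, and the awk parsing assumes each `<name>` and `<value>` element sits on its own line, as in the template files.

```shell
#!/usr/bin/env bash
# Sketch: confirm that the store files named in ssl-server.xml or
# ssl-client.xml exist on this node.
# Assumes one <name> or <value> element per line; not a general XML parser.
set -euo pipefail

# Print the <value> that follows <name>$2</name> in file $1.
get_property() {
  awk -v want="$2" '
    /<name>/ { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
    /<value>/ && n == want {
      v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
      print v; exit
    }
  ' "$1"
}

# Check the truststore and keystore referenced under the given prefix.
check_stores() {
  local file="$1" prefix="$2" key path
  for key in truststore.location keystore.location; do
    path="$(get_property "$file" "$prefix.$key")"
    if [ -f "$path" ]; then
      echo "ok: $prefix.$key -> $path"
    else
      echo "missing: $prefix.$key -> $path" >&2
      return 1
    fi
  done
}

# Example:
# check_stores "${HADOOP_HOME}/etc/hadoop/ssl-server.xml" ssl.server
# check_stores "${HADOOP_HOME}/etc/hadoop/ssl-client.xml" ssl.client
```

Since these files hold the store passwords in plain text, it is also reasonable to restrict their permissions to the account that runs the Hadoop daemons.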

3>. Sync the ssl-server.xml file to the other nodes in the cluster

[root@hadoop101.yinzhengjie.com ~]# ansible all -m copy -a "src=${HADOOP_HOME}/etc/hadoop/ssl-server.xml dest=${HADOOP_HOME}/etc/hadoop/"
hadoop103.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "ca744794ae2944ad05b0933750193b1e860ee8cd", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-server.xml", "gid": 0, "group": "root", "md5sum": "68d0c7a7b6da73a7ceab1172404ff380", "mode": "0644", "owner": "root", "size": 2840, "src": "/root/.ansible/tmp/ansible-tmp-1601999063.3-13384-31361930800381/source", "state": "file", "uid": 0 }
hadoop105.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "ca744794ae2944ad05b0933750193b1e860ee8cd", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-server.xml", "gid": 0, "group": "root", "md5sum": "68d0c7a7b6da73a7ceab1172404ff380", "mode": "0644", "owner": "root", "size": 2840, "src": "/root/.ansible/tmp/ansible-tmp-1601999063.25-13388-191717002149571/source", "state": "file", "uid": 0 }
hadoop102.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "ca744794ae2944ad05b0933750193b1e860ee8cd", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-server.xml", "gid": 0, "group": "root", "md5sum": "68d0c7a7b6da73a7ceab1172404ff380", "mode": "0644", "owner": "root", "size": 2840, "src": "/root/.ansible/tmp/ansible-tmp-1601999063.19-13382-170842224507642/source", "state": "file", "uid": 0 }
hadoop104.yinzhengjie.com | CHANGED => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": true, "checksum": "ca744794ae2944ad05b0933750193b1e860ee8cd", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-server.xml", "gid": 0, "group": "root", "md5sum": "68d0c7a7b6da73a7ceab1172404ff380", "mode": "0644", "owner": "root", "size": 2840, "src": "/root/.ansible/tmp/ansible-tmp-1601999063.25-13385-66836978382906/source", "state": "file", "uid": 0 }
hadoop101.yinzhengjie.com | SUCCESS => { "ansible_facts": { "discovered_interpreter_python": "/usr/bin/python" }, "changed": false, "checksum": "ca744794ae2944ad05b0933750193b1e860ee8cd", "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-server.xml", "gid": 0, "group": "root", "mode": "0644", "owner": "root", "path": "/yinzhengjie/softwares/hadoop/etc/hadoop/ssl-server.xml", "size": 2840, "state": "file", "uid": 0 }
[root@hadoop101.yinzhengjie.com ~]#

VI. Restart the Hadoop cluster

1>. Restart the HDFS cluster

[root@hadoop101.yinzhengjie.com ~]# manage-hdfs.sh restart
hadoop101.yinzhengjie.com | CHANGED | rc=0 >>
stopping namenode
hadoop105.yinzhengjie.com | CHANGED | rc=0 >>
stopping secondarynamenode
hadoop104.yinzhengjie.com | CHANGED | rc=0 >>
no datanode to stop
hadoop102.yinzhengjie.com | CHANGED | rc=0 >>
no datanode to stop
hadoop103.yinzhengjie.com | CHANGED | rc=0 >>
no datanode to stop
Stoping HDFS: [ OK ]
hadoop101.yinzhengjie.com | CHANGED | rc=0 >>
starting namenode, logging to /yinzhengjie/softwares/hadoop-2.10.0-fully-mode/logs/hadoop-root-namenode-hadoop101.yinzhengjie.com.out
hadoop105.yinzhengjie.com | CHANGED | rc=0 >>
starting secondarynamenode, logging to /yinzhengjie/softwares/hadoop/logs/hadoop-root-secondarynamenode-hadoop105.yinzhengjie.com.out
hadoop104.yinzhengjie.com | CHANGED | rc=0 >>
starting datanode, logging to /yinzhengjie/softwares/hadoop/logs/hadoop-root-datanode-hadoop104.yinzhengjie.com.out
hadoop102.yinzhengjie.com | CHANGED | rc=0 >>
starting datanode, logging to /yinzhengjie/softwares/hadoop/logs/hadoop-root-datanode-hadoop102.yinzhengjie.com.out
hadoop103.yinzhengjie.com | CHANGED | rc=0 >>
starting datanode, logging to /yinzhengjie/softwares/hadoop/logs/hadoop-root-datanode-hadoop103.yinzhengjie.com.out
Starting HDFS: [ OK ]
[root@hadoop101.yinzhengjie.com ~]#

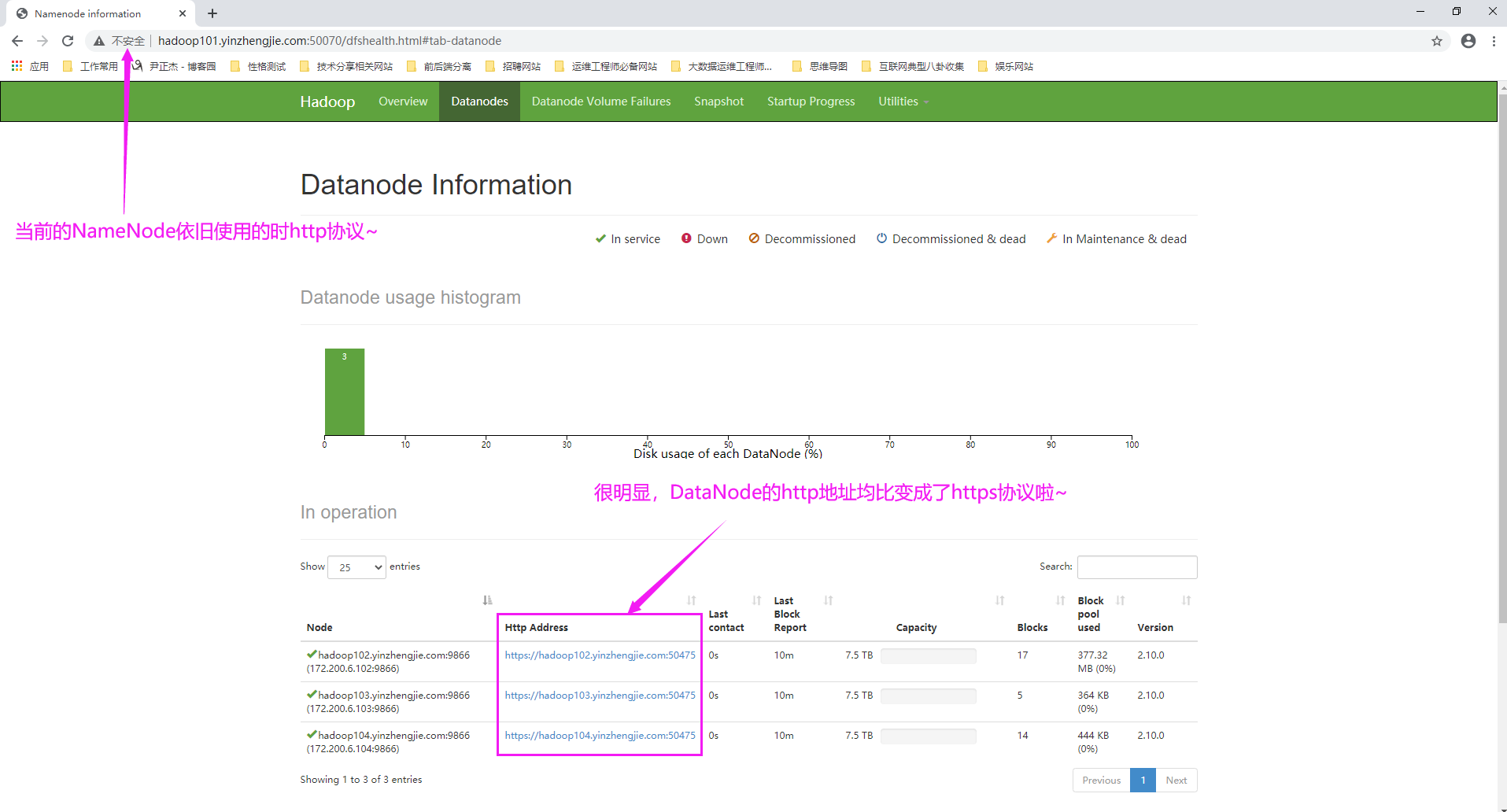

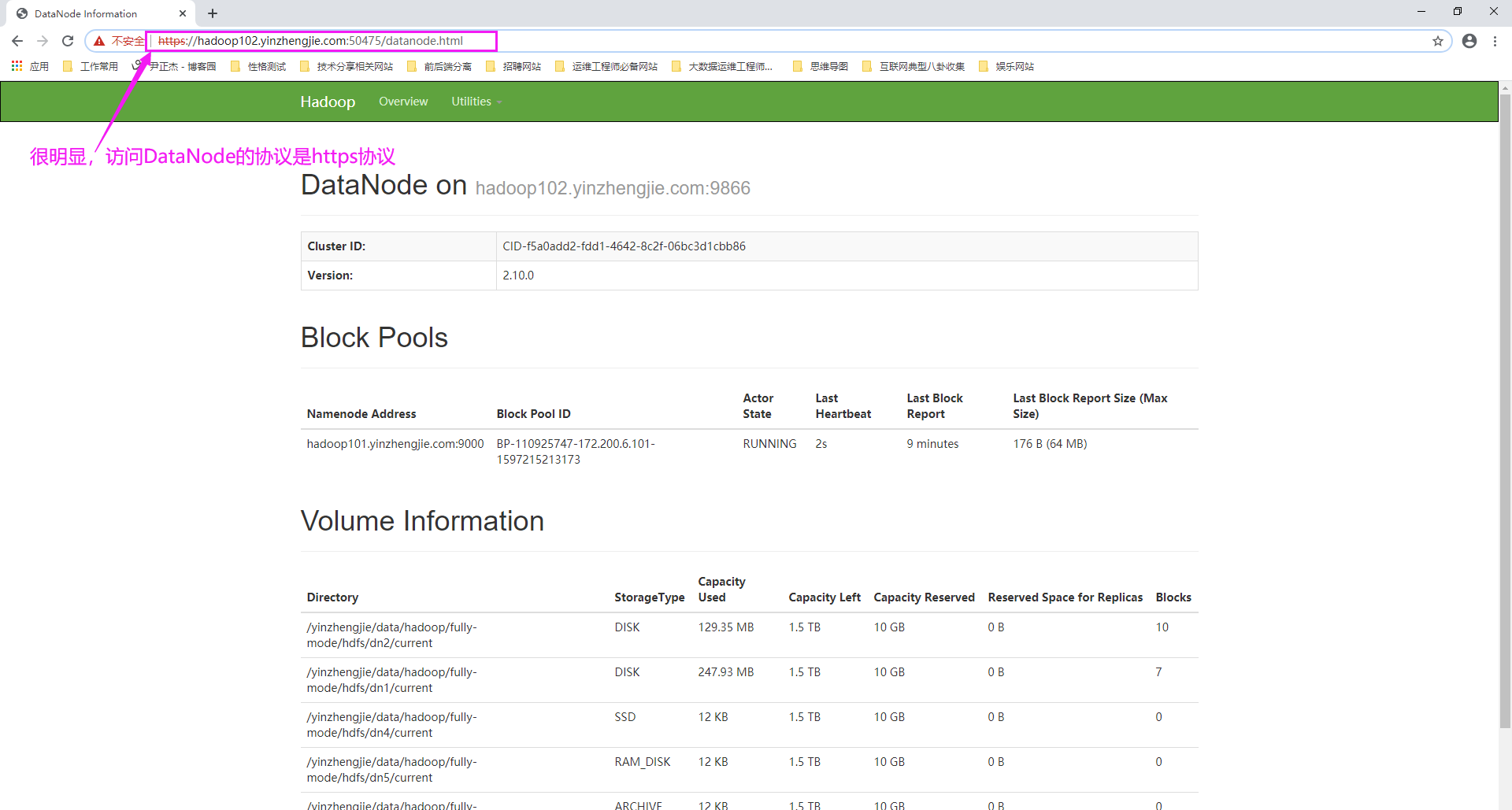

2>. Check the NameNode Web UI port

3>. Check the DataNode Web UI
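Besides opening the pages in a browser, the HTTPS endpoints can be probed from the command line. The sketch below assumes the Hadoop 2.x default HTTPS ports (50470 for the NameNode, 50091 for the Secondary NameNode, 50475 for the DataNode); these are assumptions and not values taken from this post, so check the `dfs.*.https-address` properties if the cluster overrides them. `https_url` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: build the HTTPS Web UI URL for a daemon and probe it with curl.
# The port numbers are assumed Hadoop 2.x defaults, not taken from the post.
set -euo pipefail

https_url() {
  local role="$1" host="$2" port
  case "$role" in
    namenode)          port=50470 ;;
    secondarynamenode) port=50091 ;;
    datanode)          port=50475 ;;
    *) echo "unknown role: $role" >&2; return 1 ;;
  esac
  printf 'https://%s:%s/\n' "$host" "$port"
}

# Example: trust the self-signed CA created earlier and print the HTTP status.
# curl --cacert hdfs_ca_cert -sS -o /dev/null -w '%{http_code}\n' \
#   "$(https_url namenode hadoop101.yinzhengjie.com)"
```

On a cluster whose Web UIs require Kerberos (SPNEGO) authentication, a 401 status still indicates that the TLS handshake itself succeeded.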

VII. Errors that may occur after starting the cluster

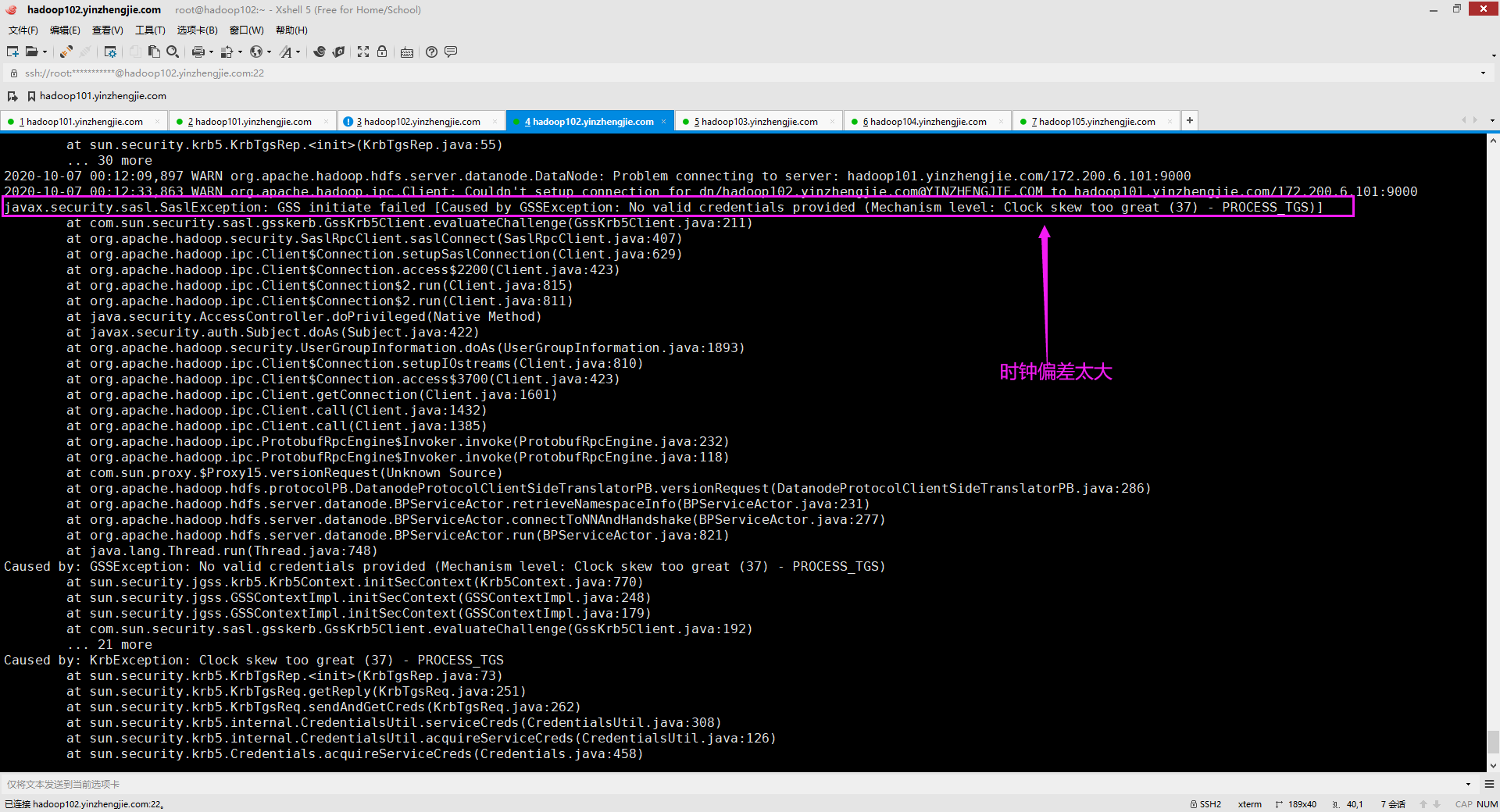

1>.javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Clock skew too great (37) - PROCESS_TGS)]

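As the screenshot shows, this error is caused by excessive clock skew between cluster nodes. A time synchronization service such as ntpd or chrony resolves it; I recommend chrony for synchronizing time within the cluster.
Recommended reading from the author:
https://www.cnblogs.com/yinzhengjie/p/12292549.html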
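A quick way to confirm the diagnosis is to compare epoch timestamps from two nodes. The sketch below assumes a tolerance of 300 seconds, which is the usual Kerberos default for clock skew; the actual limit depends on the `clockskew` setting in the realm's configuration. `skew_within_limit` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: decide whether two epoch timestamps are within the allowed skew.
# The 300-second default is an assumption based on the common Kerberos default.
set -euo pipefail

skew_within_limit() {
  local a="$1" b="$2" limit="${3:-300}" diff
  diff=$(( a - b ))
  # Take the absolute value of the difference.
  if [ "$diff" -lt 0 ]; then
    diff=$(( -diff ))
  fi
  [ "$diff" -le "$limit" ]
}

# Example: compare this host with another node over ssh.
# skew_within_limit "$(date +%s)" "$(ssh hadoop102.yinzhengjie.com date +%s)" \
#   || echo "clock skew too large"
```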

2>. Other troubleshooting cases

Recommended reading from the author:
https://www.cnblogs.com/yinzhengjie/p/13766307.html
https://www.cnblogs.com/yinzhengjie/p/13742833.html

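This article comes from cnblogs (博客园). Author: Yin Zhengjie (尹正杰). When reposting, please cite the original link: https://www.cnblogs.com/yinzhengjie/p/13461151.html. Personal WeChat: "JasonYin2020" (when adding, please state where you found it and your purpose; consulting is paid).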
