Cloudera Certified Associate Administrator Case Studies: Test Part

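Author: 尹正杰 (Yin Zhengjie)

Copyright notice: this is an original work. Reproduction is not permitted, and violations will be pursued legally.

I. Preparation (upgrading CM to the 60-day Enterprise trial)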

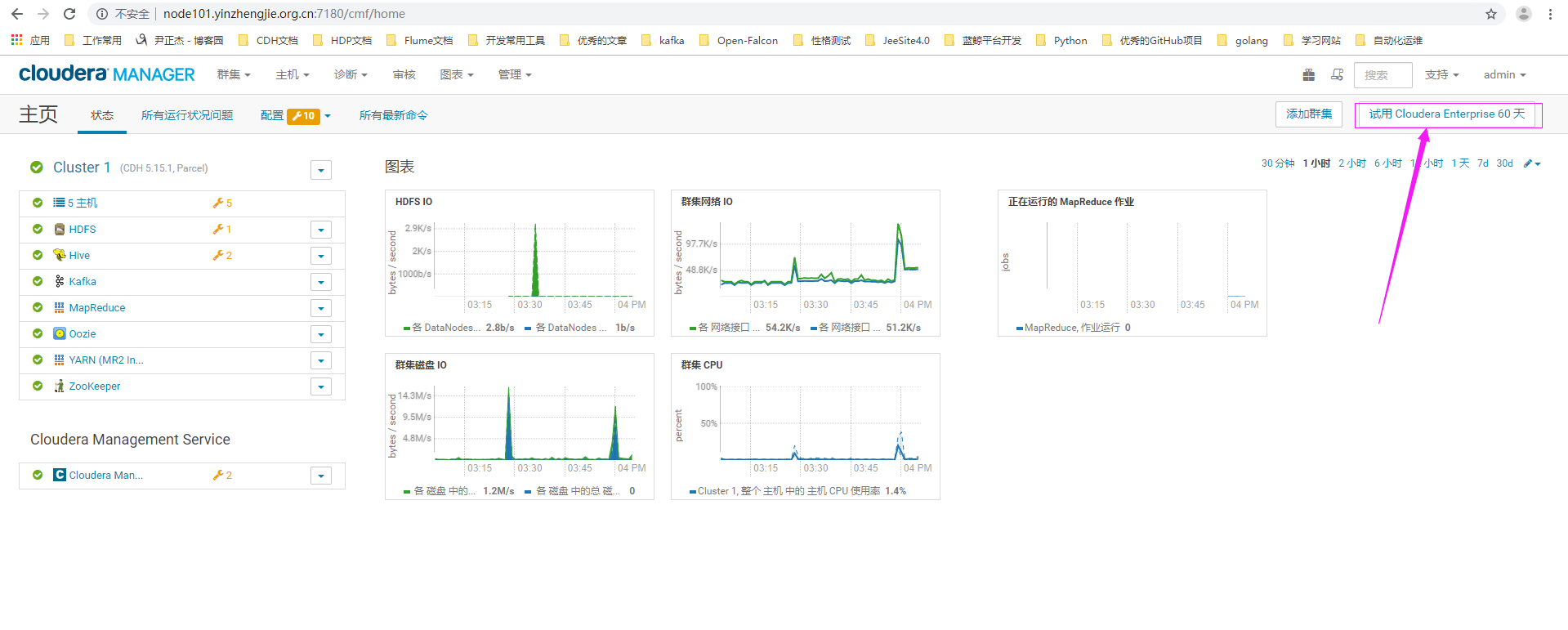

1>.在CM界面中点击"试用Cloudera Enterprise 60天"

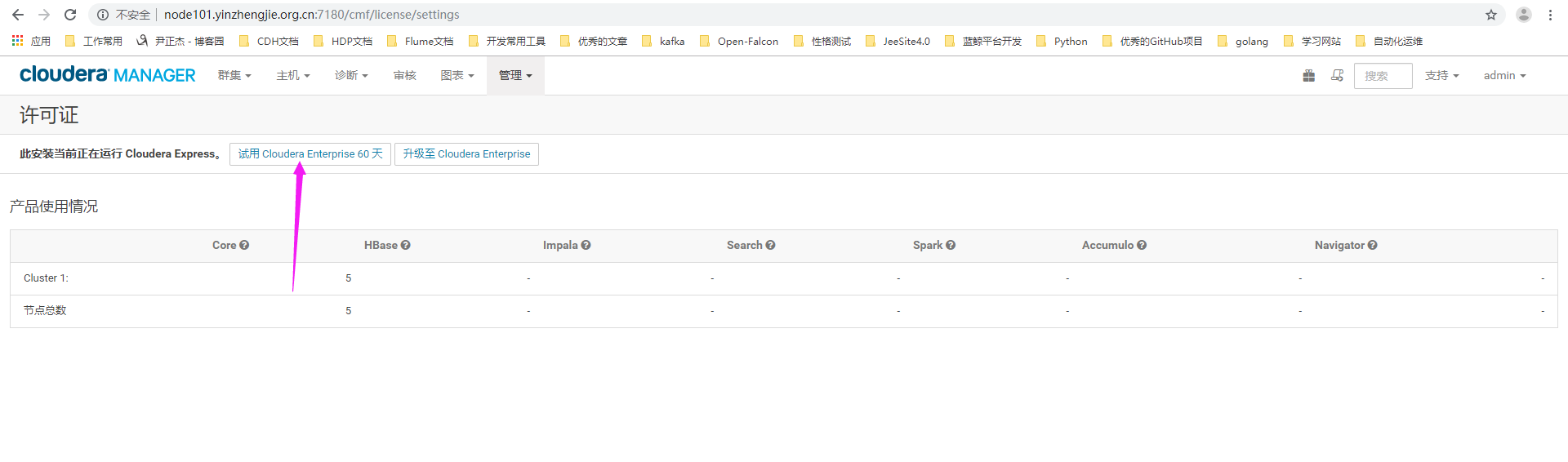

2>.进入许可证界面可以看到当前使用的是"Cloudera Express",点击"试用Cloudera Enterprise 60天""

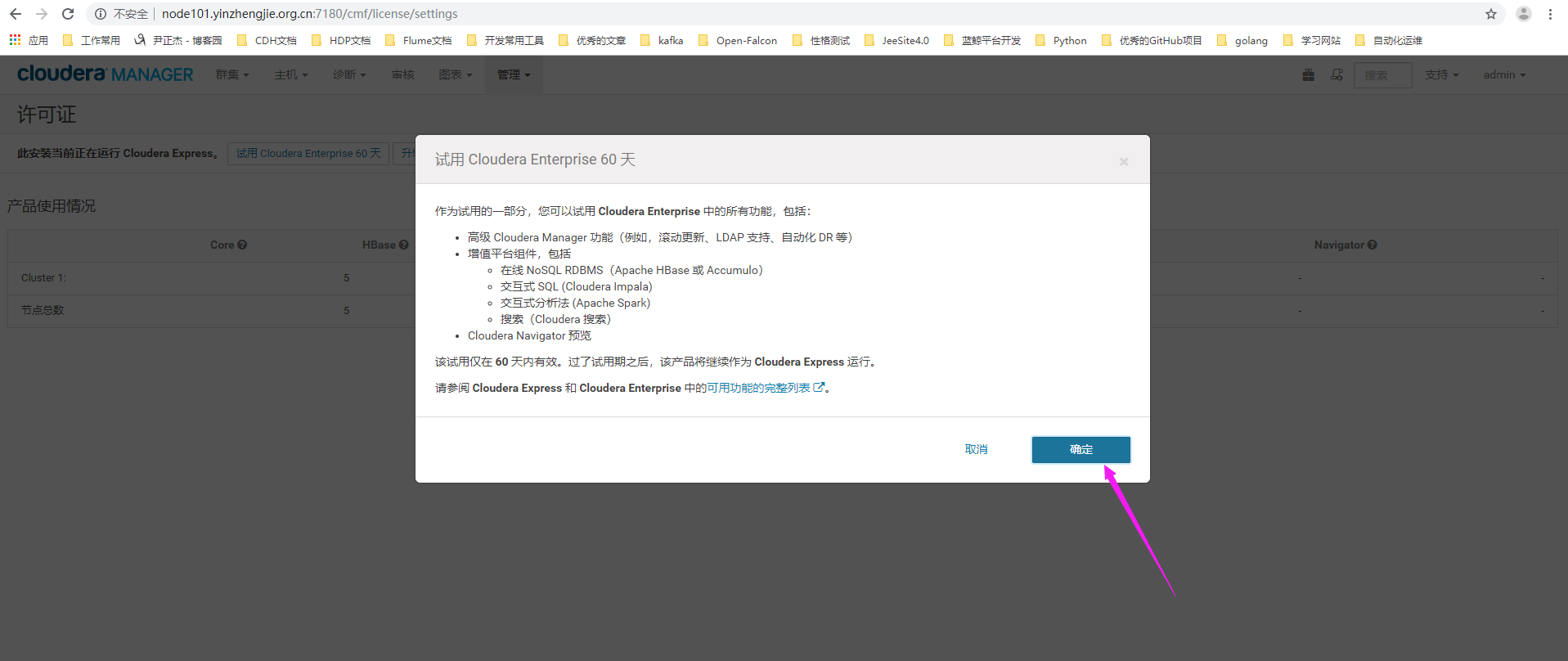

3>.点击确认

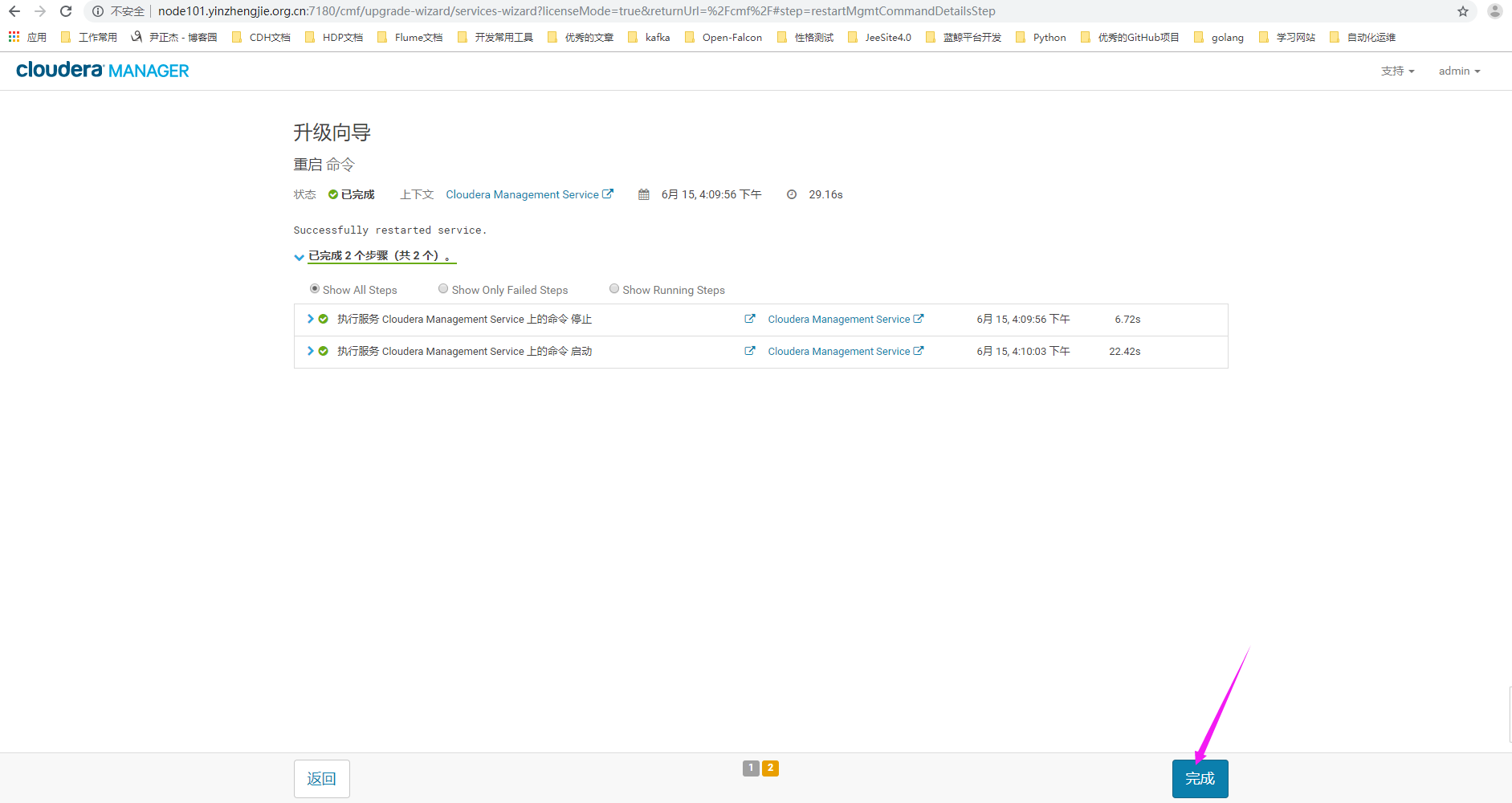

4>.进入升级向导,点击"继续"

5>.升级完成

6>.查看CM主界面

II. Using the snapshot feature of the Enterprise edition of CM

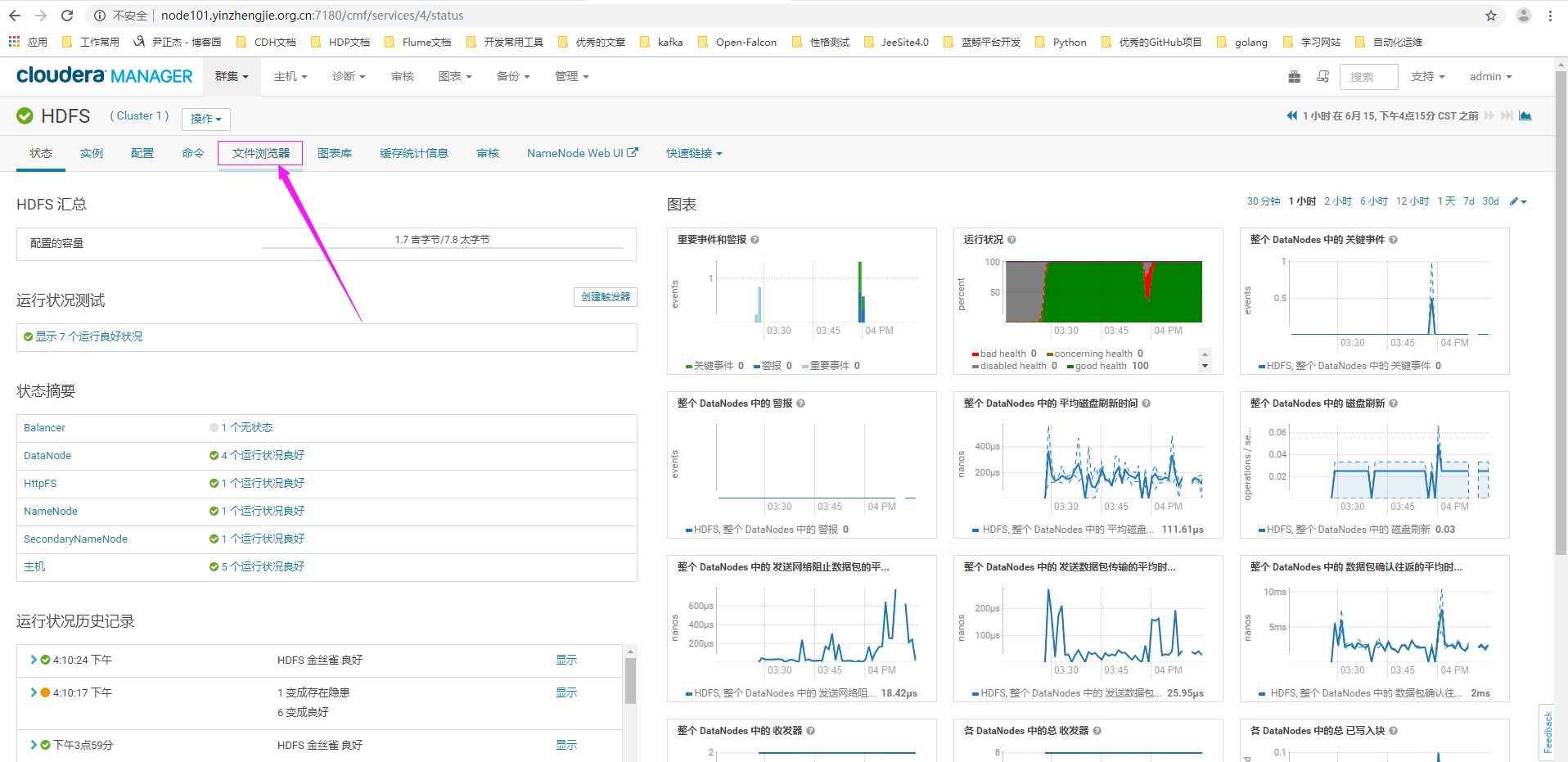

1>.点击HDFS中的"文件浏览器"

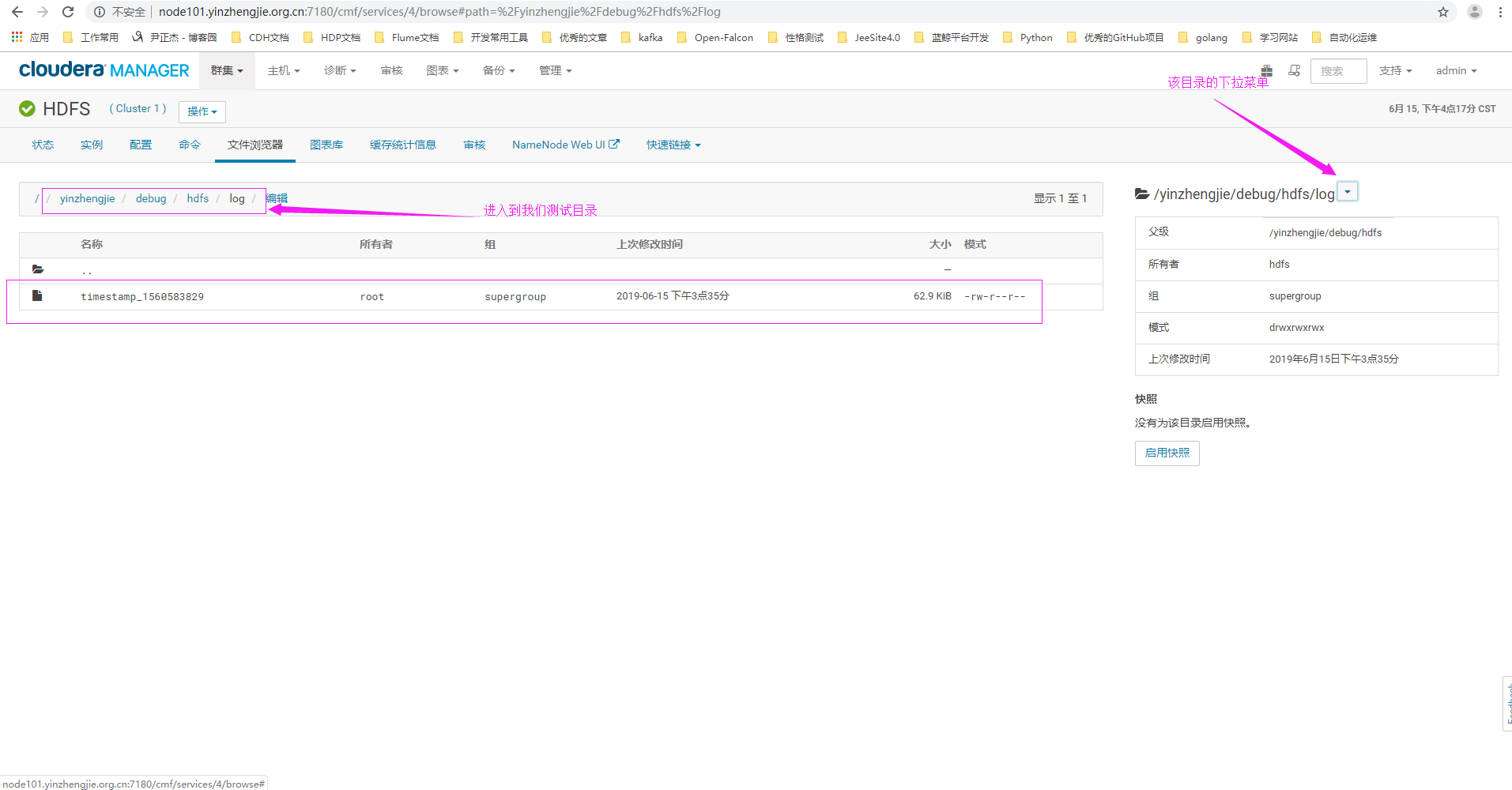

2>.进入我们的测试目录

3>.点击启用快照

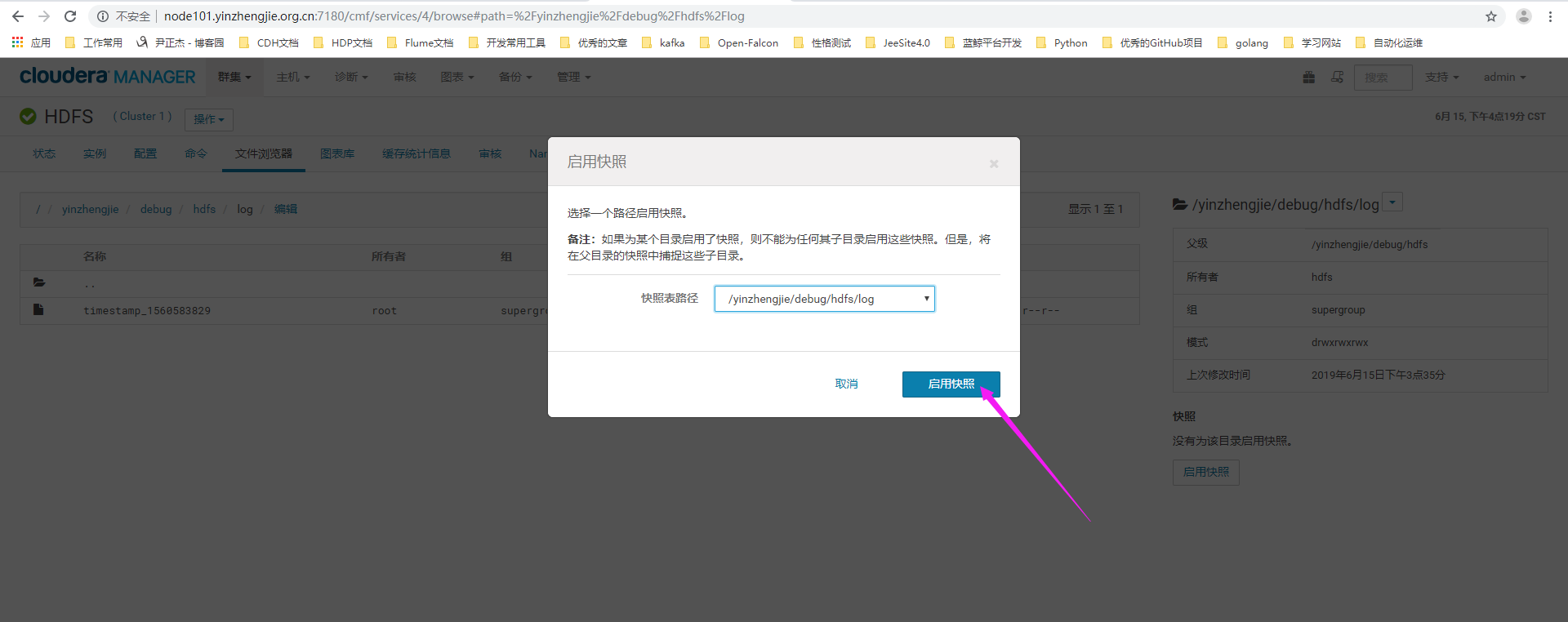

4>.弹出一个确认对话框,点击"启用快照"

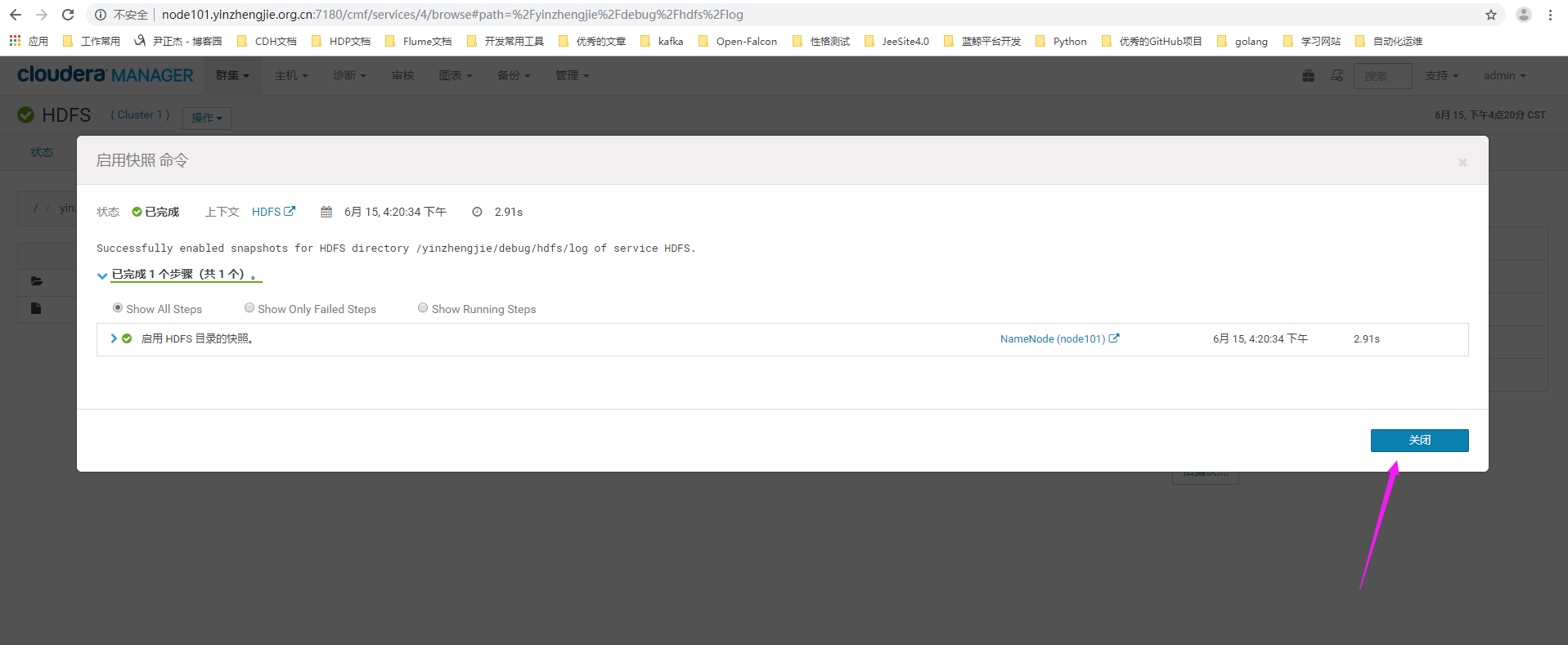

5>.快照启用成功

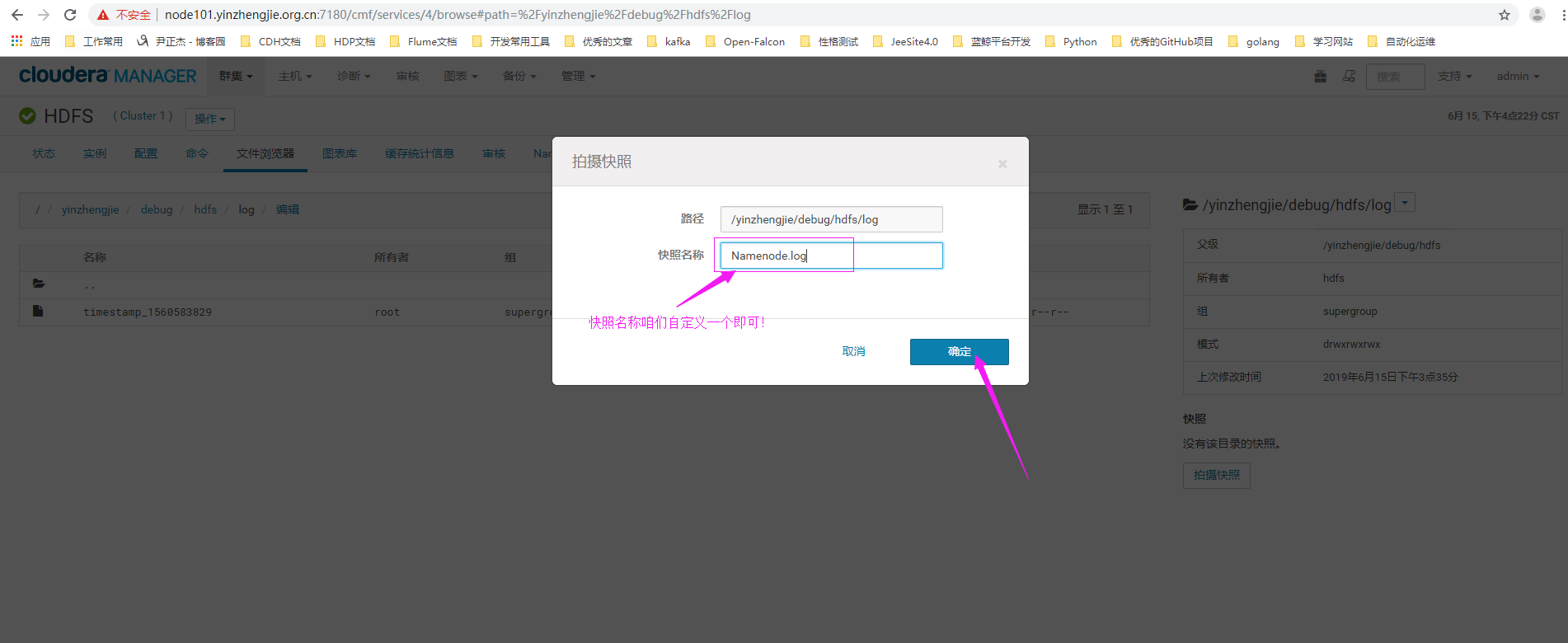

6>.点击拍摄快照

7>.给快照起一个名字

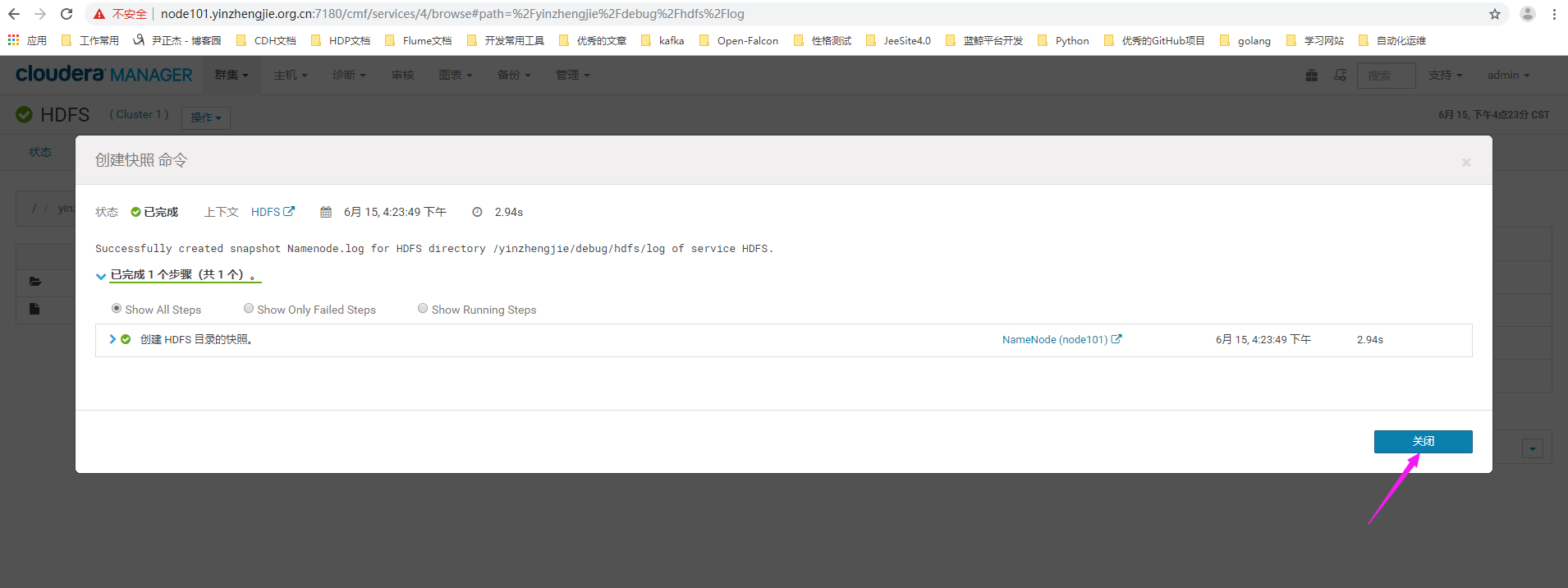

8>.等待快照创建完毕

9>.快照创建成功

19>.彻底删除做了快照的文件

    [root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie/debug/hdfs/log
    Found 1 items
    -rw-r--r-- 3 root supergroup 64384 2019-06-15 15:35 /yinzhengjie/debug/hdfs/log/timestamp_1560583829
    [root@node101.yinzhengjie.org.cn ~]#
    [root@node101.yinzhengjie.org.cn ~]# hdfs dfs -rm -skipTrash /yinzhengjie/debug/hdfs/log/timestamp_1560583829
    Deleted /yinzhengjie/debug/hdfs/log/timestamp_1560583829
    [root@node101.yinzhengjie.org.cn ~]#
    [root@node101.yinzhengjie.org.cn ~]# hdfs dfs -ls /yinzhengjie/debug/hdfs/log
    [root@node101.yinzhengjie.org.cn ~]#
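For readers who prefer the command line, the CM clicks in steps 3 to 9 roughly correspond to the HDFS commands sketched below. This is only a sketch under assumptions: the snapshot name `before_delete` is hypothetical (the screenshots do not show the name we typed), and the `run` helper is just a dry-run wrapper that prints each command unless `DRY_RUN=0` is set on a cluster node.

```shell
#!/bin/sh
# Sketch: command-line counterparts of the CM snapshot steps.
# SNAP_DIR comes from the article; SNAP_NAME is a hypothetical snapshot name.
SNAP_DIR="/yinzhengjie/debug/hdfs/log"
SNAP_NAME="before_delete"

# Print the command by default; set DRY_RUN=0 on a cluster node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

enable_and_snapshot() {
    # Mark the directory as snapshottable (needs HDFS superuser privileges).
    run hdfs dfsadmin -allowSnapshot "$1"
    # Take a named snapshot; it becomes visible under <dir>/.snapshot/<name>.
    run hdfs dfs -createSnapshot "$1" "$2"
    # List the snapshots of the directory to confirm it exists.
    run hdfs dfs -ls "$1/.snapshot"
}

enable_and_snapshot "$SNAP_DIR" "$SNAP_NAME"
```

Run as is, the script only prints the three commands, which makes it safe to review before executing anything against a real cluster.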

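III. Restoring data from the most recent snapshot

Problem description:

A user at the company stored important files on HDFS but accidentally deleted them. Fortunately, the directory had been made snapshottable and a snapshot had already been taken. Use the most recent snapshot to restore the data.

The task is to restore all files under "/yinzhengjie/debug/hdfs/log", together with their original permissions, owner, ACLs, and so on.

Solution:

Snapshots are very useful in day-to-day operations, not just in tests. I have previously written on my blog about managing snapshots from the command line in Hadoop 2.9.2; this time we do it through CM.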

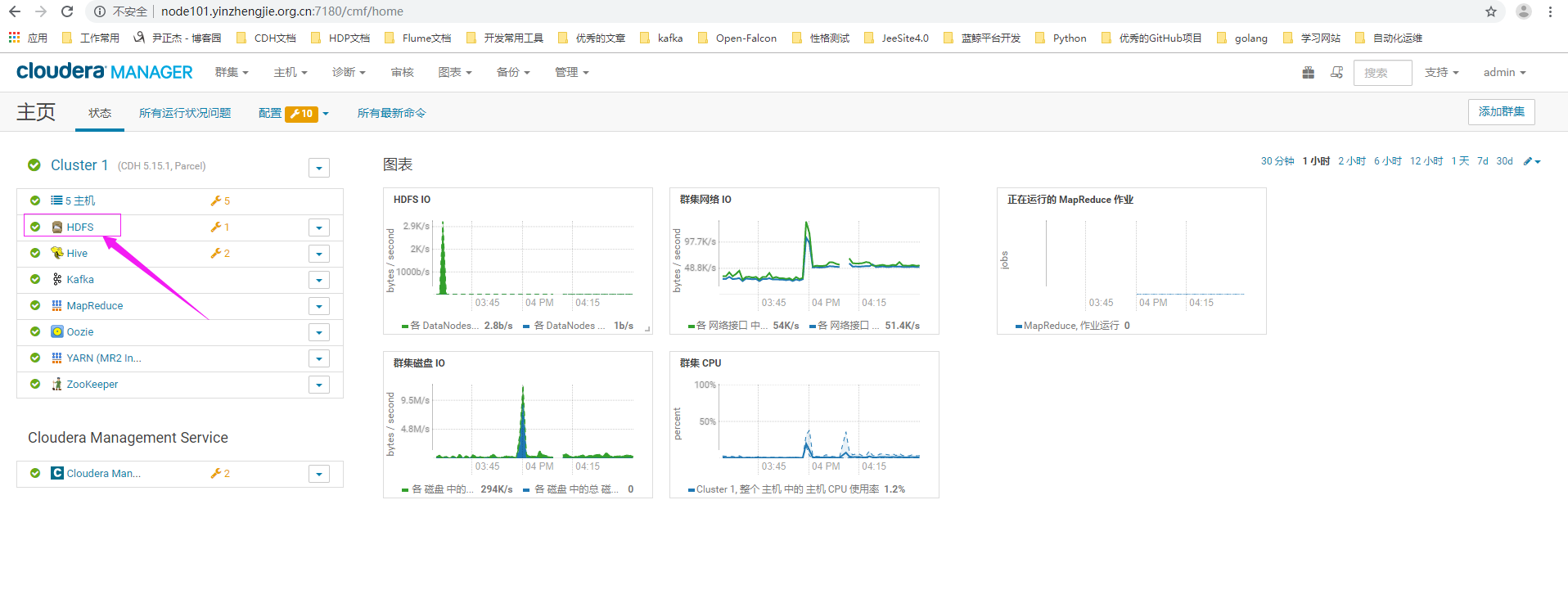

1>.点击HDFS服务

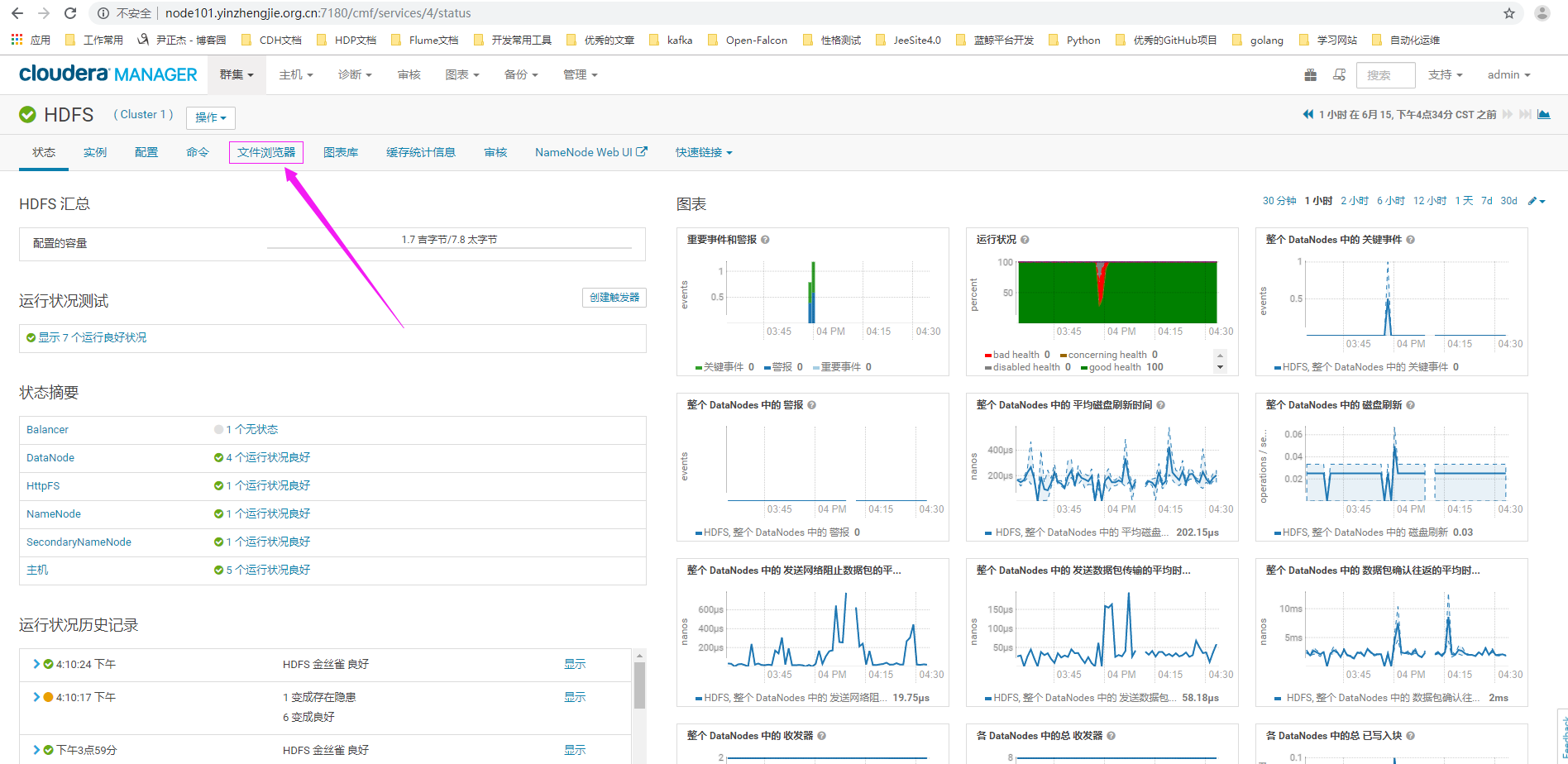

2>.点击文件浏览器

3>.进入我们要还原数据的目录,并点击"从快照还原目录"

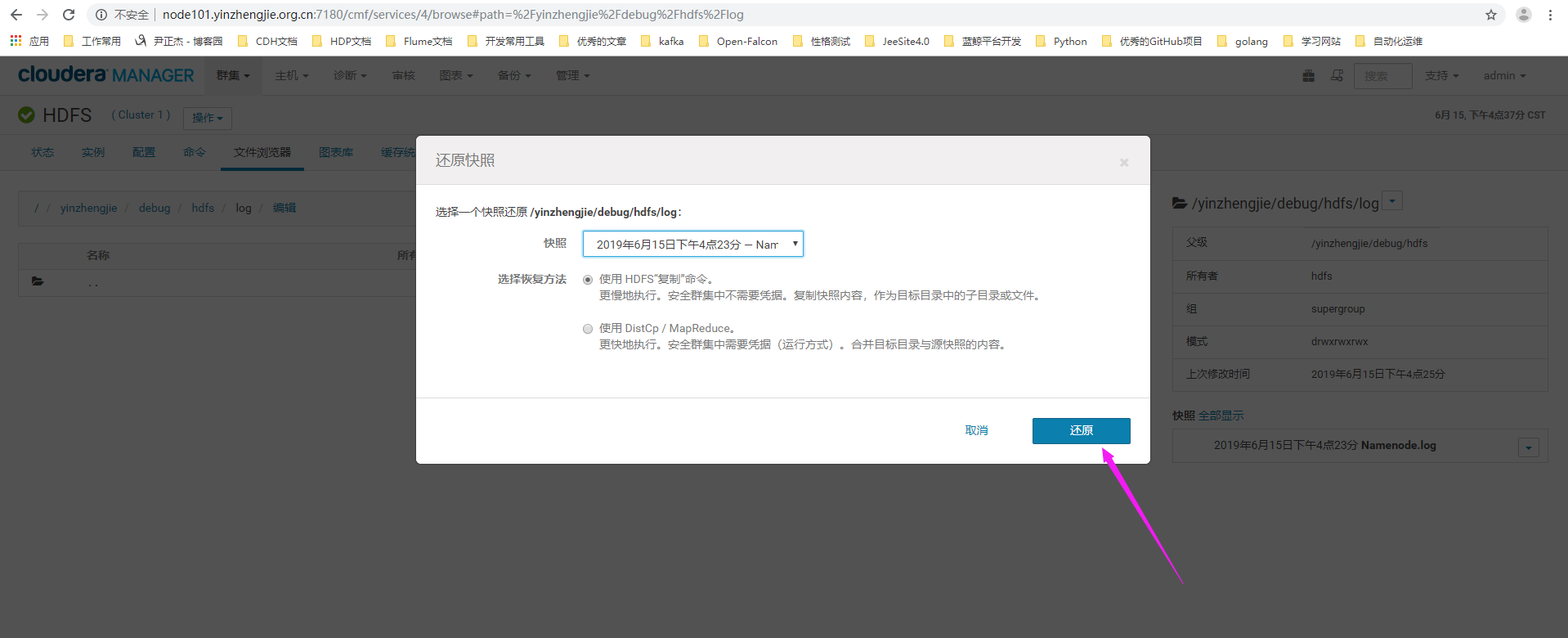

4>.选择快照及恢复的方法

5>.恢复完成,点击"关闭"

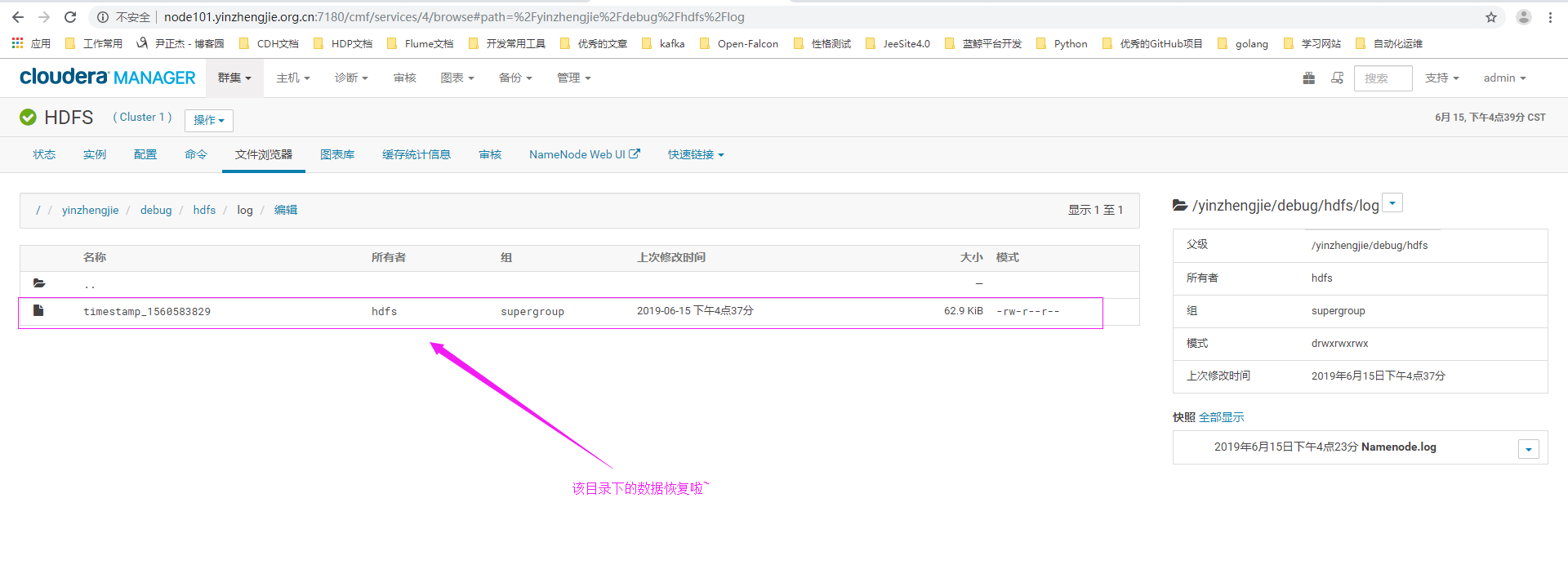

6>.刷新当前页面,发现数据恢复成功啦

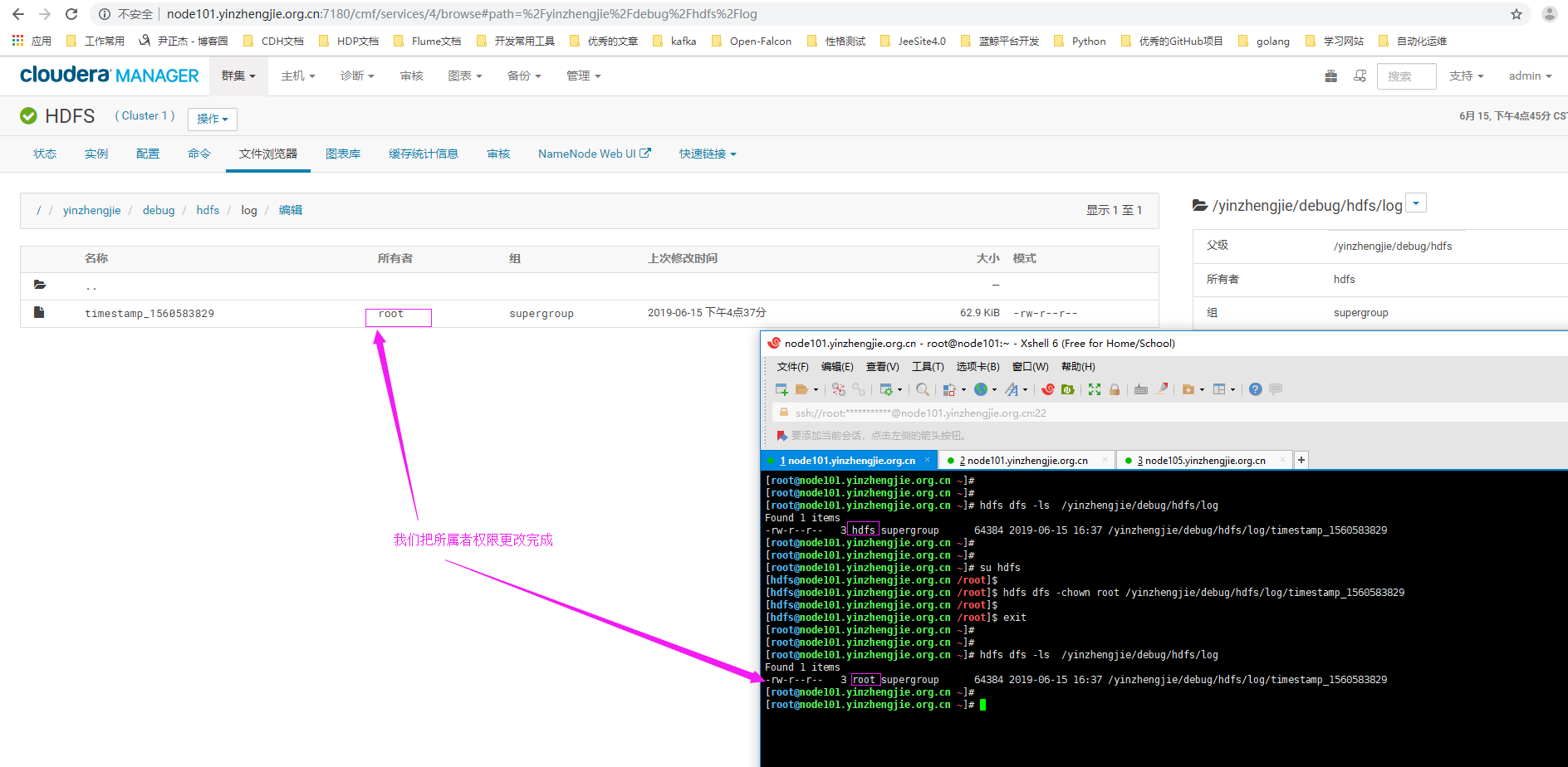

7>.恢复文件权限
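The same restore can also be approximated from the command line by copying out of the read-only `.snapshot` directory. The sketch below assumes a hypothetical snapshot name `before_delete` (list `<dir>/.snapshot` to find the real, most recent one) and uses `hdfs dfs -cp -ptopax`, where the `topax` letters ask `cp` to preserve timestamps, ownership, permission, ACLs, and XAttrs. It is not what CM runs internally, just a manual equivalent, and it prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: restoring a directory from a snapshot on the command line.
# SNAP_NAME is hypothetical; list <dir>/.snapshot to find the real name.
SNAP_DIR="/yinzhengjie/debug/hdfs/log"
SNAP_NAME="before_delete"

# Print the command by default; set DRY_RUN=0 on a cluster node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Build the read-only path under which a snapshot is exposed.
snapshot_path() {
    echo "$1/.snapshot/$2"
}

restore_from_snapshot() {
    src="$(snapshot_path "$1" "$2")"
    # Show which snapshots exist so the most recent one can be picked.
    run hdfs dfs -ls "$1/.snapshot"
    # The glob is quoted on purpose: HDFS expands it, not the local shell.
    run hdfs dfs -cp -ptopax "$src/*" "$1/"
}

restore_from_snapshot "$SNAP_DIR" "$SNAP_NAME"
```

Preserving attributes during the copy is what covers the "permissions, owner, ACL" part of the task; a plain `-cp` would create the files as the user running the command.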

IV. Running a MapReduce job

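Problem description:

An operations engineer at the company tried to tune the cluster, but as a result some MapReduce jobs that used to run no longer do. Identify and fix the problem, then run the performance test successfully. Locate hadoop-mapreduce-examples.jar on the Linux file system and use it to complete a three-step test:

1>. Use teragen 10000000 /user/yinzhengjie/data/day001/test_input to generate 10000000 rows of test records and write them to the given directory

2>. Use terasort /user/yinzhengjie/data/day001/test_input /user/yinzhengjie/data/day001/test_output to sort the records and write them to the given directory

3>. Use teravalidate /user/yinzhengjie/data/day001/test_output /user/yinzhengjie/data/day001/ts_validate to check the output

Solution:

You need to be able to troubleshoot common MapReduce job errors. Run the steps above and deal with whatever problems come up along the way.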
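The three steps can be chained in one small script so that a failure stops the run early. The sketch below is a dry run by default (it prints the commands); the jar path is the CDH 5.15.1 parcel location used in this walkthrough and will differ on other versions. The `clean_outputs` helper is an extra of mine, not part of the task: one common failure when re-running is that a job refuses to start because its output directory already exists, and the helper removes the three directories permanently, so call it only when that is really intended.

```shell
#!/bin/sh
# Sketch: the three benchmark steps as one script (dry run by default).
# JAR is the CDH 5.15.1 parcel path from this walkthrough; adjust as needed.
JAR="/opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar"
BASE="/user/yinzhengjie/data/day001"
ROWS=10000000

# Print the command by default; set DRY_RUN=0 on a cluster node to execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Optional and destructive: remove leftovers of an earlier run.
# Not called automatically.
clean_outputs() {
    run hdfs dfs -rm -r -f -skipTrash \
        "$BASE/test_input" "$BASE/test_output" "$BASE/ts_validate"
}

tera_pipeline() {
    # "&&" stops at the first failing step, so later steps never run on bad input.
    run hadoop jar "$JAR" teragen "$ROWS" "$BASE/test_input" &&
    run hadoop jar "$JAR" terasort "$BASE/test_input" "$BASE/test_output" &&
    run hadoop jar "$JAR" teravalidate "$BASE/test_output" "$BASE/ts_validate"
}

tera_pipeline
```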

1>. Generate the input data

    [root@node101.yinzhengjie.org.cn ~]# find / -name hadoop-mapreduce-examples.jar
    /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar
    [root@node101.yinzhengjie.org.cn ~]#
    [root@node101.yinzhengjie.org.cn ~]# cd /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce
    [root@node101.yinzhengjie.org.cn /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce]#

    [root@node101.yinzhengjie.org.cn /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce]# hadoop jar hadoop-mapreduce-examples.jar teragen 10000000 /user/yinzhengjie/data/day001/test_input
    19/05/22 19:38:39 INFO terasort.TeraGen: Generating 10000000 using 2
    19/05/22 19:38:39 INFO mapreduce.JobSubmitter: number of splits:2
    19/05/22 19:38:39 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1558520562958_0001
    19/05/22 19:38:39 INFO impl.YarnClientImpl: Submitted application application_1558520562958_0001
    19/05/22 19:38:40 INFO mapreduce.Job: The url to track the job: http://node101.yinzhengjie.org.cn:8088/proxy/application_1558520562958_0001/
    19/05/22 19:38:40 INFO mapreduce.Job: Running job: job_1558520562958_0001
    19/05/22 19:38:47 INFO mapreduce.Job: Job job_1558520562958_0001 running in uber mode : false
    19/05/22 19:38:47 INFO mapreduce.Job: map 0% reduce 0%
    19/05/22 19:39:05 INFO mapreduce.Job: map 72% reduce 0%
    19/05/22 19:39:10 INFO mapreduce.Job: map 100% reduce 0%
    19/05/22 19:39:10 INFO mapreduce.Job: Job job_1558520562958_0001 completed successfully
    19/05/22 19:39:10 INFO mapreduce.Job: Counters: 31
        File System Counters
            FILE: Number of bytes read=0
            FILE: Number of bytes written=309374
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=167
            HDFS: Number of bytes written=1000000000
            HDFS: Number of read operations=8
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=4
        Job Counters
            Launched map tasks=2
            Other local map tasks=2
            Total time spent by all maps in occupied slots (ms)=40283
            Total time spent by all reduces in occupied slots (ms)=0
            Total time spent by all map tasks (ms)=40283
            Total vcore-milliseconds taken by all map tasks=40283
            Total megabyte-milliseconds taken by all map tasks=41249792
        Map-Reduce Framework
            Map input records=10000000
            Map output records=10000000
            Input split bytes=167
            Spilled Records=0
            Failed Shuffles=0
            Merged Map outputs=0
            GC time elapsed (ms)=163
            CPU time spent (ms)=29850
            Physical memory (bytes) snapshot=722341888
            Virtual memory (bytes) snapshot=5678460928
            Total committed heap usage (bytes)=552599552
        org.apache.hadoop.examples.terasort.TeraGen$Counters
            CHECKSUM=21472776955442690
        File Input Format Counters
            Bytes Read=0
        File Output Format Counters
            Bytes Written=1000000000
    [root@node101.yinzhengjie.org.cn /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce]#
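A quick sanity check on the counters: TeraGen writes fixed-size 100-byte rows, so 10000000 rows should come to exactly 1000000000 bytes, which is what "HDFS: Number of bytes written" and "Bytes Written" report. A minimal sketch of that arithmetic (the function name is just for illustration):

```shell
#!/bin/sh
# TeraGen rows are 100 bytes each, so expected size = rows * 100.
ROWS=10000000
ROW_BYTES=100

expected_bytes() {
    echo $(( $1 * $2 ))
}

expected_bytes "$ROWS" "$ROW_BYTES"   # prints 1000000000
```

If the reported byte count differed from this number, it would be a hint that the job was killed or re-run partway through.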

2>. Sort and write the output

    [root@node101.yinzhengjie.org.cn /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce]# pwd
    /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce
    [root@node101.yinzhengjie.org.cn /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce]#
    [root@node101.yinzhengjie.org.cn /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce]# hadoop jar hadoop-mapreduce-examples.jar terasort /user/yinzhengjie/data/day001/test_input /user/yinzhengjie/data/day001/test_output
    19/05/22 19:41:16 INFO terasort.TeraSort: starting
    19/05/22 19:41:17 INFO input.FileInputFormat: Total input paths to process : 2
    Spent 151ms computing base-splits.
    Spent 3ms computing TeraScheduler splits.
    Computing input splits took 155ms
    Sampling 8 splits of 8
    Making 16 from 100000 sampled records
    Computing parititions took 1019ms
    Spent 1178ms computing partitions.
    19/05/22 19:41:19 INFO mapreduce.JobSubmitter: number of splits:8
    19/05/22 19:41:19 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1558520562958_0002
    19/05/22 19:41:19 INFO impl.YarnClientImpl: Submitted application application_1558520562958_0002
    19/05/22 19:41:19 INFO mapreduce.Job: The url to track the job: http://node101.yinzhengjie.org.cn:8088/proxy/application_1558520562958_0002/
    19/05/22 19:41:19 INFO mapreduce.Job: Running job: job_1558520562958_0002
    19/05/22 19:41:26 INFO mapreduce.Job: Job job_1558520562958_0002 running in uber mode : false
    19/05/22 19:41:26 INFO mapreduce.Job: map 0% reduce 0%
    19/05/22 19:41:36 INFO mapreduce.Job: map 25% reduce 0%
    19/05/22 19:41:38 INFO mapreduce.Job: map 38% reduce 0%
    19/05/22 19:41:43 INFO mapreduce.Job: map 63% reduce 0%
    19/05/22 19:41:47 INFO mapreduce.Job: map 75% reduce 0%
    19/05/22 19:41:51 INFO mapreduce.Job: map 88% reduce 0%
    19/05/22 19:41:52 INFO mapreduce.Job: map 100% reduce 0%
    19/05/22 19:41:58 INFO mapreduce.Job: map 100% reduce 19%
    19/05/22 19:42:04 INFO mapreduce.Job: map 100% reduce 38%
    19/05/22 19:42:09 INFO mapreduce.Job: map 100% reduce 50%
    19/05/22 19:42:10 INFO mapreduce.Job: map 100% reduce 56%
    19/05/22 19:42:15 INFO mapreduce.Job: map 100% reduce 69%
    19/05/22 19:42:17 INFO mapreduce.Job: map 100% reduce 75%
    19/05/22 19:42:19 INFO mapreduce.Job: map 100% reduce 81%
    19/05/22 19:42:21 INFO mapreduce.Job: map 100% reduce 88%
    19/05/22 19:42:22 INFO mapreduce.Job: map 100% reduce 94%
    19/05/22 19:42:25 INFO mapreduce.Job: map 100% reduce 100%
    19/05/22 19:42:25 INFO mapreduce.Job: Job job_1558520562958_0002 completed successfully
    19/05/22 19:42:25 INFO mapreduce.Job: Counters: 50
        File System Counters
            FILE: Number of bytes read=439892507
            FILE: Number of bytes written=880566708
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=1000001152
            HDFS: Number of bytes written=1000000000
            HDFS: Number of read operations=72
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=32
        Job Counters
            Launched map tasks=8
            Launched reduce tasks=16
            Data-local map tasks=6
            Rack-local map tasks=2
            Total time spent by all maps in occupied slots (ms)=60036
            Total time spent by all reduces in occupied slots (ms)=69783
            Total time spent by all map tasks (ms)=60036
            Total time spent by all reduce tasks (ms)=69783
            Total vcore-milliseconds taken by all map tasks=60036
            Total vcore-milliseconds taken by all reduce tasks=69783
            Total megabyte-milliseconds taken by all map tasks=61476864
            Total megabyte-milliseconds taken by all reduce tasks=71457792
        Map-Reduce Framework
            Map input records=10000000
            Map output records=10000000
            Map output bytes=1020000000
            Map output materialized bytes=436922411
            Input split bytes=1152
            Combine input records=0
            Combine output records=0
            Reduce input groups=10000000
            Reduce shuffle bytes=436922411
            Reduce input records=10000000
            Reduce output records=10000000
            Spilled Records=20000000
            Shuffled Maps =128
            Failed Shuffles=0
            Merged Map outputs=128
            GC time elapsed (ms)=2054
            CPU time spent (ms)=126560
            Physical memory (bytes) snapshot=7872991232
            Virtual memory (bytes) snapshot=68271607808
            Total committed heap usage (bytes)=6595018752
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=1000000000
        File Output Format Counters
            Bytes Written=1000000000
    19/05/22 19:42:25 INFO terasort.TeraSort: done
    [root@node101.yinzhengjie.org.cn /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce]#

    [root@node102.yinzhengjie.org.cn ~]# hdfs dfs -ls /user/yinzhengjie/data/day001
    Found 2 items
    drwxr-xr-x - root supergroup 0 2019-05-22 19:39 /user/yinzhengjie/data/day001/test_input
    drwxr-xr-x - root supergroup 0 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output
    [root@node102.yinzhengjie.org.cn ~]#
    [root@node102.yinzhengjie.org.cn ~]# hdfs dfs -ls /user/yinzhengjie/data/day001/test_input
    Found 3 items
    -rw-r--r-- 3 root supergroup 0 2019-05-22 19:39 /user/yinzhengjie/data/day001/test_input/_SUCCESS
    -rw-r--r-- 3 root supergroup 500000000 2019-05-22 19:39 /user/yinzhengjie/data/day001/test_input/part-m-00000
    -rw-r--r-- 3 root supergroup 500000000 2019-05-22 19:39 /user/yinzhengjie/data/day001/test_input/part-m-00001
    [root@node102.yinzhengjie.org.cn ~]#
    [root@node102.yinzhengjie.org.cn ~]# hdfs dfs -ls /user/yinzhengjie/data/day001/test_output
    Found 18 items
    -rw-r--r-- 1 root supergroup 0 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/_SUCCESS
    -rw-r--r-- 10 root supergroup 165 2019-05-22 19:41 /user/yinzhengjie/data/day001/test_output/_partition.lst
    -rw-r--r-- 1 root supergroup 62307000 2019-05-22 19:41 /user/yinzhengjie/data/day001/test_output/part-r-00000
    -rw-r--r-- 1 root supergroup 62782700 2019-05-22 19:41 /user/yinzhengjie/data/day001/test_output/part-r-00001
    -rw-r--r-- 1 root supergroup 61993900 2019-05-22 19:41 /user/yinzhengjie/data/day001/test_output/part-r-00002
    -rw-r--r-- 1 root supergroup 63217700 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00003
    -rw-r--r-- 1 root supergroup 62628600 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00004
    -rw-r--r-- 1 root supergroup 62884100 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00005
    -rw-r--r-- 1 root supergroup 63079700 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00006
    -rw-r--r-- 1 root supergroup 61421800 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00007
    -rw-r--r-- 1 root supergroup 61319800 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00008
    -rw-r--r-- 1 root supergroup 61467300 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00009
    -rw-r--r-- 1 root supergroup 62823400 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00010
    -rw-r--r-- 1 root supergroup 63392200 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00011
    -rw-r--r-- 1 root supergroup 62889200 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00012
    -rw-r--r-- 1 root supergroup 62953000 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00013
    -rw-r--r-- 1 root supergroup 62072800 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00014
    -rw-r--r-- 1 root supergroup 62766800 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output/part-r-00015
    [root@node102.yinzhengjie.org.cn ~]#
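The sixteen part-r-* files in the listing add up to exactly 1000000000 bytes, the same size as the input, which is a cheap way to confirm that the sort did not drop anything. Below is a small sketch that sums the size column of an `hdfs dfs -ls` listing; `sum_part_sizes` is a helper name of my own, and the here-document only holds the first three lines of the listing as sample input.

```shell
#!/bin/sh
# Sum the size column (5th field) of the part-r-* lines of an
# `hdfs dfs -ls` listing read from standard input.
sum_part_sizes() {
    awk '$NF ~ /\/part-r-[0-9]+$/ { total += $5 } END { printf "%d\n", total }'
}

# Sample input: the first three part files from the listing.
# On a cluster node, pipe the real listing instead:
#   hdfs dfs -ls /user/yinzhengjie/data/day001/test_output | sum_part_sizes
sum_part_sizes <<'EOF'
-rw-r--r-- 1 root supergroup 62307000 2019-05-22 19:41 /user/yinzhengjie/data/day001/test_output/part-r-00000
-rw-r--r-- 1 root supergroup 62782700 2019-05-22 19:41 /user/yinzhengjie/data/day001/test_output/part-r-00001
-rw-r--r-- 1 root supergroup 61993900 2019-05-22 19:41 /user/yinzhengjie/data/day001/test_output/part-r-00002
EOF
# prints 187083600
```

Files such as `_SUCCESS` and `_partition.lst` are skipped by the pattern, so only the reducer outputs are counted.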

3>. Validate the output

    [root@node101.yinzhengjie.org.cn /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce]# pwd
    /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce
    [root@node101.yinzhengjie.org.cn /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce]#
    [root@node101.yinzhengjie.org.cn /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce]# hadoop jar hadoop-mapreduce-examples.jar teravalidate /user/yinzhengjie/data/day001/test_output /user/yinzhengjie/data/day001/ts_validate
    19/05/22 19:46:27 INFO input.FileInputFormat: Total input paths to process : 16
    Spent 29ms computing base-splits.
    Spent 3ms computing TeraScheduler splits.
    19/05/22 19:46:27 INFO mapreduce.JobSubmitter: number of splits:16
    19/05/22 19:46:27 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1558520562958_0003
    19/05/22 19:46:27 INFO impl.YarnClientImpl: Submitted application application_1558520562958_0003
    19/05/22 19:46:27 INFO mapreduce.Job: The url to track the job: http://node101.yinzhengjie.org.cn:8088/proxy/application_1558520562958_0003/
    19/05/22 19:46:27 INFO mapreduce.Job: Running job: job_1558520562958_0003
    19/05/22 19:46:33 INFO mapreduce.Job: Job job_1558520562958_0003 running in uber mode : false
    19/05/22 19:46:33 INFO mapreduce.Job: map 0% reduce 0%
    19/05/22 19:46:38 INFO mapreduce.Job: map 6% reduce 0%
    19/05/22 19:46:39 INFO mapreduce.Job: map 19% reduce 0%
    19/05/22 19:46:43 INFO mapreduce.Job: map 31% reduce 0%
    19/05/22 19:46:44 INFO mapreduce.Job: map 38% reduce 0%
    19/05/22 19:46:48 INFO mapreduce.Job: map 50% reduce 0%
    19/05/22 19:46:49 INFO mapreduce.Job: map 56% reduce 0%
    19/05/22 19:46:53 INFO mapreduce.Job: map 69% reduce 0%
    19/05/22 19:46:54 INFO mapreduce.Job: map 75% reduce 0%
    19/05/22 19:46:59 INFO mapreduce.Job: map 88% reduce 0%
    19/05/22 19:47:00 INFO mapreduce.Job: map 94% reduce 0%
    19/05/22 19:47:04 INFO mapreduce.Job: map 100% reduce 0%
    19/05/22 19:47:05 INFO mapreduce.Job: map 100% reduce 100%
    19/05/22 19:47:05 INFO mapreduce.Job: Job job_1558520562958_0003 completed successfully
    19/05/22 19:47:05 INFO mapreduce.Job: Counters: 50
        File System Counters
            FILE: Number of bytes read=849
            FILE: Number of bytes written=2639802
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=1000002320
            HDFS: Number of bytes written=24
            HDFS: Number of read operations=51
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=2
        Job Counters
            Launched map tasks=16
            Launched reduce tasks=1
            Data-local map tasks=9
            Rack-local map tasks=7
            Total time spent by all maps in occupied slots (ms)=59301
            Total time spent by all reduces in occupied slots (ms)=3807
            Total time spent by all map tasks (ms)=59301
            Total time spent by all reduce tasks (ms)=3807
            Total vcore-milliseconds taken by all map tasks=59301
            Total vcore-milliseconds taken by all reduce tasks=3807
            Total megabyte-milliseconds taken by all map tasks=60724224
            Total megabyte-milliseconds taken by all reduce tasks=3898368
        Map-Reduce Framework
            Map input records=10000000
            Map output records=48
            Map output bytes=1296
            Map output materialized bytes=1537
            Input split bytes=2320
            Combine input records=0
            Combine output records=0
            Reduce input groups=33
            Reduce shuffle bytes=1537
            Reduce input records=48
            Reduce output records=1
            Spilled Records=96
            Shuffled Maps =16
            Failed Shuffles=0
            Merged Map outputs=16
            GC time elapsed (ms)=1027
            CPU time spent (ms)=35550
            Physical memory (bytes) snapshot=7814672384
            Virtual memory (bytes) snapshot=48229584896
            Total committed heap usage (bytes)=7076839424
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=1000000000
        File Output Format Counters
            Bytes Written=24
    [root@node101.yinzhengjie.org.cn /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hadoop-mapreduce]#

    [root@node102.yinzhengjie.org.cn ~]# hdfs dfs -ls /user/yinzhengjie/data/day001
    Found 3 items
    drwxr-xr-x - root supergroup 0 2019-05-22 19:39 /user/yinzhengjie/data/day001/test_input
    drwxr-xr-x - root supergroup 0 2019-05-22 19:42 /user/yinzhengjie/data/day001/test_output
    drwxr-xr-x - root supergroup 0 2019-05-22 19:47 /user/yinzhengjie/data/day001/ts_validate
    [root@node102.yinzhengjie.org.cn ~]#
    [root@node102.yinzhengjie.org.cn ~]# hdfs dfs -ls /user/yinzhengjie/data/day001/ts_validate
    Found 2 items
    -rw-r--r-- 3 root supergroup 0 2019-05-22 19:47 /user/yinzhengjie/data/day001/ts_validate/_SUCCESS
    -rw-r--r-- 3 root supergroup 24 2019-05-22 19:47 /user/yinzhengjie/data/day001/ts_validate/part-r-00000
    [root@node102.yinzhengjie.org.cn ~]#
    [root@node102.yinzhengjie.org.cn ~]# hdfs dfs -cat /user/yinzhengjie/data/day001/ts_validate/part-r-00000    # The report contains only a checksum line and no error records, which indicates that the validated data is sorted.
    checksum 4c49607ac53602
    [root@node102.yinzhengjie.org.cn ~]#
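One more cross-check is possible with the numbers already in the logs: the CHECKSUM counter printed by teragen (21472776955442690, decimal) is the same value as the checksum written by teravalidate (4c49607ac53602, hexadecimal). The sketch below automates that comparison. It rests on an assumption about the report format, namely that teravalidate writes one "checksum" record and, for unsorted data, additional "error" records; `report_checksum` is a helper name of my own, and the sample report line is copied from the output above.

```shell
#!/bin/sh
# CHECKSUM counter printed by teragen in this walkthrough (decimal).
TERAGEN_CHECKSUM=21472776955442690

# Read a teravalidate report on stdin and print its checksum.
# Fails if an "error" record is present or the checksum is missing.
# Assumption: records look like "<key><whitespace><value>".
report_checksum() {
    awk '$1 == "error"    { bad = 1 }
         $1 == "checksum" { sum = $2 }
         END { if (bad || sum == "") exit 1; print sum }'
}

# Sample report copied from the article; on a cluster node use:
#   hdfs dfs -cat /user/yinzhengjie/data/day001/ts_validate/part-r-00000 | report_checksum
actual="$(printf 'checksum\t4c49607ac53602\n' | report_checksum)" || exit 1
expected="$(printf '%x' "$TERAGEN_CHECKSUM")"

if [ "$actual" = "$expected" ]; then
    echo "checksum matches: $actual"
else
    echo "checksum mismatch: $actual vs $expected"
fi
# prints: checksum matches: 4c49607ac53602
```

A matching checksum together with the absence of error records means the sorted output contains the same rows that teragen produced, in order.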

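This article comes from cnblogs (博客园), author: 尹正杰. When reposting, please credit the original link: https://www.cnblogs.com/yinzhengjie/p/11001500.html. Personal WeChat: "JasonYin2020" (when adding, please mention where you found it and your purpose; paid service)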