[Text Classification-06] Transformer

Contents

- Overview
- Dataset
- Main Code

I. Overview

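This text classification series will contain about eight articles. The code can be downloaded directly from GitHub and the training data from Baidu Cloud; import them into PyCharm and they are ready to use. The series covers text classification based on pretrained word2vec as well as on recent pretrained models (ELMo, BERT, etc.). The full series is as follows: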

II. Dataset

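The dataset is the IMDB movie reviews dataset. It consists of three data files in the /data/rawData directory: unlabeledTrainData.tsv, labeledTrainData.tsv and testData.tsv. Text classification requires labeled data (labeledTrainData), but the unlabeled data can also be used when training the word2vec word-vector model, since that is unsupervised learning.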

Training data: link: https://pan.baidu.com/s/1-XEwx1ai8kkGsMagIFKX_g extraction code: rtz8

For background on the Transformer model, see these articles: introduction, source code walkthrough.

III. Main Code

3.1 Training configuration: parameter_config.py

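```python
# Author:yifan
# 1. Training configuration

class TrainingConfig(object):
    epoches = 4
    evaluateEvery = 100      # evaluate on the validation set every N steps
    checkpointEvery = 100    # save a checkpoint every N steps
    learningRate = 0.001


class ModelConfig(object):
    embeddingSize = 200
    filters = 128            # number of filters in the inner 1D conv of the feed-forward sublayer. The outer conv
                             # must output as many channels as its input has (embeddingSize + sequenceLength here,
                             # because the one-hot position embedding is concatenated) so the residual add works.
    numHeads = 8             # number of attention heads
    numBlocks = 1            # number of Transformer blocks
    epsilon = 1e-8           # small constant added to the variance in LayerNorm to avoid division by zero
    keepProp = 0.9           # keep probability for dropout in multi-head attention
    dropoutKeepProb = 0.5    # keep probability for dropout before the fully connected output layer
    l2RegLambda = 0.0


class Config(object):
    sequenceLength = 200     # roughly the mean length of all sequences
    batchSize = 128
    dataSource = "../data/preProcess/labeledTrain.csv"
    stopWordSource = "../data/english"
    numClasses = 1           # 1 for binary classification; the number of classes for multi-class
    rate = 0.8               # fraction of the data used for training
    training = TrainingConfig()
    model = ModelConfig()


# Instantiate the configuration object
config = Config()
```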
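Because the one-hot position embedding is concatenated to the word vectors, the width that flows through each Transformer block is embeddingSize + sequenceLength, not embeddingSize, and that width has to split evenly across numHeads. A minimal sanity-check sketch, assuming the values from parameter_config.py above; the helper name `check_model_dims` is hypothetical and not part of the original repository.

```python
def check_model_dims(embedding_size: int, sequence_length: int, num_heads: int) -> int:
    """Return the per-head width, raising if the model width cannot be split evenly.

    Assumes, as in mode_structure.py, that a one-hot position vector of length
    sequence_length is concatenated to each embedding_size-dim word vector.
    """
    model_width = embedding_size + sequence_length  # last dimension inside each block
    if model_width % num_heads != 0:
        # tf.split(Q, numHeads, axis=-1) would fail on an uneven split
        raise ValueError(
            f"model width {model_width} is not divisible by numHeads={num_heads}"
        )
    return model_width // num_heads


# Values from ModelConfig / Config above: 200 + 200 = 400 channels, 8 heads.
per_head = check_model_dims(embedding_size=200, sequence_length=200, num_heads=8)
print(per_head)  # 50
```

The same width (400) is what the outer feed-forward convolution must output for its residual connection to type-check.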

3.2 Loading the training data: get_train_data.py

```python
# Author:yifan
import json
from collections import Counter

import gensim
import numpy as np
import pandas as pd

import parameter_config


# 2. Data preprocessing: build the training and validation sets
class Dataset(object):
    def __init__(self, config):
        self.config = config
        self._dataSource = config.dataSource
        self._stopWordSource = config.stopWordSource
        self._sequenceLength = config.sequenceLength  # every input is padded/truncated to this length
        self._embeddingSize = config.model.embeddingSize
        self._batchSize = config.batchSize
        self._rate = config.rate

        self.stopWordDict = {}
        self.trainReviews = []
        self.trainLabels = []
        self.evalReviews = []
        self.evalLabels = []
        self.wordEmbedding = None
        self.labelList = []

    def _readData(self, filePath):
        """Read the dataset from a CSV file with three columns: review, sentiment, rate."""
        df = pd.read_csv(filePath)
        if self.config.numClasses == 1:
            labels = df["sentiment"].tolist()  # 0/1 labels, 25,000 rows
        elif self.config.numClasses > 1:
            labels = df["rate"].tolist()       # rating labels; not used in this binary setup
        review = df["review"].tolist()
        reviews = [line.strip().split() for line in review]  # split each review on whitespace
        return reviews, labels

    def _labelToIndex(self, labels, label2idx):
        """Convert labels to indices (for 0/1 labels the result equals the input)."""
        labelIds = [label2idx[label] for label in labels]
        return labelIds

    def _wordToIndex(self, reviews, word2idx):
        """Convert words to indices; out-of-vocabulary words map to UNK."""
        reviewIds = [[word2idx.get(item, word2idx["UNK"]) for item in review] for review in reviews]
        return reviewIds  # 25,000 variable-length index lists

    def _genTrainEvalData(self, x, y, word2idx, rate):
        """Build the training and validation sets."""
        reviews = []
        # Truncate reviews longer than sequenceLength (200) and pad shorter ones with PAD
        for review in x:
            if len(review) >= self._sequenceLength:
                reviews.append(review[:self._sequenceLength])
            else:
                reviews.append(review + [word2idx["PAD"]] * (self._sequenceLength - len(review)))

        # Split into training and validation data according to `rate`
        trainIndex = int(len(x) * rate)
        trainReviews = np.asarray(reviews[:trainIndex], dtype="int64")
        trainLabels = np.array(y[:trainIndex], dtype="float32")
        evalReviews = np.asarray(reviews[trainIndex:], dtype="int64")
        evalLabels = np.array(y[trainIndex:], dtype="float32")
        return trainReviews, trainLabels, evalReviews, evalLabels

    def _getWordEmbedding(self, words):
        """Look up the pretrained word2vec vector (200-dim) for each word in our vocabulary.

        Returns the words and their vectors, with PAD (all-zero vector) and
        UNK (random vector) prepended at indices 0 and 1.
        """
        wordVec = gensim.models.KeyedVectors.load_word2vec_format("../word2vec/word2Vec.bin", binary=True)
        vocab = ["PAD", "UNK"]
        wordEmbedding = [np.zeros(self._embeddingSize),          # _embeddingSize is 200 here
                         np.random.randn(self._embeddingSize)]
        for word in words:
            try:
                vector = wordVec[word]  # KeyedVectors can be indexed directly by word
                vocab.append(word)
                wordEmbedding.append(vector)
            except KeyError:
                print(word + " is not in the word2vec vocabulary")
        return vocab, np.array(wordEmbedding)

    def _genVocabulary(self, reviews, labels):
        """Build the embedding matrix and the word/label-to-index mappings (the full dataset can be used)."""
        allWords = [word for review in reviews for word in review]  # 5,738,236 tokens over 25,000 reviews
        subWords = [word for word in allWords if word not in self.stopWordDict]  # remove stop words
        wordCount = Counter(subWords)  # word frequencies
        # (word, count) pairs sorted by count, e.g. [('movie', 41104), ('film', 36981), ('one', 24966), ...];
        # 161,330 distinct words in the author's run
        sortWordCount = sorted(wordCount.items(), key=lambda x: x[1], reverse=True)
        words = [item[0] for item in sortWordCount if item[1] >= 5]  # drop words seen fewer than 5 times

        vocab, wordEmbedding = self._getWordEmbedding(words)
        self.wordEmbedding = wordEmbedding

        word2idx = dict(zip(vocab, range(len(vocab))))  # e.g. {'PAD': 0, 'UNK': 1, 'movie': 2, ...}
        uniqueLabel = list(set(labels))                  # unique labels: just 0 and 1 here
        label2idx = dict(zip(uniqueLabel, range(len(uniqueLabel))))  # here {0: 0, 1: 1}
        self.labelList = list(range(len(uniqueLabel)))

        # Save the mappings as JSON so inference can load them directly
        with open("../data/wordJson/word2idx.json", "w", encoding="utf-8") as f:
            json.dump(word2idx, f)
        with open("../data/wordJson/label2idx.json", "w", encoding="utf-8") as f:
            json.dump(label2idx, f)
        return word2idx, label2idx

    def _readStopWord(self, stopWordPath):
        """Read the stop words."""
        with open(stopWordPath, "r", encoding="utf-8") as f:
            stopWordList = f.read().splitlines()
        # Store the stop words in a dict so membership tests are fast
        self.stopWordDict = dict(zip(stopWordList, range(len(stopWordList))))

    def dataGen(self):
        """Initialize the training and validation sets."""
        self._readStopWord(self._stopWordSource)                    # stop words
        reviews, labels = self._readData(self._dataSource)          # raw data
        word2idx, label2idx = self._genVocabulary(reviews, labels)  # vocab mappings and embedding matrix
        # Convert labels and sentences to indices
        labelIds = self._labelToIndex(labels, label2idx)
        reviewIds = self._wordToIndex(reviews, word2idx)
        # Build the training and validation sets
        trainReviews, trainLabels, evalReviews, evalLabels = self._genTrainEvalData(
            reviewIds, labelIds, word2idx, self._rate)
        self.trainReviews = trainReviews
        self.trainLabels = trainLabels
        self.evalReviews = evalReviews
        self.evalLabels = evalLabels


# Example usage (the later modules do this):
# config = parameter_config.Config()
# data = Dataset(config)
# data.dataGen()
```
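The core preprocessing in Dataset (map words to indices with UNK as the fallback, pad or truncate to sequenceLength, then split by rate) can be reproduced on toy data without word2vec or TensorFlow. A minimal pure-Python sketch; the toy vocabulary, the data and the function name `encode_and_split` are illustrative assumptions. As in _getWordEmbedding, PAD is index 0 and UNK is index 1.

```python
from typing import Dict, List, Tuple


def encode_and_split(
    reviews: List[List[str]],
    labels: List[int],
    word2idx: Dict[str, int],
    seq_len: int,
    rate: float,
) -> Tuple[List[List[int]], List[int], List[List[int]], List[int]]:
    """Index, pad/truncate and split reviews, mirroring Dataset._wordToIndex
    and Dataset._genTrainEvalData (without the numpy conversion)."""
    unk, pad = word2idx["UNK"], word2idx["PAD"]
    ids = [[word2idx.get(w, unk) for w in review] for review in reviews]
    # Truncate long sequences, right-pad short ones with the PAD index.
    fixed = [r[:seq_len] + [pad] * max(0, seq_len - len(r)) for r in ids]
    cut = int(len(fixed) * rate)  # first `rate` fraction goes to the training set
    return fixed[:cut], labels[:cut], fixed[cut:], labels[cut:]


word2idx = {"PAD": 0, "UNK": 1, "movie": 2, "great": 3, "boring": 4}
reviews = [["great", "movie"], ["boring", "boring", "boring", "movie", "plot"],
           ["a", "great", "plot"], ["movie"], ["boring"]]
labels = [1, 0, 1, 1, 0]
trX, trY, evX, evY = encode_and_split(reviews, labels, word2idx, seq_len=4, rate=0.8)
print(trX)  # [[3, 2, 0, 0], [4, 4, 4, 2], [1, 3, 1, 0], [2, 0, 0, 0]]
print(evX)  # [[4, 0, 0, 0]]
```

Note that, as in the original code, the split takes the first 80% of rows in file order, so it relies on the CSV not being sorted by label.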

3.3 Model construction: mode_structure.py

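Some lessons learned from using the Transformer model here:

1) A fixed one-hot position embedding worked better here than the sinusoidal position embedding proposed in the paper. A possible reason is that the paper-style embedding adds model complexity, which hurts performance on a small dataset (IMDB training set: 20,000 reviews). Strictly speaking, though, the paper's sinusoidal encoding is itself fixed rather than trained, and the paper reports that learned position embeddings gave nearly identical results.

2) The mask may not be necessary: adding or removing it made essentially no difference to the results. It may help on other tasks or datasets, but the paper does not require a mask in the encoder; masking is used mostly in the decoder.

3) The number of Transformer layers can be adjusted to the size of the dataset; on a small dataset one layer is basically enough.

4) Apply dropout to the sublayers, mainly the multi-head attention layer. Since the feed-forward sublayer is implemented with convolutions here, leaving dropout out of it should be fine; if it is implemented with fully connected layers, add dropout there too. (Note that a 1D convolution with kernel_size=1, as used here, computes exactly a position-wise fully connected layer.)

5) On small datasets the Transformer is not necessarily better than Bi-LSTM + Attention; on IMDB it actually did worse.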
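To make the difference in note 1 concrete: the one-hot scheme (fixedPositionEmbedding) concatenates a sequenceLength-dim identity row to each word vector, widening the input, whereas the paper's sinusoidal encoding has the same width as the word vectors and is added to them. A minimal NumPy sketch of both for a single sequence; the function names and the random stand-in word vectors are illustrative, and the sinusoidal formula follows the one used in _positionEmbedding.

```python
import numpy as np


def one_hot_positions(seq_len: int) -> np.ndarray:
    """One row per position; equals one batch entry of fixedPositionEmbedding."""
    return np.eye(seq_len, dtype=np.float32)


def sinusoidal_positions(seq_len: int, dim: int) -> np.ndarray:
    """PE[pos, 2i] = sin(pos / 10000^(2i/dim)), PE[pos, 2i+1] = cos(same angle)."""
    pos = np.arange(seq_len)[:, None]            # [seq_len, 1]
    i = np.arange(dim)[None, :]                  # [1, dim]
    angles = pos / np.power(10000.0, (i - i % 2) / dim)
    pe = np.empty((seq_len, dim))
    pe[:, 0::2] = np.sin(angles[:, 0::2])        # even dimensions
    pe[:, 1::2] = np.cos(angles[:, 1::2])        # odd dimensions
    return pe.astype(np.float32)


seq_len, emb = 6, 4
words = np.random.randn(seq_len, emb).astype(np.float32)  # stand-in word vectors

concat_input = np.concatenate([words, one_hot_positions(seq_len)], axis=-1)
added_input = words + sinusoidal_positions(seq_len, emb)

print(concat_input.shape)  # (6, 10): width grows to emb + seq_len
print(added_input.shape)   # (6, 4): width unchanged
```

One practical consequence of concatenation: the pretrained word vectors stay untouched in their own columns, at the cost of a much wider model (400 instead of 200 channels with this article's settings).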

```python
# Author:yifan
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.layers, tf.contrib)

import parameter_config


# 3. Build the Transformer model
# Generate the fixed (one-hot) position embedding
def fixedPositionEmbedding(batchSize, sequenceLen):
    # Row `step` of the identity matrix is the one-hot vector for position `step`;
    # the same [sequenceLen, sequenceLen] matrix is repeated for every sample in the batch.
    return np.tile(np.eye(sequenceLen, dtype="float32")[np.newaxis], (batchSize, 1, 1))


# Model definition
class Transformer(object):
    """Transformer encoder for text classification."""

    def __init__(self, config, wordEmbedding):
        # Model inputs
        self.inputX = tf.placeholder(tf.int32, [None, config.sequenceLength], name="inputX")
        self.inputY = tf.placeholder(tf.int32, [None], name="inputY")
        self.dropoutKeepProb = tf.placeholder(tf.float32, name="dropoutKeepProb")
        self.embeddedPosition = tf.placeholder(
            tf.float32, [None, config.sequenceLength, config.sequenceLength], name="embeddedPosition")
        self.config = config

        # L2 loss
        l2Loss = tf.constant(0.0)

        # Embedding layer. There are two ways to define the position vectors: pass a fixed one-hot
        # vector and concatenate it with the word vector, which worked better on this dataset; or
        # follow the paper, which worked worse here, possibly because it makes the model more
        # complex and the dataset is small.
        with tf.name_scope("embedding"):
            # Initialize the embedding matrix with the pretrained word vectors
            self.W = tf.Variable(tf.cast(wordEmbedding, dtype=tf.float32, name="word2vec"), name="W")
            # Look up the word vectors: [batch_size, sequence_length, embedding_size]
            self.embedded = tf.nn.embedding_lookup(self.W, self.inputX)
            # Concatenate the one-hot positions: [batch_size, sequence_length, embedding_size + sequence_length]
            self.embeddedWords = tf.concat([self.embedded, self.embeddedPosition], -1)

        with tf.name_scope("transformer"):
            for i in range(config.model.numBlocks):
                with tf.name_scope("transformer-{}".format(i + 1)):
                    # [batch_size, sequence_length, embedding_size + sequence_length]
                    multiHeadAtt = self._multiheadAttention(rawKeys=self.inputX, queries=self.embeddedWords,
                                                            keys=self.embeddedWords)
                    # [batch_size, sequence_length, embedding_size + sequence_length]
                    self.embeddedWords = self._feedForward(
                        multiHeadAtt, [config.model.filters, config.model.embeddingSize + config.sequenceLength])

        outputs = tf.reshape(self.embeddedWords,
                             [-1, config.sequenceLength * (config.model.embeddingSize + config.sequenceLength)])
        outputSize = outputs.get_shape()[-1].value

        with tf.name_scope("dropout"):
            outputs = tf.nn.dropout(outputs, keep_prob=self.dropoutKeepProb)

        # Fully connected output layer
        with tf.name_scope("output"):
            outputW = tf.get_variable(
                "outputW",
                shape=[outputSize, config.numClasses],
                initializer=tf.contrib.layers.xavier_initializer())
            outputB = tf.Variable(tf.constant(0.1, shape=[config.numClasses]), name="outputB")
            l2Loss += tf.nn.l2_loss(outputW)
            l2Loss += tf.nn.l2_loss(outputB)
            self.logits = tf.nn.xw_plus_b(outputs, outputW, outputB, name="logits")

            # Named "output/predictions" (predict.py fetches it by this name)
            if config.numClasses == 1:
                self.predictions = tf.cast(tf.greater_equal(self.logits, 0.0), tf.float32, name="predictions")
            elif config.numClasses > 1:
                self.predictions = tf.argmax(self.logits, axis=-1, name="predictions")

        # Loss: sigmoid (binary) cross-entropy, or softmax cross-entropy for multi-class
        with tf.name_scope("loss"):
            if config.numClasses == 1:
                losses = tf.nn.sigmoid_cross_entropy_with_logits(
                    logits=self.logits, labels=tf.cast(tf.reshape(self.inputY, [-1, 1]), dtype=tf.float32))
            elif config.numClasses > 1:
                losses = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=self.logits, labels=self.inputY)

            self.loss = tf.reduce_mean(losses) + config.model.l2RegLambda * l2Loss

    def _layerNormalization(self, inputs, scope="layerNorm"):
        # LayerNorm normalizes each position over its last dimension only;
        # BatchNorm instead computes statistics per channel across the batch.
        epsilon = self.config.model.epsilon
        inputsShape = inputs.get_shape()  # [batch_size, sequence_length, embedding_size + sequence_length]
        paramsShape = inputsShape[-1:]

        # mean and variance: [batch_size, sequence_length, 1]
        mean, variance = tf.nn.moments(inputs, [-1], keep_dims=True)
        beta = tf.Variable(tf.zeros(paramsShape))
        gamma = tf.Variable(tf.ones(paramsShape))
        normalized = (inputs - mean) / ((variance + epsilon) ** .5)
        outputs = gamma * normalized + beta
        return outputs

    def _multiheadAttention(self, rawKeys, queries, keys, numUnits=None, causality=False, scope="multiheadAttention"):
        # rawKeys (the token ids) are only used to build the padding mask: `keys` already has the
        # position embedding concatenated, so padded positions are no longer all zeros there.
        numHeads = self.config.model.numHeads
        # Attention dropout (keep probability keepProp = 0.9) is tied to the dropoutKeepProb placeholder,
        # so feeding 1.0 (evaluation/prediction) disables it; a constant keep_prob would stay active at inference.
        keepProp = 1.0 - (1.0 - self.config.model.keepProp) * tf.cast(self.dropoutKeepProb < 1.0, tf.float32)

        if numUnits is None:  # default to the last dimension of the input
            numUnits = queries.get_shape().as_list()[-1]

        # tf.layers.dense projects the last dimension of an N-D tensor. This corresponds to the per-head
        # projections of Multi-Head Attention in the paper; projecting first and splitting afterwards is
        # equivalent. (The paper uses linear projections; this implementation adds a ReLU.)
        # Q, K, V: [batch_size, sequence_length, numUnits]
        Q = tf.layers.dense(queries, numUnits, activation=tf.nn.relu)
        K = tf.layers.dense(keys, numUnits, activation=tf.nn.relu)
        V = tf.layers.dense(keys, numUnits, activation=tf.nn.relu)

        # Split the last dimension into numHeads pieces and stack them along the first dimension
        # Q_, K_, V_: [batch_size * numHeads, sequence_length, numUnits / numHeads]
        Q_ = tf.concat(tf.split(Q, numHeads, axis=-1), axis=0)
        K_ = tf.concat(tf.split(K, numHeads, axis=-1), axis=0)
        V_ = tf.concat(tf.split(V, numHeads, axis=-1), axis=0)

        # Dot products of queries and keys: [batch_size * numHeads, queries_len, keys_len]
        similary = tf.matmul(Q_, tf.transpose(K_, [0, 2, 1]))

        # Scale by the square root of the per-head dimension
        scaledSimilary = similary / (K_.get_shape().as_list()[-1] ** 0.5)

        # Padding tokens carry no information and should receive zero attention weight. Once position
        # vectors are combined with the embeddings, padded positions are no longer zero, so they must be
        # masked explicitly using the raw token ids. Queries also contain padding, but in self-attention
        # queries and keys come from the same sequence, and masking the keys is considered sufficient here.
        # More on key masks: https://github.com/Kyubyong/transformer/issues/3

        # Tile the token ids for every head: [batch_size * numHeads, keys_len]
        keyMasks = tf.tile(rawKeys, [numHeads, 1])
        # Add a query axis and tile: [batch_size * numHeads, queries_len, keys_len]
        keyMasks = tf.tile(tf.expand_dims(keyMasks, 1), [1, tf.shape(queries)[1], 1])

        # A tensor shaped like scaledSimilary, filled with a very large negative number
        paddings = tf.ones_like(scaledSimilary) * (-2 ** (32 + 1))

        # tf.where(condition, x, y) takes x where condition is True and y elsewhere (same shapes):
        # wherever the key is PAD (id 0), the score is replaced by the large negative value.
        maskedSimilary = tf.where(tf.equal(keyMasks, 0), paddings, scaledSimilary)

        # Causal mask (each position attends only to earlier ones), used in the Transformer decoder for
        # generation. Text classification only needs the encoder, so causality is False here.
        if causality:
            diagVals = tf.ones_like(maskedSimilary[0, :, :])  # [queries_len, keys_len]
            tril = tf.linalg.band_part(diagVals, -1, 0)       # lower-triangular ones
            masks = tf.tile(tf.expand_dims(tril, 0), [tf.shape(maskedSimilary)[0], 1, 1])
            paddings = tf.ones_like(masks) * (-2 ** (32 + 1))
            maskedSimilary = tf.where(tf.equal(masks, 0), paddings, maskedSimilary)

        # Attention weights via softmax: [batch_size * numHeads, queries_len, keys_len]
        weights = tf.nn.softmax(maskedSimilary)

        # Weighted sum of the values: [batch_size * numHeads, sequence_length, numUnits / numHeads]
        outputs = tf.matmul(weights, V_)

        # Merge the heads back: [batch_size, sequence_length, numUnits]
        outputs = tf.concat(tf.split(outputs, numHeads, axis=0), axis=2)
        outputs = tf.nn.dropout(outputs, keep_prob=keepProp)

        # Residual connection, H(x) = F(x) + x
        outputs += queries
        # Layer normalization
        outputs = self._layerNormalization(outputs)
        return outputs

    def _feedForward(self, inputs, filters, scope="feedForward"):
        # The position-wise feed-forward sublayer is implemented with 1D convolutions

        # Inner layer
        params = {"inputs": inputs, "filters": filters[0], "kernel_size": 1,
                  "activation": tf.nn.relu, "use_bias": True}
        outputs = tf.layers.conv1d(**params)

        # Outer layer: filters[1] must equal the input's last dimension for the residual add below.
        # conv1d only needs the kernel height (kernel_size); its width always spans the last dimension.
        # Output: [batch_size, sequence_length, embedding_size + sequence_length]
        params = {"inputs": outputs, "filters": filters[1], "kernel_size": 1,
                  "activation": None, "use_bias": True}
        outputs = tf.layers.conv1d(**params)

        # Residual connection
        outputs += inputs
        # Layer normalization
        outputs = self._layerNormalization(outputs)
        return outputs

    def _positionEmbedding(self, scope="positionEmbedding"):
        # Sinusoidal position embedding from the paper (fixed, not trainable; unused by the model above)
        batchSize = self.config.batchSize
        sequenceLen = self.config.sequenceLength
        embeddingSize = self.config.model.embeddingSize

        # Position indices, tiled over every sample in the batch: [batchSize, sequenceLen]
        positionIndex = tf.tile(tf.expand_dims(tf.range(sequenceLen), 0), [batchSize, 1])

        # Angle for each position and dimension: pos / 10000^(2i / embeddingSize)
        positionEmbedding = np.array([[pos / np.power(10000, (i - i % 2) / embeddingSize)
                                       for i in range(embeddingSize)]
                                      for pos in range(sequenceLen)])

        # Apply sin to the even dimensions and cos to the odd dimensions
        positionEmbedding[:, 0::2] = np.sin(positionEmbedding[:, 0::2])
        positionEmbedding[:, 1::2] = np.cos(positionEmbedding[:, 1::2])

        # Convert to a tensor
        positionEmbedding_ = tf.cast(positionEmbedding, dtype=tf.float32)

        # [batchSize, sequenceLen, embeddingSize]
        positionEmbedded = tf.nn.embedding_lookup(positionEmbedding_, positionIndex)
        return positionEmbedded
```
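The core of _multiheadAttention (split heads, scaled dot product, key-padding mask via a large negative fill, softmax, merge heads) can be checked without TensorFlow. A minimal NumPy sketch, assuming PAD index 0 as in get_train_data.py; it deliberately omits the Q/K/V dense projections, dropout, the residual connection and LayerNorm, and the function name is illustrative. It splits heads along a new axis instead of stacking them into the batch dimension; the per-head arithmetic is the same, because both take contiguous chunks of the last dimension.

```python
from typing import Tuple

import numpy as np


def masked_multihead_attention(x: np.ndarray, token_ids: np.ndarray,
                               num_heads: int) -> Tuple[np.ndarray, np.ndarray]:
    """x: [batch, seq, width], used directly as Q, K and V; token_ids: [batch, seq], PAD == 0."""
    batch, seq, width = x.shape
    d = width // num_heads
    heads = x.reshape(batch, seq, num_heads, d).transpose(0, 2, 1, 3)  # [batch, heads, seq, d]
    scores = heads @ heads.transpose(0, 1, 3, 2) / np.sqrt(d)          # [batch, heads, q, k]
    # Same idea as tf.where(tf.equal(keyMasks, 0), paddings, scaledSimilary)
    key_is_pad = (token_ids == 0)[:, None, None, :]                     # [batch, 1, 1, k]
    scores = np.where(key_is_pad, -2.0 ** 33, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)                # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    out = weights @ heads                                               # [batch, heads, seq, d]
    return out.transpose(0, 2, 1, 3).reshape(batch, seq, width), weights


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 5, 8))
ids = np.array([[7, 3, 9, 0, 0],    # last two tokens are PAD
                [4, 4, 2, 6, 1]])
out, w = masked_multihead_attention(x, ids, num_heads=2)
print(out.shape)               # (2, 5, 8)
print(w[0, :, :, 3:].max())    # 0.0: no attention mass on padded keys
```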

3.4 Model training: mode_trainning.py

```python
import datetime
import os
import shutil

import numpy as np
import tensorflow as tf

import get_train_data
import mode_structure
import parameter_config

# Load the data from the previous modules
config = parameter_config.Config()
data = get_train_data.Dataset(config)
data.dataGen()


# 4. Generate batches
def nextBatch(x, y, batchSize):
    """Yield shuffled batches. The last incomplete batch is dropped, so every batch has exactly
    batchSize samples, which the fixed-size embeddedPosition array requires."""
    perm = np.arange(len(x))  # [0, 1, ..., len(x) - 1]
    np.random.shuffle(perm)
    x = x[perm]
    y = y[perm]
    numBatches = len(x) // batchSize
    for i in range(numBatches):
        start = i * batchSize
        end = start + batchSize
        batchX = np.array(x[start: end], dtype="int64")
        batchY = np.array(y[start: end], dtype="float32")
        yield batchX, batchY


# 5. Metric functions
def mean(item: list) -> float:
    """Mean of the elements of a list (0 for an empty list)."""
    res = sum(item) / len(item) if len(item) > 0 else 0
    return res


def accuracy(pred_y, true_y):
    """Accuracy for binary and multi-class classification (pred_y: predictions, true_y: ground truth)."""
    if isinstance(pred_y[0], list):
        pred_y = [item[0] for item in pred_y]
    corr = 0
    for i in range(len(pred_y)):
        if pred_y[i] == true_y[i]:
            corr += 1
    acc = corr / len(pred_y) if len(pred_y) > 0 else 0
    return acc


def binary_precision(pred_y, true_y, positive=1):
    """Binary precision; `positive` is the index of the positive class."""
    corr = 0
    pred_corr = 0
    for i in range(len(pred_y)):
        if pred_y[i] == positive:
            pred_corr += 1
            if pred_y[i] == true_y[i]:
                corr += 1
    prec = corr / pred_corr if pred_corr > 0 else 0
    return prec


def binary_recall(pred_y, true_y, positive=1):
    """Binary recall; `positive` is the index of the positive class."""
    corr = 0
    true_corr = 0
    for i in range(len(pred_y)):
        if true_y[i] == positive:
            true_corr += 1
            if pred_y[i] == true_y[i]:
                corr += 1
    rec = corr / true_corr if true_corr > 0 else 0
    return rec


def binary_f_beta(pred_y, true_y, beta=1.0, positive=1):
    """Binary F-beta score."""
    precision = binary_precision(pred_y, true_y, positive)
    recall = binary_recall(pred_y, true_y, positive)
    try:
        f_b = (1 + beta * beta) * precision * recall / (beta * beta * precision + recall)
    except ZeroDivisionError:
        f_b = 0
    return f_b


def multi_precision(pred_y, true_y, labels):
    """Multi-class precision (macro average over `labels`)."""
    if isinstance(pred_y[0], list):
        pred_y = [item[0] for item in pred_y]
    precisions = [binary_precision(pred_y, true_y, label) for label in labels]
    return mean(precisions)


def multi_recall(pred_y, true_y, labels):
    """Multi-class recall (macro average over `labels`)."""
    if isinstance(pred_y[0], list):
        pred_y = [item[0] for item in pred_y]
    recalls = [binary_recall(pred_y, true_y, label) for label in labels]
    return mean(recalls)


def multi_f_beta(pred_y, true_y, labels, beta=1.0):
    """Multi-class F-beta (macro average over `labels`)."""
    if isinstance(pred_y[0], list):
        pred_y = [item[0] for item in pred_y]
    f_betas = [binary_f_beta(pred_y, true_y, beta, label) for label in labels]
    return mean(f_betas)


def get_binary_metrics(pred_y, true_y, f_beta=1.0):
    """Binary metrics: (acc, recall, precision, f_beta)."""
    acc = accuracy(pred_y, true_y)
    recall = binary_recall(pred_y, true_y)
    precision = binary_precision(pred_y, true_y)
    f_beta = binary_f_beta(pred_y, true_y, f_beta)
    return acc, recall, precision, f_beta


def get_multi_metrics(pred_y, true_y, labels, f_beta=1.0):
    """Multi-class metrics: (acc, recall, precision, f_beta)."""
    acc = accuracy(pred_y, true_y)
    recall = multi_recall(pred_y, true_y, labels)
    precision = multi_precision(pred_y, true_y, labels)
    f_beta = multi_f_beta(pred_y, true_y, labels, f_beta)
    return acc, recall, precision, f_beta


# 6. Train the model
# Training and validation sets
trainReviews = data.trainReviews
trainLabels = data.trainLabels
evalReviews = data.evalReviews
evalLabels = data.evalLabels

wordEmbedding = data.wordEmbedding
labelList = data.labelList
embeddedPosition = mode_structure.fixedPositionEmbedding(config.batchSize, config.sequenceLength)  # one-hot positions

# Define the computation graph
with tf.Graph().as_default():
    session_conf = tf.ConfigProto(allow_soft_placement=True, log_device_placement=False)
    session_conf.gpu_options.allow_growth = True
    session_conf.gpu_options.per_process_gpu_memory_fraction = 0.9  # fraction of GPU memory to use
    sess = tf.Session(config=session_conf)

    with sess.as_default():
        transformer = mode_structure.Transformer(config, wordEmbedding)
        globalStep = tf.Variable(0, name="globalStep", trainable=False)
        # Optimizer with the configured learning rate
        optimizer = tf.train.AdamOptimizer(config.training.learningRate)
        # Compute the gradients as (gradient, variable) pairs
        gradsAndVars = optimizer.compute_gradients(transformer.loss)
        # Apply the gradients to the variables to get the training op
        trainOp = optimizer.apply_gradients(gradsAndVars, global_step=globalStep)

        # Summaries for TensorBoard
        for g, v in gradsAndVars:
            if g is not None:
                tf.summary.histogram("{}/grad/hist".format(v.name), g)
                tf.summary.scalar("{}/grad/sparsity".format(v.name), tf.nn.zero_fraction(g))

        outDir = os.path.abspath(os.path.join(os.path.curdir, "summarys"))
        print("Writing to {}\n".format(outDir))

        tf.summary.scalar("loss", transformer.loss)
        summaryOp = tf.summary.merge_all()

        trainSummaryWriter = tf.summary.FileWriter(os.path.join(outDir, "train"), sess.graph)
        evalSummaryWriter = tf.summary.FileWriter(os.path.join(outDir, "eval"), sess.graph)

        # Checkpoint saver (keeps the 5 most recent checkpoints); Saver.save needs an existing directory.
        # The lowercase path matches the one predict.py restores from.
        saver = tf.train.Saver(tf.global_variables(), max_to_keep=5)
        checkpointDir = "../model/transformer/model"
        os.makedirs(checkpointDir, exist_ok=True)

        # One way to save the model: as a SavedModel (pb file). SavedModelBuilder will not write
        # into an existing directory, so remove the old export together with its contents.
        savedModelPath = "../model/transformer/savedModel"
        if os.path.exists(savedModelPath):
            shutil.rmtree(savedModelPath)
        builder = tf.saved_model.builder.SavedModelBuilder(savedModelPath)

        # Initialize all variables
        sess.run(tf.global_variables_initializer())

        def trainStep(batchX, batchY):
            """One training step."""
            feed_dict = {
                transformer.inputX: batchX,
                transformer.inputY: batchY,
                transformer.dropoutKeepProb: config.model.dropoutKeepProb,
                transformer.embeddedPosition: embeddedPosition
            }
            _, summary, step, loss, predictions = sess.run(
                [trainOp, summaryOp, globalStep, transformer.loss, transformer.predictions],
                feed_dict)
            predictions = predictions.reshape(-1)  # binary predictions come back as [batch, 1]

            if config.numClasses == 1:
                acc, recall, prec, f_beta = get_binary_metrics(pred_y=predictions, true_y=batchY)
            elif config.numClasses > 1:
                acc, recall, prec, f_beta = get_multi_metrics(pred_y=predictions, true_y=batchY,
                                                              labels=labelList)

            trainSummaryWriter.add_summary(summary, step)
            return loss, acc, prec, recall, f_beta

        def devStep(batchX, batchY):
            """One validation step (dropout disabled)."""
            feed_dict = {
                transformer.inputX: batchX,
                transformer.inputY: batchY,
                transformer.dropoutKeepProb: 1.0,
                transformer.embeddedPosition: embeddedPosition
            }
            summary, step, loss, predictions = sess.run(
                [summaryOp, globalStep, transformer.loss, transformer.predictions],
                feed_dict)
            predictions = predictions.reshape(-1)

            if config.numClasses == 1:
                acc, recall, prec, f_beta = get_binary_metrics(pred_y=predictions, true_y=batchY)
            elif config.numClasses > 1:
                acc, recall, prec, f_beta = get_multi_metrics(pred_y=predictions, true_y=batchY,
                                                              labels=labelList)

            evalSummaryWriter.add_summary(summary, step)
            return loss, acc, prec, recall, f_beta

        for i in range(config.training.epoches):
            print("start training model")
            for batchTrain in nextBatch(trainReviews, trainLabels, config.batchSize):
                loss, acc, prec, recall, f_beta = trainStep(batchTrain[0], batchTrain[1])

                currentStep = tf.train.global_step(sess, globalStep)
                print("train: step: {}, loss: {}, acc: {}, recall: {}, precision: {}, f_beta: {}".format(
                    currentStep, loss, acc, recall, prec, f_beta))
                if currentStep % config.training.evaluateEvery == 0:
                    print("\nEvaluation:")
                    losses, accs, f_betas, precisions, recalls = [], [], [], [], []

                    for batchEval in nextBatch(evalReviews, evalLabels, config.batchSize):
                        loss, acc, precision, recall, f_beta = devStep(batchEval[0], batchEval[1])
                        losses.append(loss)
                        accs.append(acc)
                        f_betas.append(f_beta)
                        precisions.append(precision)
                        recalls.append(recall)

                    time_str = datetime.datetime.now().isoformat()
                    print("{}, step: {}, loss: {}, acc: {}, precision: {}, recall: {}, f_beta: {}".format(
                        time_str, currentStep, mean(losses), mean(accs), mean(precisions),
                        mean(recalls), mean(f_betas)))

                if currentStep % config.training.checkpointEvery == 0:
                    # The other way to save the model: checkpoint files
                    path = saver.save(sess, os.path.join(checkpointDir, "my-model"), global_step=currentStep)
                    print("Saved model checkpoint to {}\n".format(path))

        # Export the SavedModel. embeddedPosition must also be fed at inference time, so it is part of the signature.
        inputs = {"inputX": tf.saved_model.utils.build_tensor_info(transformer.inputX),
                  "keepProb": tf.saved_model.utils.build_tensor_info(transformer.dropoutKeepProb),
                  "embeddedPosition": tf.saved_model.utils.build_tensor_info(transformer.embeddedPosition)}
        outputs = {"predictions": tf.saved_model.utils.build_tensor_info(transformer.predictions)}

        prediction_signature = tf.saved_model.signature_def_utils.build_signature_def(
            inputs=inputs, outputs=outputs,
            method_name=tf.saved_model.signature_constants.PREDICT_METHOD_NAME)
        legacy_init_op = tf.group(tf.tables_initializer(), name="legacy_init_op")
        builder.add_meta_graph_and_variables(sess, [tf.saved_model.tag_constants.SERVING],
                                             signature_def_map={"predict": prediction_signature},
                                             legacy_init_op=legacy_init_op)
        builder.save()
```
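The loop-based binary metrics above can be cross-checked with a vectorized version. A minimal NumPy sketch; the function name `binary_metrics_np` is hypothetical. It flattens its inputs first, because sess.run returns the binary predictions with shape [batch, 1], and uses positive class 1 as in the defaults above.

```python
import numpy as np


def binary_metrics_np(pred_y, true_y, beta: float = 1.0, positive: int = 1):
    """Return (acc, recall, precision, f_beta), in the same order as get_binary_metrics."""
    pred = np.asarray(pred_y).reshape(-1)
    true = np.asarray(true_y).reshape(-1)
    tp = np.sum((pred == positive) & (true == positive))  # true positives
    acc = float(np.mean(pred == true)) if pred.size else 0.0
    precision = tp / np.sum(pred == positive) if np.any(pred == positive) else 0.0
    recall = tp / np.sum(true == positive) if np.any(true == positive) else 0.0
    denom = beta * beta * precision + recall
    f_beta = (1 + beta * beta) * precision * recall / denom if denom > 0 else 0.0
    return acc, float(recall), float(precision), float(f_beta)


pred = np.array([[1.0], [0.0], [1.0], [1.0]])  # shape [batch, 1], as returned by sess.run
true = np.array([1.0, 0.0, 0.0, 1.0])
print(binary_metrics_np(pred, true))
```

Here three of four predictions are correct (accuracy 0.75), both true positives are found (recall 1.0), and two of the three positive predictions are right (precision 2/3), giving F1 = 0.8.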

3.5 Prediction: predict.py

```python
# Author:yifan
import json

import tensorflow as tf

import mode_structure
import parameter_config

config = parameter_config.Config()
# One-hot position embedding for a single sequence: [1, sequenceLength, sequenceLength]
embeddedPositions = mode_structure.fixedPositionEmbedding(1, config.sequenceLength)

# 7. Prediction
# x = "this movie is full of references like mad max ii the wild one and many others the ladybug´s face it´s a clear reference or tribute to peter lorre this movie is a masterpiece we´ll talk much more about in the future"
# x = "his movie is the same as the third level movie. There's no place to look good"
x = "This film is not good"  # the model returns 1 (positive), which seems wrong
# x = "This film is bad"  # the model returns 0 (negative)

# Note: these two dictionaries must match the ones used to train the loaded model
with open("../data/wordJson/word2idx.json", "r", encoding="utf-8") as f:
    word2idx = json.load(f)
with open("../data/wordJson/label2idx.json", "r", encoding="utf-8") as f:  # contents: {"0": 0, "1": 1}
    label2idx = json.load(f)
idx2label = {value: key for key, value in label2idx.items()}

# Convert x into the index sequence xIds the model expects (whitespace split, as in training)
xIds = [word2idx.get(item, word2idx["UNK"]) for item in x.split()]
if len(xIds) >= config.sequenceLength:  # truncate to sequenceLength (200) ...
    xIds = xIds[:config.sequenceLength]
else:                                    # ... or pad with PAD
    xIds = xIds + [word2idx["PAD"]] * (config.sequenceLength - len(xIds))
print("input ids:", xIds)

graph = tf.Graph()
with graph.as_default():
    gpu_options = tf.GPUOptions(per_process_gpu_memory_fraction=0.333)
    session_conf = tf.ConfigProto(allow_soft_placement=True, log_device_placement=False, gpu_options=gpu_options)
    sess = tf.Session(config=session_conf)

    with sess.as_default():
        # Restore the model from the latest checkpoint
        checkpoint_file = tf.train.latest_checkpoint("../model/transformer/model/")
        saver = tf.train.import_meta_graph("{}.meta".format(checkpoint_file))
        saver.restore(sess, checkpoint_file)

        # Fetch the input placeholders that the output depends on from the restored graph.
        # Creating new tf.placeholder objects here does not work: they are not connected to the restored graph.
        inputX = graph.get_operation_by_name("inputX").outputs[0]
        dropoutKeepProb = graph.get_operation_by_name("dropoutKeepProb").outputs[0]
        embeddedPosition = graph.get_operation_by_name("embeddedPosition").outputs[0]

        # Fetch the output and run the prediction
        predictions = graph.get_tensor_by_name("output/predictions:0")
        pred = sess.run(predictions, feed_dict={inputX: [xIds], dropoutKeepProb: 1.0,
                                                embeddedPosition: embeddedPositions})[0]

        pred = [idx2label[int(item)] for item in pred]
        print(pred)
```
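The author notes that "This film is not good" is predicted as 1 (positive). One plausible contributor, offered as a hypothesis rather than a confirmed diagnosis: _genVocabulary drops stop words before building word2idx, so if the stop-word file contains "not" (NLTK's English stop-word list, for instance, does), that word is encoded as UNK at prediction time and the negation is lost. Capitalized words such as "This" may likewise map to UNK if the training text was lowercased during preprocessing. A minimal pure-Python sketch with a toy stop-word set and toy reviews (both assumptions; the helper names are hypothetical) shows the effect:

```python
from collections import Counter


def build_word2idx(reviews, stop_words, min_count=1):
    """Toy version of Dataset._genVocabulary: drop stop words, keep words seen >= min_count times."""
    counts = Counter(w for r in reviews for w in r if w not in stop_words)
    vocab = ["PAD", "UNK"] + [w for w, c in counts.most_common() if c >= min_count]
    return {w: i for i, w in enumerate(vocab)}


def encode(text, word2idx):
    """Map whitespace-separated tokens to ids, falling back to UNK."""
    return [word2idx.get(w, word2idx["UNK"]) for w in text.split()]


stop_words = {"this", "is", "not", "a"}  # toy list that, like NLTK's, contains "not"
reviews = [["this", "film", "is", "not", "good"], ["a", "good", "film"]]
word2idx = build_word2idx(reviews, stop_words)

ids = encode("This film is not good", word2idx)
idx2word = {i: w for w, i in word2idx.items()}
print([idx2word[i] for i in ids])  # ['UNK', 'film', 'UNK', 'UNK', 'good']
```

If this is the cause, keeping negation words out of the stop-word list (and applying the same lowercasing as the training preprocessing) would be the first thing to try.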

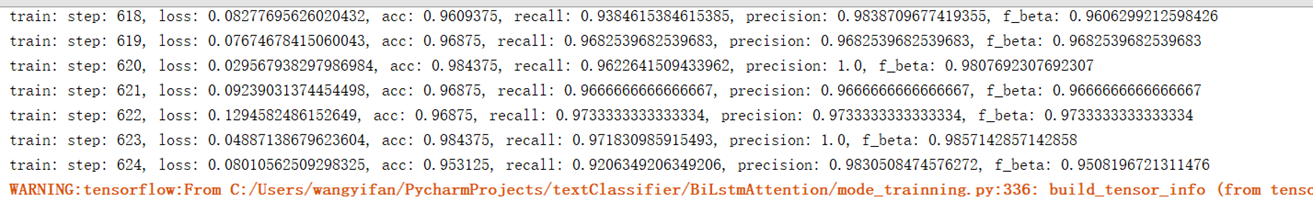

Result

The full code is available at: https://github.com/yifanhunter/NLP_textClassifier