[Text Classification 05] BiLSTM+Attention

Contents

- Overview

- Dataset

- Main code

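1. Overview

This text-classification series consists of about 8 articles. The code can be downloaded directly from GitHub and the training data from Baidu Cloud. Once both are imported into PyCharm, the project is ready to use. The series covers text classification based on word2vec pre-trained word vectors as well as on recent pre-trained models (ELMo, BERT, etc.). The full series is: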

- word2vec pre-trained word vectors
- textCNN model
- charCNN model
- Bi-LSTM model
- Bi-LSTM + Attention model
- Transformer model
- ELMo pre-trained model
- BERT pre-trained model

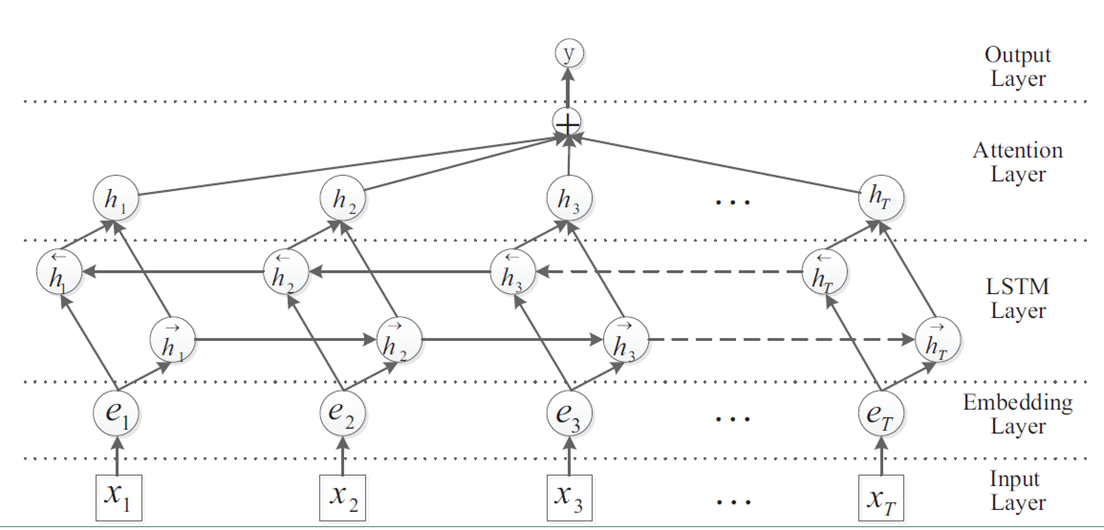

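Model structure

The Bi-LSTM + Attention model comes from the paper "Attention-Based Bidirectional Long Short-Term Memory Networks for Relation Classification".

Bi-LSTM + Attention adds an Attention layer on top of the Bi-LSTM model. The plain Bi-LSTM uses the output vector of the last time step as the feature vector and feeds it to a softmax classifier. With Attention, a weight is first computed for every time step, and the weighted sum of all time-step vectors becomes the feature vector that is fed to the classifier (softmax for multi-class; the code below uses a sigmoid output when numClasses = 1). In our experiments, adding Attention did improve the results. The model structure is shown in the figure.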
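For reference, the attention step can be summarized as below, using the paper's formulation as this article's code implements it. H = [h_1, ..., h_T] collects the outputs of the last Bi-LSTM layer, where each h_t is the element-wise sum of the forward and backward outputs (dimension d), w is a trainable vector, and T is the sequence length. The plain Bi-LSTM would instead use only h_T. The `attention` method in section 3.3 follows these steps.

```latex
\begin{aligned}
H &= [h_1, h_2, \dots, h_T] \in \mathbb{R}^{d \times T}, \qquad h_t = \overrightarrow{h_t} + \overleftarrow{h_t} \\
M &= \tanh(H) \\
\alpha &= \operatorname{softmax}\left(w^{\top} M\right) \in \mathbb{R}^{1 \times T} \\
r &= H \alpha^{\top} = \sum_{t=1}^{T} \alpha_t h_t \\
h^{*} &= \tanh(r)
\end{aligned}
```

h* is the sentence representation that goes into the final fully connected layer.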

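2. Dataset

The dataset is the IMDB movie-review dataset. There are three data files in the /data/rawData directory: unlabeledTrainData.tsv, labeledTrainData.tsv and testData.tsv. Text classification needs labeled data (labeledTrainData). Training the word2vec word vectors is unsupervised, however, so the unlabeled data can be used there as well.

Training data: https://pan.baidu.com/s/1-XEwx1ai8kkGsMagIFKX_g (extraction code: rtz8)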

3. Main code

3.1 Training configuration: parameter_config.py

```python
# Author:yifan

# 1 Training configuration
class TrainingConfig(object):
    epoches = 4
    evaluateEvery = 100
    checkpointEvery = 100
    learningRate = 0.001


class ModelConfig(object):
    embeddingSize = 200
    hiddenSizes = [256, 128]  # number of units in each Bi-LSTM layer
    dropoutKeepProb = 0.5
    l2RegLambda = 0.0


class Config(object):
    sequenceLength = 200  # roughly the mean length of all sequences
    batchSize = 128
    dataSource = "../data/preProcess/labeledTrain.csv"
    stopWordSource = "../data/english"
    numClasses = 1  # 1 for binary classification, otherwise the number of classes
    rate = 0.8  # fraction of the data used for training
    training = TrainingConfig()
    model = ModelConfig()


# instantiate the configuration object
config = Config()
```
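To see what these settings imply for a run, the short calculation below assumes the 25000 labeled reviews mentioned in get_train_data.py and reproduces the split rule of `_genTrainEvalData` and the batching rule of `nextBatch` (section 3.4). It is a back-of-the-envelope sketch, not output from an actual training run.

```python
# Back-of-the-envelope numbers for parameter_config.py.
# Assumption: 25000 labeled IMDB reviews, as noted in get_train_data.py.
numReviews = 25000
rate = 0.8            # Config.rate
batchSize = 128       # Config.batchSize
epoches = 4           # TrainingConfig.epoches
evaluateEvery = 100   # TrainingConfig.evaluateEvery

trainSize = int(numReviews * rate)            # split rule used by _genTrainEvalData
evalSize = numReviews - trainSize
batchesPerEpoch = trainSize // batchSize      # nextBatch drops the final partial batch
droppedPerEpoch = trainSize - batchesPerEpoch * batchSize
evalBatches = evalSize // batchSize           # batches seen at each evaluation
totalSteps = batchesPerEpoch * epoches        # value of globalStep after training
evalAtSteps = [s for s in range(1, totalSteps + 1) if s % evaluateEvery == 0]

print(trainSize, evalSize, batchesPerEpoch, droppedPerEpoch, evalBatches, totalSteps)
print(evalAtSteps)
# 20000 5000 156 32 39 624
# [100, 200, 300, 400, 500, 600]
```

Because checkpointEvery is also 100, checkpoints are written at the same six steps, and with `max_to_keep=5` only the last five are kept on disk.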

3.2 Loading the training data: get_train_data.py

```python
# Author:yifan
import json
from collections import Counter

import gensim
import numpy as np
import pandas as pd

import parameter_config


# 2 Data preprocessing class: builds the training and validation sets
class Dataset(object):
    def __init__(self, config):
        self.config = config
        self._dataSource = config.dataSource
        self._stopWordSource = config.stopWordSource

        self._sequenceLength = config.sequenceLength  # every input is padded/truncated to this fixed length
        self._embeddingSize = config.model.embeddingSize
        self._batchSize = config.batchSize
        self._rate = config.rate

        self.stopWordDict = {}

        self.trainReviews = []
        self.trainLabels = []

        self.evalReviews = []
        self.evalLabels = []

        self.wordEmbedding = None

        self.labelList = []

    def _readData(self, filePath):
        """
        Read the dataset from a CSV file (comments describe the file used here).
        """
        df = pd.read_csv(filePath)  # three columns: review, sentiment, rate

        if self.config.numClasses == 1:
            labels = df["sentiment"].tolist()  # 0/1 sentiment labels, 25000 rows
        elif self.config.numClasses > 1:
            labels = df["rate"].tolist()  # multi-class only; not reached with numClasses = 1

        review = df["review"].tolist()
        reviews = [line.strip().split() for line in review]  # split each review on whitespace

        return reviews, labels

    def _labelToIndex(self, labels, label2idx):
        """
        Convert labels to their index representation.
        """
        labelIds = [label2idx[label] for label in labels]  # for 0/1 labels, labelIds == labels
        return labelIds

    def _wordToIndex(self, reviews, word2idx):
        """Convert words to indices; out-of-vocabulary words map to UNK."""
        reviewIds = [[word2idx.get(item, word2idx["UNK"]) for item in review] for review in reviews]
        return reviewIds  # 25000 variable-length index lists

    def _genTrainEvalData(self, x, y, word2idx, rate):
        """Build the training and validation sets."""
        reviews = []
        for review in x:
            # truncate reviews longer than sequenceLength (200) and pad shorter ones with PAD
            if len(review) >= self._sequenceLength:
                reviews.append(review[:self._sequenceLength])
            else:
                reviews.append(review + [word2idx["PAD"]] * (self._sequenceLength - len(review)))

        # split into training and validation data according to rate
        trainIndex = int(len(x) * rate)

        trainReviews = np.asarray(reviews[:trainIndex], dtype="int64")
        trainLabels = np.array(y[:trainIndex], dtype="float32")

        evalReviews = np.asarray(reviews[trainIndex:], dtype="int64")
        evalLabels = np.array(y[trainIndex:], dtype="float32")

        return trainReviews, trainLabels, evalReviews, evalLabels

    def _getWordEmbedding(self, words):
        """
        Look up the pre-trained word2vec vector of every word in our vocabulary.
        Returns the words and their 200-dimensional vectors, with PAD (all zeros)
        and UNK (random vector) prepended.
        """
        wordVec = gensim.models.KeyedVectors.load_word2vec_format("../word2vec/word2Vec.bin", binary=True)
        vocab = []
        wordEmbedding = []

        # add "PAD" and "UNK" at indices 0 and 1
        vocab.append("PAD")
        vocab.append("UNK")
        wordEmbedding.append(np.zeros(self._embeddingSize))  # _embeddingSize is 200 here
        wordEmbedding.append(np.random.randn(self._embeddingSize))

        for word in words:
            try:
                vector = wordVec[word]  # KeyedVectors supports direct indexing
                vocab.append(word)
                wordEmbedding.append(vector)
            except KeyError:
                print(word + " is not in the word2vec vocabulary")

        return vocab, np.array(wordEmbedding)

    def _genVocabulary(self, reviews, labels):
        """Build the embedding matrix and the word-to-index mapping (the full dataset can be used)."""
        allWords = [word for review in reviews for word in review]  # 5738236 tokens from the 25000 reviews

        subWords = [word for word in allWords if word not in self.stopWordDict]  # remove stop words

        wordCount = Counter(subWords)  # word frequencies
        sortWordCount = sorted(wordCount.items(), key=lambda x: x[1], reverse=True)  # (word, count) by descending count
        # print(len(sortWordCount))  # 161330
        # print(sortWordCount[:4])  # [('movie', 41104), ('film', 36981), ('one', 24966), ('like', 19490)]

        words = [item[0] for item in sortWordCount if item[1] >= 5]  # drop words seen fewer than 5 times

        vocab, wordEmbedding = self._getWordEmbedding(words)
        self.wordEmbedding = wordEmbedding

        word2idx = dict(zip(vocab, list(range(len(vocab)))))  # e.g. {'PAD': 0, 'UNK': 1, 'movie': 2, ...}

        uniqueLabel = list(set(labels))  # deduplicated labels: just 0 and 1 here
        label2idx = dict(zip(uniqueLabel, list(range(len(uniqueLabel)))))  # here {0: 0, 1: 1}
        self.labelList = list(range(len(uniqueLabel)))

        # save the mappings as JSON so that inference can load them directly
        with open("../data/wordJson/word2idx.json", "w", encoding="utf-8") as f:
            json.dump(word2idx, f)

        with open("../data/wordJson/label2idx.json", "w", encoding="utf-8") as f:
            json.dump(label2idx, f)

        return word2idx, label2idx

    def _readStopWord(self, stopWordPath):
        """
        Read the stop words.
        """
        with open(stopWordPath, "r") as f:
            stopWords = f.read()
            stopWordList = stopWords.splitlines()
            # store the stop words as dict keys so that lookups are fast
            self.stopWordDict = dict(zip(stopWordList, list(range(len(stopWordList)))))

    def dataGen(self):
        """
        Initialize the training and validation sets.
        """
        # load the stop words
        self._readStopWord(self._stopWordSource)

        # load the dataset
        reviews, labels = self._readData(self._dataSource)

        # build the word-to-index mapping and the embedding matrix
        word2idx, label2idx = self._genVocabulary(reviews, labels)

        # convert labels and sentences to indices
        labelIds = self._labelToIndex(labels, label2idx)
        reviewIds = self._wordToIndex(reviews, word2idx)

        # build the training and validation sets
        trainReviews, trainLabels, evalReviews, evalLabels = self._genTrainEvalData(reviewIds, labelIds, word2idx,
                                                                                    self._rate)
        self.trainReviews = trainReviews
        self.trainLabels = trainLabels

        self.evalReviews = evalReviews
        self.evalLabels = evalLabels


# Usage (as in the training script):
# config = parameter_config.Config()
# data = Dataset(config)
# data.dataGen()
```
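The core of `_genVocabulary`, `_wordToIndex` and the padding in `_genTrainEvalData` can be tried without the IMDB files or the word2vec model. The sketch below uses two hypothetical helpers, `build_word2idx` and `to_fixed_length_ids`, on a toy corpus. Unlike the original, it does not drop words that are missing from word2vec, because no embedding file is loaded.

```python
from collections import Counter

PAD, UNK = "PAD", "UNK"


def build_word2idx(reviews, stop_words, min_count=5):
    """Hypothetical helper mirroring _genVocabulary (without the word2vec lookup)."""
    # count words after removing stop words
    counts = Counter(w for review in reviews for w in review if w not in stop_words)
    # most frequent first; keep words that occur at least min_count times
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    words = [w for w, c in ranked if c >= min_count]
    # PAD gets index 0 and UNK index 1, as in _getWordEmbedding
    vocab = [PAD, UNK] + words
    return {w: i for i, w in enumerate(vocab)}


def to_fixed_length_ids(review, word2idx, seq_len):
    """Hypothetical helper mirroring _wordToIndex plus the padding in _genTrainEvalData."""
    # unknown words (including removed stop words) map to UNK
    ids = [word2idx.get(w, word2idx[UNK]) for w in review]
    if len(ids) >= seq_len:
        return ids[:seq_len]                              # truncate long reviews
    return ids + [word2idx[PAD]] * (seq_len - len(ids))   # pad short ones


reviews = [["the", "movie", "was", "great"], ["great", "movie"], ["movie", "was", "bad"]]
word2idx = build_word2idx(reviews, stop_words={"the", "was"}, min_count=2)
print(word2idx)                                              # {'PAD': 0, 'UNK': 1, 'movie': 2, 'great': 3}
print(to_fixed_length_ids(reviews[0], word2idx, seq_len=6))  # [1, 2, 1, 3, 0, 0]
```

Note that stop words end up as UNK at index time, exactly as in `_wordToIndex`, because they never enter the vocabulary.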

3.3 Building the model: mode_structure.py

```python
import tensorflow as tf  # TensorFlow 1.x API (placeholders, tf.contrib)

import parameter_config

config = parameter_config.Config()


# 3 Build the Bi-LSTM + Attention model
class BiLSTMAttention(object):
    def __init__(self, config, wordEmbedding):
        # model inputs
        self.inputX = tf.placeholder(tf.int32, [None, config.sequenceLength], name="inputX")
        self.inputY = tf.placeholder(tf.int32, [None], name="inputY")

        self.dropoutKeepProb = tf.placeholder(tf.float32, name="dropoutKeepProb")

        # L2 loss
        l2Loss = tf.constant(0.0)

        # word embedding layer
        with tf.name_scope("embedding"):
            # initialize the embedding matrix with the pre-trained word vectors
            self.W = tf.Variable(tf.cast(wordEmbedding, dtype=tf.float32, name="word2vec"), name="W")
            # map the input word ids to vectors: [batch_size, sequence_length, embedding_size]
            self.embeddedWords = tf.nn.embedding_lookup(self.W, self.inputX)

        # two stacked bidirectional LSTM layers
        with tf.name_scope("Bi-LSTM"):
            for idx, hiddenSize in enumerate(config.model.hiddenSizes):
                with tf.name_scope("Bi-LSTM" + str(idx)):
                    # forward LSTM cell
                    lstmFwCell = tf.nn.rnn_cell.DropoutWrapper(
                        tf.nn.rnn_cell.LSTMCell(num_units=hiddenSize, state_is_tuple=True),
                        output_keep_prob=self.dropoutKeepProb)
                    # backward LSTM cell
                    lstmBwCell = tf.nn.rnn_cell.DropoutWrapper(
                        tf.nn.rnn_cell.LSTMCell(num_units=hiddenSize, state_is_tuple=True),
                        output_keep_prob=self.dropoutKeepProb)

                    # dynamic RNN: accepts per-example sequence lengths; without them the full length is used.
                    # outputs_ is a tuple (output_fw, output_bw), each [batch_size, max_time, hidden_size];
                    # fw and bw share the same hidden_size.
                    # self.current_state is the final state (state_fw, state_bw); each is an
                    # LSTMStateTuple (c, h) of [batch_size, hidden_size] tensors.
                    outputs_, self.current_state = tf.nn.bidirectional_dynamic_rnn(lstmFwCell, lstmBwCell,
                                                                                  self.embeddedWords, dtype=tf.float32,
                                                                                  scope="bi-lstm" + str(idx))

                    # concatenate fw and bw outputs: [batch_size, time_step, hidden_size * 2], input to the next layer
                    self.embeddedWords = tf.concat(outputs_, 2)

        # split the output of the last Bi-LSTM layer back into its forward and backward parts
        outputs = tf.split(self.embeddedWords, 2, -1)

        # as in the Bi-LSTM + Attention paper, add the forward and backward outputs element-wise
        with tf.name_scope("Attention"):
            H = outputs[0] + outputs[1]

            # attention output
            output = self.attention(H)
            outputSize = config.model.hiddenSizes[-1]

        # fully connected output layer
        with tf.name_scope("output"):
            outputW = tf.get_variable(
                "outputW",
                shape=[outputSize, config.numClasses],
                initializer=tf.contrib.layers.xavier_initializer())

            outputB = tf.Variable(tf.constant(0.1, shape=[config.numClasses]), name="outputB")
            l2Loss += tf.nn.l2_loss(outputW)
            l2Loss += tf.nn.l2_loss(outputB)
            self.logits = tf.nn.xw_plus_b(output, outputW, outputB, name="logits")

            if config.numClasses == 1:
                self.predictions = tf.cast(tf.greater_equal(self.logits, 0.0), tf.float32, name="predictions")
            elif config.numClasses > 1:
                self.predictions = tf.argmax(self.logits, axis=-1, name="predictions")

        # loss: sigmoid (binary) cross-entropy for numClasses == 1, softmax cross-entropy otherwise
        with tf.name_scope("loss"):
            if config.numClasses == 1:
                losses = tf.nn.sigmoid_cross_entropy_with_logits(
                    logits=self.logits,
                    labels=tf.cast(tf.reshape(self.inputY, [-1, 1]), dtype=tf.float32))
            elif config.numClasses > 1:
                losses = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=self.logits, labels=self.inputY)

            self.loss = tf.reduce_mean(losses) + config.model.l2RegLambda * l2Loss

    def attention(self, H):
        """
        Use attention to obtain the sentence representation.
        """
        # number of units in the last LSTM layer
        hiddenSize = config.model.hiddenSizes[-1]

        # trainable attention weight vector
        W = tf.Variable(tf.random_normal([hiddenSize], stddev=0.1))

        # non-linear transform of the Bi-LSTM output
        M = tf.tanh(H)

        # M is [batch_size, time_step, hidden_size]; reshape it to [batch_size * time_step, hidden_size]
        # before multiplying by W, so newM is [batch_size * time_step, 1]: one score per time step
        newM = tf.matmul(tf.reshape(M, [-1, hiddenSize]), tf.reshape(W, [-1, 1]))

        # reshape newM to [batch_size, time_step]
        restoreM = tf.reshape(newM, [-1, config.sequenceLength])

        # softmax over the time steps: [batch_size, time_step]
        self.alpha = tf.nn.softmax(restoreM)

        # weighted sum of H with alpha as a batched matrix product: [batch_size, hidden_size, 1]
        r = tf.matmul(tf.transpose(H, [0, 2, 1]), tf.reshape(self.alpha, [-1, config.sequenceLength, 1]))

        # squeeze to two dimensions: squeezeR = [batch_size, hidden_size]
        squeezeR = tf.reshape(r, [-1, hiddenSize])

        sentenceRepren = tf.tanh(squeezeR)

        # optional dropout on the attention output
        output = tf.nn.dropout(sentenceRepren, self.dropoutKeepProb)

        return output
```
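The reshape/matmul sequence in `attention()` is a compact way to compute, for each review, a weighted sum of the time-step vectors in H. The NumPy sketch below replays the same operations on small random tensors (the toy sizes and random values are assumptions, not values from the model) and checks the result against an explicit weighted sum.

```python
import numpy as np

# Toy sizes (assumption); the article uses batchSize=128, sequenceLength=200, hiddenSizes[-1]=128
batch, T, hidden = 2, 5, 4
rng = np.random.RandomState(0)
H = rng.randn(batch, T, hidden)   # stands in for the summed fw + bw Bi-LSTM outputs
w = 0.1 * rng.randn(hidden)       # stands in for the trainable attention vector W

# Same reshapes and products as attention()
M = np.tanh(H)
newM = M.reshape(-1, hidden) @ w.reshape(-1, 1)           # [batch * T, 1]: one score per time step
restoreM = newM.reshape(-1, T)                             # [batch, T]
expScores = np.exp(restoreM - restoreM.max(axis=1, keepdims=True))
alpha = expScores / expScores.sum(axis=1, keepdims=True)   # softmax over the time steps
r = np.transpose(H, (0, 2, 1)) @ alpha.reshape(-1, T, 1)   # [batch, hidden, 1]
squeezeR = r.reshape(-1, hidden)                           # [batch, hidden]

# The same quantity written as an explicit weighted sum over time steps
weightedSum = np.einsum("bt,bth->bh", alpha, H)

print(squeezeR.shape, np.allclose(squeezeR, weightedSum))  # (2, 4) True
```

The `tf.reshape(newM, [-1, config.sequenceLength])` step only works because every input is padded or truncated to exactly `sequenceLength`. No mask is applied, so PAD positions still receive a small share of the attention weight.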

3.4 Training the model: mode_trainning.py

1 import os

2 import datetime

3 import numpy as np

4 import tensorflow as tf

5 import parameter_config

6 import get_train_data

7 import mode_structure

8

9 #获取前些模块的数据

10 config =parameter_config.Config()

11 data = get_train_data.Dataset(config)

12 data.dataGen()

13

14 #4生成batch数据集

15 def nextBatch(x, y, batchSize):

16 # 生成batch数据集,用生成器的方式输出

17 perm = np.arange(len(x)) #返回[0 1 2 ... len(x)]的数组

18 np.random.shuffle(perm) #乱序

19 x = x[perm]

20 y = y[perm]

21 numBatches = len(x) // batchSize

22

23 for i in range(numBatches):

24 start = i * batchSize

25 end = start + batchSize

26 batchX = np.array(x[start: end], dtype="int64")

27 batchY = np.array(y[start: end], dtype="float32")

28 yield batchX, batchY

29

30 # 5 定义计算metrics的函数

31 """

32 定义各类性能指标

33 """

34 def mean(item: list) -> float:

35 """

36 计算列表中元素的平均值

37 :param item: 列表对象

38 :return:

39 """

40 res = sum(item) / len(item) if len(item) > 0 else 0

41 return res

42

43 def accuracy(pred_y, true_y):

44 """

45 计算二类和多类的准确率

46 :param pred_y: 预测结果

47 :param true_y: 真实结果

48 :return:

49 """

50 if isinstance(pred_y[0], list):

51 pred_y = [item[0] for item in pred_y]

52 corr = 0

53 for i in range(len(pred_y)):

54 if pred_y[i] == true_y[i]:

55 corr += 1

56 acc = corr / len(pred_y) if len(pred_y) > 0 else 0

57 return acc

58

59 def binary_precision(pred_y, true_y, positive=1):

60 """

61 二类的精确率计算

62 :param pred_y: 预测结果

63 :param true_y: 真实结果

64 :param positive: 正例的索引表示

65 :return:

66 """

67 corr = 0

68 pred_corr = 0

69 for i in range(len(pred_y)):

70 if pred_y[i] == positive:

71 pred_corr += 1

72 if pred_y[i] == true_y[i]:

73 corr += 1

74

75 prec = corr / pred_corr if pred_corr > 0 else 0

76 return prec

77

78 def binary_recall(pred_y, true_y, positive=1):

79 """

80 二类的召回率

81 :param pred_y: 预测结果

82 :param true_y: 真实结果

83 :param positive: 正例的索引表示

84 :return:

85 """

86 corr = 0

87 true_corr = 0

88 for i in range(len(pred_y)):

89 if true_y[i] == positive:

90 true_corr += 1

91 if pred_y[i] == true_y[i]:

92 corr += 1

93

94 rec = corr / true_corr if true_corr > 0 else 0

95 return rec

96

97 def binary_f_beta(pred_y, true_y, beta=1.0, positive=1):

98 """

99 二类的f beta值

100 :param pred_y: 预测结果

101 :param true_y: 真实结果

102 :param beta: beta值

103 :param positive: 正例的索引表示

104 :return:

105 """

106 precision = binary_precision(pred_y, true_y, positive)

107 recall = binary_recall(pred_y, true_y, positive)

108 try:

109 f_b = (1 + beta * beta) * precision * recall / (beta * beta * precision + recall)

110 except:

111 f_b = 0

112 return f_b

113

114 def multi_precision(pred_y, true_y, labels):

115 """

116 多类的精确率

117 :param pred_y: 预测结果

118 :param true_y: 真实结果

119 :param labels: 标签列表

120 :return:

121 """

122 if isinstance(pred_y[0], list):

123 pred_y = [item[0] for item in pred_y]

124

125 precisions = [binary_precision(pred_y, true_y, label) for label in labels]

126 prec = mean(precisions)

127 return prec

128

129 def multi_recall(pred_y, true_y, labels):

130 """

131 多类的召回率

132 :param pred_y: 预测结果

133 :param true_y: 真实结果

134 :param labels: 标签列表

135 :return:

136 """

137 if isinstance(pred_y[0], list):

138 pred_y = [item[0] for item in pred_y]

139

140 recalls = [binary_recall(pred_y, true_y, label) for label in labels]

141 rec = mean(recalls)

142 return rec

143

144 def multi_f_beta(pred_y, true_y, labels, beta=1.0):

145 """

146 多类的f beta值

147 :param pred_y: 预测结果

148 :param true_y: 真实结果

149 :param labels: 标签列表

150 :param beta: beta值

151 :return:

152 """

153 if isinstance(pred_y[0], list):

154 pred_y = [item[0] for item in pred_y]

155

156 f_betas = [binary_f_beta(pred_y, true_y, beta, label) for label in labels]

157 f_beta = mean(f_betas)

158 return f_beta

159

160 def get_binary_metrics(pred_y, true_y, f_beta=1.0):

161 """

162 得到二分类的性能指标

163 :param pred_y:

164 :param true_y:

165 :param f_beta:

166 :return:

167 """

168 acc = accuracy(pred_y, true_y)

169 recall = binary_recall(pred_y, true_y)

170 precision = binary_precision(pred_y, true_y)

171 f_beta = binary_f_beta(pred_y, true_y, f_beta)

172 return acc, recall, precision, f_beta

173

174 def get_multi_metrics(pred_y, true_y, labels, f_beta=1.0):

175 """

176 得到多分类的性能指标

177 :param pred_y:

178 :param true_y:

179 :param labels:

180 :param f_beta:

181 :return:

182 """

183 acc = accuracy(pred_y, true_y)

184 recall = multi_recall(pred_y, true_y, labels)

185 precision = multi_precision(pred_y, true_y, labels)

186 f_beta = multi_f_beta(pred_y, true_y, labels, f_beta)

187 return acc, recall, precision, f_beta

188

189 # 6 训练模型

190 # 生成训练集和验证集

191 trainReviews = data.trainReviews

192 trainLabels = data.trainLabels

193 evalReviews = data.evalReviews

194 evalLabels = data.evalLabels

195 wordEmbedding = data.wordEmbedding

196 labelList = data.labelList

197

198 # 定义计算图

199 with tf.Graph().as_default():

200

201 session_conf = tf.ConfigProto(allow_soft_placement=True, log_device_placement=False)

202 session_conf.gpu_options.allow_growth=True

203 session_conf.gpu_options.per_process_gpu_memory_fraction = 0.9 # 配置gpu占用率

204

205 sess = tf.Session(config=session_conf)

206

207 # 定义会话

208 with sess.as_default():

209 bilstmattention = mode_structure.BiLSTMAttention(config, wordEmbedding)

210 globalStep = tf.Variable(0, name="globalStep", trainable=False)

211 # 定义优化函数,传入学习速率参数

212 optimizer = tf.train.AdamOptimizer(config.training.learningRate)

213 # 计算梯度,得到梯度和变量

214 gradsAndVars = optimizer.compute_gradients(bilstmattention.loss)

215 # 将梯度应用到变量下,生成训练器

216 trainOp = optimizer.apply_gradients(gradsAndVars, global_step=globalStep)

217

218 # 用summary绘制tensorBoard

219 gradSummaries = []

220 for g, v in gradsAndVars:

221 if g is not None:

222 tf.summary.histogram("{}/grad/hist".format(v.name), g)

223 tf.summary.scalar("{}/grad/sparsity".format(v.name), tf.nn.zero_fraction(g))

224

225 outDir = os.path.abspath(os.path.join(os.path.curdir, "summarys"))

226 print("Writing to {}\n".format(outDir))

227

228 lossSummary = tf.summary.scalar("loss", bilstmattention.loss)

229 summaryOp = tf.summary.merge_all()

230

231 trainSummaryDir = os.path.join(outDir, "train")

232 trainSummaryWriter = tf.summary.FileWriter(trainSummaryDir, sess.graph)

233

234 evalSummaryDir = os.path.join(outDir, "eval")

235 evalSummaryWriter = tf.summary.FileWriter(evalSummaryDir, sess.graph)

236

237

238 # 初始化所有变量

239 saver = tf.train.Saver(tf.global_variables(), max_to_keep=5)

240

241 # 保存模型的一种方式,保存为pb文件

242 savedModelPath = "../model/bilstm-atten/savedModel"

243 if os.path.exists(savedModelPath):

244 os.rmdir(savedModelPath)

245 builder = tf.saved_model.builder.SavedModelBuilder(savedModelPath)

246

247 sess.run(tf.global_variables_initializer())

248

249 def trainStep(batchX, batchY):

250 """

251 训练函数

252 """

253 feed_dict = {

254 bilstmattention.inputX: batchX,

255 bilstmattention.inputY: batchY,

256 bilstmattention.dropoutKeepProb: config.model.dropoutKeepProb

257 }

258 _, summary, step, loss, predictions = sess.run(

259 [trainOp, summaryOp, globalStep, bilstmattention.loss, bilstmattention.predictions],

260 feed_dict)

261 timeStr = datetime.datetime.now().isoformat()

262

263 if config.numClasses == 1:

264 acc, recall, prec, f_beta = get_binary_metrics(pred_y=predictions, true_y=batchY)

265

266 elif config.numClasses > 1:

267 acc, recall, prec, f_beta = get_multi_metrics(pred_y=predictions, true_y=batchY,

268 labels=labelList)

269

270 trainSummaryWriter.add_summary(summary, step)

271

272 return loss, acc, prec, recall, f_beta

273

274 def devStep(batchX, batchY):

275 """

276 验证函数

277 """

278 feed_dict = {

279 bilstmattention.inputX: batchX,

280 bilstmattention.inputY: batchY,

281 bilstmattention.dropoutKeepProb: 1.0

282 }

283 summary, step, loss, predictions = sess.run(

284 [summaryOp, globalStep, bilstmattention.loss, bilstmattention.predictions],

285 feed_dict)

286

287 if config.numClasses == 1:

288

289 acc, precision, recall, f_beta = get_binary_metrics(pred_y=predictions, true_y=batchY)

290 elif config.numClasses > 1:

291 acc, precision, recall, f_beta = get_multi_metrics(pred_y=predictions, true_y=batchY, labels=labelList)

292

293 evalSummaryWriter.add_summary(summary, step)

294

295 return loss, acc, precision, recall, f_beta

296

297 for i in range(config.training.epoches):

298 # 训练模型

299 print("start training model")

300 for batchTrain in nextBatch(trainReviews, trainLabels, config.batchSize):

301 loss, acc, prec, recall, f_beta = trainStep(batchTrain[0], batchTrain[1])

302

303 currentStep = tf.train.global_step(sess, globalStep)

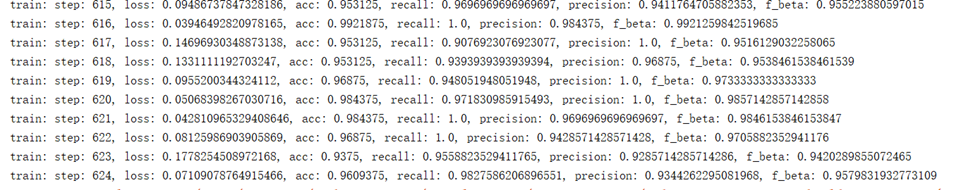

304 print("train: step: {}, loss: {}, acc: {}, recall: {}, precision: {}, f_beta: {}".format(

305 currentStep, loss, acc, recall, prec, f_beta))

306 if currentStep % config.training.evaluateEvery == 0:

307 print("\nEvaluation:")

308

309 losses = []

310 accs = []

311 f_betas = []

312 precisions = []

313 recalls = []

314

315 for batchEval in nextBatch(evalReviews, evalLabels, config.batchSize):

316 loss, acc, precision, recall, f_beta = devStep(batchEval[0], batchEval[1])

317 losses.append(loss)

318 accs.append(acc)

319 f_betas.append(f_beta)

320 precisions.append(precision)

321 recalls.append(recall)

322

323 time_str = datetime.datetime.now().isoformat()

324 print("{}, step: {}, loss: {}, acc: {},precision: {}, recall: {}, f_beta: {}".format(time_str, currentStep, mean(losses),

325 mean(accs), mean(precisions),

326 mean(recalls), mean(f_betas)))

327

328 if currentStep % config.training.checkpointEvery == 0:

329 # 保存模型的另一种方法,保存checkpoint文件

330 path = saver.save(sess, "../model/bilstm-atten/model/my-model", global_step=currentStep)

331 print("Saved model checkpoint to {}\n".format(path))

332

333 inputs = {"inputX": tf.saved_model.utils.build_tensor_info(bilstmattention.inputX),

334 "keepProb": tf.saved_model.utils.build_tensor_info(bilstmattention.dropoutKeepProb)}

335

336 outputs = {"predictions": tf.saved_model.utils.build_tensor_info(bilstmattention.predictions)}

337

338 prediction_signature = tf.saved_model.signature_def_utils.build_signature_def(inputs=inputs, outputs=outputs,

339 method_name=tf.saved_model.signature_constants.PREDICT_METHOD_NAME)

340 legacy_init_op = tf.group(tf.tables_initializer(), name="legacy_init_op")

341 builder.add_meta_graph_and_variables(sess, [tf.saved_model.tag_constants.SERVING],

342 signature_def_map={"predict": prediction_signature}, legacy_init_op=legacy_init_op)

343

344 builder.save()
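The metric helpers above follow the usual definitions: precision is TP / (predicted positives), recall is TP / (actual positives), and F_beta = (1 + beta^2) · P · R / (beta^2 · P + R). The hand-checkable example below uses made-up labels and two standalone hypothetical helpers (`binary_counts`, `f_beta`) instead of the functions above, so that it runs on its own.

```python
def binary_counts(pred_y, true_y, positive=1):
    """Hypothetical helper: true positives, predicted positives, actual positives."""
    tp = sum(1 for p, t in zip(pred_y, true_y) if p == positive and t == positive)
    predPos = sum(1 for p in pred_y if p == positive)
    truePos = sum(1 for t in true_y if t == positive)
    return tp, predPos, truePos


def f_beta(precision, recall, beta=1.0):
    """F-beta score; returns 0 when precision and recall are both 0."""
    denom = beta * beta * precision + recall
    return (1 + beta * beta) * precision * recall / denom if denom > 0 else 0.0


pred_y = [1, 1, 0, 1, 0]   # made-up predictions
true_y = [1, 0, 0, 1, 1]   # made-up ground truth

tp, predPos, truePos = binary_counts(pred_y, true_y)
acc = sum(p == t for p, t in zip(pred_y, true_y)) / len(pred_y)   # 3 of 5 correct
precision = tp / predPos                                          # 2 of 3 predicted positives are right
recall = tp / truePos                                             # 2 of 3 actual positives are found
print(acc, round(precision, 4), round(recall, 4), round(f_beta(precision, recall), 4))
# 0.6 0.6667 0.6667 0.6667
```

With beta > 1 the score leans toward recall; for example precision 0.5 and recall 1.0 give F2 = 2.5 / 3 ≈ 0.833, but F1 ≈ 0.667.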

3.5 Prediction: predict.py

```python
# Author:yifan
import json

import tensorflow as tf  # TensorFlow 1.x API

import parameter_config

config = parameter_config.Config()

# 7 Prediction
x = "this movie is full of references like mad max ii the wild one and many others the ladybug´s face it´s a clear reference or tribute to peter lorre this movie is a masterpiece we´ll talk much more about in the future"
# x = "his movie is the same as the third level movie. There's no place to look good"
# x = "This film is not good"  # predicted label: 0
# x = "This film is bad"  # predicted label: 0

# Note: these two mappings must be the ones produced together with the model being loaded
with open("../data/wordJson/word2idx.json", "r", encoding="utf-8") as f:
    word2idx = json.load(f)

with open("../data/wordJson/label2idx.json", "r", encoding="utf-8") as f:
    label2idx = json.load(f)
idx2label = {value: key for key, value in label2idx.items()}

# convert the text to ids and pad/truncate it to sequenceLength, as during training
xIds = [word2idx.get(item, word2idx["UNK"]) for item in x.split(" ")]
if len(xIds) >= config.sequenceLength:
    xIds = xIds[:config.sequenceLength]
else:
    xIds = xIds + [word2idx["PAD"]] * (config.sequenceLength - len(xIds))

graph = tf.Graph()
with graph.as_default():
    gpu_options = tf.GPUOptions(per_process_gpu_memory_fraction=0.333)
    session_conf = tf.ConfigProto(allow_soft_placement=True, log_device_placement=False, gpu_options=gpu_options)
    sess = tf.Session(config=session_conf)

    with sess.as_default():
        checkpoint_file = tf.train.latest_checkpoint("../model/bilstm-atten/model/")
        saver = tf.train.import_meta_graph("{}.meta".format(checkpoint_file))
        saver.restore(sess, checkpoint_file)

        # input tensors that the output depends on
        inputX = graph.get_operation_by_name("inputX").outputs[0]
        dropoutKeepProb = graph.get_operation_by_name("dropoutKeepProb").outputs[0]

        # output tensor
        predictions = graph.get_tensor_by_name("output/predictions:0")

        pred = sess.run(predictions, feed_dict={inputX: [xIds], dropoutKeepProb: 1.0})[0]

# with numClasses = 1, pred is a one-element float array such as [1.]
pred = [idx2label[int(item)] for item in pred]
print(pred)
```
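Two details decide what `print(pred)` shows. First, JSON object keys are always strings, so the integer keys of the `label2idx` written by `_genVocabulary` come back as "0" and "1", and `idx2label` maps an integer index to a string label. Second, with `numClasses = 1` the model predicts 1 when the logit is at least 0, which is the same as a sigmoid probability of at least 0.5. The sketch below illustrates both with a hypothetical `logit_to_label` helper and made-up logits.

```python
import json
import math

# label2idx as built by _genVocabulary for the 0/1 IMDB sentiment labels
label2idx = {0: 0, 1: 1}
# round-trip through JSON, as happens between training and predict.py
loaded = json.loads(json.dumps(label2idx))
idx2label = {value: key for key, value in loaded.items()}
print(loaded, idx2label)   # {'0': 0, '1': 1} {0: '0', 1: '1'}


def logit_to_label(logit):
    """Hypothetical helper: numClasses == 1 thresholds the logit at 0."""
    index = int(logit >= 0.0)
    return idx2label[index]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


for logit in (2.3, -0.7):   # made-up logits
    print(logit, round(sigmoid(logit), 3), logit_to_label(logit))
```

So a positive review printed by predict.py shows up as ['1'] (a string), not as the integer 1.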

Results

The full code is available at: https://github.com/yifanhunter/NLP_textClassifier