34. Flannel network component

GitHub - flannel-io/flannel: flannel is a network fabric for containers, designed for Kubernetes

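| A network service for k8s, open-sourced by CoreOS. Its purpose is to let pods on different hosts of a k8s cluster communicate with each other. It relies on etcd to maintain IP address allocation and assigns every node a distinct IP address range |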
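The per-node address ranges can be pictured with a short Python sketch. It is only an illustration: the cluster network is the FLANNEL_NETWORK value that appears later in these notes, while the node names, the /24 lease size and the sequential hand-out order are assumptions, not how flannel necessarily picks subnets.

```python
import ipaddress

# Assumed inputs: the cluster network matches FLANNEL_NETWORK from the
# experiment in these notes; node names and /24 leases are illustrative.
CLUSTER_NETWORK = ipaddress.ip_network("10.244.0.0/16")
NODES = ["master1", "node1", "node2"]


def allocate_subnets(network, nodes, prefixlen=24):
    """Give each node the next unused /prefixlen block of the cluster network."""
    pool = network.subnets(new_prefix=prefixlen)
    return {node: next(pool) for node in nodes}


leases = allocate_subnets(CLUSTER_NETWORK, NODES)
for node, subnet in leases.items():
    print(f"{node}: {subnet}")
```

Because every lease is a different block of the same /16, a pod IP alone is enough to tell which node hosts the pod.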

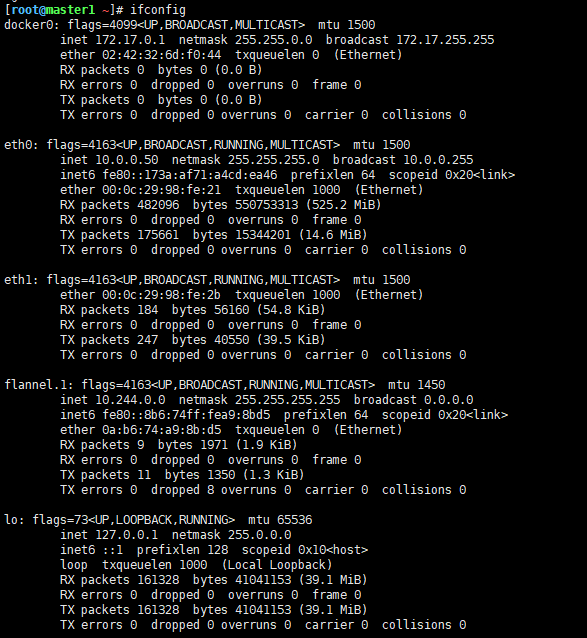

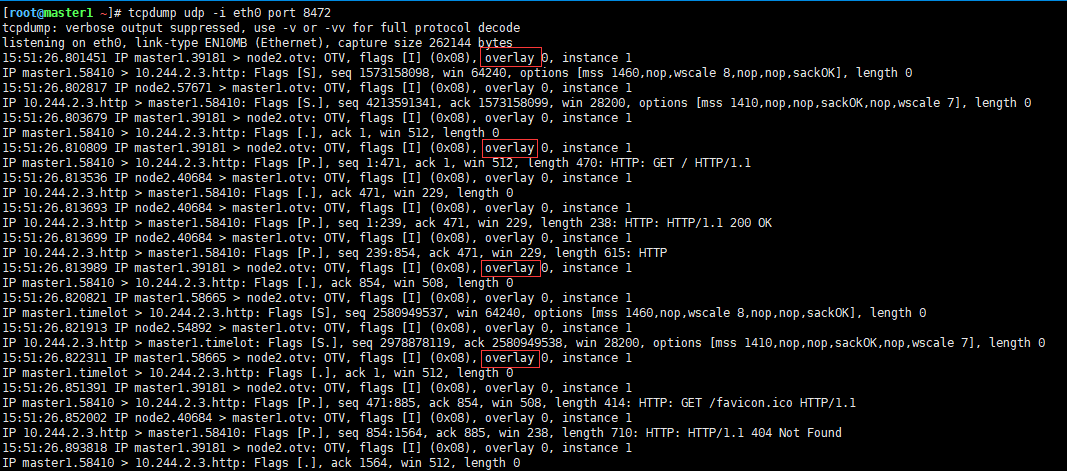

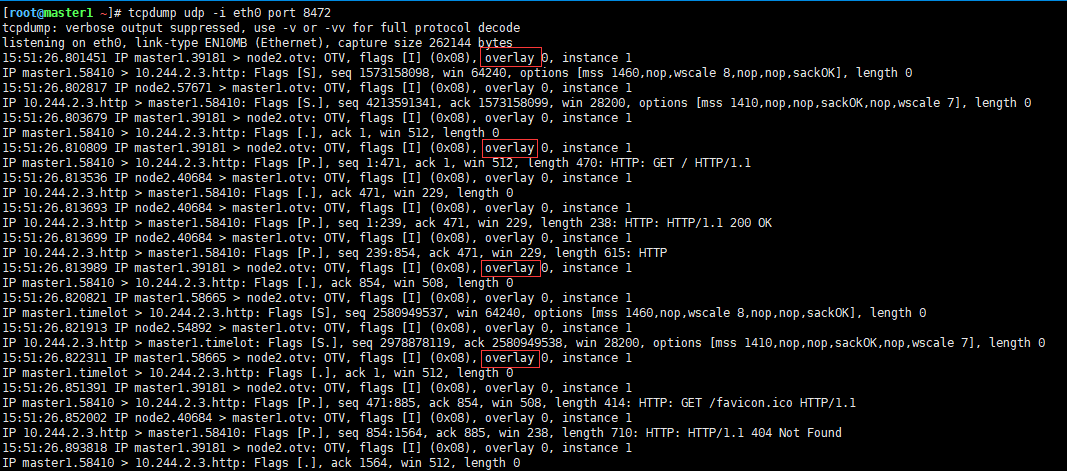

Traffic leaves through eth0 as UDP, on port 8472

34.1 flannel network modes

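| Flannel network modes (backends): Flannel currently has three implementations, UDP / VXLAN / host-gw |

| UDP: early versions of Flannel used UDP encapsulation to forward packets across hosts; its security and performance are somewhat lacking |

| VXLAN: the Linux kernel added VXLAN support in v3.7.0 at the end of 2012, so newer versions of Flannel moved from UDP to VXLAN. VXLAN is essentially a tunneling protocol that builds a virtual layer-2 network on top of a layer-3 network. Flannel's network model is now a VXLAN-based overlay network, and vxlan is the currently recommended backend |

| Host-gw: short for Host Gateway. Packets are forwarded by creating, on every node, routes to the container subnets of the other nodes. This requires all nodes to sit in the same layer-2 LAN, so it does not suit networks that change frequently or fairly large environments, but its performance is better |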
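To make the host-gw idea concrete, the sketch below builds the routes such a backend would need on one node. The node IPs and pod subnets are the ones that appear in the experiment in these notes; treating "same IP subnet" as a stand-in for "same layer-2 LAN", and the helper name `host_gw_routes`, are simplifying assumptions for illustration only.

```python
import ipaddress

# Node IP and pod subnet per node, as read from the experiment output in
# these notes; the pairing is an assumption reconstructed from that output.
NODES = {
    "master1": ("10.0.0.50", "10.244.0.0/24"),
    "node1": ("10.0.0.51", "10.244.1.0/24"),
    "node2": ("10.0.0.52", "10.244.2.0/24"),
}
LAN = ipaddress.ip_network("10.0.0.0/24")


def host_gw_routes(local, nodes, lan, dev="eth0"):
    """Return the `ip route` commands host-gw would need on node `local`."""
    routes = []
    for name, (node_ip, pod_subnet) in nodes.items():
        if name == local:
            continue  # the local pod subnet is reached through the bridge
        if ipaddress.ip_address(node_ip) not in lan:
            # A next hop must be directly reachable, hence the L2 requirement.
            raise ValueError(f"{name} is not on the local LAN")
        routes.append(f"ip route add {pod_subnet} via {node_ip} dev {dev}")
    return routes


for command in host_gw_routes("master1", NODES, LAN):
    print(command)
```

No encapsulation is involved: the peer node itself is the next hop, which is why the mode is fast but breaks once a router sits between the nodes.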

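| cni0: a bridge device. Every pod that is created gets a veth pair: one end is eth0 inside the pod, the other end is a port on the cni0 bridge. All traffic the pod sends from eth0 arrives at that port on cni0. The IP address of cni0 is the first address of the subnet allocated to the node |

| flannel.1: the overlay network device, which handles VXLAN packets (encapsulation and decapsulation). Pod traffic between different nodes is sent from this overlay device to the peer node through a tunnel |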
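The encapsulation done by flannel.1 also explains why the overlay devices in the experiment output report mtu 1450 while eth0 reports 1500. A rough calculation, assuming an IPv4 underlay without VLAN tags (header sizes are the standard ones, the function name is made up for this sketch):

```python
# Bytes added around every inner frame by VXLAN over IPv4.
OUTER_IPV4_HEADER = 20
OUTER_UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14


def overlay_mtu(underlay_mtu):
    """MTU left for pod traffic once the VXLAN overhead is subtracted."""
    overhead = (OUTER_IPV4_HEADER + OUTER_UDP_HEADER
                + VXLAN_HEADER + INNER_ETHERNET_HEADER)
    return underlay_mtu - overhead


print(overlay_mtu(1500))
```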

34.2 flannel experiment

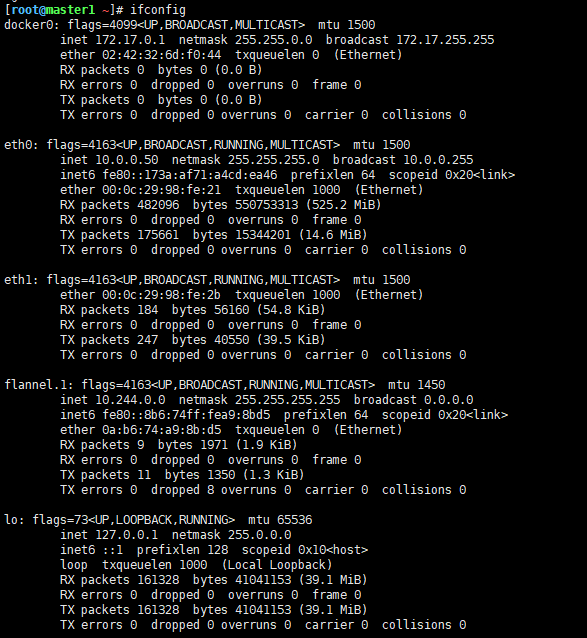

| [root@master1 ~] |

| docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500 |

| inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255 |

| ether 02:42:32:6d:f0:44 txqueuelen 0 (Ethernet) |

| RX packets 0 bytes 0 (0.0 B) |

| RX errors 0 dropped 0 overruns 0 frame 0 |

| TX packets 0 bytes 0 (0.0 B) |

| TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0 |

| |

| eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500 |

| inet 10.0.0.50 netmask 255.255.255.0 broadcast 10.0.0.255 |

| inet6 fe80::173a:af71:a4cd:ea46 prefixlen 64 scopeid 0x20<link> |

| ether 00:0c:29:98:fe:21 txqueuelen 1000 (Ethernet) |

| RX packets 482096 bytes 550753313 (525.2 MiB) |

| RX errors 0 dropped 0 overruns 0 frame 0 |

| TX packets 175661 bytes 15344201 (14.6 MiB) |

| TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0 |

| |

| eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500 |

| ether 00:0c:29:98:fe:2b txqueuelen 1000 (Ethernet) |

| RX packets 184 bytes 56160 (54.8 KiB) |

| RX errors 0 dropped 0 overruns 0 frame 0 |

| TX packets 247 bytes 40550 (39.5 KiB) |

| TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0 |

| |

| flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450 |

| inet 10.244.0.0 netmask 255.255.255.255 broadcast 0.0.0.0 |

| inet6 fe80::8b6:74ff:fea9:8bd5 prefixlen 64 scopeid 0x20<link> |

| ether 0a:b6:74:a9:8b:d5 txqueuelen 0 (Ethernet) |

| RX packets 9 bytes 1971 (1.9 KiB) |

| RX errors 0 dropped 0 overruns 0 frame 0 |

| TX packets 11 bytes 1350 (1.3 KiB) |

| TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0 |

| |

| lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536 |

| inet 127.0.0.1 netmask 255.0.0.0 |

| inet6 ::1 prefixlen 128 scopeid 0x10<host> |

| loop txqueuelen 1000 (Local Loopback) |

| RX packets 161328 bytes 41041153 (39.1 MiB) |

| RX errors 0 dropped 0 overruns 0 frame 0 |

| TX packets 161328 bytes 41041153 (39.1 MiB) |

| TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0 |

| |

| [root@master1 ~] |

| udp UNCONN 0 0 *:8472 *:* |

| [root@master1 ~] |

| Kernel IP routing table |

| Destination Gateway Genmask Flags Metric Ref Use Iface |

| 0.0.0.0 10.0.0.2 0.0.0.0 UG 100 0 0 eth0 |

| 10.0.0.0 0.0.0.0 255.255.255.0 U 100 0 0 eth0 |

| 10.244.1.0 10.244.1.0 255.255.255.0 UG 0 0 0 flannel.1 |

| 10.244.2.0 10.244.2.0 255.255.255.0 UG 0 0 0 flannel.1 |

| 172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0 |

| [root@master1 ~] |

| apiVersion: apps/v1 |

| kind: Deployment |

| metadata: |

|   name: nginx-deployment |

|   labels: |

|     app: nginx |

| spec: |

|   replicas: 3 |

|   selector: |

|     matchLabels: |

|       app: nginx |

|   template: |

|     metadata: |

|       labels: |

|         app: nginx |

|     spec: |

|       containers: |

|       - name: nginx |

|         image: nginx:latest |

|         ports: |

|         - containerPort: 80 |

| |

| [root@master1 ~] |

| apiVersion: v1 |

| kind: Service |

| metadata: |

|   name: nginx-service |

|   labels: |

|     app: nginx |

| spec: |

|   ports: |

|   - port: 88 |

|     targetPort: 80 |

|   selector: |

|     app: nginx |

|   type: NodePort |

| [root@master1 ~] |

| |

| [root@master1 ~] |

| NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE |

| kubernetes ClusterIP 10.254.0.1 <none> 443/TCP 14m |

| nginx-service NodePort 10.254.112.145 <none> 88:32095/TCP 3m52s |

| [root@master1 ~] |

| /run/flannel |

| /var/lib/docker/overlay2/37cdd3a3925063f7467587ceff307c24851cf8e2f6f477b0713d7e27d903ab7c/diff/flannel |

| /var/lib/docker/overlay2/b6fedea8403fe56e2d8fe9806cb0bf56fbe1f2f07b989d010fe0de8edf1b0865/diff/run/flannel |

| /var/lib/docker/overlay2/b6fedea8403fe56e2d8fe9806cb0bf56fbe1f2f07b989d010fe0de8edf1b0865/merged/run/flannel |

| /opt/cni/bin/flannel |

| [root@master1 ~] |

| FLANNEL_NETWORK=10.244.0.0/16 |

| FLANNEL_SUBNET=10.244.0.1/24 |

| FLANNEL_MTU=1450 |

| FLANNEL_IPMASQ=true |
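The four FLANNEL_* values above tie the earlier descriptions together, which a few lines of Python can check. This is a sketch over the text as printed here, not a tool shipped with flannel; `parse_env` is a hypothetical helper.

```python
import ipaddress

# The environment-style output shown above, copied as text.
SUBNET_ENV = """\
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
"""


def parse_env(text):
    """Parse KEY=VALUE lines into a dict, skipping blanks and comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        env[key] = value
    return env


env = parse_env(SUBNET_ENV)
network = ipaddress.ip_network(env["FLANNEL_NETWORK"])
bridge = ipaddress.ip_interface(env["FLANNEL_SUBNET"])

print("node subnet:", bridge.network)
print("bridge address:", bridge.ip)
print("inside cluster network:", bridge.network.subnet_of(network))
```

FLANNEL_SUBNET carries a host address rather than a network address: it is the first address of this node's /24, the one the notes say is given to cni0.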

34.3 flannel vxlan configuration

| [root@master1 ~] |

| |

| |

| |

| |

| apiVersion: v1 |

| data: |

|   cni-conf.json: | |

|     { |

|       "name": "cbr0", |

|       "plugins": [ |

|         { |

|           "type": "flannel", |

|           "delegate": { |

|             "hairpinMode": true, |

|             "isDefaultGateway": true |

|           } |

|         }, |

|         { |

|           "type": "portmap", |

|           "capabilities": { |

|             "portMappings": true |

|           } |

|         } |

|       ] |

|     } |

|   net-conf.json: | |

|     { |

|       "Network": "10.244.0.0/16", |

|       "Backend": { |

|         "Type": "vxlan" |

|       } |

|     } |

| kind: ConfigMap |

| metadata: |

|   annotations: |

|     kubectl.kubernetes.io/last-applied-configuration: | |

|       {"apiVersion":"v1","data":{"cni-conf.json":"{\n \"name\": \"cbr0\",\n \"plugins\": [\n {\n \"type\": \"flannel\",\n \"delegate\": {\n \"hairpinMode\": true,\n \"isDefaultGateway\": true\n }\n },\n {\n \"type\": \"portmap\",\n \"capabilities\": {\n \"portMappings\": true\n }\n }\n ]\n}\n","net-conf.json":"{\n \"Network\": \"10.244.0.0/16\",\n \"Backend\": {\n \"Type\": \"vxlan\"\n }\n}\n"},"kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app":"flannel","tier":"node"},"name":"kube-flannel-cfg","namespace":"kube-system"}} |

|   creationTimestamp: "2022-12-31T07:41:36Z" |

|   labels: |

|     app: flannel |

|     tier: node |

|   name: kube-flannel-cfg |

|   namespace: kube-system |

|   resourceVersion: "663" |

|   selfLink: /api/v1/namespaces/kube-system/configmaps/kube-flannel-cfg |

|   uid: d31a75e8-dc0e-45a6-8c46-df5778344cb5 |
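The backend in use is selected by the `Backend.Type` field of `net-conf.json` in this ConfigMap. As a small sketch of reading and changing that field (the helper names are hypothetical, and the accepted set is limited to the three modes these notes describe), one could write:

```python
import json

# net-conf.json as it appears in the ConfigMap above.
NET_CONF = """
{
  "Network": "10.244.0.0/16",
  "Backend": {
    "Type": "vxlan"
  }
}
"""

# Only the three modes covered in these notes; not an exhaustive list.
BACKENDS_IN_THESE_NOTES = {"udp", "vxlan", "host-gw"}


def backend_type(net_conf_text):
    """Return the configured backend, rejecting values outside these notes."""
    backend = json.loads(net_conf_text)["Backend"]["Type"]
    if backend not in BACKENDS_IN_THESE_NOTES:
        raise ValueError(f"unexpected backend: {backend}")
    return backend


def with_backend(net_conf_text, new_type):
    """Return a copy of the config text with a different backend type."""
    conf = json.loads(net_conf_text)
    conf["Backend"]["Type"] = new_type
    return json.dumps(conf, indent=2)


print(backend_type(NET_CONF))
print(with_backend(NET_CONF, "host-gw"))
```

A changed config would still have to be written back to the ConfigMap and picked up by the flannel pods; that part is outside this sketch.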

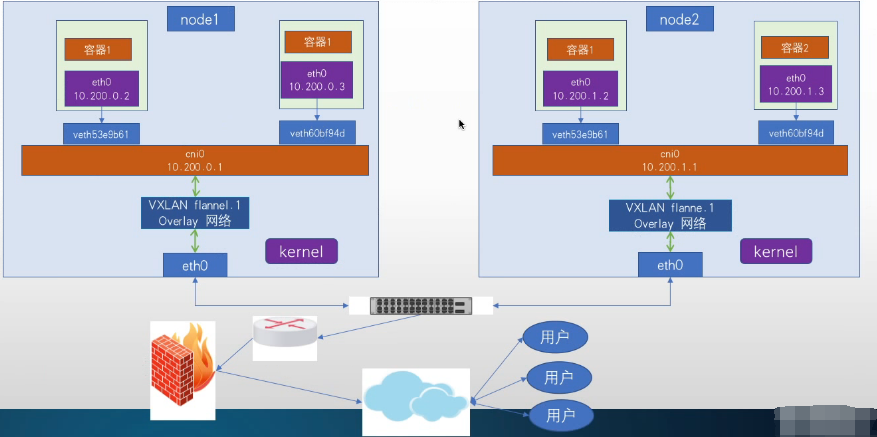

34.5 flannel vxlan architecture diagram

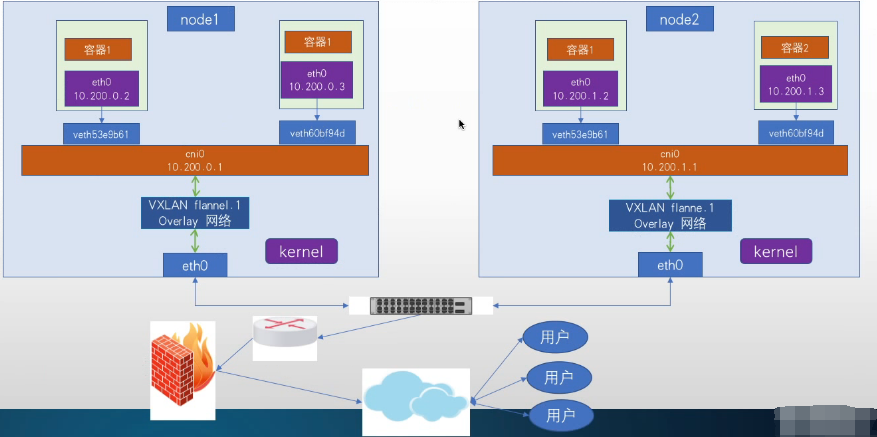

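1. A pod on node1 wants to reach a pod on node2. Every container has an eth0 interface, and the host has a matching veth interface for it, attached to the cni0 bridge. The packet goes through cni0 to the flannel.1 overlay device, where it is encapsulated, and then leaves through the host's eth0 on UDP port 8472; the next hop is the NIC of the destination host

2. The packet arrives at the peer's eth0 and is handed to flannel.1, which decapsulates it to see which pod IP it is destined for; it then goes through the cni0 bridge and the matching veth to reach the container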
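What "encapsulation" adds in front of the inner frame can be sketched with the standard 8-byte VXLAN header layout (flags, reserved bits, 24-bit VNI, reserved byte). The VNI value 1 is an assumption read off the device name flannel.1, port 8472 is the UDP port seen in the socket listing in these notes, and the outer UDP/IP headers are left to the kernel and not modeled here.

```python
import struct

VXLAN_PORT = 8472        # UDP port seen in the experiment output
VNI = 1                  # assumption: taken from the device name flannel.1
VNI_VALID_FLAG = 0x08    # the "I" flag: the VNI field is valid


def vxlan_header(vni):
    """Pack the 8-byte VXLAN header for the given 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VNI_VALID_FLAG << 24, vni << 8)


def encapsulate(inner_frame, vni=VNI):
    """Prefix an inner Ethernet frame with a VXLAN header (UDP payload)."""
    return vxlan_header(vni) + inner_frame


def decapsulate(udp_payload):
    """Split a UDP payload into (vni, inner_frame)."""
    flags_word, vni_word = struct.unpack("!II", udp_payload[:8])
    if not flags_word & (VNI_VALID_FLAG << 24):
        raise ValueError("VNI flag not set")
    return vni_word >> 8, udp_payload[8:]


payload = encapsulate(b"inner ethernet frame")
print(payload[:8].hex())
print(decapsulate(payload))
```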

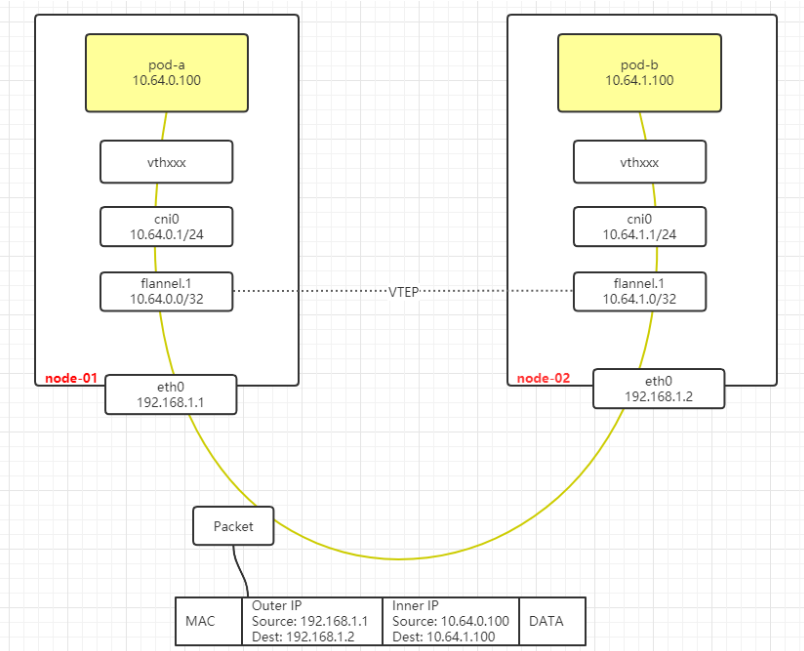

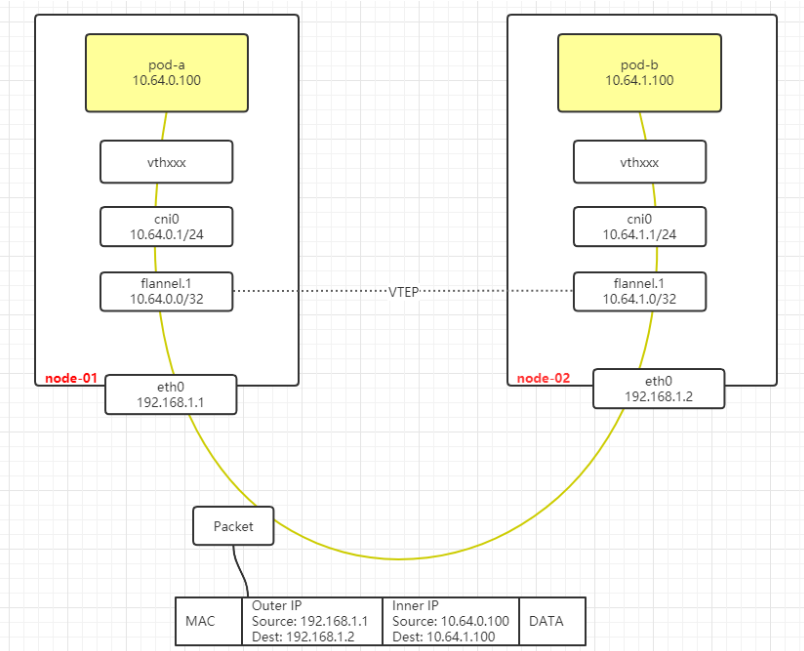

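Cross-node pod communication

Communication flow:

- pod-a accesses pod-b. Because the two IPs are not in the same subnet, the data first goes to the default gateway, which is cni0 (10.64.0.1)

- The static routes on the node show that 10.64.1.0/24 points to flannel.1

- flannel.1 uses the VXLAN protocol to wrap the original IP packet into a layer-2 frame by adding the destination MAC address

- The raw VXLAN frame cannot travel over the physical layer-2 network, so flannel.1 (the Linux kernel supports VXLAN; this step is done by the kernel) encapsulates the frame in a UDP packet and sends it over the physical network to node-02

- node-02 sees the VXLAN header in the received UDP packet and hands it to flannel.1, which decapsulates it to recover the original data

- flannel.1 forwards it to cni0 according to the directly connected route

- cni0 forwards it to pod-b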
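The routing decision in this flow is an ordinary longest-prefix match. The sketch below replays it in Python against the routing table printed on master1 in section 34.2 (so the addresses are 10.244.x.x rather than the 10.64.x.x used in the list above); it mimics the kernel lookup only loosely and ignores metrics.

```python
import ipaddress

# (destination, gateway, device) rows copied from the master1 routing table.
ROUTES = [
    ("0.0.0.0/0", "10.0.0.2", "eth0"),
    ("10.0.0.0/24", None, "eth0"),
    ("10.244.1.0/24", "10.244.1.0", "flannel.1"),
    ("10.244.2.0/24", "10.244.2.0", "flannel.1"),
    ("172.17.0.0/16", None, "docker0"),
]


def lookup(dst):
    """Return (device, gateway) of the most specific route matching dst."""
    addr = ipaddress.ip_address(dst)
    matches = []
    for prefix, gateway, dev in ROUTES:
        net = ipaddress.ip_network(prefix)
        if addr in net:
            matches.append((net.prefixlen, dev, gateway))
    _, dev, gateway = max(matches, key=lambda m: m[0])
    return dev, gateway


print(lookup("10.244.2.4"))   # a pod on node2
print(lookup("10.0.0.51"))    # another node on the LAN
print(lookup("8.8.8.8"))      # everything else
```

Only destinations inside another node's pod subnet are steered to flannel.1; node-to-node and external traffic keeps using eth0 directly.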

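34.6 flannel communication flow

1. The source pod sends a request. At this point the source IP is the IP of the pod's eth0, the source MAC is the MAC of the pod's eth0, the destination IP is the IP of the destination pod, and the destination MAC is the MAC of the gateway (cni0)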

| tcpdump -nn -vvv -i veth91d6855 -nn -vvv ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 |

| |

| |

| tcpdump -nn -vvv -i vethe2908f0c -nn -vvv ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 -w aa.pcap |

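2. The packet is sent through the veth pair to the gateway cni0, which sees that the destination MAC is its own. cni0 then checks the destination IP: if the packet belongs to the same bridge it is forwarded directly, otherwise it is sent to flannel.1. At this point the packet headers are as follows

| Source IP: source pod IP |

| Destination IP: destination pod IP |

| Source MAC: source pod MAC |

| Destination MAC: cni0 MAC |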

| |

| tcpdump -nn -vvv -i cni0 -nn -vvv ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 |

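3. On reaching flannel.1 the packet is accepted as addressed to it, and the routing table is matched. The inner part of the overlay packet is built first: mainly, the destination MAC is rewritten to the MAC of flannel.1 on the destination pod's node, and the source MAC to the MAC of flannel.1 on the current host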
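The two lookups behind this step can be sketched as plain dictionaries. The FDB entries are the ones printed on master1 in this section; the neighbor table is not shown in these notes, so its entries here are an assumption reconstructed from the route gateways and the flannel.1 MAC addresses in the output.

```python
# Assumed neighbor entries on master1: route gateway -> remote flannel.1 MAC.
NEIGHBORS = {
    "10.244.1.0": "2a:d7:30:a8:8c:27",
    "10.244.2.0": "0e:91:58:e5:59:c0",
}

# FDB entries as printed in this section: remote flannel.1 MAC -> node IP.
FDB = {
    "0e:91:58:e5:59:c0": "10.0.0.52",
    "2a:d7:30:a8:8c:27": "10.0.0.51",
}


def resolve_tunnel(gateway):
    """Return (inner destination MAC, outer destination IP) for a gateway."""
    inner_dst_mac = NEIGHBORS[gateway]   # used for the inner Ethernet header
    outer_dst_ip = FDB[inner_dst_mac]    # used for the outer IP header
    return inner_dst_mac, outer_dst_ip


print(resolve_tunnel("10.244.2.0"))
```

The inner destination MAC therefore serves as the key that tells the kernel which node's eth0 address to put on the outer packet.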

| |

| [root@master1 ~] |

| 0e:91:58:e5:59:c0 dst 10.0.0.52 self permanent |

| 2a:d7:30:a8:8c:27 dst 10.0.0.51 self permanent |

| [root@master1 ~] |

| NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES |

| nginx-deployment-585449566-drqvg 1/1 Running 0 113m 10.244.2.4 node2 <none> <none> |

| nginx-deployment-585449566-j99kt 1/1 Running 0 113m 10.244.2.3 node2 <none> <none> |

| nginx-deployment-585449566-r966g 1/1 Running 0 113m 10.244.1.3 node1 <none> <none> |

| [root@master1 ~] |

| root@nginx-deployment-585449566-drqvg:/ |

| |

| root@nginx-deployment-585449566-drqvg:/ |

| NIC statistics: |

| peer_ifindex: 9 |

| |

| [root@node2 ~] |

| 1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000 |

| link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 |

| 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000 |

| link/ether 00:0c:29:bd:8e:52 brd ff:ff:ff:ff:ff:ff |

| 3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000 |

| link/ether 00:0c:29:bd:8e:5c brd ff:ff:ff:ff:ff:ff |

| 4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default |

| link/ether 02:42:79:c9:c4:0d brd ff:ff:ff:ff:ff:ff |

| 5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT group default |

| link/ether 0e:91:58:e5:59:c0 brd ff:ff:ff:ff:ff:ff |

| 6: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000 |

| link/ether 16:d1:46:3a:de:ee brd ff:ff:ff:ff:ff:ff |

| 7: vetha18db6f3@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default |

| link/ether 4e:fb:54:9c:0c:43 brd ff:ff:ff:ff:ff:ff link-netnsid 0 |

| 8: vethe2c60553@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default |

| link/ether 12:e3:9e:78:38:ef brd ff:ff:ff:ff:ff:ff link-netnsid 1 |

| 9: vethe2908f0c@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default |

| link/ether 0a:e9:7b:e1:f6:a1 brd ff:ff:ff:ff:ff:ff link-netnsid 2 |

| |

| |

| tcpdump -nn -vvv -i vethe2908f0c -nn -vvv ! port 22 and ! port 2379 and ! port 6443 and ! port 10250 and ! arp and ! port 53 |
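The veth name used in the capture comes from matching the pod's `peer_ifindex: 9` against the interface list on node2. A small Python sketch of that matching, run over a few lines copied from the listing above (the function name is made up for this example):

```python
import re

# Interface lines copied from the node2 listing above (flags shortened).
IP_LINK_OUTPUT = """\
6: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP
7: vetha18db6f3@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 master cni0
8: vethe2c60553@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 master cni0
9: vethe2908f0c@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 master cni0
"""


def interface_by_index(ip_link_text, index):
    """Return the interface name with the given index, or None."""
    for line in ip_link_text.splitlines():
        match = re.match(r"^(\d+):\s+([^:@\s]+)", line)
        if match and int(match.group(1)) == index:
            return match.group(2)
    return None


print(interface_by_index(IP_LINK_OUTPUT, 9))
```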

Then ping the Baidu domain from the container on node2 and check whether the packets can be captured

| root@nginx-deployment-585449566-drqvg:/ |

Capture taken on node2