27. HPA

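27.1 Introduction to Pod Scaling

The number of pod replicas is adjusted dynamically according to the current pod load. During business peaks, the replica count is scaled out automatically so that requests to the pods are answered as quickly as possible.

During off-peak periods, pods are scaled in to reduce cost and improve efficiency.

Public clouds also support elastic scaling at the node level.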

27.2 Scaling Out with the scale Command

root@k8s-master1:~/k8s-data/yaml/dubbo

NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deployment         1/1     1            1           6d21h
tomcat-app1-deployment   1/1     1            1           6d22h

root@k8s-master1:~/k8s-data/yaml/dubbo
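The scale command itself is not shown in the listing above. As a rough sketch, a manual scale-out of one of the listed Deployments could be assembled as follows. The helper name `build_scale_command`, the replica count 3, and the namespace `demo` are assumptions for illustration; only the `kubectl scale` subcommand and its `--replicas` and `-n` flags are taken from kubectl itself.

```python
import shlex
from typing import List


def build_scale_command(deployment: str, replicas: int, namespace: str = "default") -> List[str]:
    """Build the argv for a manual `kubectl scale` call (hypothetical helper)."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    return [
        "kubectl", "scale",
        f"deployment/{deployment}",
        f"--replicas={replicas}",
        "-n", namespace,
    ]


# Assumed values: scale tomcat-app1-deployment to 3 replicas in namespace "demo".
cmd = build_scale_command("tomcat-app1-deployment", 3, namespace="demo")
print(shlex.join(cmd))
# On a machine with cluster access, the list could be passed to subprocess.run(cmd, check=True).
```

A manual scale like this sets a fixed replica count once; it does not react to load, which is the gap the autoscaling controllers in the following sections fill.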

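27.3 Types of Autoscaling Controllers

Horizontal Pod Autoscaler (HPA): adjusts the number of pod replicas based on pod resource utilization; see 27.4.

Vertical Pod Autoscaler (VPA): adjusts the resource requests and limits of individual pods based on pod resource utilization; it should not be used together with HPA on the same CPU or memory metric.

Cluster Autoscaler (CA): adds or removes nodes based on node resource usage in the cluster, so that CPU and memory are available for creating pods.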

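27.4 Introduction to the HPA Controller

The Horizontal Pod Autoscaling (HPA) controller automatically controls the number of pods running in a k8s cluster, based on predefined thresholds and the pods' current resource utilization (automatic horizontal elastic scaling).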

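HPA behavior is tuned through the following kube-controller-manager flags:

--horizontal-pod-autoscaler-sync-period: how often the HPA controller queries metrics and syncs the replica count; default 15s.

--horizontal-pod-autoscaler-downscale-stabilization: how far back the controller looks at earlier recommendations before scaling in, which prevents replica counts from flapping; default 5m.

--horizontal-pod-autoscaler-cpu-initialization-period: the period after pod start during which CPU samples may be skipped; default 5m.

--horizontal-pod-autoscaler-initial-readiness-delay: the period after pod start during which readiness changes are treated as initial readiness; default 30s.

--horizontal-pod-autoscaler-tolerance: the minimum deviation of the actual-to-target metric ratio from 1.0 before the controller considers scaling; default 0.1.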
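To illustrate what --horizontal-pod-autoscaler-downscale-stabilization does, here is a simplified sketch: the controller remembers recent replica recommendations and, when scaling in, acts on the highest one still inside the window. The class name `DownscaleStabilizer` and the timestamps are hypothetical, and the real controller applies further rules that are not modeled here.

```python
from collections import deque
from typing import Deque, Tuple


class DownscaleStabilizer:
    """Return the highest replica recommendation seen within the window (simplified model)."""

    def __init__(self, window_seconds: float = 300.0) -> None:
        self.window_seconds = window_seconds
        self._history: Deque[Tuple[float, int]] = deque()  # (timestamp, recommendation)

    def stabilize(self, now: float, recommended: int) -> int:
        self._history.append((now, recommended))
        # Forget recommendations that have fallen out of the stabilization window.
        while self._history and now - self._history[0][0] > self.window_seconds:
            self._history.popleft()
        return max(replicas for _, replicas in self._history)


stabilizer = DownscaleStabilizer(window_seconds=300)
print(stabilizer.stabilize(now=0, recommended=10))    # 10
print(stabilizer.stabilize(now=60, recommended=4))    # 10: the earlier peak is still in the window
print(stabilizer.stabilize(now=301, recommended=4))   # 4: the peak at t=0 has expired
```

Because the maximum is taken, a higher recommendation takes effect immediately while a lower one only takes effect after the window has passed, which is why scale-in lags scale-out.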

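Trigger condition: avg(CurrentPodsConsumption) / Target > 1.1 or < 0.9. That is, the metric values of the N pods are summed and divided by the number of pods to get the average, which is then divided by the target threshold. A ratio above 1.1 triggers a scale-out and a ratio below 0.9 triggers a scale-in; the 0.1 margin is the tolerance.

Formula: TargetNumOfPods = ceil(sum(CurrentPodsCPUUtilization) / Target)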
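The trigger condition and the formula can be combined into a small calculation. This is a minimal sketch, assuming per-pod CPU utilization values in percent; the function name `desired_replicas` and the sample numbers are illustrative and not part of any Kubernetes API.

```python
import math
from typing import Sequence


def desired_replicas(pod_utilizations: Sequence[float], target: float, tolerance: float = 0.1) -> int:
    """Apply TargetNumOfPods = ceil(sum(utilization) / target), skipping changes within tolerance."""
    current = len(pod_utilizations)
    if current == 0 or target <= 0:
        raise ValueError("need at least one pod and a positive target")
    total = sum(pod_utilizations)
    ratio = (total / current) / target  # avg(CurrentPodsConsumption) / Target
    if abs(ratio - 1.0) <= tolerance:
        return current  # inside the 0.9 to 1.1 band: no scaling
    return math.ceil(total / target)


print(desired_replicas([90, 90, 90], target=60))      # 5: ratio 1.5, ceil(270 / 60)
print(desired_replicas([62, 63, 61], target=60))      # 3: ratio about 1.03, within tolerance
print(desired_replicas([20, 20, 20, 20], target=60))  # 2: ratio about 0.33, ceil(80 / 60)
```

The result is still subject to the minReplicas and maxReplicas bounds configured on the HPA object.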

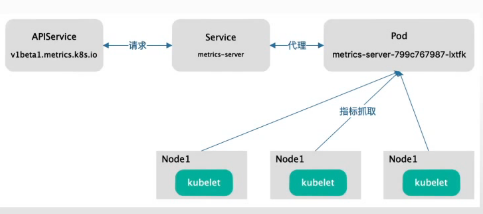

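Metric data requires metrics-server to be deployed; HPA uses metrics-server as its data source.

The HPA controller was introduced in k8s 1.1. Early versions used the Heapster component to collect pod metrics; starting with k8s 1.11, Metrics Server performs the collection. The collected data is exposed through aggregated APIs such as metrics.k8s.io (served by Metrics Server), custom.metrics.k8s.io, and external.metrics.k8s.io (the latter two are served by metrics adapters), which the HPA controller queries in order to scale pods out or in based on the utilization of a given resource.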

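27.5 Deploying metrics-server

metrics-server is the source of container resource metrics for Kubernetes' built-in autoscaling pipelines. It is not part of the core control plane and has to be deployed as an add-on.

metrics-server collects resource metrics from the kubelet on each node and exposes them through the Metrics API in the Kubernetes apiserver, for use by the Horizontal Pod Autoscaler and the Vertical Pod Autoscaler. The same data can be viewed with kubectl top node and kubectl top pod.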
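As an illustration of what the Metrics API returns, the sketch below parses a PodMetricsList document of the kind served under /apis/metrics.k8s.io/v1beta1 and converts CPU quantities to millicores. The payload is a hand-written sample, the pod name `tomcat-app1-example` is hypothetical, and the helper names are assumptions; only the suffixes n, u, m and plain cores are handled.

```python
import json
from typing import Dict

# Divisors that turn a suffixed CPU quantity into millicores.
_CPU_DIVISORS = {"n": 1_000_000, "u": 1_000, "m": 1}


def cpu_to_millicores(quantity: str) -> float:
    """Convert a Kubernetes CPU quantity such as '250m' or '995000000n' to millicores."""
    suffix = quantity[-1]
    if suffix in _CPU_DIVISORS:
        return int(quantity[:-1]) / _CPU_DIVISORS[suffix]
    return float(quantity) * 1000.0  # no suffix: value is in whole cores


def pod_cpu_usage(pod_metrics_list: dict) -> Dict[str, float]:
    """Sum container CPU usage per pod, in millicores."""
    usage: Dict[str, float] = {}
    for item in pod_metrics_list["items"]:
        name = item["metadata"]["name"]
        usage[name] = sum(cpu_to_millicores(c["usage"]["cpu"]) for c in item["containers"])
    return usage


# Hand-written sample in the shape of a PodMetricsList response (values are made up).
SAMPLE = json.loads("""
{"kind": "PodMetricsList", "apiVersion": "metrics.k8s.io/v1beta1",
 "items": [{"metadata": {"name": "tomcat-app1-example", "namespace": "demo"},
            "containers": [{"name": "tomcat-app1-container",
                            "usage": {"cpu": "995000000n", "memory": "262144Ki"}}]}]}
""")

print(pod_cpu_usage(SAMPLE))  # {'tomcat-app1-example': 995.0}
```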

root@k8s-master1:~/20220814/metrics-server-0.6.1-case

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        image: harbor.nbrhce.com/demo/metrics-server:v0.6.1
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

27.6 Implementing HPA

root@k8s-master1:~/20220814/metrics-server-0.6.1-case

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: tomcat-app1-deployment-label
  name: tomcat-app1-deployment
  namespace: demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: tomcat-app1-selector
  template:
    metadata:
      labels:
        app: tomcat-app1-selector
    spec:
      containers:
      - name: tomcat-app1-container
        image: lorel/docker-stress-ng
        args: ["--vm", "2", "--vm-bytes", "256M"]
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: tomcat-app1-service-label
  name: tomcat-app1-service
  namespace: demo
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 40003
  selector:
    app: tomcat-app1-selector

root@k8s-master1:~/20220814/metrics-server-0.6.1-case

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  namespace: demo
  name: tomcat-app1-podautoscaler
  labels:
    app: tomcat-app1
    version: v2beta1
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: tomcat-app1-deployment
  minReplicas: 3
  maxReplicas: 10
  targetCPUUtilizationPercentage: 60

root@k8s-master1:~/20220814/metrics-server-0.6.1-case

NAME                        REFERENCE                           TARGETS    MINPODS   MAXPODS   REPLICAS   AGE
tomcat-app1-podautoscaler   Deployment/tomcat-app1-deployment   199%/60%   3         10        10         15m
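The TARGETS column reports average CPU usage as a percentage of the containers' CPU request, not of the limit. With the 500m request from the Deployment above, 199% corresponds to roughly 995m per pod, just under the 1 CPU limit. The sketch below reproduces the arithmetic and shows why REPLICAS stops at 10. The 995m usage figure is back-calculated from the output rather than measured, and the function names are illustrative.

```python
import math


def utilization_percent(usage_millicores: int, request_millicores: int) -> int:
    """CPU utilization as an integer percentage of the requested CPU."""
    return usage_millicores * 100 // request_millicores


def clamp_replicas(desired: int, min_replicas: int, max_replicas: int) -> int:
    """Keep the desired replica count inside the HPA's minReplicas/maxReplicas bounds."""
    return max(min_replicas, min(desired, max_replicas))


current_replicas = 10
utilization = utilization_percent(usage_millicores=995, request_millicores=500)  # assumed usage
unclamped = math.ceil(current_replicas * utilization / 60)  # target is 60%
final = clamp_replicas(unclamped, min_replicas=3, max_replicas=10)

print(utilization)  # 199
print(unclamped)    # 34
print(final)        # 10
```

Since each stress-ng pod keeps running close to its CPU limit regardless of how many replicas exist, utilization stays near 199% and the HPA remains pinned at maxReplicas.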