7. DaemonSet

https://www.cnblogs.com/Netsharp/p/15501138.html

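A DaemonSet (daemon set, abbreviated ds) deploys one Pod on every node, or on every node that matches a selector.

A DaemonSet runs a copy of the same Pod on each node in the cluster. When a new node joins the cluster, the same Pod is started on it; when a node is removed from the cluster, Kubernetes reclaims its Pod. Deleting the DaemonSet deletes all the Pods it created.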

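Typical uses:

Running a cluster storage daemon, such as ceph or glusterd

The CNI network plugin on each node

Node log collection: filebeat

Node monitoring: node exporter

Service exposure: deploying an ingress nginx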

7.1 Defining a DaemonSet resource

[root@master01 daemonset]# cat nginx-ds.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: nginx
  name: nginx
  namespace: default
spec:
  revisionHistoryLimit: 10  # number of old revisions to keep
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:1.15.2
        imagePullPolicy: IfNotPresent
        name: nginx
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30

# Check whether the DaemonSet was created
[root@master01 daemonset]# kubectl get ds
NAME    DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
nginx   3         3         2       3            2           <none>          4s
# Creation succeeded: the cluster has three nodes, so there are three Pods
[root@master01 daemonset]# kubectl get pods
NAME          READY   STATUS    RESTARTS   AGE
busybox       1/1     Running   2          122m
nginx-cmn4t   1/1     Running   0          9s
nginx-sbrjh   1/1     Running   0          9s
nginx-z6j6h   1/1     Running   0          9s

7.2 Scheduling onto labeled nodes

# First label two nodes in preparation for scheduling
[root@master01 daemonset]# kubectl label nodes node01 ds=true
node/node01 labeled
[root@master01 daemonset]# kubectl label nodes node02 ds=true
node/node02 labeled

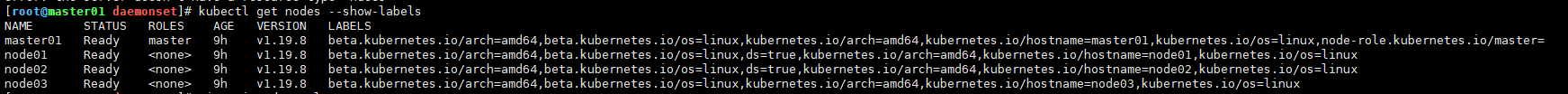

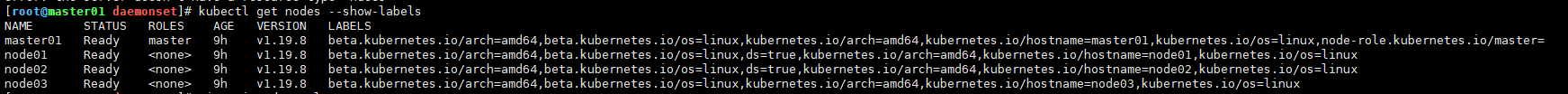

# Check that the node labels were applied
[root@master01 daemonset]# kubectl get nodes --show-labels

# nodeSelector: restrict the Pods to nodes that carry the given label

[root@master01 daemonset]# cat nginx-ds.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: nginx
  name: nginx
  namespace: default
spec:
  revisionHistoryLimit: 10  # number of old revisions to keep
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      nodeSelector:
        ds: "true"
      containers:
      - image: nginx:1.15.2
        imagePullPolicy: IfNotPresent
        name: nginx
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30

# After the updated manifest is applied, check the Pod status again: Pods on nodes without the ds=true label are deleted, and only Pods on labeled nodes remain
[root@master01 daemonset]# kubectl get pods
NAME          READY   STATUS        RESTARTS   AGE
busybox       1/1     Running       2          129m
nginx-cmn4t   1/1     Running       0          6m51s
nginx-sbrjh   0/1     Terminating   0          6m51s
nginx-z6j6h   0/1     Terminating   0          6m51s

[root@master01 daemonset]# kubectl get pods
NAME          READY   STATUS    RESTARTS   AGE
busybox       1/1     Running   2          136m
nginx-j86d8   1/1     Running   0          7m22s
nginx-vs22j   1/1     Running   0          7m32s

# To run a Pod on the excluded node again, add the label to that node
[root@master01 daemonset]# kubectl label nodes node03 ds=true
node/node03 labeled

# Once the node is labeled, a Pod starts on it automatically
[root@master01 daemonset]# kubectl get pods
NAME          READY   STATUS    RESTARTS   AGE
busybox       1/1     Running   2          140m
nginx-j86d8   1/1     Running   0          10m
nginx-vs22j   1/1     Running   0          11m
nginx-zdpnm   1/1     Running   0          13s
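
The nodeSelector shown in this section is the simplest way to restrict a DaemonSet to labeled nodes. As a hedged alternative, the same ds=true constraint could probably be written as required node affinity, which also supports operators such as In, NotIn, and Exists. The manifest below is only a sketch and is not part of the original nginx-ds.yaml; it assumes the affinity block takes the place of nodeSelector in the Pod template, and it trims the other fields to the minimum.

```yaml
# Sketch: node affinity equivalent of "nodeSelector: {ds: "true"}"
# (assumption: used instead of nodeSelector, not together with it)
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx
  namespace: default
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      affinity:
        nodeAffinity:
          # "required" makes this a hard constraint, like nodeSelector
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: ds
                operator: In
                values:
                - "true"   # label values are strings, so the quotes matter
      containers:
      - name: nginx
        image: nginx:1.15.2
```

For a single exact-match label, nodeSelector is shorter; affinity is mainly worth the extra lines when the rule needs set-based matching.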

7.3 Updates and rollback

# RollingUpdate is not recommended for a DaemonSet because an update touches every node; OnDelete is recommended instead
# The default strategy is RollingUpdate
[root@master01 daemonset]# kubectl edit ds nginx
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate

# The update then completes
[root@master01 daemonset]# kubectl get pods
NAME          READY   STATUS    RESTARTS   AGE
busybox       1/1     Running   2          148m
nginx-hzptc   1/1     Running   0          2m45s
nginx-s2twh   1/1     Running   0          2m39s
nginx-t2754   1/1     Running   0          2m21s

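# Recommended setting
  updateStrategy:
    type: OnDelete

# With OnDelete, a Pod is replaced with the new version only after it is deleted
[root@master01 daemonset]# kubectl delete pod nginx-hzptc
pod "nginx-hzptc" deleted

# The revision history can also be viewed
[root@master01 daemonset]# kubectl rollout history ds nginx

# To roll back, pass one of the listed revisions to: kubectl rollout undo ds nginx --to-revision=<revision>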
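
Because kubectl edit shows only a fragment of the object, it may help to see where updateStrategy sits in a complete manifest. The following is a minimal sketch that reuses the nginx DaemonSet from section 7.1 with most optional fields dropped; the values come from the text above, and the comments are explanatory additions.

```yaml
# Sketch: updateStrategy is a field of the DaemonSet spec, next to selector and template
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx
  namespace: default
spec:
  revisionHistoryLimit: 10     # revisions listed by "kubectl rollout history"
  updateStrategy:
    type: RollingUpdate        # alternative: OnDelete (then omit rollingUpdate)
    rollingUpdate:
      maxUnavailable: 1        # at most one node's Pod is unavailable during an update
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.15.2
```

With type set to OnDelete, changing the template creates a new revision, but existing Pods keep running the old one until each is deleted by hand.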

7.4 Example: deploying a web server

root@deploy-harbor:~/20220731/k8s-Resource-N70/case13-DaemonSet# cat 1-DaemonSet-webserver.yaml

---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: myserver-myapp
  namespace: myserver
spec:
  selector:
    matchLabels:
      app: myserver-myapp-frontend
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend
    spec:
      tolerations:
      # this toleration is to have the daemonset runnable on master nodes
      # remove it if your masters can't run pods
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      hostNetwork: true
      hostPID: true
      containers:
      - name: myserver-myapp-frontend
        image: nginx:1.20.2-alpine
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-myapp-frontend
  namespace: myserver
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30018
    protocol: TCP
  type: NodePort
  selector:
    app: myserver-myapp-frontend
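
The toleration in this manifest targets the node-role.kubernetes.io/master taint. Newer kubeadm-based clusters taint their control-plane nodes with node-role.kubernetes.io/control-plane instead, so on such a cluster the DaemonSet may skip those nodes. The sketch below tolerates both keys. Which key your nodes actually carry is an assumption to verify, for example in the Taints field of kubectl describe node.

```yaml
# Sketch: tolerate both the older and the newer control-plane taint key
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: myserver-myapp
  namespace: myserver
spec:
  selector:
    matchLabels:
      app: myserver-myapp-frontend
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend
    spec:
      tolerations:
      - key: node-role.kubernetes.io/master          # older key, as used in the original manifest
        operator: Exists
        effect: NoSchedule
      - key: node-role.kubernetes.io/control-plane   # newer key (assumption: check your nodes)
        operator: Exists
        effect: NoSchedule
      containers:
      - name: myserver-myapp-frontend
        image: nginx:1.20.2-alpine
        ports:
        - containerPort: 80
```

A toleration for a taint that no node carries is harmless, so listing both keys keeps one manifest usable on old and new clusters.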

7.5 Example: deploying log collection

root@deploy-harbor:~/20220731/k8s-Resource-N70/case13-DaemonSet# cat 2-DaemonSet-fluentd.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  selector:
    matchLabels:
      name: fluentd-elasticsearch
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
    spec:
      tolerations:
      # this toleration is to have the daemonset runnable on master nodes
      # remove it if your masters can't run pods
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: fluentd-elasticsearch
        image: quay.io/fluentd_elasticsearch/fluentd:v2.5.2
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers

7.6 Example: deploying the Prometheus node exporter

root@deploy-harbor:~/20220731/k8s-Resource-N70/case13-DaemonSet# cat 3-DaemonSet-prometheus.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitoring
  labels:
    k8s-app: node-exporter
spec:
  selector:
    matchLabels:
      k8s-app: node-exporter
  template:
    metadata:
      labels:
        k8s-app: node-exporter
    spec:
      tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
      containers:
      - image: prom/node-exporter:v1.3.1
        imagePullPolicy: IfNotPresent
        name: prometheus-node-exporter
        ports:
        - containerPort: 9100
          hostPort: 9100
          protocol: TCP
          name: metrics
        volumeMounts:
        - mountPath: /host/proc
          name: proc
        - mountPath: /host/sys
          name: sys
        - mountPath: /host
          name: rootfs
        args:
        - --path.procfs=/host/proc
        - --path.sysfs=/host/sys
        - --path.rootfs=/host
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: sys
        hostPath:
          path: /sys
      - name: rootfs
        hostPath:
          path: /
      hostNetwork: true
      hostPID: true

---
apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/scrape: "true"
  labels:
    k8s-app: node-exporter
  name: node-exporter
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: http
    port: 9100
    nodePort: 39100
    protocol: TCP
  selector:
    k8s-app: node-exporter
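
One detail worth checking before applying this Service: nodePort 39100 lies outside the default NodePort range of 30000-32767, so it is only accepted if the API server was started with a wider --service-node-port-range. The source cluster presumably was, although the text does not say so. On a cluster with the default range, a variant like the sketch below should be accepted; the value 30910 is a hypothetical choice, not something taken from the original.

```yaml
# Sketch: same Service with a nodePort inside the default 30000-32767 range
apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/scrape: "true"
  labels:
    k8s-app: node-exporter
  name: node-exporter
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: http
    port: 9100
    targetPort: 9100   # explicit here; it defaults to the value of port when omitted
    nodePort: 30910    # hypothetical value within the default range
    protocol: TCP
  selector:
    k8s-app: node-exporter
```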