Etcd Backup and Restore

14.1 Etcd Overview

etcd is a distributed key-value database for building highly available systems. It uses the Raft protocol as its consensus algorithm and is implemented in Go.

14.2 Etcd Properties

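- Full replication

Every node in the cluster holds a complete copy of the data.

- High availability

etcd can be used to avoid single points of failure caused by hardware or network problems.

- Consistency

Every read returns the most recent write across all hosts.

- Simple

Includes a well-defined, user-facing API (gRPC).

- Fast

Benchmarked at 10,000 writes per second.

- Reliable

Stored data is properly distributed across members using the Raft algorithm.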
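Raft commits a write only after a majority (quorum) of the voting members has accepted it. That is why etcd cluster sizes are usually odd. Below is a minimal bash sketch of the arithmetic. The function names quorum and fault_tolerance are illustrative and are not part of etcd:

```bash
#!/usr/bin/env bash
# Quorum arithmetic for a Raft cluster of n voting members.

# Smallest majority: floor(n/2) + 1
quorum() {
  local n=$1
  echo $(( n / 2 + 1 ))
}

# How many members can fail while a quorum still survives
fault_tolerance() {
  local n=$1
  echo $(( (n - 1) / 2 ))
}

for n in 1 3 4 5; do
  printf 'members=%d quorum=%d tolerates=%d failure(s)\n' \
    "$n" "$(quorum "$n")" "$(fault_tolerance "$n")"
done
```

Going from 3 to 4 members raises the quorum from 2 to 3 but still tolerates only one failure. This is why 3 or 5 members are the usual choices.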

14.3 Etcd Service Configuration

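root@k8s-etcd1:~# cat /etc/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
# Working directory where the data is kept
# (systemd does not allow trailing comments, so every comment sits on its own line)
WorkingDirectory=/var/lib/etcd
# Path to the etcd binary; --name is this member's name, which also contains the node IP
ExecStart=/usr/local/bin/etcd \
--name=etcd-192.168.1.71 \
--cert-file=/etc/kubernetes/ssl/etcd.pem \
--key-file=/etc/kubernetes/ssl/etcd-key.pem \
--peer-cert-file=/etc/kubernetes/ssl/etcd.pem \
--peer-key-file=/etc/kubernetes/ssl/etcd-key.pem \
--trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
--peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
# Peer URL this member advertises to the rest of the cluster
--initial-advertise-peer-urls=https://192.168.1.71:2380 \
# URL to listen on for peer (member-to-member) traffic
--listen-peer-urls=https://192.168.1.71:2380 \
# URLs to listen on for client traffic
--listen-client-urls=https://192.168.1.71:2379,http://127.0.0.1:2379 \
# Client URL this member advertises
--advertise-client-urls=https://192.168.1.71:2379 \
# Bootstrap token; must be identical on every member of one cluster
--initial-cluster-token=etcd-cluster-0 \
# All members of the initial cluster, as name=peer-URL pairs
--initial-cluster=etcd-192.168.1.71=https://192.168.1.71:2380,etcd-192.168.1.72=https://192.168.1.72:2380,etcd-192.168.1.73=https://192.168.1.73:2380 \
# "new" when bootstrapping a new cluster, "existing" when joining an existing one
--initial-cluster-state=new \
# Data directory
--data-dir=/var/lib/etcd \
# Empty: the WAL is kept inside the data directory (member/wal)
--wal-dir= \
# Write a Raft snapshot to disk every 50000 committed transactions
--snapshot-count=50000 \
# Keep 1 hour of history: the first compaction runs after 1 hour, then every 1/10 of that window (6 minutes)
--auto-compaction-retention=1 \
# Time-based (periodic) compaction
--auto-compaction-mode=periodic \
# Maximum request size in bytes (10 MiB here); the default is about 1.5 MiB, and 10 MB is the officially recommended maximum
--max-request-bytes=10485760 \
# Backend storage quota (8 GiB here); the default is 2 GB, and etcd logs a warning at startup if the value exceeds 8 GB
--quota-backend-bytes=8589934592
Restart=always
RestartSec=15
LimitNOFILE=65536
OOMScoreAdjust=-999

[Install]
WantedBy=multi-user.target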
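Every member's --initial-cluster value must list all members as name=peer-URL pairs, and those names must match each member's --name. Below is a minimal bash sketch that generates the string from a list of IPs. It assumes the naming convention used above (etcd-<IP>, peers on https://<IP>:2380). The function name build_initial_cluster is illustrative:

```bash
#!/usr/bin/env bash
# Build the --initial-cluster value from member IPs, assuming each member
# is named "etcd-<IP>" and serves peer traffic on https://<IP>:2380.
build_initial_cluster() {
  local ip out=""
  for ip in "$@"; do
    # ${out:+,} inserts a comma only when out is already non-empty
    out+="${out:+,}etcd-${ip}=https://${ip}:2380"
  done
  printf '%s\n' "$out"
}

build_initial_cluster 192.168.1.71 192.168.1.72 192.168.1.73
```

If you generate the string once and paste the same value into every member's unit file, typos cannot make the member lists differ between nodes.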

14.4 Checking Etcd Cluster Status

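- Defragment every member (a successful run also shows that every member responds)

# defrag rewrites the backend database compactly (sequentially) and returns the freed space to the file system; a member cannot serve reads or writes while it is being defragmented

# ETCDCTL_API=3 selects the v3 API (already the default since etcdctl 3.4)

ETCDCTL_API=3 /usr/local/bin/etcdctl defrag --cluster --endpoints=https://192.168.1.71:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem

# Result: all three members finished defragmenting, so all of them are reachable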

Finished defragmenting etcd member[https://192.168.1.71:2379]

Finished defragmenting etcd member[https://192.168.1.72:2379]

Finished defragmenting etcd member[https://192.168.1.73:2379]
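The same three TLS flags repeat in almost every command in this section. A small bash wrapper can bundle them. This is a sketch: the function name etcdctl_tls and the ETCDCTL and SSL_DIR variables are assumptions for illustration, and the certificate paths are the ones used above:

```bash
#!/usr/bin/env bash
# Wrapper that adds the TLS flags used throughout this section.
# ETCDCTL and SSL_DIR can be overridden, e.g. ETCDCTL=/opt/kube/bin/etcdctl.
ETCDCTL="${ETCDCTL:-/usr/local/bin/etcdctl}"
SSL_DIR="${SSL_DIR:-/etc/kubernetes/ssl}"

etcdctl_tls() {
  ETCDCTL_API=3 "$ETCDCTL" \
    --cacert="${SSL_DIR}/ca.pem" \
    --cert="${SSL_DIR}/etcd.pem" \
    --key="${SSL_DIR}/etcd-key.pem" \
    "$@"
}

# Example: the defrag command above becomes
#   etcdctl_tls defrag --cluster --endpoints=https://192.168.1.71:2379
```

etcdctl accepts these global flags before the subcommand, so the wrapper works for endpoint health, member list, snapshot save and the other commands shown here.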

- Check cluster health over HTTP (each node's local listener, http://127.0.0.1:2379)

#etcd1

root@k8s-etcd1:~# etcdctl endpoint health

127.0.0.1:2379 is healthy: successfully committed proposal: took = 3.131033ms

#etcd2

root@k8s-etcd2:~# etcdctl endpoint health

127.0.0.1:2379 is healthy: successfully committed proposal: took = 9.114311ms

#etcd3

root@k8s-etcd3:~# etcdctl endpoint health

127.0.0.1:2379 is healthy: successfully committed proposal: took = 13.232431ms

- Check cluster health over HTTPS

export NODE_IPS="192.168.1.71 192.168.1.72 192.168.1.73"

for ip in ${NODE_IPS}; do ETCDCTL_API=3 /opt/kube/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health;

done

# Output (note: the IPs in this sample output, 192.168.1.76 to 192.168.1.78, differ from NODE_IPS above)

https://192.168.1.76:2379 is healthy: successfully committed proposal: took = 8.57508ms

https://192.168.1.77:2379 is healthy: successfully committed proposal: took = 10.019689ms

https://192.168.1.78:2379 is healthy: successfully committed proposal: took = 8.723699ms
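For monitoring, the loop can be turned into a pass/fail check. Below is a minimal bash sketch that counts healthy members and compares the count with the quorum. It relies on etcdctl endpoint health exiting non-zero for an unhealthy endpoint. The function name check_quorum and the ETCDCTL and SSL_DIR variables are illustrative:

```bash
#!/usr/bin/env bash
# Count healthy members and succeed only while a quorum is healthy.
ETCDCTL="${ETCDCTL:-/usr/local/bin/etcdctl}"
SSL_DIR="${SSL_DIR:-/etc/kubernetes/ssl}"

check_quorum() {
  local ip healthy=0 total=$#
  for ip in "$@"; do
    if ETCDCTL_API=3 "$ETCDCTL" --endpoints="https://${ip}:2379" \
         --cacert="${SSL_DIR}/ca.pem" --cert="${SSL_DIR}/etcd.pem" \
         --key="${SSL_DIR}/etcd-key.pem" endpoint health >/dev/null 2>&1; then
      healthy=$(( healthy + 1 ))
    else
      echo "unhealthy: ${ip}" >&2
    fi
  done
  echo "healthy ${healthy}/${total}, quorum $(( total / 2 + 1 ))"
  # Exit status: 0 while a majority of members is healthy
  [ "$healthy" -ge $(( total / 2 + 1 )) ]
}

# Usage: check_quorum 192.168.1.71 192.168.1.72 192.168.1.73
```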

14.5 Etcd CRUD Operations

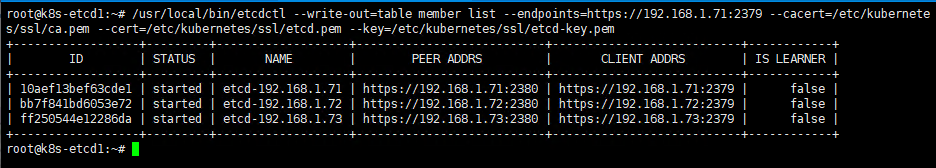

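- Output in table format

# IS LEARNER: true means the member is a non-voting learner that is still catching up on data from the leader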

root@k8s-etcd1:~# /usr/local/bin/etcdctl --write-out=table member list --endpoints=https://192.168.1.71:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem

+------------------+---------+-------------------+---------------------------+---------------------------+------------+

| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |

+------------------+---------+-------------------+---------------------------+---------------------------+------------+

| 10aef13bef63cde1 | started | etcd-192.168.1.71 | https://192.168.1.71:2380 | https://192.168.1.71:2379 | false |

| bb7f841bd6053e72 | started | etcd-192.168.1.72 | https://192.168.1.72:2380 | https://192.168.1.72:2379 | false |

| ff250544e12286da | started | etcd-192.168.1.73 | https://192.168.1.73:2380 | https://192.168.1.73:2379 | false |

+------------------+---------+-------------------+---------------------------+---------------------------+------------+
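The table suits people. Scripts are easier to write against the default (simple) format, which prints one comma-separated line per member: ID, status, name, peer URLs, client URLs, is-learner. Below is a minimal bash sketch that counts started voting members from that output. It assumes no field itself contains ", ". The function name count_voting_members is illustrative:

```bash
#!/usr/bin/env bash
# Read `etcdctl member list` output (simple format) on stdin and count
# members that are started and are not learners, i.e. voting members.
count_voting_members() {
  awk -F', ' '$2 == "started" && $6 == "false" { n++ } END { print n + 0 }'
}

# Usage:
#   etcdctl member list --endpoints=https://192.168.1.71:2379 \
#     --cacert=... --cert=... --key=... | count_voting_members
```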

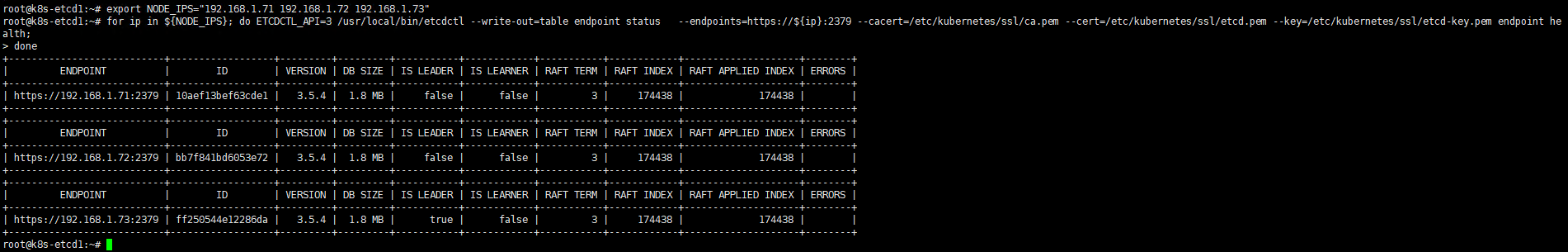

- Show detailed status of every member

export NODE_IPS="192.168.1.71 192.168.1.72 192.168.1.73"

for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/local/bin/etcdctl --write-out=table endpoint status --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem;

done

- List all keys in the cluster

# Avoid running this casually: it walks the entire keyspace

root@k8s-etcd1:~# etcdctl get / --keys-only --prefix

# Keys related to nginx

root@k8s-etcd1:~# etcdctl get / --keys-only --prefix | grep nginx

# All namespaces in the cluster

root@k8s-etcd1:~# etcdctl get / --keys-only --prefix | grep namespace
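Kubernetes keys start with /registry/<resource>/, as the pod keys in 14.7 show. Below is a minimal bash sketch that summarizes the key list by resource type instead of grepping it. The function name count_keys_by_resource is illustrative. It still reads every key, so the same caution applies:

```bash
#!/usr/bin/env bash
# Summarize `etcdctl get ... --keys-only` output by resource type, i.e. the
# path segment after /registry/. Blank separator lines are skipped.
count_keys_by_resource() {
  awk -F/ 'NF > 2 && $2 == "registry" { count[$3]++ }
           END { for (r in count) print count[r], r }' |
    sort -k1,1nr -k2
}

# Usage:
#   etcdctl get /registry --prefix --keys-only | count_keys_by_resource
```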

- Create, read, and delete a key

root@k8s-etcd1:~# etcdctl put /node "192.168.1.100"

OK

root@k8s-etcd1:~# etcdctl get /node

/node

192.168.1.100

root@k8s-etcd1:~# etcdctl del /node

1

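14.6 Etcd Watch Mechanism

Purpose: watch data continuously and proactively notify the client when it changes. The etcd v3 watch mechanism can watch a single key or a range of keys (for example, every key under a prefix with --prefix).

Overview: start a watch on a key from etcd1. The key does not need to exist yet; it can be created later.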

root@k8s-etcd1:~# etcdctl put /node "192.168.1.100"

OK

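# Terminal on etcd1: start the watch; it blocks and waits for changes

root@k8s-etcd1:~# etcdctl watch /node

# Terminal on etcd2: update the key

root@k8s-etcd2:~# etcdctl put /node "192.168.1.101"

OK

# The watch on etcd1 then prints the event type, the key, and the new value

PUT

/node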

192.168.1.101
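In a script, watch output can drive an action. Below is a minimal bash sketch that reads watch output on stdin and turns each event into a single line. It assumes the default output format: PUT is followed by the key and the value, DELETE by the key only. It also assumes values are single-line. The function name parse_watch_events is illustrative:

```bash
#!/usr/bin/env bash
# Convert `etcdctl watch` output (default format) into one line per event:
#   PUT <key> <value>   or   DELETE <key>
# Assumes single-line values that are never the literal words PUT or DELETE.
parse_watch_events() {
  local line event="" key="" want=""
  while IFS= read -r line; do
    case "$want" in
      "")
        # Waiting for an event-type line
        case "$line" in
          PUT) event=PUT; want=key ;;
          DELETE) event=DELETE; want=key ;;
        esac
        ;;
      key)
        key=$line
        if [ "$event" = DELETE ]; then
          printf 'DELETE %s\n' "$key"; want=""
        else
          want=value
        fi
        ;;
      value)
        printf 'PUT %s %s\n' "$key" "$line"; want=""
        ;;
    esac
  done
}

# Usage: etcdctl watch /node --prefix | parse_watch_events
```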

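14.7 Deleting Data Directly in Etcd

Deleting data this way writes straight to etcd and bypasses kube-apiserver, so none of Kubernetes' own checks apply. This approach is dangerous; the normal way to remove a pod is kubectl delete pod.

# Goal: delete the pod net-test1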

root@deploy-harbor:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-68555f5f97-p255g 1/1 Running 0 140m

kube-system calico-node-gdc8m 0/1 CrashLoopBackOff 267 (4m34s ago) 47h

kube-system calico-node-h5drr 0/1 CrashLoopBackOff 267 (3m28s ago) 47h

linux60 linux60-tomcat-app1-deployment-595f7ff67c-2h8vv 1/1 Running 0 140m

myserver linux70-nginx-deployment-55dc5fdcf9-g7lkt 1/1 Running 0 140m

myserver linux70-nginx-deployment-55dc5fdcf9-mrxlp 1/1 Running 0 140m

myserver linux70-nginx-deployment-55dc5fdcf9-q6x59 1/1 Running 0 140m

myserver linux70-nginx-deployment-55dc5fdcf9-s5h42 1/1 Running 0 140m

myserver net-test1 1/1 Running 0 18s

myserver net-test2 1/1 Running 0 11s

myserver net-test3 1/1 Running 0 7s

# Look up the keys in etcd

root@k8s-etcd1:~# etcdctl get / --keys-only --prefix | grep 'net-test'

/registry/events/myserver/net-test1.17298ad770ac777d

/registry/events/myserver/net-test1.17298ad798f19b5c

/registry/events/myserver/net-test1.17298ad79a391fd2

/registry/events/myserver/net-test1.17298ad79f5cee1f

/registry/events/myserver/net-test2.17298ad8f4d8457e

/registry/events/myserver/net-test2.17298ad91bb1f309

/registry/events/myserver/net-test2.17298ad91d15fb7e

/registry/events/myserver/net-test2.17298ad921246080

/registry/events/myserver/net-test3.17298ad9fa9cf679

/registry/events/myserver/net-test3.17298ada1eeb346b

/registry/events/myserver/net-test3.17298ada20011319

/registry/events/myserver/net-test3.17298ada243b48d8

/registry/pods/myserver/net-test1

/registry/pods/myserver/net-test2

/registry/pods/myserver/net-test3

# Delete the pod object

root@k8s-etcd1:~# etcdctl del /registry/pods/myserver/net-test1

1

# net-test1 no longer appears in the pod list

root@deploy-harbor:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-68555f5f97-p255g 1/1 Running 0 141m

kube-system calico-node-gdc8m 0/1 Running 268 (5m44s ago) 47h

kube-system calico-node-h5drr 0/1 CrashLoopBackOff 267 (4m38s ago) 47h

linux60 linux60-tomcat-app1-deployment-595f7ff67c-2h8vv 1/1 Running 0 141m

myserver linux70-nginx-deployment-55dc5fdcf9-g7lkt 1/1 Running 0 141m

myserver linux70-nginx-deployment-55dc5fdcf9-mrxlp 1/1 Running 0 141m

myserver linux70-nginx-deployment-55dc5fdcf9-q6x59 1/1 Running 0 141m

myserver linux70-nginx-deployment-55dc5fdcf9-s5h42 1/1 Running 0 141m

myserver net-test2 1/1 Running 0 81s

myserver net-test3 1/1 Running 0 77s

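14.8 Backing Up and Restoring Data with the Etcd v3 API

WAL stands for write-ahead log. As the name suggests, a log entry is written before the actual write operation is performed.

WAL: stores the write-ahead log. Its main role is to record the complete history of data changes; in etcd, every data modification must be written to the WAL before it is committed.

Path of the backend database file, where the data is actually stored (the WAL files live separately under member/wal):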

root@k8s-etcd1:~# ll /var/lib/etcd/member/snap/db

-rw------- 1 root root 2445312 Nov 21 08:15 /var/lib/etcd/member/snap/db

- Back up

root@k8s-etcd1:~# etcdctl snapshot save /tmp/test.sb

{"level":"info","ts":"2022-11-21T08:17:54.386Z","caller":"snapshot/v3_snapshot.go:65","msg":"created temporary db file","path":"/tmp/test.sb.part"}

{"level":"info","ts":"2022-11-21T08:17:54.387Z","logger":"client","caller":"v3/maintenance.go:211","msg":"opened snapshot stream; downloading"}

{"level":"info","ts":"2022-11-21T08:17:54.388Z","caller":"snapshot/v3_snapshot.go:73","msg":"fetching snapshot","endpoint":"127.0.0.1:2379"}

{"level":"info","ts":"2022-11-21T08:17:54.424Z","logger":"client","caller":"v3/maintenance.go:219","msg":"completed snapshot read; closing"}

{"level":"info","ts":"2022-11-21T08:17:54.436Z","caller":"snapshot/v3_snapshot.go:88","msg":"fetched snapshot","endpoint":"127.0.0.1:2379","size":"2.4 MB","took":"now"}

{"level":"info","ts":"2022-11-21T08:17:54.436Z","caller":"snapshot/v3_snapshot.go:97","msg":"saved","path":"/tmp/test.sb"}

Snapshot saved at /tmp/test.sb
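Check that a snapshot can be read back before relying on it. Below is a minimal bash sketch that saves a snapshot and then runs etcdctl snapshot status on it; that command reports the hash, revision, total keys and size. The function name save_and_verify_snapshot and the ETCDCTL variable are assumptions. On etcd 3.5 and later, the status subcommand is also provided by etcdutl, which is the recommended tool there:

```bash
#!/usr/bin/env bash
# Save a snapshot and verify it can be read back before trusting it.
ETCDCTL="${ETCDCTL:-/usr/local/bin/etcdctl}"

save_and_verify_snapshot() {
  local file=$1
  ETCDCTL_API=3 "$ETCDCTL" snapshot save "$file" || return 1
  # A missing or zero-byte file means the save did not really succeed
  [ -s "$file" ] || { echo "snapshot ${file} is empty" >&2; return 1; }
  # Prints hash, revision, total keys and total size of the snapshot
  ETCDCTL_API=3 "$ETCDCTL" snapshot status "$file" --write-out=table
}

# Usage: save_and_verify_snapshot /tmp/test.db
```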

- Restore

- The directory given by --data-dir (/opt/etcd here) must not already contain data; an empty directory is fine.

root@k8s-etcd1:~# etcdctl snapshot restore /tmp/test.sb --data-dir="/opt/etcd"

- Result of the restore

# The newly created directory now contains the restored data

root@k8s-etcd1:~# ll /opt/etcd/

total 12

drwxr-xr-x 3 root root 4096 Nov 21 08:22 ./

drwxr-xr-x 3 root root 4096 Nov 21 08:21 ../

drwx------ 4 root root 4096 Nov 21 08:22 member/

root@k8s-etcd1:~# ll /opt/etcd/member/

total 16

drwx------ 4 root root 4096 Nov 21 08:22 ./

drwxr-xr-x 3 root root 4096 Nov 21 08:22 ../

drwx------ 2 root root 4096 Nov 21 08:22 snap/

drwx------ 2 root root 4096 Nov 21 08:22 wal/
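The command above restores one member with etcdctl's default member settings. In a three-member cluster, every member is restored from the same snapshot. Each member gets its own --name and --initial-advertise-peer-urls, and all of them share the same --initial-cluster and --initial-cluster-token. Below is a minimal bash sketch that prints the command for each member. It assumes the names, token and ports from the unit file in 14.3. The function name restore_commands is illustrative:

```bash
#!/usr/bin/env bash
# Print one `etcdctl snapshot restore` command per member, so that every
# member is rebuilt from the same snapshot with consistent cluster settings.
# Names (etcd-<IP>), token and ports follow the unit file in 14.3.
restore_commands() {
  local snapshot=$1 data_dir=$2; shift 2
  local ip cluster=""
  for ip in "$@"; do
    cluster+="${cluster:+,}etcd-${ip}=https://${ip}:2380"
  done
  for ip in "$@"; do
    printf 'ETCDCTL_API=3 etcdctl snapshot restore %s --name=etcd-%s --initial-cluster=%s --initial-cluster-token=etcd-cluster-0 --initial-advertise-peer-urls=https://%s:2380 --data-dir=%s\n' \
      "$snapshot" "$ip" "$cluster" "$ip" "$data_dir"
  done
}

restore_commands /tmp/test.sb /opt/etcd 192.168.1.71 192.168.1.72 192.168.1.73
```

Run each printed line on the matching node: the line for 192.168.1.71 on k8s-etcd1, and so on.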

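# There are two ways to put the restored data into service

Method 1: the new directory already holds the restored data. Edit the etcd unit file and change the path in the two lines below from /var/lib/etcd to the new directory (/opt/etcd here). Then run systemctl daemon-reload and systemctl restart etcd.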

root@k8s-etcd1:~# vim /etc/systemd/system/etcd.service

WorkingDirectory=/var/lib/etcd

--data-dir=/var/lib/etcd

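Method 2: stop etcd, delete the data under the directory configured in the unit file, copy the restored data into it, and start etcd again.

For example, with the setting below, delete the contents of /var/lib/etcd: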

--data-dir=/var/lib/etcd
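Below is Method 2 as a minimal bash sketch. It stops etcd, copies the restored member directory into the configured data directory, and starts etcd again. Unlike the steps above, it moves the old data aside instead of deleting it; that safety choice is an addition of this sketch. The function name swap_etcd_data and the SYSTEMCTL override (useful for a dry run) are illustrative. Run it as root on each member:

```bash
#!/usr/bin/env bash
# Replace an etcd data directory with restored data (Method 2).
# SYSTEMCTL can be overridden, e.g. SYSTEMCTL=echo for a dry run.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

swap_etcd_data() {
  local restored=$1 target=$2
  local backup="${target}.old.$(date +%Y%m%d%H%M%S)"
  [ -d "${restored}/member" ] || { echo "no member/ in ${restored}" >&2; return 1; }
  "$SYSTEMCTL" stop etcd || return 1
  # Keep the previous data instead of deleting it outright
  if [ -d "$target" ]; then
    mv "$target" "$backup" || return 1
  fi
  # etcd expects its data directory to be accessible only by its owner
  mkdir -p "$target" && chmod 700 "$target" || return 1
  cp -a "${restored}/member" "${target}/" || return 1
  "$SYSTEMCTL" start etcd
}

# Usage: swap_etcd_data /opt/etcd /var/lib/etcd
```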

14.9 Automatic Etcd Backup Script

root@k8s-etcd1:~# mkdir -p /data/etcd-backup-dir

# Backup script

root@k8s-etcd1:~# cat scripts.sh

#!/bin/bash

# Etcd scheduled backup script, author: quyi

DATE=$(date +%Y-%m-%d_%H-%M-%S)

ETCDCTL_API=3 /usr/local/bin/etcdctl snapshot save /data/etcd-backup-dir/etcd-snapshot-${DATE}.db &>/dev/null

# Cron job: run the script every day at 00:00

root@k8s-etcd1:~# crontab -l

00 00 * * * /bin/bash /root/scripts.sh &>/dev/null
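The script above keeps every snapshot forever, and it sends all output to /dev/null, so a failed backup goes unnoticed. Below is a minimal bash sketch of a variant that reports failures, rejects empty files, and keeps only the newest KEEP snapshots. Like the original, it uses etcdctl's default endpoint and the same file naming. KEEP=7, the function names, and the "run" argument are assumptions:

```bash
#!/usr/bin/env bash
# Daily etcd backup with simple retention.
BACKUP_DIR="${BACKUP_DIR:-/data/etcd-backup-dir}"
KEEP="${KEEP:-7}"
ETCDCTL="${ETCDCTL:-/usr/local/bin/etcdctl}"

# Delete all but the newest $KEEP etcd-snapshot-*.db files. The glob expands
# in name order, and the timestamp in the name makes that chronological.
prune_snapshots() {
  local dir=$1 keep=$2 files i
  files=("$dir"/etcd-snapshot-*.db)
  [ -e "${files[0]}" ] || return 0
  for (( i = 0; i < ${#files[@]} - keep; i++ )); do
    rm -f -- "${files[i]}"
  done
}

backup_etcd() {
  local file="${BACKUP_DIR}/etcd-snapshot-$(date +%Y-%m-%d_%H-%M-%S).db"
  mkdir -p "$BACKUP_DIR" || return 1
  if ! ETCDCTL_API=3 "$ETCDCTL" snapshot save "$file" >/dev/null 2>&1 || [ ! -s "$file" ]; then
    echo "etcd backup failed: ${file}" >&2
    rm -f -- "$file" "${file}.part"
    return 1
  fi
  prune_snapshots "$BACKUP_DIR" "$KEEP"
}

# Only back up when asked explicitly, e.g. from cron with the argument "run"
if [ "${1:-}" = run ]; then
  backup_etcd || exit 1
fi
```

With this variant, the cron entry would pass run and keep the log, for example 00 00 * * * /bin/bash /root/etcd-backup.sh run >>/var/log/etcd-backup.log 2>&1. Both paths are placeholders.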

14.10 Etcd Backup and Restore with Ansible (kubeasz ezctl)

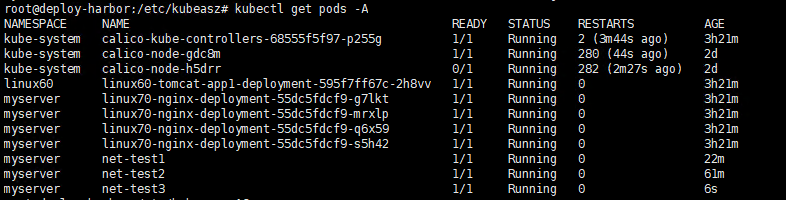

- First, create a test pod

root@deploy-harbor:/etc/kubeasz# kubectl run net-test3 --image=centos:7.9.2009 sleep 10000000 -n myserver

- List all pods

root@deploy-harbor:/etc/kubeasz# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-68555f5f97-p255g 1/1 Running 2 (3m44s ago) 3h21m

kube-system calico-node-gdc8m 1/1 Running 280 (44s ago) 2d

kube-system calico-node-h5drr 0/1 Running 282 (2m27s ago) 2d

linux60 linux60-tomcat-app1-deployment-595f7ff67c-2h8vv 1/1 Running 0 3h21m

myserver linux70-nginx-deployment-55dc5fdcf9-g7lkt 1/1 Running 0 3h21m

myserver linux70-nginx-deployment-55dc5fdcf9-mrxlp 1/1 Running 0 3h21m

myserver linux70-nginx-deployment-55dc5fdcf9-q6x59 1/1 Running 0 3h21m

myserver linux70-nginx-deployment-55dc5fdcf9-s5h42 1/1 Running 0 3h21m

myserver net-test1 1/1 Running 0 22m

myserver net-test2 1/1 Running 0 61m

myserver net-test3 1/1 Running 0 6s

- Take a backup with Ansible (ezctl backup)

root@deploy-harbor:/etc/kubeasz# ./ezctl backup k8s-cluster1

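- Backup location and files

# A successful backup produces these two files, and right now their contents are identical. A restore always uses snapshot.db, so treat it as the source file.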

root@deploy-harbor:/etc/kubeasz/clusters/k8s-cluster1/backup# ls

snapshot_202211210908.db snapshot.db

- Delete net-test3 directly in etcd

root@k8s-etcd1:~# etcdctl get / --keys-only --prefix | grep net-test3

/registry/events/myserver/net-test3.17298ad9fa9cf679

/registry/events/myserver/net-test3.17298ada1eeb346b

/registry/events/myserver/net-test3.17298ada20011319

/registry/events/myserver/net-test3.17298ada243b48d8

/registry/events/myserver/net-test3.17298c517a5b6447

/registry/events/myserver/net-test3.17298e3220c07e42

/registry/events/myserver/net-test3.17298e3249c37734

/registry/events/myserver/net-test3.17298e324ae2165e

/registry/events/myserver/net-test3.17298e324f55fadf

/registry/pods/myserver/net-test3

root@k8s-etcd1:~# etcdctl del /registry/pods/myserver/net-test3

1

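- Restore the data with Ansible (ezctl restore)

# First copy the point-in-time backup you want over snapshot.db (copy and rename)

# In this demo the two files are identical anyway, because they come from the same backup

root@deploy-harbor:/etc/kubeasz/clusters/k8s-cluster1/backup# cp snapshot_202211210908.db snapshot.db

# Start the restore

root@deploy-harbor:/etc/kubeasz# ./ezctl restore k8s-cluster1

# Check the pods: net-test3 is back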

root@deploy-harbor:/etc/kubeasz# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-68555f5f97-p255g 1/1 Running 3 (39s ago) 3h33m

kube-system calico-node-gdc8m 0/1 Running 283 (5m32s ago) 2d

kube-system calico-node-h5drr 1/1 Running 286 (5m36s ago) 2d

linux60 linux60-tomcat-app1-deployment-595f7ff67c-2h8vv 1/1 Running 0 3h33m

myserver linux70-nginx-deployment-55dc5fdcf9-g7lkt 1/1 Running 0 3h33m

myserver linux70-nginx-deployment-55dc5fdcf9-mrxlp 1/1 Running 0 3h33m

myserver linux70-nginx-deployment-55dc5fdcf9-q6x59 1/1 Running 0 3h33m

myserver linux70-nginx-deployment-55dc5fdcf9-s5h42 1/1 Running 0 3h33m

myserver net-test1 1/1 Running 0 34m

myserver net-test2 1/1 Running 0 73m

myserver net-test3 1/1 Running 0 12m
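When the backup directory holds many timestamped files, copying the right one over snapshot.db by hand is easy to get wrong. Below is a minimal bash sketch that copies either a named file or the newest snapshot_*.db to snapshot.db before you run ezctl restore. The function name select_backup is illustrative. The file naming follows the listing above:

```bash
#!/usr/bin/env bash
# Copy a point-in-time backup to snapshot.db, the file ezctl restore uses.
# With no second argument, the newest snapshot_YYYYmmddHHMM.db is chosen.
select_backup() {
  local dir=$1 chosen=${2:-} files
  if [ -z "$chosen" ]; then
    files=("$dir"/snapshot_*.db)
    [ -e "${files[0]}" ] || { echo "no snapshot_*.db in ${dir}" >&2; return 1; }
    # Timestamps sort by name, so the last glob match is the newest
    chosen=$(basename "${files[${#files[@]}-1]}")
  fi
  cp -- "${dir}/${chosen}" "${dir}/snapshot.db" && echo "$chosen"
}

# Usage:
#   cd /etc/kubeasz
#   select_backup clusters/k8s-cluster1/backup snapshot_202211210908.db
#   ./ezctl restore k8s-cluster1
```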

14.11 Etcd Data Recovery Procedure

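When so many etcd members are down that fewer than a majority remain (for example, two out of three), the whole cluster becomes unavailable. If the data then has to be restored from a backup, the procedure is:

- Restore the servers' operating systems

- Redeploy the etcd cluster

- Stop kube-apiserver/controller-manager/scheduler/kubelet/kube-proxy

- Stop the etcd cluster

- Restore the same backup on every etcd node

- Start etcd on every node

- Start kube-apiserver/controller-manager/scheduler/kubelet/kube-proxy

- Verify the Kubernetes master status and the pod data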
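The order of these steps matters: everything that talks to etcd stops before etcd does and starts again only after etcd is healthy. Below is a minimal bash sketch that prints the per-node steps as an ordered dry-run plan. The systemd service names, the node names and the snapshot path are placeholders. List only the services that actually run on each node:

```bash
#!/usr/bin/env bash
# Print the recovery procedure as an ordered, per-node dry-run plan.
# Service names are assumptions; adjust them to your deployment.
K8S_SERVICES="kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy"

recovery_plan() {
  local snapshot=$1 k8s_nodes=$2 etcd_nodes=$3 n
  # 1. Stop everything that talks to etcd
  for n in $k8s_nodes; do echo "${n}: systemctl stop ${K8S_SERVICES}"; done
  # 2. Stop etcd on every member
  for n in $etcd_nodes; do echo "${n}: systemctl stop etcd"; done
  # 3. Restore the same snapshot on every member
  for n in $etcd_nodes; do
    echo "${n}: etcdctl snapshot restore ${snapshot} (own --name, shared --initial-cluster)"
  done
  # 4. Start etcd, confirm health, then start Kubernetes again
  for n in $etcd_nodes; do echo "${n}: systemctl start etcd"; done
  echo "check: etcdctl endpoint health on every member"
  for n in $k8s_nodes; do echo "${n}: systemctl start ${K8S_SERVICES}"; done
  echo "verify: kubectl get nodes && kubectl get pods -A"
}

# Example with placeholder node names
recovery_plan /data/etcd-backup-dir/etcd-snapshot.db "master1" "etcd1 etcd2 etcd3"
```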

浙公网安备 33010602011771号
