hadoop-spark


Hadoop deployment

Hadoop release mirror: https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/stable/

```shell
[root@hadoop ~]# ls -l
total 856152
-rw-r--r-- 1 root root 695457782 Jul 30 03:11 hadoop-3.3.4.tar.gz
-rw-r--r-- 1 root root 181238643 Jun 9 23:20 jdk-8u60-linux-x64.tar.gz
drwxr-xr-x 2 hadoop hadoop 6 Sep 22 18:35 soft
```

Extract both archives into /root/soft and link the main binaries into /usr/bin:

```shell
mkdir -p soft
tar xf hadoop-3.3.4.tar.gz -C soft/
tar xf jdk-8u60-linux-x64.tar.gz -C soft/

ln -s /root/soft/hadoop-3.3.4/bin/hadoop /usr/bin/
ln -s /root/soft/jdk1.8.0_60/bin/java /usr/bin/
```

Create version-independent links inside /root/soft:

```shell
[root@hadoop ~]# cd soft
[root@hadoop soft]# pwd
/root/soft
[root@hadoop soft]# ln -s jdk1.8.0_60 jdk
[root@hadoop soft]# ln -s /root/soft/hadoop-3.3.4/ hadoop
```
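The same extract-then-link pattern repeats for the JDK, Hadoop, Scala and Spark. The sketch below wraps it in one function. It assumes GNU tar, which detects compression on its own, and an archive with a single top-level directory. `install_tarball` is a hypothetical name, not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract TARBALL into DEST and point DEST/LINK at the
# archive's top-level directory (e.g. /root/soft/jdk -> jdk1.8.0_60).
# Assumes GNU tar (compression auto-detected) and a single top-level directory.
install_tarball() {
  local tarball="$1" dest="$2" link="$3" top
  mkdir -p "$dest"
  # First path component of the first archive entry, ignoring a leading "./"
  top=$(tar tf "$tarball" | head -n 1 | sed 's#^\./##' | cut -d/ -f1)
  if [ -z "$top" ]; then
    echo "cannot list $tarball" >&2
    return 1
  fi
  tar xf "$tarball" -C "$dest"
  # -n: replace an existing link instead of creating a new link inside its target
  ln -sfn "$top" "$dest/$link"
}
```

Usage on this host: `install_tarball /root/jdk-8u60-linux-x64.tar.gz /root/soft jdk` and `install_tarball /root/hadoop-3.3.4.tar.gz /root/soft hadoop`. Re-running it only refreshes the link.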

Add the JDK to the shell profile (for example /etc/profile), reload it (e.g. `source /etc/profile`), and check the version:

```shell
export JAVA_HOME=/root/soft/jdk/
export PATH=$PATH:$JAVA_HOME/bin
```

```
[root@hadoop ~]# java -version
java version "1.8.0_60"
Java(TM) SE Runtime Environment (build 1.8.0_60-b27)
Java HotSpot(TM) 64-Bit Server VM (build 25.60-b23, mixed mode)
```

```
[root@hadoop soft]# ls -l
total 0
lrwxrwxrwx 1 root root 24 Sep 22 18:46 hadoop -> /root/soft/hadoop-3.3.4/
drwxr-xr-x 10 1024 1024 215 Jul 29 22:44 hadoop-3.3.4
lrwxrwxrwx 1 root root 11 Sep 22 18:39 jdk -> jdk1.8.0_60
drwxr-xr-x 8 10 143 266 Sep 22 18:39 jdk1.8.0_60
```

Add Hadoop to the shell profile as well:

```shell
export JAVA_HOME=/root/soft/jdk/
export PATH=$PATH:$JAVA_HOME/bin
export HADOOP_HOME=/root/soft/hadoop/
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
```
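The profile gets edited twice: once for the JDK and again for Hadoop. Re-running those steps tends to duplicate lines. The sketch below is an idempotent alternative. It assumes the target is a profile file such as /etc/profile. `append_line_once` and `write_hadoop_profile` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE only if that exact line is not there yet.
append_line_once() {
  local file="$1" line="$2"
  touch "$file"
  # -x: whole-line match, -F: fixed string (no regex), -q: no output
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Write the JDK and Hadoop variables used in this guide into FILE.
# Single quotes keep $PATH etc. unexpanded so the profile expands them at login.
write_hadoop_profile() {
  local file="$1"
  append_line_once "$file" 'export JAVA_HOME=/root/soft/jdk/'
  append_line_once "$file" 'export HADOOP_HOME=/root/soft/hadoop/'
  append_line_once "$file" 'export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin'
}
```

Usage: `write_hadoop_profile /etc/profile && source /etc/profile`. Running it a second time changes nothing.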

Set JAVA_HOME in $HADOOP_HOME/etc/hadoop/hadoop-env.sh:

```shell
export JAVA_HOME=/root/soft/jdk/
```

- Add the following variables at the top of start-dfs.sh and stop-dfs.sh (in $HADOOP_HOME/sbin). Without them, Hadoop 3 refuses to start the daemons as root:

```shell
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
```

- Add these environment variables to start-yarn.sh and stop-yarn.sh:

```shell
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
export HADOOP_SECURE_DN_USER=yarn
```
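Editing four scripts by hand is error-prone; the Dockerfile later in this post does it with `sed -i '2i...'`. The sketch below is an idempotent variant. It assumes GNU sed and that line 1 of each script is the shebang. `add_exports_after_shebang` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: insert "export NAME=VALUE" lines right after line 1 (the
# shebang) of SCRIPT, keeping argument order and skipping lines already present.
# Assumes GNU sed ("sed -i" and the one-line "1a text" form); values must not
# contain backslashes.
add_exports_after_shebang() {
  local script="$1"; shift
  local i line
  # Walk the arguments backwards: each "1a" lands directly below line 1,
  # so the last insert ends up first and the original order is preserved.
  for (( i = $#; i >= 1; i-- )); do
    line="export ${!i}"
    grep -qxF -- "$line" "$script" || sed -i "1a $line" "$script"
  done
}
```

Usage: `for s in start-dfs.sh stop-dfs.sh; do add_exports_after_shebang "$HADOOP_HOME/sbin/$s" HDFS_NAMENODE_USER=root HDFS_DATANODE_USER=root HDFS_SECONDARYNAMENODE_USER=root; done`. Repeat the call for the YARN scripts with their variables.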

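Set up passwordless SSH to localhost, which the start scripts use to launch the daemons. Add this line to /etc/ssh/ssh_config:

```
StrictHostKeyChecking no
```

Then generate a key, authorize it, and test the login:

```shell
ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
chmod 600 ~/.ssh/authorized_keys

ssh localhost
```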
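If `ssh localhost` still asks for a password, a frequent cause is file permissions that sshd's StrictModes check rejects. The sketch below tightens them and checks key-only login. Both function names are hypothetical. `BatchMode=yes` makes ssh fail instead of prompting for a password.

```shell
#!/usr/bin/env bash
# Hypothetical helper: tighten permissions on an .ssh directory so sshd accepts the key.
fix_ssh_permissions() {
  local dir="${1:-$HOME/.ssh}"
  chmod 700 "$dir"
  [ -f "$dir/authorized_keys" ] && chmod 600 "$dir/authorized_keys"
  [ -f "$dir/id_rsa" ] && chmod 600 "$dir/id_rsa"
  return 0
}

# Succeed only if "ssh HOST true" works without any password prompt.
can_ssh_without_password() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "${1:-localhost}" true >/dev/null 2>&1
}
```

Usage: `fix_ssh_permissions ~/.ssh && can_ssh_without_password localhost && echo "passwordless SSH OK"`.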

Configure $HADOOP_HOME/etc/hadoop/core-site.xml. `fs.defaultFS` is the current name of the deprecated `fs.default.name` key:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/root/soft/hadoop/data/tmp/</value>
  </property>
</configuration>
```
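When the file is generated instead of edited by hand, the same settings can be reproduced on another machine or in the Docker build. The sketch below writes a minimal single-node core-site.xml from a heredoc, using the key name `fs.defaultFS`. `write_core_site` is a hypothetical name, and its three arguments are assumptions of this sketch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal single-node core-site.xml.
# Arguments: output file, default filesystem URI, hadoop.tmp.dir.
write_core_site() {
  local out="$1" fs_uri="$2" tmp_dir="$3"
  cat > "$out" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>${fs_uri}</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>${tmp_dir}</value>
  </property>
</configuration>
EOF
}
```

Usage: `write_core_site "$HADOOP_HOME/etc/hadoop/core-site.xml" hdfs://localhost:9000 /root/soft/hadoop/data/tmp/`.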

Configure $HADOOP_HOME/etc/hadoop/hdfs-site.xml. Hadoop 3.x serves the NameNode web UI on port 9870 by default. This setting moves it back to 50070, the port Hadoop 2.x used:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>0.0.0.0:50070</value>
    <description>Address and port of the NameNode web UI.</description>
  </property>
</configuration>
```
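To check which value a *-site.xml file actually sets, the reliable tool once Hadoop is on the PATH is `hdfs getconf -confKey KEY`. For a quick look without Hadoop, the awk sketch below is rough. It assumes one `<name>` and one `<value>` tag per line, as in the files above, and it is not a real XML parser. `site_xml_value` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Rough sketch: print the <value> of property KEY from a Hadoop *-site.xml file.
# Assumes one <name> and one <value> tag per line; not a general XML parser.
site_xml_value() {
  local file="$1" key="$2"
  awk -v key="$key" '
    # Remember whether the most recent <name> line matched KEY
    /<name>/ { n = $0; gsub(/.*<name>|<\/name>.*/, "", n); found = (n == key) }
    # Print the first <value> that follows a matching <name>, then stop
    found && /<value>/ { v = $0; gsub(/.*<value>|<\/value>.*/, "", v); print v; exit }
  ' "$file"
}
```

Usage: `site_xml_value "$HADOOP_HOME/etc/hadoop/hdfs-site.xml" dfs.namenode.http-address`.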

- 验证是否安装完毕(注意,提交的目录当前用户需要有权限,因为本地部署不需要启动服务,它用的就是Linux操作系统,如果普通用户把文件直接提交到根的话肯定会报异常的哟!)

-

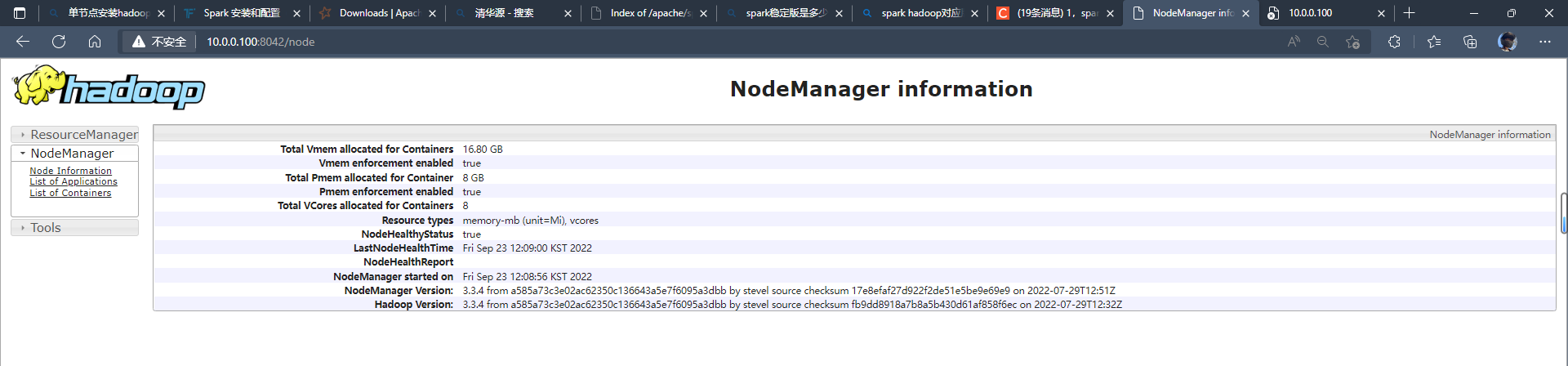

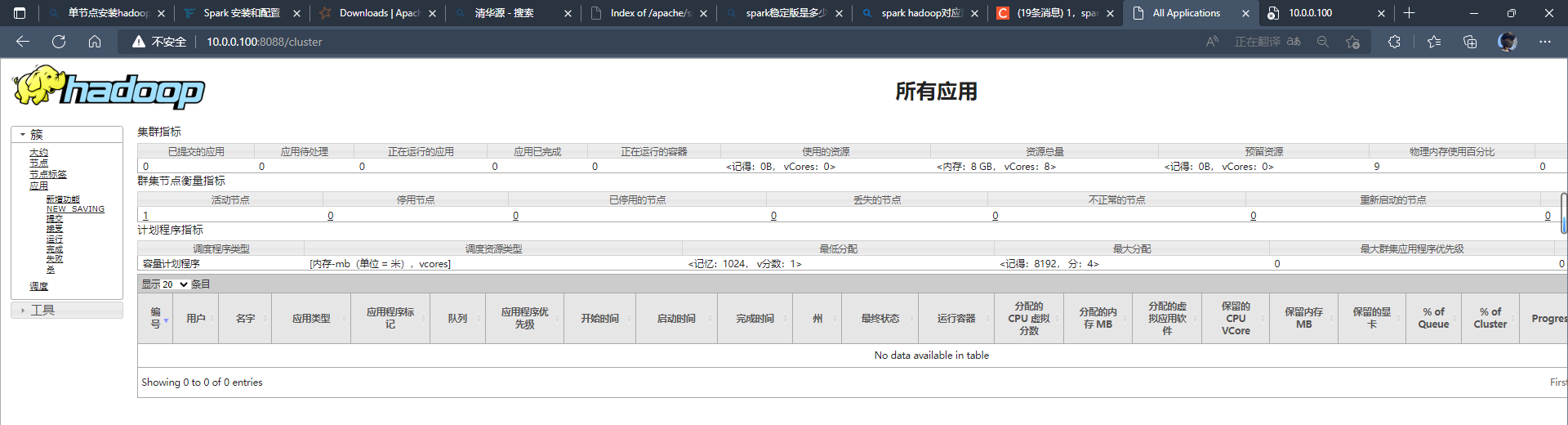

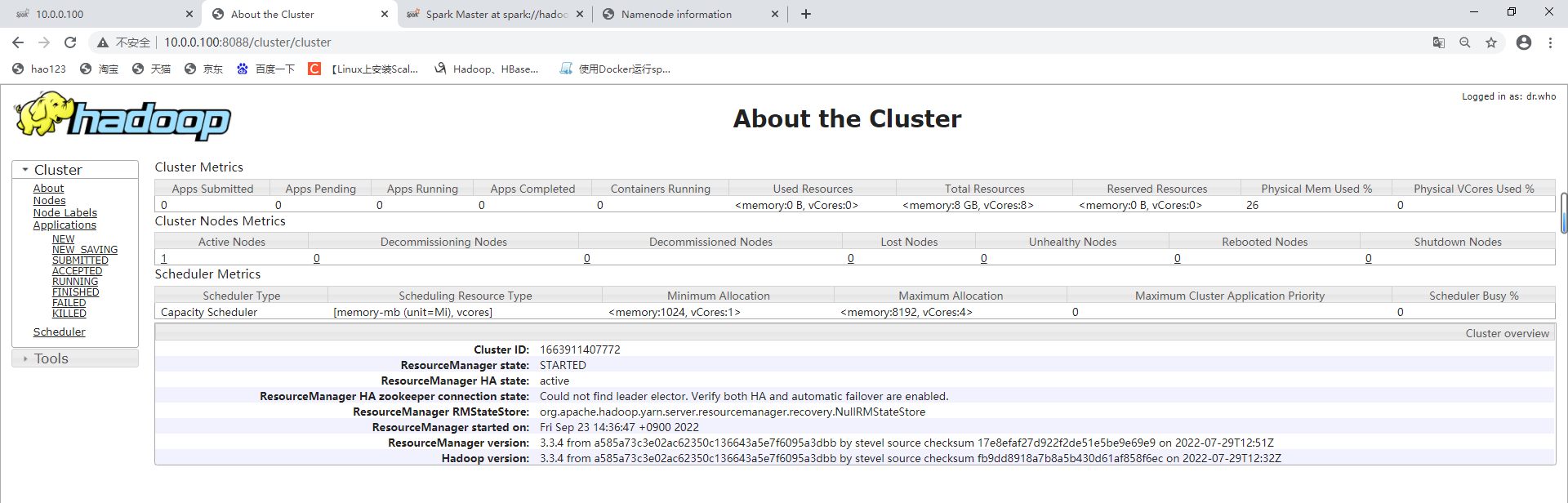

hadoop信息页

-

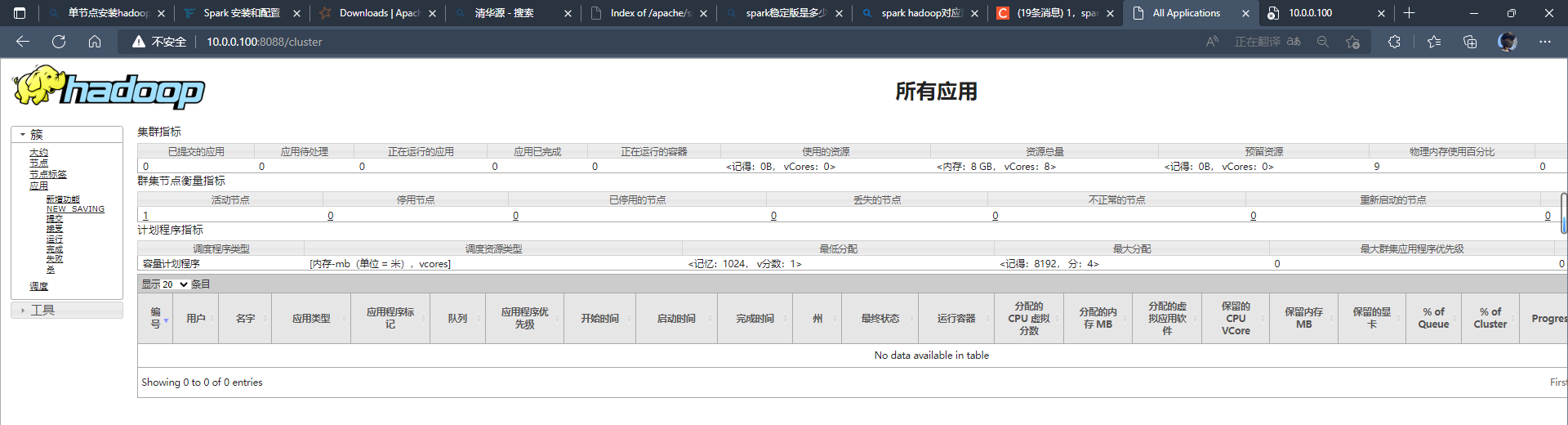

hadoop页面
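The web UIs shown above can also be checked from the shell. The sketch below uses bash's built-in `/dev/tcp` redirection, so it needs neither nc nor curl. `port_open` and `report_ports` are hypothetical names. The ports 50070 (NameNode UI, as configured in hdfs-site.xml) and 8088 (default YARN ResourceManager UI) are assumptions for this setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if HOST:PORT accepts a TCP connection within 3 s.
# Relies on bash's /dev/tcp redirection and coreutils' timeout.
port_open() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Print "PORT open" or "PORT closed" for each port given after the host.
report_ports() {
  local host="$1" p
  shift
  for p in "$@"; do
    if port_open "$host" "$p"; then echo "$p open"; else echo "$p closed"; fi
  done
}
```

Usage: `report_ports localhost 50070 8088`.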

Scala deployment

Extract scala-2.11.12.tgz into /root/soft/, rename or link the resulting directory to /root/soft/scala, and add it to the shell profile:

```shell
export SCALA_HOME=/root/soft/scala
export PATH=$SCALA_HOME/bin:$PATH
```

Spark deployment

Extract spark-3.2.2-bin-hadoop3.2.tgz into /root/soft/, rename or link the directory to /root/soft/spark, and add it to the shell profile:

```shell
export SPARK_HOME=/root/soft/spark
export PATH=$PATH:$SPARK_HOME/bin
```

In $SPARK_HOME/conf, copy spark-env.sh.template to spark-env.sh (Spark reads spark-env.sh, not the template) and add:

```shell
export JAVA_HOME=/root/soft/jdk/
PATH=$PATH:$JAVA_HOME/bin

HADOOP_HOME=/root/soft/hadoop/
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export SPARK_HOME=/root/soft/spark
export PATH=$PATH:$SPARK_HOME/bin
export SPARK_MASTER_PORT=7077

export SCALA_HOME=/root/soft/scala
export PATH=$SCALA_HOME/bin:$PATH
```

- Change the master web UI port in $SPARK_HOME/sbin/start-master.sh. This is optional and only needed if port 8080 is already in use on your host:

```shell
if [ "$SPARK_MASTER_WEBUI_PORT" = "" ]; then
  SPARK_MASTER_WEBUI_PORT=8081
fi
```
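The `if` block in start-master.sh applies its default only when SPARK_MASTER_WEBUI_PORT is empty. start-master.sh loads conf/spark-env.sh before that check, so setting the variable there should have the same effect without editing the script. The sketch below assumes GNU sed. `set_spark_env` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure CONF_DIR/spark-env.sh exists (copied from the
# template Spark ships) and contains "export NAME=VALUE" exactly once.
set_spark_env() {
  local conf_dir="$1" name="$2" value="$3" file
  file="$conf_dir/spark-env.sh"
  [ -f "$file" ] || cp "$conf_dir/spark-env.sh.template" "$file"
  # Drop any earlier export of the same variable, then append the new one
  sed -i "/^export ${name}=/d" "$file"
  printf 'export %s=%s\n' "$name" "$value" >> "$file"
}
```

Usage: `set_spark_env /root/soft/spark/conf SPARK_MASTER_WEBUI_PORT 8081`, then restart the master.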

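- Spark history server settings. These are optional; I haven't fully worked them out, so you can skip this step. In /root/soft/spark/conf, create spark-defaults.conf from spark-defaults.conf.template and set the values below. `spark://master:7077` and `hdfs://namenode:8021/directory` are the template's placeholder values. On this single-node setup they would need to point at your own master and HDFS address (e.g. hdfs://localhost:9000 from core-site.xml).

```
spark.master             spark://master:7077
spark.eventLog.enabled   true
spark.eventLog.dir       hdfs://namenode:8021/directory
spark.history.ui.port    18080
```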

| |

| |

| |

| ./soft/spark/bin/spark-submit run-example --master spark://10.0.0.100:7077 sql.SparkSQLExample |

| |

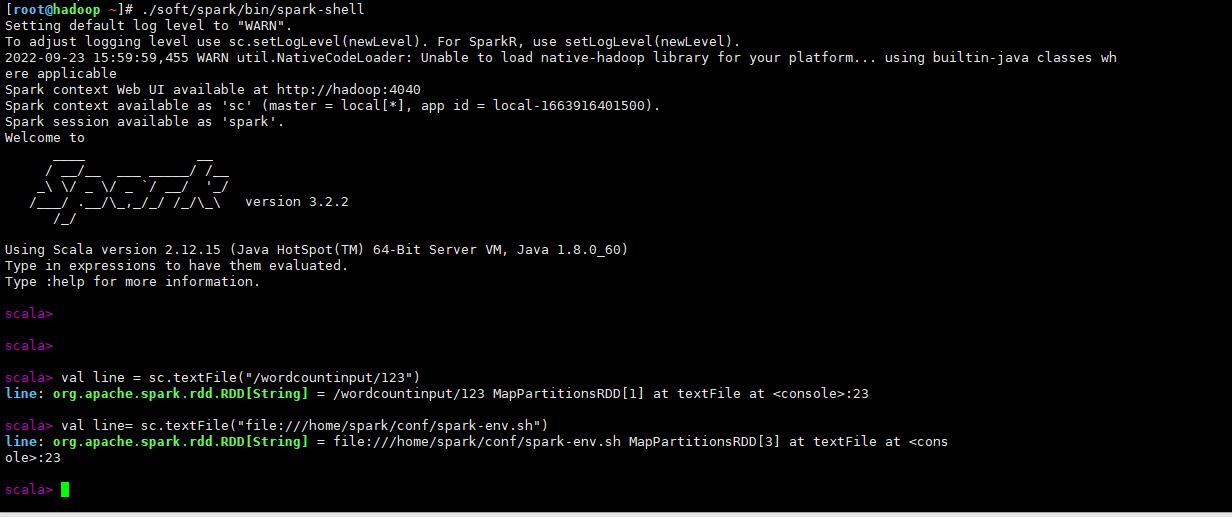

| |

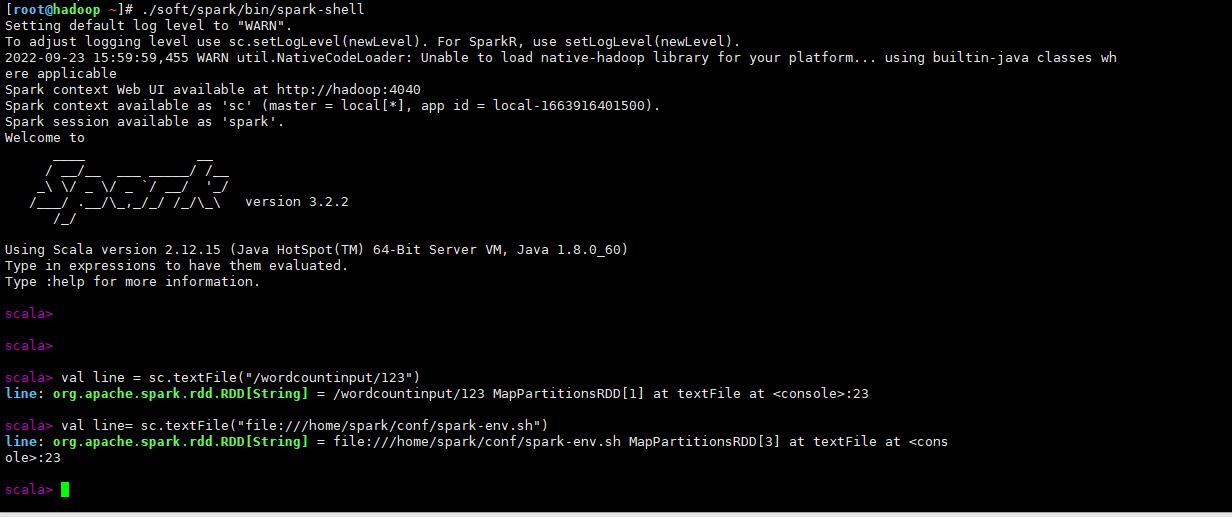

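Reading files from spark-shell. A path without a scheme resolves against fs.defaultFS (HDFS here), so use `file://` for local files. `textFile` is lazy, so problems such as a missing file only show up when an action runs:

```
[root@hadoop ~]# spark-shell
scala> val line = sc.textFile("/wordcountinput/123")
line: org.apache.spark.rdd.RDD[String] = /wordcountinput/123 MapPartitionsRDD[1] at textFile at <console>:23

scala> val line= sc.textFile("file:///home/spark/conf/spark-env.sh")
line: org.apache.spark.rdd.RDD[String] = file:///home/spark/conf/spark-env.sh MapPartitionsRDD[3] at textFile at <console>:23

scala> :quit
```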

Full startup test

Once everything above is installed, run the following steps in this order:

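- Format HDFS. Only the first run should need this; formatting again wipes the existing NameNode metadata.

```shell
./soft/hadoop/bin/hdfs namenode -format
./soft/hadoop/sbin/start-all.sh
./soft/spark/sbin/start-all.sh
./soft/spark/bin/spark-shell
```

In spark-shell, read a file from HDFS. This assumes README.txt was uploaded to /input beforehand, e.g. with `hdfs dfs -put`:

```scala
val textFile = spark.read.textFile("hdfs://localhost:9000/input/README.txt")
```

Run the bundled SparkPi example. For Spark 3.2.2 the examples jar ships under `$SPARK_HOME/examples/jars/`:

```shell
spark-submit --class org.apache.spark.examples.SparkPi --master "local[*]" $SPARK_HOME/examples/jars/spark-examples_2.12-3.2.2.jar 100
```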
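Formatting is meant to run only once, so a guard can skip it when NameNode metadata already exists. Hadoop's own `hdfs namenode -format -nonInteractive` also refuses to reformat an existing name directory. The sketch below makes the check explicit. It assumes the NameNode directory is the default `${hadoop.tmp.dir}/dfs/name` (here /root/soft/hadoop/data/tmp/dfs/name) and that a formatted directory contains `current/VERSION`. `namenode_formatted` and `format_once` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if NAME_DIR already holds formatted NameNode
# metadata (a formatted directory contains current/VERSION).
namenode_formatted() {
  [ -f "$1/current/VERSION" ]
}

# Run the given format command only when NAME_DIR is not formatted yet.
# The command is passed in, so the logic can be tried without Hadoop installed.
format_once() {
  local name_dir="$1"
  shift
  if namenode_formatted "$name_dir"; then
    echo "already formatted: $name_dir"
  else
    "$@"
  fi
}
```

Usage: `format_once /root/soft/hadoop/data/tmp/dfs/name ./soft/hadoop/bin/hdfs namenode -format`.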

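- Problem: after initialization (formatting), there is no NameNode or DataNode.

- Solved; the fix is already included in the configuration above.

A common cause is stale data left by an earlier format. Delete the old Hadoop data under /tmp/ (hadoop.tmp.dir defaults to /tmp/hadoop-${user.name}), then point hadoop.tmp.dir at a dedicated directory.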

`$HADOOP_HOME/etc/hadoop/core-site.xml`:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/root/soft/hadoop/hadoop_tmp</value>
    <description>A base for other temporary directories.</description>
  </property>
</configuration>
```

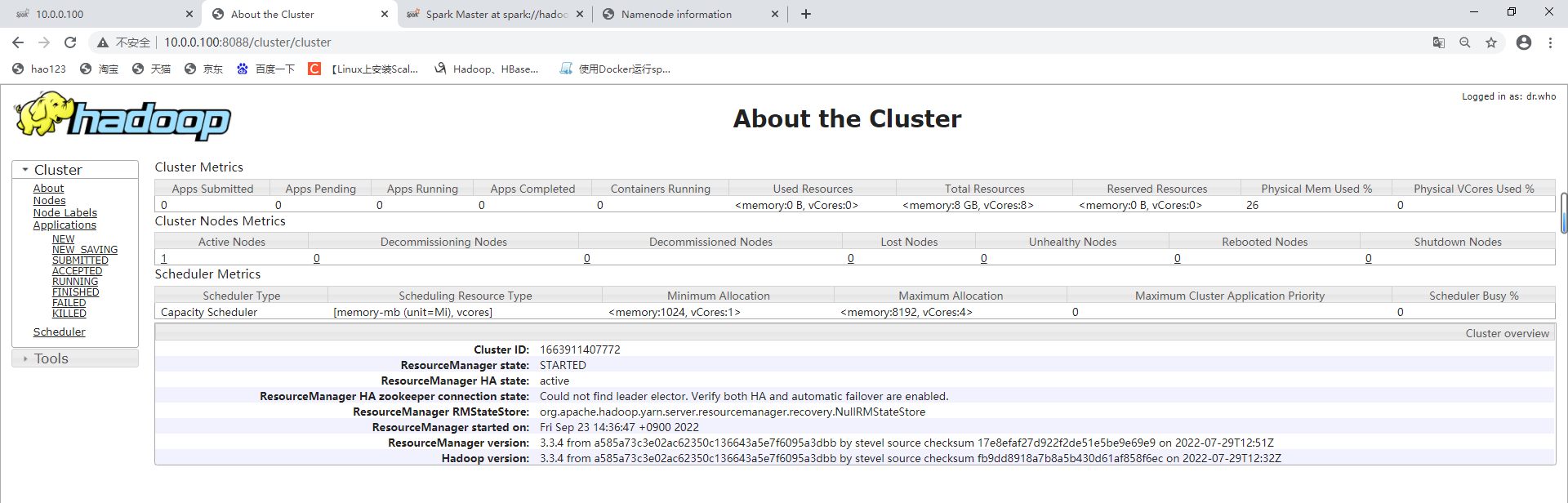

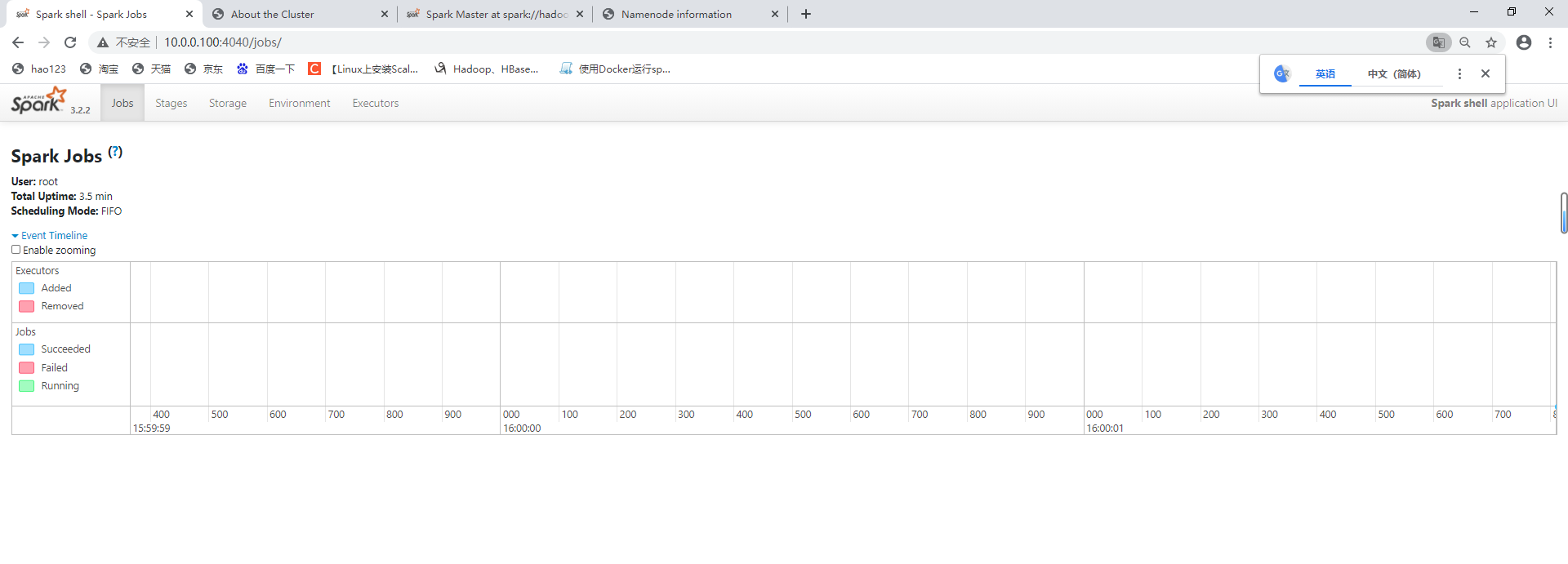

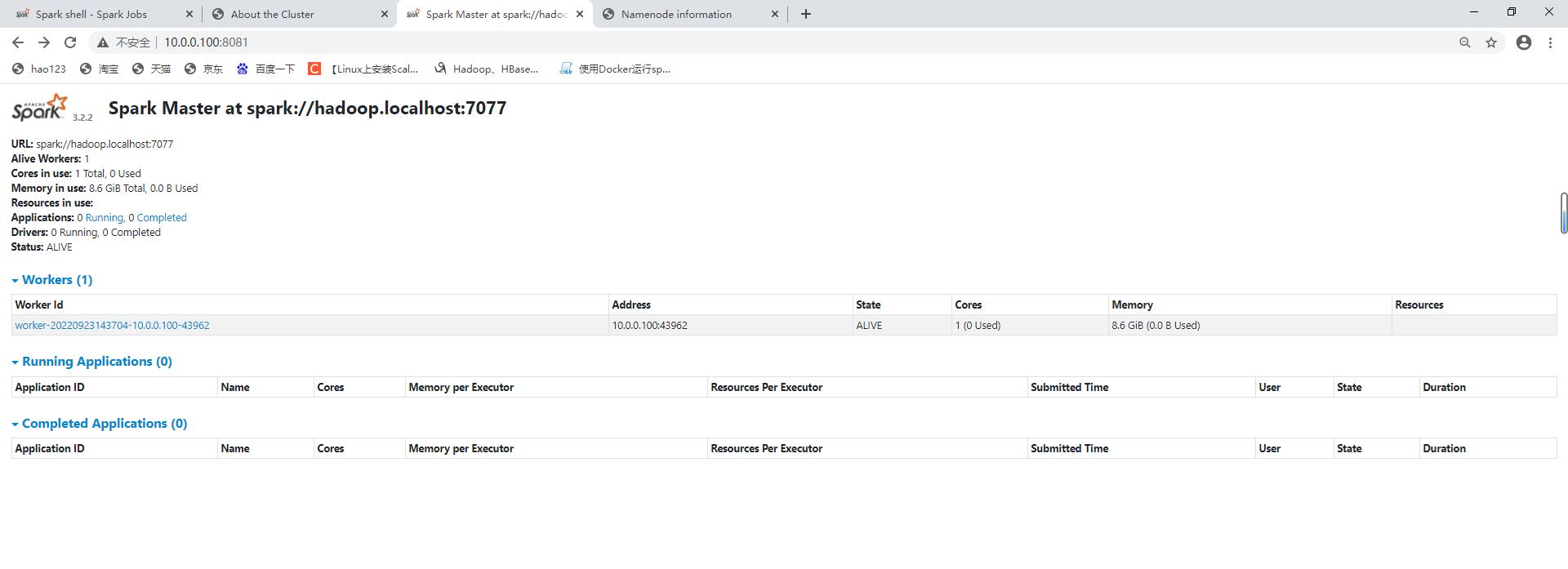

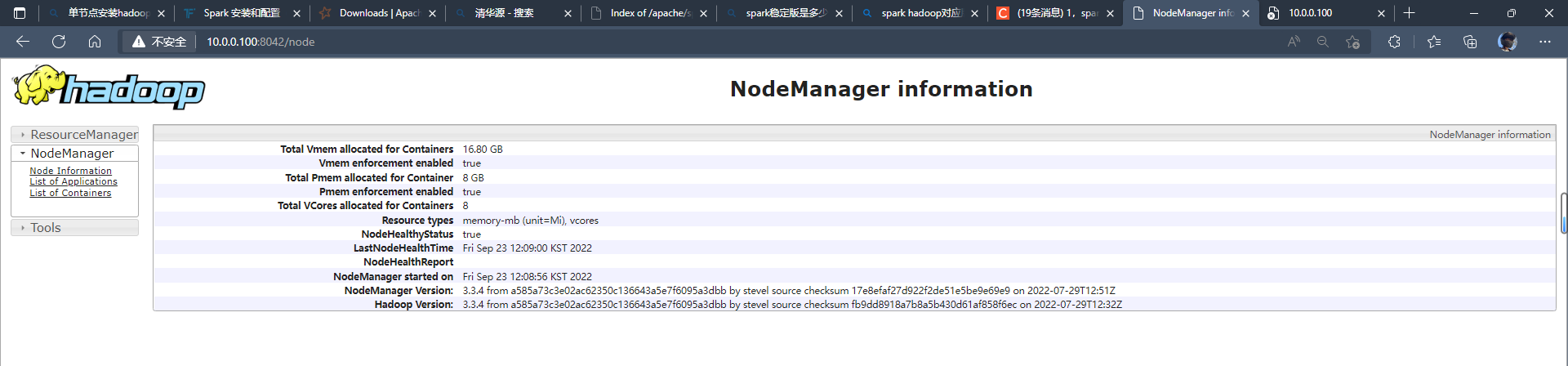

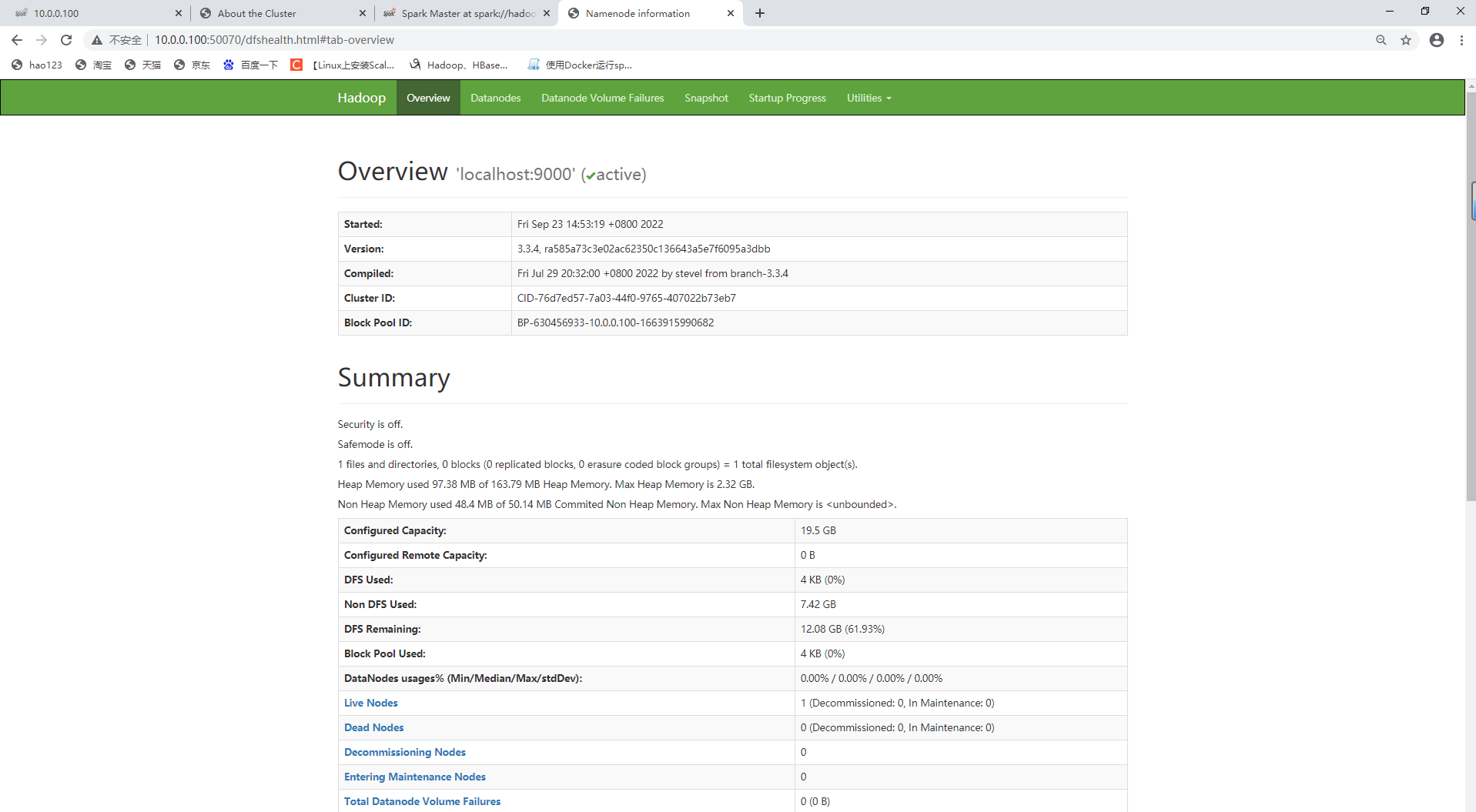

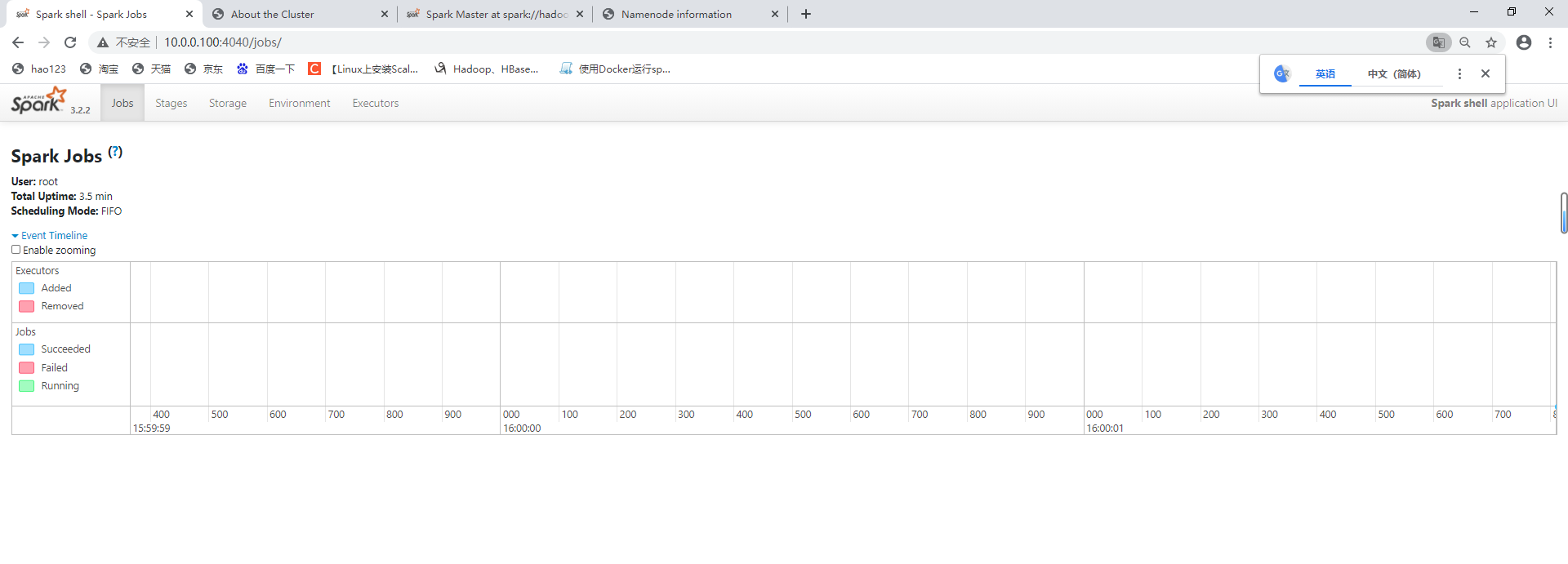

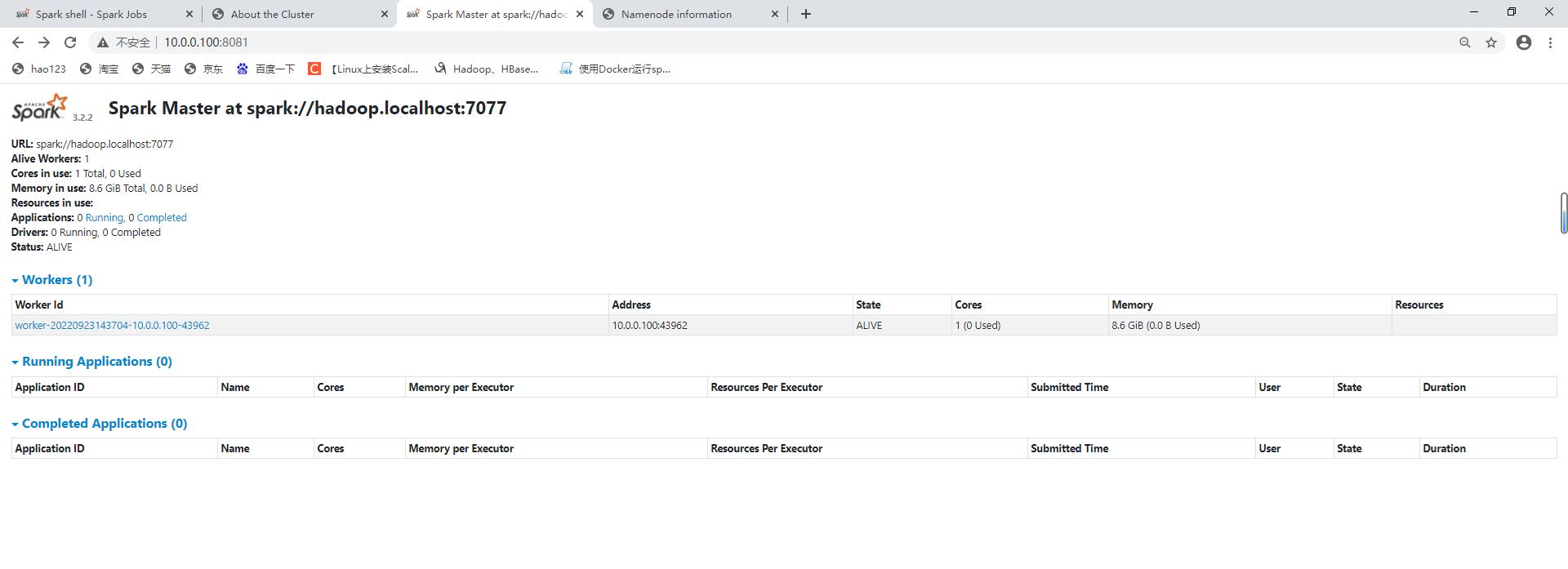
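A DataNode often goes missing after the NameNode is formatted again because the DataNode's stored clusterID no longer matches the NameNode's. The sketch below compares the `clusterID=` lines of the two `current/VERSION` files. It assumes the default layout, with `dfs/name` and `dfs/data` under hadoop.tmp.dir. `read_cluster_id` and `cluster_ids_match` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the clusterID recorded in DIR/current/VERSION.
read_cluster_id() {
  sed -n 's/^clusterID=//p' "$1/current/VERSION" 2>/dev/null
}

# Succeed if the NameNode dir ($1) and DataNode dir ($2) record the same clusterID.
cluster_ids_match() {
  local nn dn
  nn=$(read_cluster_id "$1")
  dn=$(read_cluster_id "$2")
  [ -n "$nn" ] && [ "$nn" = "$dn" ]
}
```

Usage with the hadoop.tmp.dir from the XML above: `cluster_ids_match /root/soft/hadoop/hadoop_tmp/dfs/name /root/soft/hadoop/hadoop_tmp/dfs/data || echo "clusterID mismatch"`.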

最终所有效率页面图

-

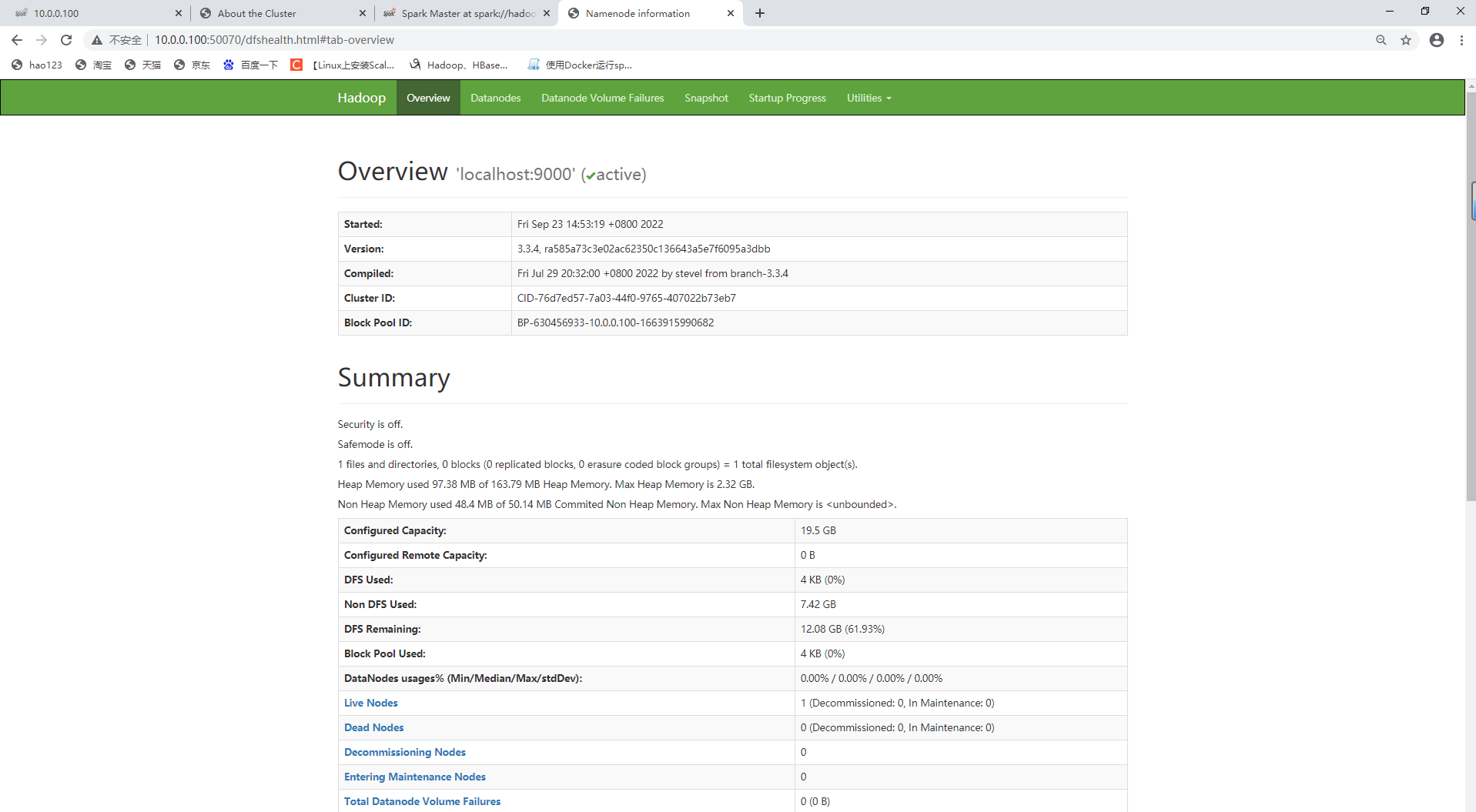

hdfs:50070

-

spark-shell:

Containerizing Hadoop and Spark

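Reference project: https://github.com/bambrow/docker-hadoop-workbench

```shell
# Build the image from the directory containing the Dockerfile
docker build -t hadoop-spark .

# Interactive trial run
docker run -it --privileged=true hadoop-spark

# Run detached, publishing the web UI ports, and restart automatically
docker run -d --name hadoop-spark -p 8088:8088 -p 8080:8080 -p 50070:50070 -p 4040:4040 --restart=always hadoop-spark:latest
```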
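The Dockerfile defines a HEALTHCHECK, so Docker reports a health status that scripts can wait on before connecting. The sketch below is a minimal polling helper. `wait_until` is a hypothetical name, and the one-second interval is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Hypothetical helper: re-run COMMAND once per second until it succeeds or
# LIMIT seconds have passed. Returns 0 on success, 1 on timeout.
wait_until() {
  local limit="$1" waited=0
  shift
  until "$@"; do
    if [ "$waited" -ge "$limit" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

Usage: `wait_until 120 sh -c '[ "$(docker inspect -f "{{.State.Health.Status}}" hadoop-spark)" = healthy ]'`. This returns once the container reports `healthy`, or fails after about two minutes.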

Dockerfile

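If your Docker version is old, comment out the HEALTHCHECK instruction.

Docker 17.05 or later is recommended. Older versions do not support some of its options (`--start-period` was added in 17.05).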
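A small version comparison helps decide whether to keep the HEALTHCHECK options. The sketch below uses GNU `sort -V`. `version_ge` is a hypothetical name, and the 17.05 threshold assumes that `--start-period` needs Docker 17.05 or later.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 >= version $2 (dotted numbers),
# using GNU sort's version ordering (-V): the smaller one sorts first.
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

Usage: `version_ge "$(docker version --format '{{.Server.Version}}')" 17.05 || echo "comment out HEALTHCHECK"`.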

```dockerfile
FROM centos:7.9.2009
LABEL author=QuYi hadoop=3.3.4 jdk=1.8 scala=2.11.12 spark=3.2.2

WORKDIR /root/soft/

# yum repository definitions
COPY CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo
COPY epel.repo /etc/yum.repos.d/epel.repo

# ADD extracts local tar archives automatically
ADD hadoop-3.3.4.tar.gz /root/soft/
ADD jdk-8u60-linux-x64.tar.gz /root/soft/
ADD scala-2.11.12.tgz /root/soft/
ADD spark-3.2.2-bin-hadoop3.2.tgz /root/soft/

# Drop version suffixes from the directories and link the main binaries into /usr/bin
# (Spark has no bin/spark, so spark-shell is linked instead)
RUN mv /root/soft/hadoop-3.3.4 /root/soft/hadoop \
    && mv /root/soft/jdk1.8.0_60 /root/soft/jdk \
    && mv /root/soft/scala-2.11.12 /root/soft/scala \
    && mv /root/soft/spark-3.2.2-bin-hadoop3.2 /root/soft/spark \
    && ln -s /root/soft/hadoop/bin/hadoop /usr/bin/ \
    && ln -s /root/soft/jdk/bin/java /usr/bin/ \
    && ln -s /root/soft/scala/bin/scala /usr/bin/ \
    && ln -s /root/soft/spark/bin/spark-shell /usr/bin/

ENV JAVA_HOME="/root/soft/jdk/"
ENV PATH="$PATH:$JAVA_HOME/bin"
ENV HADOOP_HOME="/root/soft/hadoop/"
ENV PATH="$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"
ENV JAVA_LIBRARY_PATH="/root/soft/hadoop/lib/native"

ENV SPARK_HOME="/root/soft/spark"
ENV PATH="$PATH:$SPARK_HOME/bin"

ENV SCALA_HOME="/root/soft/scala"
ENV PATH="$SCALA_HOME/bin:$PATH"

# Let the HDFS/YARN daemons run as root (same variables as in the manual setup)
RUN sed -i '2iexport HDFS_NAMENODE_USER=root' /root/soft/hadoop/sbin/start-dfs.sh \
    && sed -i '3iexport HDFS_DATANODE_USER=root' /root/soft/hadoop/sbin/start-dfs.sh \
    && sed -i '4iexport HDFS_SECONDARYNAMENODE_USER=root' /root/soft/hadoop/sbin/start-dfs.sh \
    && sed -i '5iexport YARN_RESOURCEMANAGER_USER=root' /root/soft/hadoop/sbin/start-dfs.sh \
    && sed -i '6iexport YARN_NODEMANAGER_USER=root' /root/soft/hadoop/sbin/start-dfs.sh

RUN sed -i '2iexport HDFS_NAMENODE_USER=root' /root/soft/hadoop/sbin/stop-dfs.sh \
    && sed -i '3iexport HDFS_DATANODE_USER=root' /root/soft/hadoop/sbin/stop-dfs.sh \
    && sed -i '4iexport HDFS_SECONDARYNAMENODE_USER=root' /root/soft/hadoop/sbin/stop-dfs.sh \
    && sed -i '5iexport YARN_RESOURCEMANAGER_USER=root' /root/soft/hadoop/sbin/stop-dfs.sh \
    && sed -i '6iexport YARN_NODEMANAGER_USER=root' /root/soft/hadoop/sbin/stop-dfs.sh

# JAVA_HOME for the Hadoop daemons
RUN sed -i '2iexport JAVA_HOME=/root/soft/jdk/' /root/soft/hadoop/etc/hadoop/hadoop-env.sh

RUN sed -i '2iexport HDFS_NAMENODE_USER=root' /root/soft/hadoop/sbin/start-yarn.sh \
    && sed -i '3iexport HDFS_DATANODE_USER=root' /root/soft/hadoop/sbin/start-yarn.sh \
    && sed -i '4iexport HDFS_SECONDARYNAMENODE_USER=root' /root/soft/hadoop/sbin/start-yarn.sh \
    && sed -i '5iexport YARN_RESOURCEMANAGER_USER=root' /root/soft/hadoop/sbin/start-yarn.sh \
    && sed -i '6iexport YARN_NODEMANAGER_USER=root' /root/soft/hadoop/sbin/start-yarn.sh \
    && sed -i '7iexport HADOOP_SECURE_DN_USER=yarn' /root/soft/hadoop/sbin/start-yarn.sh

RUN sed -i '2iexport HDFS_NAMENODE_USER=root' /root/soft/hadoop/sbin/stop-yarn.sh \
    && sed -i '3iexport HDFS_DATANODE_USER=root' /root/soft/hadoop/sbin/stop-yarn.sh \
    && sed -i '4iexport HDFS_SECONDARYNAMENODE_USER=root' /root/soft/hadoop/sbin/stop-yarn.sh \
    && sed -i '5iexport YARN_RESOURCEMANAGER_USER=root' /root/soft/hadoop/sbin/stop-yarn.sh \
    && sed -i '6iexport YARN_NODEMANAGER_USER=root' /root/soft/hadoop/sbin/stop-yarn.sh \
    && sed -i '7iexport HADOOP_SECURE_DN_USER=yarn' /root/soft/hadoop/sbin/stop-yarn.sh

# Spark environment. Spark only reads conf/spark-env.sh, so the edited template is copied at the end.
# Note: the 8080 default lives in sbin/start-master.sh, so the WEBUI_PORT substitution
# may not match anything in the template.
RUN sed -i '2iexport JAVA_HOME=/root/soft/jdk/' /root/soft/spark/conf/spark-env.sh.template \
    && sed -i '3iPATH=$PATH:$JAVA_HOME/bin' /root/soft/spark/conf/spark-env.sh.template \
    && sed -i '4iHADOOP_HOME=/root/soft/hadoop/' /root/soft/spark/conf/spark-env.sh.template \
    && sed -i '5iexport HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop' /root/soft/spark/conf/spark-env.sh.template \
    && sed -i '6iPATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin' /root/soft/spark/conf/spark-env.sh.template \
    && sed -i '7iexport SPARK_HOME=/root/soft/spark' /root/soft/spark/conf/spark-env.sh.template \
    && sed -i '8iexport PATH=$PATH:$SPARK_HOME/bin' /root/soft/spark/conf/spark-env.sh.template \
    && sed -i '9iexport SPARK_MASTER_PORT=7077' /root/soft/spark/conf/spark-env.sh.template \
    && sed -i '10iexport SCALA_HOME=/root/soft/scala' /root/soft/spark/conf/spark-env.sh.template \
    && sed -i '11iexport PATH=$SCALA_HOME/bin:$PATH' /root/soft/spark/conf/spark-env.sh.template \
    && sed -i 's#SPARK_MASTER_WEBUI_PORT=8080#SPARK_MASTER_WEBUI_PORT=8081#g' /root/soft/spark/conf/spark-env.sh.template \
    && cp /root/soft/spark/conf/spark-env.sh.template /root/soft/spark/conf/spark-env.sh

# SSH server and passwordless root login to localhost (used by start-dfs.sh/start-yarn.sh)
RUN yum install -y openssh openssh-clients openssh-server iproute initscripts nc \
    && /usr/sbin/sshd-keygen -A \
    && /usr/sbin/sshd \
    && /usr/bin/ssh-keygen -t rsa -P '' -f /root/.ssh/id_rsa \
    && cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys \
    && ssh-copy-id -i /root/.ssh/id_rsa.pub -o StrictHostKeyChecking=no root@localhost \
    && chmod 700 /root/.ssh \
    && chmod 600 /root/.ssh/authorized_keys \
    && chmod og-wx /root/.ssh/authorized_keys \
    && sed -i '4iStrictHostKeyChecking no' /etc/ssh/ssh_config \
    && sed -i '3iPort 22' /etc/ssh/sshd_config \
    && /usr/sbin/sshd \
    && mkdir -p /root/soft/hadoop/logs \
    && echo `hostname -I` 'localhost' >> /etc/hosts

# Hadoop configuration files
COPY core-site.xml /root/soft/hadoop/etc/hadoop/
COPY hdfs-site.xml /root/soft/hadoop/etc/hadoop/

COPY entrypoint.sh /
RUN chmod 777 /entrypoint.sh

EXPOSE 8040 9864 9000 8042 9866 9867 9868 33389 50070 8088 8030 36638 8031 8032 8033 7077 41904 8081 8082 4040

HEALTHCHECK --interval=5s \
    --timeout=3s \
    --start-period=30s \
    --retries=3 \
    CMD echo test | nc localhost 8088 || exit 1

CMD ["/entrypoint.sh"]
```

Directory layout

```
[root@oss-server Container-docker]# ls -l
total 1178676
-rw-r--r-- 1 root root 2523 Aug 4 15:04 CentOS-Base.repo
-rw-r--r-- 1 root root 982 Sep 25 16:01 core-site.xml
-rw-r--r-- 1 root root 6285 Sep 26 16:29 Dockerfile
-rw-r--r-- 1 root root 945 Sep 26 16:55 docker-hadoopspark.sh
-rwxr-xr-x 1 root root 648 Sep 26 15:44 entrypoint.sh
-rw-r--r-- 1 root root 664 Aug 4 15:04 epel.repo
-rw-r--r-- 1 root root 695457782 Sep 26 10:54 hadoop-3.3.4.tar.gz
-rw-r--r-- 1 root root 952 Sep 25 15:23 hdfs-site.xml
-rw-r--r-- 1 root root 181238643 Sep 26 10:55 jdk-8u60-linux-x64.tar.gz
-rw-r--r-- 1 root root 29114457 Sep 26 10:55 scala-2.11.12.tgz
-rw-r--r-- 1 root root 301112604 Sep 26 10:57 spark-3.2.2-bin-hadoop3.2.tgz
```
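A missing archive or config file otherwise only shows up partway through `docker build`. The sketch below checks the build context first. Its file list is taken from the COPY/ADD lines of the Dockerfile and the layout above. `missing_build_files` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report the COPY/ADD sources the Dockerfile expects that are
# missing from DIR. Prints "missing: NAME" per file; returns 1 if any are missing.
missing_build_files() {
  local dir="$1" f rc=0
  for f in CentOS-Base.repo epel.repo core-site.xml hdfs-site.xml entrypoint.sh \
           hadoop-3.3.4.tar.gz jdk-8u60-linux-x64.tar.gz scala-2.11.12.tgz \
           spark-3.2.2-bin-hadoop3.2.tgz; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $f"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `missing_build_files . && docker build -t hadoop-spark .`.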

A script recording the commands

```shell
# Start the container in the background
docker run -d --name HadoopSpark -p 8088:8088 -p 8080:8080 -p 50070:50070 -p 4040:4040 --restart=always hadoop-spark:latest

# Open a shell in the container and start spark-shell
docker exec -it HadoopSpark bash
/root/soft/spark/bin/spark-shell

# Open a shell in the container, format HDFS (first run only) and start Hadoop
docker exec -it HadoopSpark bash
/root/soft/hadoop/bin/hdfs namenode -format
/root/soft/hadoop/sbin/start-all.sh
```