III. Running Celery as a Background Service on Linux

0. Technologies used

Celery + Django + RabbitMQ + Shell

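1. Background

This article shows how to run Celery as a service on Linux using startup scripts. Windows is not covered; if you are interested, see the official documentation.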

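1.1. Celery has two kinds of processes to start:

1. worker: consumes tasks from the message queue and executes them

2. beat: the scheduler for periodic tasks; it sends each task to the queue when it is due

The startup scripts are therefore written separately for these two kinds.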

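1.2. The official documentation describes three ways to register Celery as a service on Linux:

1. Shell (init.d) scripts (the most portable, but also the hardest; requires shell scripting knowledge)

2. systemd (systemctl), the mainstream approach on current Linux distributions (built into the OS; nothing extra to install)

3. supervisor, a third-party process manager written in Python (advantage: if a process dies, it is restarted automatically without manual intervention)

This article covers all three. For anything not explained here, see the official documentation: https://docs.celeryproject.org/en/stable/userguide/daemonizing.html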

2. Managing the Celery worker and beat services with shell scripts

Note: these scripts were not written by me; they come from the official repository: https://github.com/celery/celery/tree/master/extra/generic-init.d

2.0. Usage

Script name: celeryd

Usage: /etc/init.d/celeryd {start|stop|restart|status}

Configuration file: /etc/default/celeryd

2.1. Celery worker startup script

2.1.1. Write the worker startup script

vi /etc/init.d/celeryd

    #!/bin/sh -e
    # ============================================
    #  celeryd - Starts the Celery worker daemon.
    # ============================================
    #
    # :Usage: /etc/init.d/celeryd {start|stop|force-reload|restart|try-restart|status}
    # :Configuration file: /etc/default/celeryd (or /usr/local/etc/celeryd on BSD)
    #
    # See http://docs.celeryproject.org/en/latest/userguide/daemonizing.html#generic-init-scripts

    ### BEGIN INIT INFO
    # Provides:          celeryd
    # Required-Start:    $network $local_fs $remote_fs
    # Required-Stop:     $network $local_fs $remote_fs
    # Default-Start:     2 3 4 5
    # Default-Stop:      0 1 6
    # Short-Description: celery task worker daemon
    ### END INIT INFO
    #
    #
    # To implement separate init-scripts, copy this script and give it a different
    # name. That is, if your new application named "little-worker" needs an init,
    # you should use:
    #
    #   cp /etc/init.d/celeryd /etc/init.d/little-worker
    #
    # You can then configure this by manipulating /etc/default/little-worker.
    #
    VERSION=10.1
    echo "celery init v${VERSION}."
    if [ $(id -u) -ne 0 ]; then
        echo "Error: This program can only be used by the root user."
        echo "       Unprivileged users must use the 'celery multi' utility, "
        echo "       or 'celery worker --detach'."
        exit 1
    fi

    origin_is_runlevel_dir () {
        set +e
        dirname $0 | grep -q "/etc/rc.\.d"
        echo $?
    }

    # Can be a runlevel symlink (e.g., S02celeryd)
    if [ $(origin_is_runlevel_dir) -eq 0 ]; then
        SCRIPT_FILE=$(readlink "$0")
    else
        SCRIPT_FILE="$0"
    fi
    SCRIPT_NAME="$(basename "$SCRIPT_FILE")"

    DEFAULT_USER="celery"
    DEFAULT_PID_FILE="/var/run/celery/%n.pid"
    DEFAULT_LOG_FILE="/var/log/celery/%n%I.log"
    DEFAULT_LOG_LEVEL="INFO"
    DEFAULT_NODES="celery"
    DEFAULT_CELERYD="-m celery worker --detach"

    if [ -d "/etc/default" ]; then
        CELERY_CONFIG_DIR="/etc/default"
    else
        CELERY_CONFIG_DIR="/usr/local/etc"
    fi

    # Location of the configuration file
    CELERY_DEFAULTS=${CELERY_DEFAULTS:-"$CELERY_CONFIG_DIR/${SCRIPT_NAME}"}

    # Make sure executable configuration script is owned by root
    _config_sanity() {
        local path="$1"
        local owner=$(ls -ld "$path" | awk '{print $3}')
        local iwgrp=$(ls -ld "$path" | cut -b 6)
        local iwoth=$(ls -ld "$path" | cut -b 9)

        if [ "$(id -u $owner)" != "0" ]; then
            echo "Error: Config script '$path' must be owned by root!"
            echo
            echo "Resolution:"
            echo "Review the file carefully, and make sure it hasn't been "
            echo "modified with malicious intent.  When sure the "
            echo "script is safe to execute with superuser privileges "
            echo "you can change ownership of the script:"
            echo "    $ sudo chown root '$path'"
            exit 1
        fi

        if [ "$iwoth" != "-" ]; then  # S_IWOTH
            echo "Error: Config script '$path' cannot be writable by others!"
            echo
            echo "Resolution:"
            echo "Review the file carefully, and make sure it hasn't been "
            echo "modified with malicious intent.  When sure the "
            echo "script is safe to execute with superuser privileges "
            echo "you can change the scripts permissions:"
            echo "    $ sudo chmod 640 '$path'"
            exit 1
        fi
        if [ "$iwgrp" != "-" ]; then  # S_IWGRP
            echo "Error: Config script '$path' cannot be writable by group!"
            echo
            echo "Resolution:"
            echo "Review the file carefully, and make sure it hasn't been "
            echo "modified with malicious intent.  When sure the "
            echo "script is safe to execute with superuser privileges "
            echo "you can change the scripts permissions:"
            echo "    $ sudo chmod 640 '$path'"
            exit 1
        fi
    }

    if [ -f "$CELERY_DEFAULTS" ]; then
        _config_sanity "$CELERY_DEFAULTS"
        echo "Using config script: $CELERY_DEFAULTS"
        # Load the settings from the configuration file into this script
        . "$CELERY_DEFAULTS"
    fi

    # Sets --app argument for CELERY_BIN
    CELERY_APP_ARG=""
    if [ ! -z "$CELERY_APP" ]; then
        CELERY_APP_ARG="--app=$CELERY_APP"
    fi

    # Options to su
    # can be used to enable login shell (CELERYD_SU_ARGS="-l"),
    # or even to use start-stop-daemon instead of su.
    CELERYD_SU=${CELERY_SU:-"su"}
    CELERYD_SU_ARGS=${CELERYD_SU_ARGS:-""}

    CELERYD_USER=${CELERYD_USER:-$DEFAULT_USER}

    # Set CELERY_CREATE_DIRS to always create log/pid dirs.
    CELERY_CREATE_DIRS=${CELERY_CREATE_DIRS:-0}
    CELERY_CREATE_RUNDIR=$CELERY_CREATE_DIRS
    CELERY_CREATE_LOGDIR=$CELERY_CREATE_DIRS
    if [ -z "$CELERYD_PID_FILE" ]; then
        CELERYD_PID_FILE="$DEFAULT_PID_FILE"
        CELERY_CREATE_RUNDIR=1
    fi
    if [ -z "$CELERYD_LOG_FILE" ]; then
        CELERYD_LOG_FILE="$DEFAULT_LOG_FILE"
        CELERY_CREATE_LOGDIR=1
    fi

    CELERYD_LOG_LEVEL=${CELERYD_LOG_LEVEL:-${CELERYD_LOGLEVEL:-$DEFAULT_LOG_LEVEL}}
    CELERY_BIN=${CELERY_BIN:-"celery"}
    CELERYD_MULTI=${CELERYD_MULTI:-"$CELERY_BIN multi"}
    CELERYD_NODES=${CELERYD_NODES:-$DEFAULT_NODES}

    export CELERY_LOADER

    if [ -n "$2" ]; then
        CELERYD_OPTS="$CELERYD_OPTS $2"
    fi

    CELERYD_LOG_DIR=`dirname $CELERYD_LOG_FILE`
    CELERYD_PID_DIR=`dirname $CELERYD_PID_FILE`

    # Extra start-stop-daemon options, like user/group.
    if [ -n "$CELERYD_CHDIR" ]; then
        DAEMON_OPTS="$DAEMON_OPTS --workdir=$CELERYD_CHDIR"
    fi

    check_dev_null() {
        if [ ! -c /dev/null ]; then
            echo "/dev/null is not a character device!"
            exit 75  # EX_TEMPFAIL
        fi
    }

    maybe_die() {
        if [ $? -ne 0 ]; then
            echo "Exiting: $* (errno $?)"
            exit 77  # EX_NOPERM
        fi
    }

    create_default_dir() {
        if [ ! -d "$1" ]; then
            echo "- Creating default directory: '$1'"
            mkdir -p "$1"
            maybe_die "Couldn't create directory $1"
            echo "- Changing permissions of '$1' to 02755"
            chmod 02755 "$1"
            maybe_die "Couldn't change permissions for $1"
            if [ -n "$CELERYD_USER" ]; then
                echo "- Changing owner of '$1' to '$CELERYD_USER'"
                chown "$CELERYD_USER" "$1"
                maybe_die "Couldn't change owner of $1"
            fi
            if [ -n "$CELERYD_GROUP" ]; then
                echo "- Changing group of '$1' to '$CELERYD_GROUP'"
                chgrp "$CELERYD_GROUP" "$1"
                maybe_die "Couldn't change group of $1"
            fi
        fi
    }

    check_paths() {
        if [ $CELERY_CREATE_LOGDIR -eq 1 ]; then
            create_default_dir "$CELERYD_LOG_DIR"
        fi
        if [ $CELERY_CREATE_RUNDIR -eq 1 ]; then
            create_default_dir "$CELERYD_PID_DIR"
        fi
    }

    create_paths() {
        create_default_dir "$CELERYD_LOG_DIR"
        create_default_dir "$CELERYD_PID_DIR"
    }

    export PATH="${PATH:+$PATH:}/usr/sbin:/sbin"

    _get_pidfiles () {
        # note: multi < 3.1.14 output to stderr, not stdout, hence the redirect.
        ${CELERYD_MULTI} expand "${CELERYD_PID_FILE}" ${CELERYD_NODES} 2>&1
    }

    _get_pids() {
        found_pids=0
        my_exitcode=0

        for pidfile in $(_get_pidfiles); do
            local pid=`cat "$pidfile"`
            local cleaned_pid=`echo "$pid" | sed -e 's/[^0-9]//g'`
            if [ -z "$pid" ] || [ "$cleaned_pid" != "$pid" ]; then
                echo "bad pid file ($pidfile)"
                one_failed=true
                my_exitcode=1
            else
                found_pids=1
                echo "$pid"
            fi

        if [ $found_pids -eq 0 ]; then
            echo "${SCRIPT_NAME}: All nodes down"
            exit $my_exitcode
        fi
        done
    }

    _chuid () {
        ${CELERYD_SU} ${CELERYD_SU_ARGS} "$CELERYD_USER" -c "$CELERYD_MULTI $*"
    }

    start_workers () {
        if [ ! -z "$CELERYD_ULIMIT" ]; then
            # Set the maximum number of open files for this program
            ulimit -n $CELERYD_ULIMIT
        fi
        _chuid $* start $CELERYD_NODES $DAEMON_OPTS     \
                     --pidfile="$CELERYD_PID_FILE"      \
                     --logfile="$CELERYD_LOG_FILE"      \
                     --loglevel="$CELERYD_LOG_LEVEL"    \
                     $CELERY_APP_ARG                    \
                     $CELERYD_OPTS
    }

    dryrun () {
        (C_FAKEFORK=1 start_workers --verbose)
    }

    stop_workers () {
        _chuid stopwait $CELERYD_NODES --pidfile="$CELERYD_PID_FILE"
    }

    restart_workers () {
        _chuid restart $CELERYD_NODES $DAEMON_OPTS      \
                       --pidfile="$CELERYD_PID_FILE"    \
                       --logfile="$CELERYD_LOG_FILE"    \
                       --loglevel="$CELERYD_LOG_LEVEL"  \
                       $CELERY_APP_ARG                  \
                       $CELERYD_OPTS
    }

    kill_workers() {
        _chuid kill $CELERYD_NODES $DAEMON_OPTS --pidfile="$CELERYD_PID_FILE"
    }

    restart_workers_graceful () {
        echo "WARNING: Use with caution in production"
        echo "The workers will attempt to restart, but they may not be able to."
        local worker_pids=
        worker_pids=`_get_pids`
        [ "$one_failed" ] && exit 1

        for worker_pid in $worker_pids; do
            local failed=
            kill -HUP $worker_pid 2> /dev/null || failed=true
            if [ "$failed" ]; then
                echo "${SCRIPT_NAME} worker (pid $worker_pid) could not be restarted"
                one_failed=true
            else
                echo "${SCRIPT_NAME} worker (pid $worker_pid) received SIGHUP"
            fi
        done

        [ "$one_failed" ] && exit 1 || exit 0
    }

    check_status () {
        my_exitcode=0
        found_pids=0

        local one_failed=
        for pidfile in $(_get_pidfiles); do
            if [ ! -r $pidfile ]; then
                echo "${SCRIPT_NAME} down: no pidfiles found"
                one_failed=true
                break
            fi

            local node=`basename "$pidfile" .pid`
            local pid=`cat "$pidfile"`
            local cleaned_pid=`echo "$pid" | sed -e 's/[^0-9]//g'`
            if [ -z "$pid" ] || [ "$cleaned_pid" != "$pid" ]; then
                echo "bad pid file ($pidfile)"
                one_failed=true
            else
                local failed=
                kill -0 $pid 2> /dev/null || failed=true
                if [ "$failed" ]; then
                    echo "${SCRIPT_NAME} (node $node) (pid $pid) is down, but pidfile exists!"
                    one_failed=true
                else
                    echo "${SCRIPT_NAME} (node $node) (pid $pid) is up..."
                fi
            fi
        done

        [ "$one_failed" ] && exit 1 || exit 0
    }

    case "$1" in
        start)
            check_dev_null
            check_paths
            start_workers
        ;;
        stop)
            check_dev_null
            check_paths
            stop_workers
        ;;
        reload|force-reload)
            echo "Use restart"
        ;;
        status)
            check_status
        ;;
        restart)
            check_dev_null
            check_paths
            restart_workers
        ;;
        graceful)
            check_dev_null
            restart_workers_graceful
        ;;
        kill)
            check_dev_null
            kill_workers
        ;;
        dryrun)
            check_dev_null
            dryrun
        ;;
        try-restart)
            check_dev_null
            check_paths
            restart_workers
        ;;
        create-paths)
            check_dev_null
            create_paths
        ;;
        check-paths)
            check_dev_null
            check_paths
        ;;
        *)
            echo "Usage: /etc/init.d/${SCRIPT_NAME} {start|stop|restart|graceful|kill|dryrun|create-paths}"
            exit 64  # EX_USAGE
        ;;
    esac

    exit 0

chmod +x /etc/init.d/celeryd

2.1.2. Write the worker configuration file at /etc/default/celeryd (to keep it somewhere else, change the path in the celeryd script)

vi /etc/default/celeryd

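    # Node names (identifiers only); log file names also start with them. Choose any names.
    # To start several nodes: CELERYD_NODES="worker1 worker2 worker3" or CELERYD_NODES=10
    CELERYD_NODES="worker1"

    # Path to the celery executable; find it with: which celery
    CELERY_BIN="/usr/local/Python-3.6.12/bin/celery"

    # The app instance, usually the module that creates the Celery app.
    # For a Django project use the project name. Full form: CELERY_APP="proj.tasks:app"
    CELERY_APP="django_celery_project"

    # Directory the worker changes into before starting
    CELERYD_CHDIR="/opt/django_celery_project/"

    # --time-limit : hard time limit for processing a task, in seconds
    # --concurrency: maximum number of concurrent worker processes
    # Per-node options when starting several workers:
    # CELERYD_OPTS="--time-limit=300 -c 8 -c:worker2 4 -c:worker3 2 -Ofair:worker1"
    CELERYD_OPTS="--time-limit=300 --concurrency=8"

    # Log level: DEBUG for development, INFO for production
    CELERYD_LOG_LEVEL="DEBUG"

    # Log file location
    CELERYD_LOG_FILE="/var/log/celery/%n%I.log"

    # PID file location
    CELERYD_PID_FILE="/var/run/celery/%n.pid"

    # User and group that run the workers; create them manually
    CELERYD_USER="celery"
    CELERYD_GROUP="celery"

    # 1: create the required directories automatically and hand them to the user/group above
    # 0: create them manually
    CELERY_CREATE_DIRS=1

    # Maximum number of open files for celery
    CELERYD_ULIMIT=65535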
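Both init scripts refuse to source a configuration file that is not owned by root or that is writable by group or others (see `_config_sanity` in the script above). A minimal sketch of the same check, runnable before `start`, is shown below; `check_celery_config` is a hypothetical helper name, not part of Celery, and the extra `sh -n` syntax check is an addition of this sketch, based on the fact that the script loads the file with `.`.

```shell
#!/bin/sh
# Pre-flight check for an init-script config file, mirroring _config_sanity:
# the file must be owned by root and must not be writable by group or others.
# check_celery_config is a hypothetical helper name, not part of Celery.
check_celery_config() {
    cfg="$1"
    problems=0
    if [ ! -f "$cfg" ]; then
        echo "missing: $cfg"
        return 1
    fi
    mode=$(ls -ld "$cfg" | awk '{print $1}')     # e.g. -rw-r-----
    owner=$(ls -ld "$cfg" | awk '{print $3}')
    if [ "$(id -u "$owner" 2>/dev/null)" != "0" ]; then
        echo "not owned by root: $cfg (owner: $owner)"
        problems=1
    fi
    # In the mode string, character 6 is group-write and character 9 is other-write.
    if [ "$(printf '%s' "$mode" | cut -c 6)" != "-" ]; then
        echo "group-writable: $cfg"
        problems=1
    fi
    if [ "$(printf '%s' "$mode" | cut -c 9)" != "-" ]; then
        echo "world-writable: $cfg"
        problems=1
    fi
    # The init script sources this file with ".", so a syntax error would abort it.
    if ! sh -n "$cfg" 2>/dev/null; then
        echo "shell syntax error: $cfg"
        problems=1
    fi
    return "$problems"
}
```

For example: `check_celery_config /etc/default/celeryd && /etc/init.d/celeryd start`. If it complains, the fixes the init script itself suggests apply: `chown root /etc/default/celeryd` and `chmod 640 /etc/default/celeryd`.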

2.1.3. Create the celery user and group

useradd celery
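Running `useradd celery` a second time fails because the account already exists. If these steps end up in a provisioning script, a guard like the one below keeps the step safe to re-run. This is a sketch; `ensure_user` is a hypothetical name. On RHEL/CentOS, `useradd` also creates a group with the same name by default, which is what `CELERYD_GROUP="celery"` relies on.

```shell
#!/bin/sh
# Create the service account only if it does not exist yet, so the step can be
# re-run safely. ensure_user is a hypothetical helper name.
ensure_user() {
    name="$1"
    if id -u "$name" >/dev/null 2>&1; then
        echo "user $name already exists"
        return 0
    fi
    # Keep a normal login shell: the init scripts switch to this user with
    # "su <user> -c ...", which fails if the user's shell is /sbin/nologin.
    useradd "$name" && echo "created user $name"
}
```

Usage: `ensure_user celery`.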

2.1.4. Start the celeryd worker service

    /etc/init.d/celeryd start
    celery init v10.1.
    Using config script: /etc/default/celeryd
    - Creating default directory: '/var/log/celery'
    - Changing permissions of '/var/log/celery' to 02755
    - Changing owner of '/var/log/celery' to 'celery'
    - Changing group of '/var/log/celery' to 'celery'
    - Creating default directory: '/var/run/celery'
    - Changing permissions of '/var/run/celery' to 02755
    - Changing owner of '/var/run/celery' to 'celery'
    - Changing group of '/var/run/celery' to 'celery'
    celery multi v4.4.7 (cliffs)
    > Starting nodes...
        > worker1@localhost.localdomain: OK

2.1.5. Check the running processes

    [root@localhost ~]# ps -ef | grep python
    root 1003 1 0 18:24 ? 00:00:03 /usr/bin/python2 -Es /usr/sbin/tuned -l -P
    celery 29173 1 3 23:57 ? 00:00:00 /usr/local/Python-3.6.12/bin/python3.6 -m celery worker --loglevel=INFO --time-limit=300 --concurrency=1 --logfile=/var/log/celery/worker1%I.log --pidfile=/var/run/celery/worker1.pid --hostname=worker1@localhost.localdomain
    celery 29177 29173 0 23:57 ? 00:00:00 /usr/local/Python-3.6.12/bin/python3.6 -m celery worker --loglevel=INFO --time-limit=300 --concurrency=1 --logfile=/var/log/celery/worker1%I.log --pidfile=/var/run/celery/worker1.pid --hostname=worker1@localhost.localdomain
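Besides `ps`, `/etc/init.d/celeryd status` checks each node through its pidfile. The core of that check can be reused on its own, for instance in a cron job or a monitoring probe: the pidfile must contain only digits, and `kill -0` must succeed for that PID. A minimal sketch follows; `pidfile_status` is a hypothetical name. Run it as root (or as celery), because `kill -0` reports a permission error, and so "down", for another user's process.

```shell
#!/bin/sh
# Check one pidfile the way check_status in the init script does:
# the file must hold only digits, and "kill -0" must succeed for that PID.
# pidfile_status is a hypothetical helper name.
pidfile_status() {
    pidfile="$1"
    if [ ! -r "$pidfile" ]; then
        echo "down: no readable pidfile ($pidfile)"
        return 1
    fi
    pid=$(cat "$pidfile")
    cleaned=$(printf '%s' "$pid" | sed -e 's/[^0-9]//g')
    if [ -z "$pid" ] || [ "$cleaned" != "$pid" ]; then
        echo "bad pidfile ($pidfile)"
        return 1
    fi
    # kill -0 delivers no signal; it only tests whether the PID exists and may be signalled.
    if kill -0 "$pid" 2>/dev/null; then
        echo "up (pid $pid)"
        return 0
    fi
    echo "down, but pidfile exists (pid $pid)"
    return 1
}
```

Usage: `pidfile_status /var/run/celery/worker1.pid`.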

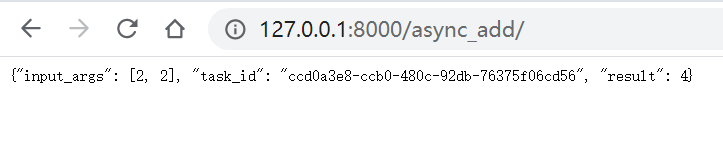

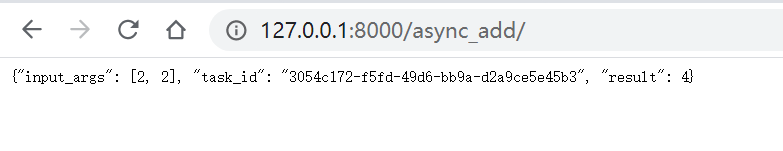

2.1.6. Verify that tasks run correctly (the screenshots show the worker startup script working)

2.2. Celery beat startup script

2.2.1. Write the beat startup script

vi /etc/init.d/celerybeat

    #!/bin/sh -e
    # =========================================================
    #  celerybeat - Starts the Celery periodic task scheduler.
    # =========================================================
    #
    # :Usage: /etc/init.d/celerybeat {start|stop|force-reload|restart|try-restart|status}
    # :Configuration file: /etc/default/celerybeat or /etc/default/celeryd
    #
    # See http://docs.celeryproject.org/en/latest/userguide/daemonizing.html#generic-init-scripts

    ### BEGIN INIT INFO
    # Provides:          celerybeat
    # Required-Start:    $network $local_fs $remote_fs
    # Required-Stop:     $network $local_fs $remote_fs
    # Default-Start:     2 3 4 5
    # Default-Stop:      0 1 6
    # Short-Description: celery periodic task scheduler
    ### END INIT INFO

    # Cannot use set -e/bash -e since the kill -0 command will abort
    # abnormally in the absence of a valid process ID.
    #set -e
    VERSION=10.1
    echo "celery init v${VERSION}."

    if [ $(id -u) -ne 0 ]; then
        echo "Error: This program can only be used by the root user."
        echo "       Unprivileged users must use 'celery beat --detach'"
        exit 1
    fi

    origin_is_runlevel_dir () {
        set +e
        dirname $0 | grep -q "/etc/rc.\.d"
        echo $?
    }

    # Can be a runlevel symlink (e.g., S02celeryd)
    if [ $(origin_is_runlevel_dir) -eq 0 ]; then
        SCRIPT_FILE=$(readlink "$0")
    else
        SCRIPT_FILE="$0"
    fi
    SCRIPT_NAME="$(basename "$SCRIPT_FILE")"

    # /etc/init.d/celerybeat: start and stop the celery periodic task scheduler daemon.

    # Make sure executable configuration script is owned by root
    _config_sanity() {
        local path="$1"
        local owner=$(ls -ld "$path" | awk '{print $3}')
        local iwgrp=$(ls -ld "$path" | cut -b 6)
        local iwoth=$(ls -ld "$path" | cut -b 9)

        if [ "$(id -u $owner)" != "0" ]; then
            echo "Error: Config script '$path' must be owned by root!"
            echo
            echo "Resolution:"
            echo "Review the file carefully, and make sure it hasn't been "
            echo "modified with malicious intent.  When sure the "
            echo "script is safe to execute with superuser privileges "
            echo "you can change ownership of the script:"
            echo "    $ sudo chown root '$path'"
            exit 1
        fi

        if [ "$iwoth" != "-" ]; then  # S_IWOTH
            echo "Error: Config script '$path' cannot be writable by others!"
            echo
            echo "Resolution:"
            echo "Review the file carefully, and make sure it hasn't been "
            echo "modified with malicious intent.  When sure the "
            echo "script is safe to execute with superuser privileges "
            echo "you can change the scripts permissions:"
            echo "    $ sudo chmod 640 '$path'"
            exit 1
        fi
        if [ "$iwgrp" != "-" ]; then  # S_IWGRP
            echo "Error: Config script '$path' cannot be writable by group!"
            echo
            echo "Resolution:"
            echo "Review the file carefully, and make sure it hasn't been "
            echo "modified with malicious intent.  When sure the "
            echo "script is safe to execute with superuser privileges "
            echo "you can change the scripts permissions:"
            echo "    $ sudo chmod 640 '$path'"
            exit 1
        fi
    }

    scripts=""

    if test -f /etc/default/celeryd; then
        scripts="/etc/default/celeryd"
        _config_sanity /etc/default/celeryd
        . /etc/default/celeryd
    fi

    # Location of the beat configuration file
    EXTRA_CONFIG="/etc/default/${SCRIPT_NAME}"
    if test -f "$EXTRA_CONFIG"; then
        scripts="$scripts, $EXTRA_CONFIG"
        _config_sanity "$EXTRA_CONFIG"
        # Load the configuration file
        . "$EXTRA_CONFIG"
    fi

    echo "Using configuration: $scripts"

    CELERY_BIN=${CELERY_BIN:-"celery"}
    DEFAULT_USER="celery"
    DEFAULT_PID_FILE="/var/run/celery/beat.pid"
    DEFAULT_LOG_FILE="/var/log/celery/beat.log"
    DEFAULT_LOG_LEVEL="INFO"
    DEFAULT_CELERYBEAT="$CELERY_BIN beat"

    CELERYBEAT=${CELERYBEAT:-$DEFAULT_CELERYBEAT}
    CELERYBEAT_LOG_LEVEL=${CELERYBEAT_LOG_LEVEL:-${CELERYBEAT_LOGLEVEL:-$DEFAULT_LOG_LEVEL}}

    CELERYBEAT_SU=${CELERYBEAT_SU:-"su"}
    CELERYBEAT_SU_ARGS=${CELERYBEAT_SU_ARGS:-""}

    # Sets --app argument for CELERY_BIN
    CELERY_APP_ARG=""
    if [ ! -z "$CELERY_APP" ]; then
        CELERY_APP_ARG="--app=$CELERY_APP"
    fi

    CELERYBEAT_USER=${CELERYBEAT_USER:-${CELERYD_USER:-$DEFAULT_USER}}

    # Set CELERY_CREATE_DIRS to always create log/pid dirs.
    CELERY_CREATE_DIRS=${CELERY_CREATE_DIRS:-0}
    CELERY_CREATE_RUNDIR=$CELERY_CREATE_DIRS
    CELERY_CREATE_LOGDIR=$CELERY_CREATE_DIRS
    if [ -z "$CELERYBEAT_PID_FILE" ]; then
        CELERYBEAT_PID_FILE="$DEFAULT_PID_FILE"
        CELERY_CREATE_RUNDIR=1
    fi
    if [ -z "$CELERYBEAT_LOG_FILE" ]; then
        CELERYBEAT_LOG_FILE="$DEFAULT_LOG_FILE"
        CELERY_CREATE_LOGDIR=1
    fi

    export CELERY_LOADER

    CELERYBEAT_OPTS="$CELERYBEAT_OPTS -f $CELERYBEAT_LOG_FILE -l $CELERYBEAT_LOG_LEVEL"

    if [ -n "$2" ]; then
        CELERYBEAT_OPTS="$CELERYBEAT_OPTS $2"
    fi

    CELERYBEAT_LOG_DIR=`dirname $CELERYBEAT_LOG_FILE`
    CELERYBEAT_PID_DIR=`dirname $CELERYBEAT_PID_FILE`

    # Extra start-stop-daemon options, like user/group.

    CELERYBEAT_CHDIR=${CELERYBEAT_CHDIR:-$CELERYD_CHDIR}
    if [ -n "$CELERYBEAT_CHDIR" ]; then
        DAEMON_OPTS="$DAEMON_OPTS --workdir=$CELERYBEAT_CHDIR"
    fi

    export PATH="${PATH:+$PATH:}/usr/sbin:/sbin"

    check_dev_null() {
        if [ ! -c /dev/null ]; then
            echo "/dev/null is not a character device!"
            exit 75  # EX_TEMPFAIL
        fi
    }

    maybe_die() {
        if [ $? -ne 0 ]; then
            echo "Exiting: $*"
            exit 77  # EX_NOPERM
        fi
    }

    create_default_dir() {
        if [ ! -d "$1" ]; then
            echo "- Creating default directory: '$1'"
            mkdir -p "$1"
            maybe_die "Couldn't create directory $1"
            echo "- Changing permissions of '$1' to 02755"
            chmod 02755 "$1"
            maybe_die "Couldn't change permissions for $1"
            if [ -n "$CELERYBEAT_USER" ]; then
                echo "- Changing owner of '$1' to '$CELERYBEAT_USER'"
                chown "$CELERYBEAT_USER" "$1"
                maybe_die "Couldn't change owner of $1"
            fi
            if [ -n "$CELERYBEAT_GROUP" ]; then
                echo "- Changing group of '$1' to '$CELERYBEAT_GROUP'"
                chgrp "$CELERYBEAT_GROUP" "$1"
                maybe_die "Couldn't change group of $1"
            fi
        fi
    }

    check_paths() {
        if [ $CELERY_CREATE_LOGDIR -eq 1 ]; then
            create_default_dir "$CELERYBEAT_LOG_DIR"
        fi
        if [ $CELERY_CREATE_RUNDIR -eq 1 ]; then
            create_default_dir "$CELERYBEAT_PID_DIR"
        fi
    }

    create_paths () {
        create_default_dir "$CELERYBEAT_LOG_DIR"
        create_default_dir "$CELERYBEAT_PID_DIR"
    }

    is_running() {
        pid=$1
        ps $pid > /dev/null 2>&1
    }

    wait_pid () {
        pid=$1
        forever=1
        i=0
        while [ $forever -gt 0 ]; do
            if ! is_running $pid; then
                echo "OK"
                forever=0
            else
                kill -TERM "$pid"
                i=$((i + 1))
                if [ $i -gt 60 ]; then
                    echo "ERROR"
                    echo "Timed out while stopping (30s)"
                    forever=0
                else
                    sleep 0.5
                fi
            fi
        done
    }

    stop_beat () {
        echo -n "Stopping ${SCRIPT_NAME}... "
        if [ -f "$CELERYBEAT_PID_FILE" ]; then
            wait_pid $(cat "$CELERYBEAT_PID_FILE")
        else
            echo "NOT RUNNING"
        fi
    }

    _chuid () {
        ${CELERYBEAT_SU} ${CELERYBEAT_SU_ARGS} \
            "$CELERYBEAT_USER" -c "$CELERYBEAT $*"
    }

    start_beat () {
        if [ ! -z "$CELERYD_ULIMIT" ]; then
            ulimit -n $CELERYD_ULIMIT
        fi
        echo "Starting ${SCRIPT_NAME}..."
        _chuid $CELERY_APP_ARG $CELERYBEAT_OPTS $DAEMON_OPTS --detach \
                    --pidfile="$CELERYBEAT_PID_FILE"
    }

    check_status () {
        local failed=
        local pid_file=$CELERYBEAT_PID_FILE
        if [ ! -e $pid_file ]; then
            echo "${SCRIPT_NAME} is down: no pid file found"
            failed=true
        elif [ ! -r $pid_file ]; then
            echo "${SCRIPT_NAME} is in unknown state, user cannot read pid file."
            failed=true
        else
            local pid=`cat "$pid_file"`
            local cleaned_pid=`echo "$pid" | sed -e 's/[^0-9]//g'`
            if [ -z "$pid" ] || [ "$cleaned_pid" != "$pid" ]; then
                echo "${SCRIPT_NAME}: bad pid file ($pid_file)"
                failed=true
            else
                local failed=
                kill -0 $pid 2> /dev/null || failed=true
                if [ "$failed" ]; then
                    echo "${SCRIPT_NAME} (pid $pid) is down, but pid file exists!"
                    failed=true
                else
                    echo "${SCRIPT_NAME} (pid $pid) is up..."
                fi
            fi
        fi

        [ "$failed" ] && exit 1 || exit 0
    }

    case "$1" in
        start)
            check_dev_null
            check_paths
            start_beat
        ;;
        stop)
            check_paths
            stop_beat
        ;;
        reload|force-reload)
            echo "Use start+stop"
        ;;
        status)
            check_status
        ;;
        restart)
            echo "Restarting celery periodic task scheduler"
            check_paths
            stop_beat && check_dev_null && start_beat
        ;;
        create-paths)
            check_dev_null
            create_paths
        ;;
        check-paths)
            check_dev_null
            check_paths
        ;;
        *)
            echo "Usage: /etc/init.d/${SCRIPT_NAME} {start|stop|restart|create-paths|status}"
            exit 64  # EX_USAGE
        ;;
    esac

    exit 0

chmod +x /etc/init.d/celerybeat

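2.2.2. Write the beat configuration file at /etc/default/celerybeat (to keep it somewhere else, change the path in the celerybeat script). The celerybeat script first loads /etc/default/celeryd, if it exists, and then this file, so worker settings also apply to beat unless they are overridden here.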

vi /etc/default/celerybeat

    # Path to the celery executable; find it with: which celery
    CELERY_BIN="/usr/local/Python-3.6.12/bin/celery"

    # The app instance, usually the module that creates the Celery app.
    # For a Django project use the project name. Full form: CELERY_APP="proj.tasks:app"
    CELERY_APP="django_celery_project"

    # Required for Django projects
    export DJANGO_SETTINGS_MODULE='django_celery_project.settings'

    # Directory beat changes into before starting
    CELERYBEAT_CHDIR="/opt/django_celery_project/"

    # Extra options: where beat stores its schedule database
    CELERYBEAT_OPTS="--schedule=/var/run/celery/celerybeat-schedule"

    # Full path of the PID file
    CELERYBEAT_PID_FILE=/var/run/celery/celerybeatd.pid

    # Full path of the log file
    CELERYBEAT_LOG_FILE=/var/log/celery/celerybeatd.log

    # Log level
    CELERYBEAT_LOG_LEVEL=INFO

    # User to run as
    CELERYBEAT_USER=celery

    # Group to run as
    CELERYBEAT_GROUP=celery
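Because the celerybeat script loads /etc/default/celeryd before /etc/default/celerybeat, a variable set in both files takes the beat file's value, and settings such as `CELERYBEAT_USER` and `CELERYBEAT_CHDIR` fall back to their `CELERYD_` counterparts. The sketch below prints the resulting values without starting anything; `preview_beat_config` is a hypothetical name, it covers only a handful of variables, and it copies the defaults from the script above.

```shell
#!/bin/sh
# Print the settings the celerybeat init script would end up with. Like the
# script, it sources the worker config first and the beat config second, so a
# variable set in both takes the beat file's value. Runs in a subshell so
# nothing leaks into the caller. preview_beat_config is a hypothetical name.
preview_beat_config() {
    worker_cfg="${1:-/etc/default/celeryd}"
    beat_cfg="${2:-/etc/default/celerybeat}"
    (
        if [ -f "$worker_cfg" ]; then . "$worker_cfg"; fi
        if [ -f "$beat_cfg" ]; then . "$beat_cfg"; fi
        # Same fallbacks as the celerybeat script.
        echo "CELERY_BIN=${CELERY_BIN:-celery}"
        echo "CELERY_APP=${CELERY_APP}"
        echo "CELERYBEAT_USER=${CELERYBEAT_USER:-${CELERYD_USER:-celery}}"
        echo "CELERYBEAT_CHDIR=${CELERYBEAT_CHDIR:-$CELERYD_CHDIR}"
        echo "CELERYBEAT_PID_FILE=${CELERYBEAT_PID_FILE:-/var/run/celery/beat.pid}"
        echo "CELERYBEAT_LOG_FILE=${CELERYBEAT_LOG_FILE:-/var/log/celery/beat.log}"
    )
}
```

Run `preview_beat_config` with no arguments to inspect the two files under /etc/default.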

2.2.3. Start the celerybeat service

    [root@localhost ~]# /etc/init.d/celerybeat start
    celery init v10.1.
    Using configuration: /etc/default/celeryd, /etc/default/celerybeat
    Starting celerybeat...

2.2.4. Check the beat process

    [root@localhost ~]# ps -ef | grep python | grep beat
    celery 34419 1 1 10:57 ? 00:00:00 /usr/local/Python-3.6.12/bin/python3.6 /usr/local/Python-3.6.12/bin/celery beat --app=django_celery_project --schedule=/var/run/celery/celerybeat-schedule -f /var/log/celery/celerybeatd.log -l INFO --workdir=/opt/django_celery_project/ --detach --pidfile=/var/run/celery/celerybeatd.pid

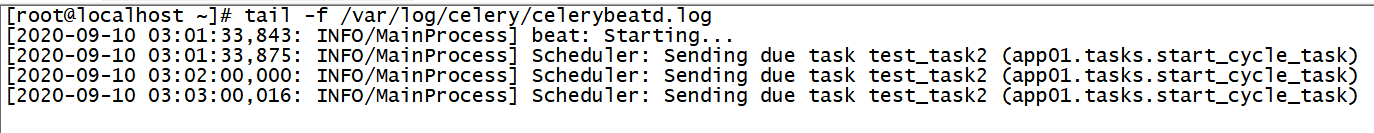

2.2.5. Check the log to confirm that beat is running

2.2.6. The log shows that everything is working normally
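Instead of reading the log by eye, a deployment step can wait until an expected line appears. A minimal sketch, with `wait_for_log` as a hypothetical name; the pattern is your choice. In Celery 4.x, beat typically logs a line containing `beat: Starting...` at startup and a worker logs `<nodename> ready.`, but check your own log for the exact text. Since the log file is appended to across restarts, an old line can cause a false positive; rotating or truncating the log before starting avoids that.

```shell
#!/bin/sh
# Poll a log file until a line containing a fixed string appears, or give up
# after a timeout (in seconds). wait_for_log is a hypothetical helper name.
wait_for_log() {
    logfile="$1"
    pattern="$2"
    timeout="${3:-30}"
    elapsed=0
    while [ "$elapsed" -lt "$timeout" ]; do
        # -F: treat the pattern as a plain string, so dots are not regex wildcards.
        if [ -f "$logfile" ] && grep -qF -- "$pattern" "$logfile"; then
            echo "found: $pattern"
            return 0
        fi
        sleep 1
        elapsed=$((elapsed + 1))
    done
    echo "timed out after ${timeout}s waiting for: $pattern"
    return 1
}
```

Usage: `wait_for_log /var/log/celery/celerybeatd.log "beat: Starting..." 30`.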

3. Managing the Celery worker and beat services with systemd

3.0. Usage

Usage: systemctl {start|stop|restart|status} celery.service

systemctl {start|stop|restart|status} celerybeat.service

Configuration file location:

/etc/celery/conf.d/celery.conf

3.1. Running the Celery worker with systemd

3.1.0. Create the directory for the configuration file, and the celery user

mkdir -p /etc/celery/conf.d/

useradd celery

3.1.1. Write the systemd unit file

vi /etc/systemd/system/celery.service

    [Unit]
    Description=Celery Service
    After=network.target

    [Service]
    Type=forking
    User=celery
    Group=celery
    # Configuration file location
    EnvironmentFile=/etc/celery/conf.d/celery.conf
    # Directory the service changes into before running the program
    WorkingDirectory=/opt/django_celery_project
    ExecStart=/bin/sh -c '${CELERY_BIN} multi start ${CELERYD_NODES} \
      -A ${CELERY_APP} --pidfile=${CELERYD_PID_FILE} \
      --logfile=${CELERYD_LOG_FILE} --loglevel=${CELERYD_LOG_LEVEL} ${CELERYD_OPTS}'
    ExecStop=/bin/sh -c '${CELERY_BIN} multi stopwait ${CELERYD_NODES} \
      --pidfile=${CELERYD_PID_FILE}'
    ExecReload=/bin/sh -c '${CELERY_BIN} multi restart ${CELERYD_NODES} \
      -A ${CELERY_APP} --pidfile=${CELERYD_PID_FILE} \
      --logfile=${CELERYD_LOG_FILE} --loglevel=${CELERYD_LOG_LEVEL} ${CELERYD_OPTS}'

    [Install]
    WantedBy=multi-user.target

3.1.2. Write the configuration file

vi /etc/celery/conf.d/celery.conf

    # Node names (identifiers only); log file names also start with them. Choose any names.
    # To start several nodes: CELERYD_NODES="worker1 worker2 worker3" or CELERYD_NODES=10
    CELERYD_NODES="worker1"

    # Path to the celery executable; find it with: which celery
    CELERY_BIN="/usr/local/Python-3.6.12/bin/celery"

    # The app instance, usually the module that creates the Celery app.
    # For a Django project use the project name. Full form: CELERY_APP="proj.tasks:app"
    CELERY_APP="django_celery_project"

    # --time-limit : hard time limit for processing a task, in seconds
    # --concurrency: maximum number of concurrent worker processes
    # Per-node options when starting several workers:
    # CELERYD_OPTS="--time-limit=300 -c 8 -c:worker2 4 -c:worker3 2 -Ofair:worker1"
    CELERYD_OPTS="--time-limit=300 --concurrency=1"

    # Log level: DEBUG for development, INFO for production
    CELERYD_LOG_LEVEL="INFO"

    # Log file location
    CELERYD_LOG_FILE="/var/log/celery/%n%I.log"

    # PID file location
    CELERYD_PID_FILE="/var/run/celery/%n.pid"

    # Used by Celery beat: where beat stores its schedule database
    CELERYBEAT_SCHEDULE="/var/run/celery/celerybeat-schedule"

    # Used by Celery beat: PID and log file locations
    CELERYBEAT_PID_FILE="/var/run/celery/beat.pid"
    CELERYBEAT_LOG_FILE="/var/log/celery/beat.log"

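3.1.3. Have systemd create the directories the program needs

On systemd-based distributions /var/run points to /run, a tmpfs that is emptied at every boot, so the directories are declared in a tmpfiles.d file and recreated automatically at startup.

vi /etc/tmpfiles.d/celery.conf

    d /var/run/celery 0755 celery celery -
    d /var/log/celery 0755 celery celery -

Create the directories right away, without waiting for a reboot:

systemd-tmpfiles --create /etc/tmpfiles.d/celery.conf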
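Each line of a tmpfiles.d file has the columns Type, Path, Mode, User, Group and Age (see the tmpfiles.d(5) man page): `d` creates the directory if it is missing, and `-` in the Age column means no age-based cleanup. If more services or directories are added later, the lines can be generated instead of typed. A minimal sketch; `make_tmpfiles_conf` is a hypothetical name and the 0755 mode is taken from the file above.

```shell
#!/bin/sh
# Emit tmpfiles.d lines for the directories a Celery service needs.
# Columns: Type Path Mode User Group Age ("d" = create directory if missing,
# "-" = no age-based cleanup). make_tmpfiles_conf is a hypothetical name.
make_tmpfiles_conf() {
    user="$1"
    group="$2"
    shift 2
    for dir in "$@"; do
        printf 'd %s 0755 %s %s -\n' "$dir" "$user" "$group"
    done
}
```

Usage: `make_tmpfiles_conf celery celery /var/run/celery /var/log/celery > /etc/tmpfiles.d/celery.conf`, followed by the `systemd-tmpfiles --create` command above.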

3.1.4. Reload systemd so that celery.service takes effect, then start, stop, or restart the service

systemctl daemon-reload

systemctl start celery

systemctl stop celery

systemctl restart celery

3.1.5. Check the running processes

    [root@localhost ~]# ps -ef | grep celery
    celery 35411 1 4 11:56 ? 00:00:00 /usr/local/Python-3.6.12/bin/python3.6 -m celery worker --loglevel=INFO --time-limit=300 --concurrency=1 --logfile=/var/log/celery/worker1%I.log --pidfile=/var/run/celery/worker1.pid --hostname=worker1@localhost.localdomain
    celery 35415 35411 0 11:56 ? 00:00:00 /usr/local/Python-3.6.12/bin/python3.6 -m celery worker --loglevel=INFO --time-limit=300 --concurrency=1 --logfile=/var/log/celery/worker1%I.log --pidfile=/var/run/celery/worker1.pid --hostname=worker1@localhost.localdomain

3.1.6. Test that the worker service works

3.2. Running Celery beat with systemd

3.2.1. Write the systemd unit file

vi /etc/systemd/system/celerybeat.service

    [Unit]
    Description=Celery Beat Service
    After=network.target

    [Service]
    Type=simple
    User=celery
    Group=celery
    EnvironmentFile=/etc/celery/conf.d/celery.conf
    WorkingDirectory=/opt/django_celery_project
    ExecStart=/bin/sh -c '${CELERY_BIN} beat \
      -A ${CELERY_APP} --pidfile=${CELERYBEAT_PID_FILE} \
      --logfile=${CELERYBEAT_LOG_FILE} \
      --loglevel=${CELERYD_LOG_LEVEL} \
      --schedule=${CELERYBEAT_SCHEDULE}'

    [Install]
    WantedBy=multi-user.target

3.2.2. Load the new unit file

systemctl daemon-reload

3.2.3. Start the Celery beat service with systemd

systemctl start celerybeat

3.2.4. Check that the process is running

    [root@localhost ~]# ps -ef | grep beat
    celery 39121 1 10 23:17 ? 00:00:00 /usr/local/Python-3.6.12/bin/python3.6 /usr/local/Python-3.6.12/bin/celery beat -A django_celery_project --pidfile=/var/run/celery/beat.pid --logfile=/var/log/celery/beat.log --loglevel=INFO --schedule=/var/run/celery/celerybeat-schedule
    root 39126 38169 0 23:17 pts/2 00:00:00 grep --color=auto beat

3.2.5. The systemd Celery beat service is now configured. (I won't repeat the step-by-step tests here; verify it the same way as for the shell script approach above.)

4. Managing the Celery worker and beat services with supervisor

4.0. Install the software

yum install supervisor -y

4.1. Start the supervisor service

systemctl enable supervisord

systemctl start supervisord

4.2. Write the supervisor configuration for the Celery worker and beat

4.2.1. supervisor configuration for the Celery worker

vi /etc/supervisord.d/celery_worker.ini

    [program:celery_worker]
    command=/opt/django_celery_project/celery_worker.sh
    numprocs=1
    directory=/opt/django_celery_project
    autostart=true
    autorestart=true
    startsecs=30
    startretries=3
    exitcodes=0,1
    stopsignal=QUIT
    stopwaitsecs=10
    user=celery
    redirect_stderr=true
    stdout_logfile=/var/log/celery/supervisor_celery_worker.log
    stdout_logfile_maxbytes=64MB
    stdout_logfile_backups=4
    stdout_capture_maxbytes=1MB
    stdout_events_enabled=false

4.2.2. The startup script called by supervisor

vi /opt/django_celery_project/celery_worker.sh

    #!/bin/bash
    # Starts the Celery worker under supervisor
    # 2020-09-10
    CELERY_BIN="/usr/local/Python-3.6.12/bin/celery"
    CELERY_APP="django_celery_project"
    CELERYD_OPTS="--time-limit=300 --concurrency=1"
    CELERYD_LOG_LEVEL="INFO"
    CELERYD_LOG_FILE="/var/log/celery/%n%I.log"
    CELERYD_PID_FILE="/var/run/celery/%n.pid"

    exec ${CELERY_BIN} worker -A ${CELERY_APP} --pidfile=${CELERYD_PID_FILE} \
        --logfile=${CELERYD_LOG_FILE} --loglevel=${CELERYD_LOG_LEVEL} ${CELERYD_OPTS}

chown celery:celery /opt/django_celery_project/celery_worker.sh

chmod +x /opt/django_celery_project/celery_worker.sh
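celery_worker.sh ends with `exec`, which replaces the bash process with Celery, so the PID that supervisor forks, records, and later signals is the Celery worker itself (in 4.2.4, the PID that supervisorctl reports, 43195, is the python process). Without `exec`, the stop signal reaches the wrapper shell instead of Celery, and the Celery child can be left running. With `stopsignal=QUIT` this is worse, since according to the bash manual bash ignores SIGQUIT, so supervisor only SIGKILLs the shell after `stopwaitsecs`. celery_beat.sh in 4.3.2 has no `exec`, which is why 4.3.4 shows `/bin/bash` as the parent of `celery beat`; adding `exec` to its last line, or setting supervisor's `stopasgroup=true`, addresses this. The sketch below makes the difference visible, with `sleep` standing in for Celery. `tracked_comm` is a hypothetical name, and it reads `/proc`, so it is Linux-only.

```shell
#!/bin/sh
# Start a wrapper command in the background, the way a process manager forks
# a program and records its PID, then report which executable that PID runs.
# Linux-only (reads /proc). tracked_comm is a hypothetical helper name.
tracked_comm() {
    sh -c "$1" >/dev/null 2>&1 &
    pid=$!
    sleep 0.5                          # give the wrapper time to exec or fork
    comm=$(cat "/proc/$pid/comm")      # name of the program now running as $pid
    kill "$pid" 2>/dev/null || true    # what a stop request would hit
    wait "$pid" 2>/dev/null || true
    echo "$comm"
}

# With exec, the recorded PID becomes the real program ("sleep" stands in for celery).
tracked_comm 'exec sleep 2'
# Without exec, the recorded PID stays the shell and sleep runs as its child;
# killing the shell leaves that child running until it exits on its own.
tracked_comm 'sleep 2; :'
```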

4.2.3. Have supervisor load celery_worker.ini; this also starts the Celery worker automatically

    supervisorctl update
    supervisorctl status
    celery_worker RUNNING pid 43195, uptime 0:00:35

4.2.4. Check that the Celery worker processes are running normally

    ps -ef | grep celery
    root 42837 41168 0 12:41 pts/3 00:00:00 tail -f /var/log/celery/celery.log
    celery 43195 42718 0 13:40 ? 00:00:00 /usr/local/Python-3.6.12/bin/python3.6 /usr/local/Python-3.6.12/bin/celery worker -A django_celery_project --pidfile=/var/run/celery/%n.pid --logfile=/var/log/celery/%n%I.log --loglevel=INFO --time-limit=300 --concurrency=1
    celery 43199 43195 0 13:40 ? 00:00:00 /usr/local/Python-3.6.12/bin/python3.6 /usr/local/Python-3.6.12/bin/celery worker -A django_celery_project --pidfile=/var/run/celery/%n.pid --logfile=/var/log/celery/%n%I.log --loglevel=INFO --time-limit=300 --concurrency=1

4.2.5. The processes are up, which confirms that the configuration works

4.3.1. supervisor configuration for Celery beat

vi /etc/supervisord.d/celery_beat.ini

    [program:celery_beat]
    command=/opt/django_celery_project/celery_beat.sh
    numprocs=1
    directory=/opt/django_celery_project
    autostart=true
    autorestart=true
    startsecs=30
    startretries=3
    exitcodes=0,1
    stopsignal=QUIT
    stopwaitsecs=10
    user=celery
    redirect_stderr=true
    stdout_logfile=/var/log/celery/supervisor_celery_beat.log
    stdout_logfile_maxbytes=64MB
    stdout_logfile_backups=4
    stdout_capture_maxbytes=1MB
    stdout_events_enabled=false

4.3.2. The startup script called by supervisor

vi /opt/django_celery_project/celery_beat.sh

    #!/bin/bash
    # Celery beat startup script (run by supervisor)
    # 2020-09-11
    CELERY_BIN="/usr/local/Python-3.6.12/bin/celery"
    CELERY_APP="django_celery_project"
    CELERYD_OPTS="--time-limit=300 --concurrency=1"
    CELERYD_LOG_LEVEL="INFO"
    CELERYD_LOG_FILE="/var/log/celery/${CELERY_APP}.log"
    CELERYD_PID_FILE="/var/run/celery/${CELERY_APP}.pid"
    CELERYBEAT_OPTS="/var/run/celery/celerybeat-schedule"

    ${CELERY_BIN} beat --app=${CELERY_APP} --schedule=${CELERYBEAT_OPTS} \
        -f ${CELERYD_LOG_FILE} -l ${CELERYD_LOG_LEVEL} --pidfile=${CELERYD_PID_FILE}

chown celery:celery /opt/django_celery_project/celery_beat.sh

chmod +x /opt/django_celery_project/celery_beat.sh

4.3.3. Have supervisor load celery_beat.ini; this also starts Celery beat automatically

    supervisorctl update
    supervisorctl status
    celery_beat RUNNING pid 43621, uptime 0:02:02
    celery_worker RUNNING pid 43638, uptime 0:01:17
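After `supervisorctl update`, a deployment script can check that every program reached RUNNING rather than reading the table by eye. A minimal sketch, with `all_running` as a hypothetical name; it reads `supervisorctl status` output on stdin and assumes the state is the second column, as in the output above. Other supervisor states such as STARTING, BACKOFF, or FATAL are reported as failures.

```shell
#!/bin/sh
# Read "supervisorctl status" output on stdin; print every program whose state
# (second column) is not RUNNING and exit non-zero if there is any.
# all_running is a hypothetical helper name.
all_running() {
    awk '
        NF == 0 { next }                      # skip blank lines
        $2 != "RUNNING" { print "not running: " $1 " (" $2 ")"; bad = 1 }
        END { exit bad }
    '
}
```

Usage: `supervisorctl status | all_running && echo "all programs are RUNNING"`. Because `startsecs=30` is set, a program stays in STARTING for 30 seconds after launch, so run the check after that window.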

4.3.4. Check that the Celery beat process is running normally

    ps -ef | grep beat
    celery 43621 42718 0 14:21 ? 00:00:00 /bin/bash /opt/django_celery_project/celery_beat.sh
    celery 43622 43621 0 14:21 ? 00:00:00 /usr/local/Python-3.6.12/bin/python3.6 /usr/local/Python-3.6.12/bin/celery beat --app=django_celery_project --schedule=/var/run/celery/celerybeat-schedule -f /var/log/celery/django_celery_project.log -l INFO --pidfile=/var/run/celery/django_celery_project.pid

4.3.5. The process is up, which confirms that the configuration works

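5. Summary

These are the three ways to run Celery as a service: 1. shell scripts, 2. systemd, 3. supervisor. I have tried to make this a complete walkthrough; it is based on my own study of the official documentation, written down as I went.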