Setting Up a ZooKeeper Cluster

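ZooKeeper is a distributed coordination service. It addresses the data-management problems that distributed applications commonly run into, such as naming services, state synchronization, configuration management, and cluster management.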

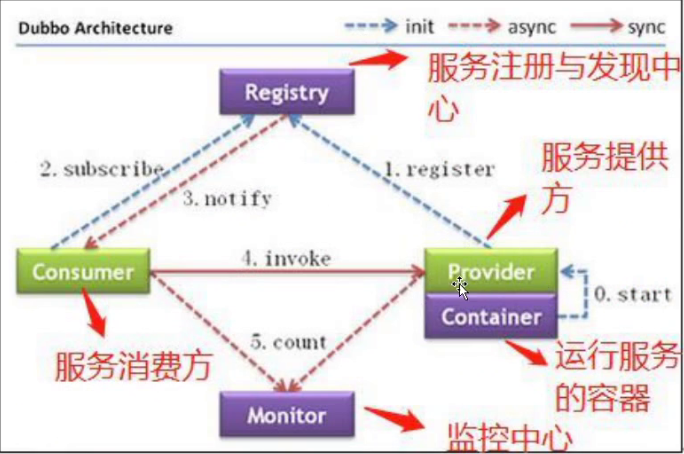

Consumer model

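0. The producer starts.
1. The producer registers itself with ZooKeeper (this happens when the producer code starts).
2. The consumer starts and subscribes to a channel. The consumer looks in whichever channel it subscribed to; channel paths are hard-coded.
3. ZooKeeper notifies the consumer of events: if a producer's address changes or the producer goes down, the consumer is told.
4. The consumer calls the producer, using the address returned by the registry. From this point on the consumer talks to the producer directly.
5. The monitoring center collects statistics and monitors whether the services are healthy.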

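A cluster has a leader (the primary node) and followers. ZooKeeper is a replicated cluster, and to keep the data consistent, writes are handled specially: only the leader performs writes, and data written to the leader is then synchronized to the followers. If a client sends a write to a follower, the follower forwards the request to the leader; the leader performs the write and synchronizes it to the other followers. Once that is done, the followers hold the same data as the leader, so clients can read from any follower.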

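Leader

1. The sole scheduler and handler of transaction requests (a transaction is a write). This guarantees that the cluster processes transactions in order: data is written in the order the requests arrive.

2. The coordinator of the servers inside the cluster. It processes each write and then synchronizes the data to the followers; the leader is the one that manages the data.

3. A cluster has exactly one leader. With two leaders, some writes could go to the followers on nodes 1 and 2 while others go to the followers on nodes 1 and 3. The data would diverge, and data could be lost after a server failure. To keep the data consistent, only one server, the leader, may write, and it synchronizes to the other servers afterwards. Two leaders at once is a split brain, and it leads to inconsistent data.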

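Follower

1. Handles non-transaction requests from clients (reads) and forwards transaction requests (writes) to the leader. Every follower in a ZooKeeper cluster always knows which server is the leader.

2. Votes on the transaction Proposals that the leader issues for each write. These acknowledgements are what the majority-write rule counts.

3. Votes in leader elections, including the first election when the cluster is initialized.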

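Observer: the only difference between a follower and an observer is that an observer does not vote. It takes no part in leader election and no part in the majority-write rule, but it can serve client read requests. Observers can therefore increase the cluster's read capacity without affecting write performance.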

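Write success policy: a write succeeds once a majority has it. When a client issues a write, the leader writes the data and synchronizes it to the followers. As soon as more than half of the voting servers, counting the leader itself, have persisted the write, the leader reports success to the client instead of waiting for every follower. This keeps the client's response time short.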
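
The majority rule comes down to simple arithmetic. The sketch below is illustrative only: `quorum_size` and `write_committed` are helper names made up for this example, not ZooKeeper commands, and it assumes that only voting servers (the leader and the followers) are counted, with observers left out.

```shell
# Smallest number of voting servers (leader + followers) that forms a majority.
# Observers are not counted because they do not vote.
quorum_size() {
    voters=$1
    echo $(( voters / 2 + 1 ))
}

# Succeeds (exit status 0) once enough voters, the leader included,
# have acknowledged a write.
write_committed() {
    acks=$1
    voters=$2
    [ "$acks" -ge "$(quorum_size "$voters")" ]
}

quorum_size 3    # prints 2
quorum_size 5    # prints 3

if write_committed 2 3; then
    echo "2 of 3 acknowledgements: the client gets its success response"
fi
```

With three voting servers, a write is confirmed after two of them have it, so the cluster can keep accepting writes while one server is down.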

Client: the party that initiates requests, i.e. the application.

Configuration reference

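    # grep -v "^#" /usr/local/zookeeper/conf/zoo.cfg

    # Contents of the configuration file. The annotations sit on their own lines:
    # zoo.cfg is read as a Java properties file, so a trailing "# ..." on a
    # setting line would become part of the value.

    # Interval of a single heartbeat between servers, in milliseconds.
    tickTime=2000
    # Number of ticks (2000 ms each here) that followers are given for their
    # initial connection to the leader.
    initLimit=10
    # Number of ticks allowed for request/response heartbeats once a follower is
    # connected. A follower that cannot reach the leader within this window
    # (5 * 2000 ms) is treated as unavailable.
    syncLimit=5
    # Directory where ZooKeeper stores its data.
    dataDir=/usr/local/zookeeper/data
    # Port that ZooKeeper listens on for client connections.
    clientPort=2181
    # Maximum number of connections a single client IP may hold to ZooKeeper.
    maxClientCnxns=128
    # New in 3.4.0: when autopurge is enabled, ZooKeeper keeps the
    # autopurge.snapRetainCount most recent snapshots and their transaction logs
    # in dataDir and dataLogDir and deletes the rest. Default 3, minimum 3.
    autopurge.snapRetainCount=3
    # 3.4.0 and later: how often the automatic cleanup of logs and snapshots
    # runs, in hours. Use an integer of 1 or more; the default 0 disables it.
    autopurge.purgeInterval=1

    # Cluster settings
    # 2888: data synchronization port between leader and followers. Only the
    #       leader listens on it; followers connect to it to sync data.
    # 3888: election and announcement port, open on the leader and the followers.
    # 2181: client port, open on the leader and the followers.
    # Format: server.<server id>=<server IP>:<sync port>:<election port>
    # (In a Kubernetes environment, use names instead of IPs; this needs CoreDNS
    # for name resolution.)
    server.1=172.18.0.101:2888:3888
    server.2=172.18.0.102:2888:3888
    server.3=172.18.0.103:2888:3888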
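
To make the tick-based settings concrete, the following sketch converts `initLimit` and `syncLimit` into milliseconds. `cfg_value` is a hypothetical helper written for this example, and the sample text simply repeats the values from the configuration above.

```shell
# Print the numeric value of KEY ($1) from zoo.cfg-style text on stdin.
# If the key appears more than once, the last occurrence wins.
cfg_value() {
    sed -n "s/^$1=\([0-9][0-9]*\).*/\1/p" | tail -n 1
}

# Sample values copied from the configuration shown above.
sample_cfg='tickTime=2000
initLimit=10
syncLimit=5'

tick_ms=$(printf '%s\n' "$sample_cfg" | cfg_value tickTime)
init_ticks=$(printf '%s\n' "$sample_cfg" | cfg_value initLimit)
sync_ticks=$(printf '%s\n' "$sample_cfg" | cfg_value syncLimit)

echo "initLimit window: $(( tick_ms * init_ticks )) ms"    # 20000 ms
echo "syncLimit window: $(( tick_ms * sync_ticks )) ms"    # 10000 ms
```

On a real node the same helper could read the file directly, for example `cfg_value tickTime < /usr/local/zookeeper/conf/zoo.cfg`.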

Leader election

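During an election, every server in the cluster is in one of the states below. The first is LOOKING: a cluster that has not been initialized yet is briefly in LOOKING while it searches for a leader. The state is short-lived; once a leader is elected, the servers switch roles automatically. The server that ends up in LEADING is the leader, and the servers in FOLLOWING are the followers. The election is based on the server addresses listed in the configuration file and on each server's myid.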

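LOOKING: searching for a leader. A server in this state announces itself and enters the election process. Servers are also in LOOKING while the cluster initializes, and they switch roles automatically once a leader is elected.

LEADING: leader state. A node in this state already holds the leader role; a cluster has only one leader.

FOLLOWING: follower state. A leader has been elected and this node is a follower: it serves reads itself and forwards writes to the leader.

OBSERVING: observer state. This node is an observer and takes no part in voting or elections. The role is rarely used.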

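Election IDs

ZXID (ZooKeeper transaction ID): every operation that changes ZooKeeper's state is assigned a zxid, its transaction ID.

myid: the server's unique identifier (SID), set through the myid file. It must be unique within the cluster.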

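Election process: when the leader fails, or when the cluster initializes, every server briefly enters LOOKING and announces its myid and zxid to the rest of the cluster. On first initialization there is no data yet and all zxids are equal, so the server with the highest myid among those taking part in the vote is elected leader. An election ends as soon as a majority agrees, so a server that starts late can find a leader already in place. Once the cluster holds data, the zxid decides: a newer transaction ID means more complete data, so the node with the newest zxid is elected. After the election, the server in LEADING is the leader and the servers in FOLLOWING are the followers.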
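
The comparison described above can be sketched as a small function. This is a simplified model, not ZooKeeper's implementation: votes are written as `zxid:myid` with decimal zxids, `better_vote` and `pick_leader` are names invented for the example, and the election epoch, which the real algorithm compares before the zxid, is left out.

```shell
# Compare two votes of the form "zxid:myid" and print the stronger one.
# The larger zxid wins; on a tie the larger myid wins.
better_vote() {
    a_zxid=${1%%:*}; a_myid=${1##*:}
    b_zxid=${2%%:*}; b_myid=${2##*:}
    if [ "$a_zxid" -gt "$b_zxid" ]; then
        echo "$1"
    elif [ "$a_zxid" -lt "$b_zxid" ]; then
        echo "$2"
    elif [ "$a_myid" -ge "$b_myid" ]; then
        echo "$1"
    else
        echo "$2"
    fi
}

# Apply better_vote across all votes and print the myid of the winner.
pick_leader() {
    best=$1
    shift
    for vote in "$@"; do
        best=$(better_vote "$best" "$vote")
    done
    echo "${best##*:}"
}

pick_leader 0:1 0:2 0:3        # fresh cluster, all zxids equal: prints 3
pick_leader 120:1 95:2 95:3    # server 1 has the newest data: prints 1
pick_leader 0:1 0:2            # only servers 1 and 2 are up: prints 2
```

The last call models an election in which only servers 1 and 2 have started. They already form a majority of three, so server 2 wins without waiting for server 3.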

Cluster setup

    # Set the hostname (one command per node)
    hostnamectl set-hostname zk1
    hostnamectl set-hostname zk2
    hostnamectl set-hostname zk3

    # Install OpenJDK 8
    apt update
    apt install -y openjdk-8-jdk

    # Check the JDK
    root@zk1:~# java -version
    openjdk version "1.8.0_382"
    OpenJDK Runtime Environment (build 1.8.0_382-8u382-ga-1~20.04.1-b05)
    OpenJDK 64-Bit Server VM (build 25.382-b05, mixed mode)

    # Upload the package to all 3 nodes and unpack it
    tar -xf apache-zookeeper-3.6.3-bin.tar.gz

    # Create the configuration file from the sample
    root@zk1:/tools/apache-zookeeper-3.6.3-bin# cp -a /tools/apache-zookeeper-3.6.3-bin/conf/zoo_sample.cfg /tools/apache-zookeeper-3.6.3-bin/conf/zoo.cfg

    # The configuration file, annotated (annotations are on their own lines)
    root@zk1:/tools/apache-zookeeper-3.6.3-bin# cat /tools/apache-zookeeper-3.6.3-bin/conf/zoo.cfg
    # Interval of a single heartbeat between servers, in milliseconds.
    tickTime=2000
    # Number of ticks (2000 ms each) allowed for a follower's initial connection to the leader.
    initLimit=10
    # Number of ticks allowed for request/response heartbeats after the connection is
    # established. A follower that cannot reach the leader within 5 * 2000 ms is
    # treated as unavailable.
    syncLimit=5
    # Directory where data is stored.
    dataDir=/tmp/zookeeper
    # Port that ZooKeeper listens on for client connections.
    clientPort=2181
    # Maximum number of connections a single client IP may hold to ZooKeeper.
    maxClientCnxns=128
    # New in 3.4.0: when autopurge is enabled, ZooKeeper keeps the
    # autopurge.snapRetainCount most recent snapshots and their transaction logs in
    # dataDir and dataLogDir and deletes the rest. Default 3, minimum 3.
    #autopurge.snapRetainCount=3
    # 3.4.0 and later: how often automatic cleanup of logs and snapshots runs, in
    # hours. Use an integer of 1 or more; the default 0 disables it.
    #autopurge.purgeInterval=1
    # Cluster settings
    # 2888: data synchronization port; only the leader listens on it and followers connect to it.
    # 3888: election and announcement port, open on the leader and the followers.
    # 2181: client port, open on the leader and the followers.
    # Format: server.<server id>=<server IP>:<sync port>:<election port>
    # (In a Kubernetes environment, use names instead of IPs; this needs CoreDNS.)
    server.1=172.18.0.101:2888:3888
    server.2=172.18.0.102:2888:3888
    server.3=172.18.0.103:2888:3888

    # Create the data directory.
    # ZooKeeper loads the myid file from the data directory at startup and uses its
    # content as its own myid. The myid is used in elections: at initialization the
    # highest myid among the voting servers wins.
    mkdir -p /usr/local/zookeeper/data

    # Write the myid (this server's own ID in the cluster), one value per node
    # echo "1" > /usr/local/zookeeper/data/myid
    root@zk1:/tools/apache-zookeeper-3.6.3-bin# echo "1" > /usr/local/zookeeper/data/myid
    root@zk2:/tools# echo "2" > /usr/local/zookeeper/data/myid
    root@zk3:/tools# echo "3" > /usr/local/zookeeper/data/myid
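
Writing the myid by hand on every node is easy to get wrong. As a sketch, the ID could instead be looked up from the `server.N` lines of the configuration. `myid_for_ip` is a hypothetical helper, and the sample lines assume the 10.0.0.x addresses used for the three nodes in this walkthrough; substitute your own.

```shell
# Read zoo.cfg-style text on stdin and print N for the line
# "server.N=<ip>:<sync port>:<election port>" whose address equals $1.
myid_for_ip() {
    awk -v ip="$1" '{
        split($0, kv, "=")          # kv[1] = key, kv[2] = value
        split(kv[2], addr, ":")     # addr[1] = address part of the value
        if (kv[1] ~ /^server\.[0-9]+$/ && addr[1] == ip) {
            sub(/^server\./, "", kv[1])
            print kv[1]
        }
    }'
}

# Sample server lines (assumed addresses, see the note above).
sample_servers='server.1=10.0.0.70:2888:3888
server.2=10.0.0.72:2888:3888
server.3=10.0.0.73:2888:3888'

printf '%s\n' "$sample_servers" | myid_for_ip 10.0.0.72    # prints 2
```

On a node, the result could then be redirected into the myid file, for example `myid_for_ip 10.0.0.72 < zoo.cfg > /usr/local/zookeeper/data/myid`, after checking that the output is not empty.
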

    # Write the final configuration on all 3 servers
    cat >/tools/apache-zookeeper-3.6.3-bin/conf/zoo.cfg<<'EOF'
    tickTime=2000
    initLimit=10
    syncLimit=5
    dataDir=/usr/local/zookeeper/data
    clientPort=2181
    maxClientCnxns=4096
    autopurge.snapRetainCount=128
    autopurge.purgeInterval=1
    server.1=10.0.0.70:2888:3888
    server.2=10.0.0.72:2888:3888
    server.3=10.0.0.73:2888:3888
    EOF

    # Start the cluster (run on every node)
    cd /tools/apache-zookeeper-3.6.3-bin/bin/ && ./zkServer.sh start

    root@zk1:~# cd /tools/apache-zookeeper-3.6.3-bin/bin/ && ./zkServer.sh start
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /tools/apache-zookeeper-3.6.3-bin/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED

    root@zk2:~# cd /tools/apache-zookeeper-3.6.3-bin/bin/ && ./zkServer.sh start
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /tools/apache-zookeeper-3.6.3-bin/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED

    root@zk3:~# cd /tools/apache-zookeeper-3.6.3-bin/bin/ && ./zkServer.sh start
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /tools/apache-zookeeper-3.6.3-bin/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED

    # Check the startup log
    root@zk1:/tools/apache-zookeeper-3.6.3-bin/bin# tail -f /tools/apache-zookeeper-3.6.3-bin/logs/zookeeper*
    ==> /tools/apache-zookeeper-3.6.3-bin/logs/zookeeper_audit.log <==

    ==> /tools/apache-zookeeper-3.6.3-bin/logs/zookeeper-root-server-zk1.out <==
            at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)
            at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)
            at java.net.Socket.connect(Socket.java:607)
            at org.apache.zookeeper.server.quorum.QuorumCnxManager.initiateConnection(QuorumCnxManager.java:383)
            at org.apache.zookeeper.server.quorum.QuorumCnxManager$QuorumConnectionReqThread.run(QuorumCnxManager.java:457)
            at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
            at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
            at java.lang.Thread.run(Thread.java:750)
    2023-09-23 18:27:44,446 [myid:1] - INFO [ListenerHandler-/10.0.0.70:3888:QuorumCnxManager$Listener$ListenerHandler@1070] - Received connection request from /10.0.0.73:13975
    2023-09-23 18:27:44,449 [myid:1] - INFO [WorkerReceiver[myid=1]:FastLeaderElection$Messenger$WorkerReceiver@389] - Notification: my state:FOLLOWING; n.sid:3, n.state:LOOKING, n.leader:3, n.round:0x1, n.peerEpoch:0x0, n.zxid:0x0, message format version:0x2, n.config version:0x0

    # Check port 2181
    root@zk1:/tools/apache-zookeeper-3.6.3-bin/bin# lsof -i:2181
    COMMAND   PID USER   FD   TYPE DEVICE SIZE/OFF NODE NAME
    java    69305 root   65u  IPv6 176392      0t0  TCP *:2181 (LISTEN)
    root@zk2:/tools/apache-zookeeper-3.6.3-bin/bin# lsof -i:2181
    COMMAND   PID USER   FD   TYPE DEVICE SIZE/OFF NODE NAME
    java    34641 root   65u  IPv6 124106      0t0  TCP *:2181 (LISTEN)
    root@zk3:/tools/apache-zookeeper-3.6.3-bin/bin# lsof -i:2181
    COMMAND   PID USER   FD   TYPE DEVICE SIZE/OFF NODE NAME
    java    34940 root   65u  IPv6 126251      0t0  TCP *:2181 (LISTEN)

    # Check the state of each node. When starting the cluster, start the nodes in quick succession.
    # Cluster status
    root@zk3:/tools/apache-zookeeper-3.6.3-bin/bin# ./zkServer.sh status
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /tools/apache-zookeeper-3.6.3-bin/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost. Client SSL: false.
    Mode: follower
    root@zk2:/tools/apache-zookeeper-3.6.3-bin/bin# ./zkServer.sh status
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /tools/apache-zookeeper-3.6.3-bin/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost. Client SSL: false.
    Mode: leader
    root@zk1:/tools/apache-zookeeper-3.6.3-bin/bin# ./zkServer.sh status
    /usr/bin/java
    ZooKeeper JMX enabled by default
    Using config: /tools/apache-zookeeper-3.6.3-bin/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost. Client SSL: false.
    Mode: follower

    # Connect to ZooKeeper with the client from any node.
    # ls lists a path, create creates data, delete removes data, get reads data, set modifies data.
    # Normally it is applications that write the data.
    root@zk1:/tools/apache-zookeeper-3.6.3-bin/bin# ./zkCli.sh
    /usr/bin/java
    Connecting to localhost:2181

    # List the root
    [zk: localhost:2181(CONNECTED) 1] ls /
    [zookeeper]

    # Create data. This resembles a producer registering itself: here the server 10.0.0.70 is registered.
    [zk: localhost:2181(CONNECTED) 2] create /host1 "10.0.0.70"
    Created /host1

    # ZooKeeper stores data as a tree.
    # List the root again
    [zk: localhost:2181(CONNECTED) 5] ls /
    [host1, zookeeper]
    [zk: localhost:2181(CONNECTED) 6] ls /host1
    []

    # get resembles a consumer fetching the server address
    [zk: localhost:2181(CONNECTED) 7] get /host1
    10.0.0.70

    # Delete the host1 data
    [zk: localhost:2181(CONNECTED) 8] delete /host1

    # Check
    [zk: localhost:2181(CONNECTED) 9] get /host1
    Node does not exist: /host1
    [zk: localhost:2181(CONNECTED) 10] ls /host1
    Node does not exist: /host1
    [zk: localhost:2181(CONNECTED) 11] ls /
    [zookeeper]

    # A parent node must exist before its children are created; applications can create paths recursively.
    # Create the parent
    [zk: localhost:2181(CONNECTED) 12] create /wbiao
    Created /wbiao
    # Create host1
    [zk: localhost:2181(CONNECTED) 15] create /wbiao/host1 "10.0.0.100"
    Created /wbiao/host1
    # Create host2
    [zk: localhost:2181(CONNECTED) 18] create /wbiao/host2 "10.0.0.102"
    Created /wbiao/host2

    # List the nodes
    [zk: localhost:2181(CONNECTED) 19] ls /
    [wbiao, zookeeper]
    [zk: localhost:2181(CONNECTED) 20] ls /wbiao
    [host1, host2]
    [zk: localhost:2181(CONNECTED) 21] ls /wbiao/host1
    []
    [zk: localhost:2181(CONNECTED) 22] ls /wbiao/host2
    []

    # Read the data
    [zk: localhost:2181(CONNECTED) 23] get /wbiao/host1
    10.0.0.100
    [zk: localhost:2181(CONNECTED) 24] get /wbiao/host2
    10.0.0.102

    # To delete, remove the children first and then the parent
    [zk: localhost:2181(CONNECTED) 25] delete /wbiao/host1
    [zk: localhost:2181(CONNECTED) 26] delete /wbiao/host2
    [zk: localhost:2181(CONNECTED) 27] delete /wbiao
    [zk: localhost:2181(CONNECTED) 28] ls /
    [zookeeper]

    # Addresses as a program would register them. In practice, registering and reading
    # server addresses is done automatically by the application.
    [zk: localhost:2181(CONNECTED) 29] create wbiao
    Path must start with / character
    [zk: localhost:2181(CONNECTED) 30] create /wbiao
    Created /wbiao
    [zk: localhost:2181(CONNECTED) 31] create /wbiao/kafka
    Created /wbiao/kafka
    [zk: localhost:2181(CONNECTED) 32] create /wbiao/kafka/nodes
    Created /wbiao/kafka/nodes
    [zk: localhost:2181(CONNECTED) 33] create /wbiao/kafka/nodes/id-1 "10.0.0.1"
    Created /wbiao/kafka/nodes/id-1
    [zk: localhost:2181(CONNECTED) 34] get /wbiao/kafka/nodes/id-1
    10.0.0.1

    # Check the connections on election port 3888 (data synchronization uses 2888 on the leader).
    # The servers keep these connections to each other open.
    root@zk3:/tools/apache-zookeeper-3.6.3-bin/bin# lsof -i:3888
    COMMAND   PID USER   FD   TYPE DEVICE SIZE/OFF NODE NAME
    java    34940 root   77u  IPv6 127165      0t0  TCP 10.0.0.73:3888 (LISTEN)
    java    34940 root   78u  IPv6 126259      0t0  TCP 10.0.0.73:13975->10.0.0.70:3888 (ESTABLISHED)
    java    34940 root   79u  IPv6 124790      0t0  TCP 10.0.0.73:19083->10.0.0.72:3888 (ESTABLISHED)

    # On the first start of the cluster no server has data, so the highest myid among the
    # voting servers is elected leader. Once there is data, the transaction ID decides:
    # the newer the zxid, the more complete the data, and that server is elected.

    # View the log
    root@zk3:/tools/apache-zookeeper-3.6.3-bin/bin# cat /tools/apache-zookeeper-3.6.3-bin/logs/zookeeper-root-server-zk3.out
    2023-09-23 18:27:37,782 [myid:] - INFO [main:QuorumPeerConfig@174] - Reading configuration from: /tools/apache-zookeeper-3.6.3-bin/bin/../conf/zoo.cfg
    2023-09-23 18:27:37,788 [myid:] - INFO [main:QuorumPeerConfig@460] - clientPortAddress is 0.0.0.0:2181
    2023-09-23 18:27:37,788 [myid:] - INFO [main:QuorumPeerConfig@464] - secureClientPort is not set
    2023-09-23 18:27:37,788 [myid:] - INFO [main:QuorumPeerConfig@480] - observerMasterPort is not set
    2023-09-23 18:27:37,788 [myid:] - INFO [main:QuorumPeerConfig@497] - metricsProvider.className is org.apache.zookeeper.metrics.impl.DefaultMetricsProvider
    2023-09-23 18:27:37,797 [myid:3] - INFO [main:DatadirCleanupManager@78] - autopurge.snapRetainCount set to 128
    2023-09-23 18:27:37,797 [myid:3] - INFO [main:DatadirCleanupManager@79] - autopurge.purgeInterval set to 1
    2023-09-23 18:27:37,798 [myid:3] - INFO [PurgeTask:DatadirCleanupManager$PurgeTask@139] - Purge task started.
    2023-09-23 18:27:37,802 [myid:3] - INFO [main:ManagedUtil@44] - Log4j 1.2 jmx support found and enabled.
    2023-09-23 18:27:37,802 [myid:3] - INFO [PurgeTask:FileTxnSnapLog@124] - zookeeper.snapshot.trust.empty : false
    2023-09-23 18:27:37,807 [myid:3] - INFO [PurgeTask:DatadirCleanupManager$PurgeTask@145] - Purge task completed.
    2023-09-23 18:27:37,811 [myid:3] - INFO [main:QuorumPeerMain@151] - Starting quorum peer, myid=3
    2023-09-23 18:27:37,827 [myid:3] - INFO [main:ServerMetrics@62] - ServerMetrics initialized with provider org.apache.zookeeper.metrics.impl.DefaultMetricsProvider@6108b2d7
    2023-09-23 18:27:37,836 [myid:3] - INFO [main:ServerCnxnFactory@169] - Using org.apache.zookeeper.server.NIOServerCnxnFactory as server connection factory
    2023-09-23 18:27:37,837 [myid:3] - WARN [main:ServerCnxnFactory@309] - maxCnxns is not configured, using default value 0.
    2023-09-23 18:27:37,839 [myid:3] - INFO [main:NIOServerCnxnFactory@666] - Configuring NIO connection handler with 10s sessionless connection timeout, 1 selector thread(s), 8 worker threads, and 64 kB direct buffers.
    2023-09-23 18:27:37,845 [myid:3] - INFO [main:NIOServerCnxnFactory@674] - binding to port 0.0.0.0/0.0.0.0:2181
    2023-09-23 18:27:37,853 [myid:3] - INFO [main:QuorumPeer@753] - zookeeper.quorumCnxnTimeoutMs=-1
    2023-09-23 18:27:37,880 [myid:3] - INFO [main:Log@169] - Logging initialized @15506ms to org.eclipse.jetty.util.log.Slf4jLog
    2023-09-23 18:27:37,973 [myid:3] - WARN [main:ContextHandler@1660] - o.e.j.s.ServletContextHandler@4d50efb8{/,null,STOPPED} contextPath ends with /*
    2023-09-23 18:27:37,973 [myid:3] - WARN [main:ContextHandler@1671] - Empty contextPath
    2023-09-23 18:27:37,992 [myid:3] - INFO [main:X509Util@77] - Setting -D jdk.tls.rejectClientInitiatedRenegotiation=true to disable client-initiated TLS renegotiation
    2023-09-23 18:27:37,993 [myid:3] - INFO [main:FileTxnSnapLog@124] - zookeeper.snapshot.trust.empty : false
    2023-09-23 18:27:37,993 [myid:3] - INFO [main:QuorumPeer@1692] - Local sessions disabled
    2023-09-23 18:27:37,993 [myid:3] - INFO [main:QuorumPeer@1703] - Local session upgrading disabled
    2023-09-23 18:27:37,993 [myid:3] - INFO [main:QuorumPeer@1670] - tickTime set to 2000
    2023-09-23 18:27:37,993 [myid:3] - INFO [main:QuorumPeer@1714] - minSessionTimeout set to 4000
    2023-09-23 18:27:37,993 [myid:3] - INFO [main:QuorumPeer@1725] - maxSessionTimeout set to 40000
    2023-09-23 18:27:37,994 [myid:3] - INFO [main:QuorumPeer@1750] - initLimit set to 10
    2023-09-23 18:27:37,994 [myid:3] - INFO [main:QuorumPeer@1937] - syncLimit set to 5
    2023-09-23 18:27:37,994 [myid:3] - INFO [main:QuorumPeer@1952] - connectToLearnerMasterLimit set to 0
    2023-09-23 18:27:38,008 [myid:3] - INFO [main:ZookeeperBanner@42] -
    2023-09-23 18:27:38,009 [myid:3] - INFO [main:ZookeeperBanner@42] -   ______                  _
    2023-09-23 18:27:38,009 [myid:3] - INFO [main:ZookeeperBanner@42] -  |___  /                 | |
    2023-09-23 18:27:38,009 [myid:3] - INFO [main:ZookeeperBanner@42] -     / /    ___     ___   | | __   ___    ___   _ __     ___   _ __
    2023-09-23 18:27:38,009 [myid:3] - INFO [main:ZookeeperBanner@42] -    / /    / _ \   / _ \  | |/ /  / _ \  / _ \ | '_ \   / _ \ | '__|
    2023-09-23 18:27:38,009 [myid:3] - INFO [main:ZookeeperBanner@42] -   / /__  | (_) | | (_) | |   <  |  __/ |  __/ | |_) | |  __/ | |
    2023-09-23 18:27:38,009 [myid:3] - INFO [main:ZookeeperBanner@42] -  /_____|  \___/   \___/  |_|\_\  \___|  \___| | .__/   \___| |_|
    2023-09-23 18:27:38,009 [myid:3] - INFO [main:ZookeeperBanner@42] -                                               | |
    2023-09-23 18:27:38,009 [myid:3] - INFO [main:ZookeeperBanner@42] -                                               |_|
    2023-09-23 18:27:38,010 [myid:3] - INFO [main:ZookeeperBanner@42] -
    2023-09-23 18:27:38,035 [myid:3] - INFO [main:Environment@98] - Server environment:zookeeper.version=3.6.3--6401e4ad2087061bc6b9f80dec2d69f2e3c8660a, built on 04/08/2021 16:35 GMT
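
Once all nodes are up, the role check done by hand with `zkServer.sh status` can be scripted. The sketch below only parses text of the shape shown in the status output earlier in this walkthrough; `parse_mode` and `exactly_one_leader` are hypothetical helper names, and how the output of the three nodes is collected (for example over ssh) is left open.

```shell
# Extract the role from `zkServer.sh status` output read on stdin.
parse_mode() {
    sed -n 's/^Mode: *//p'
}

# Succeed only if the roles passed as arguments contain exactly one leader.
exactly_one_leader() {
    leaders=0
    for role in "$@"; do
        if [ "$role" = "leader" ]; then
            leaders=$(( leaders + 1 ))
        fi
    done
    [ "$leaders" -eq 1 ]
}

# Sample text in the shape of the status output shown earlier.
sample_status='Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: follower'

printf '%s\n' "$sample_status" | parse_mode    # prints: follower

if exactly_one_leader follower leader follower; then
    echo "cluster has a single leader"
fi
```

On a real node the parser would be fed from the command itself, for example `./zkServer.sh status | parse_mode`.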

Viewing data from a client
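
Because every server holds the same data, reading one znode from each server is a quick way to confirm that replication works. The following is a minimal sketch: `zk_get` and `zk_get_all` are hypothetical wrappers, the `ZKCLI` default assumes the install path used in this walkthrough, and it relies on `zkCli.sh` running a single command when one is given after `-server host:port`.

```shell
# Path to the client script; assumed to match the install location used above.
ZKCLI="${ZKCLI:-/tools/apache-zookeeper-3.6.3-bin/bin/zkCli.sh}"

# Run a single `get` for one znode against one server.
zk_get() {
    "$ZKCLI" -server "$1" get "$2"
}

# Read the same znode from every server given after the znode path.
zk_get_all() {
    znode=$1
    shift
    for server in "$@"; do
        echo "== $server"
        zk_get "$server" "$znode"
    done
}

# Example (needs the running cluster from this walkthrough):
# zk_get_all /wbiao/kafka/nodes/id-1 10.0.0.70:2181 10.0.0.72:2181 10.0.0.73:2181
```

If replication is healthy, each server should return the value that was written through any one of them; the client also prints its own connection log lines around the value.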
