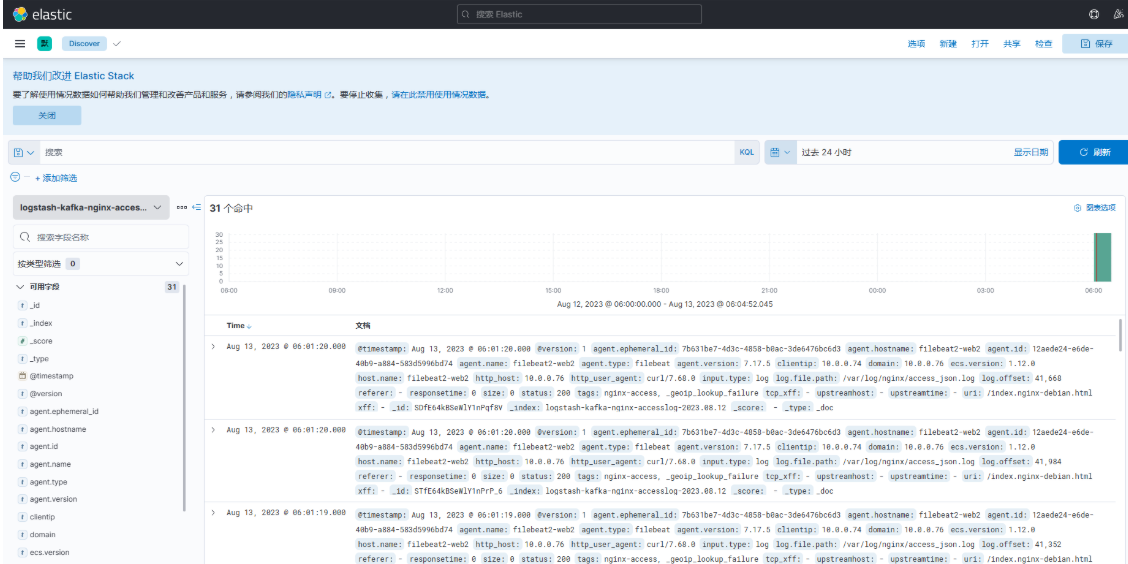

Filebeat collects the nginx logs and ships them to Kafka. Logstash consumes the log messages from the Kafka queue and writes them to Elasticsearch, and Kibana visualizes them.

Server layout

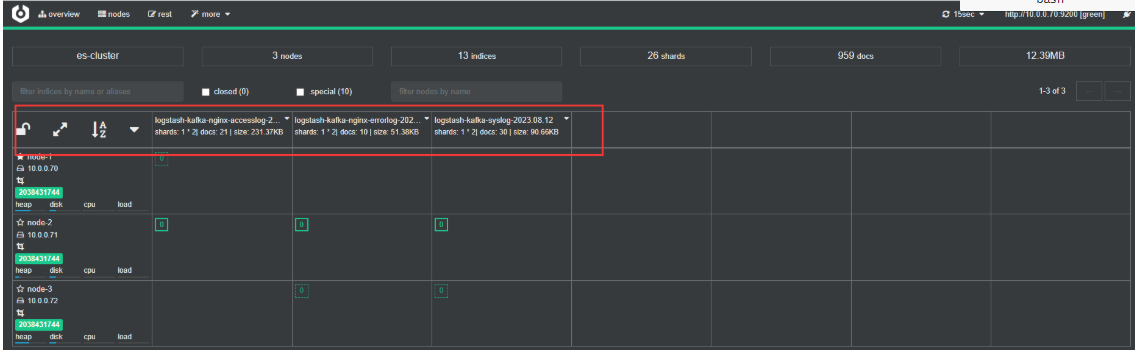

Elasticsearch cluster: 10.0.0.70, 10.0.0.71, 10.0.0.72

kibana:10.0.0.73

mysql:10.0.0.73

filebeat1-web1:10.0.0.74

filebeat2-web2:10.0.0.76

kafka1-logstash1:10.0.0.77

kafka2-logstash2:10.0.0.78

kafka3-logstash3:10.0.0.79
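The three Kafka addresses (port 9092) and the three Elasticsearch addresses (port 9200) are typed by hand in both the Filebeat and the Logstash configs below, and a single mistyped entry is easy to miss. The following is a minimal sketch that builds the comma-separated `host:port` strings from one list. It uses a hypothetical helper, `join_hostports`, which is not part of the original setup:

```shell
# Hypothetical helper (not from the original setup): print "h1:port,h2:port,..."
# for the given port and hosts, e.g. for Logstash's bootstrap_servers.
join_hostports() {
  local port="$1" host out=""
  shift
  for host in "$@"; do
    # ${out:+,} inserts a comma only when out already holds an entry
    out="${out}${out:+,}${host}:${port}"
  done
  printf '%s\n' "$out"
}

# Addresses taken from the server list above
join_hostports 9092 10.0.0.77 10.0.0.78 10.0.0.79   # Kafka brokers
join_hostports 9200 10.0.0.70 10.0.0.71 10.0.0.72   # Elasticsearch nodes
```

The two calls print `10.0.0.77:9092,10.0.0.78:9092,10.0.0.79:9092` and `10.0.0.70:9200,10.0.0.71:9200,10.0.0.72:9200`. The first string is already in the form that Logstash's `bootstrap_servers` expects. The Filebeat and Elasticsearch `hosts` settings take a quoted list instead.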

Kafka and Logstash configuration

```
# Set the hostnames of the Kafka/Logstash nodes (run each command on its own host)
hostnamectl set-hostname kafka1-logstash1-node1
hostnamectl set-hostname kafka2-logstash2-node2
hostnamectl set-hostname kafka3-logstash3-node3

# Set the hostnames of the Filebeat nodes
hostnamectl set-hostname filebeat1-web1
hostnamectl set-hostname filebeat2-web2

# Install Kafka and Logstash with the install script
root@kafka1-logstash1-node1:/tools# bash /tools/install_kafka.sh
Enter node number (default 1): 1
root@kafka2-logstash2-node2:/tools# bash /tools/install_kafka.sh
Enter node number (default 1): 2
root@kafka3-logstash3-node3:/tools# bash /tools/install_kafka.sh
Enter node number (default 1): 3

# Check the cluster (ZooKeeper status on each node)
root@kafka1-logstash1-node1:/tools# /usr/local/zookeeper/bin/zkServer.sh status
/usr/bin/java
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: follower

root@kafka2-logstash2-node2:~# /usr/local/zookeeper/bin/zkServer.sh status
/bin/java
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: leader

root@kafka3-logstash3-node3:~# /usr/local/zookeeper/bin/zkServer.sh status
/bin/java
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: follower
```
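The status output above is what a healthy three-node ZooKeeper ensemble looks like: one `leader` and two `follower` nodes. A node that reports `Mode: standalone` is usually not joined to the others. The sketch below uses a hypothetical `check_zk_modes` function, which is not part of the install script. It counts the `Mode:` lines from all nodes and reports how many members may fail. An ensemble of n voting members needs a majority to stay up, so it tolerates floor((n - 1) / 2) failures:

```shell
# Hypothetical helper: reads `zkServer.sh status` output from all nodes on stdin.
# Succeeds only if there is exactly one leader and no standalone node.
check_zk_modes() {
  local leaders=0 followers=0 standalone=0 voters tolerated=0 line
  while IFS= read -r line; do
    case "$line" in
      "Mode: leader")     leaders=$((leaders + 1)) ;;
      "Mode: follower")   followers=$((followers + 1)) ;;
      "Mode: standalone") standalone=$((standalone + 1)) ;;
    esac
  done
  voters=$((leaders + followers))
  # n voting members need a majority up, so floor((n - 1) / 2) of them may fail
  if [ "$voters" -gt 0 ]; then
    tolerated=$(( (voters - 1) / 2 ))
  fi
  echo "leaders=$leaders followers=$followers standalone=$standalone tolerated_failures=$tolerated"
  [ "$leaders" -eq 1 ] && [ "$standalone" -eq 0 ]
}
```

This assumes passwordless SSH from an admin host, which is not part of the original setup. With that in place, all three statuses can be piped in with `for h in 10.0.0.77 10.0.0.78 10.0.0.79; do ssh root@"$h" /usr/local/zookeeper/bin/zkServer.sh status 2>&1; done | check_zk_modes`. For the output shown above, this reports one leader and two followers, and tolerates one failed node.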

Configure nginx and Filebeat

```
# On filebeat1-web1 and filebeat2-web2, make nginx write its access log in JSON format
root@filebeat1-web1:~# cat /etc/nginx/nginx.conf
user www-data;
worker_processes auto;
pid /run/nginx.pid;
include /etc/nginx/modules-enabled/*.conf;

events {
    worker_connections 768;
}

http {
    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;
    types_hash_max_size 2048;

    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    ssl_protocols TLSv1 TLSv1.1 TLSv1.2 TLSv1.3; # Dropping SSLv3, ref: POODLE
    ssl_prefer_server_ciphers on;

    # escape=json (nginx >= 1.11.8) escapes quotes and control characters in
    # variables such as $http_user_agent, so every line stays valid JSON
    log_format access_json escape=json '{"@timestamp":"$time_iso8601",'
        '"host":"$server_addr",'
        '"clientip":"$remote_addr",'
        '"size":$body_bytes_sent,'
        '"responsetime":$request_time,'
        '"upstreamtime":"$upstream_response_time",'
        '"upstreamhost":"$upstream_addr",'
        '"http_host":"$host",'
        '"uri":"$uri",'
        '"domain":"$host",'
        '"xff":"$http_x_forwarded_for",'
        '"referer":"$http_referer",'
        '"tcp_xff":"$proxy_protocol_addr",'
        '"http_user_agent":"$http_user_agent",'
        '"status":"$status"}';
    access_log /var/log/nginx/access_json.log access_json;
    error_log /var/log/nginx/error.log;

    gzip on;

    include /etc/nginx/conf.d/*.conf;
    include /etc/nginx/sites-enabled/*;
}
```

```
# Point both Filebeat instances at Kafka
root@filebeat1-web1:~# cat /etc/filebeat/filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access_json.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["nginx-access"]

- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["nginx-error"]

- type: log
  enabled: true
  paths:
    - /var/log/syslog
  tags: ["syslog"]

output.kafka:
  hosts: ["10.0.0.77:9092","10.0.0.78:9092","10.0.0.79:9092"]
  topic: filebeat-log          # Kafka topic to publish to
  partition.round_robin:
    reachable_only: true       # true: publish only to reachable partitions; false: use all partitions, so publishing can block if a broker is down
  required_acks: 1             # 0: no acknowledgement, messages may be lost on error; 1: wait for the leader partition (default); -1: wait for all replicas
  compression: gzip            # compress messages with gzip
  max_message_bytes: 1000000   # maximum size of one message in bytes; larger messages are dropped
```

```
# Restart nginx
systemctl restart nginx
# Restart Filebeat
systemctl restart filebeat.service
# Run Filebeat in the foreground to watch its log (-e logs to stderr); stop
# filebeat.service first, otherwise it may refuse to start because the data path is locked
filebeat -c /etc/filebeat/filebeat.yml -e
```
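The `partition.round_robin` / `reachable_only` pair decides which Kafka partition receives each event. The following is a conceptual sketch only, not Filebeat's implementation. The hypothetical `round_robin_assign` function cycles events over the partitions. As `reachable_only: true` requires, it skips partitions whose leader is down instead of waiting for them:

```shell
# Conceptual sketch only, not Filebeat's implementation.
# Usage: round_robin_assign COUNT PARTITION:STATE...
#   STATE is "up" (partition leader reachable) or "down".
# Prints the partition chosen for each of COUNT messages, skipping "down" ones.
round_robin_assign() {
  local count="$1" reachable="" p out="" i=0
  shift
  for p in "$@"; do
    # keep only partitions marked up; ${p%%:*} is the partition id
    if [ "${p#*:}" = up ]; then
      reachable="$reachable ${p%%:*}"
    fi
  done
  if [ -z "$reachable" ]; then
    echo "no reachable partition" >&2
    return 1
  fi
  # cycle through the reachable partitions until every message has one
  while [ "$i" -lt "$count" ]; do
    for p in $reachable; do
      [ "$i" -ge "$count" ] && break
      out="${out}${out:+ }$p"
      i=$((i + 1))
    done
  done
  echo "$out"
}
```

For example, `round_robin_assign 5 0:up 1:down 2:up` prints `0 2 0 2 0`: while partition 1 is unavailable, its share goes to partitions 0 and 2. With `reachable_only: false`, partition 1 would stay in the rotation, and publishing can block until its leader returns.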

Configure Logstash to read the logs from Kafka and write them to Elasticsearch

```
# On all three Logstash nodes: consume from Kafka and write to the Elasticsearch cluster
cat > /etc/logstash/conf.d/kafka-to-es.conf <<'EOF'
input {
  kafka {
    bootstrap_servers => "10.0.0.77:9092,10.0.0.78:9092,10.0.0.79:9092"
    topics => ["filebeat-log"]
    codec => "json"
    group_id => "logstash"          # consumer group name, shared by all 3 Logstash instances
    consumer_threads => 3           # ideally, threads across the whole group match the topic's partition count
    decorate_events => true         # adds Kafka metadata (topic, partition, offset, consumer group, ...) under [@metadata][kafka]
    client_id => "logstashCli1"     # Kafka client id; use a different value on each Logstash node
    # topics_pattern => "nginx-.*"  # match topics by regex instead of the fixed list in topics
  }
}

filter {
  if "nginx-access" in [tags] {
    geoip {
      source => "clientip"
      target => "geoip"
    }
  }
}

output {
  # stdout {}  # for debugging
  if "nginx-access" in [tags] {
    elasticsearch {
      hosts => ["10.0.0.70:9200","10.0.0.71:9200","10.0.0.72:9200"]
      index => "logstash-kafka-nginx-accesslog-%{+YYYY.MM.dd}"
    }
  }
  if "nginx-error" in [tags] {
    elasticsearch {
      hosts => ["10.0.0.70:9200","10.0.0.71:9200","10.0.0.72:9200"]
      index => "logstash-kafka-nginx-errorlog-%{+YYYY.MM.dd}"
    }
  }
  if "syslog" in [tags] {
    elasticsearch {
      hosts => ["10.0.0.70:9200","10.0.0.71:9200","10.0.0.72:9200"]
      index => "logstash-kafka-syslog-%{+YYYY.MM.dd}"
    }
  }
}
EOF
```
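All three Logstash nodes join the same consumer group (`group_id => "logstash"`), so the group contains 3 × `consumer_threads` consumers. Kafka assigns each partition of `filebeat-log` to only one consumer in the group. This setup does not state the partition count of `filebeat-log`. It depends on how the topic was created, or, for an auto-created topic, on the broker's `num.partitions` default. The hypothetical helper below therefore just does the arithmetic for any partition count:

```shell
# Hypothetical helper: consumer_plan PARTITIONS INSTANCES THREADS_PER_INSTANCE
consumer_plan() {
  local partitions="$1" instances="$2" threads="$3" consumers active
  consumers=$((instances * threads))
  # each partition goes to at most one consumer of the group,
  # so at most min(consumers, partitions) consumers receive data
  active=$(( consumers < partitions ? consumers : partitions ))
  echo "consumers=$consumers active=$active idle=$((consumers - active))"
}
```

As an example, take a 3-partition topic with `consumer_threads => 3` on each node. Here `consumer_plan 3 3 3` prints `consumers=9 active=3 idle=6`. Either giving the topic 9 partitions or setting `consumer_threads => 1` removes the idle threads. On Kafka 2.2 or later, `./kafka-topics.sh --bootstrap-server 10.0.0.77:9092 --describe --topic filebeat-log` shows the actual partition count.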

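```
# Check the configuration syntax
logstash -f /etc/logstash/conf.d/kafka-to-es.conf -t

# Run in the foreground (-r reloads the config automatically when the file changes); stop with Ctrl+C
logstash -f /etc/logstash/conf.d/kafka-to-es.conf -r

# Run in the background as a service
systemctl enable --now logstash
```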

```
# Send requests to both web servers to generate access-log entries
root@filebeat1-web1:~# curl 10.0.0.74 -I
HTTP/1.1 200 OK
Server: nginx/1.18.0 (Ubuntu)
Date: Sat, 12 Aug 2023 20:54:57 GMT
Content-Type: text/html
Content-Length: 612
Last-Modified: Wed, 09 Aug 2023 15:29:54 GMT
Connection: keep-alive
ETag: "64d3b0f2-264"
Accept-Ranges: bytes

root@filebeat1-web1:~# curl 10.0.0.76 -I
HTTP/1.1 200 OK
Server: nginx/1.18.0 (Ubuntu)
Date: Sat, 12 Aug 2023 20:55:00 GMT
Content-Type: text/html
Content-Length: 612
Last-Modified: Wed, 09 Aug 2023 15:29:54 GMT
Connection: keep-alive
ETag: "64d3b0f2-264"
Accept-Ranges: bytes
```

```
# Request a page that does not exist (404) to generate error-log entries
root@filebeat1-web1:~# curl 10.0.0.76/xxx -I
HTTP/1.1 404 Not Found
Server: nginx/1.18.0 (Ubuntu)
Date: Sat, 12 Aug 2023 20:55:03 GMT
Content-Type: text/html
Content-Length: 162
Connection: keep-alive

root@filebeat1-web1:~# curl 10.0.0.74/xxx -I
HTTP/1.1 404 Not Found
Server: nginx/1.18.0 (Ubuntu)
Date: Sat, 12 Aug 2023 20:55:06 GMT
Content-Type: text/html
Content-Length: 162
Connection: keep-alive
```

```
# Generate system-log (syslog) entries
systemctl start syslog.socket rsyslog.service
systemctl stop syslog.socket rsyslog.service
```
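Each test request above ends up in one of three daily indices, chosen by the `if ... in [tags]` blocks of `kafka-to-es.conf`. The hypothetical `index_for` function below was written only to mirror that routing. It maps a tag and an event date to the index name. Note that `%{+YYYY.MM.dd}` formats the event's `@timestamp` in UTC. On a UTC+8 host, for example, events logged before 08:00 local time land in the previous day's index:

```shell
# Hypothetical helper mirroring the output conditionals of kafka-to-es.conf.
# Usage: index_for TAG YYYY-MM-DD   (date of the event's @timestamp in UTC)
index_for() {
  local tag="$1" day="$2" base
  case "$tag" in
    nginx-access) base=logstash-kafka-nginx-accesslog ;;
    nginx-error)  base=logstash-kafka-nginx-errorlog ;;
    syslog)       base=logstash-kafka-syslog ;;
    *) return 1 ;;  # no matching "if" block: no elasticsearch output receives the event
  esac
  # %{+YYYY.MM.dd} separates the date parts with dots
  printf '%s-%s\n' "$base" "$(printf '%s' "$day" | sed 's/-/./g')"
}
```

For example, `index_for nginx-access 2023-08-12` prints `logstash-kafka-nginx-accesslog-2023.08.12`. The indices that actually exist can be listed with `curl -s '10.0.0.70:9200/_cat/indices/logstash-kafka-*?v'`.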

```
# List the Kafka consumer groups
cd /usr/local/kafka/bin && ./kafka-consumer-groups.sh --bootstrap-server 10.0.0.78:9092 --list

# Show the partitions, offsets and lag of the logstash consumer group
cd /usr/local/kafka/bin && ./kafka-consumer-groups.sh --bootstrap-server 10.0.0.78:9092 --group logstash --describe
```
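In the `--describe` output, the `LAG` column shows how many messages the `logstash` group has not yet consumed in each partition. This is the log-end offset minus the committed offset. The hypothetical `total_lag` helper below is not a Kafka tool. It sums that column, finding it by its header name and skipping `-` entries, which appear for partitions without a committed offset:

```shell
# Hypothetical helper: sum the LAG column of `kafka-consumer-groups.sh --describe` (stdin)
total_lag() {
  awk '
    # until the header is seen, look for the LAG column and skip the line
    !col {
      for (i = 1; i <= NF; i++) {
        if ($i == "LAG") col = i
      }
      next
    }
    # add numeric lag values; "-" (no committed offset) and repeated headers are skipped
    $col ~ /^[0-9]+$/ { sum += $col }
    END { print sum + 0 }
  '
}
```

For example: `./kafka-consumer-groups.sh --bootstrap-server 10.0.0.78:9092 --group logstash --describe | total_lag`. If the total stays near 0 while the curl tests run, the Logstash nodes are keeping up with Filebeat.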
