[Machine Learning] The k-Nearest Neighbors (kNN) Algorithm

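The idea behind the kNN algorithm is simple:

1. Birds of a feather flock together: the most intuitive evidence of class membership is proximity, so a point belongs with whichever points it is closest to.

2. To guard against outliers (special cases), take the N nearest points rather than a single one, and estimate the probability of each class from them.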

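So the algorithm works roughly as follows:

Compute the distance between the sample to predict and every record in the training set (which is not actually trained on), then sort the results. Take the N points with the smallest distances, count how many times each class appears among those N points, and sort the counts. The prediction is the class that appears most often among the N points.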
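The steps above can be sketched in plain Python, without NumPy, to make each one explicit. This is only an illustrative sketch: the names `euclidean` and `knn_predict` are made up for this example, and it assumes Euclidean distance and a simple majority vote.

```python
import math
from collections import Counter


def euclidean(p, q):
    """Straight-line distance between two points of equal dimension."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def knn_predict(query, samples, labels, n):
    """Return the most common label among the n samples closest to query."""
    # Step 1: distance from the query to every stored sample, paired with its label.
    scored = [(euclidean(query, s), label) for s, label in zip(samples, labels)]
    # Step 2: sort by distance and keep the n nearest.
    nearest = sorted(scored, key=lambda pair: pair[0])[:n]
    # Step 3: count how often each class appears among them.
    votes = Counter(label for _, label in nearest)
    # Step 4: the prediction is the class with the most votes.
    return votes.most_common(1)[0][0]


samples = [[1.0, 1.1], [1.0, 1.0], [0.0, 0.0], [0.0, 1.1]]
labels = ["A", "A", "B", "B"]
print(knn_predict([0.0, 0.0], samples, labels, n=3))  # prints B
```

With N = 3, the three nearest points to `[0, 0]` carry the labels B, B and A, so the vote goes to B.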

    from numpy import *
    import operator


    def createDataSet():
        group = array([[1.0, 1.1], [1.0, 1.0], [0, 0], [0, 1.1]])
        labels = ['A', 'A', 'B', 'B']
        return group, labels


    '''
    tile(array, (intR, intC)): build a matrix by repeating the array
        intR times vertically and intC times horizontally.
        For example, tile([1, 2, 3], (3, 2)) returns
        [
            [1, 2, 3, 1, 2, 3],
            [1, 2, 3, 1, 2, 3],
            [1, 2, 3, 1, 2, 3]
        ]
    Array subtraction: for two arrays with the same number of rows and columns,
        subtract element by element.
    argsort(array, axis): sort the array; axis=0 sorts down each column,
        axis=1 sorts along each row. The result holds the indices of the sorted
        order. For example, [0, 2, 1] means the sorted order is
        array[0], array[2], array[1].
    sorted(iterable, key, reverse): sort any iterable object.
    '''
    def classify0(intX, dataSet, labels, k):
        # Assume intX = [0, 0],
        # dataSet = array([[1.0, 1.1], [1.0, 1.0], [0, 0], [0, 1.1]]),
        # labels = ['A', 'A', 'B', 'B'], k = 3
        dataSetSize = dataSet.shape[0]  # number of rows: dataSetSize = 4
        # Element-wise difference:
        # diffMat = array([[-1.0, -1.1], [-1.0, -1.0], [0, 0], [0, -1.1]])
        diffMat = tile(intX, (dataSetSize, 1)) - dataSet
        # Square: sqDiffMat = array([[1, 1.21], [1, 1], [0, 0], [0, 1.21]])
        sqDiffMat = diffMat ** 2
        # Row sums (axis=0 would give column sums):
        # sqDistances = array([2.21, 2, 0, 1.21])
        sqDistances = sqDiffMat.sum(axis=1)
        # Square root: distances = array([1.49, 1.41, 0, 1.1])
        distances = sqDistances ** 0.5
        # Indices in ascending order of distance:
        # sortedDistIndicies = array([2, 3, 1, 0])
        sortedDistIndicies = distances.argsort()
        classCount = {}
        for i in range(k):
            # {label: count}: take the three nearest points and count how often
            # each label occurs, ending with classCount = {'B': 2, 'A': 1}
            voteIlabel = labels[sortedDistIndicies[i]]
            classCount[voteIlabel] = classCount.get(voteIlabel, 0) + 1
        # Sort the dict items by value (the vote count) in descending order:
        # sortedClassCount = [('B', 2), ('A', 1)]
        sortedClassCount = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)
        # Return the first element of the first tuple, i.e. the label with
        # the most votes; here that is 'B'
        return sortedClassCount[0][0]


    if __name__ == "__main__":
        group, labels = createDataSet()
        print(classify0([0, 0], group, labels, 3))
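The `tile` call in `classify0` copies the query point once per training row so that the two arrays have the same shape before subtracting. NumPy broadcasting should give the same difference matrix without the explicit copy. A small sketch using the four sample points from above (the variable names `with_tile` and `with_broadcast` are only for illustration):

```python
import numpy as np

data = np.array([[1.0, 1.1], [1.0, 1.0], [0.0, 0.0], [0.0, 1.1]])
query = np.array([0.0, 0.0])

# Explicit copy: repeat the query once per row, then subtract.
with_tile = np.tile(query, (data.shape[0], 1)) - data
# Broadcasting: NumPy stretches the 1-D query across the rows implicitly.
with_broadcast = query - data

# Euclidean distance of every row from the query.
distances = np.sqrt((with_broadcast ** 2).sum(axis=1))
# Row indices ordered from nearest to farthest.
order = distances.argsort()

print(np.array_equal(with_tile, with_broadcast))
print(distances.round(2))
print(order)
```

Both forms produce the same matrix, and the resulting order of indices is 2, 3, 1, 0: the point `[0, 0]` itself first, then `[0, 1.1]`, then `[1.0, 1.0]`, then `[1.0, 1.1]`.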

    '''
    Read data from a txt file.
    The first three values of each line (the inputs) are stored in returnMat,
    the fourth value is stored in classLabelVector.
    '''
    def file2matrix(filename):
        with open(filename) as fr:
            arrayOLines = fr.readlines()
        numberOfLines = len(arrayOLines)       # number of lines in the file
        returnMat = zeros((numberOfLines, 3))  # zero-filled (number of lines) x 3 matrix
        classLabelVector = []
        index = 0
        for line in arrayOLines:               # walk through every line of data
            line = line.strip()                # strip the trailing newline
            listFromLine = line.split('\t')    # split on tabs, returns a list
            returnMat[index, :] = listFromLine[0:3]         # first three values become one row of returnMat
            classLabelVector.append(int(listFromLine[-1]))  # last value goes into classLabelVector
            index += 1
        return returnMat, classLabelVector


    '''
    Normalization. One thing bothers me here: after this step every value
    certainly lies between 0 and 1, and I think this clearly changes the
    eigenvalues and eigenvectors of the matrix. At least I have not yet proven
    that this processing is an identity-preserving transformation of the matrix.
    '''
    def autoNorm(dataSet):
        minVals = dataSet.min(0)    # per-column minimum
        maxVals = dataSet.max(0)    # per-column maximum
        ranges = maxVals - minVals  # per-column range
        m = dataSet.shape[0]
        normDataSet = dataSet - tile(minVals, (m, 1))
        normDataSet = normDataSet / tile(ranges, (m, 1))
        return normDataSet, ranges, minVals


    '''
    Test on the dating data.
    '''
    def datingClassTest():
        hoRatio = 0.10  # hold out 0.10, i.e. 10% of the data, as the test set
        datingDataMat, datingLabels = file2matrix('datingTestSet.txt')  # read the data
        normMat, ranges, minVals = autoNorm(datingDataMat)              # normalize
        m = normMat.shape[0]            # total number of samples
        numTestVecs = int(m * hoRatio)  # number of test samples
        errorCount = 0.0
        for i in range(numTestVecs):
            # The test set is the first 10% of the data and the training set is
            # the remaining 90%; the 3 nearest training points vote on the prediction
            classifierResult = classify0(normMat[i, :], normMat[numTestVecs:m, :], datingLabels[numTestVecs:m], 3)
            print("the classifier came back with: %d, the real answer is: %d" % (classifierResult, datingLabels[i]))
            if classifierResult != datingLabels[i]:
                errorCount += 1.0
        print("the total error rate is: %f" % (errorCount / float(numTestVecs)))  # error rate
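On the normalization step: `classify0` only ever looks at Euclidean distances between rows, so the practical question is how rescaling changes those distances. Min-max scaling maps each column to [0, 1] via (x - min) / (max - min), which keeps a column with a large numeric range from swamping the others. The sketch below uses made-up numbers (not values from datingTestSet.txt) and a hypothetical helper `distances_to_first` to show the nearest neighbor changing once the columns are on a comparable scale.

```python
import numpy as np

# Made-up samples: column 0 runs into the tens of thousands, column 1 stays in [0, 1].
# The last two rows exist only to fix each column's min and max.
data = np.array([
    [20000.0, 0.9],   # reference point
    [21000.0, 0.1],   # close in column 0, far in column 1
    [26000.0, 0.9],   # farther in column 0, identical in column 1
    [0.0, 0.0],
    [100000.0, 1.0],
])


def min_max_normalize(x):
    """Rescale every column to [0, 1] using (x - min) / (max - min)."""
    min_vals = x.min(axis=0)
    ranges = x.max(axis=0) - min_vals
    return (x - min_vals) / ranges, ranges, min_vals


def distances_to_first(x):
    """Euclidean distance from row 0 to rows 1 and 2."""
    return np.sqrt(((x[1:3] - x[0]) ** 2).sum(axis=1))


norm, ranges, min_vals = min_max_normalize(data)
raw_dist = distances_to_first(data)
norm_dist = distances_to_first(norm)
print(raw_dist)   # column 0 dominates: row 1 looks nearest
print(norm_dist)  # both columns count: row 2 is nearest
```

On the raw data the large-valued column decides everything, so row 1 appears closest to the reference point; after scaling, the difference in the second column is no longer negligible and row 2 becomes the nearest.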

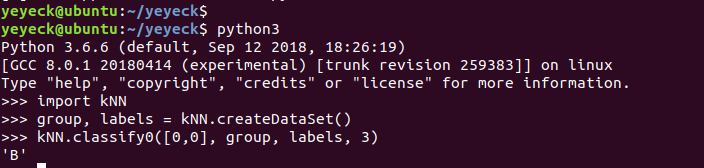

Test

    yeyeck@ubuntu:~/yeyeck$ python3
    Python 3.6.6 (default, Sep 12 2018, 18:26:19)
    [GCC 8.0.1 20180414 (experimental) [trunk revision 259383]] on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> import kNN
    >>> kNN.datingClassTest()
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 3, the real answer is: 2
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 3, the real answer is: 1
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 3, the real answer is: 1
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 2, the real answer is: 3
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 3, the real answer is: 3
    the classifier came back with: 2, the real answer is: 2
    the classifier came back with: 1, the real answer is: 1
    the classifier came back with: 3, the real answer is: 1
    the total error rate is: 0.050000

You are welcome to visit my personal blog:

https://yeyeck.com

