Scrapy: Setting a Random User-Agent and IP Proxy

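Most websites inspect request headers to decide whether a client is a crawler, and once a crawler is identified its requests are likely to be rejected. We therefore add request headers by hand to imitate a browser. When crawling one site heavily, though, sending the same User-Agent every time is not enough, so this section shows how to set a random User-Agent in Scrapy. In Scrapy this is done with a downloader middleware (Downloader Middleware).

Besides rotating the User-Agent, the other important way to keep the crawler from being rejected is to use IP proxies. The second part of this article demonstrates how to set a random IP proxy in Scrapy.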

Setting a Random User-Agent

To use random User-Agents we first have to prepare a batch of usable User-Agent strings ourselves, so start by adding the following to settings.py.

    MY_USER_AGENT = [
        "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",
        "Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
        "Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",
        "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",
        "Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",
        "Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",
        "Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",
        "Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",
        "Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",
        "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.11 TaoBrowser/2.0 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.71 Safari/537.1 LBBROWSER",
        "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; LBBROWSER)",
        "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E; LBBROWSER)",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.84 Safari/535.11 LBBROWSER",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E)",
        "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; QQBrowser/7.0.3698.400)",
        "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SV1; QQDownload 732; .NET4.0C; .NET4.0E; 360SE)",
        "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E)",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.89 Safari/537.1",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.89 Safari/537.1",
        "Mozilla/5.0 (iPad; U; CPU OS 4_2_1 like Mac OS X; zh-cn) AppleWebKit/533.17.9 (KHTML, like Gecko) Version/5.0.2 Mobile/8C148 Safari/6533.18.5",
        "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:2.0b13pre) Gecko/20110307 Firefox/4.0b13pre",
        "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:16.0) Gecko/20100101 Firefox/16.0",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11",
        "Mozilla/5.0 (X11; U; Linux x86_64; zh-CN; rv:1.9.2.10) Gecko/20100922 Ubuntu/10.10 (maverick) Firefox/3.6.10",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",
    ]

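You can of course search for more User-Agent strings and add them to the list.

Next, add the following to middlewares.py. This is the main logic for setting the User-Agent; the code comes first and the explanation follows.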

    import random

    from scrapy.downloadermiddlewares.useragent import UserAgentMiddleware


    class MyUserAgentMiddleware(UserAgentMiddleware):
        """Set a random User-Agent on every request."""

        def __init__(self, user_agent):
            # Here user_agent is the whole list of candidate strings
            self.user_agent = user_agent

        @classmethod
        def from_crawler(cls, crawler):
            # Read the candidate list from settings.py
            return cls(user_agent=crawler.settings.get('MY_USER_AGENT'))

        def process_request(self, request, spider):
            # Pick one string at random and overwrite the header
            agent = random.choice(self.user_agent)
            request.headers['User-Agent'] = agent

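The whole process is simple. Leaving the imports aside, we define a class that inherits from UserAgentMiddleware. As mentioned earlier, Scrapy provides the from_crawler() class method for accessing the project settings; here it reads our MY_USER_AGENT list from settings. process_request() then picks one entry at random and writes it into the request headers. It implicitly returns None, which tells Scrapy to keep processing the request.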
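The selection step can be tried without running a crawl. The sketch below is a stand-in, not Scrapy code: `FakeRequest` and `apply_random_user_agent` are hypothetical names, and a plain dict takes the place of Scrapy's headers object.

```python
import random


class FakeRequest:
    """Hypothetical stand-in for scrapy.Request; it only carries headers."""

    def __init__(self, url):
        self.url = url
        self.headers = {}


def apply_random_user_agent(request, agents, rng=random):
    """Pick one agent at random and write it into the request headers."""
    request.headers['User-Agent'] = rng.choice(agents)
    return None  # mirrors process_request, where None means "continue"


AGENTS = [
    "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:16.0) Gecko/20100101 Firefox/16.0",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",
]

# example.com URLs are placeholders for illustration only
sample_requests = [FakeRequest("http://example.com/page/%d" % i) for i in range(5)]
for req in sample_requests:
    apply_random_user_agent(req, AGENTS)
    print(req.url, "->", req.headers['User-Agent'])
```

Each run prints a different mix of the two agents, which is the behaviour the middleware relies on.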

The last step is to add our custom MyUserAgentMiddleware class to DOWNLOADER_MIDDLEWARES, like this.

    DOWNLOADER_MIDDLEWARES = {
        # Disable the built-in middleware (note the module name is "downloadermiddlewares")
        'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
        'myproject.middlewares.MyUserAgentMiddleware': 400,
    }
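To see what the None value and the number 400 do, here is a simplified model of how the setting is combined with Scrapy's defaults. `effective_order` is a hypothetical helper, not a Scrapy function, and `BASE` lists only three of the default entries with the order values used in recent Scrapy releases; check DOWNLOADER_MIDDLEWARES_BASE in your own version.

```python
# Partial copy of the default order table (assumed values, verify locally)
BASE = {
    'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware': 400,
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': 500,
    'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 750,
}

CUSTOM = {
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
    'myproject.middlewares.MyUserAgentMiddleware': 400,
}


def effective_order(base, custom):
    """Merge both tables, drop entries set to None, sort by order number."""
    merged = {**base, **custom}
    enabled = {path: order for path, order in merged.items() if order is not None}
    return sorted(enabled, key=enabled.get)


for path in effective_order(BASE, CUSTOM):
    print(path)
```

In this model the built-in UserAgentMiddleware disappears from the chain, and the custom class handles each request before HttpProxyMiddleware because lower numbers run first on the way to the downloader.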

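That completes the User-Agent setup.

Setting a Random IP Proxy

Likewise, to use IP proxies you first need to find working ones. Some proxy sites publish free IP proxies, but their stability and availability are hard to guarantee. While you are learning there is little choice but to hunt for them; later on there are better ways to obtain usable proxies. Once you have working proxies, add them to settings.py as shown below. By the time you read this, the addresses listed here will certainly have expired, so you will need to find your own.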

    PROXIES = [
        'http://183.207.95.27:80',
        'http://111.6.100.99:80',
        'http://122.72.99.103:80',
        'http://106.46.132.2:80',
        'http://112.16.4.99:81',
        'http://123.58.166.113:9000',
        'http://118.178.124.33:3128',
        'http://116.62.11.138:3128',
        'http://121.42.176.133:3128',
        'http://111.13.2.131:80',
        'http://111.13.7.117:80',
        'http://121.248.112.20:3128',
        'http://112.5.56.108:3128',
        'http://42.51.26.79:3128',
        'http://183.232.65.201:3128',
        'http://118.190.14.150:3128',
        'http://123.57.221.41:3128',
        'http://183.232.65.203:3128',
        'http://166.111.77.32:3128',
        'http://42.202.130.246:3128',
        'http://122.228.25.97:8101',
        'http://61.136.163.245:3128',
        'http://121.40.23.227:3128',
        'http://123.96.6.216:808',
        'http://59.61.72.202:8080',
        'http://114.141.166.242:80',
        'http://61.136.163.246:3128',
        'http://60.31.239.166:3128',
        'http://114.55.31.115:3128',
        'http://202.85.213.220:3128',
    ]

Then add the following code to middlewares.py.

    import random


    class ProxyMiddleware(object):
        """Set a random proxy on every request."""

        def __init__(self, ip):
            # ip is the list of proxy URLs from settings.py
            self.ip = ip

        @classmethod
        def from_crawler(cls, crawler):
            return cls(ip=crawler.settings.get('PROXIES'))

        def process_request(self, request, spider):
            # Scrapy reads the proxy to use from request.meta['proxy']
            ip = random.choice(self.ip)
            request.meta['proxy'] = ip

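The logic is very similar to the User-Agent middleware in the previous section, so it is not repeated here.

Finally, add the custom class to the downloader middleware setting, as follows.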

    DOWNLOADER_MIDDLEWARES = {
        'myproject.middlewares.ProxyMiddleware': 543,
    }
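Because free proxies fail often, as noted above, it can help to drop dead entries as the crawl goes on. The following is a minimal sketch under that assumption; `ProxyPool`, `pick`, and `mark_failed` are hypothetical names that do not come from Scrapy or from the original article.

```python
import random
from urllib.parse import urlparse


class ProxyPool:
    """Hypothetical helper that hands out proxies and forgets failed ones."""

    def __init__(self, proxies):
        # Keep only entries that look like http(s)://host:port
        self._alive = [p for p in proxies if self._is_valid(p)]

    @staticmethod
    def _is_valid(proxy):
        try:
            parts = urlparse(proxy)
            return (parts.scheme in ('http', 'https')
                    and bool(parts.hostname)
                    and parts.port is not None)
        except ValueError:
            # Raised for malformed hosts or non-numeric ports
            return False

    def pick(self):
        if not self._alive:
            raise LookupError("no usable proxies left")
        return random.choice(self._alive)

    def mark_failed(self, proxy):
        if proxy in self._alive:
            self._alive.remove(proxy)

    def __len__(self):
        return len(self._alive)


pool = ProxyPool(['http://183.207.95.27:80', 'http://111.6.100.99:80', 'not-a-proxy'])
print(len(pool))            # the malformed entry is ignored
first = pool.pick()
pool.mark_failed(first)     # e.g. after a connection error
print(len(pool), pool.pick())
```

In a middleware, `pick()` could supply the value for request.meta['proxy'] inside process_request, and `mark_failed()` could be called from process_exception when a download through that proxy fails.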

Testing the Proxy

To check whether the proxy is set up correctly, we test it with an example.

Put the following code in spider.py.

    import scrapy


    class Spider(scrapy.Spider):
        name = 'ip'
        allowed_domains = []

        def start_requests(self):
            url = 'http://ip.chinaz.com/getip.aspx'
            for i in range(4):
                # dont_filter=True keeps the repeated URL from being dropped
                yield scrapy.Request(url=url, callback=self.parse, dont_filter=True)

        def parse(self, response):
            print(response.text)

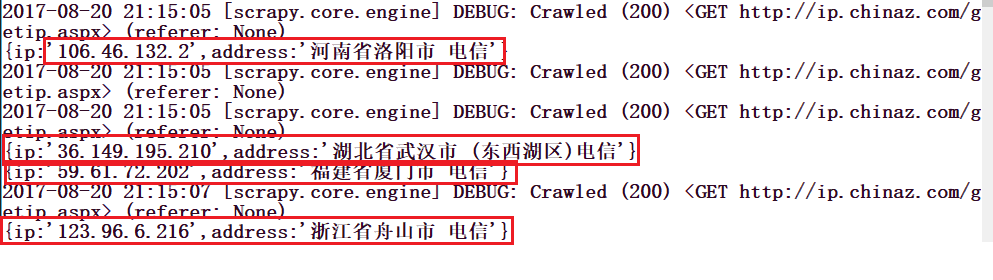

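In the code above, the site at url = 'http://ip.chinaz.com/getip.aspx' reports the IP address it sees, so we use it for the test. Note that dont_filter=True is required in Request(): we request the same URL several times, and by default Scrapy filters out duplicate requests. Run the project and the IP address seen by the site is printed for each request.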
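The effect of dont_filter can be illustrated with a toy model. `SimpleScheduler` below is a hypothetical class that remembers URLs in a set; Scrapy's real duplicate filter works on request fingerprints rather than bare URLs, so treat this only as a sketch of the idea.

```python
class SimpleScheduler:
    """Toy model of duplicate filtering; not Scrapy's implementation."""

    def __init__(self):
        self._seen = set()
        self.queue = []

    def enqueue(self, url, dont_filter=False):
        # A URL seen before is dropped unless the caller opts out
        if not dont_filter and url in self._seen:
            return False
        self._seen.add(url)
        self.queue.append(url)
        return True


URL = 'http://ip.chinaz.com/getip.aspx'

filtered = SimpleScheduler()
for _ in range(4):
    filtered.enqueue(URL)

unfiltered = SimpleScheduler()
for _ in range(4):
    unfiltered.enqueue(URL, dont_filter=True)

print(len(filtered.queue), len(unfiltered.queue))  # prints: 1 4
```

Without the flag only the first of the four identical requests would be scheduled, so the test spider would show a single IP address.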

Setting an IP Pool or a User-Agent Pool

(1) Setting an IP pool

Step 1: Add the proxy servers' IP information to settings.py, for example:

    # IP pool
    IPPOOL = [
        {"ipaddr": "221.230.72.165:80"},
        {"ipaddr": "175.154.50.162:8118"},
        {"ipaddr": "111.155.116.212:8123"}
    ]

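Step 2: Create the downloader middleware file middlewares.py (in the same directory as settings.py), for example:

# How to create it from the cmd command line, for a project named modetest: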

E:\workspace\PyCharm\codeSpace\modetest\modetest>echo #middlewares.py

    # -*- coding: utf-8 -*-
    # Standard-library random module
    import random

    # IPPOOL defined in settings.py
    from .settings import IPPOOL
    # Built-in HttpProxyMiddleware (current path; scrapy.contrib is deprecated)
    from scrapy.downloadermiddlewares.httpproxy import HttpProxyMiddleware


    class IPPOOlS(HttpProxyMiddleware):
        # Initialization
        def __init__(self, ip=''):
            self.ip = ip

        # Request handling
        def process_request(self, request, spider):
            # Pick a random IP first
            thisip = random.choice(IPPOOL)
            print("Current proxy IP: " + thisip["ipaddr"])
            request.meta["proxy"] = "http://" + thisip["ipaddr"]

Step 3: Configure the downloader middleware in settings.py

    # Downloader middleware configuration
    DOWNLOADER_MIDDLEWARES = {
        'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 123,
        'modetest.middlewares.IPPOOlS': 125
    }
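The IPPOOL entries store bare host:port strings, while the PROXIES list earlier stores full URLs, which is why the middleware prepends "http://". A small sketch of that conversion is shown below; `to_proxy_url` is a hypothetical helper, and defaulting to the http scheme is an assumption.

```python
def to_proxy_url(entry, scheme='http'):
    """Turn {'ipaddr': 'host:port'} into a proxy URL for request.meta['proxy']."""
    address = entry['ipaddr'].strip()
    if '://' in address:
        # Already a full URL, leave it unchanged
        return address
    return '%s://%s' % (scheme, address)


IPPOOL = [
    {"ipaddr": "221.230.72.165:80"},
    {"ipaddr": "175.154.50.162:8118"},
]

for entry in IPPOOL:
    print(to_proxy_url(entry))
```

Keeping the conversion in one place makes it easier to mix both styles of entry in the same pool.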

(2) Setting user agents

Step 1: Add the user-agent pool to settings.py (a few browser 'User-Agent' strings), for example:

    # User-agent pool
    UPPOOL = [
        "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.115 Safari/537.36",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.79 Safari/537.36 Edge/14.14393"
    ]

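Step 2: Create the downloader middleware file uamid.py (in the same directory as settings.py), for example:

# How to create it from the cmd command line, for a project named modetest:

E:\workspace\PyCharm\codeSpace\modetest\modetest>echo #uamid.py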

    # -*- coding: utf-8 -*-
    # Standard-library random module
    import random

    # UPPOOL defined in settings.py
    from .settings import UPPOOL
    # Built-in UserAgentMiddleware (current path; scrapy.contrib is deprecated)
    from scrapy.downloadermiddlewares.useragent import UserAgentMiddleware


    class Uamid(UserAgentMiddleware):
        # Initialization; the parameter must be named user_agent or errors are likely
        def __init__(self, user_agent=''):
            self.user_agent = user_agent

        # Request handling
        def process_request(self, request, spider):
            # Pick a random user agent first
            thisua = random.choice(UPPOOL)
            print("Current User-Agent: " + thisua)
            request.headers.setdefault('User-Agent', thisua)

Step 3: Configure the downloader middleware in settings.py

    # Downloader middleware configuration
    DOWNLOADER_MIDDLEWARES = {
        'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': 2,
        'modetest.uamid.Uamid': 1
    }
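The order numbers matter here because Uamid uses setdefault, which only writes the header when it is still missing. The sketch below models that with plain dicts; the function names and the 'default-agent' and 'custom-agent' strings are placeholders, and the built-in middleware is represented only by its setdefault behaviour.

```python
def builtin_user_agent(headers):
    # Stand-in for the built-in middleware, which also uses setdefault
    headers.setdefault('User-Agent', 'default-agent')


def custom_setdefault(headers):
    # Same approach as Uamid: fill the header only if it is absent
    headers.setdefault('User-Agent', 'custom-agent')


def custom_assign(headers):
    # Same approach as MyUserAgentMiddleware: always overwrite
    headers['User-Agent'] = 'custom-agent'


def run_chain(middlewares, headers):
    """Apply each step in order, as process_request is called by ascending order."""
    for step in middlewares:
        step(headers)
    return headers


custom_first = run_chain([custom_setdefault, builtin_user_agent], {})
builtin_first = run_chain([builtin_user_agent, custom_setdefault], {})
assign_last = run_chain([builtin_user_agent, custom_assign], {})

print(custom_first['User-Agent'])   # custom-agent
print(builtin_first['User-Agent'])  # default-agent
print(assign_last['User-Agent'])    # custom-agent
```

With Uamid at order 1 and the built-in middleware at 2, the custom value is set first and survives. If the custom class ran later, plain assignment would be needed to replace the default.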

In short, this kind of configuration is sometimes unavoidable, so everything is collected below in one block that can be pasted at the end of settings.py.

    # IP pool and user-agent settings
    # Disable local cookies
    COOKIES_ENABLED = False

    # IP pool
    IPPOOL = [
        {"ipaddr": "221.230.72.165:80"},
        {"ipaddr": "175.154.50.162:8118"},
        {"ipaddr": "111.155.116.212:8123"}
    ]

    # User-agent pool
    UPPOOL = [
        "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.115 Safari/537.36",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.79 Safari/537.36 Edge/14.14393"
    ]

    # Downloader middleware configuration
    DOWNLOADER_MIDDLEWARES = {
        #'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 123,
        #'modetest.middlewares.IPPOOlS': 125,
        'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': 2,
        'modetest.uamid.Uamid': 1
    }

————————————————

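Copyright notice: This is an original article by CSDN blogger 「菲宇」, released under the CC 4.0 BY-SA license. When reposting, please include the original source link and this notice.

Original link: https://blog.csdn.net/bbwangj/article/details/89891463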
