Integrating Kafka with Spring Boot

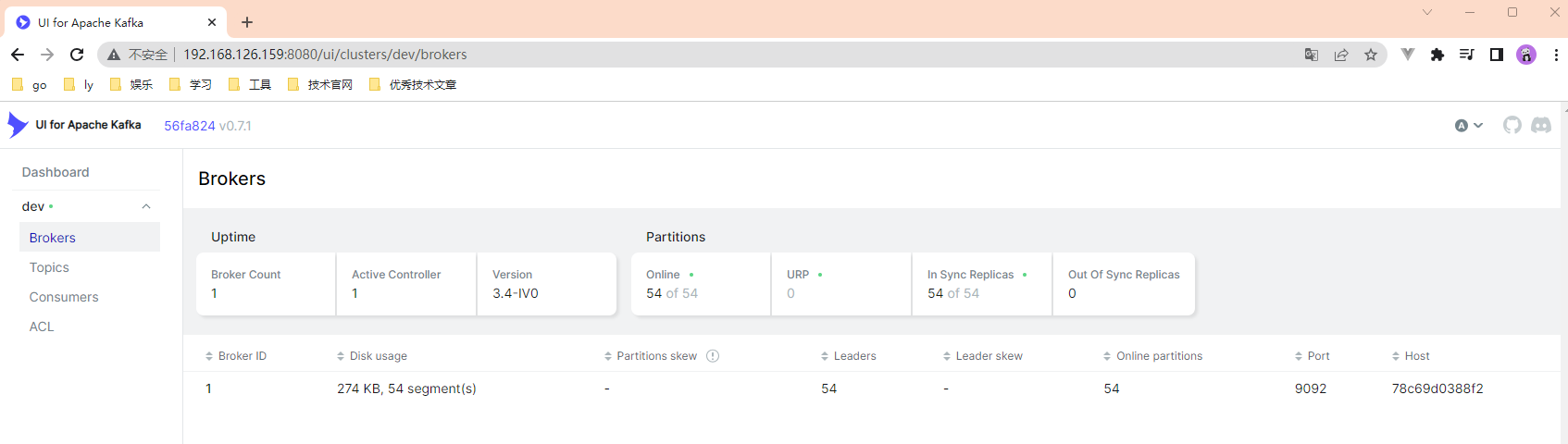

1. Install Kafka

Kafka is installed here with Docker Compose.

(1) Install Docker and Docker Compose

sudo yum install -y yum-utils
sudo yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo
sudo yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin
sudo systemctl enable docker --now

# Check that Docker works
docker ps

# Check that the Compose plugin is installed (it starts all services below in one go)
docker compose version

(2) docker-compose.yml

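version: '3.9'

services:
  zookeeper:
    image: bitnami/zookeeper:latest
    container_name: zookeeper
    restart: always
    environment:
      # The Bitnami image refuses to start without auth unless anonymous login is allowed
      ALLOW_ANONYMOUS_LOGIN: "yes"
      # Bitnami variable names (ZOOKEEPER_CLIENT_PORT / ZOOKEEPER_TICK_TIME belong to other images)
      ZOO_PORT_NUMBER: 2181
      ZOO_TICK_TIME: 2000
    networks:
      - backend

  kafka:
    image: bitnami/kafka:3.4.0
    container_name: kafka
    restart: always
    depends_on:
      - zookeeper
    ports:
      - "9092:9092"
    environment:
      ALLOW_PLAINTEXT_LISTENER: "yes"
      # Bitnami Kafka 3.4 defaults to KRaft mode; disable it to use ZooKeeper
      KAFKA_ENABLE_KRAFT: "no"
      KAFKA_CFG_ZOOKEEPER_CONNECT: zookeeper:2181
      # Bitnami maps KAFKA_CFG_* to server.properties; a single broker allows only one replica
      KAFKA_CFG_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
    networks:
      - backend

  # Kafka UI web console
  kafka-ui:
    image: provectuslabs/kafka-ui:latest
    container_name: kafka-ui
    restart: always
    depends_on:
      - kafka
    ports:
      - "8080:8080"
    environment:
      KAFKA_CLUSTERS_0_NAME: dev
      KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: kafka:9092
    networks:
      - backend

networks:
  backend:
    name: backend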

(3) Start everything with one command

docker compose -f docker-compose.yml up -d

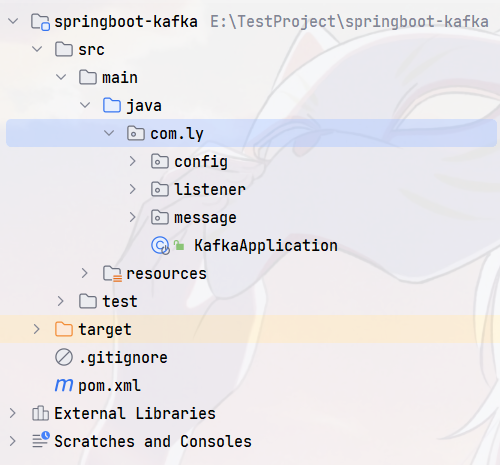
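Before wiring up Spring Boot, it can help to confirm from the development machine that the broker port published above is reachable. The following is a minimal JDK-only sketch; `BrokerPortCheck` is a hypothetical helper, not part of the original project, and it only checks that a TCP connection to port 9092 succeeds, not that Kafka itself is healthy. The default IP is the Docker host used in the Spring Boot configuration of this article; replace it with your own.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class BrokerPortCheck {

    // Returns true if a TCP connection to host:port opens within timeoutMs.
    // This only proves the port is open; it does not speak the Kafka protocol.
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or the hostname could not be resolved
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: the Docker host IP from the Spring Boot configuration; pass another as args[0].
        String host = args.length > 0 ? args[0] : "192.168.126.159";
        System.out.println(host + ":9092 reachable = " + isReachable(host, 9092, 2000));
    }
}
```

A reachable port does not rule out the hostname problem described later: the client first connects to the bootstrap address, then switches to whatever address the broker advertises.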

2. Integrating Kafka with Spring Boot

(1) Set up the base project

pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.ly</groupId>
    <artifactId>springboot-kafka</artifactId>
    <version>1.0-SNAPSHOT</version>

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.1.0</version>
    </parent>

    <properties>
        <maven.compiler.source>17</maven.compiler.source>
        <maven.compiler.target>17</maven.compiler.target>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <!-- Version managed by the Spring Boot parent -->
        <dependency>
            <groupId>org.springframework.kafka</groupId>
            <artifactId>spring-kafka</artifactId>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <version>1.18.28</version>
            <optional>true</optional>
        </dependency>
        <!-- Only needed to compile and run tests -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
</project>

Configuration file (application.yml)

server:
  port: 9000

spring:
  kafka:
    # Address of the Docker host running Kafka
    bootstrap-servers:
      - 192.168.126.159:9092
    producer:
      # Serializer for message keys
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      # Serializer for message values (JSON, so objects such as Person can be sent)
      value-serializer: org.springframework.kafka.support.serializer.JsonSerializer
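Why the value serializer matters: Kafka only transports bytes, so the producer converts every key and value with the configured serializer. Spring Boot's default for both is `StringSerializer`, which only accepts `String`, so sending a `Person` with the defaults fails. The JDK-only sketch below uses a hypothetical `ValueSerializer` interface that mirrors the shape of Kafka's `Serializer<T>` (`byte[] serialize(String topic, T data)`). The hand-written JSON is only a stand-in for what Spring's `JsonSerializer` produces through Jackson, and the `Person` fields (`name`, `age`) are an assumption.

```java
import java.nio.charset.StandardCharsets;

final class SerializerSketch {

    // Hypothetical interface with the same shape as
    // org.apache.kafka.common.serialization.Serializer<T>#serialize(String, T).
    interface ValueSerializer<T> {
        byte[] serialize(String topic, T data);
    }

    // Assumed shape of com.ly.message.Person (field names are a guess).
    record Person(String name, int age) {}

    // What StringSerializer does by default: encode the String as UTF-8.
    static final ValueSerializer<String> STRING = (topic, data) ->
            data == null ? null : data.getBytes(StandardCharsets.UTF_8);

    // Stand-in for JsonSerializer: object -> JSON text -> UTF-8 bytes.
    // The real JsonSerializer delegates to a Jackson ObjectMapper instead.
    static final ValueSerializer<Person> PERSON_JSON = (topic, p) -> {
        if (p == null) {
            return null;
        }
        String name = p.name() == null ? "null" : "\"" + escape(p.name()) + "\"";
        String json = "{\"name\":" + name + ",\"age\":" + p.age() + "}";
        return json.getBytes(StandardCharsets.UTF_8);
    };

    // Minimal JSON escaping for backslashes and quotes (enough for this sketch).
    private static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    public static void main(String[] args) {
        byte[] key = STRING.serialize("news2", "obj-1");
        byte[] value = PERSON_JSON.serialize("news2", new Person("ly", 25));
        System.out.println(new String(key, StandardCharsets.UTF_8));   // obj-1
        System.out.println(new String(value, StandardCharsets.UTF_8)); // {"name":"ly","age":25}
    }
}
```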

(2) Send messages

KafkaTest

package com.ly;

import com.ly.message.Person;
import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.support.SendResult;
import org.springframework.util.StopWatch;

import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;

/**
 * @author ly (personal blog: https://www.cnblogs.com/ybbit)
 * @date 2023-07-01 14:03
 * @tags If you want something, work hard for it
 */
@SpringBootTest
public class KafkaTest {

    @Autowired
    private KafkaTemplate<String, Object> kafkaTemplate;

    /**
     * Send 10,000 string messages asynchronously and measure the total time.
     */
    @Test
    public void testSend() {
        // Start timing
        StopWatch stopWatch = new StopWatch();
        CompletableFuture<?>[] futures = new CompletableFuture<?>[10000];
        stopWatch.start();
        for (int i = 0; i < 10000; i++) {
            // send() is asynchronous and returns a future immediately
            CompletableFuture<SendResult<String, Object>> future =
                    kafkaTemplate.send("news", "ly-" + i, "我的宝" + i);
            futures[i] = future;
        }
        // Block until every send has completed
        CompletableFuture.allOf(futures).join();
        stopWatch.stop();
        long time = stopWatch.getTotalTimeMillis();
        System.out.println("Elapsed time: " + time + " ms");
    }

    /**
     * Send an object.
     * The value serializer must be configured (JsonSerializer in application.yml);
     * with the default StringSerializer, sending a Person fails with a serialization error.
     *
     * @throws ExecutionException   if the send fails
     * @throws InterruptedException if the thread is interrupted while waiting
     */
    @Test
    public void sendObject() throws ExecutionException, InterruptedException {
        CompletableFuture<SendResult<String, Object>> future =
                kafkaTemplate.send("news2", "obj-1", new Person("ly", 25));
        // get() blocks until the send completes
        System.out.println("future.get() = " + future.get());
    }
}
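`testSend` is fast because `KafkaTemplate.send` is asynchronous: each call returns a `CompletableFuture` at once, and `CompletableFuture.allOf(...).join()` waits for all sends before the stopwatch stops. The JDK-only sketch below reproduces just that fan-out/join structure; `sendAsync` is a hypothetical stand-in that completes on an executor thread and is not Kafka code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class BatchSendSketch {

    // Hypothetical stand-in for kafkaTemplate.send(topic, key, value):
    // returns immediately and completes later on another thread.
    static CompletableFuture<String> sendAsync(ExecutorService io, String topic, String key, String value) {
        return CompletableFuture.supplyAsync(() -> topic + "/" + key + "=" + value, io);
    }

    // Fires all sends first, then waits for every one of them, like testSend.
    static List<String> sendAll(int count) {
        ExecutorService io = Executors.newFixedThreadPool(4);
        try {
            List<CompletableFuture<String>> futures = new ArrayList<>(count);
            for (int i = 0; i < count; i++) {
                futures.add(sendAsync(io, "news", "ly-" + i, "msg-" + i));
            }
            // allOf(...).join() blocks until every future completes
            // and rethrows (wrapped) if any of them failed.
            CompletableFuture.allOf(futures.toArray(new CompletableFuture<?>[0])).join();
            List<String> results = new ArrayList<>(count);
            for (CompletableFuture<String> f : futures) {
                results.add(f.join()); // already complete, so this does not block
            }
            return results;
        } finally {
            io.shutdown();
        }
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        List<String> results = sendAll(10_000);
        long ms = (System.nanoTime() - start) / 1_000_000;
        System.out.println(results.size() + " sends completed in " + ms + " ms");
    }
}
```

Calling `get()` inside the loop instead would wait for each send in turn and lose most of the batching benefit.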

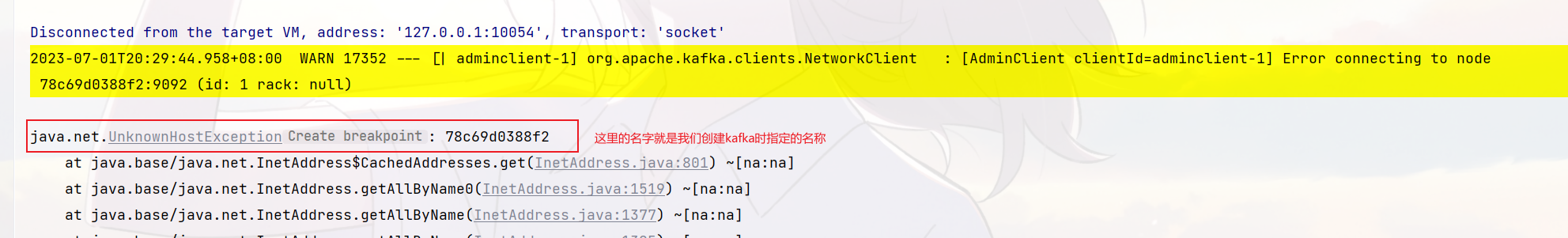

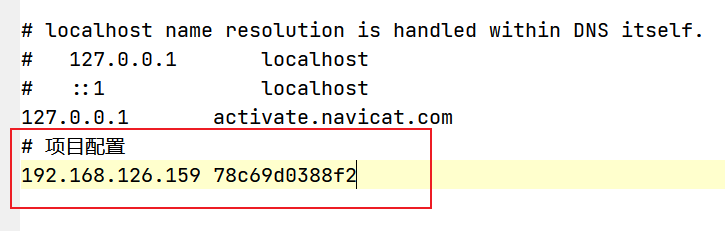

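Note: when this test runs, the client reports that it cannot find the Kafka host. The broker typically advertises its container hostname to clients in the cluster metadata, and the Windows development machine cannot resolve that name.

Fix: edit the hosts file (as Administrator) and map the hostname from the error message to the IP of the Docker host (192.168.126.159 in the configuration above).

Tip: the hosts file is located at:

C:\Windows\System32\drivers\etc\hosts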

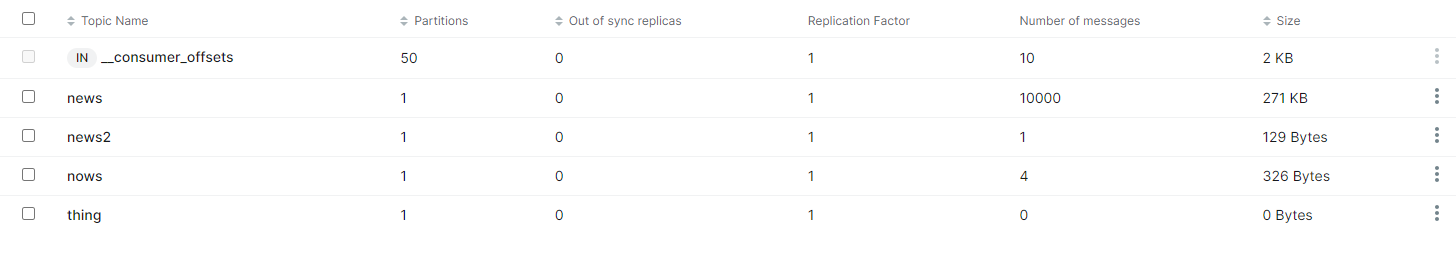
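To confirm the hosts entry took effect, you can check from Java that the hostname in the error now resolves to the Docker host's IP. A minimal JDK-only sketch; `HostsCheck` and its default hostname `kafka` are hypothetical placeholders, so pass the name your error message actually reports.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.Optional;

final class HostsCheck {

    // Resolves a hostname through the JVM's normal resolver, which goes through
    // the operating system (and therefore the hosts file). Empty if unresolvable.
    static Optional<String> resolve(String hostname) {
        try {
            return Optional.of(InetAddress.getByName(hostname).getHostAddress());
        } catch (UnknownHostException e) {
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        // Placeholder: pass the broker hostname shown in the error message as args[0].
        String host = args.length > 0 ? args[0] : "kafka";
        System.out.println(host + " -> " + resolve(host).orElse("NOT RESOLVED"));
    }
}
```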

Test results
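The `sendObject` test imports `com.ly.message.Person`, but its source is not listed in the text. Below is a minimal sketch of what it might look like, assuming two fields `name` and `age` to match `new Person("ly", 25)`. Jackson (used by `JsonSerializer`) reads the getters, and a consumer deserializing JSON back into `Person` would also need the no-argument constructor. Since the pom already includes Lombok, `@Data`, `@NoArgsConstructor` and `@AllArgsConstructor` would generate equivalent members; the plain Java version compiles without annotation processing.

```java
import java.util.Objects;

// Hypothetical reconstruction of com.ly.message.Person (fields are assumed).
// In the project, add: package com.ly.message;
class Person {

    private String name;
    private int age;

    // Needed by Jackson when JSON is deserialized back into a Person.
    public Person() {
    }

    public Person(String name, int age) {
        this.name = name;
        this.age = age;
    }

    // Jackson serializes the object through these getters.
    public String getName() { return name; }
    public void setName(String name) { this.name = name; }
    public int getAge() { return age; }
    public void setAge(int age) { this.age = age; }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof Person other)) return false;
        return age == other.age && Objects.equals(name, other.name);
    }

    @Override
    public int hashCode() { return Objects.hash(name, age); }

    // Same format as Lombok's @Data toString
    @Override
    public String toString() { return "Person(name=" + name + ", age=" + age + ")"; }
}
```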

(3) Consume messages

LyKafkaListener

package com.ly.listener;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.kafka.annotation.PartitionOffset;
import org.springframework.kafka.annotation.TopicPartition;
import org.springframework.stereotype.Component;

/**
 * @author ly (personal blog: https://www.cnblogs.com/ybbit)
 * @date 2023-07-01 14:57
 * @tags If you want something, work hard for it
 */
@Component
public class LyKafkaListener {

    /**
     * A new consumer group starts from the latest offset by default
     * (auto.offset.reset=latest), so it only receives messages sent after it starts.
     *
     * @param consumerRecord the received record
     */
    @KafkaListener(topics = "news", groupId = "ly")
    public void listener1(ConsumerRecord<String, String> consumerRecord) {
        String topic = consumerRecord.topic();
        System.out.println("Other info ============ topic = " + topic);
        String key = consumerRecord.key();
        String value = consumerRecord.value();
        System.out.println("listener1 received ============ key = value: " + key + "=" + value);
    }

    /**
     * Read all messages in partition 0, starting from offset 0 on every startup.
     *
     * @param consumerRecord the received record
     */
    @KafkaListener(groupId = "baobao", topicPartitions = {
            @TopicPartition(topic = "news",
                    partitionOffsets = {
                            @PartitionOffset(partition = "0", initialOffset = "0")
                    })
    })
    public void listener2(ConsumerRecord<String, String> consumerRecord) {
        String key = consumerRecord.key();
        String value = consumerRecord.value();
        System.out.println("listener2 received ============ key = value: " + key + "=" + value);
    }
}
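The two listeners differ mainly in where they start reading. As a rough JDK-only model of the rules involved (not Spring or Kafka code; `StartOffsetModel` and its parameters are hypothetical): an explicit `initialOffset`, like listener2's `"0"`, is applied at startup; otherwise a group resumes from its committed offset; a group with no committed offset falls back to `auto.offset.reset`, whose Kafka default `latest` is why listener1 only sees messages produced after it joins.

```java
final class StartOffsetModel {

    enum ResetPolicy { EARLIEST, LATEST }

    /**
     * Returns the offset a consumer would start reading one partition from.
     *
     * @param explicitInitial offset from @PartitionOffset(initialOffset = ...), or null if none
     * @param committed       offset the consumer group committed earlier, or null if none
     * @param reset           value of auto.offset.reset (Kafka's default is LATEST)
     * @param logStart        first offset still stored in the partition
     * @param logEnd          offset the next produced record will receive
     */
    static long startOffset(Long explicitInitial, Long committed, ResetPolicy reset,
                            long logStart, long logEnd) {
        if (explicitInitial != null) {
            return explicitInitial;   // listener2: seek to 0 on every startup
        }
        if (committed != null) {
            return committed;         // existing group: resume where it left off
        }
        // New group with nothing committed: apply auto.offset.reset
        return reset == ResetPolicy.EARLIEST ? logStart : logEnd;
    }

    public static void main(String[] args) {
        long logEnd = 10_000; // e.g. after testSend wrote 10,000 records to a single partition
        // listener1 on its first start: new group "ly", default reset policy
        System.out.println(startOffset(null, null, ResetPolicy.LATEST, 0, logEnd)); // 10000
        // listener2: explicit initialOffset = 0 overrides any committed offset
        System.out.println(startOffset(0L, 500L, ResetPolicy.LATEST, 0, logEnd));   // 0
    }
}
```

The model ignores edge cases such as an explicit offset that is out of range, which Kafka also handles through the reset policy.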

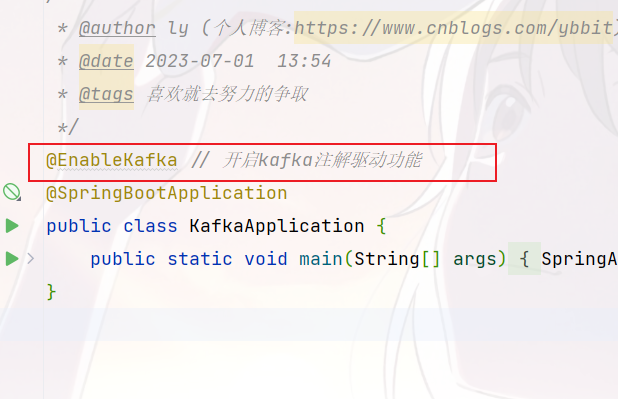

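Note: `@KafkaListener` methods only run when Kafka's annotation-driven listener support is enabled with `@EnableKafka`, for example on the main application class. Spring Boot's Kafka auto-configuration already applies `@EnableKafka`, so adding it explicitly is harmless but usually optional.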

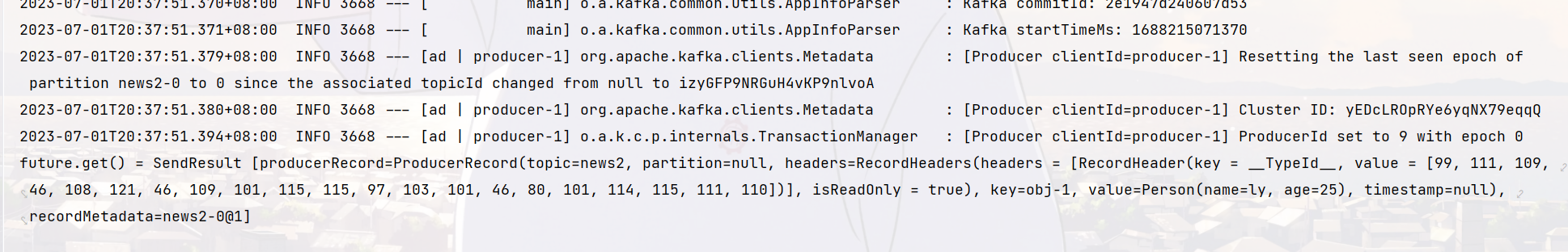

Test results

For more detailed usage, see the official Spring Boot documentation on Kafka integration.

浙公网安备 33010602011771号
