http://www.fxguide.com/featured/pixars-opensubdiv-v2-a-detailed-look/

Pixar’s OpenSubdiv V2: A detailed look

By Mike Seymour

September 18, 2013

Subdivision is a key modeling tool that allows greater accuracy, and the OpenSubdiv project aims to standardize and speed up the process. It is production proven and about to be adopted for wide scale use by products like Autodesk’s Maya and Side Effects Software’s Houdini. In this exclusive and in-depth piece we explore the history, the uses and the innovative new approaches OpenSubdiv offers.

The subdivision of a surface is really a process whereby one can represent a smooth surface via a coarser piecewise linear polygon mesh, and then calculate a smooth surface as the limit of a recursive process of subdividing each polygonal face into smaller faces that better approximate the smooth surface. OpenSubdiv is at the heart of Pixar’s modeling pipeline and it is now being expanded to many other pipelines due to its open source approach.

Catmull-Clark subdivision surfaces were originally invented in the 1970s. The specification was extended with local edits, creases, and other features, and formalized into a usable technique for animation in 1998 at Pixar. Until recently the technology had not enjoyed widespread adoption in animation. This is about to change. Pixar has now decided to release its subdivision patents and working codebase in the hope that giving away its high-performance GPU-accelerated code will create a standard for geometry throughout the animation industry.

Think of the limit surface as the ‘true’ shape.

Subdivision surfaces are used for final rendering of character shapes and models as they produce a smooth and controllable ‘limit surface’. However, subdivision surfaces when represented at desktop level or say in an animation package are often drawn as just their polygonal control hulls purely for performance reasons. The ‘polygonal control hull’ is an approximation that is offset from the true limit surface. But looking at your work as an approximation in the interactive viewport makes it difficult to see exact details and achieve a good performance. For example, it is hard to judge fingers touching the neck of a bottle or hands touching a cheek without a limit surface. It also makes it difficult to see poke-throughs in cloth simulation if the skin and cloth are both only rough approximations.

Is Subdivision new?

People have built high polygon models for years, but the same results can be achieved now with a much lower poly count with OpenSubdiv. Subdivision surfaces generally provide great advantages such as arbitrary topology and fantastic control. You can put the points where you need them and one does not need to add extra points – one just models naturally. Many people switched to some form of subdivision in around 2005-2006 when Maya first offered subdivision. Prior to Maya’s adoption, studios with RenderMan would simply model in poly cages and pass those to RenderMan for subdivision. In 2005 Maya began offering an accurate CPU based “preview” which artists found invaluable. But the definition of subdivision varies greatly and it is hard to move a model from package to package or renderer to renderer and have them load and look the same. If the industry is fairly agreed that subdivision is a good idea – and even the geometry primitive of the future – then, as Pixar TD Manuel Kraemer points out, “we should at least agree on what the definition of a subdivision surface - Catmull-Clark or whatever – we should agree on what it really is and the devil is in the detail!”

OpenSubdiv is a consistent implementation of subdivision, with GPU and CPU versions, and one that promises greater interoperability and interchange – in short, it offers a professional standard. Output from an OpenSubdiv pipeline is streamlined, and models will also have the same controls, weights and look in a variety of packages, something that was not true before OpenSubdiv.

OpenSubdiv offers features like creases (edge sharpness), limit surface evaluation and hierarchical edits. It also offers modeling speed and memory reduction (very important with sculpting), as well as interoperability.

An example in practice

Both Maya’s animation interface and Pixar’s proprietary Presto animation system can take up to 100ms to subdivide a character of 30,000 polygons to the second level of subdivision (500,000 polygons). But doing the same thing with OpenSubdiv’s GPU codepath takes 3ms and allows the user to see the smooth, accurate limit surface at all times. The aim of OpenSubdiv is to allow the artist to work with a more faithful representation of what you are doing. It can render faster, and allow for greater interoperability. But this is not a plugin. You cannot simply run your existing high poly count models through OpenSubdiv to make them faster. Rather, you need to think about modeling at much lower resolution and let the subdivision process – managed by OpenSubdiv – provide the high quality, high poly count surface at render time.

There are some key aspects to the OpenSubdiv project, including some really interesting new opportunities:

- Simplicity with edges/creases

- Efficiency with textures

- GPU implementation

- Hierarchical modeling

But before looking at those current issues it is worth looking at some of the background of OpenSubdiv.

Heritage and background

“We’re very excited…It’s nice to have a standard implementation that’s going to produce the same results in all software. This is huge for interoperability between software packages.”

OpenSubdiv is an open source initiative that saw Pixar allow use of key patents and technology in the general community. Pixar has heavily promoted OpenSubdiv in an effort to one day have a powerful interchange format that both works very quickly on the GPU and very reliably on the CPU. While subdiv did exist outside the work Pixar was using internally, most implementations were based on papers and research done by staff at Pixar. And ever since Pixar moved to allow their research to be in the open source community many other companies have started to come on board. The team at Luxology licensed the core technology before there was even a formal ‘OpenSubdiv program’.

Autodesk has aggressively supported Pixar both for their internal tools and now in Maya so that soon everyone can benefit. Side Effects Software is coming on board with Houdini and now even realtime applications are appearing. Remarkably it is only a year since Pixar announced their decision to put subdiv into the open source community. The idea came from a pitch that Manuel Kraemer made to Bill Polson, Pixar’s Director of Industry Strategy. As Polson recalls it, pretty much everything that Kraemer pitched in that meeting has come to fruition today. “I don’t think enough credit can go to Manuel Kraemer,” Polson says. “A few years ago he came into my office and said, ‘Bill, I think we need to do some real work with subdivision and try and get it to be an industry standard.’ He kind of laid it all out on my whiteboard – I wish I’d taken a picture of that whiteboard!”

The concern Pixar’s internal team had was that it just made too much sense to not have a standard, but as Pixar owned many of the key patents and the keys to RenderMan, it would be hard for anyone else to do as good a job, or deal with some of the complexity like facevarying, hierarchy or the important ‘creasing’. This meant that a less than optimal version could, de facto, become a standard if Pixar kept quiet and withheld support of their technology and patents. Pixar would then be faced with having a standard that was inferior to their internal model and all hopes of interoperability would be lost. Furthermore, for subdiv to work well Pixar wanted a great GPU implementation and any graphics card company would, for the same reasons, end up not supporting the possibly ‘secret’ superior Pixar version. By Pixar adopting the open source model it stands a great chance of companies like Autodesk, The Foundry, Smith Micro Software (Poser) and Nvidia completely and faithfully supporting the Pixar model, and that benefits everyone.

In giving their subdiv away Pixar would get back both industry adoption (and thus hardware support) and Pixar artists – and artists worldwide – could work more effectively. Pixar has actually done comprehensive studies on artists animating with IK rigs etc that show not only is responsiveness cost effective, but as systems pass certain responsiveness thresholds (50 ~ 60 frames per second in terms of interactive refresh times) artists grip their pens or mice less firmly, relaxing arm and wrist muscles and thus reducing strain injuries. By making their artists able to enjoy faster responsiveness, Pixar saves production time – and more importantly their artists are healthier.

1. Simplicity with edges/creases

Professional models use a lot of bevels – they mirror the reality of the real world while also catching the light and making any manufactured object seem less computer generated. Bevels are commonplace, but a single level of bevel on a cube takes the face count from 6 to 26 and the edge count from 12 to 48. To make that bevel a subtle curved edge, the faces jump to, say, 98 and the edges to 192. OpenSubdiv has a single crease value that can adjust a bevel from sharp to rounded.

The crease editor does not change the base edge count. This adding of detail and polish without exploding the data size of the model has been used extensively and until now entirely by Pixar.
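To make the idea of a single sharpness value concrete, here is a minimal sketch on a closed 2D polygon rather than a surface. It is a simplified one-dimensional analogue of semi-sharp creasing, not OpenSubdiv's API or its exact rules: the function names, the per-vertex sharpness list and the "decrement by one per level" scheme are assumptions made for illustration. A vertex with sharpness of 1 or more is held in place for that level, fractional values blend between the held and the smoothed position, and the base polygon keeps its four points throughout.

```python
def refine(points, sharpness):
    """One refinement step of a closed polygon with per-vertex sharpness.

    Illustrative 1D analogue only: vertices use the cubic B-spline rule
    (1, 6, 1) / 8 when smooth and stay in place when sharp.
    """
    n = len(points)
    new_points, new_sharpness = [], []
    for i in range(n):
        prev, cur, nxt = points[i - 1], points[i], points[(i + 1) % n]
        smooth = tuple((p + 6 * c + q) / 8 for p, c, q in zip(prev, cur, nxt))
        w = min(sharpness[i], 1.0)  # 1.0 means fully sharp at this level
        new_points.append(tuple((1 - w) * s + w * c for s, c in zip(smooth, cur)))
        new_sharpness.append(max(sharpness[i] - 1.0, 0.0))  # sharpness decays per level
        # Newly inserted midpoint of the edge (cur, nxt); always smooth.
        new_points.append(tuple((c + q) / 2 for c, q in zip(cur, nxt)))
        new_sharpness.append(0.0)
    return new_points, new_sharpness


def corner_after(sharp, levels=6):
    """Position of the first corner of a unit square after refinement."""
    points = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    sharpness = [sharp, 0.0, 0.0, 0.0]
    for _ in range(levels):
        points, sharpness = refine(points, sharpness)
    return points[0]  # vertex 0 keeps index 0 at every level


for s in (0.0, 2.0, 10.0):
    print(s, corner_after(s))
```

With sharpness 0 the corner rounds off fully, with a large value it stays exactly where it was modeled, and values in between give progressively tighter corners, all without adding a single point to the base shape.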

Creases were a major extension of Subdiv. The first feature film to use creases was The Incredibles (2004).

The complexity solution of creases was useful on a character such as Buddy Pine and Syndrome’s gadgets – but was vital on say the hubs of Lightning McQueen’s wheels in Cars (2006).

Below are the base mesh (left) and the subdivided final (right).

McQueen is a very interesting example. He was 6 to 10 times heavier in faces than anything that had come before at Pixar (partly as he has a fully modeled engine and transmission that few if any viewers have ever noticed or seen). But then came Wall•E in 2008. “This guy in a single one of his tracks has more faces than McQueen put together. Wall•E is equivalent of almost 3 McQueens,” says Kraemer. “Heavy as Wall•E is, which is an order of magnitude more complex – if he was modeled out of polygons and you included all the bevels and softening of edges that Wall•E really needs, if done with polygons – it would have been completely impossible to work with, you would not be able to animate it, you would not be able to deform it, pose it, articulate it – nothing. All these operations have to move the vertices around, not just draw them on the screen but actually move them in space, but it would have been impossible (without Subdiv).”

Complexity of parts and their bevels was much higher than any other lead character had been before for Pixar.

2. Efficiency

Using OpenSubdiv rather than a simple polymesh can make a big difference in mesh count. With OpenSubdiv, a base mesh of just 472 tris is enough to produce a nicely curved final coffee cup model, with creasing control and so on. A polygon-only version would need around 7,552 triangles (the equivalent of level 2) for a cup smooth enough to animate with. Subdivided to 4 levels, the cup model would reach 120,832 tris, which is quite possible if you wanted to get close to complex compound handle shapes, versus a base mesh of only 472 tris.
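The coffee cup figures follow from simple arithmetic, assuming each level of uniform subdivision splits every face into four. The short sketch below reproduces them; the function name is made up for illustration.

```python
def faces_at_level(base_faces, level):
    """Face count after `level` rounds of uniform subdivision,
    assuming every face is split into four at each round."""
    return base_faces * 4 ** level


for level in range(5):
    print(f"level {level}: {faces_at_level(472, level):,} faces")
```

The same factor of four per level is why a 30,000 polygon character lands at roughly half a million polygons after two levels.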

It is important that an individual model be efficient and so characters such as Woody from Toy Story result in a workable model which is 30,000 faces. McQueen’s car represents some 70,000 faces, but there is another important consideration. If one moves to focusing on background props and elements the multiplying effect can be even more impactful. It is great to save complexity on hero characters for animator feedback and responsiveness but it is absolutely vital to manage background complexity. In Monsters University there was a simple balustrade object. It was very simple compared to a hero character, just a mere 581 faces and 664 verts when modeled.

Below is a background balustrade used in Monsters University, (left) Base mesh, (right) Subdiv.

But consider that one object is re-used on a host of buildings and places on the MU campus. The one simple balustrade was used and modified 2083 times in one scene. If the item was identical it could be instanced, but if each is different and/or instancing is not possible, then the cost really adds up. Any simplification in faces and verts on that object is a saving realized thousands of times over. Double its complexity and you could equally add 40 McQueen hero car characters for the same cost, or a hundred Woody level characters. In making a film, it is vital not to spend your memory and model complexity budget on background objects, while still preserving the film’s subtle complexity.

Perhaps a better example than, say, the balustrade would be the dorm room set from Monsters University.

The first image (left below) is the Subdiv cage, and it has 415K faces, or some 55 times the complexity of the coffee cup model above. The second image (right below) is the polygonalized version (at two levels of subdivision), which would offer a similar quality result in a poly based pipeline. The second image is 6.75M faces, which would be equivalent to 900 ‘coffee cups’ in comparative terms.

And there are more gains to be made.

In the GPU codepath there is currently partial refinement of the subdivision surface. The GPU codepath uses Feature Adaptive Refinement, which refines the mesh more densely around the areas flagged for adaptive refinement. Subdivision is a process that does not care about topology at all.

Subdivision gives the same result as Bspline patches wherever the surface is regular, all quads in a grid. But whereas Bsplines fail in irregular areas, subdivision always works. That was the original motivation for its invention. The OpenSubdiv GPU codepath uses this fact to aggressively render Bspline patches wherever it finds regular grids. But where the surface is irregular, OpenSubdiv continues subdividing and refining, finding more Bsplines, and so on. Both approaches give the same limit surface, except the Bspline code path is much much faster on the GPU. Typical models have regular grids on 90% or more of the surface, so this provides a huge speedup.
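The equivalence between subdivision and Bsplines in regular regions can be checked numerically in a simplified setting. The sketch below uses a closed 2D polygon as a curve analogue of a regular quad grid, which is an assumption made to keep the example short; it is not OpenSubdiv code and the function names are illustrative. It repeatedly applies the cubic Bspline subdivision rules and compares the result with direct evaluation of the uniform cubic Bspline.

```python
def subdivide(points):
    """One step of cubic B-spline subdivision on a closed polygon:
    vertex points use weights (1, 6, 1) / 8, edge points (1, 1) / 2."""
    n = len(points)
    out = []
    for i in range(n):
        prev, cur, nxt = points[i - 1], points[i], points[(i + 1) % n]
        out.append(tuple((p + 6 * c + q) / 8 for p, c, q in zip(prev, cur, nxt)))
        out.append(tuple((c + q) / 2 for c, q in zip(cur, nxt)))
    return out


def bspline_point(p0, p1, p2, p3, t):
    """Direct evaluation of one uniform cubic B-spline segment at t in [0, 1]."""
    b0 = (1 - t) ** 3 / 6
    b1 = (3 * t ** 3 - 6 * t ** 2 + 4) / 6
    b2 = (-3 * t ** 3 + 3 * t ** 2 + 3 * t + 1) / 6
    b3 = t ** 3 / 6
    return tuple(b0 * a + b1 * b + b2 * c + b3 * d
                 for a, b, c, d in zip(p0, p1, p2, p3))


def refined_vertex(points, i, levels):
    """Follow original vertex i through `levels` rounds of subdivision."""
    for _ in range(levels):
        points = subdivide(points)
        i *= 2  # vertex i of the coarse polygon becomes vertex 2i
    return points[i]


square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
by_subdivision = refined_vertex(square, 0, 10)
by_bspline = bspline_point(square[-1], square[0], square[1], square[2], 0.0)
print(by_subdivision, by_bspline)
```

Both routes head for the same point, but the direct evaluation gets there in one step, which is the intuition behind preferring the Bspline path wherever the mesh is regular and falling back to further refinement only where it is not.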

In the GPU codepath, the amount of subdivision and the density of Bspline tessellation are both independently controllable over the surface. So a part of the model close to camera can have more detail than a part of the same model far away.

Unfortunately, for now, the CPU codepath supports only uniform subdivision – i.e. the entire model undergoes the same amount of subdivision even if part is close to camera and part is far away. Without the flexibility offered by modern GPU tessellation, the OpenSubdiv CPU codepath is less performant than some current popular implementations, such as found in Arnold. Bill Polson agrees that the CPU code could benefit from the same level of optimization that the GPU code has had, and says the Pixar OpenSubdiv team hopes to address this in 2014.

This is why Solid Angle is not supporting OpenSubdiv right now in Solid Angle’s Arnold.

Marcos Fajardo, founder of Solid Angle, has already given Bill Polson the exact details, but the summary is that Sony Pictures Imageworks (SPI) researchers did an experiment a year ago where they integrated OpenSubdiv into the Arnold renderer at SPI to see how it performed. It was much slower, and using more memory, than the subdiv engine already used in Arnold. (SPI’s version of Arnold and Solid Angle’s more general version are in sync and run in parallel in this respect).

“So we don’t use it,” says Fajardo. “Apparently OpenSubdiv is blazingly fast on the GPU, but the OpenSubdiv team (Pixar) haven’t paid equal attention to optimizing the CPU code. At least for uniform subdivision, which is what we use, not the adaptive subdivision that Pixar favors. This is because the REYES algorithm is camera-dependent and optimizes meshes for the current camera, unlike with Monte Carlo physically-based renderers where it’s less clear that adapting tessellation to the camera is a big win, because most rays are not camera rays anyway but shadow and GI rays. I think the OpenSubdiv team is aware of this and they would like to optimize things, and there may even be other studios who can contribute to fixing this, since it’s open source. So, we are keeping an eye on OpenSubdiv, and once it’s more efficient for our use cases we’ll re-evaluate.”

3. GPU implementation

To be able to work at the desktop, Pixar felt it was important to have a GPU version of OpenSubdiv, so as a key part of OpenSubdiv 2.0 Pixar worked with others to implement an open GPU solution. The GPU version supports OpenCL, CUDA, GLSL, DirectX11, OpenGL and OpenGL ES. It allows for uniform subdivision and for feature adaptive subdivision on GPUs with tessellation. A working mobile GPU implementation of OpenSubdiv was shown at Siggraph, and while impressive this did not have hardware based tessellation. When this is added – as it will be in upcoming hardware revs – the results will be even more remarkable. The GPU aspect of OpenSubdiv is really important: it drives the huge difference in artist responsiveness, but as stated earlier it is not a miracle add-on or plugin. One cannot just drop OpenSubdiv into a standard pipeline and expect it to take already heavily subdivided geo and render it auto-magically faster. The approach of OpenSubdiv is to avoid getting the geo heavy in the first place, while still providing a great close vision of the limit surface.

The CPU version of OpenSubdiv was not previously fast enough to, for example, display Woody’s face, which is a subdivision surface – as are all Pixar characters. This meant animators who are trying to produce very subtle and moving performances – with comedy and real nuance – could not see exactly what they were getting. “You are looking at a wire frame cage and you are kind of guessing you have the right amount of emotion when he is smiling or winking or twitching his nose,” explains Polson. “For example, you are kind of guessing you are in the right ballpark – and that you have it – but you don’t really know you’ve got it until you get the animation back (from the Renderfarm) the next day. The fact that we could get all of that actually running – on the GPU – means that now at the animator’s workstation they see the actual face – the limit surface of the face. So when they make him wink or smile or whatever – they see the final shape and they can judge. And they know it is good. And when they get it back there are no surprises. That has been the big win for us on our current films in production.”

4. Hierarchical modeling

A little-mentioned aspect of OpenSubdiv, but one that holds enormous promise, is hierarchical model edits. The reason it is not discussed much is that no major software package yet provides direct tools to manipulate models fully in this way. However, there is every reason to think that once the various contributors to OpenSubdiv implement this new approach it will be very influential. The best way to understand hierarchical edits is to look at an internal example from the Pixar team, using a test scene from Brave.

Hoof prints are only located here.

The hierarchical edit allows level based specific shaders, creasing, weights and offsets in local areas.

Few know that the film was originally intended to be set in the Scottish winter. As such, the team realized that they would need to handle their characters walking in the snow. The Pixar team did a test of this using the hierarchical editing to create the effect of a horse character riding in soft snow. Clearly with large expanses of snow it would be ideal not to have to subdivide the whole hill side for just localized hoof prints and disturbances. “Normally when you are building a model you sort of have to build the mesh density fine enough to handle the finest feature,” says Polson. “You are in a bad spot if that fine feature is small compared to the rest of the model, because you have these tiny meshes but you have to span the rest of the model. In our case it was a hoof print but we needed the mesh to cover an entire hill side.”

Hierarchical edits are the solution. Subdiv is a progressive refinement that produces a successively finer mesh at every level, but hierarchical edits allow the model to refine down to, say, level 4, then make these changes (move these points in this way, etc.), and then continue with the process.
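The refine, edit, then keep refining loop can be sketched in a few lines. This is an illustrative curve analogue on a closed 2D polygon, not OpenSubdiv's hierarchical edit API: the `edits` dictionary layout, the function names and the test shape are all assumptions. It shows the key property, which is that an offset stored at a fine level changes the result only locally while the base mesh stays coarse.

```python
def subdivide(points):
    """One step of cubic B-spline subdivision on a closed polygon."""
    n = len(points)
    out = []
    for i in range(n):
        prev, cur, nxt = points[i - 1], points[i], points[(i + 1) % n]
        out.append(tuple((p + 6 * c + q) / 8 for p, c, q in zip(prev, cur, nxt)))
        out.append(tuple((c + q) / 2 for c, q in zip(cur, nxt)))
    return out


def refine_with_edits(base, levels, edits):
    """Refine `base` for `levels` rounds, applying stored offsets on the way.

    `edits` maps a level number to {vertex_index: (dx, dy)}; each offset is
    applied to the mesh of that level before refinement continues.
    (Hypothetical layout chosen for this example.)
    """
    points = list(base)
    for level in range(1, levels + 1):
        points = subdivide(points)
        for index, (dx, dy) in edits.get(level, {}).items():
            x, y = points[index]
            points[index] = (x + dx, y + dy)
    return points


# A coarse 8-point outline standing in for the "hill side".
base = [(2.0, 0.0), (1.0, 1.0), (0.0, 2.0), (-1.0, 1.0),
        (-2.0, 0.0), (-1.0, -1.0), (0.0, -2.0), (1.0, -1.0)]

plain = refine_with_edits(base, 5, {})
edited = refine_with_edits(base, 5, {3: {0: (0.0, 0.1)}})  # one small "hoof print"

print(len(base), len(edited))   # base stays at 8 points
print(plain[0], edited[0])      # differs near the edit
print(plain[128] == edited[128])  # far side of the shape is untouched
```

Only the vertices near the level 3 edit move, so the detail costs nothing elsewhere on the model and the base cage never has to be built dense enough to carry it.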

Even Pixar does not use this feature very much. They have used it in an effects sense, with simulation work, but really Pixar would like to see more tools using this feature so they do have ready made tools to take advantage of the hierarchical edits, and this is coming (see Houdini below).

It might be reasonable to ask, why do I need hierarchy when I can use vector displacement? Certainly the question is one Pixar has asked itself. “Look again at the snow field example,” says Polson. “Displacement mapping effectively means moving the very small polygons you get after subdividing many times, say 6 levels or more. So they have to move a long way from the undisplaced limit surface, and this typically leads to stretching and shearing and other artifacts. But with hierarchical edits we can add some of the displacement after level one subdivision, some more after level two, and so forth, so that each level’s intermediate surface is artifact free. The resulting displacement at level 6 is much more convincing and high-quality than we’d get by subdividing down to micropolys and then trying to move everything at that level.”

While hierarchical edits seem powerful and a possible huge benefit, Maya has in fact had hierarchical edits for a few years and they have recently removed them as no one was apparently using them. It is unclear if this was due to the current complexity or limitations on rendering them or if users just don’t want them. They are also not free from a computational point of view. In terms of rendering and memory, hierarchical edits can slow down in certain situations, and perhaps vector displacement maps provide enough power in this area already. Either way Pixar has included them to see if they will be adopted and tools will be developed to use them. Even inside Pixar they are not used all the time and they remain a ‘jury is still out’ aspect of OpenSubdiv.

Open source – who’s using it?

A host of companies are adopting OpenSubdiv, especially with the official release of version 2.0.

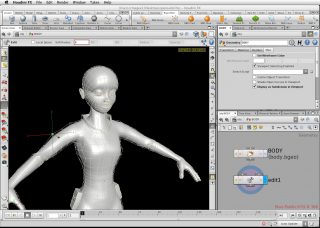

Maya, Autodesk

Pixar has worked closely with Autodesk and the Maya team for some time. Not long ago, Bill Polson and his Pixar team approached the Maya team to show them their GPU implementation inside of just ‘vanilla’ Maya. The idea was to gauge response from the Maya team and to show a test, so they just “hijacked the draw path to the GPU,” jokes Polson. The Maya team were impressed. “They said yeah that looks amazing – we want that in Maya and not just in a plugin that only Pixar has – we want it in core Maya, we want it available at all times not just preview mode,” Polson adds.

It is complex to add a new draw pipeline into an existing product such as Maya. The work has been challenging and the combined teams have been exploring solving this now for over 12 months at both Pixar’s Emeryville location and Autodesk’s Maya Montreal development offices.

Gordon Bradley is heading up Autodesk’s effort and is a part of the OpenSubdiv group: “Autodesk is really excited about the OpenSubdiv technology, and we’ve been working closely with Pixar on it for a while. Having an industry standard for interactive and final frame rendering of subdivision surfaces not only solves a lot of challenges we’ve struggled with for years, but it gives our industry a standard platform on which to build new tools, workflows and content – so we’re really happy to be playing an active part in helping establish the technology.”

While the company cannot commit to dates as yet, this year at the Autodesk user group in Anaheim during SIGGRAPH, Pixar and Autodesk showed a custom-built prototype of how OpenSubdiv technology could be natively integrated into Maya. The prototype let a user create a standard Maya mesh, hit the ‘3’ key to smooth it, and instantly one was able to see and work with an OpenSubdiv powered surface natively in Maya’s viewport, using all the standard tools and workflows. This provided a more accurate representation of the subdivision limit surface in Maya than anything that an artist would normally see, let alone interact with, before in the application.

“There are some incredibly talented and dedicated developers working on OpenSubdiv – both in terms of the underlying maths and the implementation on various architectures. It’s a real pleasure to get to work with them,” says Bradley. “The API itself is still evolving – but the core code is really clean, principled and fast. Another thing that’s been really impressive about the OpenSubdiv project is the whole ecosystem that’s been pulled together by Pixar to get to this point. It’s not just the authoring tools; it’s the other studios, the renderers, the game engine guys, the graphics APIs, the hardware vendors. There’s a lot of collaboration and support there.”

fxguide put some detailed questions to Gordon Bradley about how Autodesk will work with OpenSubdiv and where it might be used.

FXG: Maya modelers can already sub-divide can’t they – why is this new?

GB: There are two new things here.

Firstly, Pixar has very generously donated its subdivision IP and libraries to the community. So now we’ve got the potential to literally have the same code powering the authoring tools, like Maya, and the renderers. So, once everyone has had a chance to adopt it, you should be able to view something in Maya’s viewport, hit render in any renderer supporting OpenSubdiv and see the same thing in the final frame – or export it to another application/cache, and still see the same thing. Right now, that’s not the case: we’ve all got our own implementations with varying feature sets and parameter semantics. So while at a glance they might look similar, more often than not there are a lot of differences, which can lead to unpredictable results. We saw this when we compared the native Maya subdivision with the OpenSubdiv in the Maya prototype we put together with Pixar.

Secondly, this is the first time we’ve had access to on-GPU tessellation of the limit surface. This means we can now have the potential to work interactively with a representation of the surface in the Maya viewport that would not have even loaded in before. And it’s not just a really highly subdivided surface – it also means we get final frame compliant animation and displacement too. Combined, this would let you see and work with a much better representation of the mesh, interactively, and with greater confidence that what you’re seeing in the viewport will match what you render.

FXG: Why should a Maya artist reading this care about OpenSubdiv?

GB: You’re going to be able to work with a much higher-fidelity representation of the mesh, it’s going to be faster, smaller (so you can have more characters/objects in your scene), and what you’re seeing in the viewport will match what gets rendered at the end. It’s WYSIWYG for subdivision surfaces.

FXG: Can you comment on the benefits in version 2 such as the limit surface evaluation for collision detection?

GB: Being able to see your geometry in the viewport and final frame render are obviously the first things you think about when you think about geometry. But there are a lot of other things we need to do with our objects: from simple things like snapping, to dynamics and collisions, to complex ray tracing, growing things on them, and procedural FX. That’s where v2 comes in – it gives us the APIs we need for these other features. And it’s really important that they’re part of the same library – this makes sure that when you snap to something, or collide with it, or grow something on it – it matches the surface you (and your audience) are seeing.

FXG: Will Autodesk be contributing code back into the open source community?

GB: We’ve been contributing code back to open source projects for a while now! We have done so with both Alembic and OpenEXR. These technologies are really transforming our pipelines and our industry – and the teams of people setting these projects up and making them available to the community are just awesome. Although we cannot talk about our future plans we definitely want to be a part of that where we can.

FXG: Is Autodesk exploring OpenSubdiv beyond Maya?

GB: We’ve certainly had a few early discussions around how we could leverage this in products other than Maya, but since Pixar use Maya the collaboration on the prototype has been Maya-based and this has been our primary area of focus so far.

FXG: Can you comment on the power and usefulness of open standards?

GB: There’s something really powerful about taking a well-defined problem, and giving the community a solution they can not only use, but also improve. And at some point, it becomes a solved problem. As we hope will be the case with subdivision surfaces: if you need the technology – there it is, it works, it’s well tested, it’s optimized, it’s cross platform, and it’s supported in all these products. You don’t have to spend the thousands of hours that Pixar (and now the OpenSubdiv community) has spent researching, developing, testing and optimizing this awesome technology. You just get to build on top of it.

So yes, there are a lot of great benefits to something being an open standard: it helps focus us as a community around one solution instead of many; it lets us build an ecosystem of tools that support it; it gives us interoperability; it gives us access to features we just otherwise wouldn’t have; it avoids redundant repetition of work on non-essential problems. Bit by bit we’re also moving our industry forward – and letting the next generation of artists and films do even more amazing and innovative things.

Above: see a Meet the Experts video with Pixar and Autodesk discussing OpenSubdiv.

Houdini, Side Effects

Mark Elendt, Side Effects Software’s senior mathematician, is very excited by OpenSubdiv. He sees it as a great way to have interoperability between various software packages. There are at least three places that Side Effects Software are looking forward to integrating OpenSubdiv in Houdini. “Of course, each task has its own unique challenges,” notes Elendt. He sets these out below:

1) As a modeling tool. This is likely the easiest place for us to start using OpenSubdiv. Preliminary results look very promising.

2) OpenSubdiv has some very clever code for rendering subdivision surfaces on the GPU. This will likely take a little more effort than the modelling tools, but can provide a huge benefit to our users: being able to visualize the refined surface in real time will be a huge win.

3) The other obvious place to incorporate OpenSubdiv is in our software renderer Mantra. This is likely the most difficult of the integration tasks. Mantra uses specialized rendering algorithms which currently perform refinement in a way that doesn’t seem to have support in OpenSubdiv. There are a couple of ways we could try to solve this, but it isn’t clear to us which would be best at the moment.

Elendt is also very excited to see in the latest version 2.0 release that the limit surface evaluation is built in. “Because OpenSubdiv provides a standard implementation, this means that tools like fur generation and particle effects can now provide results that match exactly between Houdini and other software like PRman or Maya,” he explains. With the limit surface evaluation tool or command it is possible to make sure intersections are correct, that water correctly follows the form of a face, or that cloth correctly intersects and does not allow breakthroughs – something that could happen with release 1.0 which did not have the ability to collision detect with the limit surface.
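To make the gap between control hull and limit surface concrete, here is a minimal sketch of the standard limit-position rule for a regular (valence-4) interior vertex of a Catmull-Clark quad mesh. In that regular case the surface is a uniform bicubic B-spline, so the limit point is a fixed weighted average of the vertex and its eight neighbors. The function name and the sample data are illustrative assumptions; this is not the OpenSubdiv API, and it does not handle extraordinary vertices, creases or boundaries.

```python
def limit_position_regular(center, edge_neighbors, corner_neighbors):
    """Limit position of a regular interior Catmull-Clark vertex.

    Uses the bicubic B-spline weights 16/36 (the vertex itself),
    4/36 (each of the 4 edge-adjacent neighbors) and
    1/36 (each of the 4 diagonal neighbors).
    """
    if len(edge_neighbors) != 4 or len(corner_neighbors) != 4:
        raise ValueError("a regular vertex has 4 edge and 4 corner neighbors")
    result = []
    for axis in range(len(center)):
        edge_sum = sum(p[axis] for p in edge_neighbors)
        corner_sum = sum(p[axis] for p in corner_neighbors)
        result.append((16.0 * center[axis] + 4.0 * edge_sum + corner_sum) / 36.0)
    return tuple(result)


# Hypothetical data: a flat 3x3 patch of control points, middle point lifted by 1.
center = (0.0, 0.0, 1.0)
edges = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, -1.0, 0.0)]
corners = [(1.0, 1.0, 0.0), (1.0, -1.0, 0.0), (-1.0, 1.0, 0.0), (-1.0, -1.0, 0.0)]

limit = limit_position_regular(center, edges, corners)
print(limit)  # the limit point sits well below the lifted control point
```

The control point is at height 1 but the surface only reaches 16/36 of that, which is why a collision or fur-placement test against the control hull can disagree visibly with the rendered surface.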

Interestingly, Side Effects had also entered into agreements with Pixar related to this before Subdiv was open source. For example, Houdini has two styles of creasing in its modeling tool. One version is licensed from Pixar and allows an artist to produce creases that would render correctly in RenderMan but would not render in Side Effects’ own Mantra renderer. Mantra is not RenderMan compliant – but it is very similar to RenderMan. To render in Mantra a user needed to use Side Effects’ own version of creasing that was not in violation of any of Pixar’s patents. “We provide both options in the modeler and we offer the non-patent infringing one inside the Mantra render, so it is very exciting for us to use OpenSubdiv to provide the Pixar Subdiv inside our renderer,” says Elendt.

Side Effects was not alone: the AIR renderer took the same approach and had a different creasing model that was outside of Pixar’s patents. “This is one of the big things that OpenSubdiv solves, now everyone can produce the same creasing and so the interoperability between software packages is greatly improved,” adds Elendt. Now with OpenSubdiv, Houdini will be able to have one version that can be rendered in both Mantra and RenderMan.

The other interesting aspect is the hierarchical edits. Houdini has had a version of this for many years, but the OpenSubdiv hierarchical edits are a super-set of what Houdini currently allows. For example, Houdini could apply a different shader per face of a subdivision surface, “and the only way to output that to a RIB file is with a hierarchical edit,” Elendt explains. But with OpenSubdiv you can define different creases and/or weights at several different levels on different parts of the master model. This additional flexibility and power is something that Side Effects very much wants to investigate for Houdini. “The hierarchical edits are definitely a very interesting technology and we definitely want to use that in the future,” says Elendt, adding temptingly, “There may be other ways for us to use that technology but it will be in Houdini 13 and beyond.”

While many people think of Houdini as an effects animation tool, due to its industry-defining destruction, volumetric and fluid pipelines, it is of course a complete modeling, design and animation package. Side Effects has years of early adoption of technology like OpenSubdiv, OpenVDB and, earlier, Ptex, all of which it adopted if not first then very soon after their introduction, which is why advanced modeling tools are so relevant to a Houdini pipeline.

Side Effects’ research into more fully embracing OpenSubdiv is already producing results that could benefit the whole community. Ever keen to contribute to as well as use code from the open source community, Side Effects hopes to give back some of the interesting enhancements they have been working on. For example, they discovered that the Linux implementation is really fast but the Windows implementation has some speed and memory issues. Linux was “blazingly fast and really tuned to work with large data sets,” says Elendt. “Windows was three or four – at times ten – times slower. It turns out that there is one little function which is doing a lot of memory allocation and on Linux we are using this Firefox library which cut down the overhead of lots of little memory allocations, so we don’t notice that cost on Linux. But on Windows we saw the big cost, so right now we are trying to find a solution to that. And we will try and feed that back into the OpenSubdiv project and that’s the great thing about open source – when we find a patch we can feed that back into the community, and this would probably affect the CPU and GPU implementations.”

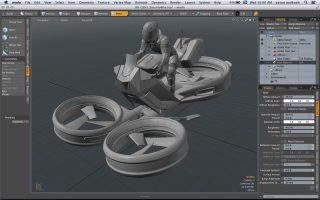

Design and Modeling (Modo, The Foundry)

Pixar Subdiv (PSUB) being used inside The Foundry’s Modo for industrial design. Credit: Harald Belker Design

One of the issues faced by industrial designers is producing designs that can actually be made. In design terms there are some key concepts that need to be respected to make real-world designs. One is being able to produce designs which are ‘water tight’. The other is to make sure that any model is ‘manifold’. If you look up manifold on Google you may find a definition which says unhelpfully that “in mathematics, a manifold is a topological space that near each point resembles Euclidean space.” A simpler way to think of it is that each surface has an inside and an outside, and a Möbius strip is a great example of a shape that fails that test (strictly speaking it is non-orientable rather than non-manifold, but for modeling purposes the effect is the same). A Möbius strip is a surface with only one side and only one boundary component.

Or in simple terms: if an ant were to crawl along the length of a Möbius strip, it would return to its starting point having walked the entire length of the strip (on both sides of the original paper) without ever crossing an edge. Designers cannot work in the real world with non-manifold models if they are headed to manufacturing. Actually, it is not just a design / 3D printer issue. RenderMan cannot render any non-manifold 3D object. This affects the broader OpenSubdiv story outside Modo, as RenderMan and thus OpenSubdiv “just does not want to deal with any faces or points that are not manifold. The code that is in OpenSubdiv is from RenderMan’s core subdivision library,” explains Pixar’s Manuel Kraemer.
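The ideas of water tight, manifold and inside/outside can be sketched as three simple edge tests on a polygon mesh. The following is a minimal illustration under stated assumptions: the function name, the report keys and the sample meshes are hypothetical, it checks edges only (not vertex "bow-tie" cases), and it is not a description of what RenderMan or OpenSubdiv actually run internally.

```python
from collections import defaultdict


def edge_manifold_report(faces):
    """Run three edge-based sanity checks on a polygon mesh.

    faces: list of tuples of vertex indices, one tuple per face.
    """
    directed = defaultdict(int)    # (a, b) -> faces that walk the edge a -> b
    undirected = defaultdict(int)  # (low, high) -> faces that touch the edge
    for face in faces:
        for i, a in enumerate(face):
            b = face[(i + 1) % len(face)]
            directed[(a, b)] += 1
            undirected[(min(a, b), max(a, b))] += 1

    boundary = [e for e, n in undirected.items() if n == 1]
    overused = [e for e, n in undirected.items() if n > 2]
    # Two faces sharing an edge should walk it in opposite directions.
    flipped = [e for e, n in undirected.items()
               if n == 2 and directed.get(e, 0) != 1]
    return {
        "watertight": not boundary,         # no open edges
        "edge_manifold": not overused,      # no edge shared by 3+ faces
        "consistent_winding": not flipped,  # one agreed front and back
    }


# A closed tetrahedron with consistent winding.
tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
# Three triangles hanging off the same edge (a "fin").
fin = [(0, 1, 2), (1, 0, 3), (0, 1, 4)]
# A band of four quads joined with a half twist, Möbius style.
mobius = [(i, 4 + i, 5 + i, i + 1) for i in range(3)] + [(3, 7, 0, 4)]

for name, mesh in [("tetra", tetra), ("fin", fin), ("mobius", mobius)]:
    print(name, edge_manifold_report(mesh))
```

The tetrahedron passes all three tests and the fin fails the edge count. The twisted band never has more than two faces on an edge, yet it fails the winding test with the face order given here, which is the mesh-level version of the ant reaching both sides of the paper.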

As designers need these more engineering-specific models they tend to work with NURBS, whereas a film designer may have a preference for straight polygons, which can easily be made to produce a model that is impossible from a manufacturing point of view. The problem with NURBS is that they can be much more complex than polygons. For example, matching arbitrary topology by connecting two NURBS patches can lead to cracks. This is not only a problem for designers: such cracks on a character’s face, for example, could be disastrous for a character pipeline as they can break under animation and literally open holes in your character’s head.

By comparison, arbitrary topology with OpenSubdiv is easy and produces a clean limit surface, which is robust under animation – something Pixar’s modelers relied on in, say, the modeling of the face of the mother bear in Brave. It is also exactly what industrial designers use in MODO when using the PSUB modeling approach. (PSUB is short for Pixar SubDiv and has been in MODO since MODO 501). In MODO the implementation is not strictly “OpenSubdiv”, as the Luxology team led the adoption of Pixar’s approach, under license, before OpenSubdiv was officially even launched, but the code approach they have taken is similar. MODO designers are able to easily and confidently produce industrial designs knowing the designs will not only be able to be manufactured but also match their on-screen originals. OpenSubdiv bridges these two extremes while offering the best of both. Like NURBS you can produce water tight, manifold designs, and yet like polygons, OpenSubdiv is easy to work with, fast and user friendly.

Arbitrary subdivision of any topology avoids NURBS cracks or breaks which can be worse with animation.

Brad Peebler of The Foundry (ex Luxology) commented, “we are super positive about OpenSubdiv because it means other apps can feed from our PSUB (Pixar SubD) meshes. That’s great! Our PSUB work was done with Pixar prior to the release of OpenSubdiv and it means that we are OpenSubdiv mesh compatible with the extra advantage that having the algorithms in the core of our meshing tools means we can also make topology changes very very fast. We are 100% supportive of the effort and continue to stay in close communication.”

OpenSubdiv also makes a lot of sense for another Foundry product, MARI, which is now actively exploring it. “OpenSubdiv for MARI makes a tremendous amount of sense because there are no topological changes happening inside the MARI workflow,” says Peebler. “OpenSubdiv represents a very fast and efficient method for displaying SDS meshes and as you can imagine that would be very valuable to an app like MARI.” In the UK, Jack Greasley (MARI’s original developer and Product Manager) and the MARI team are currently focused on the OS X version of the software, but officially they can say this: “The guys will be starting work on it as soon as the Mac version has shipped. Aiming for early next year,” according to The Foundry’s Ian Hall.

Mobile solutions (DigitalFish & Motorola)

DigitalFish is a group originally from inside Pixar, who have set up their own company and amongst other projects help implement OpenSubdiv pipelines and solutions. They help their clients solve difficult graphics and artist-related technical problems, for instance by embedding engineers within their clients’ teams to support the integration of OpenSubdiv. One such example was shown at this year’s SIGGRAPH conference, built around a new phone from Motorola Mobility. As OpenSubdiv provides great performance in the interactive display of smooth surfaces for characters, vehicles and other scene content, DigitalFish helped deliver the first-ever realtime subdivision surfaces on a mobile device. DigitalFish engineers helped their Motorola clients build software in a very tough framework. The team had just 7 milliseconds per refresh and had to work within time budgets, power budgets, and of course a memory budget. For DigitalFish much of their work involves so-called “GPGPU” programming, using GPU-centric interfaces such as OpenCL or CUDA to do general programming on the graphics processing unit.

The result of the effort allowed Motorola to collaborate with Oscar-winning animator Jan Pinkava and a team of animators, modelers, and sound experts. “Storytelling is foundational to our history, our teachings and our relationships. We tell stories to our children and one another. They bind us together. So it’s exciting for us to start exploring what happens when we make stories for perhaps the most personal devices we own – our smartphones,” said Regina Dugan, senior VP of ATAP, a division of Motorola Mobility, in Computer Graphics World in July.

DigitalFish now also have a CPU version, which the company says is optimized for ARM processors and will be contributed as part of the Motorola branch. The CPU version runs very close to, and the company says in some cases faster than, the GPU version on mobile. It enables uniform subdivision only.

“We’re investigating how a mobile device can permit stories to be told in a more immersive, interactive way. This is not a flat content experience or the viewing of stories made for the big screen and shown on a small one,” explains Baback Elmieh, technical program lead in the Advanced Technology and Projects (ATAP) group of Motorola Mobility.

Left: Baback Elmieh of Motorola Mobility demoing and discussing the Windy Day app during the 2013 SIGGRAPH course.

With so much of the creative opportunity around OpenSubdiv involving new modeling approaches, it is worth noting that the SIGGRAPH demo did not run on hardware with built-in tessellation. As such the team could only go to subdivision level 2. With newer graphics hardware that offers hardware tessellation, the team could take this much further and produce even more responsive and higher quality visuals.
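A rough sense of why the subdivision level matters on a constrained device comes from counting faces. Under uniform Catmull-Clark refinement, the first level turns each n-sided face into n quads, and every later level splits each quad into four. The helper below is a back-of-the-envelope sketch with a hypothetical name and a cube as made-up input; it says nothing about the actual Windy Day assets or Motorola's budgets.

```python
def catmull_clark_face_count(face_sizes, level):
    """Number of faces after `level` rounds of uniform Catmull-Clark refinement.

    face_sizes: number of vertices of each face in the control mesh.
    """
    if level < 0:
        raise ValueError("level must be non-negative")
    if level == 0:
        return len(face_sizes)
    # Level 1: one quad per face corner. Each later level: 4 quads per quad.
    return sum(face_sizes) * 4 ** (level - 1)


# Hypothetical control mesh: a cube, i.e. six quads.
cube = [4] * 6
for level in range(5):
    print(level, catmull_clark_face_count(cube, level))
```

Each extra level multiplies the face count by four, so a mesh that is comfortable at level 2 carries sixteen times as many faces at level 4 if it is refined uniformly.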

Bill Polson believes that having an independent company like DigitalFish, familiar with the code base and available to help companies adopt OpenSubdiv, is a great benefit. He points to key members of the team like Nathan Litke, Senior Research Scientist. “Nathan’s particularly strong, he is a PhD in computational geometry and his PhD thesis was on Subdivision, and he has production experience,” points out Polson, who also singles out DigitalFish co-founder Dan Herman.

DigitalFish’s Dan Herman (left) and Geri’s Game & Windy Day director Jan Pinkava having fun at SIGGRAPH 2013.

“Geri’s Game was being made across the hall from my office,” says Herman. “When I was at Pixar, that was my first exposure, and that was 1998. At that time Subdiv was an experiment. Toy Story had been modeled primarily with NURBS, Woody and Buzz were NURBS and NURBS are not good for organic surfaces. From Geri’s Game in 1998 to Toy Story 2 (2000) Pixar completely converted from a NURBS pipeline with polygons to a totally Subdiv pipeline. That conversion was because the advantages of Subdiv were just so compelling.”

At Qualcomm UPLINQ 2013 in September, Dr Regina Dugan, SVP of Motorola Mobility, demoed Windy Day.

Having consultants available is also key, as implementing an OpenSubdiv pipeline is not as simple as just downloading the code from GitHub and compiling it. Until companies like Autodesk and Side Effects include the tools as standard, there is still some real engineering to be done to adopt a pipeline that would mirror or resemble the one at Pixar. “It is a reasonably complex package with a lot of dependencies,” notes Kraemer.

In Herman’s opinion, Subdiv is:

- easy to model

- easy to refine if you need to edit

- easy to animate

- very light weight in the pipeline

- good to render

The only downside is modeler education: work is needed to help explain the techniques and to help implement them. “We see the conversion that happened at Pixar primed to happen at other places all around the entertainment industry,” concludes Herman.