Analyzing weather data with Spark: the concepts involved

I. Task and requirements

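Given the meteorological dataset cndcdata.zip (2018 data from monitoring stations in China, taken from ftp://ftp.ncdc.noaa.gov/pub/data/noaa), write a Spark program that does the following:

1. From each weather record, extract the record time, longitude, latitude, temperature, humidity, air pressure and so on, and combine them into a new record. (See the appendix for a description of the data.)

2. Find the observation station with the largest temperature range.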

II. Analysis

1. Data analysis

Sample data file cndctest.txt

0169501360999992018010100004+52970+122530FM-12+043399999V0201401N00101026001C9004700199-02041-02321102941ADDAA124001531AJ100003100000099GA1081+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF108991081999026001999999MA1999999097051MD1210061-0101REMSYN004BUFR 0165501360999992018010103004+52970+122530FM-12+043399999V0201101N0010122000199004900199-01651-02051102921ADDAJ100079100070099AY171031AY201031GA1021+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF102991021999026001999999MD1210021+9999MW1001REMSYN004BUFR 0187501360999992018010106004+52970+122530FM-12+043399999V0202701N00101026001C9027000199-01271-01781102841ADDAA106000131AY171061AY201061GA1081+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF108991081999026001999999KA1240N-02051MA1999999097021MD1710051+9999MW1001REMSYN004BUFR 0148501360999992018010109004+52970+122530FM-12+043399999V0202701N00101026001C9030000199-01341-01691102901ADDAA106999999AA224999999GA1081+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF108991081999026001999999MD1210061+9999REMSYN004BUFR 0163501360999992018010112004+52970+122530FM-12+043399999V0202501N00201026001C9030000199-01321-01711102961ADDAA106999999AA224999999GA1081+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF108991081999026001999999MA1999999097121MD1210041+9999REMSYN004BUFR 0052501360999992018010115004+52970+122530FM-12+043399999V0202901N0020199999999030000199-01251-01671102941ADDAA106999999AA224999999MD1210031+9999REMSYN004BUFR 0067501360999992018010118004+52970+122530FM-12+043399999V0203401N0030199999999030000199-01301-01821102981ADDAA106999999AA224999999MA1999999097221MD1210071+9999REMSYN004BUFR 0052501360999992018010121004+52970+122530FM-12+043399999V0202501N0020199999999030000199-01771-02141103101ADDAA106999999AA224000231MD1210061+9999REMSYN004BUFR 
0169501360999992018010200004+52970+122530FM-12+043399999V0202701N0020122000199030000199-02271-02551103221ADDAA124000131AJ100079100070099GA1031+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF103991031999026001999999MA1999999097341MD1210061+0301REMSYN004BUFR 0148501360999992018010203004+52970+122530FM-12+043399999V0202701N00301026001C9030000199-01421-02121103221ADDAA106999999AA224999999GA1061+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF106991061999026001999999MD1210101+9999REMSYN004BUFR 0176501360999992018010206004+52970+122530FM-12+043399999V0203401N00301026001C9030000199-01251-02101103201ADDAA106999999AA224999999GA1051+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF105991051999026001999999KA1240N-02291MA1999999097441MD1410001+9999REMSYN004BUFR 0148501360999992018010209004+52970+122530FM-12+043399999V0202701N00201026001C9030000199-01571-02201103461ADDAA106999999AA224999999GA1051+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF105991051999026001999999MD1210151+9999REMSYN004BUFR 0163501360999992018010212004+52970+122530FM-12+043399999V0202701N00101026001C9030000199-02191-02491103661ADDAA106999999AA224999999GA1061+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF106991061999026001999999MA1999999097661MD1210071+9999REMSYN004BUFR 0052501360999992018010215004+52970+122530FM-12+043399999V0202001N0030199999999015000199-02521-02821103681ADDAA106999999AA224999999MD1210071+9999REMSYN004BUFR 0067501360999992018010218004+52970+122530FM-12+043399999V0201801N0010199999999019000199-02671-02981103741ADDAA106999999AA224999999MA1999999097791MD1210061+9999REMSYN004BUFR 0052501360999992018010221004+52970+122530FM-12+043399999V0201801N0010199999999026000199-02851-03191103791ADDAA106999999AA224999999MD1710011+9999REMSYN004BUFR 
0169501360999992018010300004+52970+122530FM-12+043399999V0201401N00101026001C9003800199-02831-03181103911ADDAA124070091AJ199999999999999GA1051+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF105991051999026001999999MA1999999097831MD1210051+0501REMSYN004BUFR 0148501360999992018010303004+52970+122530FM-12+043399999V0201101N0010122000199003400199-02151-02531103791ADDAA106999999AA224999999GA1021+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF102991021999026001999999MD1710081+9999REMSYN004BUFR 0106501360999992018010306004+52970+122530FM-12+043399999V0202201N0010122000199002900199-01201-02061103481ADDAA106999999AA224999999GF100991999999999999999999KA1240N-02941MA1999999097551MD1710201+9999REMSYN004BUFR 0078501360999992018010309004+52970+122530FM-12+043399999V0202501N0010122000199021000199-01741-02271103571ADDAA106999999AA224999999GF100991999999999999999999MD1410001+9999REMSYN004BUFR 0093501360999992018010312004+52970+122530FM-12+043399999V0202001N0010122000199030000199-02291-02571103581ADDAA106999999AA224999999GF100991999999999999999999MA1999999097501MD1710051+9999REMSYN004BUFR 0052501360999992018010315004+52970+122530FM-12+043399999V0203601N0000199999999019000199-02491-02771103451ADDAA106999999AA224999999MD1710061+9999REMSYN004BUFR 0089501360999992018010318004+52970+122530FM-12+043399999V0203601N0000199999999008000199-02681-02991103291ADDAA106999999AA224999999AY101061AY201061MA1999999097371MD1710071+9999MW1051REMSYN004BUFR 0052501360999992018010321004+52970+122530FM-12+043399999V0203601N0000199999999015000199-02801-03171103171ADDAA106999999AA224999999MD1710191+9999REMSYN004BUFR 0169501360999992018010400004+52970+122530FM-12+043399999V0200701N0010122000199003800199-02801-03141103181ADDAA124070091AJ199999999999999GA1021+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF102991021999026001999999MA1999999097131MD1710051-0701REMSYN004BUFR 
0148501360999992018010403004+52970+122530FM-12+043399999V0202501N0030122000199030000199-01422-02131102861ADDAA106999999AA224999999GA1041+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF104991041999026001999999MD1710171+9999REMSYN004BUFR 0176501360999992018010406004+52970+122530FM-12+043399999V0202201N0050122000199030000199-01072-01991102581ADDAA106999999AA224999999GA1031+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF103991031999026001999999KA1240N-02841MA1999999096721MD1710241+9999REMSYN004BUFR 0148501360999992018010409004+52970+122530FM-12+043399999V0202501N0020122000199030000199-01451-02091102571ADDAA106999999AA224999999GA1041+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF104991041999026001999999MD1710071+9999REMSYN004BUFR 0163501360999992018010412004+52970+122530FM-12+043399999V0202501N0010122000199020000199-02031-02391102561ADDAA106999999AA224999999GA1031+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF103991031999026001999999MA1999999096581MD1710071+9999REMSYN004BUFR 0052501360999992018010415004+52970+122530FM-12+043399999V0201601N0020199999999006000199-02391-02651102401ADDAA106999999AA224999999MD1710041+9999REMSYN004BUFR 0067501360999992018010418004+52970+122530FM-12+043399999V0200701N0010199999999022000199-02471-02771102361ADDAA106999999AA224999999MA1999999096531MD1710011+9999REMSYN004BUFR 0052501360999992018010421004+52970+122530FM-12+043399999V0200701N0010199999999014000199-02191-02491102351ADDAA106999999AA224999999MD1710021+9999REMSYN004BUFR 0169501360999992018010500004+52970+122530FM-12+043399999V0202001N00101026001C9002600199-02101-02391102431ADDAA124070091AJ199999999999999GA1071+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF107991071999026001999999MA1999999096531MD1210021-0601REMSYN004BUFR 0148501360999992018010503004+52970+122530FM-12+043399999V0200901N0010122000199002500199-01741-02251102351ADDAA106999999AA224999999GA1021+026001101GA2999+999999101GA3999+999999101GE19MSL 
+99999+99999GF102991021999026001999999MD1710071+9999REMSYN004BUFR 0176501360999992018010506004+52970+122530FM-12+043399999V0202201N0030122000199030000199-01171-02011102061ADDAA106999999AA224999999GA1011+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF101991011999026001999999KA1240N-02611MA1999999096241MD1710221+9999REMSYN004BUFR 0148501360999992018010509004+52970+122530FM-12+043399999V0202901N0010122000199030000199-01741-02171102081ADDAA106999999AA224999999GA1011+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF101991011999026001999999MD1710011+9999REMSYN004BUFR 0163501360999992018010512004+52970+122530FM-12+043399999V0201601N0010122000199023000199-02241-02501102081ADDAA106999999AA224999999GA1031+026001101GA2999+999999101GA3999+999999101GE19MSL +99999+99999GF103991031999026001999999MA1999999096181MD1710051+9999REMSYN004BUFR 0058501360999992018010515004+52970+122530FM-12+043399999V0203601N0000199999999999999999-02081-02351101951ADDAA106999999AA224999999MD1710061+9999MW1051REMSYN004BUFR 0067501360999992018010518004+52970+122530FM-12+043399999V0203601N0000199999999017000199-02441-02751101871ADDAA106999999AA224999999MA1999999096061MD1710061+9999REMSYN004BUFR 0052501360999992018010521004+52970+122530FM-12+043399999V0201801N0010199999999017000199-02571-02871101891ADDAA106999999AA224999999MD1710051+9999REMSYN004BUFR

1. Raw data

In the data file, each line is one record.

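2. Analyzing the data

The goal is to extract the key fields.

Every record is fixed-width, so each field sits at the same place in every line. The positions below are 0-based `substring(start, end)` indices, with the end index exclusive:

Date: 15-23

Longitude: 35-41 (its sign is at index 34)

Latitude: 29-34 (its sign is at index 28)

Temperature: 88-92 (four digits, in tenths of a degree Celsius), with its sign at index 87

Humidity: 94-98 (four digits), with its sign at index 93. In the NOAA ISD record layout this field is the dew-point temperature; this post uses it as the humidity value.

Air pressure: 99-104 (in tenths of a hectopascal)

A value of 9999 in the temperature field marks a missing reading.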

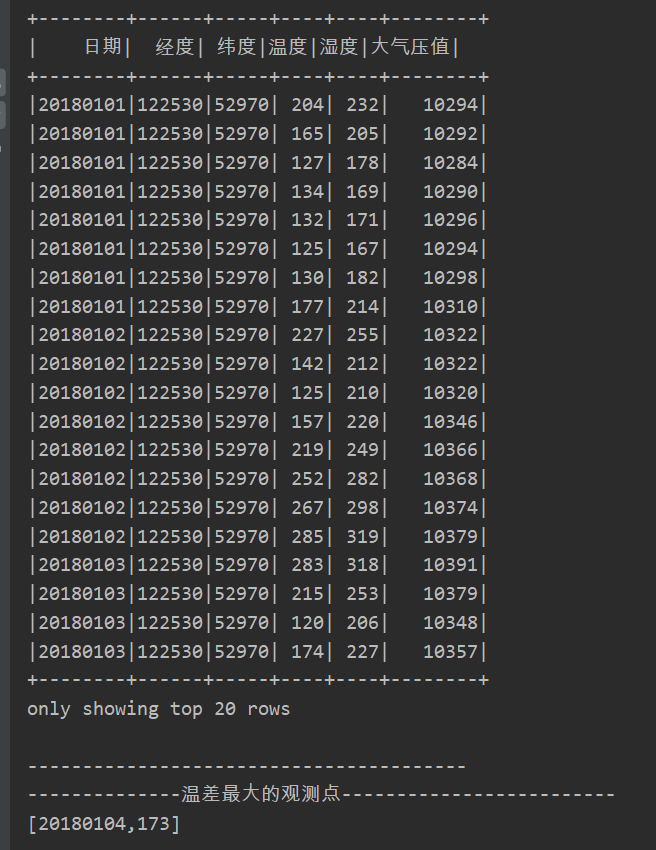

3. Form of the data after extraction

Date, longitude, latitude, temperature, humidity, air pressure

(20180101,122530,52970,-204,-232,10294)

(20180101,122530,52970,-165,-205,10292)

(20180101,122530,52970,-127,-178,10284)
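The extraction can be sketched in plain Scala, without Spark, to check the offsets against one record. This is a minimal sketch, not the post's own code: the names `RecordParser`, `Observation`, `parse` and `sample` are hypothetical, and `sample` is just the first 105 characters of the first record in cndctest.txt.

```scala
object RecordParser {
  // Hypothetical container for the six extracted fields.
  final case class Observation(date: String, longitude: String, latitude: String,
                               temperature: Int, humidity: Int, pressure: String)

  // Slice one fixed-width record using 0-based, end-exclusive offsets.
  // The sign character is included for temperature and humidity so that
  // "-0204" parses to -204 (tenths of a degree Celsius).
  def parse(line: String): Observation = Observation(
    date        = line.substring(15, 23),
    longitude   = line.substring(35, 41),
    latitude    = line.substring(29, 34),
    temperature = line.substring(87, 92).toInt,
    humidity    = line.substring(93, 98).toInt,
    pressure    = line.substring(99, 104)
  )

  // First 105 characters of the first sample record.
  val sample: String =
    "0169501360999992018010100004+52970+122530FM-12+043399999V0201401N00101026001C9004700199-02041-02321102941"

  def main(args: Array[String]): Unit =
    println(parse(sample)) // Observation(20180101,122530,52970,-204,-232,10294)
}
```

Parsing the sign together with the digits avoids hard-coding a minus sign, which would be wrong for any reading above zero.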

2. Data analysis with Spark and Scala

(1) Filter the data with the `filter` operator.

(2) Store the data in a DataFrame, a tabular data structure, and sort it:

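`df.orderBy(-df("col2")).show` sorts the rows by the column `col2` in descending order (for a numeric column; `df("col2").desc` does the same for any column type).

`df.orderBy(df("col2")).show` sorts the rows by the column `col2` in ascending order.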

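(3) The `groupByKey()` operator collects the values of each key into a sequence. In this example it gathers all readings taken on the same day.

(4) `val group1 = group.map(t => (t._1, t._2.max - t._2.min))` reduces data of the form (20180101, {204, 165, 127, 134}) to (20180101, 77), the difference between the largest and smallest value.

(5) The `sortBy` operator sorts by whatever the given function returns (here, the value), while `sortByKey()` sorts by key.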
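Steps (1) and (3) to (5) can be tried out on ordinary Scala collections before moving to RDDs, since `filter`, `groupBy`, `map` and `sortBy` behave analogously there. The sketch below makes that assumption explicit; `DailyRange`, `readings` and `dailyRanges` are hypothetical names. The readings are taken from the 20180101 and 20180102 sample records, except the single 9999, which is invented to exercise the filter.

```scala
object DailyRange {
  // (date, temperature in tenths of a degree Celsius).
  // The 9999 entry is invented; the other values come from the sample records.
  val readings: Seq[(String, Int)] = Seq(
    ("20180101", -204), ("20180101", -165), ("20180101", -127), ("20180101", -134),
    ("20180102", -227), ("20180102", 9999), ("20180102", -125)
  )

  def dailyRanges(rs: Seq[(String, Int)]): Seq[(String, Int)] =
    rs.filter(_._2 != 9999)          // drop missing readings, like RDD.filter
      .groupBy(_._1)                 // date -> all readings of that day, like groupByKey
      .map { case (date, xs) =>
        val temps = xs.map(_._2)
        (date, temps.max - temps.min) // temperature range of the day
      }
      .toSeq
      .sortBy(-_._2)                 // largest range first, like sortBy(_._2, false)

  def main(args: Array[String]): Unit =
    dailyRanges(readings).foreach(println)
}
```

With these inputs the first element is `(20180102,102)` and the second is `(20180101,77)`. On a real RDD, `reduceByKey` or `aggregateByKey` would avoid materializing every value of a key the way `groupByKey` does, at the cost of slightly more code.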

Code version 1:

```scala
import org.apache.log4j.{Level, Logger}
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.SparkSession

object WeatherTest {
  def main(args: Array[String]): Unit = {
    // Suppress unnecessary log output in the terminal
    Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)
    Logger.getLogger("org.eclipse.jetty.server").setLevel(Level.OFF)

    val spark: SparkSession = SparkSession.builder().appName("WeatherTest").master("local").getOrCreate()
    val sc = spark.sparkContext

    val data: RDD[String] = sc.textFile("E:\\cndctest.txt") // data file cndctest.txt

    // Data filtering: a temperature of 9999 marks a missing reading, so drop those records.
    // The sign is at index 87 and the four digits at 88-91.
    val valid = data.filter(line => line.substring(87, 92).toInt != 9999)

    // Extract the six fields from each record
    val data1 = valid.map(line => {
      val year = line.substring(15, 23)
      val jindu = line.substring(35, 41)
      val weidu = line.substring(29, 34)
      val shidu = line.substring(93, 98).toInt          // signed, sign at index 93
      val airTemperature = line.substring(87, 92).toInt // signed, tenths of a degree Celsius
      val daqiya = line.substring(99, 104)
      (year, jindu, weidu, airTemperature, shidu, daqiya)
    })

    // Store the data in a DataFrame, a tabular data structure
    val df = spark.createDataFrame(data1)
      .toDF("date", "longitude", "latitude", "temperature", "humidity", "pressure")
    df.show()

    println("----------------------------------------")
    // Keep only the date and the temperature
    val data_temp = valid.map(line => (line.substring(15, 23), line.substring(87, 92).toInt))

    // Group by date (the sample file shown above holds a single station)
    val group = data_temp.groupByKey()
    val group1 = group.map(t => (t._1, t._2.max - t._2.min)) // (date, temperature range)
    val df1 = spark.createDataFrame(group1).toDF("date", "temp_range")
    val df2 = df1.orderBy(df1("temp_range").desc)
    println("-------------- largest temperature range -------------------------")
    df2.head(1).foreach(println)
  }
}
```

Output:

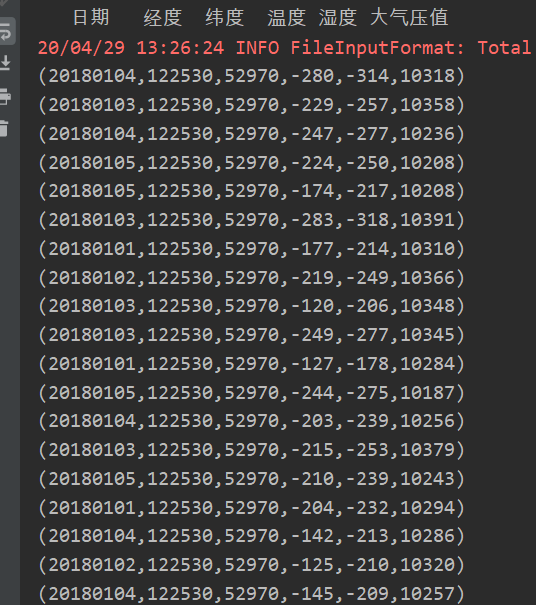

Code version 2:

```scala
import org.apache.log4j.{Level, Logger}
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.SparkSession

object case2 {

  def main(args: Array[String]): Unit = {
    // Suppress unnecessary log output in the terminal
    Logger.getLogger("org.apache.spark").setLevel(Level.ERROR)
    Logger.getLogger("org.eclipse.jetty.server").setLevel(Level.OFF)

    // Entry point for Spark SQL programming
    val spark: SparkSession = SparkSession.builder().appName("WeatherTest").master("local").getOrCreate()
    val sc = spark.sparkContext

    // Load the data
    val data: RDD[String] = sc.textFile("E:\\cndctest.txt")

    // Data filtering: a temperature of 9999 marks a missing reading, so drop those records
    val valid = data.filter(line => line.substring(87, 92).toInt != 9999)

    println("date, longitude, latitude, temperature, humidity, pressure")
    val data1 = valid.map(line => {
      val year = line.substring(15, 23)
      val jindu = line.substring(35, 41)
      val weidu = line.substring(29, 34)
      val shidu = line.substring(93, 98).toInt          // signed, sign at index 93
      val airTemperature = line.substring(87, 92).toInt // signed, sign at index 87
      val daqiya = line.substring(99, 104)
      (year, jindu, weidu, airTemperature, shidu, daqiya)
    })
    data1.collect().foreach(println)

    // Keep only the date and the temperature
    val data_temp = valid.map(line => (line.substring(15, 23), line.substring(87, 92).toInt))

    // Uncomment to inspect the data after grouping by date:
    // data_temp.groupByKey().collect().foreach(println)
    // e.g. (20180101,CompactBuffer(-204, -165, -127, -134, -132, -125, -130, -177))

    val group = data_temp.groupByKey()                       // group by date
    val group1 = group.map(t => (t._1, t._2.max - t._2.min)) // (date, temperature range)

    println("--------------- date and temperature range, sorted by key (date) --------------------")
    group1.sortByKey().collect().foreach(println)
    /* The output begins:
       (20180101,79)
       (20180102,160)
    */

    println("--------------- date and temperature range, sorted by value (range) --------------------")
    group1.sortBy(_._2, false).collect.foreach(println)
    /* The output begins:
       (20180104,173)
       (20180103,163)
    */
  }
}
```

Output: the sorted (date, temperature range) pairs, as shown in the comments of the listing above.
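The task statement asks for the station with the largest temperature range, while both listings key their records by date. A possible adaptation is to key by station instead. The sketch below assumes the station identifier occupies `substring(4, 10)` of each record, which matches the NOAA ISD layout as far as I know but is not stated in this post; `StationRange`, `stationId` and `maxRangeStation` are hypothetical names, and the station "999999" in the usage note is invented.

```scala
object StationRange {
  // Assumption: the station identifier sits at 0-based indices 4-9 of a record.
  def stationId(line: String): String = line.substring(4, 10)

  // Given (stationId, temperature) pairs, return the station with the largest
  // difference between its highest and lowest reading.
  // Throws on empty input, because maxBy has nothing to choose from.
  def maxRangeStation(obs: Seq[(String, Int)]): (String, Int) =
    obs.groupBy(_._1)
      .map { case (id, xs) =>
        val temps = xs.map(_._2)
        (id, temps.max - temps.min)
      }
      .maxBy(_._2)

  def main(args: Array[String]): Unit = {
    // Beginning of the first sample record; its station id is "501360".
    println(stationId("0169501360999992018010100004+52970+122530"))
  }
}
```

For example, `maxRangeStation(Seq(("501360", -204), ("501360", -125), ("999999", -50), ("999999", 120)))` picks the invented station "999999" with a range of 170. In the Spark listings the same change amounts to emitting `(line.substring(4, 10), temperature)` instead of `(date, temperature)` before `groupByKey()`.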
