Linux Ops & Architecture: Upgrading a Binary-Installed K8s Cluster

I. Upgrade Notes

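Upgrading a Kubernetes cluster to a new patch release mostly means replacing the binaries. For a larger upgrade across minor versions (v1.16 to v1.18 in this article), you also need to check for changed kubelet flags and for behavior changes in the other components after they are upgraded. Because Kubernetes releases move quickly and many dependency issues in the newest versions are not yet fully resolved, running the latest releases in production is not recommended.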
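Before choosing a target version, it can help to check how large the jump is. The upstream kubeadm upgrade documentation states that skipping minor versions is unsupported, so the v1.16 to v1.18 jump in this article goes beyond the officially supported path; upgrading through v1.17 first is the conservative option. The sketch below is a hypothetical POSIX `sh` helper (`minor_jump` and `check_skew` are illustrative names) and assumes version strings of the form `v1.16.0`.

```shell
#!/bin/sh
# Sketch: warn when an upgrade skips minor versions.
# Assumes version strings like v1.16.0 or v1.18.15.

major_of() { echo "${1#v}" | cut -d. -f1; }
minor_of() { echo "${1#v}" | cut -d. -f2; }

# Number of minor versions between CURRENT ($1) and TARGET ($2).
minor_jump() {
    echo $(( $(minor_of "$2") - $(minor_of "$1") ))
}

# Return 0 for a same-major upgrade of at most one minor version, 1 otherwise.
check_skew() {
    if [ "$(major_of "$1")" != "$(major_of "$2")" ]; then
        echo "major version change: $1 -> $2" >&2
        return 1
    fi
    jump=$(minor_jump "$1" "$2")
    if [ "$jump" -gt 1 ]; then
        echo "WARNING: $1 -> $2 skips $((jump - 1)) minor version(s)" >&2
        return 1
    fi
    return 0
}
```

For example, `check_skew v1.16.0 v1.18.15` prints `WARNING: v1.16.0 -> v1.18.15 skips 1 minor version(s)` to stderr and returns 1.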

II. Software Preparation

1. Download location

https://github.com/kubernetes/kubernetes/releases

2. Current cluster version

[root@k8s-node1 ~]# kubectl get nodes
NAME        STATUS   ROLES    AGE    VERSION
k8s-node1   Ready    <none>   336d   v1.16.0
k8s-node2   Ready    <none>   336d   v1.16.0
k8s-node3   Ready    <none>   336d   v1.16.0
[root@k8s-node1 ~]# kubectl version
Client Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.0", GitCommit:"2bd9643cee5b3b3a5ecbd3af49d09018f0773c77", GitTreeState:"clean", BuildDate:"2019-09-18T14:36:53Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.0", GitCommit:"2bd9643cee5b3b3a5ecbd3af49d09018f0773c77", GitTreeState:"clean", BuildDate:"2019-09-18T14:27:17Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}

3. Target version: v1.18.15

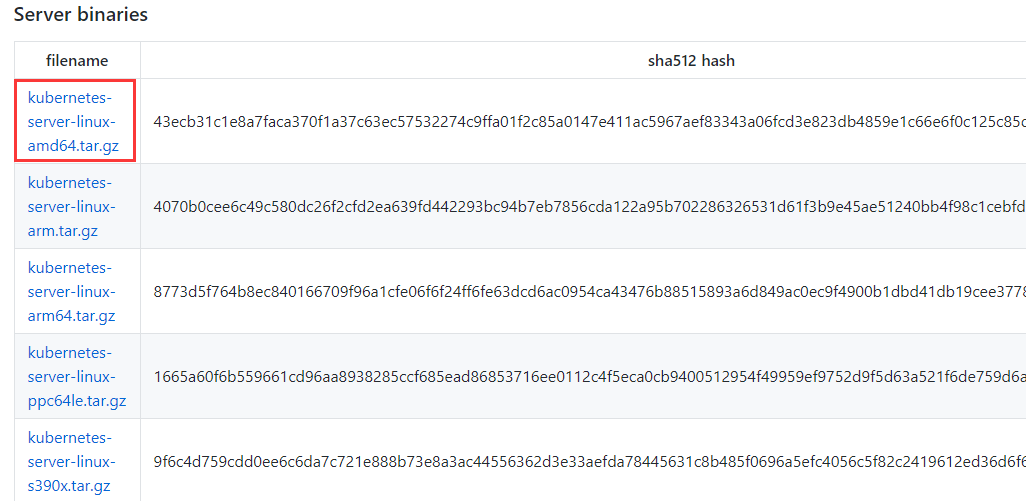

wget https://dl.k8s.io/v1.18.15/kubernetes-server-linux-amd64.tar.gz
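Before extracting the tarball, you may want to verify the download. The Kubernetes release notes (CHANGELOG) list a SHA512 hash for each download tarball; the sketch below uses a hypothetical `verify_sha512` helper to compare a file against such a hash, and assumes GNU coreutils `sha512sum` is installed.

```shell
#!/bin/sh
# verify_sha512 FILE EXPECTED_HASH
# Compares the SHA512 digest of FILE with EXPECTED_HASH; returns 0 on match.
verify_sha512() {
    actual=$(sha512sum "$1" | awk '{print $1}')
    if [ "$actual" = "$2" ]; then
        echo "OK: $1"
        return 0
    fi
    echo "MISMATCH: $1" >&2
    return 1
}
```

Example: `verify_sha512 kubernetes-server-linux-amd64.tar.gz <hash copied from the v1.18.15 CHANGELOG>`.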

III. Performing the Upgrade

1. Upgrading the master nodes

① Upgrade kubectl (on every master node)

Back up:

cd /usr/bin && mv kubectl{,.bak_2021-03-02}

Upgrade (run from the directory where the tarball was downloaded):

tar xf kubernetes-server-linux-amd64.tar.gz

cp kubernetes/server/bin/kubectl /usr/bin/
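The back-up-then-copy pattern above can be wrapped in a small helper so the same steps apply to any binary. This is a sketch with a hypothetical `backup_and_install` function; it uses `date +%F`, which produces the same `YYYY-MM-DD` suffix as the hand-written `.bak_2021-03-02`, and it should be run from the directory where the tarball was extracted.

```shell
#!/bin/sh
# backup_and_install SRC DEST_DIR
# Renames DEST_DIR/<name> to <name>.bak_<YYYY-MM-DD> if it exists,
# then copies SRC into DEST_DIR and makes it executable.
backup_and_install() {
    src=$1
    dest_dir=$2
    name=$(basename "$src")
    stamp=$(date +%F)
    if [ -e "$dest_dir/$name" ]; then
        mv "$dest_dir/$name" "$dest_dir/$name.bak_$stamp" || return 1
    fi
    cp "$src" "$dest_dir/$name" && chmod 0755 "$dest_dir/$name"
}
```

Usage for this step: `backup_and_install kubernetes/server/bin/kubectl /usr/bin`.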

Before the upgrade:

[root@k8s-node1 ~]# kubectl version
Client Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.0", GitCommit:"2bd9643cee5b3b3a5ecbd3af49d09018f0773c77", GitTreeState:"clean", BuildDate:"2019-09-18T14:36:53Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.0", GitCommit:"2bd9643cee5b3b3a5ecbd3af49d09018f0773c77", GitTreeState:"clean", BuildDate:"2019-09-18T14:27:17Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}

After the upgrade:

[root@k8s-node1 ~]# kubectl version
Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.15", GitCommit:"73dd5c840662bb066a146d0871216333181f4b64", GitTreeState:"clean", BuildDate:"2021-01-13T13:22:41Z", GoVersion:"go1.13.15", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.0", GitCommit:"2bd9643cee5b3b3a5ecbd3af49d09018f0773c77", GitTreeState:"clean", BuildDate:"2019-09-18T14:27:17Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}

The Server Version still shows v1.16.0 because kube-apiserver has not been upgraded yet.
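To compare client and server versions without reading the long `version.Info` lines by eye, the `GitVersion` fields can be extracted. The sketch below assumes the `kubectl version` output format shown above (`GitVersion:"vX.Y.Z"`, client first, then server); `git_versions` and `client_server_match` are hypothetical helper names.

```shell
#!/bin/sh
# Print each GitVersion value from `kubectl version` output on stdin, one per line
# (client first, then server).
git_versions() {
    grep -o 'GitVersion:"[^"]*"' | sed 's/GitVersion:"\(.*\)"/\1/'
}

# Return 0 when client and server report the same GitVersion.
client_server_match() {
    versions=$(git_versions)
    client=$(echo "$versions" | sed -n 1p)
    server=$(echo "$versions" | sed -n 2p)
    echo "client=$client server=$server"
    [ -n "$client" ] && [ "$client" = "$server" ]
}
```

At this point, `kubectl version | client_server_match` prints `client=v1.18.15 server=v1.16.0` and returns 1; once kube-apiserver is upgraded it returns 0.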

② Upgrade the control-plane components (in production, upgrade and replace them one master at a time)

# Run on every master node (in production you can leave keepalived running)
systemctl stop keepalived   # stop keepalived on this host first so the HA VIP moves away
systemctl stop kube-apiserver
systemctl stop kube-scheduler
systemctl stop kube-controller-manager

Back up:

cd /app/kubernetes/bin
mv kube-apiserver{,.bak_2021-03-02}
mv kube-controller-manager{,.bak_2021-03-02}
mv kube-scheduler{,.bak_2021-03-02}

Upgrade (run from the directory where the tarball was extracted):

cp kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler} /app/kubernetes/bin/
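The stop, back-up, and copy steps for one master can be sketched as a single function, which makes the one-master-at-a-time approach easier to repeat. This is a hypothetical POSIX `sh` sketch: the paths follow this article's layout, and `SYSTEMCTL`, `BIN_DIR`, `NEW_BIN`, and `STOP_KEEPALIVED` are illustrative overrides (for example `SYSTEMCTL=echo` for a dry run). Run it from the directory where the tarball was extracted.

```shell
#!/bin/sh
# Sketch: stop, back up, and replace the control-plane binaries on ONE master.
upgrade_master_binaries() {
    sc=${SYSTEMCTL:-systemctl}
    bin_dir=${BIN_DIR:-/app/kubernetes/bin}
    new_bin=${NEW_BIN:-kubernetes/server/bin}
    stamp=$(date +%F)

    # Optionally move the HA VIP away first (can be skipped in production).
    if [ "${STOP_KEEPALIVED:-yes}" = yes ]; then
        $sc stop keepalived
    fi
    for svc in kube-apiserver kube-scheduler kube-controller-manager; do
        $sc stop "$svc" || return 1
    done

    # Keep each old binary as <name>.bak_<YYYY-MM-DD>, then copy in the new one.
    for svc in kube-apiserver kube-controller-manager kube-scheduler; do
        mv "$bin_dir/$svc" "$bin_dir/$svc.bak_$stamp" || return 1
        cp "$new_bin/$svc" "$bin_dir/$svc" || return 1
    done
}
```

After it finishes, keepalived and kube-apiserver are started first, then the remaining components, as in the steps that follow.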

Start keepalived and kube-apiserver:

systemctl start keepalived

systemctl start kube-apiserver

Check the startup log for errors:

journalctl -fu kube-apiserver
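Instead of watching the log until the service looks settled, a small polling loop can wait for the health endpoint. This sketch assumes the insecure port 8080 that this cluster exposes (`kubectl cluster-info` reports `http://localhost:8080` later in this article); on clusters without that port, the check would need the secure port and credentials instead. `wait_for` and `apiserver_healthy` are hypothetical names.

```shell
#!/bin/sh
# wait_for SECONDS CMD [ARGS...]
# Re-runs CMD every 2 seconds until it succeeds or SECONDS have elapsed.
wait_for() {
    timeout=$1
    shift
    elapsed=0
    until "$@"; do
        if [ "$elapsed" -ge "$timeout" ]; then
            echo "timed out after ${timeout}s: $*" >&2
            return 1
        fi
        sleep 2
        elapsed=$((elapsed + 2))
    done
}

# Assumption: kube-apiserver serves /healthz on the insecure port 127.0.0.1:8080.
apiserver_healthy() {
    [ "$(curl -s http://127.0.0.1:8080/healthz)" = "ok" ]
}
```

Usage: `wait_for 60 apiserver_healthy && echo "kube-apiserver is up"`.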

Check:

[root@k8s-node1 ~]# kubectl get cs
NAME                 STATUS      MESSAGE                                                                                     ERROR
scheduler            Unhealthy   Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused
controller-manager   Unhealthy   Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused
etcd-0               Healthy     {"health":"true"}
etcd-1               Healthy     {"health":"true"}
etcd-2               Healthy     {"health":"true"}

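The etcd health data is returned, which shows that kube-apiserver is working. scheduler and controller-manager are reported as Unhealthy only because they have not been started yet.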

[root@k8s-node1 ~]# kubectl version
Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.15", GitCommit:"73dd5c840662bb066a146d0871216333181f4b64", GitTreeState:"clean", BuildDate:"2021-01-13T13:22:41Z", GoVersion:"go1.13.15", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.15", GitCommit:"73dd5c840662bb066a146d0871216333181f4b64", GitTreeState:"clean", BuildDate:"2021-01-13T13:14:05Z", GoVersion:"go1.13.15", Compiler:"gc", Platform:"linux/amd64"}

Both client and server now report v1.18.15, so the versions match.

Start the remaining components:

systemctl start kube-controller-manager && systemctl start kube-scheduler

Check the component status again; the cluster has now recovered:

[root@k8s-node1 ~]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
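The `kubectl get cs` check can also be scripted. The sketch below (a hypothetical `unhealthy_components` helper) reads the table from stdin and prints every component whose STATUS column is not `Healthy`. Note that the ComponentStatus API behind `kubectl get cs` is deprecated from v1.19 onward, so this check suits clusters of this vintage.

```shell
#!/bin/sh
# Reads `kubectl get cs` output on stdin (header line first).
# Prints components whose STATUS is not "Healthy"; returns 1 if there are any.
unhealthy_components() {
    bad=$(awk 'NR > 1 && $2 != "Healthy" {print $1}')
    if [ -n "$bad" ]; then
        echo "$bad"
        return 1
    fi
}
```

Usage: `kubectl get cs | unhealthy_components` returns 0 once every component is healthy.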

2. Upgrading the node components

① Stop the services and back up the binaries (on every node)

systemctl stop kubelet

systemctl stop kube-proxy

Back up:

cd /app/kubernetes/bin
mv kubelet{,.bak_2021-03-02}
mv kube-proxy{,.bak_2021-03-02}

Upgrade (run from the directory where the tarball was extracted):

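cp kubernetes/server/bin/{kubelet,kube-proxy} /app/kubernetes/bin/   # this node
scp kubernetes/server/bin/{kubelet,kube-proxy} root@<node>:/app/kubernetes/bin/   # repeat for every other node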
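An `scp` whose destination is a local path only copies locally, so reaching the other nodes requires naming each one as the destination. The loop below is a sketch assuming root SSH access and this cluster's node names (k8s-node1 to k8s-node3); `SCP`, `NODES`, and `NEW_BIN` are hypothetical overrides. Run it only after kubelet and kube-proxy have been stopped and the old binaries moved aside on each node: on Linux, overwriting an executable that is still running fails with "Text file busy".

```shell
#!/bin/sh
# Sketch: copy kubelet and kube-proxy to every node in NODES.
distribute_node_binaries() {
    scp_cmd=${SCP:-scp}
    nodes=${NODES:-"k8s-node1 k8s-node2 k8s-node3"}
    src_dir=${NEW_BIN:-kubernetes/server/bin}
    for node in $nodes; do
        for bin in kubelet kube-proxy; do
            $scp_cmd "$src_dir/$bin" "root@$node:/app/kubernetes/bin/" || return 1
        done
    done
}
```

A dry run such as `SCP=echo distribute_node_binaries` prints each copy command without executing it.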

Start kubelet:

systemctl daemon-reload && systemctl start kubelet

Check the kubelet log for errors:

journalctl -fu kubelet

After a few minutes, check that the nodes are healthy:

[root@k8s-node1 ~]# kubectl get nodes
NAME        STATUS   ROLES    AGE    VERSION
k8s-node1   Ready    <none>   336d   v1.18.15
k8s-node2   Ready    <none>   336d   v1.18.15
k8s-node3   Ready    <none>   336d   v1.18.15
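Rather than waiting a fixed few minutes, the readiness and version checks can be combined. This sketch relies on `kubectl wait` for the nodes' Ready condition and on a JSONPath query for each node's `kubeletVersion`; `node_versions`, `all_at_version`, and the `KUBECTL` override are hypothetical.

```shell
#!/bin/sh
# Print "<node><TAB><kubeletVersion>" for every node.
node_versions() {
    ${KUBECTL:-kubectl} get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.kubeletVersion}{"\n"}{end}'
}

# Read node_versions output on stdin; fail and list the nodes not yet at TARGET ($1).
all_at_version() {
    behind=$(awk -v t="$1" '$2 != t {print $1}')
    if [ -n "$behind" ]; then
        echo "not yet at $1: $behind" >&2
        return 1
    fi
}
```

Usage: `kubectl wait --for=condition=Ready nodes --all --timeout=300s && node_versions | all_at_version v1.18.15`.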

Start kube-proxy:

systemctl start kube-proxy

Verify the cluster state:

[root@k8s-node1 ~]# systemctl status kube-proxy | grep Active
   Active: active (running) since Tue 2021-03-02 17:31:32 CST; 33s ago
[root@k8s-node1 ~]# kubectl cluster-info
Kubernetes master is running at http://localhost:8080
CoreDNS is running at http://localhost:8080/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
[root@k8s-node1 ~]# kubectl get nodes
NAME        STATUS   ROLES    AGE    VERSION
k8s-node1   Ready    <none>   337d   v1.18.15
k8s-node2   Ready    <none>   337d   v1.18.15
k8s-node3   Ready    <none>   337d   v1.18.15
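The `systemctl status ... | grep Active` check can be run for both node services at once with `systemctl is-active`, which prints `active` for a running unit. A sketch with a hypothetical `check_services` helper; `SYSTEMCTL` is an illustrative override for testing.

```shell
#!/bin/sh
# check_services SERVICE...
# Prints "<service>: <state>" for each unit; returns 1 if any is not active.
check_services() {
    sc=${SYSTEMCTL:-systemctl}
    rc=0
    for svc in "$@"; do
        state=$($sc is-active "$svc")
        echo "$svc: $state"
        [ "$state" = "active" ] || rc=1
    done
    return $rc
}
```

Usage on each node: `check_services kubelet kube-proxy`.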

IV. Testing with an Application

1. Test YAML manifest

apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: busybox:1.28.3
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
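To create the Pod and confirm that it starts, the manifest can be applied and then waited on. This sketch assumes the manifest above is saved as `busybox.yaml` (a hypothetical filename); `smoke_test_pod` and the `KUBECTL` override are illustrative.

```shell
#!/bin/sh
# smoke_test_pod MANIFEST
# Applies the manifest, waits up to 120s for pod/busybox to be Ready, then shows it.
smoke_test_pod() {
    kc=${KUBECTL:-kubectl}
    manifest=$1
    $kc apply -f "$manifest" || return 1
    $kc wait --for=condition=Ready pod/busybox --timeout=120s || return 1
    $kc get pod busybox -o wide
}
```

Usage: `smoke_test_pod busybox.yaml`; remove the Pod afterwards with `kubectl delete pod busybox`.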

2. Check the newly created Pod

[root@k8s-node1 ~]# kubectl get pod
NAME                                READY   STATUS      RESTARTS   AGE
busybox                             1/1     Running     0          14s
cronjob-demo-1614661200-mth6n       0/1     Completed   0          4h37m
cronjob-demo-1614664800-2s9w5       0/1     Completed   0          3h37m
cronjob-demo-1614668400-jt7ld       0/1     Completed   0          157m
cronjob-demo-1614672000-8zx6j       0/1     Completed   0          82m
cronjob-demo-1614675600-whjvf       0/1     Completed   0          37m
job-demo-ss6mf                      0/1     Completed   0          12d
kubia-deployment-7b5fb95f85-fzn4d   1/1     Running     0          33d
static-web-k8s-node2                1/1     Running     0          64m
tomcat1-79c47dc8f-75mr4             1/1     Running     3          41d
tomcat2-8fd94cc4-z9mmq              1/1     Running     4          41d
tomcat3-6488575ff7-csmsh            1/1     Running     4          41d

3. Check that CoreDNS works

[root@k8s-node1 ~]# kubectl exec -it busybox -- nslookup kubernetes
Server:    10.0.0.2
Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name:      kubernetes
Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local
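The DNS check can be made scriptable by extracting the resolved address. This sketch assumes the busybox 1.28 `nslookup` output format shown above (an `Address 1: <ip> <fqdn>` line after the `Name:` line); `resolved_ip`, `check_dns`, and the `KUBECTL` override are hypothetical. When piping, `-it` is dropped from `kubectl exec` because no interactive terminal is needed.

```shell
#!/bin/sh
# Print the first address listed after the "Name:" line of busybox nslookup output.
resolved_ip() {
    awk '/^Name:/ {seen = 1; next} seen && /^Address/ {print $3; exit}'
}

# check_dns NAME EXPECTED_IP
# Resolves NAME from inside the busybox Pod and compares the result with EXPECTED_IP.
check_dns() {
    ip=$(${KUBECTL:-kubectl} exec busybox -- nslookup "$1" | resolved_ip)
    echo "$1 -> ${ip:-<none>}"
    [ "$ip" = "$2" ]
}
```

Usage: `check_dns kubernetes 10.0.0.1` returns 0 when the `kubernetes` Service resolves to `10.0.0.1`, as in the output above.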

This completes the upgrade of the binary-installed Kubernetes cluster.

The most effective way to succeed is to learn from experienced people!