Building a highly available Kubernetes v1.22.2 cluster with kubeasz (Ansible)

Preface

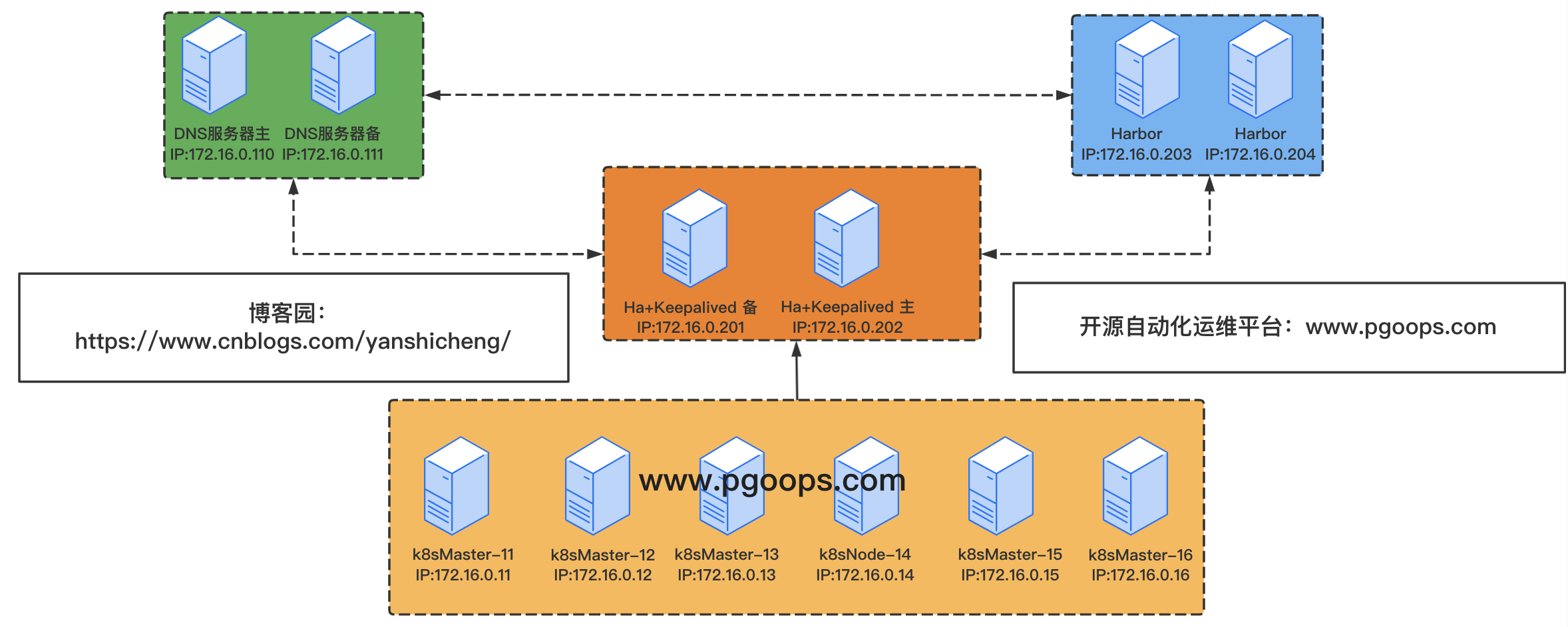

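This environment is installed with kubeasz. I am simulating it with nine virtual machines; allocate memory and CPU as your resources allow.

Project repository: https://github.com/easzlab/kubeasz/

Address and role assignment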

| No. | Role | IP | Hostname | OS | Kernel version | Notes |
|---|---|---|---|---|---|---|
| 1 | DNS server | 172.16.0.110 | dns-101.pgoops.com | CentOS 7.9.2009 | 3.10.0-1160 | |
| 2 | k8sMaster-11 | 172.16.0.11 | k8smaster-11.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 3 | k8sMaster-12 | 172.16.0.12 | k8smaster-12.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 4 | k8sMaster-13 | 172.16.0.13 | k8smaster-13.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 5 | k8sNode-14 | 172.16.0.14 | k8snode-14.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 6 | k8sNode-15 | 172.16.0.15 | k8snode-15.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 7 | k8sNode-16 | 172.16.0.16 | k8snode-16.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 8 | HAProxy + Keepalived | 172.16.0.202 | ha-202.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| | Harbor VIP | 172.16.0.180 | | | | |
| | k8s-api-server VIP | 172.16.0.200 | | | | |
| 9 | Harbor | 172.16.0.203 | harbor-203.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |

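Lab topology

Preparation

The initialization steps listed below are covered in another article of mine:

https://www.cnblogs.com/yanshicheng/p/15746537.html

Configure the Alibaba Cloud package mirror

Kernel tuning

Resource limit tuning

Install Docker

Docker only needs to be installed on seven machines: 172.16.0.11-172.16.0.16 and 172.16.0.203.

Initialize the installation environment

172.16.0.110: deploy DNS

The installation itself is skipped here; see my other blog post.

Installation guide: https://www.cnblogs.com/yanshicheng/p/12890515.html

The zone file is as follows: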

[root@dns-101 ~]# tail -n 13 /var/named/chroot/etc/pgoops.com.zone

k8smaster-11 A 172.16.0.11

k8smaster-12 A 172.16.0.12

k8smaster-13 A 172.16.0.13

k8snode-14 A 172.16.0.14

k8snode-15 A 172.16.0.15

k8snode-16 A 172.16.0.16

ha-201 A 172.16.0.201

ha-202 A 172.16.0.202

harbor-203 A 172.16.0.203

harbor A 172.16.0.180 ; Harbor VIP

k8s-api-server A 172.16.0.200 ; Kubernetes API server VIP

k8s-api-servers A 172.16.0.200

Reload DNS

[root@dns-101 ~]# rndc reload

WARNING: key file (/etc/rndc.key) exists, but using default configuration file (/etc/rndc.conf)

server reload successful

172.16.0.203: deploy Harbor

Installation guide: https://www.cnblogs.com/yanshicheng/p/15756591.html

The configuration file is as follows:

root@harbor-203:~# head -n 20 /usr/local/harbor/harbor.yml

hostname: harbor203.pgoops.com

http:

  port: 80

https:

  port: 443

  certificate: /usr/local/harbor/certs/harbor203.pgoops.com.crt

  private_key: /usr/local/harbor/certs/harbor203.pgoops.com.key

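172.16.0.202: deploy HAProxy + Keepalived

Add the kernel parameter net.ipv4.ip_nonlocal_bind = 1, which allows HAProxy to bind to a VIP that is not currently assigned to this host.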
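
A minimal sketch of persisting this parameter, assuming a drop-in file under /etc/sysctl.d. The helper name and the file name 99-nonlocal-bind.conf are my own choices, not part of the original setup:

```shell
# Write the setting to a sysctl drop-in file.
# The default path is an assumption; any *.conf name under /etc/sysctl.d works.
write_nonlocal_bind_conf() {
  conf_file="${1:-/etc/sysctl.d/99-nonlocal-bind.conf}"
  printf 'net.ipv4.ip_nonlocal_bind = 1\n' > "$conf_file"
}

# On 172.16.0.202, as root:
#   write_nonlocal_bind_conf
#   sysctl --system                     # reload every sysctl configuration file
#   sysctl net.ipv4.ip_nonlocal_bind    # confirm the running value
```

Nothing runs until the function is called, so the snippet can be pasted into a shell and reviewed first.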

keepalived

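Install:

apt install keepalived -y

The configuration file is as follows:

# Adjust the IP addresses and the interface name in the configuration, then start the service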

tee /etc/keepalived/keepalived.conf << 'EOF'

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

router_id Keepalived_202

vrrp_skip_check_adv_addr

vrrp_mcast_group4 224.0.0.18

}

vrrp_instance Harbor {

state BACKUP

interface ens32

virtual_router_id 100

nopreempt

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

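unicast_src_ip 172.16.0.202 # source address this host sends unicast advertisements from

unicast_peer {

172.16.0.202 # unicast mode: address of the peer (backup) node that receives the advertisements

}

virtual_ipaddress {

172.16.0.180/24 dev ens32 label ens32:2 # VIP used by Harbor

172.16.0.200/24 dev ens32 label ens32:1 # VIP used by k8s-api-server

}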

}

track_interface {

ens32

ens33

}

}

EOF

Start keepalived

systemctl enable --now keepalived

haproxy

Install the package

apt install haproxy -y

# Configuration

Append the following to /etc/haproxy/haproxy.cfg

# Harbor proxy

listen harbor

bind 172.16.0.180:443 # VIP dedicated to Harbor

mode tcp

balance source

server harbor1 harbor203.pgoops.com:443 weight 10 check inter 3s fall 3 rise 5 # backend address

#server harbor2 harbor204.pgoops.com:443 weight 10 check inter 3s fall 3 rise 5

# Kubernetes API proxy

listen k8s

bind 172.16.0.200:6443 # VIP dedicated to the Kubernetes API

mode tcp

# Kubernetes master addresses

server k8s1 172.16.0.11:6443 weight 10 check inter 3s fall 3 rise 5

server k8s2 172.16.0.12:6443 weight 10 check inter 3s fall 3 rise 5

server k8s3 172.16.0.13:6443 weight 10 check inter 3s fall 3 rise 5

Start haproxy

systemctl enable --now haproxy
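
Before starting or restarting, it can help to let HAProxy validate the file and then confirm that both VIP listeners are up. A sketch, wrapped in hypothetical helper functions (the names are mine) so that nothing runs until you call them:

```shell
# Validate the configuration without starting the proxy (-c = check mode).
check_haproxy_config() {
  haproxy -c -f "${1:-/etc/haproxy/haproxy.cfg}"
}

# List listening TCP sockets and keep only the two VIP binds configured above.
show_vip_listeners() {
  ss -lnt | grep -E '172\.16\.0\.180:443|172\.16\.0\.200:6443'
}

# On 172.16.0.202:
#   check_haproxy_config && systemctl restart haproxy
#   show_vip_listeners
```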

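Deploy Kubernetes

For deploying a Kubernetes cluster with kubeasz, I recommend reading the official documentation directly.

Key point:

- Whichever machine kubeasz runs on needs Ansible installed and passwordless (key-based) SSH access to every Kubernetes master and node. Those steps are skipped here.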
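
The skipped key distribution could look roughly like the following. This is a sketch under assumptions: the node list is taken from the role table, the login user root is inferred from the root prompts in this article, and the function name is hypothetical.

```shell
# All master and worker IPs from the role table.
NODES="172.16.0.11 172.16.0.12 172.16.0.13 172.16.0.14 172.16.0.15 172.16.0.16"

# Generate a key pair once (no passphrase) and copy the public key to every node.
# Logging in as root is an assumption, not something the article states.
distribute_ssh_key() {
  key="${1:-$HOME/.ssh/id_rsa}"
  [ -f "$key" ] || ssh-keygen -t rsa -b 4096 -N '' -f "$key"
  for ip in $NODES; do
    ssh-copy-id -i "${key}.pub" "root@${ip}"
  done
}

# On the deploy machine:
#   distribute_ssh_key
#   ansible --version    # confirm Ansible is installed
```

ssh-copy-id prompts for each node's password once; after that, Ansible can log in with the key.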

Download the script

export release=3.1.1

wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

chmod +x ./ezdown

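Use the script to download the required packages

You can open the script and edit the versions of Docker, Kubernetes, and the other components.

Downloaded files are stored in: /etc/kubeasz/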

cat ezdown

DOCKER_VER=20.10.8

KUBEASZ_VER=3.1.1

K8S_BIN_VER=v1.22.2

EXT_BIN_VER=0.9.5

SYS_PKG_VER=0.4.1

HARBOR_VER=v2.1.3

REGISTRY_MIRROR=CN

....

# Run the download

./ezdown -D

Create the cluster

root@k8smaster-11:~# cd /etc/kubeasz/

### Create a cluster deployment instance

root@k8smaster-11:/etc/kubeasz# ./ezctl new k8s-cluster1

Edit the cluster hosts file

root@k8smaster-11:/etc/kubeasz/clusters/k8s-cluster1# pwd

/etc/kubeasz/clusters/k8s-cluster1

root@k8smaster-11:/etc/kubeasz/clusters/k8s-cluster1# cat hosts

# etcd hosts

[etcd]

172.16.0.11

172.16.0.12

172.16.0.13

# k8s-master hosts

[kube_master]

172.16.0.11

172.16.0.12

# k8s-node hosts

[kube_node]

172.16.0.14

172.16.0.15

# Harbor was already installed manually, so it is not installed here

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

#172.16.0.8 NEW_INSTALL=false

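# Load balancer host; the VIP is used by later configuration, but the LB itself is not installed by the script

# EX_APISERVER_VIP: the VIP; use the IP address if you have no DNS

# EX_APISERVER_PORT: the port exposed by HAProxy, not the kube-apiserver port itself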

[ex_lb]

172.16.0.202 LB_ROLE=master EX_APISERVER_VIP=k8s-api-servers.pgoops.com EX_APISERVER_PORT=6443

#172.16.0.7 LB_ROLE=master EX_APISERVER_VIP=172.16.0.250 EX_APISERVER_PORT=8443

# Time synchronization: I already have a time server, so it is skipped here

[chrony]

#172.16.0.1

[all:vars]

# --------- Main Variables ---------------

# kube-apiserver port

SECURE_PORT="6443"

# Cluster container runtime: docker, containerd

CONTAINER_RUNTIME="docker"

# Cluster network plugin: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# Kubernetes Service CIDR

SERVICE_CIDR="10.100.0.0/16"

# Cluster Pod CIDR

CLUSTER_CIDR="172.100.0.0/16"

# NodePort range

NODE_PORT_RANGE="30000-40000"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="cluster.local"

# -------- Additional Variables (don't change the default value right now) ---

# Directory where binaries are installed

bin_dir="/usr/bin"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz"

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s-cluster1"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"
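
The address ranges in this file must not collide with each other or with the host network. Below is a small sketch of a pure-shell overlap check. The helper names (ip_to_int, cidr_range, cidrs_overlap) are mine, and the host network 172.16.0.0/24 is an assumption inferred from the /24 VIPs in keepalived.conf:

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  # Split on dots into the positional parameters $1..$4.
  set -- $(printf '%s' "$1" | tr '.' ' ')
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "first last" (as integers) for a CIDR such as 10.100.0.0/16.
cidr_range() {
  base=$(ip_to_int "${1%/*}")
  len=${1#*/}
  size=$(( 1 << (32 - len) ))
  # Drop the host bits so the range starts on the network boundary.
  base=$(( base / size * size ))
  echo "$base $(( base + size - 1 ))"
}

# Succeed (exit status 0) when the two CIDRs share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

HOST_NET="172.16.0.0/24"       # assumption: inferred from the /24 VIPs
SERVICE_CIDR="10.100.0.0/16"   # from the hosts file
CLUSTER_CIDR="172.100.0.0/16"  # from the hosts file

for pair in "$HOST_NET $SERVICE_CIDR" "$HOST_NET $CLUSTER_CIDR" "$SERVICE_CIDR $CLUSTER_CIDR"; do
  set -- $pair
  if cidrs_overlap "$1" "$2"; then
    echo "OVERLAP: $1 and $2"
  else
    echo "ok: $1 and $2 do not overlap"
  fi
done
```

Separately, 172.100.0.0/16 lies outside the RFC 1918 private ranges (the private 172.x block is 172.16.0.0/12), so Pods would likely be unable to reach any public hosts that use those addresses.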

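Edit the cluster config file

Main settings to change

- ETCD_DATA_DIR: etcd data directory

- MASTER_CERT_HOSTS: extra IPs and domain names to include in the master certificate

- MAX_PODS: maximum number of Pods per node

- dns_install: automatic CoreDNS installation disabled; install it manually

- dashboard_install: automatic dashboard installation disabled; install it manually

- ingress_install: automatic ingress installation disabled; install it manually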

root@k8smaster-11:/etc/kubeasz/clusters/k8s-cluster1# cat config.yml

############################

# prepare

############################

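# Optionally install system packages offline (offline|online)

INSTALL_SOURCE: "online"

# Optional OS security hardening, see github.com/dev-sec/ansible-collection-hardening

OS_HARDEN: false

# Time source servers [important: clocks must be synchronized across the cluster]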

ntp_servers:

- "ntp1.aliyun.com"

- "time1.cloud.tencent.com"

- "0.cn.pool.ntp.org"

# Networks allowed to synchronize time internally, e.g. "10.0.0.0/8"; all are allowed by default

local_network: "0.0.0.0/0"

############################

# role:deploy

############################

# default: ca will expire in 100 years

# default: certs issued by the ca will expire in 50 years

# Certificate validity periods

CA_EXPIRY: "876000h"

CERT_EXPIRY: "438000h"

# kubeconfig parameters

CLUSTER_NAME: "cluster1"

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

############################

# role:etcd

############################

# A separate WAL directory avoids disk I/O contention and improves performance

ETCD_DATA_DIR: "/data/etcd"

ETCD_WAL_DIR: ""

############################

# role:runtime [containerd,docker]

############################

# ------------------------------------------- containerd

# [.] Enable registry mirrors

ENABLE_MIRROR_REGISTRY: true

# [containerd] Sandbox (pause) image

SANDBOX_IMAGE: "easzlab/pause-amd64:3.5"

# [containerd] Persistent storage directory

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker

# [docker] Container storage directory

DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker] Enable the RESTful API

ENABLE_REMOTE_API: false

# [docker] Trusted insecure (HTTP) registries

INSECURE_REG: '["127.0.0.1/8", "harbor.pgoops.com"]'

############################

# role:kube-master

############################

# Certificate hosts for the master nodes; multiple IPs and domains can be added (e.g. public IPs and domains)

MASTER_CERT_HOSTS:

- "10.1.1.1"

- "k8s.test.io"

- "www.pgoops.com"

- "test.pgoops.com"

- "test1.pgoops.com"

#- "www.test.com"

# Pod subnet mask length per node (determines how many Pod IPs each node can allocate)

# If flannel runs with --kube-subnet-mgr, it reads this setting to assign a Pod subnet to each node

# https://github.com/coreos/flannel/issues/847

NODE_CIDR_LEN: 24

############################

# role:kube-node

############################

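# kubelet root directory

KUBELET_ROOT_DIR: "/var/lib/kubelet"

# Maximum number of Pods per node

MAX_PODS: 400

# Resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)

# See templates/kubelet-config.yaml.j2 for the values

KUBE_RESERVED_ENABLED: "no"

# Upstream Kubernetes advises against enabling system-reserved casually unless long-term monitoring has shown you the system's resource usage;

# the reservation should also grow as the system keeps running; see templates/kubelet-config.yaml.j2 for the values

# The system reservation assumes a 4c/8g VM with a minimal OS install; increase it on high-performance physical machines

# Also, apiserver and other components briefly use more resources during installation, so reserve at least 1 GB of memory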

SYS_RESERVED_ENABLED: "no"

# haproxy balance mode

BALANCE_ALG: "roundrobin"

############################

# role:network [flannel,calico,cilium,kube-ovn,kube-router]

############################

# ------------------------------------------- flannel

# [flannel] Backend: "host-gw", "vxlan", etc.

FLANNEL_BACKEND: "vxlan"

DIRECT_ROUTING: false

# [flannel] flanneld_image: "quay.io/coreos/flannel:v0.10.0-amd64"

flannelVer: "v0.13.0-amd64"

flanneld_image: "easzlab/flannel:{{ flannelVer }}"

# [flannel] Offline image tarball

flannel_offline: "flannel_{{ flannelVer }}.tar"

# ------------------------------------------- calico

# [calico] Setting CALICO_IPV4POOL_IPIP="off" can improve network performance; see docs/setup/calico.md for the constraints

CALICO_IPV4POOL_IPIP: "Always"

# [calico] Host IP used by calico-node; BGP peerings are established over it. It can be set manually or auto-detected

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] Calico network backend: brid, vxlan, none

CALICO_NETWORKING_BACKEND: "brid"

# [calico] Supported calico versions: [v3.3.x] [v3.4.x] [v3.8.x] [v3.15.x]

calico_ver: "v3.19.2"

# [calico] Calico major version

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# [calico] Offline image tarball

calico_offline: "calico_{{ calico_ver }}.tar"

# ------------------------------------------- cilium

# [cilium] Number of etcd cluster nodes created by CILIUM_ETCD_OPERATOR: 1,3,5,7...

ETCD_CLUSTER_SIZE: 1

# [cilium] Image version

cilium_ver: "v1.4.1"

# [cilium] Offline image tarball

cilium_offline: "cilium_{{ cilium_ver }}.tar"

# ------------------------------------------- kube-ovn

# [kube-ovn] Node for the OVN DB and OVN Control Plane; defaults to the first master node

OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn] Offline image tarball

kube_ovn_ver: "v1.5.3"

kube_ovn_offline: "kube_ovn_{{ kube_ovn_ver }}.tar"

# ------------------------------------------- kube-router

# [kube-router] Public clouds impose restrictions, so ipinip usually has to stay enabled; on-premises environments can use "subnet"

OVERLAY_TYPE: "full"

# [kube-router] NetworkPolicy support switch

FIREWALL_ENABLE: "true"

# [kube-router] kube-router image version

kube_router_ver: "v0.3.1"

busybox_ver: "1.28.4"

# [kube-router] kube-router offline image tarballs

kuberouter_offline: "kube-router_{{ kube_router_ver }}.tar"

busybox_offline: "busybox_{{ busybox_ver }}.tar"

############################

# role:cluster-addon

############################

# Automatic CoreDNS installation

dns_install: "no"

corednsVer: "1.8.4"

ENABLE_LOCAL_DNS_CACHE: true

dnsNodeCacheVer: "1.17.0"

# Local DNS cache address

LOCAL_DNS_CACHE: "169.254.20.10"

# Automatic metrics-server installation

metricsserver_install: "no"

metricsVer: "v0.5.0"

# Automatic dashboard installation

dashboard_install: "no"

dashboardVer: "v2.3.1"

dashboardMetricsScraperVer: "v1.0.6"

# Automatic ingress installation

ingress_install: "no"

ingress_backend: "traefik"

traefik_chart_ver: "9.12.3"

# Automatic Prometheus installation

prom_install: "no"

prom_namespace: "monitor"

prom_chart_ver: "12.10.6"

# Automatic nfs-provisioner installation

nfs_provisioner_install: "no"

nfs_provisioner_namespace: "kube-system"

nfs_provisioner_ver: "v4.0.1"

nfs_storage_class: "managed-nfs-storage"

nfs_server: "192.168.1.10"

nfs_path: "/data/nfs"

############################

# role:harbor

############################

# Harbor version, full version number

HARBOR_VER: "v2.1.3"

HARBOR_DOMAIN: "harbor.yourdomain.com"

HARBOR_TLS_PORT: 8443

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'

HARBOR_SELF_SIGNED_CERT: true

# install extra component

HARBOR_WITH_NOTARY: false

HARBOR_WITH_TRIVY: false

HARBOR_WITH_CLAIR: false

HARBOR_WITH_CHARTMUSEUM: true
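
A few of the numbers in config.yml are easier to judge after a quick calculation. The sketch below converts the certificate lifetimes to years and compares the per-node subnet size with MAX_PODS. The variable names are mine, and a year is taken as 365 days:

```shell
CA_EXPIRY_HOURS=876000     # CA_EXPIRY without the "h" suffix
CERT_EXPIRY_HOURS=438000   # CERT_EXPIRY without the "h" suffix
HOURS_PER_YEAR=$(( 24 * 365 ))   # ignores leap days

echo "CA lifetime:   $(( CA_EXPIRY_HOURS / HOURS_PER_YEAR )) years"
echo "cert lifetime: $(( CERT_EXPIRY_HOURS / HOURS_PER_YEAR )) years"

NODE_CIDR_LEN=24
CLUSTER_CIDR_LEN=16   # from CLUSTER_CIDR="172.100.0.0/16" in the hosts file
MAX_PODS=400

# Addresses in one node subnet, and how many such subnets fit in the Pod CIDR.
ADDRS_PER_NODE=$(( 1 << (32 - NODE_CIDR_LEN) ))
NODE_SUBNETS=$(( 1 << (NODE_CIDR_LEN - CLUSTER_CIDR_LEN) ))

echo "addresses per node subnet: $ADDRS_PER_NODE"
echo "node subnets in the Pod CIDR: $NODE_SUBNETS"

if [ "$MAX_PODS" -gt "$ADDRS_PER_NODE" ]; then
  echo "note: MAX_PODS ($MAX_PODS) is larger than one node subnet ($ADDRS_PER_NODE addresses)"
fi
```

With these values a /24 node subnet holds 256 addresses while MAX_PODS is 400. Whether that matters depends on the network plugin's IPAM: the config comment only says flannel reads NODE_CIDR_LEN, so treat the note as a prompt to check, not as a confirmed problem with Calico.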

Install the cluster

Installation commands:

root@k8smaster-12:/etc/kubeasz# ./ezctl setup --help

Usage: ezctl setup <cluster> <step>

available steps:

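01 prepare system initialization; required

02 etcd install etcd; required unless etcd was installed manually

03 container-runtime install the container runtime; skip if it was installed manually

04 kube-master install k8s-master; required

05 kube-node install k8s-node; required

06 network install the network plugin; required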

07 cluster-addon to setup other useful plugins

90 all to run 01~07 all at once

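10 ex-lb install the load balancer; not needed here

11 harbor install Harbor; not needed here

Because the load balancer was installed manually, the ex_lb and chrony host groups need to be removed from the system initialization playbook.

vim playbooks/01.prepare.yml

# [optional] to synchronize system time of nodes with 'chrony'

- hosts:

  - kube_master

  - kube_node

  - etcd

  - ex_lb # remove this line

  - chrony # remove this line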

Run the cluster installation

#### 01 System initialization

root@k8smaster-11:/etc/kubeasz# ./ezctl setup k8s-cluster1 01

### Deploy etcd

root@k8smaster-11:/etc/kubeasz# ./ezctl setup k8s-cluster1 02

### Deploy k8s-master

root@k8smaster-11:/etc/kubeasz# ./ezctl setup k8s-cluster1 04

### Deploy k8s-node

root@k8smaster-11:/etc/kubeasz# ./ezctl setup k8s-cluster1 05

### Deploy the network

root@k8smaster-11:/etc/kubeasz# ./ezctl setup k8s-cluster1 06

Cluster verification

etcd cluster verification

root@k8smaster-11:/etc/kubeasz# export NODE_IPS="172.16.0.11 172.16.0.12 172.16.0.13"

root@k8smaster-11:/etc/kubeasz# for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done

https://172.16.0.11:2379 is healthy: successfully committed proposal: took = 15.050165ms

https://172.16.0.12:2379 is healthy: successfully committed proposal: took = 13.827469ms

https://172.16.0.13:2379 is healthy: successfully committed proposal: took = 12.144873ms
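
Beyond the health check, etcdctl can also report each member's status in one table, including which member is the leader. A sketch that reuses the certificate paths from the command above; the function name is hypothetical:

```shell
NODE_IPS="172.16.0.11 172.16.0.12 172.16.0.13"

# Join the node IPs into the comma-separated list that --endpoints accepts.
ENDPOINTS=""
for ip in ${NODE_IPS}; do
  ENDPOINTS="${ENDPOINTS:+${ENDPOINTS},}https://${ip}:2379"
done
echo "${ENDPOINTS}"

# Print one table row per member (version, DB size, leader flag, ...).
etcd_status() {
  ETCDCTL_API=3 /usr/bin/etcdctl \
    --endpoints="${ENDPOINTS}" \
    --cacert=/etc/kubernetes/ssl/ca.pem \
    --cert=/etc/kubernetes/ssl/etcd.pem \
    --key=/etc/kubernetes/ssl/etcd-key.pem \
    endpoint status --write-out=table
}

# On a master node:
#   etcd_status
```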

Network verification with calicoctl

root@k8smaster-11:/etc/kubeasz# calicoctl node status

Calico process is running.

IPv4 BGP status

+--------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+--------------+-------------------+-------+----------+-------------+

| 172.16.0.12 | node-to-node mesh | up | 13:08:39 | Established |

| 172.16.0.14 | node-to-node mesh | up | 13:08:39 | Established |

| 172.16.0.15 | node-to-node mesh | up | 13:08:41 | Established |

+--------------+-------------------+-------+----------+-------------+

Cluster verification

root@k8smaster-11:/etc/kubeasz# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-59df8b6856-wbwn8 1/1 Running 0 75s

kube-system calico-node-5tqdb 1/1 Running 0 75s

kube-system calico-node-8gwb6 1/1 Running 0 75s

kube-system calico-node-v9tlq 1/1 Running 0 75s

kube-system calico-node-w6j4k 1/1 Running 0 75s

#### Run containers to test the cluster

root@k8smaster-11:/etc/kubeasz# kubectl run net-test3 --image=harbor.pgoops.com/base/alpine:v1 sleep 60000

root@k8smaster-11:/etc/kubeasz# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1 1/1 Running 0 36s 172.100.248.193 172.16.0.15 <none> <none>

net-test2 1/1 Running 0 30s 172.100.248.194 172.16.0.15 <none> <none>

net-test3 1/1 Running 0 5s 172.100.229.129 172.16.0.14 <none> <none>

#### Test connectivity from a container to internal and external networks

root@k8smaster-11:/etc/kubeasz# kubectl exec -it net-test3 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ping 172.100.248.193

PING 172.100.248.193 (172.100.248.193): 56 data bytes

64 bytes from 172.100.248.193: seq=0 ttl=62 time=0.992 ms

64 bytes from 172.100.248.193: seq=1 ttl=62 time=0.792 ms

^C

--- 172.100.248.193 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.792/0.892/0.992 ms

/ # ping 114.114.114.114

PING 114.114.114.114 (114.114.114.114): 56 data bytes

64 bytes from 114.114.114.114: seq=0 ttl=127 time=32.942 ms

64 bytes from 114.114.114.114: seq=1 ttl=127 time=33.414 ms

^C

--- 114.114.114.114 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 32.942/33.178/33.414 ms
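
The deprecation warning in the transcript above goes away when the command is separated from the Pod name with "--". A sketch of the same two pings run non-interactively; the helper name pod_ping is mine:

```shell
# Run ping inside a Pod using the non-deprecated "--" separator.
pod_ping() {
  pod="$1"
  target="$2"
  kubectl exec "$pod" -- ping -c 2 "$target"
}

# From the deploy machine:
#   pod_ping net-test3 172.100.248.193    # Pod on another node
#   pod_ping net-test3 114.114.114.114    # external address
#   kubectl exec -it net-test3 -- sh      # interactive shell, same separator
```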

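Install third-party add-ons

Install CoreDNS

Install the dashboard

Add nodes

Add a master node

Add a worker node

Remove nodes

Remove a master node

Remove a worker node

Author: 闫世成

Source: http://cnblogs.com/yanshicheng

Copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this notice must be retained and a link to the original article must be placed prominently on the page. If you have questions or suggestions, please contact the email address above. Thank you very much.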