Deploying Kubernetes v1.21.0 with kubeadm

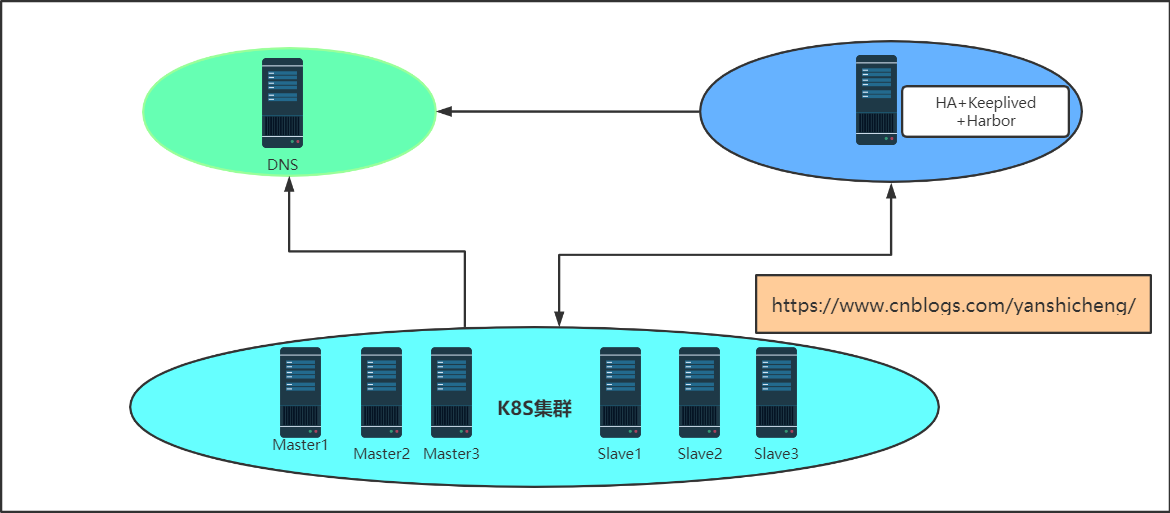

Architecture overview

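- The environment uses eight virtual machines in total, as shown in the diagram.

- Set up high availability yourself for the DNS, HAProxy, Keepalived and Harbor components shown in the diagram.

- If you don't want to build a DNS server, a hosts file works as well.

- This article first deploys k8s v1.20 and then upgrades it to v1.21. If you don't want to practice the upgrade, simply change every v1.20 version number in the deployment steps to v1.21.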

Host inventory

| Hostname | IP address | CPU cores | Memory (GB) | OS | Kernel version | Role |
|---|---|---|---|---|---|---|
| k8s201.devops.com | 172.16.0.201 | 1 | 2 | CentOS Linux release 7.9.2009 (Core) | 3.10.0-1160.25.1.el7.x86_64 | k8s-master1 |
| k8s202.devops.com | 172.16.0.202 | 1 | 2 | CentOS Linux release 7.9.2009 (Core) | 3.10.0-1160.25.1.el7.x86_64 | k8s-master2 |
| k8s203.devops.com | 172.16.0.203 | 1 | 2 | CentOS Linux release 7.9.2009 (Core) | 3.10.0-1160.25.1.el7.x86_64 | k8s-master3 |
| k8s204.devops.com | 172.16.0.204 | 2 | 4 | CentOS Linux release 7.9.2009 (Core) | 3.10.0-1160.25.1.el7.x86_64 | k8s-slave1 |
| k8s205.devops.com | 172.16.0.205 | 2 | 4 | CentOS Linux release 7.9.2009 (Core) | 3.10.0-1160.25.1.el7.x86_64 | k8s-slave2 |
| k8s206.devops.com | 172.16.0.206 | 2 | 4 | CentOS Linux release 7.9.2009 (Core) | 3.10.0-1160.25.1.el7.x86_64 | k8s-slave3 |
| ops110.devops.com | 172.16.0.110 | 1 | 1 | CentOS Linux release 7.9.2009 (Core) | 3.10.0-1160.25.1.el7.x86_64 | DNS |
| k8s207.devops.com | 172.16.0.207 | 1 | 1 | CentOS Linux release 7.9.2009 (Core) | 3.10.0-1160.25.1.el7.x86_64 | Keepalived+HAProxy+Harbor |
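If you use /etc/hosts instead of a DNS server, every node needs the same name-to-IP entries. The sketch below prints them from the inventory table, assuming the workers at .204 to .206 are named k8s204 to k8s206 and that the domain is devops.com; `print_hosts_entries` is a hypothetical helper name, and the host list is hard-coded, so adjust it to your environment.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts lines for the inventory above.
# Assumption: workers .204-.206 are named k8s204-k8s206.
DOMAIN="devops.com"
HOSTS=(
  "172.16.0.201 k8s201"
  "172.16.0.202 k8s202"
  "172.16.0.203 k8s203"
  "172.16.0.204 k8s204"
  "172.16.0.205 k8s205"
  "172.16.0.206 k8s206"
  "172.16.0.207 k8s207"
  "172.16.0.110 ops110"
)

print_hosts_entries() {
  local entry ip name
  for entry in "${HOSTS[@]}"; do
    read -r ip name <<< "$entry"
    # FQDN first, then the short hostname as an alias.
    printf '%s %s.%s %s\n' "$ip" "$name" "$DOMAIN" "$name"
  done
}
```

On each node (as root) the output can be appended with `print_hosts_entries >> /etc/hosts`; run it only once, since appending twice duplicates the entries.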

Common configuration

Disable swap, the firewall and SELinux. Reboot once the common configuration is complete.

[root@k8s201 ~]# swapoff -a
## Comment out the swap entry in /etc/fstab so swap stays off after the reboot:
[root@k8s201 ~]# cat /etc/fstab
#
# /etc/fstab
# Created by anaconda on Sun Jun 6 16:14:55 2021
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
UUID=5ee03148-ea92-4d0e-aeca-9866bffaf140 / xfs defaults 0 0
UUID=10ae0c75-92bf-4b47-a5bb-cdf26b398fac /boot xfs defaults 0 0
#UUID=44a4dd84-2244-4384-b3b3-41201f21d49e swap swap defaults 0 0
## Disable SELinux
sed -i 's@SELINUX=enforcing@SELINUX=disabled@' /etc/selinux/config
## Disable the firewall
systemctl disable firewalld
## Disable NetworkManager
systemctl disable NetworkManager
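Commenting out the swap line by hand, as in the fstab listing above, can also be scripted. This is a minimal sketch: `disable_swap_in_fstab` is a hypothetical helper that takes the file path as a parameter, so it can be tried on a copy before touching /etc/fstab.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab file.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Keep a backup, then rewrite the file from that backup.
  cp -a "$fstab" "${fstab}.bak"
  # Non-comment lines whose 3rd field (filesystem type) is "swap" get a '#'.
  awk '$0 !~ /^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "${fstab}.bak" > "$fstab"
}
```

Already-commented lines are left untouched, so running it twice does not add a second `#`.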

System tuning

Configure a domestic CentOS mirror

Mirror site: https://mirrors.huaweicloud.com/

1. Back up the config file: cp -a /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.bak
2. Download the mirror repo file: wget -O /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo
3. Run yum clean all to clear the existing yum cache.
4. Run yum makecache (rebuild the cache) or yum repolist all (list every configured repository; this also refreshes the cache).

Configure a domestic EPEL mirror

1. Install the EPEL repository: yum install -y epel-release
2. Back up the config files:
cp -a /etc/yum.repos.d/epel.repo /etc/yum.repos.d/epel.repo.backup
mv /etc/yum.repos.d/epel-testing.repo /etc/yum.repos.d/epel-testing.repo.backup
3. Edit epel.repo: uncomment the lines starting with baseurl, comment out the lines starting with metalink, and replace http://download.fedoraproject.org/pub with https://repo.huaweicloud.com. For example:
sed -i "s/#baseurl/baseurl/g" /etc/yum.repos.d/epel.repo
sed -i "s/metalink/#metalink/g" /etc/yum.repos.d/epel.repo
sed -i "s@https\?://download.fedoraproject.org/pub@https://repo.huaweicloud.com@g" /etc/yum.repos.d/epel.repo
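The three sed edits above can be wrapped into one function that takes the repo file as a parameter. This is a sketch with a hypothetical helper name (`use_epel_mirror`); unlike the commands above it anchors the patterns at the start of the line, so running it a second time does not turn `#metalink` into `##metalink`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point an EPEL .repo file at the Huawei Cloud mirror.
use_epel_mirror() {
  local repo="${1:-/etc/yum.repos.d/epel.repo}"
  cp -a "$repo" "${repo}.backup"
  sed -i \
    -e 's/^#baseurl/baseurl/' \
    -e 's/^metalink/#metalink/' \
    -e 's@https\?://download.fedoraproject.org/pub@https://repo.huaweicloud.com@g' \
    "$repo"
}
```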

Load the IPVS modules

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
nf_conntrack_ipv4 15053 0
nf_defrag_ipv4 12729 1 nf_conntrack_ipv4
ip_vs_sh 12688 0
ip_vs_wrr 12697 0
ip_vs_rr 12600 0
ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack 139264 2 ip_vs,nf_conntrack_ipv4
libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
# Check the loaded modules
lsmod | grep -e ip_vs -e nf_conntrack_ipv4
# Install the IPVS userspace tools
yum install ipset ipvsadm -y
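Instead of reading the lsmod output by eye, a small check can list which required modules are missing. This is a sketch with a hypothetical helper (`missing_modules`) that reads lsmod-style output on stdin. On CentOS 7 (kernel 3.10) the conntrack module is `nf_conntrack_ipv4`; on kernels 4.19 and later it was merged into `nf_conntrack`, so the list would need adjusting there.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print required IPVS modules that are not loaded.
# Reads `lsmod` output (including its header line) from stdin.
REQUIRED_MODULES=(ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4)

missing_modules() {
  local loaded mod
  # The first column is the module name; NR > 1 skips the lsmod header.
  loaded=$(awk 'NR > 1 { print $1 }')
  for mod in "${REQUIRED_MODULES[@]}"; do
    grep -qxF "$mod" <<< "$loaded" || echo "$mod"
  done
}
```

Usage: `lsmod | missing_modules` prints nothing when every module is loaded.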

Other tuning settings

[root@k8s207 ~]# cat /etc/sysctl.conf
fs.file-max = 6815744
fs.aio-max-nr = 1048576
net.ipv4.ip_local_port_range = 1024 65535
net.ipv4.tcp_mem = 786432 2097152 3145728
net.ipv4.tcp_rmem = 4096 4096 16777216
net.ipv4.tcp_wmem = 4096 4096 16777216
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_tw_recycle = 1
vm.swappiness = 1
vm.min_free_kbytes = 204800
vm.max_map_count = 204800
kernel.pid_max = 819200
#kernel.pty.max = 10
vm.zone_reclaim_mode = 0
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_syn_retries = 1
net.ipv4.tcp_fin_timeout = 1
net.ipv4.tcp_keepalive_time = 1200
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_max_orphans = 16384
net.ipv4.tcp_synack_retries = 1
net.ipv4.route.gc_timeout = 100
net.core.somaxconn = 16384
net.core.netdev_max_backlog = 16384
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
[root@k8s207 ~]# cat /etc/security/limits.conf
# End of file
* soft nofile 1048576
* hard nofile 1048576
* soft nproc 1048576
* hard nproc 1048576
* soft stack 10240
* hard stack 32768
* hard memlock unlimited
* soft memlock unlimited
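Of the settings above, the bridge and forwarding keys are the ones Kubernetes networking depends on. A quick sketch to confirm they are present and set to 1 in a sysctl file (`missing_sysctl_keys` is a hypothetical helper name). Note that the `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded, so on a real node load it before applying the file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print Kubernetes-related sysctl keys that are
# missing, commented out, or not set to 1 in a sysctl.conf-style file.
K8S_KEYS=(net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward)

missing_sysctl_keys() {
  local conf="${1:-/etc/sysctl.conf}" key
  for key in "${K8S_KEYS[@]}"; do
    awk -F'=' -v k="$key" '
      /^[[:space:]]*#/ { next }                      # skip comments
      { gsub(/[[:space:]]/, "", $1); gsub(/[[:space:]]/, "", $2) }
      $1 == k && $2 == "1" { found = 1 }
      END { exit !found }' "$conf" || echo "$key"
  done
}
# On a real node (as root):
#   modprobe br_netfilter && sysctl -p
#   missing_sysctl_keys /etc/sysctl.conf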

Install Docker on the k8s cluster nodes

Configure the Docker package repository

sudo yum remove docker docker-common docker-selinux docker-engine
sudo yum install -y yum-utils device-mapper-persistent-data lvm2
wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo
sudo sed -i 's+download.docker.com+repo.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

Install docker-ce and enable it at boot

yum install docker-ce -y
systemctl enable --now docker

daemon.json

[root@k8s207 ~]# cat /etc/docker/daemon.json
{
  "registry-mirrors": [
    "https://v16stybc.mirror.aliyuncs.com",
    "http://hub-mirror.c.163.com",
    "https://registry.docker-cn.com"
  ],
  "insecure-registries": ["harbor1.devops.com", "harbor2.devops.com", "harbor.devops.com"],
  "experimental": false,
  "exec-opts": ["native.cgroupdriver=systemd"],
  "debug": true
}
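The same daemon.json can be written from a script, which is handy when configuring every node identically. This is a sketch with a hypothetical helper (`write_daemon_json`); the mirror and Harbor hostnames are copied from the example above and should be replaced with your own. The `native.cgroupdriver=systemd` option matters because the kubelet and Docker must use the same cgroup driver.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the daemon.json shown above to a target path.
write_daemon_json() {
  local target="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<'EOF'
{
  "registry-mirrors": [
    "https://v16stybc.mirror.aliyuncs.com",
    "http://hub-mirror.c.163.com",
    "https://registry.docker-cn.com"
  ],
  "insecure-registries": ["harbor1.devops.com", "harbor2.devops.com", "harbor.devops.com"],
  "experimental": false,
  "exec-opts": ["native.cgroupdriver=systemd"],
  "debug": true
}
EOF
}
# After writing it on a real node (as root), restart Docker and check the driver:
#   systemctl daemon-reload && systemctl restart docker
#   docker info | grep -i 'cgroup driver'
```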

Deploy DNS on ops110

This is not covered in detail here; see the separate article if you need it.

Deploy HAProxy on k8s207
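A minimal, hypothetical sketch of the HAProxy piece: a TCP frontend on the control-plane endpoint used later with kubeadm (172.16.0.10:6443, assumed to be the Keepalived VIP) that balances across the three masters. `apiserver_haproxy_cfg` is a hypothetical helper; its output is only the frontend/backend fragment, and a complete haproxy.cfg also needs `global` and `defaults` sections. If HAProxy must bind the VIP on a host that does not currently hold it, the kernel setting `net.ipv4.ip_nonlocal_bind = 1` is required.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an haproxy.cfg fragment for kube-apiserver.
# Assumptions: VIP 172.16.0.10, masters k8s201-k8s203 listening on 6443.
VIP="172.16.0.10"
MASTERS=("k8s201 172.16.0.201" "k8s202 172.16.0.202" "k8s203 172.16.0.203")

apiserver_haproxy_cfg() {
  local m name ip
  printf 'frontend k8s-apiserver\n'
  printf '    bind %s:6443\n' "$VIP"
  printf '    mode tcp\n'
  printf '    default_backend k8s-masters\n\n'
  printf 'backend k8s-masters\n'
  printf '    mode tcp\n'
  printf '    balance roundrobin\n'
  for m in "${MASTERS[@]}"; do
    read -r name ip <<< "$m"
    # "check" enables HAProxy's TCP health check on each master.
    printf '    server %s %s:6443 check\n' "$name" "$ip"
  done
}
```

TCP mode is used so that TLS passes through to the API servers untouched.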

Deploy Keepalived on k8s207

Deploy Harbor on k8s207

Configure the Kubernetes package repository on the cluster nodes

cat /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

部署kubernetes

Install kubeadm

Worker (node) hosts do not need the kubectl tool.

yum install -y kubeadm-1.21.0-0 kubectl-1.21.0-0 kubelet-1.21.0-0
systemctl enable kubelet
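yum pins a version as NAME-VERSION-RELEASE (for example `kubeadm-1.21.0-0`), not `name=version`. Since the article installs v1.20 first and later upgrades to v1.21, a small helper that builds the package list for a given version and role keeps the commands consistent. This is a sketch: `k8s_packages` is a hypothetical name, and the `-0` release suffix is taken from the package names used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print yum package specs for a Kubernetes version.
# Masters get kubectl; workers only need kubeadm and kubelet.
k8s_packages() {
  local version="$1" role="${2:-master}" p
  local pkgs=(kubeadm kubelet)
  [ "$role" = "master" ] && pkgs+=(kubectl)
  for p in "${pkgs[@]}"; do
    printf '%s-%s-0\n' "$p" "$version"
  done
}
```

Usage on a master (as root): `yum install -y $(k8s_packages 1.21.0 master)`; on a worker, pass `worker` as the second argument.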

Enable kubectl shell completion

# kubectl's bash completion script depends on the bash-completion package
yum install -y bash-completion
mkdir -p ~/.kube
kubectl completion bash > ~/.kube/completion.bash.inc
echo "source '$HOME/.kube/completion.bash.inc'" >> $HOME/.bash_profile
source $HOME/.bash_profile

Pull the images

kubeadm pulls from Google's registry (k8s.gcr.io) by default, which may be unreachable, so pull the Docker images from a domestic mirror first.

Note: the Aliyun registry stores coredns under a different path and tag than Google's registry, so it has to be re-tagged manually.

# List the images used by this Kubernetes version
> kubeadm config images list --kubernetes-version v1.21.0
k8s.gcr.io/kube-apiserver:v1.21.0
k8s.gcr.io/kube-controller-manager:v1.21.0
k8s.gcr.io/kube-scheduler:v1.21.0
k8s.gcr.io/kube-proxy:v1.21.0
k8s.gcr.io/pause:3.4.1
k8s.gcr.io/etcd:3.4.13-0
k8s.gcr.io/coredns/coredns:v1.8.0
# Pull the images
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.21.0
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.21.0
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.21.0
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.21.0
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.4.1
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.8.0
# Re-tag coredns to the path and tag kubeadm expects, then remove the original tag
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.8.0 registry.cn-hangzhou.aliyuncs.com/google_containers/coredns/coredns:v1.8.0
docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.8.0
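Rather than typing each `docker pull`, the names printed by `kubeadm config images list` can be mapped to the mirror. This sketch uses a hypothetical helper (`to_mirror_image`) that keeps only the last path component of each image and, for coredns only, drops the leading `v` from the tag, matching the Aliyun naming used in the commands above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map k8s.gcr.io image names (stdin) to a mirror repo.
to_mirror_image() {
  local mirror="$1" img name tag
  while read -r img; do
    [ -z "$img" ] && continue
    name="${img##*/}"      # last path component, e.g. coredns:v1.8.0
    tag="${name##*:}"      # part after the colon
    name="${name%%:*}"     # part before the colon
    # The mirror tags coredns without the leading "v" (1.8.0, not v1.8.0).
    [ "$name" = "coredns" ] && tag="${tag#v}"
    echo "${mirror}/${name}:${tag}"
  done
}
```

Usage (the coredns re-tag above is still needed afterwards):
`kubeadm config images list --kubernetes-version v1.21.0 | to_mirror_image registry.cn-hangzhou.aliyuncs.com/google_containers | xargs -n1 docker pull`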

Deploy the master node

kubeadm init \
  --apiserver-advertise-address=172.16.0.201 \
  --apiserver-bind-port=6443 \
  --control-plane-endpoint=172.16.0.10 \
  --ignore-preflight-errors='Swap,NumCPU' \
  --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers \
  --kubernetes-version=v1.21.0 \
  --pod-network-cidr=10.10.0.0/16 \
  --service-cidr=192.168.1.0/24 \
  --service-dns-domain=devops.com
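The pod network, the service network and the hosts' own network must not overlap, otherwise routing inside the cluster breaks. A quick pre-check in pure bash, as a sketch: `cidr_overlap` and `check_cidrs` are hypothetical helpers, and the node network `172.16.0.0/24` is an assumption based on the host addresses in the inventory.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: detect overlapping IPv4 CIDR ranges.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Returns 0 (true) if the two CIDRs overlap.
cidr_overlap() {
  local net1="${1%/*}" len1="${1#*/}" net2="${2%/*}" len2="${2#*/}"
  # Two ranges overlap iff they agree under the shorter (wider) prefix.
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$net1") & mask) == ($(ip_to_int "$net2") & mask) ))
}

POD_CIDR="10.10.0.0/16"
SVC_CIDR="192.168.1.0/24"
NODE_CIDR="172.16.0.0/24"   # assumption: the hosts' subnet

check_cidrs() {
  local rc=0
  cidr_overlap "$POD_CIDR" "$SVC_CIDR" && { echo "pod/service overlap"; rc=1; }
  cidr_overlap "$POD_CIDR" "$NODE_CIDR" && { echo "pod/node overlap"; rc=1; }
  cidr_overlap "$SVC_CIDR" "$NODE_CIDR" && { echo "service/node overlap"; rc=1; }
  return "$rc"
}
```

`check_cidrs` prints nothing and returns 0 for the values used in the command above.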

Parameter descriptions

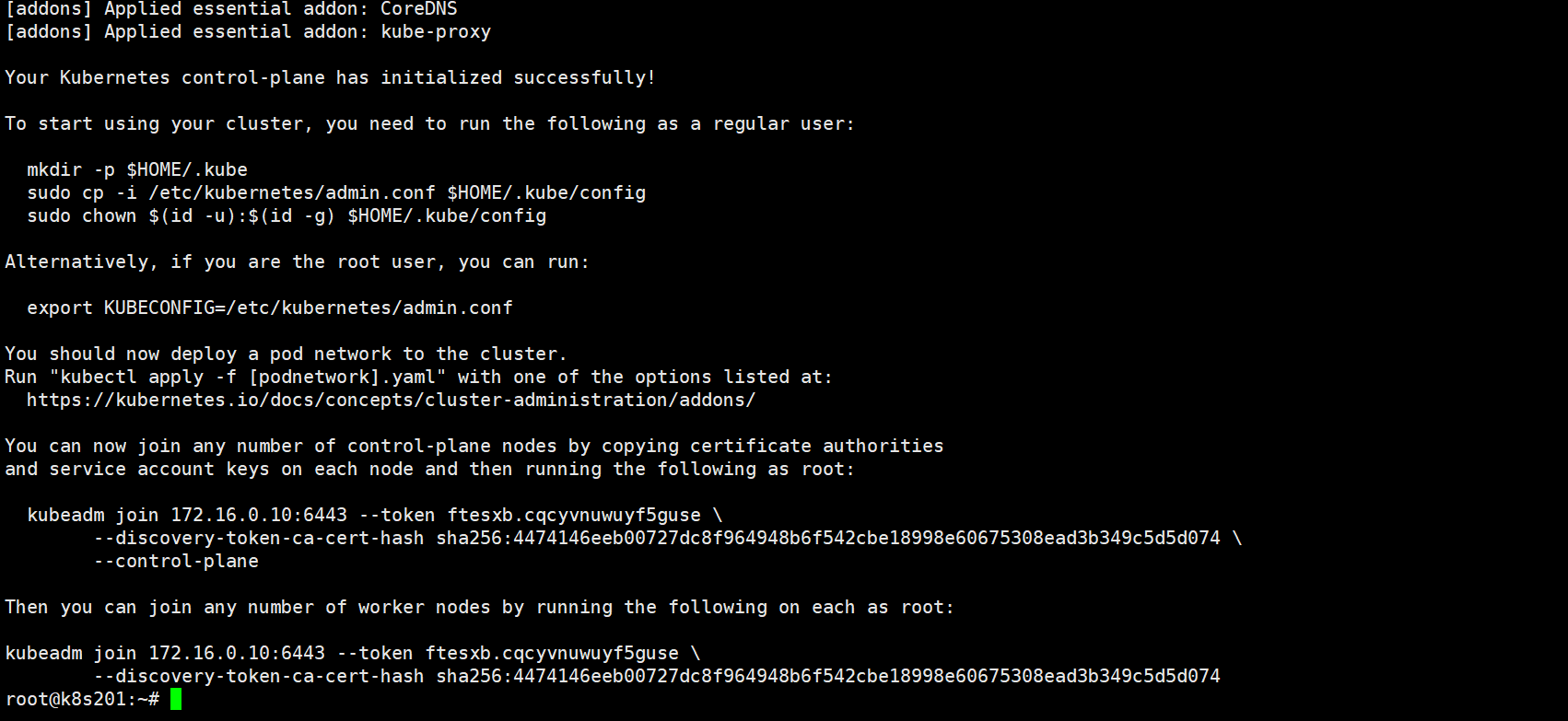

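Screenshot of a successful deployment

Following the printed instructions, create the kubeconfig file used to connect to the cluster:

root@k8s201:~# mkdir -p $HOME/.kube
root@k8s201:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
root@k8s201:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config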

Deploying K8s from a YAML config file

Generate the default config file

kubeadm config print init-defaults > kubeadm-init.yml

Config file

cat kubeadm-init.yml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.0.201
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s201.devops.com
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 172.16.0.10:6443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.21.0
networking:
  dnsDomain: devops.local
  podSubnet: 10.10.0.0/16
  serviceSubnet: 192.168.1.0/24
scheduler: {}
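The edits between the generated defaults and the file above can also be applied with GNU sed instead of by hand. This is a sketch under an assumption: that the generated file contains the usual placeholder keys (`advertiseAddress`, `name` under nodeRegistration, `imageRepository`, `dnsDomain`, `serviceSubnet`, `clusterName`) at the indentation shown above; check your own kubeadm output first. `customize_kubeadm_defaults` is a hypothetical helper, and the values are copied from the config above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn `kubeadm config print init-defaults` output
# into the config shown above (requires GNU sed for \n in replacements).
customize_kubeadm_defaults() {
  local f="$1"
  sed -i \
    -e 's/^\(  advertiseAddress:\).*/\1 172.16.0.201/' \
    -e 's/^\(  name:\).*/\1 k8s201.devops.com/' \
    -e 's@^imageRepository:.*@imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers@' \
    -e 's/^\(  dnsDomain:\).*/\1 devops.local/' \
    -e 's@^\(  serviceSubnet:\).*@\1 192.168.1.0/24\n  podSubnet: 10.10.0.0/16@' \
    -e 's/^clusterName: .*/&\ncontrolPlaneEndpoint: 172.16.0.10:6443/' \
    "$f"
}
```

Usage: `kubeadm config print init-defaults > kubeadm-init.yml && customize_kubeadm_defaults kubeadm-init.yml`. The key order differs slightly from the listing above, which does not matter to YAML.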

Deploy the cluster

kubeadm init --config=kubeadm-init.yml --ignore-preflight-errors=NumCPU

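Author: 闫世成

Source: http://cnblogs.com/yanshicheng

The copyright of this article is shared by the author and cnblogs. Reposting is welcome, but unless the author agrees otherwise, this statement must be retained and a link to the original article must be given in a prominent position on the page. If you have any questions or suggestions, please contact the email address above. Thank you very much.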