|NO.Z.00012|——————————|BigDataEnd|——|Hadoop&实时数仓.V12|——|项目.v12|ODS层处理|JSON日志数据格式|

一、json日志数据格式

### --- 样例sql

~~~ # 在mysql中插入数

mysql> INSERT INTO `yanqi_trade_orders` VALUES ('2', '23a0b124546', '121', '2', '0.12', '6331.00', '2', '0', '370203', '0', '0', '0', '1', '2020-06-28 16:55:02', '2020-06-28 16:55:02', '2020-10-21 22:54:32');### --- kafka中收到Canal的消息 {"data":[{"productId":"115908","productName":"索尼

~~~ # 在kafka消费者主题下查看采集到的数据

[root@hadoop02 ~]# kafka-console-consumer.sh --zookeeper hadoop02:2181/myKafka --topic canal --from-beginning

~~~ # 在mysql下insert插入操作采集到数据

{"data":[{"orderId":"2","orderNo":"23a0b124546","userId":"121","status":"2","productMoney":"0.12","totalMoney":"6331.0","payMethod":"2","isPay":"0","areaId":"370203","tradeSrc":"0","tradeType":"0","isRefund":"0","dataFlag":"1","createTime":"2020-06-28 16:55:02","payTime":"2020-06-28 16:55:02","modifiedTime":"2020-10-21 22:54:32"}],"database":"dwshow","es":1638097808000,"id":3,"isDdl":false,"mysqlType":{"orderId":"bigint(11)","orderNo":"varchar(20)","userId":"bigint(11)","status":"tinyint(4)","productMoney":"decimal(11,2)","totalMoney":"decimal(11,2)","payMethod":"tinyint(4)","isPay":"tinyint(4)","areaId":"int(11)","tradeSrc":"tinyint(4)","tradeType":"int(11)","isRefund":"tinyint(4)","dataFlag":"tinyint(4)","createTime":"varchar(25)","payTime":"varchar(25)","modifiedTime":"timestamp"},"old":null,"pkNames":["orderId"],"sql":"","sqlType":{"orderId":-5,"orderNo":12,"userId":-5,"status":-6,"productMoney":3,"totalMoney":3,"payMethod":-6,"isPay":-6,"areaId":4,"tradeSrc":-6,"tradeType":4,"isRefund":-6,"dataFlag":-6,"createTime":12,"payTime":12,"modifiedTime":93},"table":"yanqi_trade_orders","ts":1638097808750,"type":"INSERT"}

{"data":[{"orderId":"3","orderNo":"23a0b124546","userId":"35","status":"2","productMoney":"0.12","totalMoney":"1987.50","payMethod":"4","isPay":"0","areaId":"370203","tradeSrc":"0","tradeType":"0","isRefund":"0","dataFlag":"1","createTime":"2020-06-28 12:07:01","payTime":"2020-06-28 12:07:01","modifiedTime":"2020-10-21 22:54:34"}],"database":"dwshow","es":1638098012000,"id":4,"isDdl":false,"mysqlType":{"orderId":"bigint(11)","orderNo":"varchar(20)","userId":"bigint(11)","status":"tinyint(4)","productMoney":"decimal(11,2)","totalMoney":"decimal(11,2)","payMethod":"tinyint(4)","isPay":"tinyint(4)","areaId":"int(11)","tradeSrc":"tinyint(4)","tradeType":"int(11)","isRefund":"tinyint(4)","dataFlag":"tinyint(4)","createTime":"varchar(25)","payTime":"varchar(25)","modifiedTime":"timestamp"},"old":null,"pkNames":["orderId"],"sql":"","sqlType":{"orderId":-5,"orderNo":12,"userId":-5,"status":-6,"productMoney":3,"totalMoney":3,"payMethod":-6,"isPay":-6,"areaId":4,"tradeSrc":-6,"tradeType":4,"isRefund":-6,"dataFlag":-6,"createTime":12,"payTime":12,"modifiedTime":93},"table":"yanqi_trade_orders","ts":1638098012708,"type":"INSERT"}### --- 注意:

~~~ Canal将MySQL数据库所有的表数据都写入Kafka的同一个分区中,

~~~ 可以通过json文件中的table属性来获取表名。

~~~ 只要对数据表进行insert、update、delete操作,

~~~ 就会产生相应的日志,并且产生的日志就会通过Canal写入Kafka分区。

~~~ 这样就会有一个问题,当我们向某张表中添加数据,然后对其进行修改和删除操作,

~~~ 那么就会产生三条相应的日志,并且这三条日志都会写入Kafka分区。

~~~ 此时,数据表中已经删除了这条数据,但是Kafka中没有删除。

~~~ 这样就会造成Kafka与MySQL中数据不一致的情况。

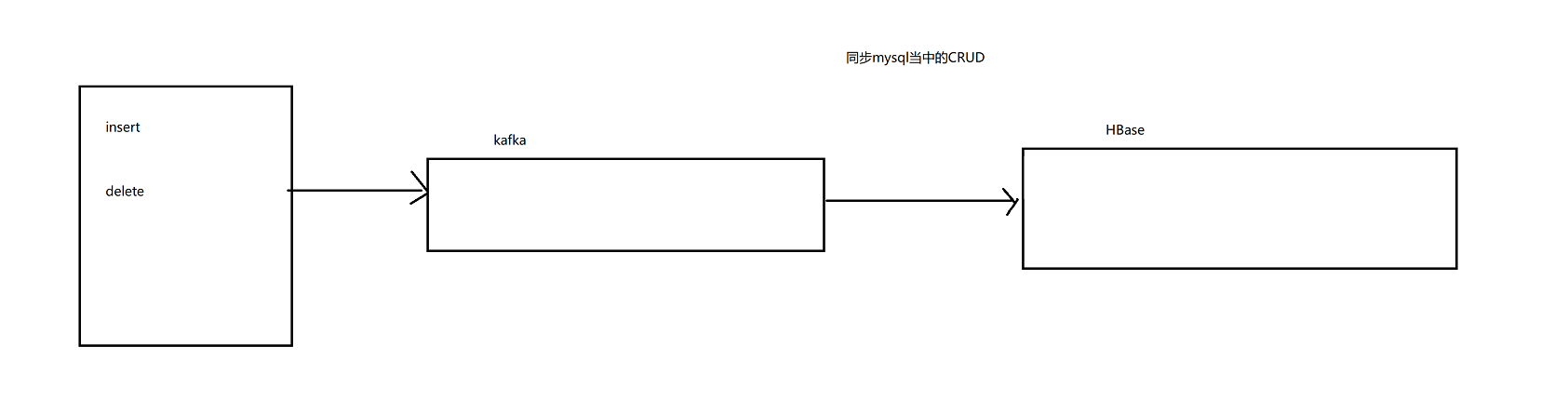

~~~ 解决上述问题可以通过解析Kafka中的json日志将数据写入HBase中,

~~~ 因为HBase中可以有多版本的数据。

~~~ 地域:省、市、区

Walter Savage Landor:strove with none,for none was worth my strife.Nature I loved and, next to Nature, Art:I warm'd both hands before the fire of life.It sinks, and I am ready to depart

——W.S.Landor

分类:

bdv026-EB实时数仓

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本

· Manus爆火,是硬核还是营销?

· 终于写完轮子一部分:tcp代理 了,记录一下

· 别再用vector<bool>了!Google高级工程师:这可能是STL最大的设计失误

· 单元测试从入门到精通