|NO.Z.00048|----------|BigDataEnd|--|Hadoop&Flink.V03|--|Flink.v03|Flink Connector|kafka|Source Code Understanding|Source Code Notes.V1|

I. Notes on the Extracted Source Code

### --- How does flink-kafka consume data, and how are partitions assigned?

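~~~ Walking through the source of the open method:

### --- Determining the offset commit mode

~~~ OffsetCommitMode: describes how offsets are committed externally back to Kafka brokers / Zookeeper. Its exact value is determined at runtime in the consumer subtasks.

~~~ # Source: OffsetCommitMode.java

~~~ # Lines 29-39

~~~ DISABLED: offset committing is completely disabled.

~~~ ON_CHECKPOINTS: offsets are committed back to Kafka only when a checkpoint completes.

~~~ KAFKA_PERIODIC: offsets are committed back to Kafka periodically, using the auto-commit feature of the internal Kafka client.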
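To make the three modes and how one of them is chosen concrete, here is a minimal standalone sketch that mirrors the decision logic of `OffsetCommitModes.fromConfiguration` from the source that follows. `CommitMode` and `CommitModeSelector` are hypothetical names used only for illustration, not Flink classes. The three inputs correspond to the auto-commit setting in the Kafka properties, whether commit-on-checkpoint is enabled on the consumer, and whether the job has checkpointing enabled.

```java
// Hypothetical standalone mirror of OffsetCommitModes.fromConfiguration; not a Flink class.
enum CommitMode { DISABLED, ON_CHECKPOINTS, KAFKA_PERIODIC }

final class CommitModeSelector {

    private CommitModeSelector() {}

    static CommitMode select(boolean enableAutoCommit,
                             boolean enableCommitOnCheckpoint,
                             boolean enableCheckpointing) {
        if (enableCheckpointing) {
            // With checkpointing on, only the commit-on-checkpoint flag matters.
            return enableCommitOnCheckpoint ? CommitMode.ON_CHECKPOINTS : CommitMode.DISABLED;
        }
        // Without checkpointing, only the Kafka client's auto-commit flag matters.
        return enableAutoCommit ? CommitMode.KAFKA_PERIODIC : CommitMode.DISABLED;
    }

    public static void main(String[] args) {
        boolean[] values = {false, true};
        // Print the selected mode for all eight flag combinations.
        for (boolean checkpointing : values) {
            for (boolean commitOnCheckpoint : values) {
                for (boolean autoCommit : values) {
                    System.out.printf("checkpointing=%b commitOnCheckpoint=%b autoCommit=%b -> %s%n",
                            checkpointing, commitOnCheckpoint, autoCommit,
                            select(autoCommit, commitOnCheckpoint, checkpointing));
                }
            }
        }
    }
}
```

Running `main` lists the mode for every flag combination. Note that once checkpointing is enabled, the auto-commit flag no longer has any effect.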

/**
 * The offset commit mode represents the behaviour of how offsets are externally committed
 * back to Kafka brokers / Zookeeper.
 *
 * <p>The exact value of this is determined at runtime in the consumer subtasks.
 */
@Internal
public enum OffsetCommitMode {

    /** Completely disable offset committing. */
    DISABLED,

    /** Commit offsets back to Kafka only when checkpoints are completed. */
    ON_CHECKPOINTS,

    /** Commit offsets periodically back to Kafka, using the auto commit functionality of internal Kafka clients. */
    KAFKA_PERIODIC;
}

~~~ # Source: OffsetCommitModes.java

~~~ # Lines 37-50

public static OffsetCommitMode fromConfiguration(
        boolean enableAutoCommit,
        boolean enableCommitOnCheckpoint,
        boolean enableCheckpointing) {

    if (enableCheckpointing) {
        // if checkpointing is enabled, the mode depends only on whether committing on checkpoints is enabled
        return (enableCommitOnCheckpoint) ? OffsetCommitMode.ON_CHECKPOINTS : OffsetCommitMode.DISABLED;
    } else {
        // else, the mode depends only on whether auto committing is enabled in the provided Kafka properties
        return (enableAutoCommit) ? OffsetCommitMode.KAFKA_PERIODIC : OffsetCommitMode.DISABLED;
    }
}

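~~~ fromConfiguration determines the offset commit mode from several configuration values:

~~~ If checkpointing is enabled and committing offsets on checkpoint completion is enabled, it returns ON_CHECKPOINTS.

~~~ If checkpointing is not enabled but auto-commit is enabled, it returns KAFKA_PERIODIC.

~~~ In all other cases it returns DISABLED.

### --- Next, create and start the partition discoverer

~~~ # Source: FlinkKafkaConsumerBase.java

~~~ # Lines 1040-1052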

/**
 * Creates the partition discoverer that is used to find new partitions for this subtask.
 *
 * @param topicsDescriptor Descriptor that describes whether we are discovering partitions for fixed topics or a topic pattern.
 * @param indexOfThisSubtask The index of this consumer subtask.
 * @param numParallelSubtasks The total number of parallel consumer subtasks.
 *
 * @return The instantiated partition discoverer
 */
protected abstract AbstractPartitionDiscoverer createPartitionDiscoverer(
        KafkaTopicsDescriptor topicsDescriptor,
        int indexOfThisSubtask,
        int numParallelSubtasks);

~~~ Creates the partition discoverer that this subtask uses to find new partitions.

~~~ Parameter 1: topicsDescriptor: describes whether partitions are discovered for fixed topics or for a topic pattern, i.e. it wraps fixedTopics and topicPattern. fixedTopics names the topics explicitly (so-called fixed topics), while topicPattern is a regular expression matched against topic names during partition discovery.

~~~ Parameter 2: indexOfThisSubtask: the index of this consumer subtask.

~~~ Parameter 3: numParallelSubtasks: the total number of parallel consumer subtasks.

~~~ The method returns an instance of the partition discoverer.

~~~ # Source: KafkaTopicsDescriptor.java

~~~ # Lines 31-36

/**
 * A Kafka Topics Descriptor describes how the consumer subscribes to Kafka topics -
 * either a fixed list of topics, or a topic pattern.
 */
@Internal
public class KafkaTopicsDescriptor implements Serializable {
    // ... rest of the class omitted ...
}

### --- Opening the partition discoverer and initializing all required Kafka connections

~~~ Note: thread safety is not guaranteed.
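The split between open() and initializeConnections() is a template method: the base class flips its own state, and the subclass creates the actual Kafka client. Below is a minimal sketch of that lifecycle, assuming a simplified `TopicPartition` value class and an ownership predicate in place of Flink's `KafkaTopicPartitionAssigner` check. `SketchPartitionDiscoverer`, `InMemoryDiscoverer` and `fetchAllPartitions` are hypothetical names. The sketch also models what, as far as I understand the Flink code, each discoverPartitions() call does: it returns only partitions that were not seen in an earlier round and that belong to this subtask. It throws `IllegalStateException` where Flink uses its own `ClosedException`, and like the original it is not thread-safe.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Objects;
import java.util.Set;
import java.util.function.Predicate;

/** Hypothetical simplified stand-in for Flink's KafkaTopicPartition. */
final class TopicPartition {
    final String topic;
    final int partition;

    TopicPartition(String topic, int partition) {
        this.topic = topic;
        this.partition = partition;
    }

    @Override
    public boolean equals(Object o) {
        return o instanceof TopicPartition
                && ((TopicPartition) o).partition == partition
                && ((TopicPartition) o).topic.equals(topic);
    }

    @Override
    public int hashCode() {
        return Objects.hash(topic, partition);
    }

    @Override
    public String toString() {
        return topic + "-" + partition;
    }
}

/** Sketch of the discoverer lifecycle; not thread-safe, like the original. */
abstract class SketchPartitionDiscoverer {
    // Stands in for "assign(partition, numParallelSubtasks) == indexOfThisSubtask".
    private final Predicate<TopicPartition> ownedByThisSubtask;
    private final Set<TopicPartition> discovered = new HashSet<>();
    private boolean closed = true;

    SketchPartitionDiscoverer(Predicate<TopicPartition> ownedByThisSubtask) {
        this.ownedByThisSubtask = ownedByThisSubtask;
    }

    /** Template method: mark the discoverer open, then let the subclass connect. */
    public final void open() {
        closed = false;
        initializeConnections();
    }

    public final void close() {
        closed = true;
    }

    /** Returns partitions not seen in any earlier call that this subtask owns. */
    public final List<TopicPartition> discoverPartitions() {
        if (closed) {
            throw new IllegalStateException("partition discoverer is closed");
        }
        List<TopicPartition> newPartitions = new ArrayList<>();
        for (TopicPartition tp : fetchAllPartitions()) {
            // Set.add returns false if the partition was already recorded earlier.
            if (discovered.add(tp) && ownedByThisSubtask.test(tp)) {
                newPartitions.add(tp);
            }
        }
        return newPartitions;
    }

    protected abstract void initializeConnections();

    protected abstract List<TopicPartition> fetchAllPartitions();
}

/** Hypothetical subclass backed by an in-memory partition list instead of a KafkaConsumer. */
final class InMemoryDiscoverer extends SketchPartitionDiscoverer {
    final List<TopicPartition> cluster = new ArrayList<>();

    InMemoryDiscoverer(Predicate<TopicPartition> ownedByThisSubtask) {
        super(ownedByThisSubtask);
    }

    @Override
    protected void initializeConnections() {
        // A Kafka-backed subclass would create its KafkaConsumer here.
    }

    @Override
    protected List<TopicPartition> fetchAllPartitions() {
        return new ArrayList<>(cluster);
    }
}
```

Calling discoverPartitions() repeatedly is what lets a consumer pick up partitions added after startup. The `discovered` set ensures that the same partition is never handed out twice, even when it belongs to another subtask.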

~~~ # Source: AbstractPartitionDiscoverer.java

~~~ # Lines 87-95

/**
 * Opens the partition discoverer, initializing all required Kafka connections.
 *
 * <p>NOTE: thread-safety is not guaranteed.
 */
public void open() throws Exception {
    closed = false;
    initializeConnections();
}

### --- Source for initializing all required Kafka connections:

~~~ # Source: AbstractPartitionDiscoverer.java

~~~ # Lines 210-211

/** Establish the required connections in order to fetch topics and partitions metadata. */
protected abstract void initializeConnections() throws Exception;

### --- KafkaPartitionDiscoverer:

~~~ Its initializeConnections() creates the KafkaConsumer object.

~~~ # Source: KafkaPartitionDiscoverer.java

@Override
protected void initializeConnections() {
    this.kafkaConsumer = new KafkaConsumer<>(kafkaProperties);
}

### --- subscribedPartitionsToStartOffsets = new HashMap<>();

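~~~ This initializes the map of subscribed partitions:

~~~ private Map<KafkaTopicPartition, Long> subscribedPartitionsToStartOffsets;

~~~ It holds the set of topic partitions this subtask will read, together with the initial offset from which each partition is read.

### --- Fetching the partitions of all fixedTopics and of all topics matching topicPattern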

~~~ # Source: AbstractPartitionDiscoverer.java

~~~ # Lines 118-124

/**
 * Execute a partition discovery attempt for this subtask.
 * This method lets the partition discoverer update what partitions it has discovered so far.
 *
 * @return List of discovered new partitions that this subtask should subscribe to.
 */
public List<KafkaTopicPartition> discoverPartitions() throws WakeupException, ClosedException {
    // ... method body omitted ...
}

### --- When the consumer restores state from a checkpoint, restoredState holds the offsets to restore; a TreeMap is used so that the entries are ordered

~~~ # Source: FlinkKafkaConsumerBase.java

~~~ # Lines 182-190

/**
 * The offsets to restore to, if the consumer restores state from a checkpoint.
 *
 * <p>This map will be populated by the {@link #initializeState(FunctionInitializationContext)} method.
 *
 * <p>Using a sorted map as the ordering is important when using restored state
 * to seed the partition discoverer.
 */
private transient volatile TreeMap<KafkaTopicPartition, Long> restoredState;

### --- It is populated in the initializeState method:

~~~ # Source: FlinkKafkaConsumerBase.java

~~~ # Lines 892-912

@Override
public final void initializeState(FunctionInitializationContext context) throws Exception {

    OperatorStateStore stateStore = context.getOperatorStateStore();

    this.unionOffsetStates = stateStore.getUnionListState(new ListStateDescriptor<>(OFFSETS_STATE_NAME,
            createStateSerializer(getRuntimeContext().getExecutionConfig())));

    if (context.isRestored()) {
        restoredState = new TreeMap<>(new KafkaTopicPartition.Comparator());

        // populate actual holder for restored state
        for (Tuple2<KafkaTopicPartition, Long> kafkaOffset : unionOffsetStates.get()) {
            restoredState.put(kafkaOffset.f0, kafkaOffset.f1);
        }

        LOG.info("Consumer subtask {} restored state: {}.", getRuntimeContext().getIndexOfThisSubtask(), restoredState);
    } else {
        LOG.info("Consumer subtask {} has no restore state.", getRuntimeContext().getIndexOfThisSubtask());
    }
}

### --- Recap of how context.isRestored() works: it returns true when state was restored from a snapshot of a previous execution, for example when the job recovers from a failure
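The effect of isRestored() on restoredState can be sketched in isolation. This sketch assumes a hypothetical `Tp` class in place of `KafkaTopicPartition`, and a comparator that orders by topic name and then by partition number, which is my reading of `KafkaTopicPartition.Comparator`. `RestoreSketch`, `entry` and `restore` are illustrative names. It shows two things: restoredState stays null on a fresh start, and after a restore the TreeMap iterates in a deterministic order regardless of the order in which the union list state returns its entries.

```java
import java.util.AbstractMap;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class RestoreSketch {

    /** Hypothetical stand-in for KafkaTopicPartition. */
    static final class Tp {
        final String topic;
        final int partition;

        Tp(String topic, int partition) {
            this.topic = topic;
            this.partition = partition;
        }

        @Override
        public String toString() {
            return topic + "-" + partition;
        }
    }

    // Assumed ordering: by topic name, then by partition number.
    static final Comparator<Tp> TP_ORDER =
            Comparator.comparing((Tp tp) -> tp.topic).thenComparingInt(tp -> tp.partition);

    /** Builds one (partition, offset) entry, like one element of the union list state. */
    static Map.Entry<Tp, Long> entry(String topic, int partition, long offset) {
        return new AbstractMap.SimpleEntry<Tp, Long>(new Tp(topic, partition), offset);
    }

    /** Returns null on a fresh start, otherwise a sorted copy of the restored offsets. */
    static TreeMap<Tp, Long> restore(boolean isRestored, List<Map.Entry<Tp, Long>> unionState) {
        if (!isRestored) {
            return null;
        }
        TreeMap<Tp, Long> restored = new TreeMap<>(TP_ORDER);
        for (Map.Entry<Tp, Long> e : unionState) {
            // TreeMap uses TP_ORDER for both ordering and key equality.
            restored.put(e.getKey(), e.getValue());
        }
        return restored;
    }
}
```

The null-versus-map distinction is what the later `if (restoredState != null)` check in open() relies on to choose between the restore path and the fresh-start path.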

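~~~ # Source: ManagedInitializationContext.java

~~~ # Lines 36-42

public interface ManagedInitializationContext {

    /**
     * Returns true, if state was restored from the snapshot of a previous execution. This returns always false for
     * stateless tasks.
     */
    boolean isRestored();

    // ... other methods omitted ...
}

~~~ In FlinkKafkaConsumerBase.open(), the subscription logic then branches on restoredState:

if (restoredState != null) {
    // restore from the snapshot ...
} else {
    // fresh start ...
}

### --- If restoredState holds no state for a partition, that partition must be consumed from the beginning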

~~~ # 源码提取说明:FlinkKafkaConsumerBase.java

~~~ # 第553~569行

final List<KafkaTopicPartition> allPartitions = partitionDiscoverer.discoverPartitions();

if (restoredState != null) {

for (KafkaTopicPartition partition : allPartitions) {

if (!restoredState.containsKey(partition)) {

restoredState.put(partition, KafkaTopicPartitionStateSentinel.EARLIEST_OFFSET);

}

}

for (Map.Entry<KafkaTopicPartition, Long> restoredStateEntry : restoredState.entrySet()) {

// seed the partition discoverer with the union state while filtering out

// restored partitions that should not be subscribed by this subtask

if (KafkaTopicPartitionAssigner.assign(

restoredStateEntry.getKey(), getRuntimeContext().getNumberOfParallelSubtasks())

== getRuntimeContext().getIndexOfThisSubtask()){

subscribedPartitionsToStartOffsets.put(restoredStateEntry.getKey(), restoredStateEntry.getValue());

}

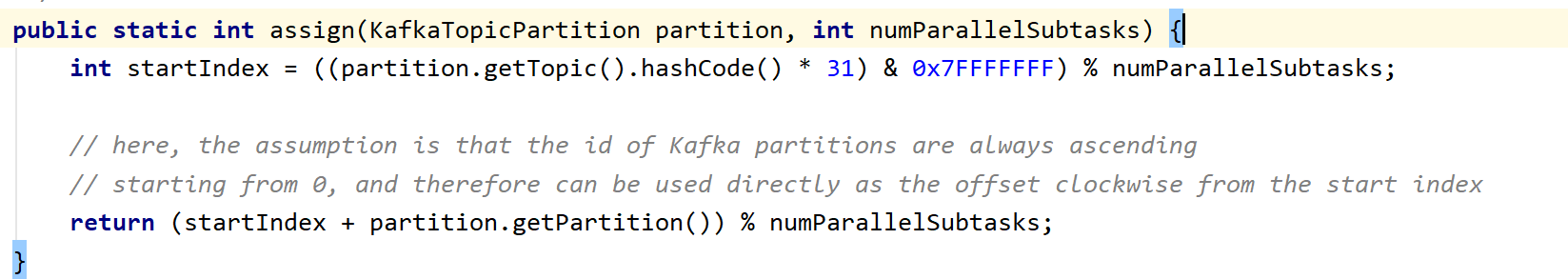

}### --- 过滤掉不归该subtask负责的partition分区

~~~ assign方法:返回应该分配给特定Kafka分区的目标子任务的索引
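The seeding and filtering loop from lines 553-569 can be sketched as follows. The assignment formula is my understanding of `KafkaTopicPartitionAssigner.assign`: a start index derived from the topic name's hash, with the partitions of that topic then placed round-robin from there. Verify it against the Flink version you use. `AssignSketch`, `Tp`, `seedAndFilter` and `EARLIEST_SENTINEL` are hypothetical, and the sentinel value is a placeholder, not the real `KafkaTopicPartitionStateSentinel.EARLIEST_OFFSET` constant.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.TreeMap;

final class AssignSketch {

    /** Hypothetical stand-in for Flink's KafkaTopicPartition. */
    static final class Tp {
        final String topic;
        final int partition;

        Tp(String topic, int partition) {
            this.topic = topic;
            this.partition = partition;
        }

        @Override
        public boolean equals(Object o) {
            return o instanceof Tp && ((Tp) o).partition == partition && ((Tp) o).topic.equals(topic);
        }

        @Override
        public int hashCode() {
            return Objects.hash(topic, partition);
        }
    }

    static final Comparator<Tp> TP_ORDER =
            Comparator.comparing((Tp tp) -> tp.topic).thenComparingInt(tp -> tp.partition);

    /** Placeholder for KafkaTopicPartitionStateSentinel.EARLIEST_OFFSET; the real value differs. */
    static final long EARLIEST_SENTINEL = Long.MIN_VALUE;

    /** Assumed rule: topic-dependent start index, then partitions laid out round-robin. */
    static int assign(String topic, int partition, int numParallelSubtasks) {
        // Masking the sign bit keeps the start index non-negative.
        int startIndex = ((topic.hashCode() * 31) & 0x7FFFFFFF) % numParallelSubtasks;
        // Partition ids are assumed to start at 0 and be consecutive.
        return (startIndex + partition) % numParallelSubtasks;
    }

    static Map<Tp, Long> seedAndFilter(TreeMap<Tp, Long> restoredState, List<Tp> allPartitions,
                                       int numParallelSubtasks, int indexOfThisSubtask) {
        // Partitions discovered now but absent from the snapshot start from the earliest offset.
        for (Tp tp : allPartitions) {
            restoredState.putIfAbsent(tp, EARLIEST_SENTINEL);
        }
        // Keep only the entries this subtask is responsible for.
        Map<Tp, Long> subscribed = new HashMap<>();
        for (Map.Entry<Tp, Long> entry : restoredState.entrySet()) {
            Tp tp = entry.getKey();
            if (assign(tp.topic, tp.partition, numParallelSubtasks) == indexOfThisSubtask) {
                subscribed.put(tp, entry.getValue());
            }
        }
        return subscribed;
    }
}
```

Every subtask evaluates the same deterministic function over the same union state. As a result, each partition ends up owned by exactly one subtask without any coordination, and consecutive partitions of one topic land on consecutive subtasks.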

~~~ subscribedPartitionsToStartOffsets.put(restoredStateEntry.getKey(), restoredStateEntry.getValue());

~~~ This copies the topic partitions held in restoredState, together with the offsets to start reading from, into subscribedPartitionsToStartOffsets.

~~~ Here restoredStateEntry.getKey() is a particular partition of a particular topic, and restoredStateEntry.getValue() is the offset from which that partition starts reading.

### --- Filtering out partitions whose topic name no longer matches the topicsDescriptor (e.g. its topicPattern)

~~~ # Source: FlinkKafkaConsumerBase.java

~~~ # Lines 571-581

if (filterRestoredPartitionsWithCurrentTopicsDescriptor) {
    subscribedPartitionsToStartOffsets.entrySet().removeIf(entry -> {
        if (!topicsDescriptor.isMatchingTopic(entry.getKey().getTopic())) {
            LOG.warn(
                    "{} is removed from subscribed partitions since it is no longer associated with topics descriptor of current execution.",
                    entry.getKey());
            return true;
        }
        return false;
    });
}
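The topic check used by `removeIf` above can be sketched with a simplified descriptor that holds either a fixed topic list or a regex. `TopicsDescriptorSketch`, its `Tp` class and `filterRestored` are hypothetical. The `isMatchingTopic` logic (list membership for fixed topics, a full regex match for a pattern) reflects my understanding of `KafkaTopicsDescriptor`, not a verified copy of it.

```java
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.regex.Pattern;

/** Hypothetical simplification of KafkaTopicsDescriptor: exactly one of the two fields is set. */
final class TopicsDescriptorSketch {

    /** Hypothetical stand-in for KafkaTopicPartition. */
    static final class Tp {
        final String topic;
        final int partition;

        Tp(String topic, int partition) {
            this.topic = topic;
            this.partition = partition;
        }

        @Override
        public boolean equals(Object o) {
            return o instanceof Tp && ((Tp) o).partition == partition && ((Tp) o).topic.equals(topic);
        }

        @Override
        public int hashCode() {
            return Objects.hash(topic, partition);
        }
    }

    private final List<String> fixedTopics; // null when subscribing by pattern
    private final Pattern topicPattern;     // null when subscribing to fixed topics

    TopicsDescriptorSketch(List<String> fixedTopics, Pattern topicPattern) {
        if ((fixedTopics == null) == (topicPattern == null)) {
            throw new IllegalArgumentException("set exactly one of fixedTopics and topicPattern");
        }
        this.fixedTopics = fixedTopics;
        this.topicPattern = topicPattern;
    }

    boolean isMatchingTopic(String topic) {
        // Fixed topics: exact membership; pattern: the whole topic name must match the regex.
        return fixedTopics != null ? fixedTopics.contains(topic) : topicPattern.matcher(topic).matches();
    }

    /** Removes restored entries whose topic this descriptor no longer covers. */
    void filterRestored(Map<Tp, Long> subscribedPartitionsToStartOffsets) {
        subscribedPartitionsToStartOffsets.entrySet().removeIf(entry -> {
            if (!isMatchingTopic(entry.getKey().topic)) {
                // Flink logs a warning at this point; the sketch prints to stderr instead.
                System.err.println(entry.getKey().topic + "-" + entry.getKey().partition
                        + " is removed from subscribed partitions");
                return true;
            }
            return false;
        });
    }
}
```

This filter matters when a job is restored from a snapshot after its subscription has changed. Offsets restored for topics that the current descriptor no longer covers are dropped rather than consumed.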
