|NO.Z.00043|——————————|BigDataEnd|——|Hadoop&Flink.V10|——|Flink.v10|Flink State|State principles|Principle analysis|State storage|State file format|

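1. State file format

### --- State file format

~~~ When we create state, how is the data actually stored?

~~~ Each state backend uses its own storage format.

~~~ All of them, however, use Flink serializers to turn keys and values into byte arrays before storing them.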
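To make "turning a value into a byte array" concrete, here is a minimal sketch in plain Java. It assumes that an `Integer` state value is written as a 4-byte big-endian int, which is what a `writeInt`-style serializer produces. The class `IntStateBytes` and its methods are hypothetical names used only for illustration; they are not part of Flink.

```java
import java.nio.ByteBuffer;

// Hypothetical illustration class, not a Flink API.
class IntStateBytes {

    // Encode an int as 4 bytes, big-endian (ByteBuffer's default byte order).
    static byte[] serialize(int value) {
        return ByteBuffer.allocate(4).putInt(value).array();
    }

    // Decode the 4 stored bytes back into the original int.
    static int deserialize(byte[] bytes) {
        return ByteBuffer.wrap(bytes).getInt();
    }

    // Render bytes as lowercase hex so the stored form can be inspected.
    static String toHex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            sb.append(String.format("%02x", b));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        byte[] stored = serialize(1423);
        System.out.println(toHex(stored));       // prints 0000058f
        System.out.println(deserialize(stored)); // prints 1423
    }
}
```

Whatever the state backend, these bytes are what ends up on disk or on the heap; the backend only decides where and how they are organized.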

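~~~ RocksDBStateBackend is used as the example here.

~~~ Each taskmanager creates several RocksDB directories, and each directory holds one RocksDB database.

~~~ Each database contains several column families, which are defined by the state descriptors.

~~~ Each column family contains many key-value pairs: the key is the serialized key of the keyed operator (Flink also encodes the key group and the namespace into it), and the value is the serialized state data for that key.

2. Implementing the state file format example in code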

### --- Implementing the state file format example in code

~~~ # TestFlink.java

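import java.util.Properties;
import java.util.Random;
import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.api.java.utils.ParameterTool;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010;

// KeyValue is a simple POJO with getKey()/getValue(); it is not shown in the original post.
public class TestFlink {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setStreamTimeCharacteristic(TimeCharacteristic.ProcessingTime);
        ParameterTool configuration = ParameterTool.fromArgs(args);
        FlinkKafkaConsumer010<String> kafkaConsumer010 = new FlinkKafkaConsumer010<String>(
                "test", new SimpleStringSchema(), getKafkaConsumerProperties("testing123"));
        DataStream<String> srcStream = env.addSource(kafkaConsumer010);
        Random random = new Random();
        DataStream<String> outStream = srcStream
                // Attach a random key so the records spread over many keys.
                .map(row -> new KeyValue("testing" + random.nextInt(100000), row))
                .keyBy(row -> row.getKey())
                .process(new StatefulProcess()).name("stateful_process").uid("stateful_process")
                .keyBy(row -> row.getKey())
                // The original listing broke off after keyBy; this call is restored from the
                // uid "stateful_map_test" and the StreamFlatMap directory shown further down.
                .flatMap(new StatefulMapTest()).name("stateful_map_test").uid("stateful_map_test");
        outStream.print();
        env.execute("Test Job");
    }

    public static Properties getKafkaConsumerProperties(String groupId) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", "localhost:9092");
        props.setProperty("group.id", groupId);
        return props;
    }
}

### --- This program contains two stateful operators: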

~~~ # StatefulMapTest.java

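import org.apache.flink.api.common.functions.RichFlatMapFunction;
import org.apache.flink.api.common.state.ValueState;
import org.apache.flink.api.common.state.ValueStateDescriptor;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.util.Collector;

public class StatefulMapTest extends RichFlatMapFunction<KeyValue, String> {

    // Last value seen for the current key.
    ValueState<Integer> previousInt;
    // Difference between the last two values (or the first value itself).
    ValueState<Integer> nextInt;

    @Override
    public void open(Configuration parameters) throws Exception {
        super.open(parameters);
        // The descriptor names become the RocksDB column family names.
        previousInt = getRuntimeContext().getState(new ValueStateDescriptor<Integer>("previousInt", Integer.class));
        nextInt = getRuntimeContext().getState(new ValueStateDescriptor<Integer>("nextInt", Integer.class));
    }

    @Override
    public void flatMap(KeyValue s, Collector<String> collector) throws Exception {
        try {
            Integer oldInt = Integer.parseInt(s.getValue());
            Integer newInt;
            if (previousInt.value() == null) {
                // First record for this key: emit it unchanged.
                newInt = oldInt;
                collector.collect("OLD INT: " + oldInt.toString());
            } else {
                // Later records: emit the difference to the previous value.
                newInt = oldInt - previousInt.value();
                collector.collect("NEW INT: " + newInt.toString());
            }
            nextInt.update(newInt);
            previousInt.update(oldInt);
        } catch (Exception e) {
            // Records that cannot be parsed as an int are logged and skipped.
            e.printStackTrace();
        }
    }
}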

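~~~ # StatefulProcess.java

import org.apache.flink.api.common.state.ValueState;
import org.apache.flink.api.common.state.ValueStateDescriptor;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.KeyedProcessFunction;
import org.apache.flink.util.Collector;

public class StatefulProcess extends KeyedProcessFunction<String, KeyValue, KeyValue> {

    // Last successfully parsed value for the current key.
    ValueState<Integer> processedInt;

    @Override
    public void open(Configuration parameters) throws Exception {
        super.open(parameters);
        processedInt = getRuntimeContext().getState(new ValueStateDescriptor<>("processedInt", Integer.class));
    }

    @Override
    public void processElement(KeyValue keyValue, Context context, Collector<KeyValue> collector) throws Exception {
        try {
            Integer a = Integer.parseInt(keyValue.getValue());
            processedInt.update(a);
            collector.collect(keyValue);
        } catch (Exception e) {
            // Records that cannot be parsed as an int are logged and dropped.
            e.printStackTrace();
        }
    }
}

~~~ # Set rocksdb as the state backend in flink-conf.yaml (for example, state.backend: rocksdb).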

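~~~ Each taskmanager then creates the directories below under the configured tmp directory.

~~~ In on-YARN mode the tmp directory is assigned by YARN,

~~~ typically /path/to/nm-localdir/usercache/user/appcache/application_xxx/flink-io-xxx.

~~~ # The generated directories: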

drwxr-xr-x 4 abc 74715970 128B Sep 23 03:19 job_127b2b84f80b368b8edfe02b2762d10d_op_KeyedProcessOperator_0d49016af99997646695a030f69aa7ee__1_1__uuid_65b50444-5857-4940-9f8c-77326cc79279/db

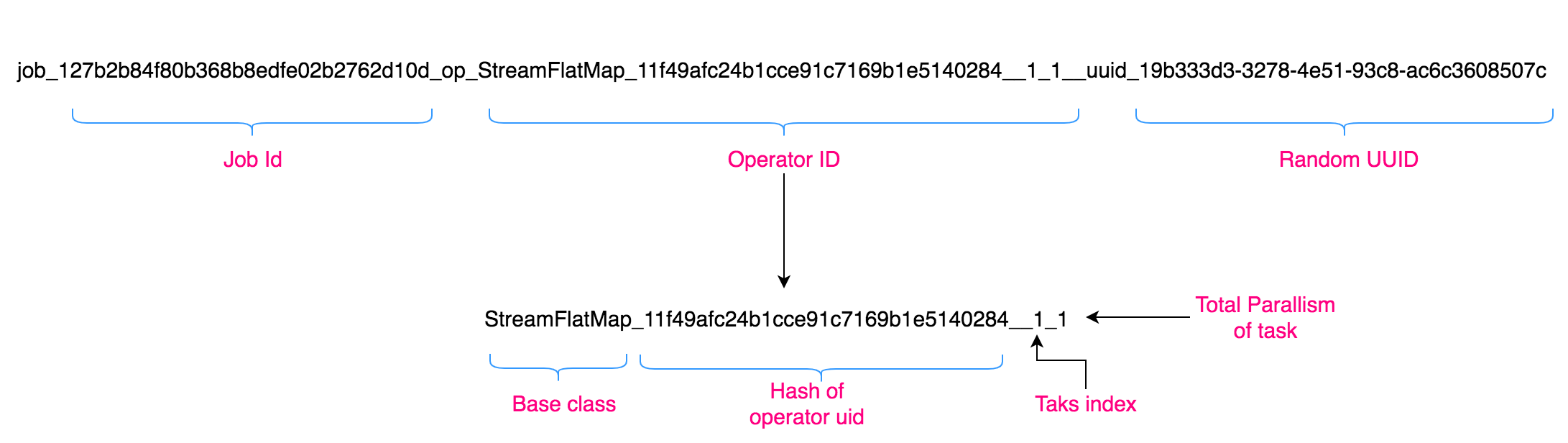

drwxr-xr-x 4 abc 74715970 128B Sep 23 03:20 job_127b2b84f80b368b8edfe02b2762d10d_op_StreamFlatMap_11f49afc24b1cce91c7169b1e5140284__1_1__uuid_19b333d3-3278-4e51-93c8-ac6c3608507c/db

3. Meaning of the directory name:

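### --- The name consists of roughly three parts:

~~~ JOB_ID: the random id assigned when the JobGraph is created

~~~ OPERATOR_ID: made up of four parts, {operator base class}_{Murmur3_128(operator uid)}__{task index}_{total parallelism}

### --- For the StatefulMapTest operator, the four parts are:

~~~ StreamFlatMap

~~~ Murmur3_128("stateful_map_test") -> 11f49afc24b1cce91c7169b1e5140284

~~~ 1, the task index; the total parallelism is 1, so this is the only task

~~~ 1, the total parallelism, which was set to 1

### --- UUID: a random UUID value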
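The naming scheme described above can be checked with a small parser. This is only a sketch: the regular expression is inferred from the two directory names shown in this post, not taken from Flink's source, and the class `StateDirName` is a hypothetical helper.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper that splits a RocksDB state directory name into its parts.
class StateDirName {

    // Layout assumed from the listing above:
    // job_{jobId}_op_{operatorClass}_{uidHash}__{taskIndex}_{parallelism}__uuid_{uuid}
    private static final Pattern LAYOUT = Pattern.compile(
            "^job_([0-9a-f]{32})_op_(.+)_([0-9a-f]{32})__(\\d+)_(\\d+)__uuid_([0-9a-f-]{36})$");

    final String jobId;
    final String operatorClass;
    final String operatorUidHash;
    final int taskIndex;
    final int parallelism;
    final String uuid;

    private StateDirName(Matcher m) {
        this.jobId = m.group(1);
        this.operatorClass = m.group(2);
        this.operatorUidHash = m.group(3);
        this.taskIndex = Integer.parseInt(m.group(4));
        this.parallelism = Integer.parseInt(m.group(5));
        this.uuid = m.group(6);
    }

    // Parse a directory name (without the trailing "/db"); reject anything that does not fit.
    static StateDirName parse(String dirName) {
        Matcher m = LAYOUT.matcher(dirName);
        if (!m.matches()) {
            throw new IllegalArgumentException("Unexpected directory name: " + dirName);
        }
        return new StateDirName(m);
    }

    public static void main(String[] args) {
        StateDirName d = parse("job_127b2b84f80b368b8edfe02b2762d10d_op_StreamFlatMap_"
                + "11f49afc24b1cce91c7169b1e5140284__1_1__uuid_19b333d3-3278-4e51-93c8-ac6c3608507c");
        System.out.println(d.jobId);           // 127b2b84f80b368b8edfe02b2762d10d
        System.out.println(d.operatorClass);   // StreamFlatMap
        System.out.println(d.operatorUidHash); // 11f49afc24b1cce91c7169b1e5140284
        System.out.println(d.taskIndex + "/" + d.parallelism); // 1/1
    }
}
```

A helper like this is handy when several jobs share one tmp directory and you need to find the database that belongs to a given operator uid hash.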

~~~ # Each directory contains one RocksDB instance with the following file layout:

-rw-r--r-- 1 abc 74715970 21K Sep 23 03:20 000011.sst

-rw-r--r-- 1 abc 74715970 21K Sep 23 03:20 000012.sst

-rw-r--r-- 1 abc 74715970 0B Sep 23 03:36 000015.log

-rw-r--r-- 1 abc 74715970 16B Sep 23 03:36 CURRENT

-rw-r--r-- 1 abc 74715970 33B Sep 23 03:18 IDENTITY

-rw-r--r-- 1 abc 74715970 0B Sep 23 03:33 LOCK

-rw-r--r-- 1 abc 74715970 34K Sep 23 03:36 LOG

-rw-r--r-- 1 abc 74715970 339B Sep 23 03:36 MANIFEST-000014

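-rw-r--r-- 1 abc 74715970 10K Sep 23 03:36 OPTIONS-000017

~~~ The .sst files are the SSTables generated by RocksDB; they contain the actual state data.

~~~ The LOG file is RocksDB's informational log; the write-ahead (commit) log is the numbered .log file, 000015.log in this listing.

~~~ The MANIFEST file contains metadata, for example the column families.

~~~ The OPTIONS file contains the configuration used when the RocksDB instance was created.

### --- We can open these files with the RocksDB Java API: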

~~~ # FlinkRocksDb.java

import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import org.rocksdb.ColumnFamilyDescriptor;
import org.rocksdb.ColumnFamilyHandle;
import org.rocksdb.ColumnFamilyOptions;
import org.rocksdb.DBOptions;
import org.rocksdb.RocksDB;

public class FlinkRocksDb {

    public static void main(String[] args) throws Exception {
        RocksDB.loadLibrary();
        // Column family names are the names given to the ValueStateDescriptors.
        String previousIntColumnFamily = "previousInt";
        byte[] previousIntColumnFamilyBA = previousIntColumnFamily.getBytes(StandardCharsets.UTF_8);
        String nextIntColumnFamily = "nextInt";
        byte[] nextIntColumnFamilyBA = nextIntColumnFamily.getBytes(StandardCharsets.UTF_8);
        try (final ColumnFamilyOptions cfOpts = new ColumnFamilyOptions().optimizeUniversalStyleCompaction()) {
            // List of column family descriptors; the first entry must always be the default column family.
            final List<ColumnFamilyDescriptor> cfDescriptors = Arrays.asList(
                    new ColumnFamilyDescriptor(RocksDB.DEFAULT_COLUMN_FAMILY, cfOpts),
                    new ColumnFamilyDescriptor(previousIntColumnFamilyBA, cfOpts),
                    new ColumnFamilyDescriptor(nextIntColumnFamilyBA, cfOpts)
            );
            // Holds the handles for the column families once the db is opened.
            final List<ColumnFamilyHandle> columnFamilyHandleList = new ArrayList<>();
            String dbPath = "/Users/abc/job_127b2b84f80b368b8edfe02b2762d10d_op" + "_StreamFlatMap_11f49afc24b1cce91c7169b1e5140284__1_1__uuid_19b333d3-3278-4e51-93c8-ac6c3608507c/db/";
            try (final DBOptions options = new DBOptions()
                         .setCreateIfMissing(true)
                         .setCreateMissingColumnFamilies(true);
                 final RocksDB db = RocksDB.open(options, dbPath, cfDescriptors, columnFamilyHandleList)) {
                try {
                    for (ColumnFamilyHandle columnFamilyHandle : columnFamilyHandleList) {
                        // Some RocksDB versions do not provide getName().
                        byte[] name = columnFamilyHandle.getName();
                        // Print one name per line.
                        System.out.println(new String(name, StandardCharsets.UTF_8));
                    }
                } finally {
                    // NOTE: free the column family handles before freeing the db.
                    for (final ColumnFamilyHandle columnFamilyHandle : columnFamilyHandleList) {
                        columnFamilyHandle.close();
                    }
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

~~~ # The program above prints:

default

previousInt

nextInt

### --- We can also print the key-value pairs in each column family:

~~~ The snippet below prints key-value pairs such as (testing123, 1423), (testing456, 1212) ...

~~~ # RocksdbKVIterator.java

// Fragment: runs where db and columnFamilyHandle from FlinkRocksDb.java are in scope.
TypeInformation<Integer> resultType = TypeExtractor.createTypeInfo(Integer.class);
TypeSerializer<Integer> serializer = resultType.createSerializer(new ExecutionConfig());
try (RocksIterator iterator = db.newIterator(columnFamilyHandle)) {
    iterator.seekToFirst();
    // Throws a RocksDBException if the iterator ran into an error.
    iterator.status();
    while (iterator.isValid()) {
        // The raw key is Flink's composite key (key group, key, namespace),
        // so the output may contain a few non-printable bytes around the key text.
        byte[] key = iterator.key();
        System.out.write(key);
        // TestInputView is the author's helper (not shown) that wraps a byte[] as a DataInputView;
        // Flink's DataInputDeserializer should be usable for the same purpose.
        System.out.println(serializer.deserialize(new TestInputView(iterator.value())));
        iterator.next();
    }
}
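To see why the iterator returns pairs in a fixed order, it can help to model a column family as a map sorted by raw key bytes, which is how RocksDB's default bytewise comparator orders entries. The sketch below is a simplification in plain Java (Java 9+ for `Arrays.compareUnsigned`). It assumes keys are plain UTF-8 strings and values are 4-byte big-endian ints; real Flink keys also carry a key-group prefix and a namespace. `ColumnFamilyModel` is a hypothetical name, not a RocksDB or Flink class.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory stand-in for one column family.
class ColumnFamilyModel {

    // Unsigned lexicographic byte order, mirroring a bytewise comparator.
    private static final Comparator<byte[]> BYTEWISE = Arrays::compareUnsigned;

    private final TreeMap<byte[], byte[]> entries = new TreeMap<>(BYTEWISE);

    // Store the key as UTF-8 bytes and the value as a 4-byte big-endian int.
    void put(String key, int value) {
        entries.put(key.getBytes(StandardCharsets.UTF_8),
                ByteBuffer.allocate(4).putInt(value).array());
    }

    // Walk the entries in key order and decode them, like seekToFirst()/next() on an iterator.
    List<String> dump() {
        List<String> out = new ArrayList<>();
        for (Map.Entry<byte[], byte[]> e : entries.entrySet()) {
            String key = new String(e.getKey(), StandardCharsets.UTF_8);
            int value = ByteBuffer.wrap(e.getValue()).getInt();
            out.add("(" + key + ", " + value + ")");
        }
        return out;
    }

    public static void main(String[] args) {
        ColumnFamilyModel previousInt = new ColumnFamilyModel();
        previousInt.put("testing456", 1212);
        previousInt.put("testing123", 1423);
        // Insertion order does not matter; iteration follows byte order of the keys.
        System.out.println(previousInt.dump()); // [(testing123, 1423), (testing456, 1212)]
    }
}
```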
