|NO.Z.00086|——————————|BigDataEnd|——|Hadoop&Spark.V02|——|Spark.v02|Spark internals and source code|Job execution principles & job triggering|

I. Job Execution Principles

### --- Job triggering

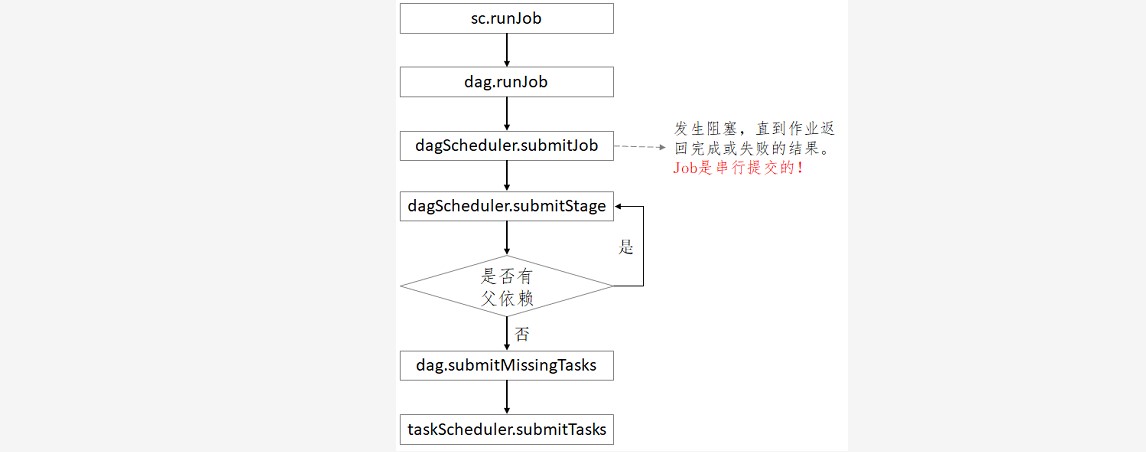

~~~ Calling an Action triggers the computation of a Job, which is handed to the DAGScheduler for submission.
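The idea that only an Action triggers a Job can be illustrated with a small toy model that needs nothing but the Scala standard library. This is a sketch under stated assumptions, not Spark code: `MiniRDD` and `MiniScheduler` are hypothetical names invented for the illustration, and the "scheduler" here simply evaluates the recipe on the calling thread.

```scala
// Toy model only: MiniRDD and MiniScheduler are hypothetical names, not Spark classes.
object LazyActionDemo {

  // Stand-in for a scheduler: counts how many jobs it has been asked to run.
  object MiniScheduler {
    var jobsSubmitted = 0

    def runJob[T](compute: () => Iterator[T]): List[T] = {
      jobsSubmitted += 1
      compute().toList
    }
  }

  // A dataset described by a recipe; nothing is computed until an action runs.
  final class MiniRDD[T](compute: () => Iterator[T]) {
    // Transformation: only wraps the recipe and returns a new MiniRDD.
    def map[U](f: T => U): MiniRDD[U] = new MiniRDD(() => compute().map(f))

    // Action: hands the recipe to the scheduler, which runs one job.
    def collect(): List[T] = MiniScheduler.runJob(compute)
  }

  def main(args: Array[String]): Unit = {
    val doubled = new MiniRDD(() => Iterator(1, 2, 3)).map(_ * 2)
    println(s"jobs after map: ${MiniScheduler.jobsSubmitted}")     // prints "jobs after map: 0"
    val result = doubled.collect()
    println(s"jobs after collect: ${MiniScheduler.jobsSubmitted}") // prints "jobs after collect: 1"
    println(result)                                                // prints "List(2, 4, 6)"
  }
}
```

In the toy model `map` only builds a new recipe, while `collect` is the single place that reaches the scheduler. That mirrors the division of labour described above, with the real scheduling work left out.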

II. Job Execution Principles

### --- Job execution principles

~~~ An Action triggers sc.runJob

~~~ sc.runJob in turn triggers dagScheduler.runJob

~~~ When spark.logLineage is true, calling an Action logs the RDD's lineage information (rdd.toDebugString).

~~~ # Source excerpt: SparkContext.scala

~~~ # Lines 2047-2064

    def runJob[T, U: ClassTag](
        rdd: RDD[T],
        func: (TaskContext, Iterator[T]) => U,
        partitions: Seq[Int],
        resultHandler: (Int, U) => Unit): Unit = {
      // Refuse to run jobs on a stopped SparkContext
      if (stopped.get()) {
        throw new IllegalStateException("SparkContext has been shutdown")
      }
      val callSite = getCallSite
      val cleanedFunc = clean(func)
      logInfo("Starting job: " + callSite.shortForm)
      // Log the RDD lineage only when spark.logLineage is enabled (default: false)
      if (conf.getBoolean("spark.logLineage", false)) {
        logInfo("RDD's recursive dependencies:\n" + rdd.toDebugString)
      }
      // Hand the job over to the DAGScheduler
      dagScheduler.runJob(rdd, cleanedFunc, partitions, callSite, resultHandler, localProperties.get)
      progressBar.foreach(_.finishAll())
      rdd.doCheckpoint()
    }

### --- dagScheduler.runJob submits the job

~~~ After the job is submitted the call blocks and waits for the result, so jobs submitted from the same thread run serially.

    def runJob[T, U](
        rdd: RDD[T],
        func: (TaskContext, Iterator[T]) => U,
        partitions: Seq[Int],
        callSite: CallSite,
        resultHandler: (Int, U) => Unit,
        properties: Properties): Unit = {
      // Record the start time
      val start = System.nanoTime
      // Submit the job; submitJob is asynchronous and returns a JobWaiter immediately
      val waiter = submitJob(rdd, func, partitions, callSite, resultHandler, properties)
      val awaitPermission = null.asInstanceOf[scala.concurrent.CanAwait]
      // Block until the job has completed
      waiter.completionFuture.ready(Duration.Inf)(awaitPermission)
      // Inspect the outcome
      waiter.completionFuture.value.get match {
        case scala.util.Success(_) => // The job succeeded
          logInfo("Job %d finished: %s, took %f s".format
            (waiter.jobId, callSite.shortForm, (System.nanoTime - start) / 1e9))
        case scala.util.Failure(exception) => // The job failed
          logInfo("Job %d failed: %s, took %f s".format
            (waiter.jobId, callSite.shortForm, (System.nanoTime - start) / 1e9))
          // SPARK-8644: Include user stack trace in exceptions coming from DAGScheduler.
          // Append the calling thread's stack trace to the exception
          val callerStackTrace = Thread.currentThread().getStackTrace.tail
          exception.setStackTrace(exception.getStackTrace ++ callerStackTrace)
          // Rethrow the exception to the caller
          throw exception
      }
    }
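The "asynchronous submit, blocking wait" pattern in the excerpt above can be tried out in isolation with the Scala standard library. The following is a minimal sketch under stated assumptions, not Spark's implementation: `MiniWaiter`, and the `submitJob` and `runJob` defined here, are hypothetical stand-ins, the job is an ordinary function run on a plain thread, and `Await.ready` is used in place of the direct `completionFuture.ready(...)` call seen in the source.

```scala
import scala.concurrent.{Await, Future, Promise}
import scala.concurrent.duration.Duration
import scala.util.{Failure, Success, Try}

// Toy model only: MiniWaiter, submitJob and runJob are hypothetical stand-ins,
// not Spark's JobWaiter or DAGScheduler.
object BlockingSubmitDemo {

  final class MiniWaiter(val jobId: Int, val completionFuture: Future[Unit])

  // Asynchronous submit: starts the work on another thread and returns at once.
  def submitJob(jobId: Int, work: () => Unit): MiniWaiter = {
    val promise = Promise[Unit]()
    val worker = new Thread(new Runnable {
      // Try captures non-fatal exceptions, so the promise ends as Success or Failure.
      def run(): Unit = promise.complete(Try(work()))
    })
    worker.setDaemon(true)
    worker.start()
    new MiniWaiter(jobId, promise.future)
  }

  // Blocking wrapper: submit, wait for completion, then report or rethrow.
  def runJob(jobId: Int, work: () => Unit): Unit = {
    val start = System.nanoTime
    val waiter = submitJob(jobId, work)
    // Block the calling thread until the job has completed.
    Await.ready(waiter.completionFuture, Duration.Inf)
    val seconds = (System.nanoTime - start) / 1e9
    waiter.completionFuture.value.get match {
      case Success(_) =>
        println(f"Job ${waiter.jobId} finished, took $seconds%.3f s")
      case Failure(exception) =>
        println(f"Job ${waiter.jobId} failed, took $seconds%.3f s")
        // Append the caller's stack trace so the failure points back to the call site.
        val callerStackTrace = Thread.currentThread().getStackTrace.tail
        exception.setStackTrace(exception.getStackTrace ++ callerStackTrace)
        throw exception
    }
  }

  def main(args: Array[String]): Unit = {
    val events = scala.collection.mutable.ArrayBuffer.empty[String]
    runJob(0, () => { Thread.sleep(50); events += "job 0 done"; () })
    events += "caller resumed after job 0"
    runJob(1, () => { events += "job 1 done"; () })
    println(events.mkString(" -> "))
  }
}
```

Because `runJob` does not return until the future has completed, the caller's second `runJob` call cannot start before the first job is done. That is the sense in which jobs submitted from one thread run one after another. A caller that wants overlapping jobs would have to call `runJob` from several threads or use the non-blocking `submitJob` directly.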