|NO.Z.00015|——————————|^^ Configuration ^^|——|Hadoop&Spark.V03|——|Spark.v03|sparkcore|RDD programming & SparkContext creation|

1. Configuring a Spark Standalone cluster in non-HA mode

### --- Edit the configuration file

[root@hadoop02 ~]# vim $SPARK_HOME/conf/spark-env.sh

export JAVA_HOME=/opt/yanqi/servers/jdk1.8.0_231

export HADOOP_HOME=/opt/yanqi/servers/hadoop-2.9.2

export HADOOP_CONF_DIR=/opt/yanqi/servers/hadoop-2.9.2/etc/hadoop

export SPARK_DIST_CLASSPATH=$(/opt/yanqi/servers/hadoop-2.9.2/bin/hadoop classpath)

export SPARK_MASTER_HOST=hadoop02 # uncomment these two lines

export SPARK_MASTER_PORT=7077 # uncomment these two lines

export SPARK_WORKER_CORES=1

export SPARK_WORKER_MEMORY=1g

export SPARK_HISTORY_OPTS="-Dspark.history.ui.port=18080 -Dspark.history.retainedApplications=50 -Dspark.history.fs.logDirectory=hdfs://Hadoop01:9000/spark-eventlog"

~~~ # Comment out the ZooKeeper configuration (it is only needed for HA mode)

# export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=hadoop01,hadoop02,hadoop03 -Dspark.deploy.zookeeper.dir=/spark"

~~~ # Distribute the modified file to the other nodes

[root@hadoop02 ~]# rsync-script $SPARK_HOME/conf/spark-env.sh

### --- Start the services

~~~ # Start the HDFS service

[root@hadoop01 ~]# start-dfs.sh

~~~ # Start the Spark Standalone service

[root@hadoop02 ~]# start-all-spark.sh

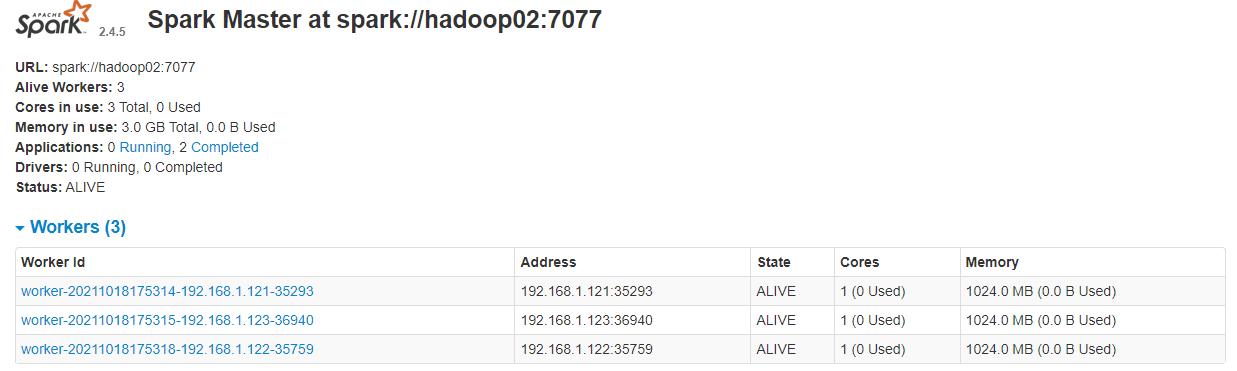

~~~ # Access the Spark Standalone web UI at http://hadoop02:8080/

### --- Verify the cluster

[root@hadoop02 ~]# run-example SparkPi 10

~~~ # Check the output

Pi is roughly 3.1412511412511415

~~~ # Run a word count in spark-shell

scala> val lines = sc.textFile("/wcinput/wc.txt")

lines: org.apache.spark.rdd.RDD[String] = /wcinput/wc.txt MapPartitionsRDD[1] at textFile at <console>:24

scala> lines.flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).collect().foreach(println)

(#在文件中输入如下内容,1)

(yanqi,3)

(mapreduce,3)

(yarn,2)

(hadoop,2)

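(hdfs,1)

~~~ # The first tuple is a comment line from wc.txt itself ("# enter the following content in the file"); it contains no spaces, so it is counted as a single word

2. Creating the SparkContext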

### --- SparkContext

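~~~ SparkContext is the first class a Spark program uses. It is the main entry point to Spark and handles all interaction with the cluster;

~~~ # Excerpt from the source documentation: SparkContext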

/**

* Main entry point for Spark functionality. A SparkContext represents the connection to a Spark

* cluster, and can be used to create RDDs, accumulators and broadcast variables on that cluster.

*

* Only one SparkContext may be active per JVM. You must `stop()` the active SparkContext before

* creating a new one. This limitation may eventually be removed; see SPARK-2243 for more details.

*

* @param config a Spark Config object describing the application configuration. Any settings in

* this config overrides the default configs as well as system properties.

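*/

~~~ # If the Spark cluster is treated as the server, the Driver is the client, and SparkContext is the core of that client;

~~~ SparkContext is Spark's external interface: it exposes Spark's functionality to the caller;

~~~ SparkContext is used to connect to the Spark cluster and to create RDDs, accumulators and broadcast variables;

~~~ In spark-shell the SparkContext has already been created and can be used directly;

3. Creating RDDs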

### --- The first step in writing a Spark Driver program is to create a SparkContext
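Outside spark-shell, the driver has to build the SparkContext itself. The following is a minimal sketch rather than a definitive template: the object name `SparkContextSketch`, the helper `sumTo` and the app name are hypothetical, and it assumes the Spark 2.4.x libraries are on the classpath. It uses `local[*]` so that it runs without a cluster; on the cluster configured earlier in this post the master URL would be `spark://hadoop02:7077`.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical driver program; the object, helper and app names are illustrative only.
object SparkContextSketch {

  // Sums 1..n on an RDD created through the given SparkContext.
  def sumTo(sc: SparkContext, n: Int): Int =
    sc.parallelize(1 to n).reduce(_ + _)

  def main(args: Array[String]): Unit = {
    // Assumption: local mode. "local[*]" runs Spark inside this JVM.
    val conf = new SparkConf()
      .setAppName("SparkContextSketch")
      .setMaster("local[*]")

    val sc = new SparkContext(conf)
    try {
      println(sumTo(sc, 10)) // prints 55
    } finally {
      // Only one SparkContext may be active per JVM, so stop it when done.
      sc.stop()
    }
  }
}
```

Stopping the context in `finally` matters because, as the source comment quoted earlier says, the active SparkContext must be stopped before a new one can be created in the same JVM.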

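~~~ Recommendation: use Standalone mode or local mode to learn the RDD operators;

~~~ Neither HA nor IDEA is required

~~~ # In spark-shell the SparkContext has already been created, so it can be used there directly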

[root@hadoop02 ~]# spark-shell

Warning: Ignoring non-Spark config property: Example

21/10/18 17:15:39 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://hadoop02:4040

# --- Spark context available as 'sc' (master = spark://hadoop02:7077, app id = app-20211018171602-0000).

Spark session available as 'spark'.

Welcome to

      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.4.5
      /_/

Using Scala version 2.12.10 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_231)

Type in expressions to have them evaluated.

Type :help for more information.

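scala>

4. Creating RDDs from collections

### --- Creating RDDs from collections: mainly for testing

~~~ Creating an RDD from a collection is mainly used for testing. Spark provides these functions: parallelize, makeRDD, range

~~~ # Excerpt from the source: SparkContext.scala

~~~ # line 714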

def parallelize[T: ClassTag](
    seq: Seq[T],
    numSlices: Int = defaultParallelism): RDD[T] = withScope {
  assertNotStopped()
  new ParallelCollectionRDD[T](this, seq, numSlices, Map[Int, Seq[String]]())
}

~~~ # Excerpt from the source: SparkContext.scala

~~~ # line 733

def range(
    start: Long,
    end: Long,
    step: Long = 1,
    numSlices: Int = defaultParallelism): RDD[Long] = withScope {
  assertNotStopped()
  // when step is 0, range will run infinitely
  require(step != 0, "step cannot be 0")
  val numElements: BigInt = {
    val safeStart = BigInt(start)
    val safeEnd = BigInt(end)
    if ((safeEnd - safeStart) % step == 0 || (safeEnd > safeStart) != (step > 0)) {
      (safeEnd - safeStart) / step
    } else {
      // the remainder has the same sign with range, could add 1 more
      (safeEnd - safeStart) / step + 1
    }
  }
  // ... (the rest of the method is omitted from this excerpt)

~~~ # Excerpt from the source: SparkContext.scala

~~~ # line 800

def makeRDD[T: ClassTag](
    seq: Seq[T],
    numSlices: Int = defaultParallelism): RDD[T] = withScope {
  parallelize(seq, numSlices)
}

### --- Creating RDDs with the SparkContext
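The `numElements` computation in the `range` excerpt above determines how many values `sc.range` produces (the end value is exclusive). As a rough check in plain Scala, without Spark, the same arithmetic can be copied into a standalone helper. The object name `RangeCountSketch` and the function wrapper are hypothetical; only the body of the `if` comes from the excerpt.

```scala
// Hypothetical standalone copy of the numElements arithmetic from the range excerpt.
object RangeCountSketch {

  def numElements(start: Long, end: Long, step: Long): BigInt = {
    require(step != 0, "step cannot be 0")
    val safeStart = BigInt(start)
    val safeEnd = BigInt(end)
    if ((safeEnd - safeStart) % step == 0 || (safeEnd > safeStart) != (step > 0)) {
      (safeEnd - safeStart) / step
    } else {
      // A partial last step still contributes one more element.
      (safeEnd - safeStart) / step + 1
    }
  }

  def main(args: Array[String]): Unit = {
    // Same arguments as sc.range(1, 100, 3): 1, 4, ..., 97
    println(numElements(1, 100, 3)) // 33
    // Same arguments as sc.range(1, 100, 2, 10): 1, 3, ..., 99
    println(numElements(1, 100, 2)) // 50
    // Cross-check against an ordinary Scala range with an exclusive end.
    println((1L until 100L by 3L).size) // 33
  }
}
```

Note that `numSlices` does not appear in this calculation: the partition count affects how the values are distributed, not how many there are.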

~~~ # Create RDDs

scala> val rdd1 = sc.parallelize(Array(1,2,3,4,5))

rdd1: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at parallelize at <console>:24

scala> val rdd2 = sc.parallelize(1 to 100)

rdd2: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[1] at parallelize at <console>:24

~~~ # Check the number of partitions of the RDD

scala> rdd2.getNumPartitions

res2: Int = 3

scala> rdd2.partitions.length

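res3: Int = 3

~~~ # Create more RDDs; parallelize, makeRDD and range all accept the partition count as an optional last argument (numSlices), as rdd6 shows

~~~ Avoid calling rdd.collect on large RDDs in production: it pulls every element to the Driver and can cause a Driver OOM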

scala> val rdd2 = sc.parallelize(1 to 100)

rdd2: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[2] at parallelize at <console>:24

scala> rdd2.getNumPartitions

res4: Int = 3

scala> val rdd3 = sc.makeRDD(List(1,2,3,4,5))

rdd3: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[3] at makeRDD at <console>:24

scala> val rdd4 = sc.makeRDD(1 to 100)

rdd4: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[4] at makeRDD at <console>:24

scala> rdd4.getNumPartitions

res5: Int = 3

scala> val rdd5 = sc.range(1, 100, 3)

rdd5: org.apache.spark.rdd.RDD[Long] = MapPartitionsRDD[6] at range at <console>:24

scala> rdd5.getNumPartitions

res6: Int = 3

scala> val rdd6 = sc.range(1, 100, 2 ,10)

rdd6: org.apache.spark.rdd.RDD[Long] = MapPartitionsRDD[8] at range at <console>:24

scala> rdd6.getNumPartitions

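res7: Int = 10

5. Creating RDDs from a file system

### --- Creating RDDs from a file system

~~~ # Use the textFile() method to load data from a file system and create an RDD. The method takes the file's URI as its argument; the URI can be:

~~~ A path on the local file system

~~~ When using the local file system in Standalone mode, make sure the file exists on every node

~~~ An address on the HDFS distributed file system

~~~ An Amazon S3 address

6. Creating RDDs from a file system: examples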
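The examples in this section pass four kinds of path to `textFile`: an explicit `file://` URI, an explicit `hdfs://` URI, an absolute path without a scheme, and a relative path. With `HADOOP_CONF_DIR` set as in `spark-env.sh`, a path without a scheme is resolved against the default file system, and a relative path against the user's HDFS home directory. The sketch below imitates that resolution in plain Scala; it is not Hadoop's actual implementation. The object `PathQualifySketch`, the function `qualify`, and the values `hdfs://hadoop01:9000` and `/user/root` are assumptions taken from the paths shown in this post.

```scala
import java.net.URI

// Hypothetical imitation of how a path string is qualified; not Hadoop's real code.
object PathQualifySketch {

  // Assumptions based on the paths used in this post.
  val defaultFs = "hdfs://hadoop01:9000" // value of fs.defaultFS
  val homeDir = "/user/root"             // HDFS home directory of user root

  def qualify(path: String): String = {
    val uri = new URI(path)
    if (uri.getScheme != null) path                  // explicit scheme: used as-is
    else if (path.startsWith("/")) defaultFs + path  // absolute path on the default FS
    else s"$defaultFs$homeDir/$path"                 // relative to the home directory
  }

  def main(args: Array[String]): Unit = {
    val paths = Seq(
      "file:///root/data/wc.txt",
      "hdfs://hadoop01:9000/user/root/data/yanqi.dat",
      "/user/root/data/yanqi.dat",
      "data/yanqi.dat")
    paths.foreach(p => println(s"$p -> ${qualify(p)}"))
  }
}
```

Under these assumptions the last three paths all point to the same HDFS file, which is consistent with the three HDFS options in this section returning the same line count.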

### --- Creating an RDD from the local file system

~~~ # Load data from the local file system

scala> val lines = sc.textFile("file:///root/data/wc.txt")

lines: org.apache.spark.rdd.RDD[String] = file:///root/data/wc.txt MapPartitionsRDD[10] at textFile at <console>:24

scala> lines.count

~~~ # Error: FileNotFoundException: File file:/root/data/wc.txt does not exist

~~~ # The file exists only on the local disk of hadoop02, but the tasks run on executors across the Spark cluster, so not every host has the file

~~~ # Copy the data directory to all hosts

[root@hadoop02 ~]# rsync-script data/

scala> val lines = sc.textFile("file:///root/data/wc.txt")

lines: org.apache.spark.rdd.RDD[String] = file:///root/data/wc.txt MapPartitionsRDD[18] at textFile at <console>:24

scala> lines.count

res16: Long = 6

### --- Loading data from a distributed file system

~~~ # Load data from the distributed file system

~~~ # Option 1: full HDFS URI

scala> val lines = sc.textFile("hdfs://hadoop01:9000/user/root/data/yanqi.dat")

lines: org.apache.spark.rdd.RDD[String] = hdfs://hadoop01:9000/user/root/data/yanqi.dat MapPartitionsRDD[30] at textFile at <console>:24

scala> lines.count

res25: Long = 980

~~~ # Option 2: absolute path without a scheme

scala> val lines = sc.textFile("/user/root/data/yanqi.dat")

lines: org.apache.spark.rdd.RDD[String] = /user/root/data/yanqi.dat MapPartitionsRDD[32] at textFile at <console>:24

scala> lines.count

res23: Long = 980

~~~ # Option 3: relative path

scala> val lines = sc.textFile("data/yanqi.dat")

lines: org.apache.spark.rdd.RDD[String] = data/yanqi.dat MapPartitionsRDD[34] at textFile at <console>:24

scala> lines.count

res25: Long = 980

7. Creating an RDD from an RDD

### --- Creating an RDD from an RDD

~~~ In essence, this transforms one RDD into another. For details, see 3.5 Transformation
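As a small illustration of deriving one RDD from another, the sketch below chains two transformations, `map` and `filter`. It assumes Spark 2.4.x on the classpath and local mode; the object name `RddFromRddSketch` and the helper `doubledAboveFour` are hypothetical.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

// Hypothetical example; names are illustrative only.
object RddFromRddSketch {

  // Each transformation returns a new RDD; the source RDD itself is not modified.
  def doubledAboveFour(source: RDD[Int]): RDD[Int] =
    source.map(_ * 2).filter(_ > 4)

  def main(args: Array[String]): Unit = {
    // Assumption: local mode, so the example runs without a cluster.
    val conf = new SparkConf().setAppName("RddFromRddSketch").setMaster("local[*]")
    val sc = new SparkContext(conf)
    try {
      val rdd1 = sc.parallelize(1 to 5)
      val rdd2 = doubledAboveFour(rdd1)
      // collect() is acceptable for a five-element RDD; avoid it on large data.
      println(rdd2.collect().mkString(",")) // prints 6,8,10
    } finally {
      sc.stop()
    }
  }
}
```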
