|NO.Z.00020|——————————|BigDataEnd|——|Hadoop&kafka.V05|——|kafka.v05|分区器剖析.v01|

一、分区器剖析

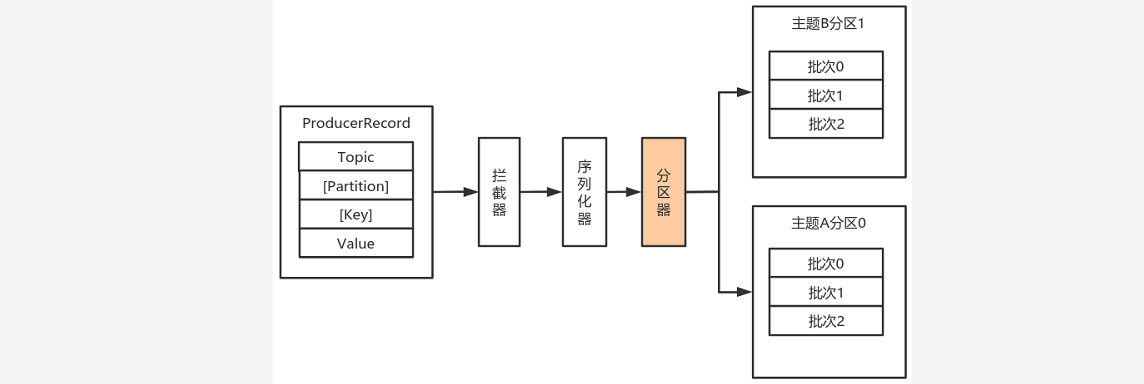

### --- 分区器

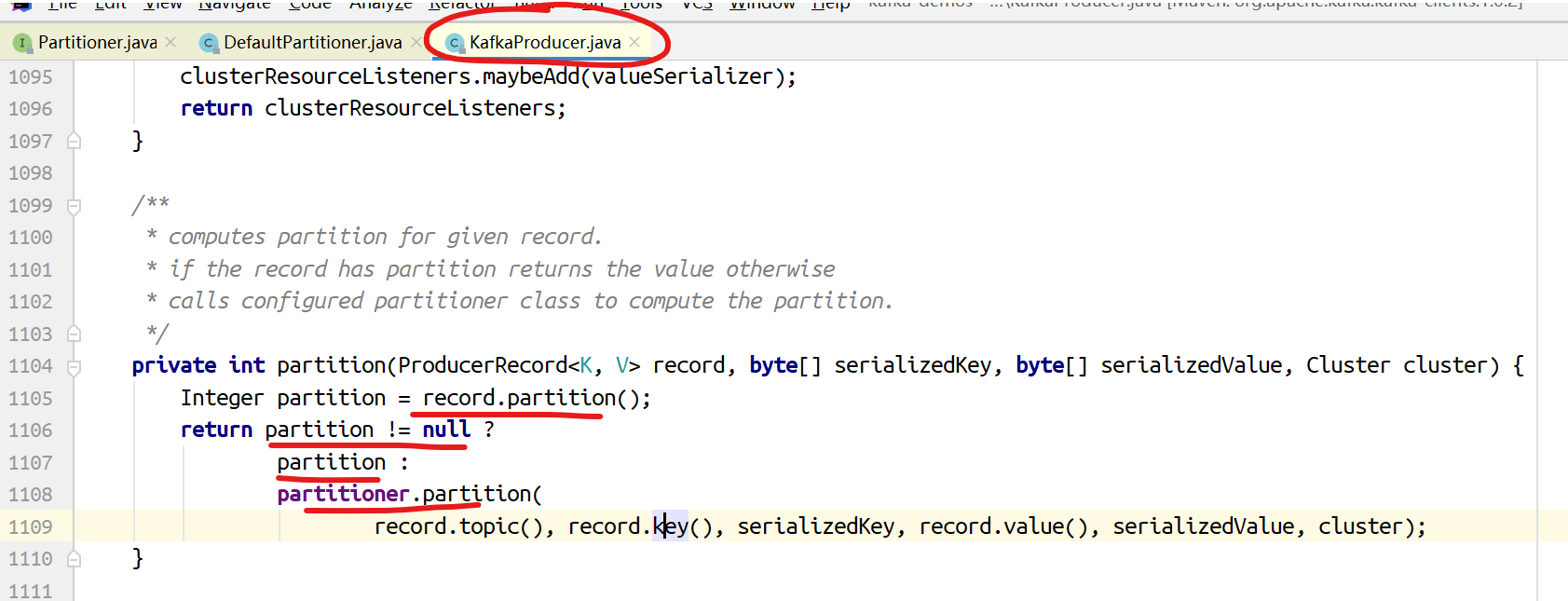

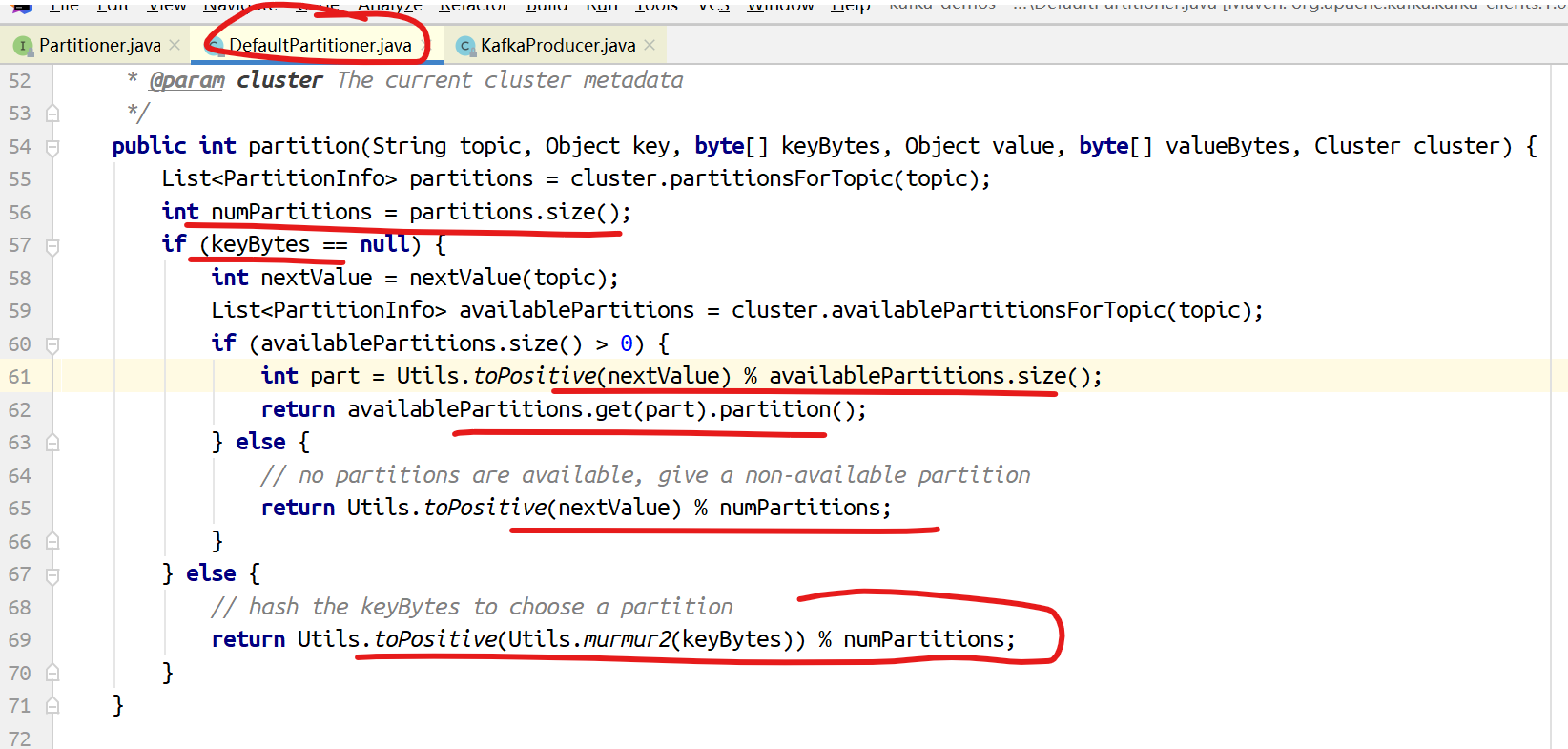

~~~ # 默认(DefaultPartitioner)分区计算:

~~~ 如果record提供了分区号,则使用record提供的分区号

~~~ 如果record没有提供分区号,则使用key的序列化后的值的hash值对分区数量取模

~~~ 如果record没有提供分区号,也没有提供key,则使用轮询的方式分配分区号。

~~~ 会首先在可用的分区中分配分区号

二、如果没有可用的分区,则在该主题所有分区中分配分区号。

/**

* The default partitioning strategy:

* <ul>

* <li>If a partition is specified in the record, use it

* <li>If no partition is specified but a key is present choose a partition based on a hash of the key

* <li>If no partition or key is present choose a partition in a round-robin fashion

*/

public int partition(String topic, Object key, byte[] keyBytes, Object value, byte[] valueBytes, Cluster cluster) {

List<PartitionInfo> partitions = cluster.partitionsForTopic(topic);

int numPartitions = partitions.size();

if (keyBytes == null) {

int nextValue = nextValue(topic);

List<PartitionInfo> availablePartitions = cluster.availablePartitionsForTopic(topic);

if (availablePartitions.size() > 0) {

int part = Utils.toPositive(nextValue) % availablePartitions.size();

return availablePartitions.get(part).partition();

} else {

// no partitions are available, give a non-available partition

return Utils.toPositive(nextValue) % numPartitions;

}

} else {

// hash the keyBytes to choose a partition

return Utils.toPositive(Utils.murmur2(keyBytes)) % numPartitions;

}

}三、自定义分区器

### --- 如果要自定义分区器,则需要

~~~ 首先开发Partitioner接口的实现类

~~~ 在KafkaProducer中进行设置:configs.put("partitioner.class", "xxx.xx.Xxx.class")

~~~ 位于org.apache.kafka.clients.producer 中的分区器接口:### --- 创建maven模块:demo-06-kafka-customPartitioner

~~~ 添加pom.xml依赖

<dependencies>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>1.0.2</version>

</dependency>

</dependencies>四、编程代码实现

### --- 自定义分区器的实现

package com.yanqi.kafka.demo.partitioner;

import org.apache.kafka.clients.producer.Partitioner;

import org.apache.kafka.common.Cluster;

import java.util.Map;

/**

* 自定义分区器

*/

public class MyPartitioner implements Partitioner {

@Override

public int partition(String topic, Object key, byte[] keyBytes, Object value, byte[] valueBytes, Cluster cluster) {

// 此处可以计算分区的数字。

// 我们直接指定为2

return 2;

}

@Override

public void close() {

}

@Override

public void configure(Map<String, ?> configs) {

}

}### --- 自定义分区器的实现

package producer;

import com.yanqi.kafka.demo.partitioner.MyPartitioner;

import org.apache.kafka.clients.producer.*;

import org.apache.kafka.common.serialization.StringSerializer;

import java.util.HashMap;

import java.util.Map;

public class MyProducer {

public static void main(String[] args) {

Map<String, Object> configs = new HashMap<>();

configs.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "node1:9092");

configs.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);

configs.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class);

// 指定自定义的分区器

configs.put(ProducerConfig.PARTITIONER_CLASS_CONFIG, MyPartitioner.class);

KafkaProducer<String, String> producer = new KafkaProducer<String, String>(configs);

// 此处不要设置partition的值

ProducerRecord<String, String> record = new ProducerRecord<String, String>(

"tp_part_01",

"mykey",

"myvalue"

);

producer.send(record, new Callback() {

@Override

public void onCompletion(RecordMetadata metadata, Exception exception) {

if (exception != null) {

System.out.println("消息发送失败");

} else {

System.out.println(metadata.topic());

System.out.println(metadata.partition());

System.out.println(metadata.offset());

}

}

});

// 关闭生产者

producer.close();

}

}五、编译打印

### --- 创建主题

[root@hadoop ~]# kafka-topics.sh --zookeeper localhost:2181/myKafka \

> --create --topic tp_part_01 --partitions 4 --replication-factor 1

[root@hadoop ~]# kafka-topics.sh --zookeeper localhost:2181/myKafka --list

tp_part_01### --- 启动消费者

[root@hadoop ~]# kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic tp_part_01

~~输出参数

myvalue //第一次消费的消息

myvalue //第二次消费的消息### --- 编译打印

D:\JAVA\jdk1.8.0_231\bin\java.exe "-javaagent:D:\IntelliJIDEA\IntelliJ IDEA 2019.3.3\lib\idea_rt.jar=54988:D:\IntelliJIDEA\IntelliJ IDEA 2019.3.3\bin" -Dfile.encoding=UTF-8 -classpath D:\JAVA\jdk1.8.0_231\jre\lib\charsets.jar;D:\JAVA\jdk1.8.0_231\jre\lib\deploy.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\access-bridge-64.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\cldrdata.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\dnsns.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\jaccess.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\jfxrt.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\localedata.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\nashorn.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\sunec.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\sunjce_provider.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\sunmscapi.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\sunpkcs11.jar;D:\JAVA\jdk1.8.0_231\jre\lib\ext\zipfs.jar;D:\JAVA\jdk1.8.0_231\jre\lib\javaws.jar;D:\JAVA\jdk1.8.0_231\jre\lib\jce.jar;D:\JAVA\jdk1.8.0_231\jre\lib\jfr.jar;D:\JAVA\jdk1.8.0_231\jre\lib\jfxswt.jar;D:\JAVA\jdk1.8.0_231\jre\lib\jsse.jar;D:\JAVA\jdk1.8.0_231\jre\lib\management-agent.jar;D:\JAVA\jdk1.8.0_231\jre\lib\plugin.jar;D:\JAVA\jdk1.8.0_231\jre\lib\resources.jar;D:\JAVA\jdk1.8.0_231\jre\lib\rt.jar;E:\NO.Z.10000——javaproject\NO.Z.00002.Hadoop\kafka_demo\demo-06-kafka-customPartitioner\target\classes;C:\Users\Administrator\.m2\repository\org\apache\kafka\kafka-clients\1.0.2\kafka-clients-1.0.2.jar;C:\Users\Administrator\.m2\repository\org\lz4\lz4-java\1.4\lz4-java-1.4.jar;C:\Users\Administrator\.m2\repository\org\xerial\snappy\snappy-java\1.1.4\snappy-java-1.1.4.jar;C:\Users\Administrator\.m2\repository\org\slf4j\slf4j-api\1.7.25\slf4j-api-1.7.25.jar producer.MyProducer

tp_part_01

2

0

~再发送一次

tp_part_01

2

1Walter Savage Landor:strove with none,for none was worth my strife.Nature I loved and, next to Nature, Art:I warm'd both hands before the fire of life.It sinks, and I am ready to depart

——W.S.Landor

分类:

bdv013-kafka

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本

· Manus爆火,是硬核还是营销?

· 终于写完轮子一部分:tcp代理 了,记录一下

· 别再用vector<bool>了!Google高级工程师:这可能是STL最大的设计失误

· 单元测试从入门到精通