|NO.Z.00018|——————————|BigDataEnd|——|Hadoop&kafka.V03|——|kafka.v03|Serializer Analysis|

I. Serializer Analysis

### --- Serializers

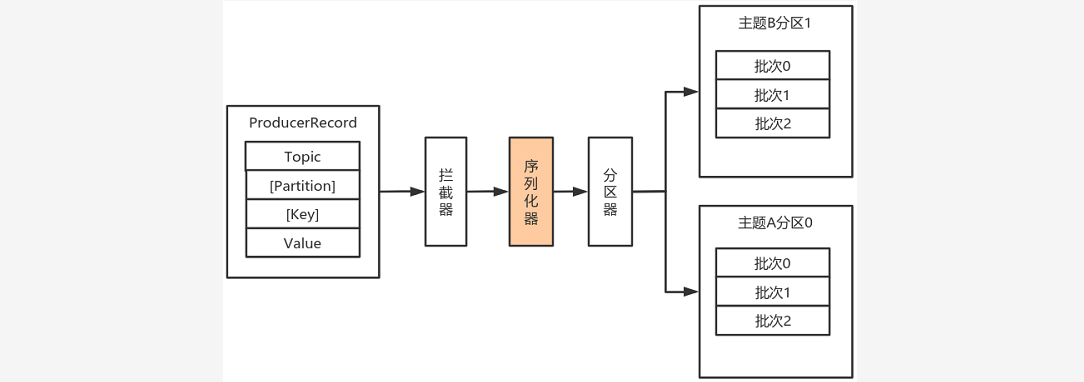

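~~~ Kafka stores every message as a byte array, so data must be serialized into a byte array before it is sent to Kafka.

~~~ A serializer does exactly that: it serializes the message that is about to be sent.

~~~ Kafka defines serializers through the org.apache.kafka.common.serialization.Serializer interface,

~~~ which converts data of the type named by its generic parameter into a byte array.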
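To make the idea concrete, here is a minimal sketch of a custom serializer. It is not Kafka code: `SimpleSerializer` is a hypothetical stand-in that mirrors only the `serialize(topic, data)` method of Kafka's `Serializer`, so the example runs without the kafka-clients dependency. The `User` type and its byte layout are likewise assumptions made for illustration.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

public class CustomSerializerSketch {

    // Hypothetical stand-in mirroring the shape of Kafka's Serializer#serialize.
    // With kafka-clients on the classpath you would implement the real interface instead.
    interface SimpleSerializer<T> {
        byte[] serialize(String topic, T data);
    }

    // Hypothetical message type, used only for this illustration.
    static final class User {
        final int id;
        final String name;

        User(int id, String name) {
            this.id = id;
            this.name = name;
        }
    }

    // Assumed layout: 4-byte id, 4-byte name length, then the UTF-8 name bytes.
    static final class UserSerializer implements SimpleSerializer<User> {
        @Override
        public byte[] serialize(String topic, User data) {
            if (data == null) {
                return null; // same null convention as the built-in serializers
            }
            byte[] name = data.name.getBytes(StandardCharsets.UTF_8);
            ByteBuffer buf = ByteBuffer.allocate(4 + 4 + name.length);
            buf.putInt(data.id);      // ByteBuffer is big-endian by default
            buf.putInt(name.length);
            buf.put(name);
            return buf.array();
        }
    }

    public static void main(String[] args) {
        byte[] bytes = new UserSerializer().serialize("demo-topic", new User(7, "tom"));
        // prints [0, 0, 0, 7, 0, 0, 0, 3, 116, 111, 109]
        System.out.println(Arrays.toString(bytes));
    }
}
```

The length prefix is one possible design choice: it lets a reader of the bytes know where the name ends without relying on a delimiter.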

II. Official Serializer Implementations

### --- Serializers implemented in the serialization package

~~~ org.apache.kafka.common.serialization

    package org.apache.kafka.common.serialization;

    import java.io.Closeable;
    import java.util.Map;

    /**
     * An interface for converting objects to bytes.
     *
     * A class that implements this interface is expected to have a constructor with no parameter.
     * <p>
     * Implement {@link org.apache.kafka.common.ClusterResourceListener} to receive cluster metadata once it's available. Please see the class documentation for ClusterResourceListener for more information.
     *
     * @param <T> Type to be serialized from.
     */
    public interface Serializer<T> extends Closeable {

        /**
         * Configure this class.
         * @param configs configs in key/value pairs
         * @param isKey whether is for key or value
         */
        void configure(Map<String, ?> configs, boolean isKey);

        /**
         * Convert {@code data} into a byte array.
         *
         * @param topic topic associated with data
         * @param data typed data
         * @return serialized bytes
         */
        byte[] serialize(String topic, T data);

        /**
         * Close this serializer.
         *
         * This method must be idempotent as it may be called multiple times.
         */
        @Override
        void close();
    }

### --- The library also provides sub-interfaces and implementation classes of this interface:

~~~ org.apache.kafka.common.serialization.ByteArraySerializer

    import java.util.Map;

    public class ByteArraySerializer implements Serializer<byte[]> {

        @Override
        public void configure(Map<String, ?> configs, boolean isKey) {
            // nothing to do
        }

        @Override
        public byte[] serialize(String topic, byte[] data) {
            // the data is already a byte array, so it is passed through unchanged
            return data;
        }

        @Override
        public void close() {
            // nothing to do
        }
    }

### --- org.apache.kafka.common.serialization.ByteBufferSerializer
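One detail of the `serialize` method shown in this subsection is easy to miss: it calls `rewind()`, which resets the position to 0 but leaves the limit alone. The sketch below copies that logic into a local static method (so it runs without Kafka) and compares a buffer that was flipped after writing with one that was not. It describes the version of the source listed here; other Kafka versions may behave differently.

```java
import java.nio.ByteBuffer;

public class ByteBufferRewindSketch {

    // Local copy of the serialize logic listed in this subsection.
    static byte[] serialize(ByteBuffer data) {
        if (data == null) {
            return null;
        }
        data.rewind(); // position = 0, limit unchanged
        if (data.hasArray()) {
            byte[] arr = data.array();
            if (data.arrayOffset() == 0 && arr.length == data.remaining()) {
                return arr; // whole backing array is the payload: no copy
            }
        }
        byte[] ret = new byte[data.remaining()];
        data.get(ret, 0, ret.length);
        data.rewind();
        return ret;
    }

    public static void main(String[] args) {
        // Not flipped: limit is still the capacity, so all 8 bytes are returned.
        ByteBuffer unflipped = ByteBuffer.allocate(8);
        unflipped.putInt(42);
        System.out.println(serialize(unflipped).length); // prints 8

        // Flipped: limit marks the end of the written data, so 4 bytes are returned.
        ByteBuffer flipped = ByteBuffer.allocate(8);
        flipped.putInt(42);
        flipped.flip();
        System.out.println(serialize(flipped).length); // prints 4
    }
}
```

In practice this suggests calling `flip()` on a buffer after filling it and before handing it to the producer, so that only the written bytes are sent.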

    import java.nio.ByteBuffer;
    import java.util.Map;

    public class ByteBufferSerializer implements Serializer<ByteBuffer> {

        public void configure(Map<String, ?> configs, boolean isKey) {
            // nothing to do
        }

        public byte[] serialize(String topic, ByteBuffer data) {
            if (data == null)
                return null;

            // reset the position to 0; the limit is left unchanged
            data.rewind();

            if (data.hasArray()) {
                byte[] arr = data.array();
                // the backing array is exactly the payload: return it without copying
                if (data.arrayOffset() == 0 && arr.length == data.remaining()) {
                    return arr;
                }
            }

            // otherwise copy the readable bytes into a new array
            byte[] ret = new byte[data.remaining()];
            data.get(ret, 0, ret.length);
            data.rewind();
            return ret;
        }

        public void close() {
            // nothing to do
        }
    }

### --- org.apache.kafka.common.serialization.BytesSerializer

    import java.util.Map;
    import org.apache.kafka.common.utils.Bytes;

    public class BytesSerializer implements Serializer<Bytes> {

        public void configure(Map<String, ?> configs, boolean isKey) {
            // nothing to do
        }

        public byte[] serialize(String topic, Bytes data) {
            if (data == null)
                return null;

            // unwrap the byte array held by the Bytes object
            return data.get();
        }

        public void close() {
            // nothing to do
        }
    }

### --- org.apache.kafka.common.serialization.DoubleSerializer
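The numeric serializers in this section (Double, Float, Integer, Long, Short) write the value most significant byte first, that is, in big-endian order. As a cross-check, the sketch below repeats the shift-based encoding for an `int` and a `double` and compares it with `java.nio.ByteBuffer`, whose default byte order is also big-endian. The class and method names are hypothetical and exist only for this illustration.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

public class BigEndianSketch {

    // Same shift pattern as the Integer serializer: highest byte first.
    static byte[] intToBytes(int v) {
        return new byte[] {
            (byte) (v >>> 24),
            (byte) (v >>> 16),
            (byte) (v >>> 8),
            (byte) v
        };
    }

    // Same idea as the Double serializer: take the IEEE 754 bits, then emit 8 bytes.
    static byte[] doubleToBytes(double d) {
        long bits = Double.doubleToLongBits(d);
        byte[] out = new byte[8];
        for (int i = 0; i < 8; i++) {
            out[i] = (byte) (bits >>> (56 - 8 * i));
        }
        return out;
    }

    public static void main(String[] args) {
        // 258 = 0x00000102
        System.out.println(Arrays.toString(intToBytes(258))); // prints [0, 0, 1, 2]

        // The manual encoding matches ByteBuffer's default (big-endian) encoding.
        byte[] viaBuffer = ByteBuffer.allocate(4).putInt(258).array();
        System.out.println(Arrays.equals(intToBytes(258), viaBuffer)); // prints true

        byte[] doubleViaBuffer = ByteBuffer.allocate(8).putDouble(1.5).array();
        System.out.println(Arrays.equals(doubleToBytes(1.5), doubleViaBuffer)); // prints true
    }
}
```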

    import java.util.Map;

    public class DoubleSerializer implements Serializer<Double> {

        @Override
        public void configure(Map<String, ?> configs, boolean isKey) {
            // nothing to do
        }

        @Override
        public byte[] serialize(String topic, Double data) {
            if (data == null)
                return null;

            // IEEE 754 bit pattern, written most significant byte first
            long bits = Double.doubleToLongBits(data);
            return new byte[] {
                (byte) (bits >>> 56),
                (byte) (bits >>> 48),
                (byte) (bits >>> 40),
                (byte) (bits >>> 32),
                (byte) (bits >>> 24),
                (byte) (bits >>> 16),
                (byte) (bits >>> 8),
                (byte) bits
            };
        }

        @Override
        public void close() {
            // nothing to do
        }
    }

### --- org.apache.kafka.common.serialization.FloatSerializer

    import java.util.Map;

    public class FloatSerializer implements Serializer<Float> {

        @Override
        public void configure(final Map<String, ?> configs, final boolean isKey) {
            // nothing to do
        }

        @Override
        public byte[] serialize(final String topic, final Float data) {
            if (data == null)
                return null;

            // 32-bit IEEE 754 bit pattern, written most significant byte first
            long bits = Float.floatToRawIntBits(data);
            return new byte[] {
                (byte) (bits >>> 24),
                (byte) (bits >>> 16),
                (byte) (bits >>> 8),
                (byte) bits
            };
        }

        @Override
        public void close() {
            // nothing to do
        }
    }

### --- org.apache.kafka.common.serialization.IntegerSerializer

    import java.util.Map;

    public class IntegerSerializer implements Serializer<Integer> {

        public void configure(Map<String, ?> configs, boolean isKey) {
            // nothing to do
        }

        public byte[] serialize(String topic, Integer data) {
            if (data == null)
                return null;

            // 4 bytes, most significant byte first
            return new byte[] {
                (byte) (data >>> 24),
                (byte) (data >>> 16),
                (byte) (data >>> 8),
                data.byteValue()
            };
        }

        public void close() {
            // nothing to do
        }
    }

### --- org.apache.kafka.common.serialization.StringSerializer
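The `configure` method of this serializer resolves the character encoding in a fixed order: the role-specific property (`key.serializer.encoding` or `value.serializer.encoding`) first, then the shared `serializer.encoding`, and finally the built-in default. The sketch below replays that lookup in a standalone method so the order can be checked without Kafka. The method name `resolveEncoding` is hypothetical, and the default of "UTF8" is an assumption based on Kafka sources of this era.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

public class EncodingLookupSketch {

    // Standalone replay of the lookup order used by StringSerializer#configure.
    static String resolveEncoding(Map<String, ?> configs, boolean isKey) {
        String encoding = "UTF8"; // assumed default
        String propertyName = isKey ? "key.serializer.encoding" : "value.serializer.encoding";
        Object encodingValue = configs.get(propertyName);
        if (encodingValue == null) {
            encodingValue = configs.get("serializer.encoding"); // shared fallback
        }
        if (encodingValue instanceof String) {
            encoding = (String) encodingValue;
        }
        return encoding;
    }

    public static void main(String[] args) {
        Map<String, Object> configs = new HashMap<>();
        configs.put("serializer.encoding", "UTF-16");
        configs.put("value.serializer.encoding", "ISO-8859-1");

        // No key-specific property is set, so the shared fallback applies.
        System.out.println(resolveEncoding(configs, true));  // prints UTF-16
        // The value-specific property wins over the shared one.
        System.out.println(resolveEncoding(configs, false)); // prints ISO-8859-1
        // Nothing configured: the default is used.
        System.out.println(resolveEncoding(Collections.<String, Object>emptyMap(), true)); // prints UTF8
    }
}
```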

    import java.io.UnsupportedEncodingException;
    import java.util.Map;
    import org.apache.kafka.common.errors.SerializationException;

    public class StringSerializer implements Serializer<String> {

        // default encoding, used when none is configured
        private String encoding = "UTF8";

        @Override
        public void configure(Map<String, ?> configs, boolean isKey) {
            // the role-specific property takes precedence over the shared one
            String propertyName = isKey ? "key.serializer.encoding" : "value.serializer.encoding";
            Object encodingValue = configs.get(propertyName);
            if (encodingValue == null)
                encodingValue = configs.get("serializer.encoding");
            if (encodingValue != null && encodingValue instanceof String)
                encoding = (String) encodingValue;
        }

        @Override
        public byte[] serialize(String topic, String data) {
            try {
                if (data == null)
                    return null;
                else
                    return data.getBytes(encoding);
            } catch (UnsupportedEncodingException e) {
                throw new SerializationException("Error when serializing string to byte[] due to unsupported encoding " + encoding);
            }
        }

        @Override
        public void close() {
            // nothing to do
        }
    }

### --- org.apache.kafka.common.serialization.LongSerializer

    import java.util.Map;

    public class LongSerializer implements Serializer<Long> {

        public void configure(Map<String, ?> configs, boolean isKey) {
            // nothing to do
        }

        public byte[] serialize(String topic, Long data) {
            if (data == null)
                return null;

            // 8 bytes, most significant byte first
            return new byte[] {
                (byte) (data >>> 56),
                (byte) (data >>> 48),
                (byte) (data >>> 40),
                (byte) (data >>> 32),
                (byte) (data >>> 24),
                (byte) (data >>> 16),
                (byte) (data >>> 8),
                data.byteValue()
            };
        }

        public void close() {
            // nothing to do
        }
    }

### --- org.apache.kafka.common.serialization.ShortSerializer

    import java.util.Map;

    public class ShortSerializer implements Serializer<Short> {

        public void configure(Map<String, ?> configs, boolean isKey) {
            // nothing to do
        }

        public byte[] serialize(String topic, Short data) {
            if (data == null)
                return null;

            // 2 bytes, most significant byte first
            return new byte[] {
                (byte) (data >>> 8),
                data.byteValue()
            };
        }

        public void close() {
            // nothing to do
        }
    }