|NO.Z.00036|----------|BigDataEnd|--|Hadoop&MapReduce.V09|--|Hadoop.v09|MapReduce internals: custom partitioning and the number of ReduceTasks|Custom partitioning example.v02|

I. Implementation steps

### --- Create the project: partition

### --- Mapper

package com.yanqi.mr.partition;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/*
 1. Read one line of text and split it on tabs.
 2. Extract the appkey field and wrap the record's fields in a PartitionBean (which implements the Writable serialization interface).
 3. Emit the map() output as a key-value pair: the key is the appkey (partitioning relies on this field) and the value is the PartitionBean.
 */
public class PartitionMapper extends Mapper<LongWritable, Text, Text, PartitionBean> {

    // Reused for every record so that map() does not allocate new objects per call
    final PartitionBean bean = new PartitionBean();
    final Text k = new Text();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        final String[] fields = value.toString().split("\t");
        String appkey = fields[2];

        bean.setId(fields[0]);
        bean.setDeviceId(fields[1]);
        bean.setAppkey(fields[2]);
        bean.setIp(fields[3]);
        bean.setSelfDuration(Long.parseLong(fields[4]));
        bean.setThirdPartDuration(Long.parseLong(fields[5]));
        bean.setStatus(fields[6]);

        k.set(appkey);
        // By default the shuffle partitions records by the hashCode of k.
        // That does not meet this job's requirement, hence the custom partitioner.
        context.write(k, bean);
    }
}

### --- PartitionBean
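`PartitionBean` travels between map and reduce tasks as bytes, which is why it implements `Writable` and why `write` and `readFields` must handle the fields in the same order. Here is a minimal sketch of that contract using only `java.io`, with no Hadoop dependency. The `MiniBean` class, the `roundTrip` helper and the sample values are hypothetical stand-ins, not part of the project:

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

public class WritableRoundTrip {

    // Hypothetical two-field stand-in for PartitionBean (not part of the project).
    static class MiniBean {
        String appkey;
        long selfDuration;

        // Same shape as Writable.write: fields are written in a fixed order.
        void write(DataOutput out) throws IOException {
            out.writeUTF(appkey);
            out.writeLong(selfDuration);
        }

        // Same shape as Writable.readFields: fields are read back in that same order.
        void readFields(DataInput in) throws IOException {
            appkey = in.readUTF();
            selfDuration = in.readLong();
        }
    }

    // Serializes a bean to bytes and reads the bytes back into a fresh instance.
    static MiniBean roundTrip(MiniBean original) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        original.write(new DataOutputStream(buffer));

        MiniBean copy = new MiniBean();
        copy.readFields(new DataInputStream(new ByteArrayInputStream(buffer.toByteArray())));
        return copy;
    }

    public static void main(String[] args) throws IOException {
        MiniBean bean = new MiniBean();
        bean.appkey = "kar";
        bean.selfDuration = 120L;

        MiniBean copy = roundTrip(bean);
        System.out.println(copy.appkey + "\t" + copy.selfDuration);
    }
}
```

If `readFields` read the long before the string, the round trip would fail or return garbage, because the byte stream carries no field names. Note also that `writeUTF` throws a `NullPointerException` for a null string, so every field should be set before the bean is written.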

package com.yanqi.mr.partition;

import org.apache.hadoop.io.Writable;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

public class PartitionBean implements Writable {

    // Fields
    private String id;              // log id
    private String deviceId;        // device id
    private String appkey;          // appkey (vendor id)
    private String ip;              // IP address
    private Long selfDuration;      // playback duration of own content
    private Long thirdPartDuration; // playback duration of third-party content
    private String status;          // status code

    // A no-argument constructor is required
    public PartitionBean() {
    }

    public PartitionBean(String id, String deviceId, String appkey, String ip, Long selfDuration, Long thirdPartDuration, String status) {
        this.id = id;
        this.deviceId = deviceId;
        this.appkey = appkey;
        this.ip = ip;
        this.selfDuration = selfDuration;
        this.thirdPartDuration = thirdPartDuration;
        this.status = status;
    }

    // Serialization
    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(id);
        out.writeUTF(deviceId);
        out.writeUTF(appkey);
        out.writeUTF(ip);
        out.writeLong(selfDuration);
        out.writeLong(thirdPartDuration);
        out.writeUTF(status);
    }

    // Deserialization: fields must be read in the same order they were written
    @Override
    public void readFields(DataInput in) throws IOException {
        this.id = in.readUTF();
        this.deviceId = in.readUTF();
        this.appkey = in.readUTF();
        this.ip = in.readUTF();
        this.selfDuration = in.readLong();
        this.thirdPartDuration = in.readLong();
        this.status = in.readUTF();
    }

    public String getId() {
        return id;
    }

    public void setId(String id) {
        this.id = id;
    }

    public String getDeviceId() {
        return deviceId;
    }

    public void setDeviceId(String deviceId) {
        this.deviceId = deviceId;
    }

    public String getAppkey() {
        return appkey;
    }

    public void setAppkey(String appkey) {
        this.appkey = appkey;
    }

    public String getIp() {
        return ip;
    }

    public void setIp(String ip) {
        this.ip = ip;
    }

    public Long getSelfDuration() {
        return selfDuration;
    }

    public void setSelfDuration(Long selfDuration) {
        this.selfDuration = selfDuration;
    }

    public Long getThirdPartDuration() {
        return thirdPartDuration;
    }

    public void setThirdPartDuration(Long thirdPartDuration) {
        this.thirdPartDuration = thirdPartDuration;
    }

    public String getStatus() {
        return status;
    }

    public void setStatus(String status) {
        this.status = status;
    }

    // Override toString() so the text output is easy to read: one tab between fields
    @Override
    public String toString() {
        return id + "\t" + deviceId + "\t" + appkey + "\t" + ip + "\t"
                + selfDuration + "\t" + thirdPartDuration + "\t" + status;
    }
}

### --- CustomPartitioner
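For context, when no partitioner is set, Hadoop's default `HashPartitioner` computes the partition as `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`, so which keys end up together depends only on their hash values. Below is a minimal plain-Java sketch of that formula. It uses `String.hashCode()` as a stand-in, whereas the real job hashes the bytes of the `Text` key, so the partition numbers in an actual run may differ. The class name, method name and the `"other"` appkey are made up for illustration:

```java
public class HashPartitionSketch {

    // Mirrors the formula of Hadoop's default HashPartitioner.
    // Masking with Integer.MAX_VALUE clears the sign bit, so the result is never negative.
    static int hashPartition(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        // "kar" and "pandora" come from the example; "other" is a made-up appkey.
        String[] appkeys = {"kar", "pandora", "other"};
        for (String appkey : appkeys) {
            System.out.println(appkey + " -> partition " + hashPartition(appkey, 3));
        }
    }
}
```

Hashing gives no guarantee that `kar` and `pandora` land in separate partitions or that the remaining appkeys share one, which is why this example routes them explicitly.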

package com.yanqi.mr.partition;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

// The Partitioner's type parameters are the map output key and value types
public class CustomPartitioner extends Partitioner<Text, PartitionBean> {

    @Override
    public int getPartition(Text text, PartitionBean partitionBean, int numPartitions) {
        int partition = 0;

        if (text.toString().equals("kar")) {
            // All records matching this condition only need to get the same partition number
            partition = 0;
        } else if (text.toString().equals("pandora")) {
            partition = 1;
        } else {
            partition = 2;
        }

        return partition;
    }
}

### --- PartitionReducer

package com.yanqi.mr.partition;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

// Reduce input types: Text, PartitionBean; output types: Text, PartitionBean
public class PartitionReducer extends Reducer<Text, PartitionBean, Text, PartitionBean> {

    @Override
    protected void reduce(Text key, Iterable<PartitionBean> values, Context context) throws IOException, InterruptedException {
        // No aggregation is needed; just write each record out
        for (PartitionBean bean : values) {
            context.write(key, bean);
        }
    }
}

### --- PartitionDriver

package com.yanqi.mr.partition;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class PartitionDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

//1 获取配置文件

final Configuration conf = new Configuration();

//2 获取job实例

final Job job = Job.getInstance(conf);

//3 设置任务相关参数

job.setJarByClass(PartitionDriver.class);

job.setMapperClass(PartitionMapper.class);

job.setReducerClass(PartitionReducer.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(PartitionBean.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(PartitionBean.class);

// 4 设置使用自定义分区器

job.setPartitionerClass(CustomPartitioner.class);

//5 指定reducetask的数量与分区数量保持一致,分区数量是3

job.setNumReduceTasks(3); //reducetask不设置默认是1个

// job.setNumReduceTasks(5);

// job.setNumReduceTasks(2);

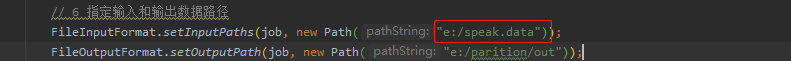

// 6 指定输入和输出数据路径

FileInputFormat.setInputPaths(job, new Path("e:/speak.data"));

FileOutputFormat.setOutputPath(job, new Path("e:/parition/out"));

// 7 提交任务

final boolean flag = job.waitForCompletion(true);

System.exit(flag ? 0 : 1);

}

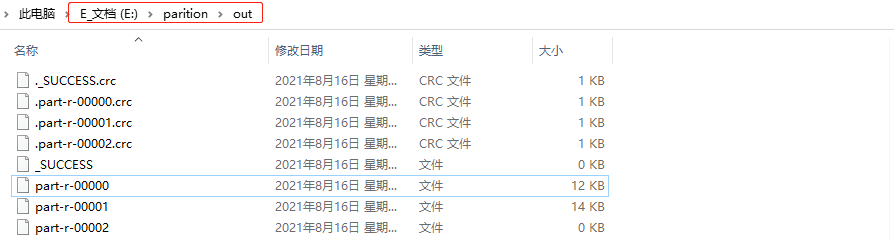

}三、运行程序

### --- Run the program

~~~ Configure the input data parameter

~~~ Print the output

四、总结

### --- 总结

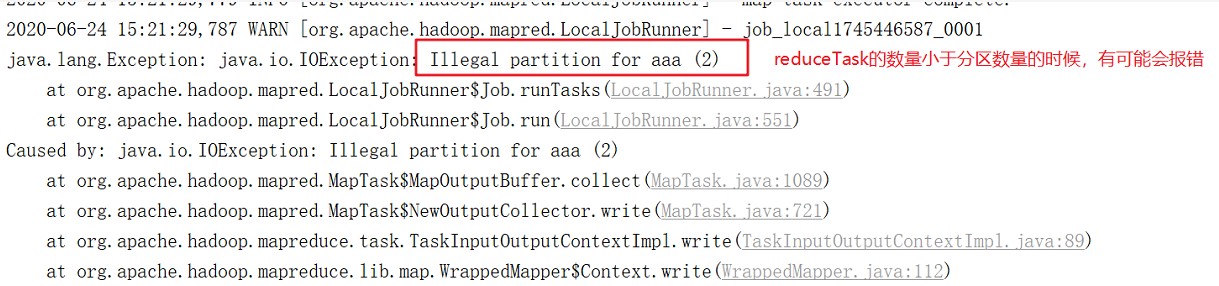

~~~ 自定义分区器时最好保证分区数量与reduceTask数量保持一致;

~~~ 如果分区数量不止1个,但是reduceTask数量1个,此时只会输出一个文件。

~~~ 如果reduceTask数量大于分区数量,但是输出多个空文件

~~~ 如果reduceTask数量小于分区数量,有可能会报错。
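The four cases above can be reproduced with a small simulation in plain Java. This is a simplified model of the map-side routing, not Hadoop code: it assumes that with a single reduce task the custom partitioner is not consulted, and it throws an unchecked `IllegalStateException` where Hadoop reports an `IOException`. The class and method names and the `"other"` appkey are hypothetical:

```java
import java.util.Arrays;

public class ReduceTaskCountSketch {

    // Same routing rule as CustomPartitioner in this example.
    static int customPartition(String appkey) {
        if (appkey.equals("kar")) {
            return 0;
        } else if (appkey.equals("pandora")) {
            return 1;
        }
        return 2;
    }

    // Simplified model (an assumption, not Hadoop code) of how the map side
    // picks the output partition for one key.
    static int route(String appkey, int numReduceTasks) {
        if (numReduceTasks == 1) {
            return 0; // single reduce task: everything goes to partition 0
        }
        int partition = customPartition(appkey);
        if (partition < 0 || partition >= numReduceTasks) {
            // Hadoop raises an IOException here; unchecked in this sketch for brevity.
            throw new IllegalStateException("Illegal partition for " + appkey + " (" + partition + ")");
        }
        return partition;
    }

    // Counts records per reduce task; a task left at 0 would write an empty file.
    static int[] recordsPerTask(String[] appkeys, int numReduceTasks) {
        int[] counts = new int[numReduceTasks];
        for (String appkey : appkeys) {
            counts[route(appkey, numReduceTasks)]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        // "other" is a made-up appkey that falls into the catch-all partition 2.
        String[] appkeys = {"kar", "pandora", "other", "kar"};
        for (int n : new int[]{1, 3, 5, 2}) {
            try {
                System.out.println(n + " reduce tasks -> " + Arrays.toString(recordsPerTask(appkeys, n)));
            } catch (IllegalStateException e) {
                System.out.println(n + " reduce tasks -> " + e.getMessage());
            }
        }
    }
}
```

In this model the failure with two reduce tasks happens only when a record actually maps to partition 2. If the input contained nothing but `kar` and `pandora` records, the job would complete, which is why the last point says the job "may" fail rather than "will".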
