|NO.Z.00081|——————————|^^ Deployment ^^|——|Linux&ELK Log Analysis&.V02|——|JDK|ELK|Logstash|

I. Lab Deployment

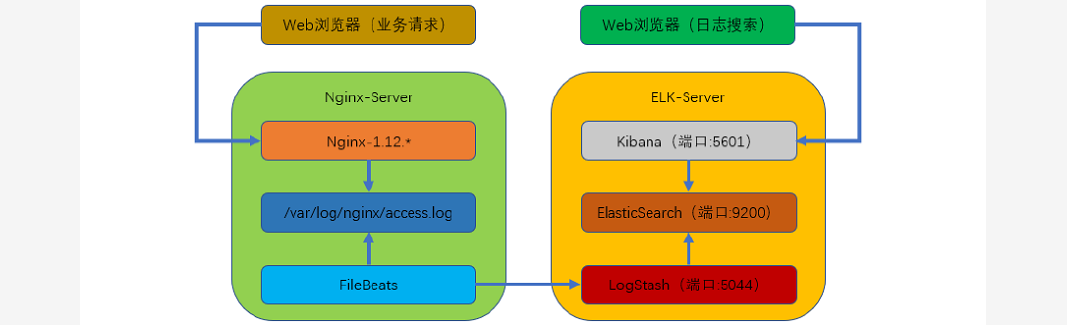

### --- Lab architecture

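~~~ This deployment consists of Filebeat (client) and Logstash + Elasticsearch + Kibana (server).

~~~ Business requests reach nginx on the nginx-server machine; nginx responds to each request

~~~ and appends an access record to access.log. Filebeat collects the newly added log lines

~~~ and uploads them to Logstash on port 5044.

~~~ Logstash forwards the log data to Elasticsearch through port 9200 on the local machine.

~~~ Users who want to inspect the logs open Kibana in a browser; the server port is 5601.

~~~ Kibana reaches Elasticsearch through port 9200.

I. Lab environment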

### --- This single-node ELK deployment uses two machines (CentOS 7.5)

~~~ ELK server: centos7.x 10.10.10.11 ELK-server

~~~ Nginx client: centos7.x 10.10.10.12 Nginx-server

### --- Configure the network yum repositories

[root@server11 yum.repos.d]# wget http://mirrors.aliyun.com/repo/Centos-7.repo

[root@server11 yum.repos.d]# wget http://mirrors.aliyun.com/repo/epel-7.repo

### --- Stop the firewall: systemctl stop (disable) firewalld

[root@server11 ~]# systemctl status firewalld.service
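The heading calls for stopping and disabling firewalld, while the transcript only shows the status check. A minimal sketch of the two remaining steps follows; `disable_firewalld` and the `DRY_RUN` switch are hypothetical names introduced for illustration, and by default the helper only prints the commands instead of running them.

```shell
# Hypothetical helper (not part of the original transcript).
# DRY_RUN=1 (default): print the systemctl commands only.
# DRY_RUN=0: run them; this needs root on a systemd host such as CentOS 7.
disable_firewalld() {
    for action in stop disable; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "systemctl $action firewalld.service"
        else
            systemctl "$action" firewalld.service
        fi
    done
}

disable_firewalld
```

`stop` ends the running service and `disable` keeps it from starting at the next boot, so both are needed for the setting to survive a restart.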

### --- Disable SELinux: SELINUX=disabled

[root@server11 ~]# getenforce

Disabled

II. Download and install the packages

### --- Download and install the packages

[root@server11 ~]# mkdir /elk

[root@server11 ~]# cd /elk

[root@server11 elk]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.2.3.tar.gz

[root@server11 elk]# wget https://artifacts.elastic.co/downloads/logstash/logstash-6.2.3.tar.gz

[root@server11 elk]# wget https://artifacts.elastic.co/downloads/kibana/kibana-6.2.3-linux-x86_64.tar.gz

### --- Extract all archives and copy them to /usr/local/

[root@server11 elk]# tar -xf elasticsearch-6.2.3.tar.gz

[root@server11 elk]# tar -xf kibana-6.2.3-linux-x86_64.tar.gz

[root@server11 elk]# tar -xf logstash-6.2.3.tar.gz

[root@server11 elk]# cp -a elasticsearch-6.2.3 kibana-6.2.3-linux-x86_64 logstash-6.2.3 /usr/local/

III. Install the JDK (Java) runtime

### --- Install the JDK (Java) runtime

[root@server11 ~]# yum install -y java-1.8*

IV. Configure Elasticsearch

### --- Configure Elasticsearch

~~~ Create an elasticsearch user and start the service as that user (Elasticsearch must be started by a non-root user)

[root@server11 ~]# useradd elasticsearch

[root@server11 ~]# chown -R elasticsearch:elasticsearch /usr/local/elasticsearch-6.2.3/ # make the elasticsearch user the owner of the Elasticsearch directory

[root@server11 ~]# su - elasticsearch # switch to this user to start the service

[elasticsearch@server11 ~]$ cd /usr/local/elasticsearch-6.2.3

[elasticsearch@server11 elasticsearch-6.2.3]$ ./bin/elasticsearch -d

### --- Check that the process started (wait a moment; startup is not immediate)

[elasticsearch@server11 elasticsearch-6.2.3]$ netstat -antp

tcp6 0 0 127.0.0.1:9200 :::* LISTEN 12133/java

### --- If an error occurs, check the log

[elasticsearch@server11 elasticsearch-6.2.3]$ cat /usr/local/elasticsearch-6.2.3/logs/elasticsearch.log

### --- Test that the service is reachable

[elasticsearch@server11 elasticsearch-6.2.3]$ curl localhost:9200 # a response like the following means the deployment succeeded

{

"name" : "XRgtD77",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "jPl_H0_FRIWo0CY-ureAuA",

"version" : {

"number" : "6.2.3",

"build_hash" : "c59ff00",

"build_date" : "2018-03-13T10:06:29.741383Z",

"build_snapshot" : false,

"lucene_version" : "7.2.1",

"minimum_wire_compatibility_version" : "5.6.0",

"minimum_index_compatibility_version" : "5.0.0"

},

"tagline" : "You Know, for Search"

}

V. Configure Logstash

### --- Configure Logstash

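~~~ Logstash parses the collected nginx logs with the grok filter plugin. grok is a Logstash filter plugin that

~~~ parses text log lines against patterns and splits them into fields.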
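To make pattern-based field extraction concrete, the sketch below approximates a grok match with a plain extended regular expression in `sed`. The sample log line, the `field` helper, and the regex itself are assumptions made for illustration; grok's real `IP`, `HTTPDATE`, and `QS` patterns are stricter, and `QS` keeps the surrounding quotes. It also assumes nginx writes the request, status, bytes, referer, and user agent followed by one more quoted value (the forwarded-for address).

```shell
# Hypothetical access-log line in the layout the pattern expects.
line='10.10.10.1 - - [15/Feb/2021:23:30:01 +0800] "GET /index.html HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"'

# Capture groups: 1 remote_ip, 2 timestamp, 3 method, 4 request, 5 httpversion,
# 6 status, 7 bytes, 8 referer, 9 agent. The trailing forwarded-for string is
# matched but not captured, because sed only offers \1 through \9.
re='^([0-9.]+) - - \[([^]]+)\] "([A-Za-z]+) ([^ ]*) HTTP/([0-9.]+)" ([0-9]+) ([0-9]+) "([^"]*)" "([^"]*)" "[^"]*"$'

# field N prints capture group N, or nothing when the line does not match.
field() {
    printf '%s\n' "$line" | sed -nE "s|$re|\\$1|p"
}

echo "remote_ip=$(field 1) method=$(field 3) request=$(field 4) status=$(field 6)"
```

grok does the same kind of work inside Logstash, except that each capture is stored under its field name (for example `remote_ip` or `status`) on the event instead of being printed.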

### --- grok pattern matching in Logstash

[elasticsearch@server11 elasticsearch-6.2.3]$ exit

logout

[root@server11 ~]# cd /usr/local/logstash-6.2.3/

[root@server11 logstash-6.2.3]# vim vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns/grok-patterns

#Nginx log

WZ ([^ ]*)

NGINXACCESS %{IP:remote_ip} \- \- \[%{HTTPDATE:timestamp}\] "%{WORD:method} %{WZ:request} HTTP/%{NUMBER:httpversion}" %{NUMBER:status} %{NUMBER:bytes} %{QS:referer} %{QS:agent} %{QS:xforward}

### --- Create the Logstash configuration file

[root@server11 logstash-6.2.3]# vim /usr/local/logstash-6.2.3/default.conf

input {

beats {

port=> "5044"

}

}

# Data filtering

filter {

grok {

match => { "message" => "%{NGINXACCESS}" }

}

geoip {

# Name of the field that holds the client IP, as extracted by the NGINXACCESS grok pattern

source => "remote_ip"

}

}

# Output goes to port 9200 on the local machine, where the Elasticsearch service listens

output {

elasticsearch {

hosts => ["127.0.0.1:9200"]

}

}

### --- Change into /usr/local/logstash-6.2.3 and run the following commands

~~~ Start Logstash in the background: nohup bin/logstash -f default.conf &

~~~ Follow the startup log: tailf nohup.out

~~~ Check that the port is listening: netstat -napt|grep 5044

[root@server11 logstash-6.2.3]# nohup bin/logstash -f default.conf &

[1] 12384

[root@server11 logstash-6.2.3]# nohup: ignoring input and appending output to ‘nohup.out’ # printed automatically by nohup

[root@server11 logstash-6.2.3]# tailf nohup.out

[2021-02-15T23:27:50,599][INFO ][logstash.inputs.beats ] Beats inputs: Starting input listener {:address=>"0.0.0.0:5044"}

[2021-02-15T23:27:50,699][INFO ][logstash.pipeline ] Pipeline started succesfully {:pipeline_id=>"main", :thread=>"#<Thread:0x35e626f run>"}

[2021-02-15T23:27:50,864][INFO ][org.logstash.beats.Server] Starting server on port: 5044

[2021-02-15T23:27:51,054][INFO ][logstash.agent ] Pipelines running {:count=>1, :pipelines=>["main"]}
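When the start-up is scripted, watching `tailf` by hand can be replaced by a small polling loop. The sketch below waits until the `Pipelines running` message seen in the log output above appears in `nohup.out`; `wait_for_log` and its retry defaults are hypothetical names and values, not part of Logstash.

```shell
# Hypothetical helper: poll FILE once per second until it contains PATTERN
# (treated as a fixed string), giving up after TRIES attempts (default 60).
wait_for_log() {
    file=$1
    pattern=$2
    tries=${3:-60}
    while [ "$tries" -gt 0 ]; do
        if [ -f "$file" ] && grep -qF -- "$pattern" "$file"; then
            return 0
        fi
        tries=$((tries - 1))
        sleep 1
    done
    return 1
}

# Example, run from /usr/local/logstash-6.2.3 after starting Logstash:
#   wait_for_log nohup.out 'Pipelines running' 120 && echo "Logstash is up"
```

The function returns 0 as soon as the message is found and 1 when the attempts run out, so it can gate later steps in a deployment script.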

[root@server11 logstash-6.2.3]# netstat -napt|grep 5044

tcp6 0 0 :::5044 :::* LISTEN 12384/java
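The same `netstat` check can be scripted for the server-side ports used in this deployment (9200 for Elasticsearch, 5044 for the Logstash Beats input, 5601 for Kibana). This is a minimal sketch, assuming the column layout of `netstat -antp` shown in this transcript; `is_listening` is a hypothetical helper name, and the sample input simply reuses the two listener lines from above.

```shell
# Hypothetical helper: read `netstat -antp` output on stdin and succeed if
# some socket in LISTEN state is bound to the given port.
is_listening() {
    awk -v port="$1" '
        $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit !found }
    '
}

# The two listener lines from this transcript serve as sample input.
sample='tcp6 0 0 127.0.0.1:9200 :::* LISTEN 12133/java
tcp6 0 0 :::5044 :::* LISTEN 12384/java'

for port in 9200 5044 5601; do
    if printf '%s\n' "$sample" | is_listening "$port"; then
        echo "port $port: listening"
    else
        echo "port $port: not listening"
    fi
done
```

On the live host, pipe the real output instead of the sample, for example `netstat -antp 2>/dev/null | is_listening 5044`. Port 5601 is reported as not listening here because Kibana has not been started at this point in the walkthrough.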
