Deploying a Kubernetes v1.25.5 Cluster from Binaries on CentOS 7.9

Overview

1. Cluster Environment Preparation

1.1 Host Planning

| OS | Node IP | Role | CPU/Memory | Hostname | Kernel | Software |
| --- | --- | --- | --- | --- | --- | --- |
| CentOS 7.9.2009 | 192.168.1.92 | master | 2C/4G | k8s-master1 | 6.1.0 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, containerd, runc |
| CentOS 7.9.2009 | 192.168.1.93 | master | 2C/4G | k8s-master2 | 6.1.0 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, containerd, runc |
| CentOS 7.9.2009 | 192.168.1.94 | master | 2C/4G | k8s-master3 | 6.1.0 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, containerd, runc |
| CentOS 7.9.2009 | 192.168.1.96 | worker | 2C/4G | k8s-worker1 | 6.1.0 | kubelet, kube-proxy, containerd, runc |
| CentOS 7.9.2009 | 192.168.1.111 | LB | 1C/1G | ha1 | 6.1.0 | haproxy, keepalived |
| CentOS 7.9.2009 | 192.168.1.112 | LB | 1C/1G | ha2 | 6.1.0 | haproxy, keepalived |
| CentOS 7.9.2009 | 192.168.1.100 | VIP (virtual IP) | / | / | / | / |

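1.2 Software Versions

| Software | Version | Notes |
| --- | --- | --- |
| CentOS 7.9 | kernel version: 6.1.0 | version after upgrade |
| kubernetes | v1.25.5 | |
| etcd | v3.5.6 | latest version |
| calico | v3.23 | stable version |
| coredns | 1.8.4 | |
| containerd | 1.6.12 | latest version |
| runc | 1.1.4 | latest version |
| haproxy | 1.5.18 | default in the yum repository |
| keepalived | v1.3.5 | default in the yum repository |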

1.3 Network Allocation

| Network | CIDR | Notes |
| --- | --- | --- |
| Node network | 192.168.1.0/24 | |
| Service network | 10.96.0.0/16 | |
| Pod network | 10.244.0.0/16 | |

2. Cluster Deployment

2.1 Host Preparation

2.1.1 Set the Hostnames

Note: for the hostnames, see the host planning table in section 1.1. Run each command on the corresponding host only.

```bash
hostnamectl set-hostname ha1
hostnamectl set-hostname ha2
hostnamectl set-hostname k8s-master1
hostnamectl set-hostname k8s-master2
hostnamectl set-hostname k8s-master3
hostnamectl set-hostname k8s-worker1
```

2.1.2 Hostname-to-IP Resolution

```bash
cat >> /etc/hosts << EOF
192.168.1.111 ha1
192.168.1.112 ha2
192.168.1.92 k8s-master1
192.168.1.93 k8s-master2
192.168.1.94 k8s-master3
192.168.1.96 k8s-worker1
EOF
```
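Appending with `cat >>` adds duplicate lines if the step is run twice. The following is a minimal sketch of an idempotent alternative; the function name `add_host_entry` is hypothetical and not part of the original procedure, and it assumes hostnames without regex metacharacters.

```bash
#!/usr/bin/env bash
# add_host_entry FILE IP NAME (hypothetical helper)
# Appends "IP NAME" to FILE unless NAME already appears as a whole
# whitespace-delimited field on a non-comment line.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0   # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on a real host (as root), one call per row of the planning table:
#   add_host_entry /etc/hosts 192.168.1.92 k8s-master1
```

Calling it repeatedly with the same arguments leaves the file unchanged after the first call.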

2.1.3 Host Security Settings

2.1.3.1 Disable the Firewall

```bash
systemctl stop firewalld
systemctl disable firewalld
firewall-cmd --state
```

2.1.3.2 Disable SELinux

```bash
setenforce 0
sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
sestatus
```

2.1.4 Swap Settings

```bash
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
echo "vm.swappiness=0" >> /etc/sysctl.conf
sysctl -p
```
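The `sed` expression above prepends `#` to every line containing "swap", including lines that are already commented, so running it twice produces `##`. As a sketch only, the variant below skips commented lines; the function name `comment_out_swap` is hypothetical, and GNU sed (as shipped with CentOS) is assumed for `-i` and `-r`.

```bash
#!/usr/bin/env bash
# comment_out_swap FSTAB (hypothetical helper)
# Comments out fstab entries that contain a whitespace-delimited "swap" field,
# leaving lines that already start with "#" untouched.
comment_out_swap() {
    local fstab="$1"
    sed -ri '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Usage on a real host (as root):
#   comment_out_swap /etc/fstab
```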

2.1.5 System Time Synchronization

```bash
yum -y install ntpdate
```

2.1.5.2 Schedule a Time-Sync Cron Job (runs once per hour)

```bash
crontab -e
# add the following entry in the editor:
0 */1 * * * ntpdate time1.aliyun.com
```

2.1.6 Host System Tuning

- limit tuning

```bash
ulimit -SHn 65535
```

```bash
# The hard limit must not be lower than the soft limit.
cat <<EOF >> /etc/security/limits.conf
* soft nofile 655360
* hard nofile 655360
* soft nproc 655350
* hard nproc 655350
* soft memlock unlimited
* hard memlock unlimited
EOF
```

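2.1.7 Install the ipvs Tools and Load the Modules

- Install on the cluster nodes; the load balancer nodes do not need this.

```bash
yum -y install ipvsadm ipset sysstat conntrack libseccomp
```

- Temporary loading: load the ipvs modules on all cluster nodes. In kernel 4.19 and later, nf_conntrack_ipv4 was renamed to nf_conntrack; on kernels 4.18 and earlier, use nf_conntrack_ipv4 instead:

```bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
```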
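The kernel-version rule above can be expressed as a small helper. This is a sketch under the rule as stated in this guide (4.19 and later use nf_conntrack, earlier kernels use nf_conntrack_ipv4); the function name `conntrack_module_for` is hypothetical.

```bash
#!/usr/bin/env bash
# conntrack_module_for RELEASE (hypothetical helper)
# Prints the conntrack module name for a kernel release string such as
# the output of `uname -r`, following the 4.19 cut-off described above.
conntrack_module_for() {
    local release="$1" major minor
    major="${release%%.*}"          # text before the first dot
    minor="${release#*.}"           # text after the first dot
    minor="${minor%%[!0-9]*}"       # keep only the leading digits
    if (( major > 4 || (major == 4 && minor >= 19) )); then
        echo nf_conntrack
    else
        echo nf_conntrack_ipv4
    fi
}

# Usage on a real host (as root):
#   modprobe -- "$(conntrack_module_for "$(uname -r)")"
conntrack_module_for 6.1.0
```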

Permanent loading: create /etc/modules-load.d/ipvs.conf with the following content:

```bash
cat >/etc/modules-load.d/ipvs.conf <<EOF
ip_vs
ip_vs_lc
ip_vs_wlc
ip_vs_rr
ip_vs_wrr
ip_vs_lblc
ip_vs_lblcr
ip_vs_dh
ip_vs_sh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
nf_conntrack
ip_tables
ip_set
xt_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip
EOF
```

2.1.8 Load the Kernel Modules Required by containerd

Note: run on the master and worker nodes.

2.1.8.1 Load the Modules Temporarily

```bash
modprobe overlay
modprobe br_netfilter
```

2.1.8.2 Load the Modules Permanently

```bash
cat > /etc/modules-load.d/containerd.conf << EOF
overlay
br_netfilter
EOF
```

2.1.8.3 Enable Loading at Boot

```bash
systemctl enable --now systemd-modules-load.service
```

2.1.9 Upgrade the Linux Kernel

```bash
yum -y install perl
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
yum -y install https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm
yum --enablerepo="elrepo-kernel" -y install kernel-ml.x86_64
grub2-set-default 0
grub2-mkconfig -o /boot/grub2/grub.cfg
```

2.1.10 Linux Kernel Tuning

```bash
cat <<EOF > /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
fs.may_detach_mounts = 1
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_watches=89100
fs.file-max=52706963
fs.nr_open=52706963
net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.ip_conntrack_max = 131072
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF
```

Apply the settings:

```bash
sysctl --system
```

Reboot the host:

```bash
reboot
```

After the reboot, check that the ipvs modules are loaded:

```bash
lsmod | grep --color=auto -e ip_vs -e nf_conntrack
```

```bash
lsmod | egrep 'br_netfilter'
lsmod | egrep 'overlay'
```

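2.1.11 Install Other Tools (optional)

Note: jq is not essential for this deployment; if it cannot be installed, nothing else is affected.

- Run on the master and worker nodes

```bash
yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git lrzsz -y
```

2.2 Load Balancer Preparation

2.2.1 Install haproxy and keepalived

```bash
yum -y install haproxy keepalived
```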

2.2.2 HAProxy Configuration

```bash
cat >/etc/haproxy/haproxy.cfg<<"EOF"
global
  maxconn 2000
  ulimit-n 16384
  log 127.0.0.1 local0 err
  stats timeout 30s

defaults
  log global
  mode http
  option httplog
  timeout connect 5000
  timeout client 50000
  timeout server 50000
  timeout http-request 15s
  timeout http-keep-alive 15s

frontend monitor-in
  bind *:33305
  mode http
  option httplog
  monitor-uri /monitor

frontend k8s-master
  bind 0.0.0.0:6443
  bind 127.0.0.1:6443
  mode tcp
  option tcplog
  tcp-request inspect-delay 5s
  default_backend k8s-master

backend k8s-master
  mode tcp
  option tcplog
  option tcp-check
  balance roundrobin
  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
  server k8s-master1 192.168.1.92:6443 check
  server k8s-master2 192.168.1.93:6443 check
  server k8s-master3 192.168.1.94:6443 check
EOF
```

2.2.3 Keepalived

ha1 (MASTER):

```bash
cat >/etc/keepalived/keepalived.conf<<"EOF"
! Configuration File for keepalived
global_defs {
   router_id LVS_DEVEL
   script_user root
   enable_script_security
}
vrrp_script chk_apiserver {
   script "/etc/keepalived/check_apiserver.sh"
   interval 5
   weight -5
   fall 2
   rise 1
}
vrrp_instance VI_1 {
   state MASTER
   interface ens32
   mcast_src_ip 192.168.1.111
   virtual_router_id 51
   priority 100
   advert_int 2
   authentication {
       auth_type PASS
       auth_pass K8SHA_KA_AUTH
   }
   virtual_ipaddress {
       192.168.1.100
   }
   track_script {
      chk_apiserver
   }
}
EOF
```

Note: ha2 is the BACKUP node. Its configuration differs only in state, mcast_src_ip, and priority:

```bash
cat >/etc/keepalived/keepalived.conf<<"EOF"
! Configuration File for keepalived
global_defs {
   router_id LVS_DEVEL
   script_user root
   enable_script_security
}
vrrp_script chk_apiserver {
   script "/etc/keepalived/check_apiserver.sh"
   interval 5
   weight -5
   fall 2
   rise 1
}
vrrp_instance VI_1 {
   state BACKUP
   interface ens32
   mcast_src_ip 192.168.1.112
   virtual_router_id 51
   priority 99
   advert_int 2
   authentication {
       auth_type PASS
       auth_pass K8SHA_KA_AUTH
   }
   virtual_ipaddress {
       192.168.1.100
   }
   track_script {
      chk_apiserver
   }
}
EOF
```

2.2.4 Health Check Script

```bash
cat > /etc/keepalived/check_apiserver.sh <<"EOF"
#!/bin/bash
# Stop keepalived (releasing the VIP) if haproxy is not running after 3 checks.
err=0
for k in $(seq 1 3)
do
    check_code=$(pgrep haproxy)
    if [[ $check_code == "" ]]; then
        err=$(expr $err + 1)
        sleep 1
        continue
    else
        err=0
        break
    fi
done

if [[ $err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi
EOF
```

Make the script executable:

```bash
chmod +x /etc/keepalived/check_apiserver.sh
```

2.2.5 Start the Services and Verify

```bash
systemctl daemon-reload
systemctl enable --now haproxy keepalived
systemctl status haproxy keepalived
```

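2.3 Configure Passwordless SSH Login

Note: run on k8s-master1.

2.3.1 Generate the Key Pair

```bash
ssh-keygen
```

Notes:

(/root/.ssh/id_rsa): # where the private key is saved

Enter passphrase: # passphrase that protects the private key

Enter same passphrase again: # passphrase confirmation

Press Enter at all three prompts.

2.3.2 Copy the Public Key to the Other Servers

```bash
ssh-copy-id root@k8s-master1
ssh-copy-id root@k8s-master2
ssh-copy-id root@k8s-master3
ssh-copy-id root@k8s-worker1
```

Type yes, then enter the password of the target server.

2.3.3 Test Passwordless Login

```bash
ssh root@k8s-master2
```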

2.4 Create the CA and etcd Certificates

2.4.1 Create the Working Directory

```bash
mkdir -p /data/k8s-work
```

2.4.2 Get the cfssl Tools

```bash
cd /data/k8s-work
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
```

Make the tools executable:

```bash
chmod +x cfssl*
```

Move the tools into a system directory:

```bash
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo
```

Verify:

```bash
cfssl version
Version: 1.2.0
Revision: dev
Runtime: go1.6
```

2.4.3 Create the CA Certificate

2.4.3.1 Write the CA Certificate Signing Request File

```bash
cat > ca-csr.json <<"EOF"
{
  "CN": "kubernetes",
  "key": {
      "algo": "rsa",
      "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "kubemsb",
      "OU": "CN"
    }
  ],
  "ca": {
          "expiry": "87600h"
  }
}
EOF
```

2.4.3.2 Create the CA Certificate

```bash
cfssl gencert -initca ca-csr.json | cfssljson -bare ca
```

2.4.3.3 Generate the CA Signing Policy

```bash
cfssl print-defaults config > ca-config.json
```

Note: in the generated default configuration, "expiry" is 8760h (one year); it can be changed to 87600h (ten years).
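As a quick check of the expiry values mentioned above, the arithmetic can be worked out in the shell. This is only an illustrative sketch; the helper name `expiry_days` is hypothetical and not part of cfssl.

```bash
#!/usr/bin/env bash
# expiry_days EXPIRY (hypothetical helper)
# Converts an hour-based expiry string such as "8760h" into whole days.
expiry_days() {
    local hours="${1%h}"     # strip the trailing "h"
    echo $(( hours / 24 ))
}

expiry_days 8760h    # 365 days, i.e. one year
expiry_days 87600h   # 3650 days, i.e. about ten years
```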

Alternatively, use the following configuration:

```bash
cat > ca-config.json <<"EOF"
{
  "signing": {
      "default": {
          "expiry": "87600h"
        },
      "profiles": {
          "kubernetes": {
              "usages": [
                  "signing",
                  "key encipherment",
                  "server auth",
                  "client auth"
              ],
              "expiry": "87600h"
          }
      }
  }
}
EOF
```

Notes:

- server auth: a client can use this CA to verify the certificate presented by a server

- client auth: a server can use this CA to verify the certificate presented by a client

2.4.4 Create the etcd Certificate

2.4.4.1 Write the etcd Certificate Signing Request File

Notes:

- etcd can be deployed on either the master nodes or the worker nodes of the cluster; either choice works.

- Replace the host IPs with those of your own hosts.

```bash
cat > etcd-csr.json <<"EOF"
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "192.168.1.92",
    "192.168.1.93",
    "192.168.1.94"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "ST": "Beijing",
    "L": "Beijing",
    "O": "kubemsb",
    "OU": "CN"
  }]
}
EOF
```

2.4.4.2 Generate the etcd Certificate

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
```

2.4.4.3 List the Generated etcd Certificate Files

```bash
[root@k8s-master1 k8s-work]# ls
ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem  etcd.csr  etcd-csr.json  etcd-key.pem  etcd.pem
```

2.4.5 部署etcd集群

2.4.5.1 下载etcd软件包

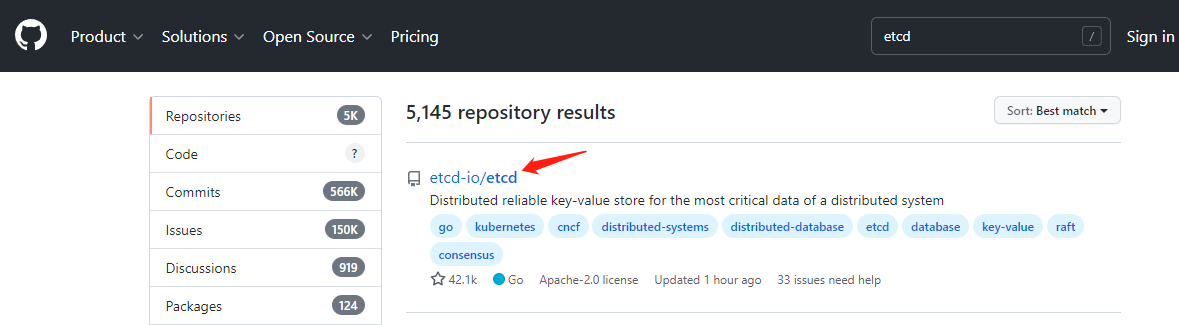

下载地址:https://github.com/

搜索框输入etcd,选择etcd-io/etcd

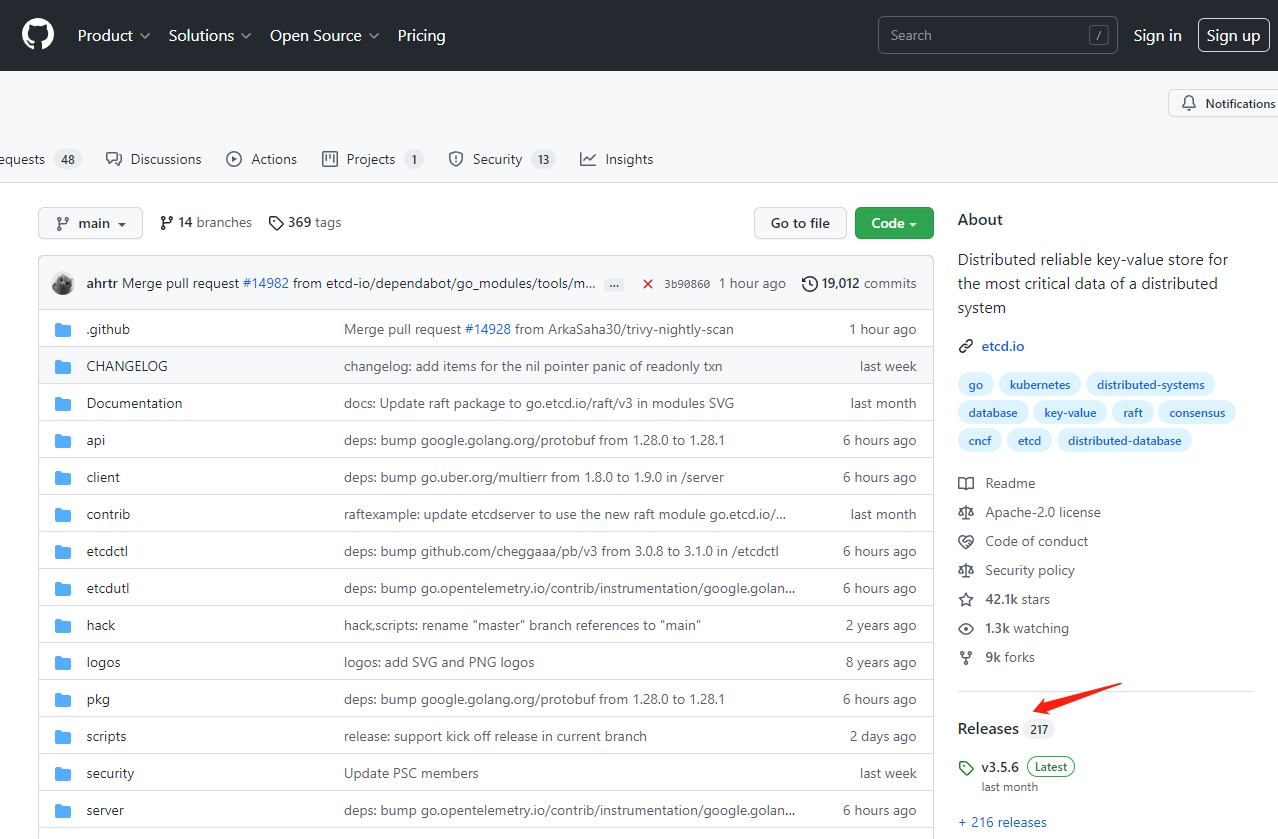

选择右侧releases

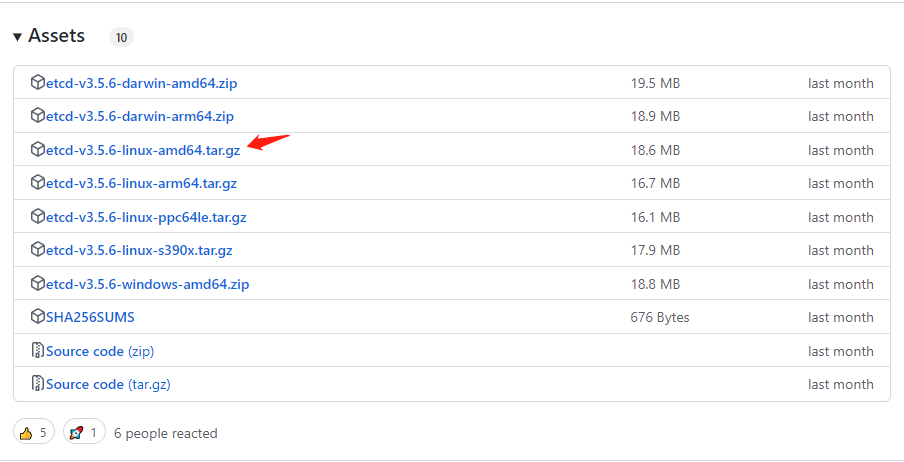

选择自己需要下载的安装包

下载安装包:

1 | wget https://github.com/etcd-io/etcd/releases/download/v3.5.6/etcd-v3.5.6-linux-amd64.tar.gz |

2.4.5.2 安装etcd软件

1 2 | tar xf etcd-v3.5.6-linux-amd64.tar.gzcp -p etcd-v3.5.6-linux-amd64/etcd* /usr/local/bin/ |

验证安装:

1 2 3 | [root@k8s-master1 k8s-work]# etcdctl versionetcdctl version: 3.5.6API version: 3.5 |

2.4.5.3 分发etcd软件

1 2 | scp etcd-v3.5.6-linux-amd64/etcd* k8s-master2:/usr/local/bin/scp etcd-v3.5.6-linux-amd64/etcd* k8s-master3:/usr/local/bin/ |

2.4.5.4 Create the Configuration File

Create the directory:

```bash
mkdir /etc/etcd
```

Create the configuration file:

```bash
cat > /etc/etcd/etcd.conf <<"EOF"
#[Member]
ETCD_NAME="etcd1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.1.92:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.1.92:2379,http://127.0.0.1:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.92:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.92:2379"
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.1.92:2380,etcd2=https://192.168.1.93:2380,etcd3=https://192.168.1.94:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
```

2.4.5.5 Create the Service Unit File

Create the directories:

```bash
mkdir -p /etc/etcd/ssl
mkdir -p /var/lib/etcd/default.etcd
```

Copy the certificates to the target directory:

```bash
cd /data/k8s-work
cp ca*.pem /etc/etcd/ssl
cp etcd*.pem /etc/etcd/ssl
```

Create the unit file:

```bash
cat > /etc/systemd/system/etcd.service <<"EOF"
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=-/etc/etcd/etcd.conf
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/local/bin/etcd \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-client-cert-auth \
  --client-cert-auth
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

2.4.5.6 Sync the etcd Configuration to the Other Master Nodes

On k8s-master2 and k8s-master3, create the directories:

```bash
mkdir -p /etc/etcd
mkdir -p /etc/etcd/ssl
mkdir -p /var/lib/etcd/default.etcd
```

Copy the configuration file from k8s-master1; the etcd node name and IP addresses must then be changed on each node:

```bash
for i in k8s-master2 k8s-master3
do
  scp /etc/etcd/etcd.conf $i:/etc/etcd/
done
```

k8s-master2:

```bash
cat > /etc/etcd/etcd.conf <<"EOF"
#[Member]
ETCD_NAME="etcd2"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.1.93:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.1.93:2379,http://127.0.0.1:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.93:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.93:2379"
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.1.92:2380,etcd2=https://192.168.1.93:2380,etcd3=https://192.168.1.94:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
```

k8s-master3:

```bash
cat > /etc/etcd/etcd.conf <<"EOF"
#[Member]
ETCD_NAME="etcd3"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.1.94:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.1.94:2379,http://127.0.0.1:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.94:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.94:2379"
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.1.92:2380,etcd2=https://192.168.1.93:2380,etcd3=https://192.168.1.94:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
```
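The three etcd.conf files differ only in the member name and the node's own IP. As a sketch of how they could be generated from one template rather than edited by hand, the hypothetical function `render_etcd_conf` below prints the file for a given name and IP; the member list is the one used in this guide.

```bash
#!/usr/bin/env bash
# render_etcd_conf NAME IP (hypothetical helper)
# Prints an etcd.conf for one member; only NAME and IP vary between nodes.
render_etcd_conf() {
    local name="$1" ip="$2"
    local cluster="etcd1=https://192.168.1.92:2380,etcd2=https://192.168.1.93:2380,etcd3=https://192.168.1.94:2380"
    cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,http://127.0.0.1:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Usage from k8s-master1, relying on the passwordless SSH set up in 2.3:
#   render_etcd_conf etcd2 192.168.1.93 | ssh k8s-master2 'cat > /etc/etcd/etcd.conf'
render_etcd_conf etcd2 192.168.1.93
```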

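Sync the certificate files:

```bash
for i in k8s-master2 k8s-master3 ;do scp /etc/etcd/ssl/* $i:/etc/etcd/ssl ;done
```

Sync the service unit file:

```bash
for i in k8s-master2 k8s-master3 ;do scp /etc/systemd/system/etcd.service $i:/etc/systemd/system/ ;done
```

2.4.5.7 Start the etcd Cluster

Note: a single member may fail to start on its own. If it reports an error, finish configuring the other etcd nodes first, then run the start commands below on all of them at the same time.

```bash
systemctl daemon-reload
systemctl enable --now etcd.service
systemctl status etcd
```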

2.4.5.8 Verify the Cluster State

Check the health of the etcd cluster:

```bash
ETCDCTL_API=3 /usr/local/bin/etcdctl \
--write-out=table \
--cacert=/etc/etcd/ssl/ca.pem \
--cert=/etc/etcd/ssl/etcd.pem \
--key=/etc/etcd/ssl/etcd-key.pem \
--endpoints=https://192.168.1.92:2379,https://192.168.1.93:2379,https://192.168.1.94:2379 endpoint health
```

Result:

```
+---------------------------+--------+-------------+-------+
|         ENDPOINT          | HEALTH |    TOOK     | ERROR |
+---------------------------+--------+-------------+-------+
| https://192.168.1.92:2379 |   true | 11.109782ms |       |
| https://192.168.1.93:2379 |   true | 14.648997ms |       |
| https://192.168.1.94:2379 |   true | 16.783152ms |       |
+---------------------------+--------+-------------+-------+
```

Check the performance of the etcd database:

```bash
ETCDCTL_API=3 /usr/local/bin/etcdctl \
--write-out=table \
--cacert=/etc/etcd/ssl/ca.pem \
--cert=/etc/etcd/ssl/etcd.pem \
--key=/etc/etcd/ssl/etcd-key.pem \
--endpoints=https://192.168.1.92:2379,https://192.168.1.93:2379,https://192.168.1.94:2379 check perf
```

Result:

```
59 / 60 Booooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooooom ! 98.33%
PASS: Throughput is 150 writes/s
PASS: Slowest request took 0.055096s
PASS: Stddev is 0.001185s
PASS
```

List the members of the etcd cluster:

```bash
ETCDCTL_API=3 /usr/local/bin/etcdctl \
--write-out=table \
--cacert=/etc/etcd/ssl/ca.pem \
--cert=/etc/etcd/ssl/etcd.pem \
--key=/etc/etcd/ssl/etcd-key.pem \
--endpoints=https://192.168.1.92:2379,https://192.168.1.93:2379,https://192.168.1.94:2379 member list
```

Result:

```
+------------------+---------+-------+---------------------------+---------------------------+------------+
|        ID        | STATUS  | NAME  |        PEER ADDRS         |       CLIENT ADDRS        | IS LEARNER |
+------------------+---------+-------+---------------------------+---------------------------+------------+
| af05139f75a68867 | started | etcd1 | https://192.168.1.92:2380 | https://192.168.1.92:2379 |      false |
| c3bab7c20fba3f60 | started | etcd2 | https://192.168.1.93:2380 | https://192.168.1.93:2379 |      false |
| fbba12d5c6ebb577 | started | etcd3 | https://192.168.1.94:2380 | https://192.168.1.94:2379 |      false |
+------------------+---------+-------+---------------------------+---------------------------+------------+
```

Check the status of each etcd endpoint (this shows which member is the cluster leader):

```bash
ETCDCTL_API=3 /usr/local/bin/etcdctl \
--write-out=table \
--cacert=/etc/etcd/ssl/ca.pem \
--cert=/etc/etcd/ssl/etcd.pem \
--key=/etc/etcd/ssl/etcd-key.pem \
--endpoints=https://192.168.1.92:2379,https://192.168.1.93:2379,https://192.168.1.94:2379 endpoint status
```

Result:

```
+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
|         ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| https://192.168.1.92:2379 | af05139f75a68867 |   3.5.6 |   22 MB |     false |      false |         3 |       8982 |               8982 |        |
| https://192.168.1.93:2379 | c3bab7c20fba3f60 |   3.5.6 |   22 MB |      true |      false |         3 |       8982 |               8982 |        |
| https://192.168.1.94:2379 | fbba12d5c6ebb577 |   3.5.6 |   22 MB |     false |      false |         3 |       8982 |               8982 |        |
+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
```

2.5 Kubernetes Cluster Deployment

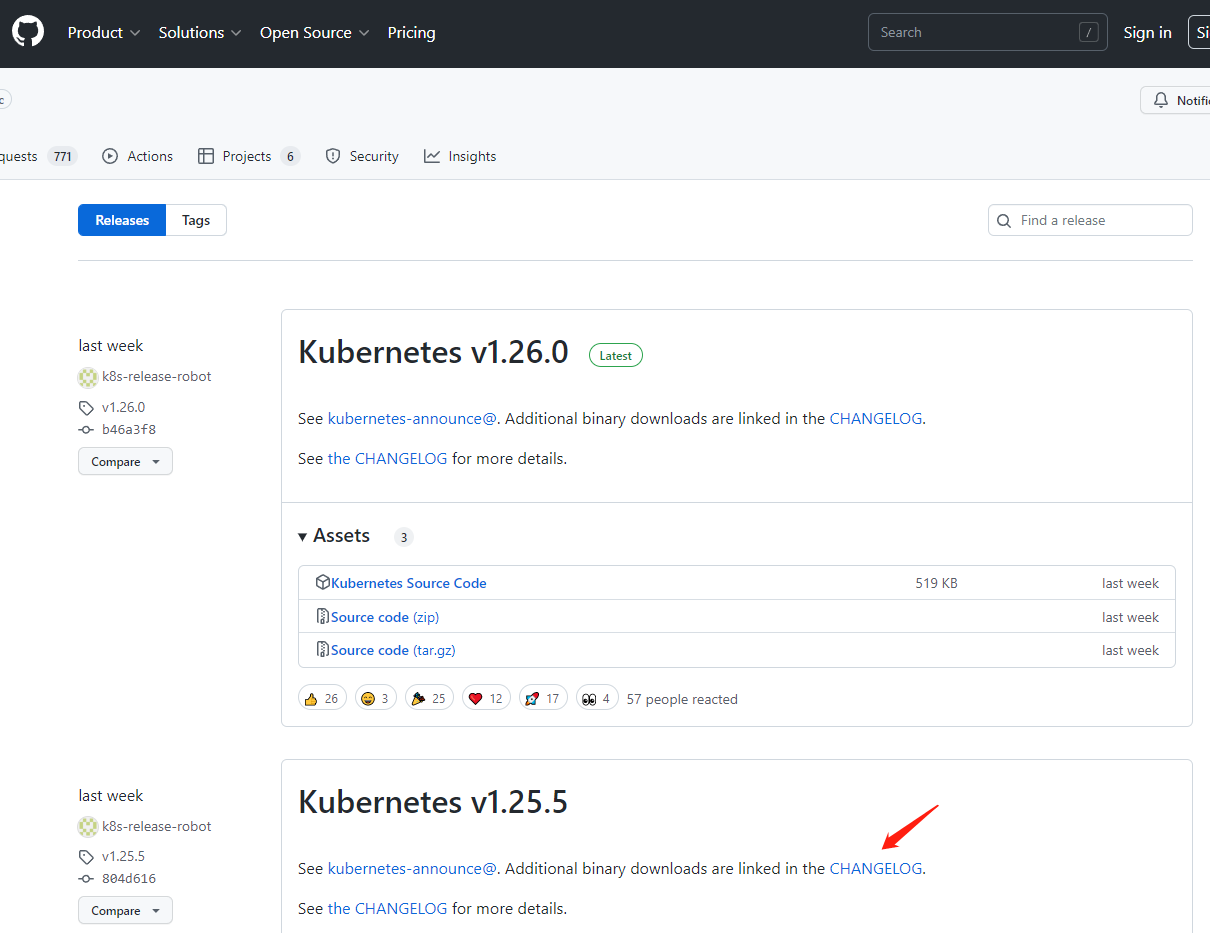

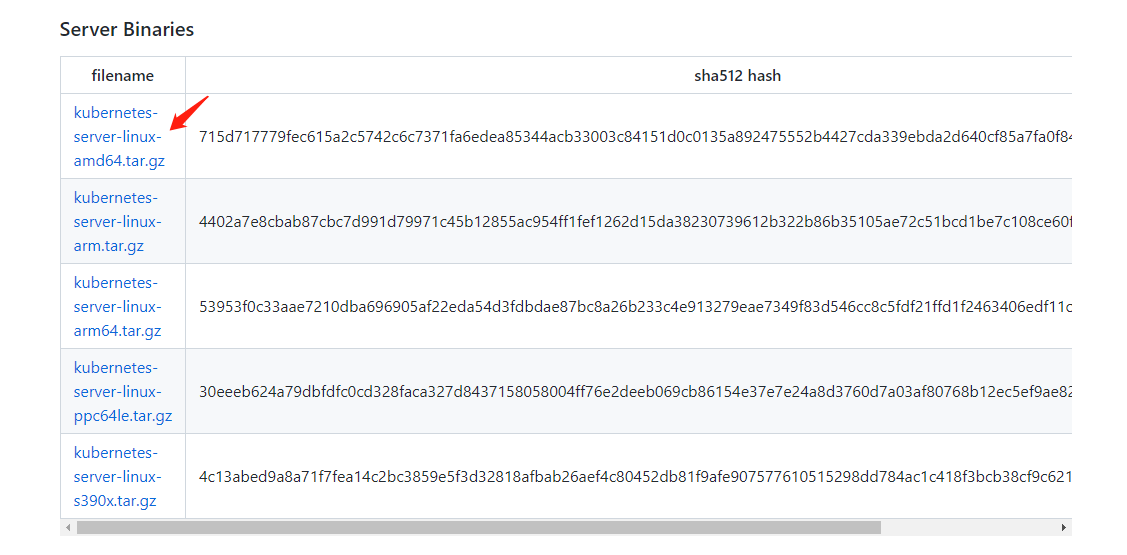

2.5.1 Download the Kubernetes Package

Download the package (v1.25.5 is used here):

```bash
wget https://dl.k8s.io/v1.25.5/kubernetes-server-linux-amd64.tar.gz
```

2.5.2 Install the Kubernetes Binaries

```bash
tar -xvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin/

cp kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/
```

2.5.3 Distribute the Kubernetes Binaries

```bash
scp kube-apiserver kube-controller-manager kube-scheduler kubectl k8s-master2:/usr/local/bin/
scp kube-apiserver kube-controller-manager kube-scheduler kubectl k8s-master3:/usr/local/bin/
```

Copy the following binaries to the nodes that need them:

```bash
scp kubelet kube-proxy k8s-master1:/usr/local/bin
scp kubelet kube-proxy k8s-master2:/usr/local/bin
scp kubelet kube-proxy k8s-master3:/usr/local/bin
scp kubelet kube-proxy k8s-worker1:/usr/local/bin
```

2.5.4 Create Directories on the Cluster Nodes

```bash
mkdir -p /etc/kubernetes/
mkdir -p /etc/kubernetes/ssl
mkdir -p /var/log/kubernetes
```

2.5.5 Deploy kube-apiserver

2.5.5.1 Write the apiserver Certificate Signing Request File

Note: run on master1.

```bash
cat > kube-apiserver-csr.json << "EOF"
{
"CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "192.168.1.92",
    "192.168.1.93",
    "192.168.1.94",
    "192.168.1.95",
    "192.168.1.96",
    "192.168.1.97",
    "192.168.1.98",
    "192.168.1.99",
    "192.168.1.100",
    "10.96.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "kubemsb",
      "OU": "CN"
    }
  ]
}
EOF
```

2.5.5.2 Generate the apiserver Certificate and the Token File

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver
```

Create the token required by TLS bootstrapping (the mechanism that automatically issues client certificates):

```bash
cat > token.csv << EOF
$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF
```
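Each line of the static token file has the form token,user,uid,"group". The sketch below generates one such line and can be used to sanity-check the format before copying the file to the masters; the function name `new_bootstrap_token_line` is hypothetical, and it reads bytes with `od -t x1` so the hex string does not depend on host byte order.

```bash
#!/usr/bin/env bash
# new_bootstrap_token_line (hypothetical helper)
# Prints one token.csv line: a random 32-hex-character token, followed by
# the user name, UID, and group used in this guide.
new_bootstrap_token_line() {
    local token
    token="$(head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n')"
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}

# Usage: new_bootstrap_token_line > token.csv
new_bootstrap_token_line
```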

2.5.5.3 Create the apiserver Configuration File

```bash
cat > /etc/kubernetes/kube-apiserver.conf << "EOF"
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
  --anonymous-auth=false \
  --bind-address=192.168.1.92 \
  --secure-port=6443 \
  --advertise-address=192.168.1.92 \
  --authorization-mode=Node,RBAC \
  --runtime-config=api/all=true \
  --enable-bootstrap-token-auth \
  --service-cluster-ip-range=10.96.0.0/16 \
  --token-auth-file=/etc/kubernetes/token.csv \
  --service-node-port-range=30000-32767 \
  --tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \
  --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \
  --kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-issuer=api \
  --etcd-cafile=/etc/etcd/ssl/ca.pem \
  --etcd-certfile=/etc/etcd/ssl/etcd.pem \
  --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
  --etcd-servers=https://192.168.1.92:2379,https://192.168.1.93:2379,https://192.168.1.94:2379 \
  --allow-privileged=true \
  --apiserver-count=3 \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/log/kube-apiserver-audit.log \
  --event-ttl=1h \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=4"
EOF
```

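Notes:

--bind-address: the address to listen on

--secure-port: the HTTPS port

--advertise-address: the address advertised to the rest of the cluster

--authorization-mode: authorization modes; enables RBAC authorization and the Node authorizer

--allow-privileged: allows privileged containers

--service-cluster-ip-range: the virtual IP range for Services

--enable-bootstrap-token-auth: enables the TLS bootstrap mechanism

--token-auth-file: the bootstrap token file

--service-node-port-range: the default port range allocated to NodePort Services

--kubelet-https: the apiserver uses HTTPS by default when it connects to the kubelet (this flag does not appear in the configuration above)

--kubelet-client-xxx: the client certificate the apiserver uses to access the kubelet

--tls-xxx-file: the apiserver HTTPS certificate

--etcd-xxxfile: the certificates for connecting to the etcd cluster

--etcd-servers: the etcd cluster endpoints

--audit-log-xxx: audit log settings

--logtostderr: log to stderr instead of files (set to false here so that logs are written under --log-dir)

--log-dir: the log directory

--v: log verbosity; the higher the value, the more detailed the logs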

2.5.5.4 Create the apiserver Service Unit File

Notes:

- After= specifies which unit must be started before this one

- Requires= and Wants= declare which unit this one depends on (the unit file below uses Wants=, the weaker form)

```bash
cat > /etc/systemd/system/kube-apiserver.service << "EOF"
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=etcd.service
Wants=etcd.service

[Service]
EnvironmentFile=-/etc/kubernetes/kube-apiserver.conf
ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

Note: the After= and Wants= lines together mean that etcd is started before kube-apiserver.

2.5.5.5 Sync the Files to the Master Nodes

Change to the working directory:

```bash
cd /data/k8s-work
```

Copy the CA certificates to the target directory on master1:

```bash
cp ca*.pem /etc/kubernetes/ssl/
```

Copy the kube-apiserver certificates to the target directory on master1:

```bash
cp kube-apiserver*.pem /etc/kubernetes/ssl/
```

Copy token.csv to the target directory on master1:

```bash
cp token.csv /etc/kubernetes/
```

Sync token.csv to the other masters:

```bash
scp /etc/kubernetes/token.csv k8s-master2:/etc/kubernetes
scp /etc/kubernetes/token.csv k8s-master3:/etc/kubernetes
```

Sync the kube-apiserver certificates to the other masters:

```bash
scp /etc/kubernetes/ssl/kube-apiserver*.pem k8s-master2:/etc/kubernetes/ssl
scp /etc/kubernetes/ssl/kube-apiserver*.pem k8s-master3:/etc/kubernetes/ssl
```

Sync the CA certificates to the other masters:

```bash
scp /etc/kubernetes/ssl/ca*.pem k8s-master2:/etc/kubernetes/ssl
scp /etc/kubernetes/ssl/ca*.pem k8s-master3:/etc/kubernetes/ssl
```

Sync the kube-apiserver configuration file to master2 (the host IP must then be changed):

```bash
scp /etc/kubernetes/kube-apiserver.conf k8s-master2:/etc/kubernetes/kube-apiserver.conf
```

Alternatively, run the following directly on k8s-master2:

```bash
cat > /etc/kubernetes/kube-apiserver.conf << "EOF"
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
  --anonymous-auth=false \
  --bind-address=192.168.1.93 \
  --secure-port=6443 \
  --advertise-address=192.168.1.93 \
  --authorization-mode=Node,RBAC \
  --runtime-config=api/all=true \
  --enable-bootstrap-token-auth \
  --service-cluster-ip-range=10.96.0.0/16 \
  --token-auth-file=/etc/kubernetes/token.csv \
  --service-node-port-range=30000-32767 \
  --tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \
  --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \
  --kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-issuer=api \
  --etcd-cafile=/etc/etcd/ssl/ca.pem \
  --etcd-certfile=/etc/etcd/ssl/etcd.pem \
  --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
  --etcd-servers=https://192.168.1.92:2379,https://192.168.1.93:2379,https://192.168.1.94:2379 \
  --allow-privileged=true \
  --apiserver-count=3 \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/log/kube-apiserver-audit.log \
  --event-ttl=1h \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=4"
EOF
```

Sync the kube-apiserver configuration file to master3 (the host IP must then be changed):

```bash
scp /etc/kubernetes/kube-apiserver.conf k8s-master3:/etc/kubernetes/kube-apiserver.conf
```

Alternatively, run the following directly on k8s-master3:

```bash
cat > /etc/kubernetes/kube-apiserver.conf << "EOF"
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
  --anonymous-auth=false \
  --bind-address=192.168.1.94 \
  --secure-port=6443 \
  --advertise-address=192.168.1.94 \
  --authorization-mode=Node,RBAC \
  --runtime-config=api/all=true \
  --enable-bootstrap-token-auth \
  --service-cluster-ip-range=10.96.0.0/16 \
  --token-auth-file=/etc/kubernetes/token.csv \
  --service-node-port-range=30000-32767 \
  --tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \
  --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \
  --kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-issuer=api \
  --etcd-cafile=/etc/etcd/ssl/ca.pem \
  --etcd-certfile=/etc/etcd/ssl/etcd.pem \
  --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
  --etcd-servers=https://192.168.1.92:2379,https://192.168.1.93:2379,https://192.168.1.94:2379 \
  --allow-privileged=true \
  --apiserver-count=3 \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/log/kube-apiserver-audit.log \
  --event-ttl=1h \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=4"
EOF
```
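The per-master files differ only in --bind-address and --advertise-address. As a sketch of the "copy, then change the host IP" route, the hypothetical filter `retarget_apiserver_conf` below rewrites just those two flags and leaves every other occurrence of an IP (for example in --etcd-servers) untouched.

```bash
#!/usr/bin/env bash
# retarget_apiserver_conf NEW_IP (hypothetical helper)
# Reads a kube-apiserver.conf on stdin and writes it to stdout with the
# --bind-address and --advertise-address values replaced by NEW_IP.
retarget_apiserver_conf() {
    local new_ip="$1"
    sed -E "s/(--(bind|advertise)-address=)[0-9.]+/\1${new_ip}/"
}

# Usage from k8s-master1, relying on the passwordless SSH set up in 2.3:
#   retarget_apiserver_conf 192.168.1.93 < /etc/kubernetes/kube-apiserver.conf \
#     | ssh k8s-master2 'cat > /etc/kubernetes/kube-apiserver.conf'
```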

Sync the service unit file to the other master nodes:

```bash
scp /etc/systemd/system/kube-apiserver.service k8s-master2:/etc/systemd/system/kube-apiserver.service

scp /etc/systemd/system/kube-apiserver.service k8s-master3:/etc/systemd/system/kube-apiserver.service
```

2.5.5.6 Start the apiserver Service

```bash
systemctl daemon-reload
systemctl enable --now kube-apiserver
systemctl status kube-apiserver
```

2.5.5.7 Test

```bash
curl --insecure https://192.168.1.92:6443/
curl --insecure https://192.168.1.93:6443/
curl --insecure https://192.168.1.94:6443/
curl --insecure https://192.168.1.100:6443/
```

Note: these requests carry no credentials, so every one of them returns 401.

2.5.6 Deploy kubectl

2.5.6.1 Write the kubectl Certificate Signing Request File

```bash
cat > admin-csr.json << "EOF"
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:masters",
      "OU": "system"
    }
  ]
}
EOF
```

2.5.6.2 Generate the Certificate Files

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
```

2.5.6.3 Copy the Files to the Target Directory

```bash
cd /data/k8s-work
cp admin*.pem /etc/kubernetes/ssl/
```

2.5.6.4 Generate the kubeconfig File

Note: kube.config is the configuration file for kubectl. It contains everything needed to access the apiserver, such as the apiserver address, the CA certificate, and the client certificate kubectl itself uses.

```bash
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.1.100:6443 --kubeconfig=kube.config

kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kube.config

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config

kubectl config use-context kubernetes --kubeconfig=kube.config
```

Notes:

Step 1: define the cluster that kubectl manages, with its CA certificate and the URL used to reach it.

Step 2: define the credentials, that is, whose certificate (the admin user's) is used to manage the cluster.

Step 3: define a context that ties the cluster and the user together.

Step 4: switch to that context so it is used to manage the cluster.

2.5.6.5 Install the kubectl Configuration File and Create the Role Binding

```bash
mkdir ~/.kube
cp kube.config ~/.kube/config
kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes --kubeconfig=/root/.kube/config
```

2.5.6.6 Check the Cluster State

```bash
export KUBECONFIG=$HOME/.kube/config
```

View the cluster information:

```bash
kubectl cluster-info
```

View the status of the cluster components (this can be re-run after each component deployed later):

```bash
kubectl get componentstatuses
```

View the resource objects in all namespaces:

```bash
kubectl get all --all-namespaces
```

2.5.6.7 Sync the kubectl Configuration File to the Other Master Nodes

On k8s-master2 and k8s-master3, create the directory:

```bash
mkdir /root/.kube
```

Then sync from master1:

```bash
scp /root/.kube/config k8s-master2:/root/.kube/config
scp /root/.kube/config k8s-master3:/root/.kube/config
```

2.5.6.8 Configure kubectl Command Completion (optional)

Note: relying on completion for a long time makes it easy to forget the commands themselves, so installing it is not recommended.

```bash
yum install -y bash-completion
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
kubectl completion bash > ~/.kube/completion.bash.inc
source '/root/.kube/completion.bash.inc'
source $HOME/.bash_profile
```

2.5.7 Deploy kube-controller-manager

2.5.7.1 Write the kube-controller-manager Certificate Signing Request File

```bash
cat > kube-controller-manager-csr.json << "EOF"
{
    "CN": "system:kube-controller-manager",
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "hosts": [
      "127.0.0.1",
      "192.168.1.92",
      "192.168.1.93",
      "192.168.1.94"
    ],
    "names": [
      {
        "C": "CN",
        "ST": "Beijing",
        "L": "Beijing",
        "O": "system:kube-controller-manager",
        "OU": "system"
      }
    ]
}
EOF
```

2.5.7.2 Generate the kube-controller-manager Certificate Files

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
```

List the generated certificate files:

```bash
cd /data/k8s-work/
ls kube-controller-manager*
# kube-controller-manager.csr
# kube-controller-manager-csr.json
# kube-controller-manager-key.pem
# kube-controller-manager.pem
```

2.5.7.3 Create kube-controller-manager.kubeconfig for kube-controller-manager

```bash
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.1.100:6443 --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig
```

2.5.7.4 Create the kube-controller-manager Configuration File

Note: --bind-address can be set to the host IP or to 127.0.0.1. With 127.0.0.1 the same file works on every master; with the host IP the file has to be edited on each host.

```bash
cat > kube-controller-manager.conf << "EOF"
KUBE_CONTROLLER_MANAGER_OPTS=" \
  --secure-port=10257 \
  --bind-address=127.0.0.1 \
  --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
  --service-cluster-ip-range=10.96.0.0/16 \
  --cluster-name=kubernetes \
  --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --allocate-node-cidrs=true \
  --cluster-cidr=10.244.0.0/16 \
  --cluster-signing-duration=87600h \
  --root-ca-file=/etc/kubernetes/ssl/ca.pem \
  --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --leader-elect=true \
  --feature-gates=RotateKubeletServerCertificate=true \
  --controllers=*,bootstrapsigner,tokencleaner \
  --horizontal-pod-autoscaler-sync-period=10s \
  --tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \
  --use-service-account-credentials=true \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2"
EOF
```

2.5.7.5 Create the service unit file

```shell
cat > kube-controller-manager.service << "EOF"
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/kube-controller-manager.conf
ExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

2.5.7.6 Sync files to the cluster master nodes

Copy the files into place on the local host (master1):

```shell
cp kube-controller-manager*.pem /etc/kubernetes/ssl/
cp kube-controller-manager.kubeconfig /etc/kubernetes/
cp kube-controller-manager.conf /etc/kubernetes/
cp kube-controller-manager.service /usr/lib/systemd/system/
```

Sync the files to the other master nodes:

```shell
scp kube-controller-manager*.pem k8s-master2:/etc/kubernetes/ssl/
scp kube-controller-manager*.pem k8s-master3:/etc/kubernetes/ssl/
scp kube-controller-manager.kubeconfig kube-controller-manager.conf k8s-master2:/etc/kubernetes/
scp kube-controller-manager.kubeconfig kube-controller-manager.conf k8s-master3:/etc/kubernetes/
scp kube-controller-manager.service k8s-master2:/usr/lib/systemd/system/
scp kube-controller-manager.service k8s-master3:/usr/lib/systemd/system/
```
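The same copy-to-each-master pattern comes up again for kube-scheduler. As a rough sketch only, it could be wrapped in a small helper. The function name sync_to_masters and the DRY_RUN switch are hypothetical and not part of the original steps; the host names are the ones from the host plan.

```shell
# Hypothetical helper (not from the original guide): copy one or more files
# to the same destination directory on the other two master nodes.
# Set DRY_RUN=1 to print the scp commands instead of running them.
sync_to_masters() {
  local dest="$1"; shift
  local host
  for host in k8s-master2 k8s-master3; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp $* ${host}:${dest}"
    else
      scp "$@" "${host}:${dest}"
    fi
  done
}
```

For example, `sync_to_masters /etc/kubernetes/ kube-controller-manager.kubeconfig kube-controller-manager.conf` would stand in for the third and fourth scp lines above.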

Inspect the certificate:

```shell
openssl x509 -in /etc/kubernetes/ssl/kube-controller-manager.pem -noout -text
```
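The full -text dump is long. When only the identity and validity period matter, a shorter view may be enough. A minimal sketch, assuming openssl is installed; the function name cert_summary is hypothetical:

```shell
# Hypothetical helper: print only the subject, issuer and expiry date
# of a PEM certificate instead of the full -text dump.
cert_summary() {
  openssl x509 -in "$1" -noout -subject -issuer -enddate
}
```

Usage: `cert_summary /etc/kubernetes/ssl/kube-controller-manager.pem`. The notAfter line shows when the certificate expires.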

2.5.7.7 Start the service

Run on each master node:

```shell
systemctl daemon-reload
systemctl enable --now kube-controller-manager
systemctl status kube-controller-manager
```

2.5.7.8 Check cluster component status (controller-manager)

```
[root@k8s-master1 k8s-work]# kubectl get componentstatuses
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS      MESSAGE                                                                                        ERROR
scheduler            Unhealthy   Get "https://127.0.0.1:10259/healthz": dial tcp 127.0.0.1:10259: connect: connection refused
controller-manager   Healthy     ok
etcd-1               Healthy     {"health":"true","reason":""}
etcd-2               Healthy     {"health":"true","reason":""}
etcd-0               Healthy     {"health":"true","reason":""}
```
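In the output above, the scheduler is reported as Unhealthy only because kube-scheduler has not been deployed yet; nothing is listening on port 10259 at this point. To pick out just the components that are not healthy, the table can be filtered. A minimal sketch; the function name unhealthy_components is hypothetical:

```shell
# Hypothetical helper: read `kubectl get componentstatuses` output on stdin
# and print the name of every component whose STATUS is not "Healthy".
# The header row and any "Warning:" line are skipped.
unhealthy_components() {
  awk '$1 != "NAME" && $1 != "Warning:" && NF >= 2 && $2 != "Healthy" { print $1 }'
}
```

Usage: `kubectl get componentstatuses 2>/dev/null | unhealthy_components`. At this stage it should print only scheduler.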

2.5.8 Deploy kube-scheduler

2.5.8.1 Create the kube-scheduler certificate signing request file

```shell
cat > kube-scheduler-csr.json << "EOF"
{
  "CN": "system:kube-scheduler",
  "hosts": [
    "127.0.0.1",
    "192.168.1.92",
    "192.168.1.93",
    "192.168.1.94"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:kube-scheduler",
      "OU": "system"
    }
  ]
}
EOF
```

2.5.8.2 Generate the kube-scheduler certificate

```shell
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler
```

Check the generated certificate files:

```
cd /data/k8s-work/
# ls
kube-scheduler.csr
kube-scheduler-csr.json
kube-scheduler-key.pem
kube-scheduler.pem
```

2.5.8.3 Create the kube-scheduler kubeconfig

```shell
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.1.100:6443 --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig
```

2.5.8.4 Create the kube-scheduler service configuration file

```shell
cat > kube-scheduler.conf << "EOF"
KUBE_SCHEDULER_OPTS=" \
--bind-address=127.0.0.1 \
--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \
--leader-elect=true \
--alsologtostderr=true \
--logtostderr=false \
--log-dir=/var/log/kubernetes \
--v=2"
EOF
```

2.5.8.5 Create the service unit file

```shell
cat > kube-scheduler.service << "EOF"
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/kube-scheduler.conf
ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

2.5.8.6 Sync files to the cluster master nodes

Copy the files into place on the local host (master1):

```shell
cp kube-scheduler*.pem /etc/kubernetes/ssl/
cp kube-scheduler.kubeconfig /etc/kubernetes/
cp kube-scheduler.conf /etc/kubernetes/
cp kube-scheduler.service /usr/lib/systemd/system/
```

Sync the files to the other master nodes:

```shell
scp kube-scheduler*.pem k8s-master2:/etc/kubernetes/ssl/
scp kube-scheduler*.pem k8s-master3:/etc/kubernetes/ssl/
scp kube-scheduler.kubeconfig kube-scheduler.conf k8s-master2:/etc/kubernetes/
scp kube-scheduler.kubeconfig kube-scheduler.conf k8s-master3:/etc/kubernetes/
scp kube-scheduler.service k8s-master2:/usr/lib/systemd/system/
scp kube-scheduler.service k8s-master3:/usr/lib/systemd/system/
```

2.5.8.7 Start the service

Run on each master node:

```shell
systemctl daemon-reload
systemctl enable --now kube-scheduler
systemctl status kube-scheduler
```

2.5.8.8 Check cluster component status (scheduler)

```
[root@k8s-master1 k8s-work]# kubectl get componentstatuses
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE                         ERROR
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true","reason":""}
etcd-1               Healthy   {"health":"true","reason":""}
scheduler            Healthy   ok
etcd-2               Healthy   {"health":"true","reason":""}
```

2.5.9 Worker node deployment

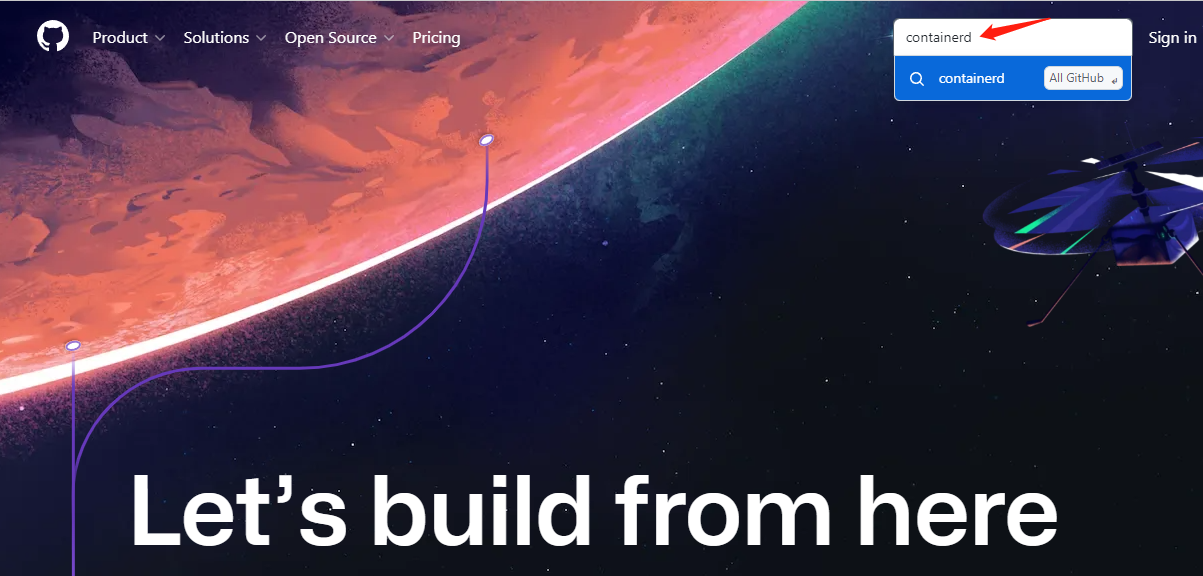

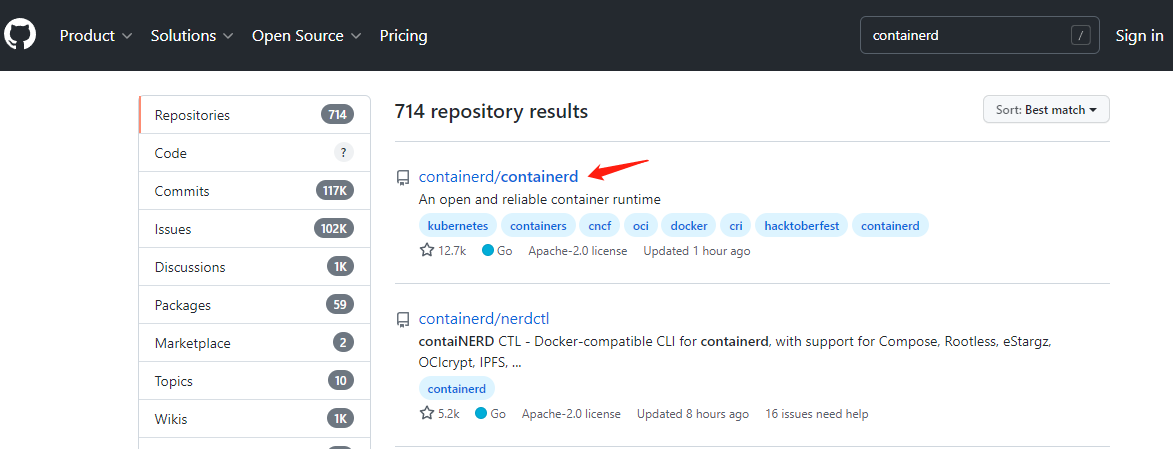

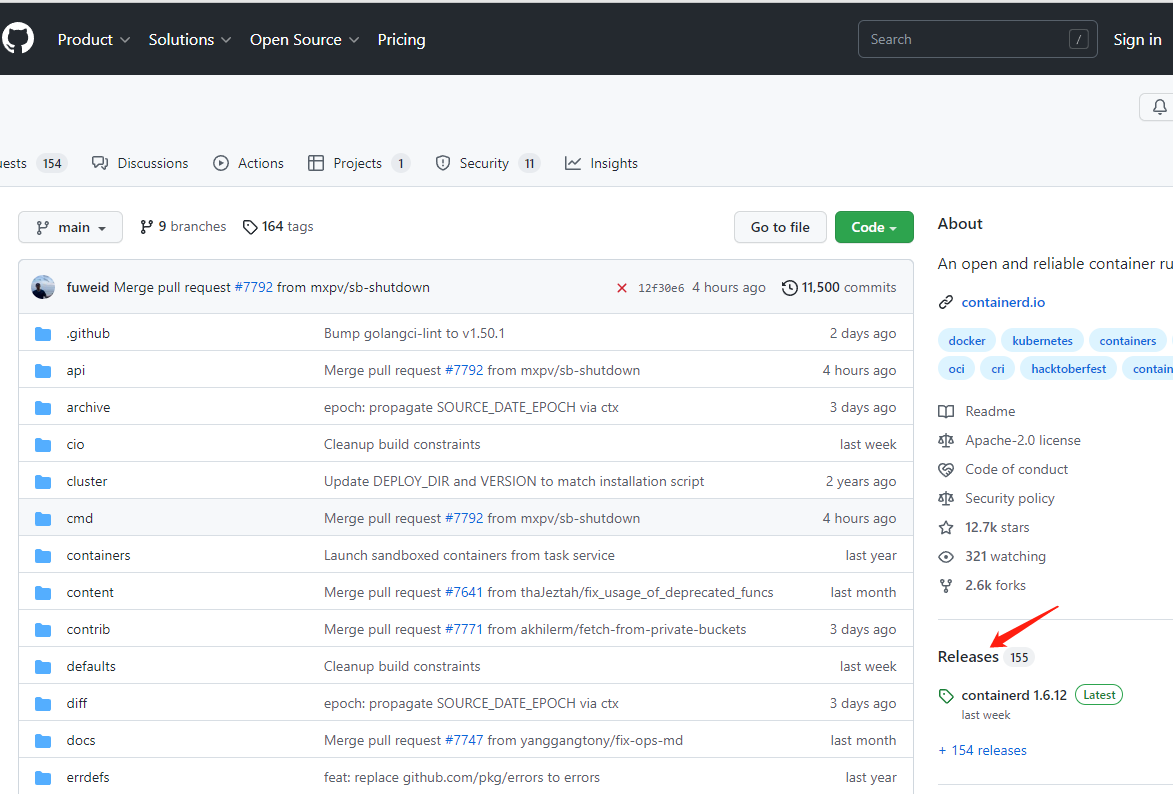

2.5.9.1 Install and configure containerd

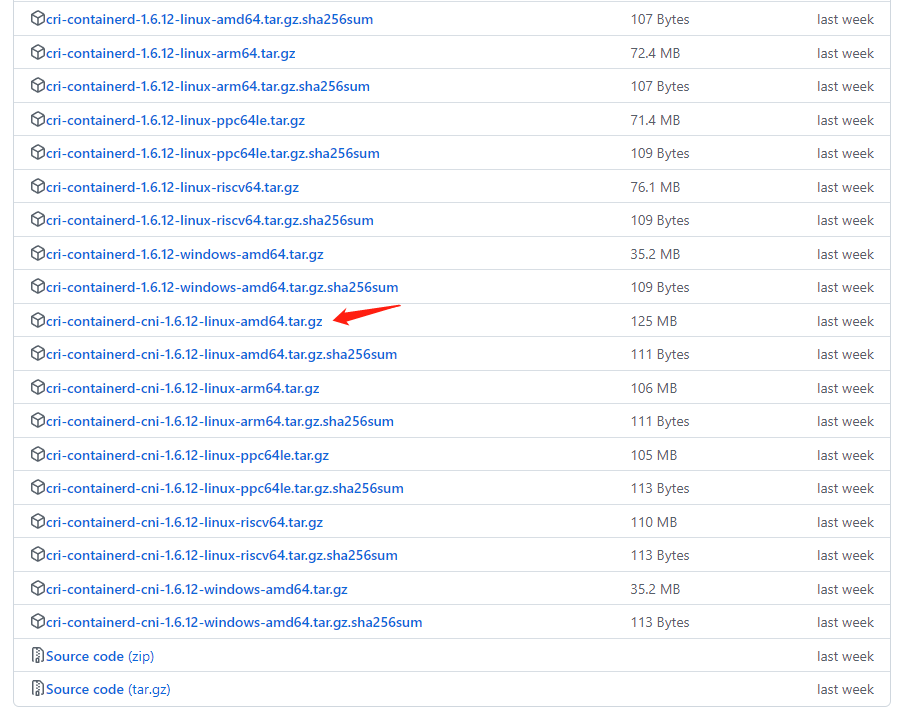

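2.5.9.1.1 Get the package

Download: https://github.com/

Choose the cri-containerd-cni-1.6.12-linux-amd64.tar.gz package. This bundle includes the container runtime together with the CNI network plugins.

2.5.9.1.2 Install containerd

Note: in this guide containerd is installed on all master and worker nodes (the masters also run Pods). Install it where your own setup needs it.

(1) Download the package:

```shell
wget https://github.com/containerd/containerd/releases/download/v1.6.12/cri-containerd-cni-1.6.12-linux-amd64.tar.gz
```

The package may fail to download, so I have also put it on Baidu Netdisk:

Link: https://pan.baidu.com/s/1Ma28Aanwx5nuhGvqptUsWQ?pwd=ixok

Extraction code: ixok

(2) Upload it to master1 manually, then sync the package to the other three nodes (master2, master3, worker1):

```shell
scp cri-containerd-cni-1.6.12-linux-amd64.tar.gz k8s-master2:/root/
scp cri-containerd-cni-1.6.12-linux-amd64.tar.gz k8s-master3:/root/
scp cri-containerd-cni-1.6.12-linux-amd64.tar.gz k8s-worker1:/root/
```

(3) Extract it on all four hosts:

```shell
tar -xf cri-containerd-cni-1.6.12-linux-amd64.tar.gz -C /
```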


2.5.9.1.3 Generate and modify the configuration file

Create the directory:

```shell
mkdir -p /etc/containerd
```

Generate the containerd configuration file:

```shell
containerd config default >/etc/containerd/config.toml
```

Check:

```
# ls /etc/containerd/
config.toml
```

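The replacement configuration file further below already contains this change, so this command can be skipped; run it only if you keep the generated default file. In the default file generated by containerd 1.6, which uses the runc v2 shim, the systemd cgroup driver is switched on through the SystemdCgroup option of the runc runtime (the legacy systemd_cgroup key only works with the v1 linux runtime):

```shell
sed -i 's@SystemdCgroup = false@SystemdCgroup = true@' /etc/containerd/config.toml
```

The replacement configuration file further below already contains this change as well, so this command can be skipped; run it only if you keep the generated default file.

```shell
sed -i 's@k8s.gcr.io/pause:3.6@registry.aliyuncs.com/google_containers/pause:3.6@' /etc/containerd/config.toml
```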

Note: the generated configuration file needs many later changes and has no registry mirrors, so it is replaced here with a configuration file that works both standalone and under k8s and has registry mirrors preconfigured. (Use it or not, according to your own needs.)

```shell
cat >/etc/containerd/config.toml<<EOF
root = "/var/lib/containerd"
state = "/run/containerd"
oom_score = -999

[grpc]
  address = "/run/containerd/containerd.sock"
  uid = 0
  gid = 0
  max_recv_message_size = 16777216
  max_send_message_size = 16777216

[debug]
  address = ""
  uid = 0
  gid = 0
  level = ""

[metrics]
  address = ""
  grpc_histogram = false

[cgroup]
  path = ""

[plugins]
  [plugins.cgroups]
    no_prometheus = false
  [plugins.cri]
    stream_server_address = "127.0.0.1"
    stream_server_port = "0"
    enable_selinux = false
    sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"
    stats_collect_period = 10
    systemd_cgroup = true
    enable_tls_streaming = false
    max_container_log_line_size = 16384
    [plugins.cri.containerd]
      snapshotter = "overlayfs"
      no_pivot = false
      [plugins.cri.containerd.default_runtime]
        runtime_type = "io.containerd.runtime.v1.linux"
        runtime_engine = ""
        runtime_root = ""
      [plugins.cri.containerd.untrusted_workload_runtime]
        runtime_type = ""
        runtime_engine = ""
        runtime_root = ""
    [plugins.cri.cni]
      bin_dir = "/opt/cni/bin"
      conf_dir = "/etc/cni/net.d"
      conf_template = "/etc/cni/net.d/10-default.conf"
    [plugins.cri.registry]
      [plugins.cri.registry.mirrors]
        [plugins.cri.registry.mirrors."docker.io"]
          endpoint = [
            "https://docker.mirrors.ustc.edu.cn",
            "http://hub-mirror.c.163.com"
          ]
        [plugins.cri.registry.mirrors."gcr.io"]
          endpoint = [
            "https://gcr.mirrors.ustc.edu.cn"
          ]
        [plugins.cri.registry.mirrors."k8s.gcr.io"]
          endpoint = [
            "https://gcr.mirrors.ustc.edu.cn/google-containers/"
          ]
        [plugins.cri.registry.mirrors."quay.io"]
          endpoint = [
            "https://quay.mirrors.ustc.edu.cn"
          ]
        [plugins.cri.registry.mirrors."harbor.kubemsb.com"]
          endpoint = [
            "http://harbor.kubemsb.com"
          ]
    [plugins.cri.x509_key_pair_streaming]
      tls_cert_file = ""
      tls_key_file = ""
  [plugins.diff-service]
    default = ["walking"]
  [plugins.linux]
    shim = "containerd-shim"
    runtime = "runc"
    runtime_root = ""
    no_shim = false
    shim_debug = false
  [plugins.opt]
    path = "/opt/containerd"
  [plugins.restart]
    interval = "10s"
  [plugins.scheduler]
    pause_threshold = 0.02
    deletion_threshold = 0
    mutation_threshold = 100
    schedule_delay = "0s"
    startup_delay = "100ms"
EOF
```

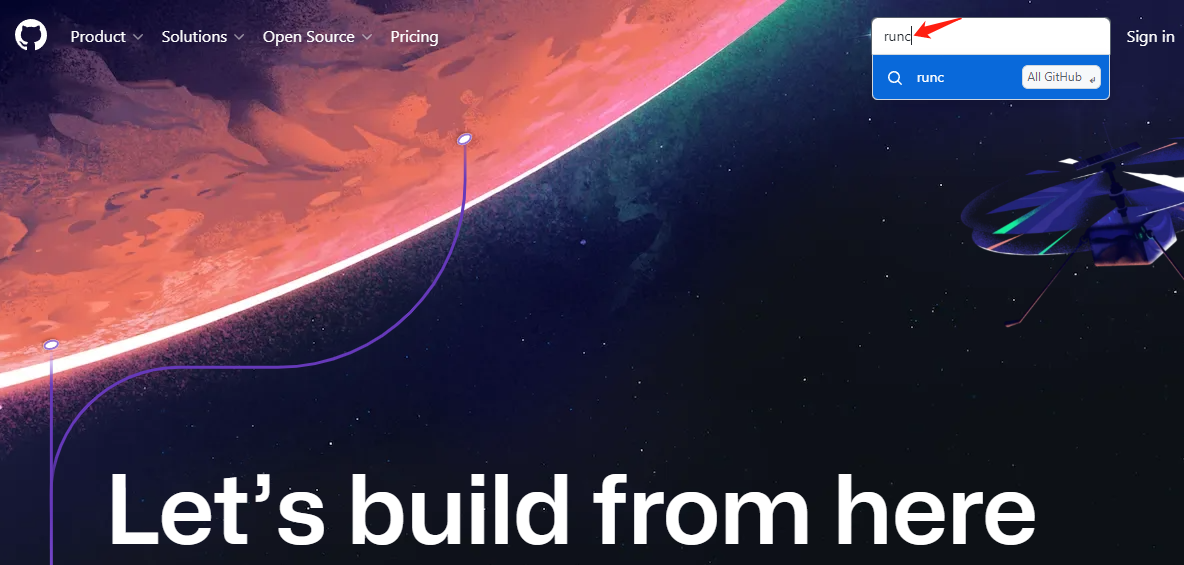

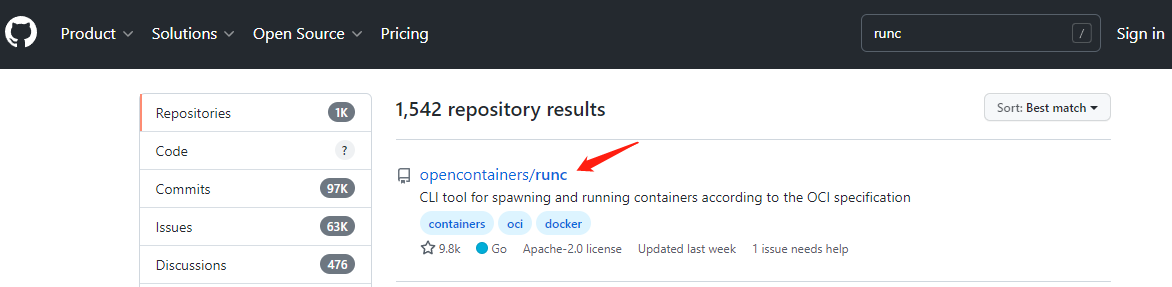

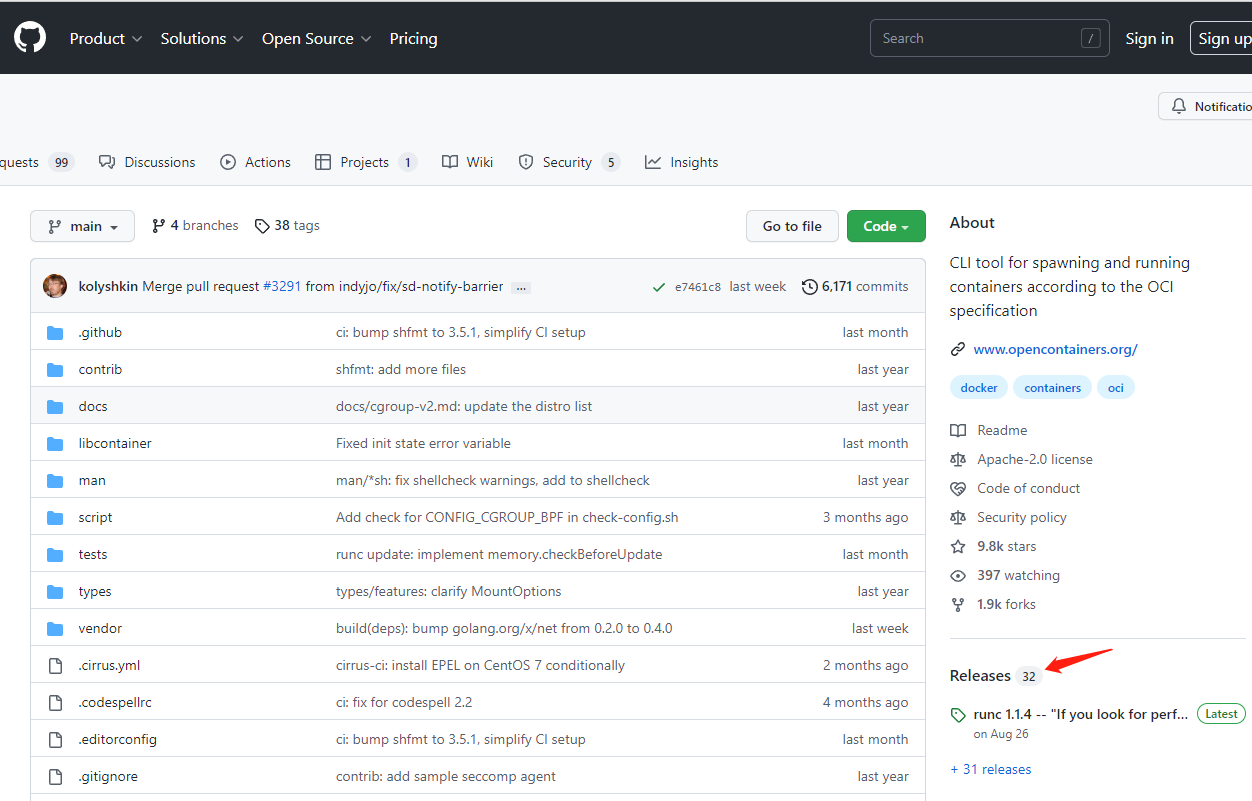

2.5.9.1.4 安装runc

注:

- 由于上述软件包中包含的runc对系统依赖过多,所以建议单独下载安装。

- 默认runc执行时提示:runc: symbol lookup error: runc: undefined symbol: seccomp_notify_respond

获取runc

下载地址:https://github.com/

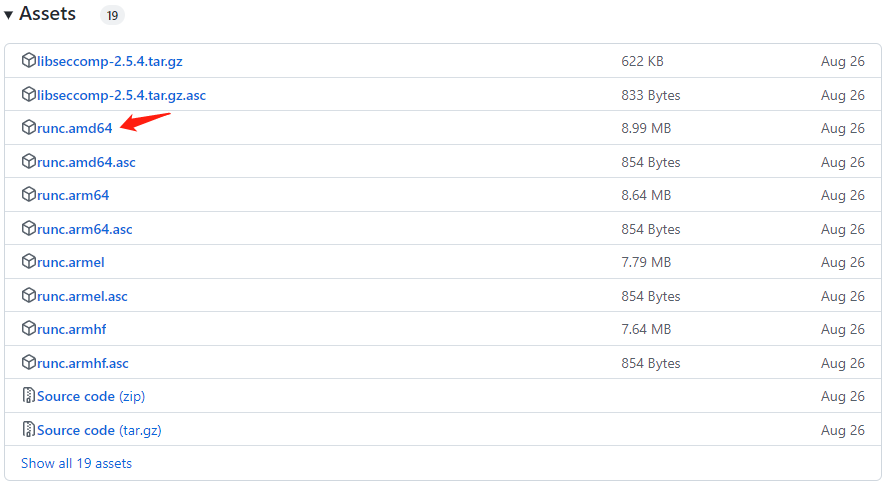

(1) 下载安装包:

1 | wget https://github.com/opencontainers/runc/releases/download/v1.1.4/runc.amd64 |

安装包可能无法下载,我已放到百度网盘:

链接:https://pan.baidu.com/s/1Ma28Aanwx5nuhGvqptUsWQ?pwd=ixok

提取码:ixok

(2) 赋予runc执行权限:

1 | chmod +x runc.amd64 |

(3) 替换掉原软件包中的runc:

1 | mv runc.amd64 /usr/local/sbin/runc |

(4) 同步runc到其他三个节点(master2、master3、worker1)

1 2 3 | scp /usr/local/sbin/runc k8s-master2:/usr/local/sbin/runcscp /usr/local/sbin/runc k8s-master3:/usr/local/sbin/runcscp /usr/local/sbin/runc k8s-worker1:/usr/local/sbin/runc |

(5) 验证:

1 2 3 4 5 6 | [root@k8s-master1 k8s-work]# runc -vrunc version 1.1.4commit: v1.1.4-0-g5fd4c4d1spec: 1.0.2-devgo: go1.17.10libseccomp: 2.5.4 |

(6) 启动Containerd

1 2 3 | systemctl enable containerdsystemctl start containerdsystemctl status containerd |

2.5.9.2 Deploy kubelet

Note: perform these steps on k8s-master1.

2.5.9.2.1 Create kubelet-bootstrap.kubeconfig

```shell
BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv)

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.1.100:6443 --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig
```
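The awk call above takes the first comma-separated field of /etc/kubernetes/token.csv. A static token file has the form token,user,uid,"group", so that first field is the token itself. The sketch below does the same extraction but stops at the first well-formed line, which helps if the file has a trailing blank line. The function name read_bootstrap_token is hypothetical, and the sample line in the comment is an assumed example rather than the contents of the file created earlier in this guide.

```shell
# Hypothetical helper: print the token (first field) from the first
# line of a static token file that has at least token,user,uid.
# Assumed example line:
#   abc123,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
read_bootstrap_token() {
  awk -F ',' 'NF >= 3 { print $1; exit }' "$1"
}
```

Usage: `BOOTSTRAP_TOKEN=$(read_bootstrap_token /etc/kubernetes/token.csv)`.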

Bind the bootstrap user to the cluster roles it needs:

```shell
kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubelet-bootstrap

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig
```

Verify (describe the bindings):

```shell
kubectl describe clusterrolebinding cluster-system-anonymous

kubectl describe clusterrolebinding kubelet-bootstrap
```

2.5.9.2.2 Create the kubelet configuration file

```shell
cat > kubelet.json << "EOF"
{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": {
      "clientCAFile": "/etc/kubernetes/ssl/ca.pem"
    },
    "webhook": {
      "enabled": true,
      "cacheTTL": "2m0s"
    },
    "anonymous": {
      "enabled": false
    }
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": {
      "cacheAuthorizedTTL": "5m0s",
      "cacheUnauthorizedTTL": "30s"
    }
  },
  "address": "192.168.1.92",
  "port": 10250,
  "readOnlyPort": 10255,
  "cgroupDriver": "systemd",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.96.0.2"]
}
EOF
```
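The clusterDNS address 10.96.0.2 has to lie inside the Service network (10.96.0.0/16, the value passed to --service-cluster-ip-range), because it is later used as the clusterIP of the DNS Service. If you change either value, a quick membership check can catch a mismatch early. This is a minimal sketch in plain shell arithmetic; the function names ip_to_int and in_cidr are hypothetical.

```shell
# Hypothetical helpers: test whether an IPv4 address lies inside a CIDR block.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# in_cidr IP NETWORK/PREFIX: returns 0 (success) if IP is inside the block.
in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

Usage: `in_cidr 10.96.0.2 10.96.0.0/16 && echo "clusterDNS is inside the Service network"`.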

2.5.9.2.3 Create the kubelet service unit file

Notes:

- After specifies which unit must be started before this one.

- Requires specifies which unit this one depends on.

- The container runtime used here is containerd; if docker is used instead, set both to docker.service.

- For the pause image, either registry.aliyuncs.com/google_containers/pause:3.2 or 3.6 works.

- --root-dir is the kubelet's own state directory (default /var/lib/kubelet); with the value used below, that state is stored under /etc/cni/net.d.

```shell
cat > kubelet.service << "EOF"
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=containerd.service
Requires=containerd.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/usr/local/bin/kubelet \
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \
  --cert-dir=/etc/kubernetes/ssl \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --config=/etc/kubernetes/kubelet.json \
  --container-runtime=remote \
  --container-runtime-endpoint=unix:///run/containerd/containerd.sock \
  --rotate-certificates \
  --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.2 \
  --root-dir=/etc/cni/net.d \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

Check the generated files:

```
cd /data/k8s-work/
ls
kubelet-bootstrap.kubeconfig kubelet.json kubelet.service
```

2.5.9.2.4 Sync files to the cluster nodes

Copy the files into place on master1:

```shell
cp kubelet-bootstrap.kubeconfig /etc/kubernetes/
cp kubelet.json /etc/kubernetes/
cp kubelet.service /usr/lib/systemd/system/
```

Create the directory on the worker node (it already exists on the masters):

```shell
mkdir -p /etc/kubernetes/ssl
```

Sync the files to the other three nodes:

```shell
for i in k8s-master2 k8s-master3 k8s-worker1;do scp kubelet-bootstrap.kubeconfig kubelet.json $i:/etc/kubernetes/;done

for i in k8s-master2 k8s-master3 k8s-worker1;do scp ca.pem $i:/etc/kubernetes/ssl/;done

for i in k8s-master2 k8s-master3 k8s-worker1;do scp kubelet.service $i:/usr/lib/systemd/system/;done
```

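Note: kubelet.json is copied unchanged, so on each node its address field must be set to that node's own IP address.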
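The kubelet.json created on master1 carries "address": "192.168.1.92", and the same file is copied to every node, so the address has to be adjusted per node. A minimal sketch of that edit with sed; the function name set_kubelet_address is hypothetical.

```shell
# Hypothetical helper: rewrite the "address" field of a kubelet.json file
# to the given node IP. A temporary file is used instead of `sed -i`
# so the sketch does not depend on GNU sed.
set_kubelet_address() {
  local file="$1" ip="$2"
  sed "s/\"address\": \"[0-9.]*\"/\"address\": \"${ip}\"/" "$file" > "${file}.tmp" \
    && mv "${file}.tmp" "$file"
}
```

For example, on k8s-worker1: `set_kubelet_address /etc/kubernetes/kubelet.json 192.168.1.96`.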

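2.5.9.2.5 Create directories and start the service

Note: create these directories on all nodes, otherwise kubelet will not start!

```shell
mkdir -p /var/lib/kubelet
mkdir -p /var/log/kubernetes
```

Start the service:

```shell
systemctl daemon-reload
systemctl enable --now kubelet

systemctl status kubelet
```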

Check the cluster node status:

```
[root@k8s-master1 k8s-work]# kubectl get nodes
NAME          STATUS   ROLES    AGE   VERSION
k8s-master1   Ready    <none>   13m   v1.25.5
k8s-master2   Ready    <none>   18s   v1.25.5
k8s-master3   Ready    <none>   12s   v1.25.5
k8s-worker1   Ready    <none>   10s   v1.25.5
```

Check the bootstrap certificate requests:

```
[root@k8s-master1 k8s-work]# kubectl get csr
NAME        AGE     SIGNERNAME                                    REQUESTOR           REQUESTEDDURATION   CONDITION
csr-24h9v   2m16s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   <none>              Approved,Issued
csr-rhpgr   15m     kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   <none>              Approved,Issued
csr-rq2xx   2m8s    kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   <none>              Approved,Issued
csr-xsrd7   2m10s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   <none>              Approved,Issued
```
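In the output above every request is already Approved,Issued. If a request were to stay in the Pending condition instead, it could be approved by hand with kubectl certificate approve. A minimal sketch for collecting the pending names; the function name pending_csrs is hypothetical.

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin and print
# the names of requests whose CONDITION (last column) is "Pending".
pending_csrs() {
  awk '$1 != "NAME" && $NF == "Pending" { print $1 }'
}
```

Usage (GNU xargs, where -r skips the command when nothing is pending): `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve`.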


2.5.9.3 Deploy kube-proxy

2.5.9.3.1 Create the kube-proxy certificate signing request file

```shell
cat > kube-proxy-csr.json << "EOF"
{
  "CN": "system:kube-proxy",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "kubemsb",
      "OU": "CN"
    }
  ]
}
EOF
```

2.5.9.3.2 Generate the certificate

```shell
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
```

Check the generated certificate files:

```
ls kube-proxy*
kube-proxy.csr kube-proxy-csr.json kube-proxy-key.pem kube-proxy.pem
```

2.5.9.3.3 Create the kubeconfig file

```shell
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.1.100:6443 --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```

2.5.9.3.4 Create the service configuration file

```shell
cat > kube-proxy.yaml << "EOF"
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 192.168.1.92
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: 192.168.1.92:10256
kind: KubeProxyConfiguration
metricsBindAddress: 192.168.1.92:10249
mode: "ipvs"
EOF
```

2.5.9.3.5 Create the service unit file

```shell
cat > kube-proxy.service << "EOF"
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.yaml \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

2.5.9.3.6 Sync files to the cluster worker node hosts

Copy the files into place on master1:

```shell
cp kube-proxy*.pem /etc/kubernetes/ssl/
cp kube-proxy.kubeconfig kube-proxy.yaml /etc/kubernetes/
cp kube-proxy.service /usr/lib/systemd/system/
```

Sync the files to the other three nodes (master2, master3, worker1):

```shell
for i in k8s-master2 k8s-master3 k8s-worker1;do scp kube-proxy.kubeconfig kube-proxy.yaml $i:/etc/kubernetes/;done
for i in k8s-master2 k8s-master3 k8s-worker1;do scp kube-proxy.service $i:/usr/lib/systemd/system/;done
```

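Note: on each node, change the 192.168.1.92 addresses in kube-proxy.yaml (bindAddress, healthzBindAddress, metricsBindAddress) to that node's own IP address.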
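kube-proxy.yaml is written with master1's address (192.168.1.92) in bindAddress, healthzBindAddress and metricsBindAddress, and the same file is copied to all nodes, so those three fields need the local node IP. A minimal sketch of that edit; the function name set_kube_proxy_address is hypothetical.

```shell
# Hypothetical helper: replace the IP part of bindAddress, healthzBindAddress
# and metricsBindAddress in a kube-proxy.yaml file, keeping any :port suffix.
# Other keys (for example clusterCIDR) are left untouched.
set_kube_proxy_address() {
  local file="$1" ip="$2"
  sed -E "s/^((bindAddress|healthzBindAddress|metricsBindAddress): )[0-9.]+/\1${ip}/" \
    "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
}
```

For example, on k8s-master2: `set_kube_proxy_address /etc/kubernetes/kube-proxy.yaml 192.168.1.93`.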

2.5.9.3.7 Start the service

Create the directory (on all four hosts: master1, master2, master3 and worker1):

```shell
mkdir -p /var/lib/kube-proxy
```

Start the service:

```shell
systemctl daemon-reload
systemctl enable --now kube-proxy
systemctl status kube-proxy
```

2.5.10 Deploy the network component Calico

2.5.10.1 Download

```shell
curl https://projectcalico.docs.tigera.io/archive/v3.23/manifests/calico.yaml -O
```

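2.5.10.2 Edit the manifest

Uncomment CALICO_IPV4POOL_CIDR (around lines 4470-4471 of calico.yaml) and set it to the Pod network:

```
            - name: CALICO_IPV4POOL_CIDR
              value: "10.244.0.0/16"
```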
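The same edit can be scripted rather than done in an editor. The sketch below assumes the downloaded manifest still contains the variable in its commented-out default form (`# - name: CALICO_IPV4POOL_CIDR` followed by `#   value: "192.168.0.0/16"`); if your copy of calico.yaml differs, adjust the patterns. The function name set_calico_pool_cidr is hypothetical.

```shell
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set
# its value. Assumes the manifest holds the commented-out default
# "192.168.0.0/16". Removing "# " from the name line and replacing "#   "
# with two spaces on the value line keeps the YAML indentation aligned.
set_calico_pool_cidr() {
  local file="$1" cidr="$2"
  sed -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
      -e "s|#   value: \"192.168.0.0/16\"|  value: \"${cidr}\"|" \
      "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
}
```

Usage: `set_calico_pool_cidr calico.yaml 10.244.0.0/16`, then check the result with `grep -A1 CALICO_IPV4POOL_CIDR calico.yaml`.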

2.5.10.3 Apply the manifest

```shell
kubectl apply -f calico.yaml
```

2.5.10.4 Verify the result

```
[root@k8s-master1 k8s-work]# kubectl get pods -A -o wide
NAMESPACE     NAME                                      READY   STATUS    RESTARTS      AGE    IP              NODE          NOMINATED NODE   READINESS GATES
kube-system   calico-kube-controllers-d8b9b6478-g26fc   1/1     Running   2 (31s ago)   3m2s   10.244.194.64   k8s-worker1   <none>           <none>
kube-system   calico-node-7hx5p                         1/1     Running   0             3m2s   192.168.1.93    k8s-master2   <none>           <none>
kube-system   calico-node-crd6m                         1/1     Running   0             3m2s   192.168.1.96    k8s-worker1   <none>           <none>
kube-system   calico-node-jnvs7                         1/1     Running   0             3m2s   192.168.1.94    k8s-master3   <none>           <none>
kube-system   calico-node-k8qth                         1/1     Running   0             3m2s   192.168.1.92    k8s-master1   <none>           <none>
```

Cluster status:

```
[root@k8s-master1 k8s-work]# kubectl get nodes
NAME          STATUS   ROLES    AGE   VERSION
k8s-master1   Ready    <none>   84m   v1.25.5
k8s-master2   Ready    <none>   71m   v1.25.5
k8s-master3   Ready    <none>   70m   v1.25.5
k8s-worker1   Ready    <none>   70m   v1.25.5
```

2.5.10 Deploy CoreDNS

```shell
cat > coredns.yaml << "EOF"
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
  - apiGroups:
    - ""
    resources:
    - endpoints
    - services
    - pods
    - namespaces
    verbs:
    - list
    - watch
  - apiGroups:
    - discovery.k8s.io
    resources:
    - endpointslices
    verbs:
    - list
    - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.8.4
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.96.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
EOF
```

Install:

```shell
kubectl apply -f coredns.yaml
```

Check the status:

```
[root@k8s-master1 k8s-work]# kubectl get pods -n kube-system -o wide
NAME                                      READY   STATUS    RESTARTS      AGE   IP               NODE          NOMINATED NODE   READINESS GATES
calico-kube-controllers-d8b9b6478-g26fc   1/1     Running   2 (10m ago)   13m   10.244.194.64    k8s-worker1   <none>           <none>
calico-node-7hx5p                         1/1     Running   0             13m   192.168.1.93     k8s-master2   <none>           <none>
calico-node-crd6m                         1/1     Running   0             13m   192.168.1.96     k8s-worker1   <none>           <none>
calico-node-jnvs7                         1/1     Running   0             13m   192.168.1.94     k8s-master3   <none>           <none>
calico-node-k8qth                         1/1     Running   0             13m   192.168.1.92     k8s-master1   <none>           <none>
coredns-564fd8c776-h7f4q                  1/1     Running   0             29s   10.244.159.129   k8s-master1   <none>           <none>
```

Verify name resolution through the cluster DNS address:

```shell
dig -t a www.baidu.com @10.96.0.2
```

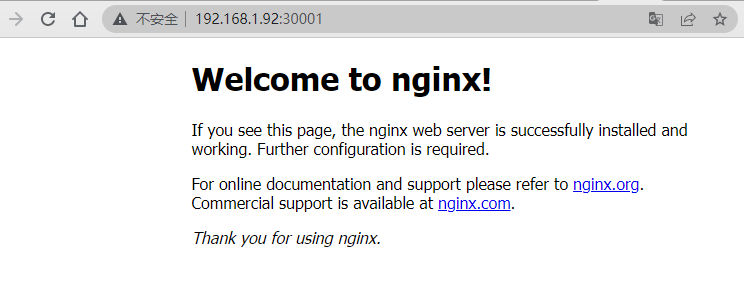

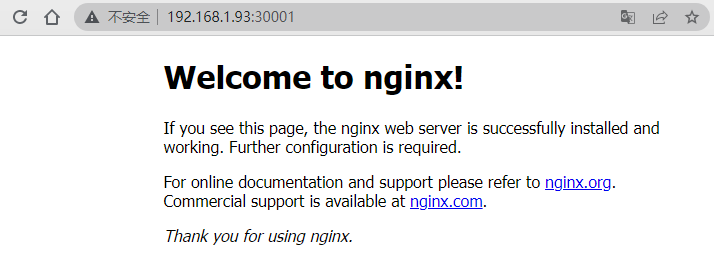

2.5.11 Deploy an application to verify the cluster

```shell
cat > nginx.yaml << "EOF"
---
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-web
spec:
  replicas: 2
  selector:
    name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.19.6
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-nodeport
spec:
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
      protocol: TCP
  type: NodePort
  selector:
    name: nginx
EOF
```

(1) Deploy:

```shell
kubectl apply -f nginx.yaml
```

(2) Check the Pod status:

```
[root@k8s-master1 k8s-work]# kubectl get pods -o wide
NAME              READY   STATUS    RESTARTS   AGE     IP               NODE          NOMINATED NODE   READINESS GATES
nginx-web-cxgtm   1/1     Running   0          2m16s   10.244.135.193   k8s-master3   <none>           <none>
nginx-web-nd8hg   1/1     Running   0          2m16s   10.244.224.1     k8s-master2   <none>           <none>
```

(3) List everything in the default namespace:

```
[root@k8s-master1 k8s-work]# kubectl get all
NAME                  READY   STATUS    RESTARTS   AGE
pod/nginx-web-cxgtm   1/1     Running   0          11m
pod/nginx-web-nd8hg   1/1     Running   0          11m

NAME                              DESIRED   CURRENT   READY   AGE
replicationcontroller/nginx-web   2         2         2       11m

NAME                             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
service/kubernetes               ClusterIP   10.96.0.1      <none>        443/TCP        30h
service/nginx-service-nodeport   NodePort    10.96.216.41   <none>        80:30001/TCP   11m
```

(4) Check the node port:

```shell
ss -anput | grep "30001"
```

Access test:
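A NodePort Service is reachable on the same port on every node, so an access test can target any of the node IPs from the host plan on port 30001. A minimal sketch that builds the URLs to try; the function name nodeport_urls is hypothetical.

```shell
# Hypothetical helper: print one http URL per node IP for a given NodePort.
nodeport_urls() {
  local port="$1"; shift
  local ip
  for ip in "$@"; do
    echo "http://${ip}:${port}/"
  done
}
```

Usage, assuming curl is available: `for u in $(nodeport_urls 30001 192.168.1.92 192.168.1.93 192.168.1.94 192.168.1.96); do curl -s -o /dev/null -w "%{http_code} $u\n" "$u"; done`. Each line prints the HTTP status code returned by nginx through that node.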

At this point, the cluster deployment is complete!
