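Container Network Interface (CNI) in Kubernetes

Kubernetes defines a set of CNI (Container Network Interface) APIs.

When the kubelet starts, --network-plugin=cni enables CNI, --cni-bin-dir specifies the host directory that holds the CNI plugin binaries (default /opt/cni/bin), and --cni-conf-dir specifies the host directory that holds the CNI configuration files (default /etc/cni/net.d). These kubelet flags apply to Kubernetes releases before v1.24; they were removed together with dockershim, and on newer releases the equivalent settings belong to the container runtime.

Before a Pod is created on a node, the CNI configuration file (xxnet.conf) is read and the CNI plugin binary installed on the host (xxnet) is executed.

The network plugin is invoked through these APIs to configure networking for the Pod.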
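As a rough illustration of what "invoking the plugin" means, the sketch below assembles the pieces of a CNI ADD call: the path of the plugin binary, the CNI_* environment variables defined by the CNI specification, and the JSON network configuration passed on stdin. It only builds the invocation and does not execute anything. The function name build_cni_invocation, the container ID and the netns path are hypothetical; xxnet is the placeholder plugin name used in the text.

```python
import json


def build_cni_invocation(plugin_type, container_id, netns_path,
                         ifname="eth0", bin_dir="/opt/cni/bin"):
    """Return (argv, env, stdin_payload) for a CNI ADD call without running it."""
    # Environment variables defined by the CNI specification.
    env = {
        "CNI_COMMAND": "ADD",            # ADD / DEL / CHECK / VERSION
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns_path,         # network namespace of the Pod sandbox
        "CNI_IFNAME": ifname,            # interface name to create inside the Pod
        "CNI_PATH": bin_dir,             # where plugin binaries are searched
    }
    # Minimal network configuration; real configs carry plugin-specific keys.
    conf = {"cniVersion": "0.3.1", "name": plugin_type, "type": plugin_type}
    argv = [f"{bin_dir}/{plugin_type}"]
    return argv, env, json.dumps(conf)


argv, env, payload = build_cni_invocation(
    "xxnet", "abc123", "/var/run/netns/example")  # hypothetical values
print(argv, env["CNI_COMMAND"], payload)
```

A runtime would then execute argv with env and write payload to the plugin's stdin; the plugin replies with a JSON result on stdout.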

k8s要求二进制插件负责实现以下内容,实现手段不限:

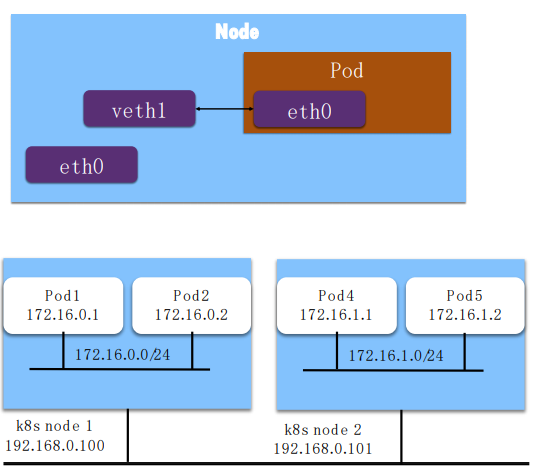

1.、创建“veth”虚拟网卡对,将其中一端放在Pod中

2.、给Pod分配集群中唯一的IP地址(一般会按Node分段,每个Pod再从Node段中分配IP),且Pod内所有容器共享此地址

3、给Pod的虚拟网卡配置分配到的IP、集群网段的路由

4、在宿主机上将到Pod的IP地址的路由配置到对端虚拟网卡上
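The per-Node address allocation in step 2 can be sketched with Python's ipaddress module. This is a simplified model, not any plugin's actual IPAM: the class name NodeIPAM, the cluster CIDR 10.244.0.0/16 and the /24 per-Node size are assumptions chosen for illustration, and real plugins usually reserve the first address of each range for the node's bridge or gateway.

```python
import ipaddress


class NodeIPAM:
    """Toy IPAM: carve the cluster CIDR into per-node subnets, then hand out Pod IPs."""

    def __init__(self, cluster_cidr, node_prefix_len):
        network = ipaddress.ip_network(cluster_cidr)
        self._free_subnets = network.subnets(new_prefix=node_prefix_len)
        self.node_subnets = {}   # node name -> subnet
        self._pod_iters = {}     # node name -> iterator over unused host addresses
        self.pod_ips = {}        # pod name -> allocated IP

    def add_node(self, node):
        subnet = next(self._free_subnets)       # next unused node-sized block
        self.node_subnets[node] = subnet
        self._pod_iters[node] = subnet.hosts()
        return subnet

    def allocate_pod_ip(self, node, pod):
        ip = next(self._pod_iters[node])        # next unused address on that node
        self.pod_ips[pod] = ip
        return ip


ipam = NodeIPAM("10.244.0.0/16", 24)
print(ipam.add_node("node-a"), ipam.add_node("node-b"))
print(ipam.allocate_pod_ip("node-a", "pod-1"), ipam.allocate_pod_ip("node-b", "pod-2"))
```

Because every Pod IP comes from its Node's block, a single route per Node is enough to reach all Pods on it.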

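Plugin providers usually also ship a daemon that is responsible for:

1. Copying the configuration and the plugin binary onto each Node.

2. Learning the IP and hosting node of every Pod in the cluster by querying the apiserver, and watching for newly created Pods and Nodes.

3. Creating a channel to every other Node (overlay tunnels, VPC route tables, BGP routes, and so on).

4. Associating each Pod IP with the channel to the Node that hosts it (Linux routes, FDB forwarding tables, OVS flow tables, and so on).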

总的来说实现模式分为3类:

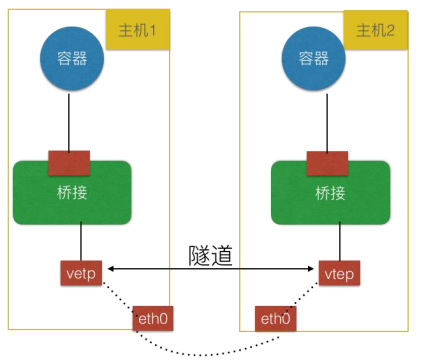

(1) Overlay:靠隧道打通,不依赖底层网络

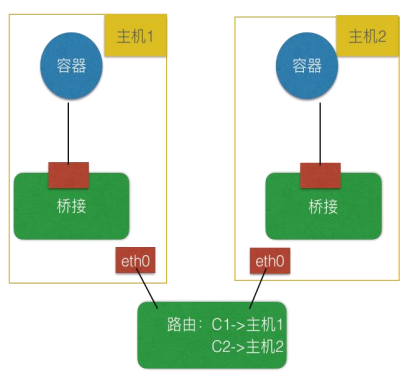

(2) 路由:靠路由打通,部分依赖底层网络

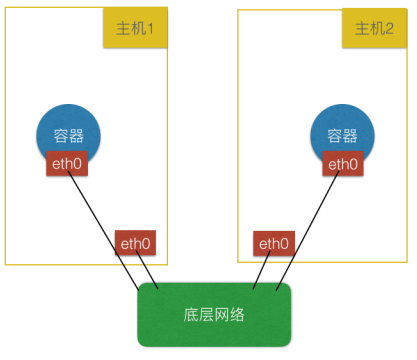

(3) Underlay:靠底层网络能力打通,强依赖底层

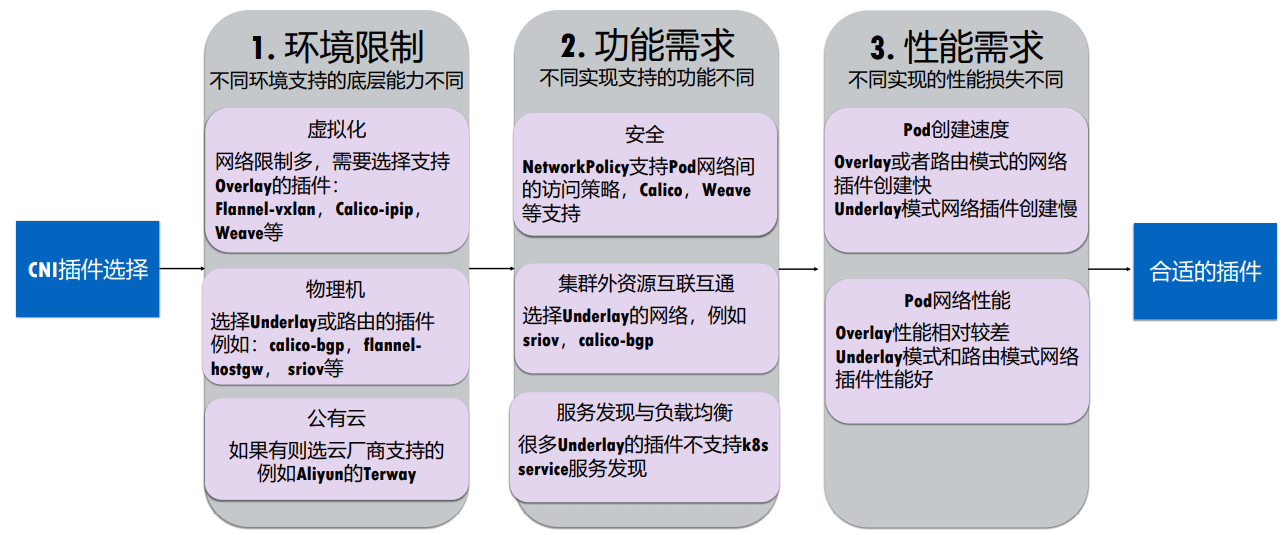

阿里云云原生公开课上建议的选择原则:

网络策略

默认情况下,集群中所有Pod之间、Pod与节点之间可以互通。

NetworkPolicy用来实现隔离性,只有匹配规则的流量才能进入、离开Pod

不同CNI插件对NetworkPolicy的实现程序不同。

配置示例:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    - ipBlock:
        cidr: 172.17.0.0/16
        except:
        - 172.17.1.0/24
    - namespaceSelector:
        matchLabels:
          project: myproject
    - podSelector:
        matchLabels:
          role: frontend
    ports:
    - protocol: TCP
      port: 6379
  egress:
  - to:
    - ipBlock:
        cidr: 10.0.0.0/24
    ports:
    - protocol: TCP
      port: 5978

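- podSelector: if empty, it selects all Pods in the namespace.

- policyTypes: Ingress, Egress, or both.

- ingress/egress: a list of rules describing allowed traffic. Traffic is allowed only if it satisfies both the from/to part and the ports part of at least one rule.

There are four kinds of from/to entries:

① ipBlock selects an IP range, and except excludes sub-ranges from it

② namespaceSelector selects Namespaces

③ podSelector selects Pods (within the policy's own namespace)

④ namespaceSelector and podSelector together in one entry select Pods with the given labels in the selected Namespaces

The ports part can specify a port range. On Kubernetes versions where this feature is not enabled by default, add --feature-gates=NetworkPolicyEndPort=true to the apiserver startup flags to turn on the NetworkPolicyEndPort feature gate:

ports:
- protocol: TCP
  port: 32000
  endPort: 32768

PS: if the ingress/egress section is absent, all traffic of the types listed in policyTypes is denied:

spec:
  podSelector: {}
  policyTypes:
  - Ingress

If the section contains an empty rule, all traffic of that type is allowed:

spec:
  podSelector: {}
  ingress:
  - {}
  policyTypes:
  - Ingress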
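The matching rules above (a rule allows traffic only when both its from part and its ports part match, a missing ingress section denies everything, and an empty rule allows everything) can be sketched as a small evaluator. This is a simplified model for illustration, not how any CNI plugin enforces policy: it assumes the policy already selects the destination Pod, supports only matchLabels, and the function names and the src dictionary layout are hypothetical.

```python
import ipaddress


def labels_match(selector, labels):
    """matchLabels only; an empty selector matches everything."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def peer_matches(peer, src, policy_namespace):
    if "ipBlock" in peer:
        ip = ipaddress.ip_address(src["ip"])
        block = peer["ipBlock"]
        if ip not in ipaddress.ip_network(block["cidr"]):
            return False
        return all(ip not in ipaddress.ip_network(e) for e in block.get("except", []))
    if "namespaceSelector" in peer:
        if not labels_match(peer["namespaceSelector"], src["namespace_labels"]):
            return False
    elif src["namespace"] != policy_namespace:
        return False  # a bare podSelector only covers the policy's own namespace
    return labels_match(peer.get("podSelector", {}), src["pod_labels"])


def ports_match(ports, protocol, port):
    if not ports:
        return True  # no ports part: every port matches
    for p in ports:
        low = p.get("port")
        high = p.get("endPort", low)
        if p.get("protocol", "TCP") == protocol and (low is None or low <= port <= high):
            return True
    return False


def ingress_allowed(policy, src, protocol, port):
    spec = policy["spec"]
    if "Ingress" not in spec.get("policyTypes", ["Ingress"]):
        return True  # this policy does not restrict ingress
    namespace = policy["metadata"].get("namespace", "default")
    for rule in spec.get("ingress", []):
        peers = rule.get("from")
        from_ok = not peers or any(peer_matches(p, src, namespace) for p in peers)
        if from_ok and ports_match(rule.get("ports"), protocol, port):
            return True
    return False


EXAMPLE_POLICY = {
    "metadata": {"name": "test-network-policy", "namespace": "default"},
    "spec": {
        "podSelector": {"matchLabels": {"role": "db"}},
        "policyTypes": ["Ingress", "Egress"],
        "ingress": [{
            "from": [
                {"ipBlock": {"cidr": "172.17.0.0/16", "except": ["172.17.1.0/24"]}},
                {"namespaceSelector": {"matchLabels": {"project": "myproject"}}},
                {"podSelector": {"matchLabels": {"role": "frontend"}}},
            ],
            "ports": [{"protocol": "TCP", "port": 6379}],
        }],
    },
}

client = {"ip": "172.17.0.5", "namespace": "other", "namespace_labels": {}, "pod_labels": {}}
print(ingress_allowed(EXAMPLE_POLICY, client, "TCP", 6379))
print(ingress_allowed(EXAMPLE_POLICY, client, "TCP", 80))
```

With the example policy, the client at 172.17.0.5 is admitted on TCP 6379 through the ipBlock entry, while the same client on port 80 is rejected because no rule's ports part matches.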

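Common CNI plugins

Flannel

Flannel needs etcd (or another distributed key-value store) to be installed and running, and the Pod subnet ranges of all hosts are planned and configured in etcd. (When deployed on Kubernetes, Flannel can alternatively use the Kubernetes API as its datastore.)

The user only specifies one large IP pool. Flannel automatically assigns each host its own subnet, so Pod subnet ranges never overlap between hosts (which is also why a Pod cannot migrate to another host and keep its address). Flannel also generates and configures the routes between subnets automatically.

etcd therefore holds the mapping between each host's Pod subnet and the host's IP (in effect, etcd maintains a routing table between nodes).

When a Pod needs to talk to a Pod on another host, Flannel looks up the store to find the host IP that corresponds to the destination Pod's subnet.

Because Pods on different hosts live in different subnets, cross-node Pod traffic is layer-3 communication, so the layer-2 ARP broadcast problem does not arise.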
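The lookup described above amounts to finding which host's Pod subnet contains the destination Pod IP. A minimal sketch, assuming the store's contents have been loaded into a dictionary; the subnets, host IPs and the name host_for_pod are hypothetical and do not reflect Flannel's actual data layout:

```python
import ipaddress

# Hypothetical snapshot of the stored mapping: Pod subnet -> host IP.
SUBNET_TO_HOST = {
    "10.244.1.0/24": "192.168.0.11",
    "10.244.2.0/24": "192.168.0.12",
}


def host_for_pod(pod_ip, table=SUBNET_TO_HOST):
    """Return the IP of the host whose Pod subnet contains pod_ip, or None."""
    ip = ipaddress.ip_address(pod_ip)
    for subnet, host_ip in table.items():
        if ip in ipaddress.ip_network(subnet):
            return host_ip
    return None


print(host_for_pod("10.244.2.37"))
```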

Flannel支持不同的backend:

- VXLAN

将原始数据包封装在VXLAN数据包中,IP层以Pod所在主机为目的IP进行封装

数据从源容器发出后,经由所在主机的docker0虚拟网卡转发到flannel0虚拟网卡(P2P虚拟网卡),Flannel服务监听flannel0网卡的另一端,它将原本的数据内容封装后根据自己的路由表投递给目的IP的Flannel服务。

由于目的IP是宿主机IP,因此路由必然是可达的

VXLAN数据包到达目的宿主机解封装,解出原始数据包,最终到达目的容器

- UDP

将原始数据包封装在UDP数据包中

仅用于debug

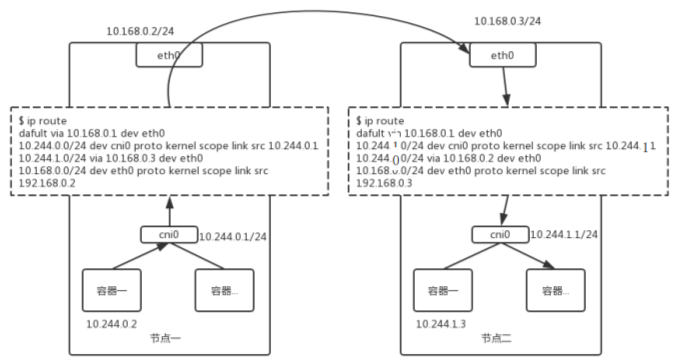

- host-gw

通过静态路由的方式实现容器跨主机通信,这时每个Node都相当于一个路由器,作为容器的网关,负责容器的路由转发。

由于没有VXLAN、UDP的封包拆包过程,直接路由过去,因此性能相对要好。

不过由于是通过路由的方式实现,每个Node相当于是容器的网关,因此所有Node必须在同一个LAN子网内,否则跨子网由于链路层不通导致无法实现路由。

具体实现步骤:

(1)容器应用netns内产生数据包,根据路由决定目的Mac:如属于同一subnet内直接发给本机另一容器;否则填写Gw-mac (cni0桥)。两种情况均通过Pod内的内veth pair发送到cni0桥;

(2)mac-桥转发:cni0桥默认按照mac转发数据,如目的mac为本桥上某一容器就直接转发;如目的为cni0的mac,则将数据包送到Host协议栈处理;

(3)ip-主机路由转发:数据包剥离mac层,进入ip寻址层,如目的IP为本机Gw(cni0)则上送到本地用户进程;如目的为其他网段,则查询本地路由表(见图ip route块),找到该网段对应的远端Gw-ip(其实是远端主机IP),通过neigh系统查出该远端Gw-ip的mac地址,作为数据的目的mac,通过主机eth0送出去;

(4)ip-远端路由转发: 由于mac地址的指引,数据包准确到达远端节点,通过eth0进入主机协议栈,再查询一次路由表,正常的话该目的网段的对应设备为自己的cni0的ip地址,数据发给了cni0;

(5)mac-远端桥转发:cni0作为host internal port on Bridge,收到数据包后,继续推送,IP层的处理末端填写目的ip(目标Pod的ip)的mac,此时通过bridge fdb表查出mac(否则发arp广播),经过bridge mac转发,包终于到了目的容器netns。
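The static routes that host-gw relies on in step (3) can be sketched as follows: for every remote node, one route maps that node's Pod subnet to the node's own IP as next hop, and the same-LAN requirement is checked before the route is emitted. This only generates ip route command strings for inspection and does not reflect Flannel's implementation; the node names, addresses and the function name host_gw_routes are hypothetical, while eth0 is the interface name used in the text.

```python
import ipaddress


def host_gw_routes(local_node, nodes, lan_cidr, dev="eth0"):
    """nodes maps node name -> (node IP, Pod subnet). Returns `ip route` commands."""
    lan = ipaddress.ip_network(lan_cidr)
    routes = []
    for name, (node_ip, pod_subnet) in sorted(nodes.items()):
        if name == local_node:
            continue  # local Pods are reached through the local bridge
        if ipaddress.ip_address(node_ip) not in lan:
            # The next hop must be directly reachable at layer 2.
            raise ValueError(f"{name} ({node_ip}) is outside {lan_cidr}")
        routes.append(f"ip route add {pod_subnet} via {node_ip} dev {dev}")
    return routes


NODES = {
    "node-a": ("192.168.0.11", "10.244.1.0/24"),
    "node-b": ("192.168.0.12", "10.244.2.0/24"),
    "node-c": ("192.168.0.13", "10.244.3.0/24"),
}
for line in host_gw_routes("node-a", NODES, "192.168.0.0/24"):
    print(line)
```

On node-a this yields one route each for node-b's and node-c's Pod subnets; a node outside 192.168.0.0/24 raises an error, mirroring the constraint that all Nodes share one LAN subnet.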

kube-ovn

基于OVS/OVN,将OpenStack领域成熟的网络功能平移到了Kubernetes。

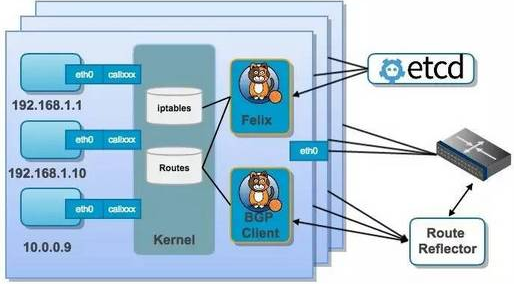

Calico

calico包括如下重要组件:

- Felix:Calico agent,主要负责路由配置以及ACLS规则的配置以及下发,确保endpoint的连通状态。

- BGP Client:主要负责把Felix写入kernel的路由信息分发到当前Calico网络,确保Pod跨主机通信的有效性;

- BGP Route Reflector:大规模部署时使用,摒弃所有节点互联的mesh模式,通过一个或者多个BGPRoute Reflector来完成集中式的路由分发。
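A quick count shows why large deployments move away from the full mesh. The sketch assumes the reflectors are taken from the same set of n nodes, that every remaining node peers with every reflector, and that the reflectors peer with each other; the function names are made up for this example.

```python
def full_mesh_sessions(n):
    """Every node peers with every other node."""
    return n * (n - 1) // 2


def route_reflector_sessions(n, reflectors):
    """Clients peer with each reflector; reflectors are meshed among themselves."""
    clients = n - reflectors
    return clients * reflectors + reflectors * (reflectors - 1) // 2


for nodes in (10, 100, 1000):
    print(nodes, full_mesh_sessions(nodes), route_reflector_sessions(nodes, 2))
```

The full mesh grows quadratically with the number of nodes, while the route-reflector layout grows linearly.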

Calico实现了大部分NetworkPolicy,通过其强大的Policy能够实现几乎任意场景的访问控制,尤其是企业版提供的Policy。

通过IP Pool可以为每个主机定制自己的subnet。IP Pool可以使用两种模式:

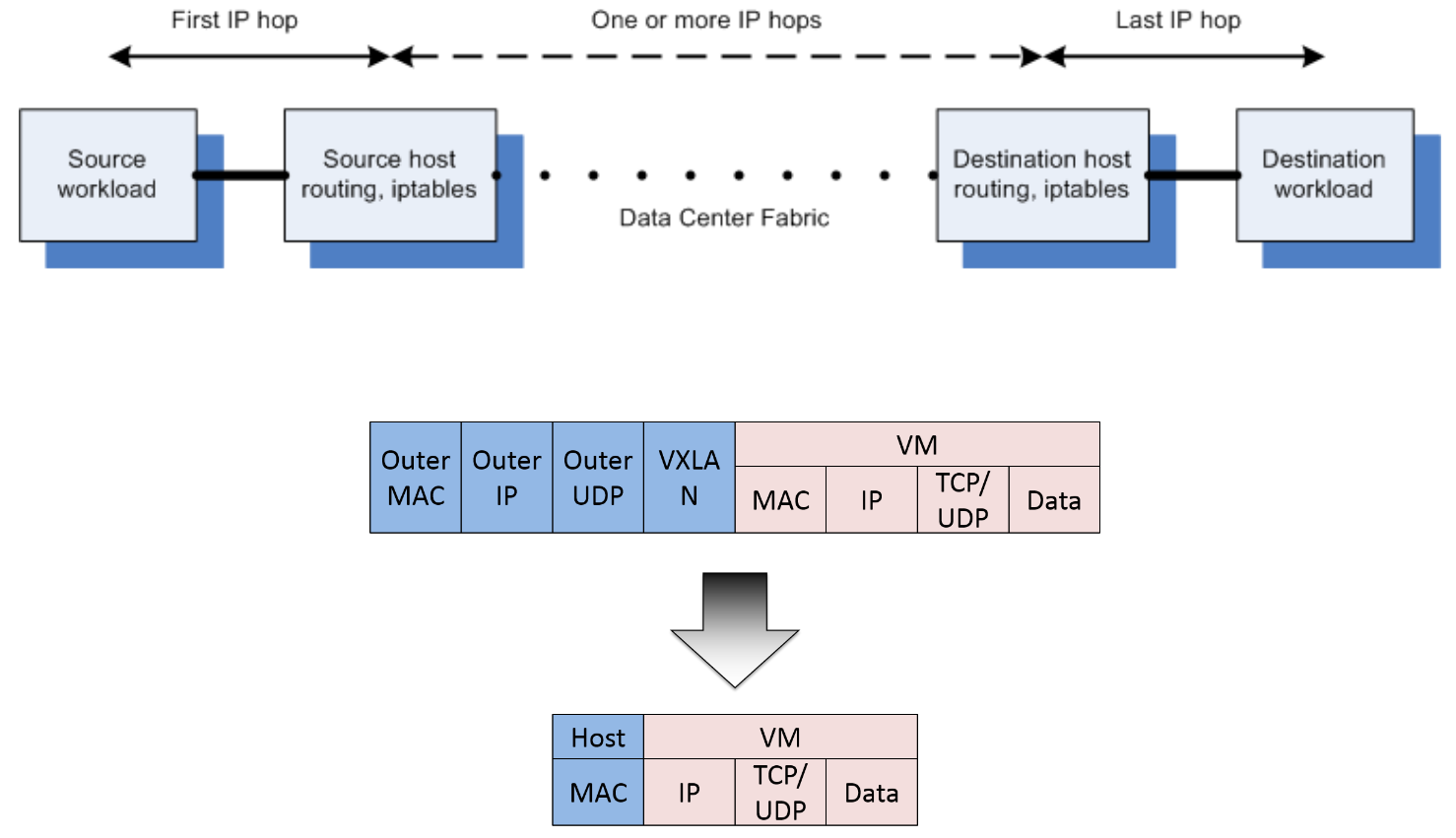

- BGP

每一台虚拟路由器通过BGP协议传播可达信息(路由)到其他虚拟或物理路由器。

如果节点不跨网段,数据直接从源容器经过源宿主机,经过数据中心的路由,然后到达目的宿主机最后分配到目的容器:

整个过程中始终都是根据iptables规则进行路由转发,并没有进行封包、解包的过程,节约了CPU的计算资源。

- overlay

跨VPC/子网使用calico时,需要进行报文封装(overlay)

目前支持两种模式:

(1)IPIP模式

Calico将在各Node上创建一个名为"tunl0"的虚拟网络接口。将各Node的路由之间做一个tunnel,即创建虚拟隧道。

(2)VXLAN

此时IPPool的配置为:

apiVersion: projectcalico.org/v3

kind: IPPool

metadata:

name: ippool-ipip-cross-subnet-1

spec:

cidr: 192.168.0.0/16

ipipMode/vxlanMode: xxxxx

natOutgoing: true

When .spec.ipipMode or .spec.vxlanMode is Always, the tunnel is always used; when it is CrossSubnet, the tunnel is used only for traffic that crosses a subnet boundary.
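The difference between the modes can be expressed as a small decision function. This is a sketch of the idea only, not Calico's code: it assumes both nodes use the same prefix length for their host subnet, and the function name and addresses are hypothetical.

```python
import ipaddress


def needs_tunnel(mode, src_node_ip, dst_node_ip, node_prefix_len=24):
    """Decide whether node-to-node Pod traffic is encapsulated under the given mode."""
    if mode == "Always":
        return True
    if mode == "Never":
        return False
    if mode == "CrossSubnet":
        src_net = ipaddress.ip_interface(f"{src_node_ip}/{node_prefix_len}").network
        # Encapsulate only when the destination node is outside the source node's subnet.
        return ipaddress.ip_address(dst_node_ip) not in src_net
    raise ValueError(f"unknown mode: {mode}")


print(needs_tunnel("CrossSubnet", "192.168.0.11", "192.168.0.12"))
print(needs_tunnel("CrossSubnet", "192.168.0.11", "192.168.5.20"))
```

Nodes in the same subnet exchange Pod traffic unencapsulated under CrossSubnet, which keeps the routing-mode performance where the network allows it.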

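Calico also uses iptables together with ipset to address multi-network support.

In addition, if a Pod must keep its IP when it moves from one node to another, that IP may fall outside the subnet allocated to the new Node. Calico handles this by adding a /32 host route. Flannel does not support this case.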
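The /32 route works because route lookup prefers the longest matching prefix, so a host route for one Pod overrides the block route of the node it originally belonged to. A minimal sketch of that lookup, with hypothetical prefixes and node names:

```python
import ipaddress


def lookup(routes, dst):
    """Longest-prefix match over (prefix, next_hop) pairs; returns the next hop or None."""
    ip = ipaddress.ip_address(dst)
    best = None
    for prefix, next_hop in routes:
        net = ipaddress.ip_network(prefix)
        if ip in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, next_hop)
    return best[1] if best else None


ROUTES = [
    ("192.168.1.0/26", "node-a"),   # block originally allocated to node-a
    ("192.168.1.7/32", "node-b"),   # Pod that moved to node-b and kept its IP
]
print(lookup(ROUTES, "192.168.1.7"), lookup(ROUTES, "192.168.1.8"))
```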

Deploying Calico:

Calico has two datastore modes: its data can be stored in Kubernetes as custom resource objects, or directly in etcd.

Taking direct etcd storage as an example:

(1) Prepare two directories to serve as cni-conf-dir and cni-bin-dir.

(2) Prepare the certificate signing request file calico-csr.json:

{
  "CN": "calico",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "NanJing",
      "L": "XS",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

Create the certificate and private key:

cfssl gencert \
  -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/etc/kubernetes/ssl/ca-config.json \
  -profile=kubernetes calico-csr.json | cfssljson -bare calico

Create the secret:

kubectl create secret generic -n kube-system calico-etcd-secrets \
  --from-file=etcd-ca=/etc/kubernetes/ssl/ca.pem \
  --from-file=etcd-key=calico-key.pem \
  --from-file=etcd-cert=calico.pem

(3) Create the RBAC objects

For calico-node:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-node
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-node
rules:
  - apiGroups: [""]
    resources:
      - pods
      - nodes
      - namespaces
    verbs:
      - get
  - apiGroups: [""]
    resources:
      - endpoints
      - services
    verbs:
      - watch
      - list
  - apiGroups: [""]
    resources:
      - configmaps
    verbs:
      - get
  - apiGroups: [""]
    resources:
      - nodes/status
    verbs:
      - patch
---
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 is used here
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: calico-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: calico-node
subjects:
- kind: ServiceAccount
  name: calico-node
  namespace: kube-system

For calico-kube-controllers:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-kube-controllers
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-kube-controllers
rules:
  - apiGroups: [""]
    resources:
      - pods
      - nodes
      - namespaces
      - serviceaccounts
    verbs:
      - watch
      - list
      - get
  - apiGroups: ["networking.k8s.io"]
    resources:
      - networkpolicies
    verbs:
      - watch
      - list
---
kind: ClusterRoleBinding
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 is used here
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-kube-controllers
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: calico-kube-controllers
subjects:
- kind: ServiceAccount
  name: calico-kube-controllers
  namespace: kube-system

(4) Create the ConfigMap:

kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  # etcd endpoints; this can be the etcd used by the Kubernetes master or a separate one
  etcd_endpoints: "https://xxx:2379,……"
  # if etcd is secured with TLS, the ca, cert and key files must be specified as well
  etcd_ca: "/calico-secrets/etcd-ca"
  etcd_cert: "/calico-secrets/etcd-cert"
  etcd_key: "/calico-secrets/etcd-key"
  typha_service_name: "none"
  calico_backend: "bird"
  veth_mtu: "1440"
  # Calico configuration file 10-calico.conflist, installed into cni-conf-dir on every node
  cni_network_config: |-
    {
      "name": "k8s-pod-network",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "calico",
          "log_level": "warning",
          "log_file_path": "/var/log/calico/cni/cni.log",
          "etcd_endpoints": "https://132.252.142.161:2379,https://132.252.142.162:2379,https://132.252.142.163:2379",
          "etcd_key_file": "/etc/calico/ssl/calico-key.pem",
          "etcd_cert_file": "/etc/calico/ssl/calico.pem",
          "etcd_ca_cert_file": "/etc/kubernetes/ssl/ca.pem",
          "mtu": 1500,
          "ipam": {
            "type": "calico-ipam"
          },
          "policy": {
            "type": "k8s"
          },
          "kubernetes": {
            "kubeconfig": "/root/.kube/config"
          }
        },
        {
          "type": "portmap",
          "snat": true,
          "capabilities": {"portMappings": true}
        },
        {
          "type": "bandwidth",
          "capabilities": {"bandwidth": true}
        }
      ]
    }

(5) Deploy calico-kube-controllers with a Deployment:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: calico-kube-controllers
  namespace: kube-system
  labels:
    k8s-app: calico-kube-controllers
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: calico-kube-controllers
  strategy:
    type: Recreate
  template:
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
      labels:
        k8s-app: calico-kube-controllers
    spec:
      nodeSelector:
        kubernetes.io/os: linux
      tolerations:
        - key: CriticalAddonsOnly
          operator: Exists
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      serviceAccountName: calico-kube-controllers
      priorityClassName: system-cluster-critical
      hostNetwork: true
      containers:
        - name: calico-kube-controllers
          image: reg.harbor.com/calico/kube-controllers:v3.19.1
          env:
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            - name: ETCD_CA_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_ca
            - name: ETCD_KEY_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_key
            - name: ETCD_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_cert
            - name: ENABLED_CONTROLLERS
              value: policy,namespace,serviceaccount,workloadendpoint,node
          volumeMounts:
            - mountPath: /calico-secrets
              name: etcd-certs
          readinessProbe:
            exec:
              command:
                - /usr/bin/check-status
                - -r
      volumes:
        - name: etcd-certs
          secret:
            secretName: calico-etcd-secrets
            defaultMode: 0444

Deploy calico-node with a DaemonSet:

kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: calico-node
    spec:
      nodeSelector:
        kubernetes.io/os: linux
      hostNetwork: true
      tolerations:
        - effect: NoSchedule
          operator: Exists
        - key: CriticalAddonsOnly
          operator: Exists
        - effect: NoExecute
          operator: Exists
      serviceAccountName: calico-node
      terminationGracePeriodSeconds: 0
      priorityClassName: system-node-critical
      initContainers:
        - name: install-cni
          image: reg.harbor.com/calico/cni:v3.19.1
          command: ["/opt/cni/bin/install"]
          envFrom:
            - configMapRef:
                name: kubernetes-services-endpoint
                optional: true
          env:
            - name: CNI_CONF_NAME
              value: "10-calico.conflist"
            - name: CNI_NETWORK_CONFIG
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: cni_network_config
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            - name: CNI_MTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            - name: SLEEP
              value: "false"
          volumeMounts:
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
            - mountPath: /calico-secrets
              name: etcd-certs
          securityContext:
            privileged: true
        - name: flexvol-driver
          image: reg.harbor.com/calico/pod2daemon-flexvol:v3.19.1
          volumeMounts:
            - name: flexvol-driver-host
              mountPath: /host/driver
          securityContext:
            privileged: true
      containers:
        - name: calico-node
          image: reg.harbor.com/calico/node:v3.19.1
          envFrom:
            - configMapRef:
                name: kubernetes-services-endpoint
                optional: true
          env:
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            - name: ETCD_CA_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_ca
            - name: ETCD_KEY_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_key
            - name: ETCD_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_cert
            - name: CALICO_K8S_NODE_REF
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            - name: IP
              value: "autodetect"
            - name: CALICO_IPV4POOL_IPIP
              value: "Always"
            - name: CALICO_IPV4POOL_VXLAN
              value: "Never"
            - name: FELIX_IPINIPMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            - name: FELIX_VXLANMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            - name: FELIX_WIREGUARDMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            - name: CALICO_IPV4POOL_CIDR
              value: "182.80.0.0/16"
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
              value: "ACCEPT"
            - name: FELIX_IPV6SUPPORT
              value: "false"
            - name: FELIX_LOGSEVERITYSCREEN
              value: "warning"
            - name: FELIX_HEALTHENABLED
              value: "true"
            - name: IP_AUTODETECTION_METHOD
              value: can-reach=132.252.142.161
          securityContext:
            privileged: true
          resources:
            requests:
              cpu: 250m
          livenessProbe:
            exec:
              command:
                - /bin/calico-node
                - -felix-live
                - -bird-live
            periodSeconds: 10
            initialDelaySeconds: 10
            failureThreshold: 6
          readinessProbe:
            exec:
              command:
                - /bin/calico-node
                - -felix-ready
                - -bird-ready
            periodSeconds: 10
          volumeMounts:
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - mountPath: /run/xtables.lock
              name: xtables-lock
              readOnly: false
            - mountPath: /var/run/calico
              name: var-run-calico
              readOnly: false
            - mountPath: /var/lib/calico
              name: var-lib-calico
              readOnly: false
            - mountPath: /calico-secrets
              name: etcd-certs
            - name: policysync
              mountPath: /var/run/nodeagent
            - name: sysfs
              mountPath: /sys/fs/
              mountPropagation: Bidirectional
            - name: cni-log-dir
              mountPath: /var/log/calico/cni
              readOnly: true
      volumes:
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: var-run-calico
          hostPath:
            path: /var/run/calico
        - name: var-lib-calico
          hostPath:
            path: /var/lib/calico
        - name: xtables-lock
          hostPath:
            path: /run/xtables.lock
            type: FileOrCreate
        - name: sysfs
          hostPath:
            path: /sys/fs/
            type: DirectoryOrCreate
        # host directory prepared in step (1) as cni-bin-dir
        - name: cni-bin-dir
          hostPath:
            path: /data/aikube/opt/kube/bin
        # host directory prepared in step (1) as cni-conf-dir
        - name: cni-net-dir
          hostPath:
            path: /etc/cni/net.d
        - name: cni-log-dir
          hostPath:
            path: /var/log/calico/cni
        - name: etcd-certs
          secret:
            secretName: calico-etcd-secrets
            defaultMode: 0400
        - name: policysync
          hostPath:
            type: DirectoryOrCreate
            path: /var/run/nodeagent
        - name: flexvol-driver-host
          hostPath:
            type: DirectoryOrCreate
            path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec/nodeagent~uds

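The calico-node Pod contains two main containers (the manifest above additionally runs a flexvol-driver init container):

- The init container install-cni

It installs the CNI binaries into the cni-bin-dir directory on each Node and installs the corresponding network configuration file into the cni-conf-dir directory.

- calico-node

The Calico service process. It sets up the network resources for Pods and keeps Pod networks connected with every Node.

The main parameters injected through its environment variables:

CALICO_IPV4POOL_CIDR: the IP address pool for Calico IPAM; Pod IPs are allocated from this pool.

CALICO_IPV4POOL_IPIP and CALICO_IPV4POOL_VXLAN: whether IPIP mode and VXLAN mode are enabled. The values are Always (use the tunnel even between nodes in the same network segment), CrossSubnet (use the tunnel only when the nodes are in different segments) and Never (do not use the tunnel; some older material writes this as off).

IP_AUTODETECTION_METHOD: how the node's network interface is selected automatically. If it is not set, the first interface is chosen. With can-reach=IP (for example can-reach=132.252.142.161 in the manifest above), the interface that can reach that IP is chosen. An interface name can also be given directly, optionally as regular expressions, for example interface=ens.*,bond.*,team.*

Environment variables related to Felix:

FELIX_DEFAULTENDPOINTTOHOSTACTION: the default action Felix applies to traffic from an endpoint to its host; set to ACCEPT here

FELIX_IPV6SUPPORT: whether IPv6 is supported

FELIX_LOGSEVERITYSCREEN: the log level; warning is recommended

FELIX_HEALTHENABLED: whether health checks are enabled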
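To make the interface=... form of IP_AUTODETECTION_METHOD concrete, the sketch below picks the first interface whose name matches one of the comma-separated regular expressions. It illustrates the idea only: the exact matching and ordering rules Calico applies are not stated in this article, so whole-name matching and first-match-wins are assumptions, and the function name and interface list are hypothetical.

```python
import re


def pick_interface(method, interfaces):
    """Return the first interface name matching an `interface=<regex,...>` method."""
    kind, _, value = method.partition("=")
    if kind != "interface":
        raise ValueError(f"unsupported method: {method}")
    patterns = [re.compile(p) for p in value.split(",")]
    for name in interfaces:
        # Assumption: a pattern must match the whole interface name.
        if any(p.fullmatch(name) for p in patterns):
            return name
    return None


print(pick_interface("interface=ens.*,bond.*,team.*",
                     ["lo", "docker0", "ens192", "bond0"]))
```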

(6) Set the kubelet startup flags, and set --allow-privileged=true in the kube-apiserver startup flags (calico-node must run in privileged mode on every node).

Installing the calicoctl tool

Prepare the file /etc/calico/calicoctl.cfg:

apiVersion: projectcalico.org/v3
kind: CalicoAPIConfig
metadata:
spec:
  datastoreType: "etcdv3"
  etcdEndpoints: "https://xxx:2379,……"
  etcdKeyFile: /etc/calico/ssl/calico-key.pem
  etcdCertFile: /etc/calico/ssl/calico.pem
  etcdCACertFile: /etc/kubernetes/ssl/ca.pem

List all hosts:

$ calicoctl get nodes
NAME
jfk8snode48
k8smanager4

Check the host status:

$ calicoctl node status
Calico process is running.

IPv4 BGP status
+----------------+-------------------+-------+----------+-------------+
| PEER ADDRESS   | PEER TYPE         | STATE | SINCE    | INFO        |
+----------------+-------------------+-------+----------+-------------+
| 132.252.41.100 | node-to-node mesh | up    | 02:24:27 | Established |
+----------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.
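For scripted health checks, the peer table printed by calicoctl node status can be parsed with a few lines of Python. The sketch works on captured text shaped like the sample above and does not call calicoctl itself; the function names are hypothetical, and the column layout is assumed to match that sample.

```python
def parse_bgp_peers(output):
    """Extract peer rows from `calicoctl node status` text into dictionaries."""
    fields = ("address", "type", "state", "since", "info")
    peers = []
    for line in output.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip borders, headings and blank lines
        cells = [c.strip() for c in line.strip("|").split("|")]
        if len(cells) != len(fields) or cells[0] == "PEER ADDRESS":
            continue  # skip the header row
        peers.append(dict(zip(fields, cells)))
    return peers


def all_established(peers):
    """True when at least one peer exists and every peer is up and Established."""
    return bool(peers) and all(
        p["state"] == "up" and p["info"] == "Established" for p in peers)


SAMPLE = """Calico process is running.
IPv4 BGP status
+----------------+-------------------+-------+----------+-------------+
| PEER ADDRESS   | PEER TYPE         | STATE | SINCE    | INFO        |
+----------------+-------------------+-------+----------+-------------+
| 132.252.41.100 | node-to-node mesh | up    | 02:24:27 | Established |
+----------------+-------------------+-------+----------+-------------+
IPv6 BGP status
No IPv6 peers found.
"""
print(parse_bgp_peers(SAMPLE), all_established(parse_bgp_peers(SAMPLE)))
```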

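Drawback: with both Flannel and Calico, traffic to destinations outside the Kubernetes cluster has to pass through the bridge network, which means the source address is rewritten to the host's IP address.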

