LESSON 5 - Markov Sources

1. Markov sources

The state of the Markov chain is used to represent the “memory” of the source. The state could be the previous symbol from the source or could be the previous 300 symbols. It is possible to model as much memory as desired while staying in the regime of finite-state Markov chains.

(If the state depends on the previous M symbols, the chain is called an M-th order Markov chain.)

- For example:

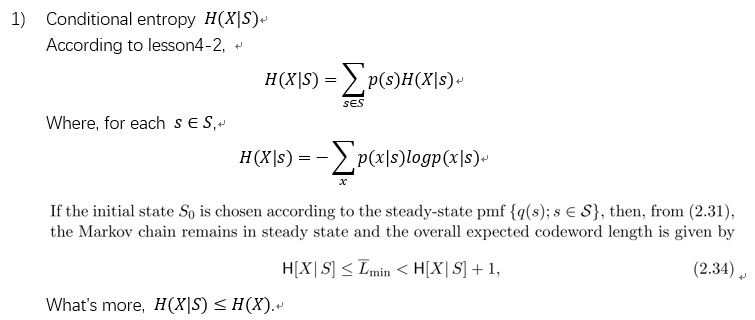

Consider a binary source with outputs X1, X2, .... Assume that the symbol probabilities for Xk are conditioned on Xk−2 and Xk−1 but, given these two symbols, are independent of all earlier symbols.

The alphabet of possible states is then the set of binary pairs, S = {[00], [01], [10], [11]}, where the state at time k is Sk = [Xk−1Xk].

From the state Sk−1 = [01] (representing Xk−2=0,Xk−1=1), the output Xk=1 causes a transition to Sk = [11] (representing Xk−1=1,Xk=1). The chain is assumed to start at time 0 in a state S0 given by some arbitrary pmf (probability mass function).

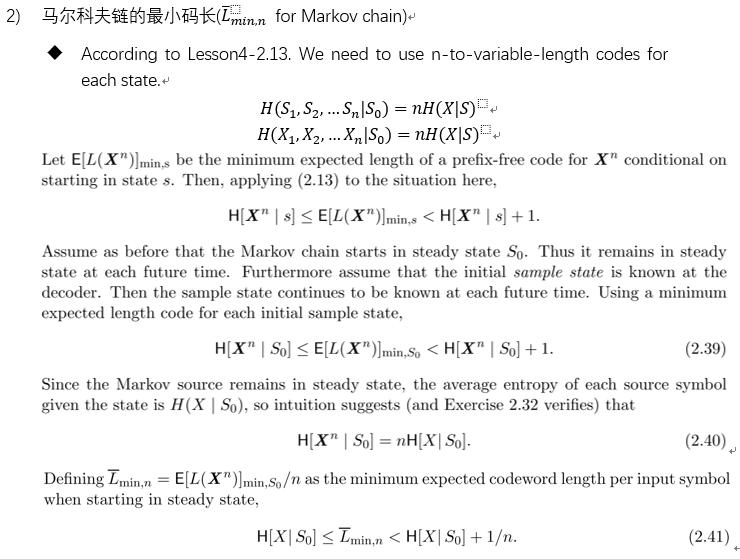
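
The state update described above (shift the new symbol in, drop the oldest one) can be sketched in a few lines of Python. This is only an illustration: the names `P_ONE`, `next_state`, and `generate` are hypothetical, and the conditional probabilities are made-up values, not the ones from the lesson's figure.

```python
import random

# ASSUMPTION: illustrative values of P(X_k = 1 | S_{k-1}); not taken from the lesson.
# A state is the pair (X_{k-2}, X_{k-1}).
P_ONE = {
    (0, 0): 0.1,
    (0, 1): 0.5,
    (1, 0): 0.5,
    (1, 1): 0.9,
}


def next_state(state, symbol):
    """Shift the new symbol in: (x_{k-2}, x_{k-1}) -> (x_{k-1}, x_k)."""
    return (state[1], symbol)


def generate(n, start=(0, 0), seed=0):
    """Emit n symbols from the source, starting in state `start`."""
    rng = random.Random(seed)
    state = start
    symbols = []
    for _ in range(n):
        # Draw X_k using the probability attached to the current state.
        symbol = 1 if rng.random() < P_ONE[state] else 0
        symbols.append(symbol)
        state = next_state(state, symbol)
    return symbols
```

For instance, `next_state((0, 1), 1)` returns `(1, 1)`, matching the transition from [01] to [11] on output 1. The start state is fixed here for simplicity; the text allows it to be drawn from an arbitrary pmf instead.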

2. Coding for Markov sources

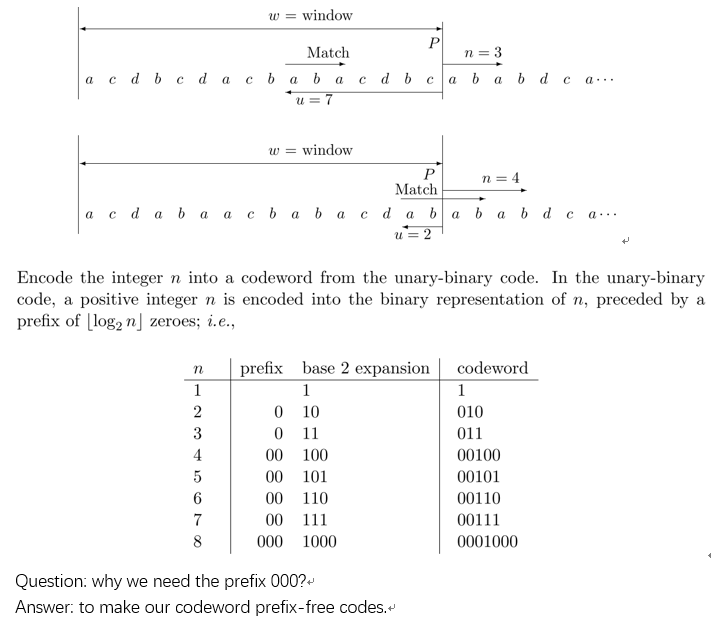

3. Lempel-Ziv universal data compression

(Reference: https://www.jianshu.com/p/89dd96537d9d)

In general, there are two approaches to compression coding: (1) removing statistical redundancy by exploiting symbol probabilities, as in Huffman coding; (2) removing repetition by replacing repeated strings with references to earlier occurrences, as in LZ77.
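
To make the repetition-removal idea concrete, here is a minimal LZ77-style sketch. It is a simplified illustration, not a reference implementation: the function names, the `window` parameter, and the (offset, length, next character) triple format are assumptions chosen for readability, and the brute-force match search ignores the efficiency concerns of a practical encoder.

```python
def lz77_encode(data, window=32):
    """Encode a string as (offset, length, next_char) triples.

    ASSUMPTION: simplified LZ77 variant with a brute-force search of the
    last `window` characters; real encoders bound the look-ahead as well.
    """
    i = 0
    triples = []
    while i < len(data):
        best_off, best_len = 0, 0
        for j in range(max(0, i - window), i):
            length = 0
            # Keep one character in reserve for next_char.
            # The match is allowed to run past position i (overlapping copy).
            while (i + length < len(data) - 1
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_off, best_len = i - j, length
        triples.append((best_off, best_len, data[i + best_len]))
        i += best_len + 1
    return triples


def lz77_decode(triples):
    """Rebuild the string by copying `length` characters from `offset` back."""
    out = []
    for offset, length, ch in triples:
        for _ in range(length):
            # Copy one character at a time so overlapping matches work.
            out.append(out[-offset])
        out.append(ch)
    return "".join(out)
```

With this sketch, `lz77_encode("aaaaaaaa")` yields `[(0, 0, 'a'), (1, 6, 'a')]`: the first `a` is sent literally, and the rest is described as a copy from one position back. Unlike Huffman coding, no symbol probabilities are needed in advance.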