Optimizing Debezium Extraction of Large Tables

Symptoms

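When using Debezium to extract a table with tens of millions of rows, I found that during the snapshot phase the sync rate was only about 10,000 rows every 2 seconds, and young GC messages were being logged at the same time.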
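To put that rate in perspective, here is a small back-of-the-envelope calculation. The 10,000 rows per 2 seconds comes from the logs above; the table size of exactly 10 million rows is an assumption chosen for illustration, and the class and method names are hypothetical.

```java
final class SnapshotRateEstimate {

    // Observed throughput: rows scanned divided by the elapsed seconds.
    static double rowsPerSecond(long rows, double seconds) {
        return rows / seconds;
    }

    // Time needed to scan a table of the given size at a constant rate.
    static double secondsToScan(long totalRows, double rowsPerSecond) {
        return totalRows / rowsPerSecond;
    }

    public static void main(String[] args) {
        double rate = rowsPerSecond(10_000L, 2.0);            // from the log interval
        double seconds = secondsToScan(10_000_000L, rate);    // assumed table size
        System.out.println("rows per second: " + rate);
        System.out.println("minutes for the assumed table: " + seconds / 60.0);
    }
}
```

At 5,000 rows per second, a 10-million-row table would take roughly 33 minutes to snapshot, which is why the rate was worth investigating.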

Root Cause Analysis

Network

First, rule out network latency: pinging the target host showed a latency of about 0.1 ms, so the network is not the bottleneck.

The Connector Itself

Looking at the SnapshotReader source (excerpt):

    // Scan the rows in the table ...
    long start = clock.currentTimeInMillis();
    logger.info("Step {}: - scanning table '{}' ({} of {} tables)", step, tableId, ++counter, capturedTableIds.size());

    Map<TableId, String> selectOverrides = context.getConnectorConfig().getSnapshotSelectOverridesByTable();

    String selectStatement = selectOverrides.getOrDefault(tableId, "SELECT * FROM " + quote(tableId));
    logger.info("For table '{}' using select statement: '{}'", tableId, selectStatement);
    sql.set(selectStatement);

    try {
        int stepNum = step;
        mysql.query(sql.get(), statementFactory, rs -> {
            try {
                // The table is included in the connector's filters, so process all of the table records
                // ...
                final Table table = schema.tableFor(tableId);
                final int numColumns = table.columns().size();
                final Object[] row = new Object[numColumns];
                while (rs.next()) {
                    for (int i = 0, j = 1; i != numColumns; ++i, ++j) {
                        Column actualColumn = table.columns().get(i);
                        row[i] = readField(rs, j, actualColumn, table);
                    }
                    recorder.recordRow(recordMaker, row, clock.currentTimeAsInstant()); // has no row number!
                    rowNum.incrementAndGet();
                    if (rowNum.get() % 100 == 0 && !isRunning()) {
                        // We've stopped running ...
                        break;
                    }
                    if (rowNum.get() % 10_000 == 0) {
                        if (logger.isInfoEnabled()) {
                            long stop = clock.currentTimeInMillis();
                            logger.info("Step {}: - {} of {} rows scanned from table '{}' after {}",
                                    stepNum, rowNum, rowCountStr, tableId, Strings.duration(stop - start));
                        }
                        metrics.rowsScanned(tableId, rowNum.get());
                    }
                }

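So by default it issues a SELECT * against the table, appears to hold and count the whole table in memory, and only then iterates over the rows to send the data.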
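Whether a result set is held in memory or read row by row is decided when the JDBC statement is created. The following is a minimal sketch, assuming MySQL Connector/J semantics: a forward-only, read-only statement with fetch size Integer.MIN_VALUE streams rows, while the default statement buffers the full result set on the client. The class name, the shouldStream helper, and its rowThreshold parameter are hypothetical and only approximate the idea; this is not Debezium's actual code.

```java
import java.sql.Connection;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

final class SnapshotStatementSketch {

    // Hypothetical decision rule: stream when the estimated row count exceeds
    // the threshold. A threshold of 0 is treated as "always stream".
    static boolean shouldStream(long estimatedRows, long rowThreshold) {
        return rowThreshold <= 0 || estimatedRows > rowThreshold;
    }

    // Creates a statement that either buffers the whole result set (driver
    // default) or asks MySQL Connector/J to stream rows one at a time.
    static Statement createStatement(Connection connection, boolean stream) throws SQLException {
        Statement statement = connection.createStatement(
                ResultSet.TYPE_FORWARD_ONLY, ResultSet.CONCUR_READ_ONLY);
        if (stream) {
            // Connector/J treats Integer.MIN_VALUE as a request to stream results.
            statement.setFetchSize(Integer.MIN_VALUE);
        }
        return statement;
    }
}
```

With a buffered statement, every row of a very large table is materialized on the heap before the loop above sees the first one, which is consistent with the GC activity in the logs. Streaming keeps memory flat but holds the connection busy until the result set is fully read.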

Solution

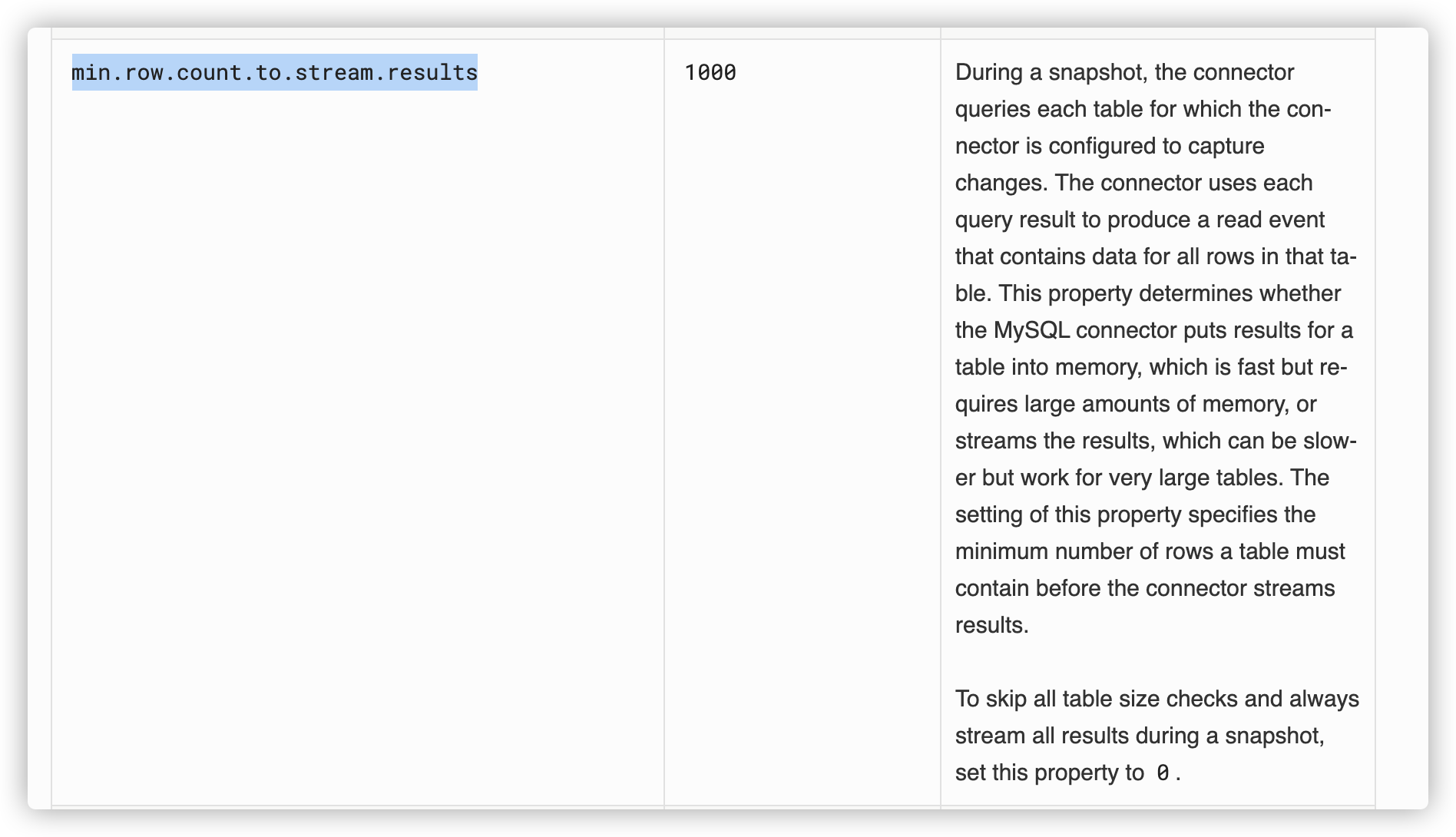

Searching the official documentation for snapshot-related settings turned up a parameter, min.row.count.to.stream.results:

https://debezium.io/documentation/reference/1.4/connectors/mysql.html#mysql-property-min-row-count-to-stream-results

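After setting this parameter, the logs no longer showed the SELECT * step; rows were sent directly in batches of 10,000, and throughput improved by about 20x.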
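As a rough illustration of where the setting goes, here is a sketch of a connector configuration built as a Java map. The post does not say which value was used; the "0" below is an assumption, chosen because the documentation describes it as skipping the table size check and always streaming. The hostname and table name are hypothetical placeholders, and a real configuration needs further properties (credentials, server id, history topic, and so on).

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class MySqlConnectorConfigSketch {

    // Builds a partial Debezium MySQL connector configuration.
    static Map<String, String> snapshotConfig() {
        Map<String, String> config = new LinkedHashMap<>();
        config.put("connector.class", "io.debezium.connector.mysql.MySqlConnector");
        config.put("database.hostname", "mysql.example.internal"); // hypothetical host
        config.put("table.include.list", "inventory.big_table");   // hypothetical table
        // Assumed value: 0 is documented as "always stream results during a snapshot".
        config.put("min.row.count.to.stream.results", "0");
        return config;
    }

    public static void main(String[] args) {
        snapshotConfig().forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

The same key/value pairs can be placed in the JSON body posted to Kafka Connect; the map form is used here only to keep the example in one language.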

