Common k8s commands

Generate a new token for joining a node to the cluster:

kubeadm token create --print-join-command

Look up the documentation for a resource, i.e. what its YAML fields mean (using deployment as an example):

kubectl explain deployment

kubectl explain deployment.spec

1. Namespaces (namespace / ns)

Method 1:

Create: kubectl create ns hello-ns

Delete: kubectl delete ns hello-ns

List: kubectl get ns

Method 2 (the universal approach): create from a YAML manifest. Create ns-create.yaml:

apiVersion: v1
kind: Namespace
metadata:
  name: hello

Create: kubectl apply -f ns-create.yaml

Delete: kubectl delete -f ns-create.yaml

2. Pod commands

Method 1: create from the command line

Create: kubectl run nginx --image=nginx

List: kubectl get pods -A -o wide

Describe: kubectl describe pod <pod-name>

Delete: kubectl delete pod nginx

Container logs: kubectl logs -c <container-name> <pod-name>

Run a command in one container of a pod: kubectl exec -it <pod-name> -c <container-name> -- <command>, e.g. kubectl exec -it nginx -c nginx -- /bin/bash

Method 2 (universal): create with kubectl apply -f xxx.yaml, delete with kubectl delete -f xxx.yaml

apiVersion: v1
kind: Pod
metadata:
  # Pod names must be valid DNS names, so no underscores
  name: nginx-tomcat
  labels:
    app: nginx-tomcat
  namespace: hello
spec:
  containers:
    - name: nginx
      image: nginx
      imagePullPolicy: IfNotPresent
    - name: tomcat
      image: tomcat:8.5.68
      imagePullPolicy: IfNotPresent
  restartPolicy: Always

3. Deployments

Method 1:

Create a deployment: kubectl -n hello create deploy my-first-deploy --image=nginx --replicas=3

Delete a deployment: kubectl delete deploy my-first-deploy -n hello

Scale up or down: kubectl scale deploy --current-replicas=3 --replicas=4 my-first-deploy -n hello

Rolling update (ngnix-deplyment is the container name from the manifest below): kubectl set image deploy mydeployment ngnix-deplyment=nginx:1.9.1 -n hello

Check rollout status: kubectl rollout status deploy mydeployment -n hello

Show rollout history: kubectl rollout history deploy mydeployment -n hello

Roll back to a specific revision: kubectl rollout undo deployment mydeployment --to-revision=1 -n hello
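The rollout commands above can be chained into one guarded update. This is only a sketch under assumptions: the helpers run and update_image are names I made up, the 60s timeout is an arbitrary value, and by default the script only prints the kubectl commands (set DRY_RUN=0 to actually run them against a cluster).

```shell
#!/bin/sh
# Sketch: update an image, wait for the rollout, roll back if it does not finish.
# DRY_RUN defaults to 1, so commands are printed rather than executed.
DRY_RUN="${DRY_RUN:-1}"

# run: hypothetical helper that either prints or executes a command.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# update_image <deployment> <container> <image> <namespace>
update_image() {
  deploy="$1"; container="$2"; image="$3"; ns="$4"
  run kubectl set image "deploy/$deploy" "$container=$image" -n "$ns" || return 1
  # The 60s timeout is an assumed value; tune it for your workload.
  if ! run kubectl rollout status "deploy/$deploy" -n "$ns" --timeout=60s; then
    # The rollout did not complete in time: return to the previous revision.
    run kubectl rollout undo "deploy/$deploy" -n "$ns"
    return 1
  fi
}

update_image mydeployment ngnix-deplyment nginx:1.9.1 hello
```

In dry-run mode the rollback branch is never reached, because printing a command always succeeds; it only matters when DRY_RUN=0.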

Method 2 (universal): create with kubectl apply -f xxx.yaml, delete with kubectl delete -f xxx.yaml, scale by changing replicas with kubectl edit deployment xxx -n hello

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mydeployment
  namespace: hello
  labels:
    app: mydeployment
spec:
  replicas: 3
  template:
    metadata:
      name: mydeply
      labels:
        app: mydeply
    spec:
      containers:
        - name: ngnix-deplyment
          image: nginx
          imagePullPolicy: IfNotPresent
        - name: tomcat-deplayment
          image: tomcat:8.5.68
          imagePullPolicy: IfNotPresent
      restartPolicy: Always
  selector:
    matchLabels:
      app: mydeply

4. Creating a Service

Method 1: create from the command line:

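Create a service, i.e. expose a deployment as a service.

1. Default mode, based on ClusterIP

First look up the deployment's labels: kubectl get deploy --show-labels -A

Find the matching deployment, then create the service (-n hello selects the hello namespace): kubectl expose deployment my-nginx --port=8088 --target-port=80 --type=ClusterIP -n hello

The service is reachable only from inside the cluster. Look up its IP: kubectl get svc -n hello

Then access it at http://<service-ip>:8088

From other pods, use <service-name>.<namespace>:8088, which here is http://my-nginx.hello:8088

2. Based on NodePort (adds one more access path on top of ClusterIP: <node-ip>:<node-port>)

Create: kubectl expose deployment my-nginx --port=8088 --target-port=80 --type=NodePort -n hello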
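The short name my-nginx.hello is an abbreviation of the service's full DNS name, <service>.<namespace>.svc.<cluster-domain>. A small sketch that builds the full URL; service_url is a hypothetical helper, and cluster.local is assumed as the cluster domain (check your own cluster if it was set up differently).

```shell
#!/bin/sh
# service_url <service> <namespace> <port> [cluster-domain]
# Prints the fully qualified in-cluster URL of a service.
service_url() {
  svc="$1"; ns="$2"; port="$3"
  domain="${4:-cluster.local}"   # assumed default cluster domain
  echo "http://${svc}.${ns}.svc.${domain}:${port}"
}

service_url my-nginx hello 8088
# prints http://my-nginx.hello.svc.cluster.local:8088
```

The full name is handy when the caller runs in a different namespace or when the short form is ambiguous.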

Method 2 (universal): create from YAML with kubectl apply -f xxx.yaml, delete with kubectl delete -f xxx.yaml

apiVersion: v1
kind: Service
metadata:
  name: hello-service
  namespace: hello
spec:
  selector:
    # This label must match the pod labels in the deployment's template, not the deployment's own labels.
    # Pod labels can be listed with: kubectl get pod --show-labels -A
    app: mydeply
  ports:
    - port: 8089
      targetPort: 80
      nodePort: 30033
  type: NodePort

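5. Ingress: forwards requests to services based on the host name. Download the manifest and install it (kubectl apply -f deploy.yaml): wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/baremetal/deploy.yaml

The ingress controller is itself a service exposed via NodePort; in my setup the exposed port is 32721

Expose services through an Ingress: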

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-ingress
  namespace: hello
  annotations:
    nginx.ingress.kubernetes.io/limit-rps: "1"  # rate limiting
spec:
  ingressClassName: nginx
  rules:
    - host: "hello.shop.com"
      http:
        paths:
          - path: "/"
            pathType: Prefix
            backend:
              service:
                name: hello-service
                port:
                  number: 8089
    - host: "hello.tom.com"
      http:
        paths:
          - path: "/kk"
            pathType: Prefix
            backend:
              service:
                name: tomcat-service
                port:
                  number: 8077  # for a NodePort service, use the in-cluster port here, not the nodePort

For the example above, on Windows map the host names to one of the Linux servers:

After that, opening http://hello.shop.com:32721 from Windows reaches the pods behind hello-service, and http://hello.tom.com:32721/kk reaches the pods behind tomcat-service
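The routing can also be checked without any host-name mapping by sending the request straight to a node and setting the Host header by hand. A sketch that only prints the curl commands: ingress_curl is a hypothetical helper, and 192.0.2.10 is a placeholder documentation address, not a real node IP.

```shell
#!/bin/sh
# NODE_IP is a placeholder (documentation address); replace it with a real node IP.
NODE_IP="${NODE_IP:-192.0.2.10}"
# NodePort of the ingress controller, as noted in the text.
INGRESS_PORT="${INGRESS_PORT:-32721}"

# ingress_curl <host> <path>
# Prints a curl command that targets the node but presents <host> in the
# Host header, which is what the ingress rules match on.
ingress_curl() {
  echo "curl -H 'Host: $1' http://${NODE_IP}:${INGRESS_PORT}$2"
}

ingress_curl hello.shop.com /
ingress_curl hello.tom.com /kk
```

Run the printed commands from any machine that can reach the node on the NodePort.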

List ingresses: kubectl get ingress -n hello

Common mistake: the service, deployment, pod and ingress must all be in the same namespace

6. Storage

a: NFS storage: how it works

# Install on all machines

yum install -y nfs-utils

# On the NFS server (primary node)
echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports
mkdir -p /nfs/data
systemctl enable rpcbind --now
systemctl enable nfs-server --now
# Apply the export configuration
exportfs -r

# On the NFS clients (other nodes)

showmount -e 172.31.0.4
# Mount the shared directory from the NFS server at the local path /nfs/data
mkdir -p /nfs/data
# Attach the server's directory to this node
mount -t nfs 172.31.0.4:/nfs/data /nfs/data
# Write a test file
echo "hello nfs server" > /nfs/data/test.txt

Create a deployment to test it: first create an nginx directory under /nfs/data on any node, then create an index.html inside it

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-nsf
  name: nginx-nsf
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-nsf
  template:
    metadata:
      labels:
        app: nginx-nsf
    spec:
      containers:
        - image: nginx
          name: nginx
          volumeMounts:
            - mountPath: /usr/share/nginx/html
              name: index
      volumes:
        - name: index
          nfs:
            path: /nfs/data/nginx
            server: 192.168.233.10

Once it is running, nginx serves the index.html you wrote. Delete the deployment and create it again, and the page is still served

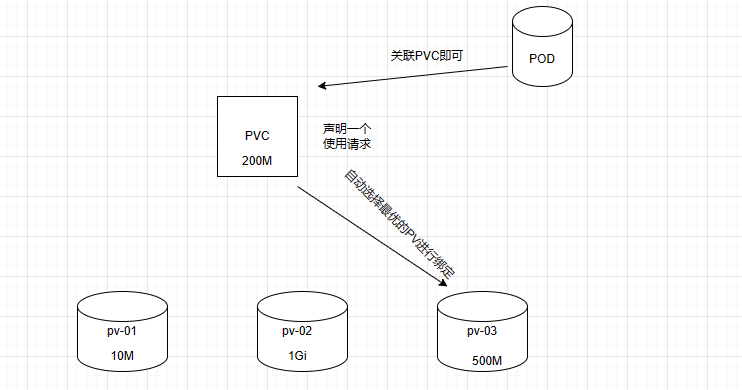

b: Using PV and PVC: how it works:

Create three directories under /nfs/data/: pv-01 pv-02 pv-03

# Create three persistent volumes, pv-01, pv-02 and pv-03, and declare their sizes
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-01
spec:
  capacity:
    storage: 10M
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/pv-01
    server: 192.168.233.10
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-02
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/pv-02
    server: 192.168.233.10
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-03
spec:
  capacity:
    storage: 500M
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/pv-03
    server: 192.168.233.10
---
# Create a persistent volume claim and declare how much storage it needs
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
  storageClassName: nfs
---
# Bind the pods to the PVC
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy-pvc
  name: nginx-deploy-pvc
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-deploy-pvc
  template:
    metadata:
      labels:
        app: nginx-deploy-pvc
    spec:
      containers:
        - image: nginx
          name: nginx
          volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
      volumes:
        - name: html
          persistentVolumeClaim:
            claimName: nginx-pvc

List PVs: kubectl get pv

List PVCs: kubectl get pvc
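Which of the three PVs does the 200Mi claim end up on? As far as I understand, when the storage class and access mode match, the claim is bound to the smallest PV that is still large enough. The sketch below only redoes that arithmetic locally; to_bytes and smallest_fit are hypothetical helpers, they handle just the M/Mi/G/Gi suffixes, and the real answer is whatever the CLAIM column of kubectl get pv shows.

```shell
#!/bin/sh
# to_bytes <quantity>
# Converts a small subset of Kubernetes quantities (M, Mi, G, Gi) to bytes.
to_bytes() {
  case "$1" in
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *M)  echo $(( ${1%M} * 1000 * 1000 )) ;;
    *G)  echo $(( ${1%G} * 1000 * 1000 * 1000 )) ;;
    *)   echo "$1" ;;
  esac
}

# smallest_fit <request> <name=capacity>...
# Prints the name of the smallest volume whose capacity covers the request.
smallest_fit() {
  need=$(to_bytes "$1"); shift
  best=""; best_size=0
  for pv in "$@"; do
    name="${pv%%=*}"
    size=$(to_bytes "${pv#*=}")
    if [ "$size" -ge "$need" ] && { [ -z "$best" ] || [ "$size" -lt "$best_size" ]; }; then
      best="$name"; best_size="$size"
    fi
  done
  echo "$best"
}

smallest_fit 200Mi pv-01=10M pv-02=1Gi pv-03=500M
# prints pv-03
```

200Mi is 209,715,200 bytes, so pv-01 (10,000,000 bytes) is too small, and pv-03 (500,000,000 bytes) is a tighter fit than pv-02 (1,073,741,824 bytes).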

c: Using a ConfigMap, typically to supply configuration files

apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-config
data:
  redis.conf: |
    appendonly yes
---
apiVersion: v1
kind: Pod
metadata:
  name: redis
spec:
  containers:
    - name: redis
      image: redis
      command:
        - redis-server
        - "/redis-master/redis.conf"  # path inside the redis container
      ports:
        - containerPort: 6379
      volumeMounts:
        - mountPath: /data
          name: data
        - mountPath: /redis-master
          name: config
  volumes:
    - name: data
      emptyDir: {}
    - name: config
      configMap:
        name: redis-config
        items:
          - key: redis.conf
            path: redis.conf

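List ConfigMaps: kubectl get cm

Create a ConfigMap from the command line: kubectl create cm redis-conf --from-file=redis.conf

After creating the pod above, open a shell in the container to check the configuration: kubectl exec -it redis -c redis -- /bin/bash, run redis-cli, then run config get appendonly and check that the result is correct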

d: Using a Secret: when pulling an image from a private registry requires a username and password, a secret can supply them:

Create the secret: kubectl create secret docker-registry my-docker-secret --docker-username=user --docker-password=password --docker-email=email

Usage:

apiVersion: v1
kind: Pod
metadata:
  name: private-nginx
spec:
  containers:
    - name: private-nginx
      image: yangxiaohui/nginx:v1.0
  imagePullSecrets:
    - name: my-docker-secret
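A docker-registry secret stores a Docker config whose auth value is just base64 of "<user>:<password>", so it is encoded, not encrypted. A small local sketch with the placeholder credentials user and password from the command above; it does not talk to a cluster.

```shell
#!/bin/sh
# Placeholder credentials, same as in the kubectl create secret example.
user="user"
password="password"

# The "auth" value in the stored Docker config is base64("<user>:<password>").
auth=$(printf '%s:%s' "$user" "$password" | base64)
echo "$auth"

# Decoding gives the credentials back, so anyone who can read the secret can read the password.
printf '%s' "$auth" | base64 -d
echo
```

On a real cluster the stored config can be inspected with kubectl get secret my-docker-secret -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d.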

e. Creating a stateful service: StatefulSet

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: statefulset-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 800M
  storageClassName: nfs
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
      volumes:
        - name: www
          persistentVolumeClaim:
            claimName: statefulset-pvc

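From other pods, each replica can be reached by its DNS name: web-0.nginx.default.svc.cluster.local or web-1.nginx.default.svc.cluster.local, because pods created by a StatefulSet are named <statefulset-name>-0, <statefulset-name>-1, and so on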
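The naming pattern is <pod-name>.<headless-service>.<namespace>.svc.<cluster-domain>. A sketch that lists the names for a given replica count; pod_dns_names is a hypothetical helper, and cluster.local is assumed as the cluster domain.

```shell
#!/bin/sh
# pod_dns_names <statefulset> <headless-service> <namespace> <replicas>
# Prints one stable DNS name per replica, ordinals starting at 0.
pod_dns_names() {
  i=0
  while [ "$i" -lt "$4" ]; do
    # cluster.local is the assumed cluster domain
    echo "$1-$i.$2.$3.svc.cluster.local"
    i=$((i + 1))
  done
}

pod_dns_names web nginx default 2
# prints:
# web-0.nginx.default.svc.cluster.local
# web-1.nginx.default.svc.cluster.local
```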

f: DaemonSet: daemon pods, typically used for log collection

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  selector:
    matchLabels:
      name: fluentd-elasticsearch
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
    spec:
      tolerations:
        # These tolerations let the DaemonSet run on control plane nodes
        # Remove them if you do not want your control plane nodes to run pods
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoSchedule
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
      containers:
        - name: fluentd-elasticsearch
          image: quay.io/fluentd_elasticsearch/fluentd:v2.5.2
          resources:
            limits:
              memory: 200Mi
            requests:
              cpu: 100m
              memory: 200Mi
          volumeMounts:
            - name: varlog
              mountPath: /var/log
      terminationGracePeriodSeconds: 30
      volumes:
        - name: varlog
          hostPath:
            path: /var/log