Installing Kubernetes 1.16.2 with kubeadm

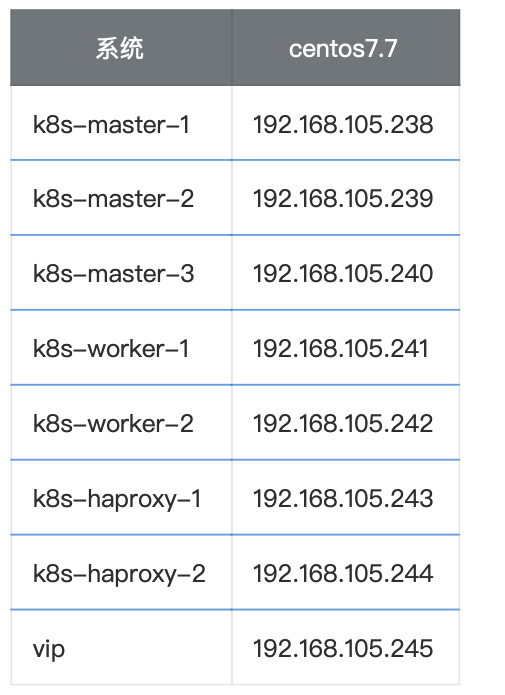

Environment overview

Environment preparation

    # Change the hostname
    hostnamectl set-hostname your-new-host-name
    # Check the result
    hostnamectl status
    # Set up hostname resolution
    echo "127.0.0.1 $(hostname)" >> /etc/hosts
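
The `echo ... >> /etc/hosts` step appends a new line every time it is run. As a minimal sketch, the append can be made safe to repeat. `ensure_hosts_entry` is a hypothetical helper name introduced here, not something from the original steps.

```bash
#!/bin/bash
# ensure_hosts_entry is a hypothetical helper (an assumption, not part of the
# original steps). It appends "IP NAME" to a hosts file only when that exact
# line is not already present.
ensure_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
  if ! grep -qxF "${ip} ${name}" "$file" 2>/dev/null; then
    echo "${ip} ${name}" >> "$file"
  fi
}
```

Usage would look like `ensure_hosts_entry 127.0.0.1 "$(hostname)"`. The third argument defaults to `/etc/hosts`.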

Install docker, kubelet, kubectl, and kubeadm

    #!/bin/bash

    # Run on both master and worker nodes

    # Install docker
    # Reference documentation:
    # https://docs.docker.com/install/linux/docker-ce/centos/
    # https://docs.docker.com/install/linux/linux-postinstall/

    # Remove old versions
    yum remove -y docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-selinux \
    docker-engine-selinux \
    docker-engine

    # Set up the yum repository
    yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2
    yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

    # Install and start docker
    yum install -y docker-ce-18.09.7 docker-ce-cli-18.09.7 containerd.io
    systemctl enable docker
    systemctl start docker

    # Install nfs-utils
    # nfs-utils must be installed before NFS network storage can be mounted
    yum install -y nfs-utils
    yum install -y wget

    # Disable the firewall
    systemctl stop firewalld
    systemctl disable firewalld

    # Disable SELinux
    setenforce 0
    sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

    # Disable swap
    swapoff -a
    yes | cp /etc/fstab /etc/fstab_bak
    cat /etc/fstab_bak |grep -v swap > /etc/fstab

    # Modify /etc/sysctl.conf
    # If the settings already exist, update them
    sed -i "s#^net.ipv4.ip_forward.*#net.ipv4.ip_forward=1#g" /etc/sysctl.conf
    sed -i "s#^net.bridge.bridge-nf-call-ip6tables.*#net.bridge.bridge-nf-call-ip6tables=1#g" /etc/sysctl.conf
    sed -i "s#^net.bridge.bridge-nf-call-iptables.*#net.bridge.bridge-nf-call-iptables=1#g" /etc/sysctl.conf
    # They may be missing, so append them
    echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf
    echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf
    echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf
    # Apply the settings
    sysctl -p

    # Configure the Kubernetes yum repository
    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

    # Remove old versions
    yum remove -y kubelet kubeadm kubectl

    # Install kubelet, kubeadm, and kubectl
    yum install -y kubelet-1.16.2 kubeadm-1.16.2 kubectl-1.16.2

    # Change the docker cgroup driver to systemd
    # # In /usr/lib/systemd/system/docker.service, change this line:
    # #   ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
    # # to:
    # #   ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd
    # Without this change, you may see the following message when adding a worker node:
    # [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd".
    # Please follow the guide at https://kubernetes.io/docs/setup/cri/
    sed -i "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#g" /usr/lib/systemd/system/docker.service

    # Configure a docker registry mirror to make image pulls faster and more reliable
    # If your access to https://hub.docker.io is already stable, you can skip this step
    curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://f1361db2.m.daocloud.io

    # Restart docker and start kubelet
    systemctl daemon-reload
    systemctl restart docker
    systemctl enable kubelet && systemctl start kubelet

    docker version
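
The sysctl step above first rewrites existing keys with `sed` and then appends the same keys with `echo`, so `/etc/sysctl.conf` ends up with duplicate entries, and gains more on every rerun. One possible alternative, sketched below, is a single "replace if present, otherwise append" helper. `set_sysctl` is a hypothetical name, and the sketch assumes GNU `sed` as shipped on CentOS.

```bash
#!/bin/bash
# set_sysctl is a hypothetical helper (an assumption, not from the original script).
# It rewrites "key = value" in place when the key already exists in the file,
# and appends the line otherwise, so repeated runs do not create duplicates.
set_sysctl() {
  local key="$1" value="$2" file="${3:-/etc/sysctl.conf}"
  local pattern
  touch "$file"
  # Escape the dots in the key so they match literally in the regular expressions.
  pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -qE "^[[:space:]]*${pattern}[[:space:]]*=" "$file"; then
    sed -i -E "s#^[[:space:]]*${pattern}[[:space:]]*=.*#${key} = ${value}#" "$file"
  else
    echo "${key} = ${value}" >> "$file"
  fi
}
```

For example, `set_sysctl net.ipv4.ip_forward 1` followed by `sysctl -p` would replace the corresponding `sed` and `echo` pair.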

Initialize the first master node

    # Run only on the first master node
    # Replace apiserver.demo with the dnsName you want
    export APISERVER_NAME=apiserver.demo
    # The subnet used by Kubernetes pods. Kubernetes creates it after installation; it does not exist in your physical network beforehand
    export POD_SUBNET=10.100.0.0/16
    echo "127.0.0.1 ${APISERVER_NAME}" >> /etc/hosts

    #!/bin/bash

    # Run only on the master node

    # Abort the script on error
    set -e

    # Full list of configuration options: https://godoc.org/k8s.io/kubernetes/cmd/kubeadm/app/apis/kubeadm/v1beta2
    rm -f ./kubeadm-config.yaml
    cat <<EOF > ./kubeadm-config.yaml
    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterConfiguration
    kubernetesVersion: v1.16.2
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    controlPlaneEndpoint: "${APISERVER_NAME}:6443"
    networking:
      serviceSubnet: "10.96.0.0/16"
      podSubnet: "${POD_SUBNET}"
      dnsDomain: "cluster.local"
    EOF

    # kubeadm init
    # Depending on your server's network speed, this takes about 3 to 10 minutes
    kubeadm init --config=kubeadm-config.yaml --upload-certs

    # Configure kubectl
    rm -rf /root/.kube/
    mkdir /root/.kube/
    cp -i /etc/kubernetes/admin.conf /root/.kube/config

    # Install the calico network plugin
    # Reference documentation: https://docs.projectcalico.org/v3.9/getting-started/kubernetes/
    rm -f calico-3.9.2.yaml
    wget https://kuboard.cn/install-script/calico/calico-3.9.2.yaml
    sed -i "s#192\.168\.0\.0/16#${POD_SUBNET}#" calico-3.9.2.yaml
    kubectl apply -f calico-3.9.2.yaml
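
After the network plugin is applied, the node usually takes a little while to become `Ready`. A small sketch for checking this from a script is shown below. `count_not_ready` is a hypothetical helper that only parses text, and it assumes the default column layout of `kubectl get nodes --no-headers` (NAME, then STATUS).

```bash
#!/bin/bash
# count_not_ready is a hypothetical helper (an assumption, not from the original).
# It reads the output of `kubectl get nodes --no-headers` on stdin and prints
# how many nodes have a STATUS column that does not start with "Ready".
count_not_ready() {
  # NF skips empty lines; $2 is the STATUS column, e.g. Ready or NotReady.
  awk 'NF && $2 !~ /^Ready/ { n++ } END { print n + 0 }'
}
```

A possible wait loop would be `until [ "$(kubectl get nodes --no-headers | count_not_ready)" = "0" ]; do sleep 5; done`.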

Initialize the other masters

1. Obtain the certificate key

    [root@k8s-master-1 ~]# kubeadm init phase upload-certs --upload-certs
    W0902 09:05:28.355623 1046 version.go:98] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)
    W0902 09:05:28.355718 1046 version.go:99] falling back to the local client version: v1.16.2
    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    [upload-certs] Using certificate key: 70eb87e62f052d2d5de759969d5b42f372d0ad798f98df38f7fe73efdf63a13c

2. Obtain the join command

    [root@k8s-master-1 ~]# kubeadm token create --print-join-command
    kubeadm join apiserver.demo:6443 --token bl80xo.hfewon9l5jlpmjft --discovery-token-ca-cert-hash sha256:b4d2bed371fe4603b83e7504051dcfcdebcbdcacd8be27884223c4ccc13059a4

Combined command

    kubeadm join apiserver.demo:6443 --token ejwx62.vqwog6il5p83uk7y \
    --discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303 \
    --control-plane --certificate-key 70eb87e62f052d2d5de759969d5b42f372d0ad798f98df38f7fe73efdf63a13c
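
The combined command is the worker join command with `--control-plane --certificate-key <key>` appended. As a rough sketch, the two pieces can be assembled in a script rather than pasted by hand. Both function names below are hypothetical, and the key extraction assumes the certificate key is the only 64-character hex string in the `upload-certs` output.

```bash
#!/bin/bash
# Both functions are hypothetical helpers for illustration (assumptions, not
# part of the original steps).

# extract_cert_key reads the output of
# `kubeadm init phase upload-certs --upload-certs` on stdin and prints the
# last 64-character lowercase hex string it finds.
extract_cert_key() {
  grep -oE '[0-9a-f]{64}' | tail -n 1
}

# build_control_plane_join appends the control-plane flags to a worker join
# command such as the one printed by `kubeadm token create --print-join-command`.
build_control_plane_join() {
  local join_cmd="$1" cert_key="$2"
  echo "${join_cmd} --control-plane --certificate-key ${cert_key}"
}
```

On the first master this might be used as `build_control_plane_join "$(kubeadm token create --print-join-command)" "$(kubeadm init phase upload-certs --upload-certs 2>&1 | extract_cert_key)"`.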

Add the second and third masters

    # Run only on the second and third master nodes, demo-master-b-1 and demo-master-b-2
    # Replace x.x.x.x with the IP address (VIP) of the ApiServer LoadBalancer
    export APISERVER_IP=x.x.x.x
    # Replace apiserver.demo with the dnsName used earlier
    export APISERVER_NAME=apiserver.demo
    echo "${APISERVER_IP} ${APISERVER_NAME}" >> /etc/hosts
    # Use the join command for the second and third master nodes obtained in the previous steps
    kubeadm join apiserver.demo:6443 --token ejwx62.vqwog6il5p83uk7y \
    --discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303 \
    --control-plane --certificate-key 70eb87e62f052d2d5de759969d5b42f372d0ad798f98df38f7fe73efdf63a13c

Get the command for adding workers

    # Run only on the first master node, demo-master-a-1
    kubeadm token create --print-join-command

    kubeadm join apiserver.demo:6443 --token mpfjma.4vjjg8flqihor4vt --discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303

Add worker nodes

    # Run only on worker nodes
    # Replace x.x.x.x with the IP address of the ApiServer LoadBalancer
    export MASTER_IP=x.x.x.x
    # Replace apiserver.demo with the APISERVER_NAME used when initializing the master node
    export APISERVER_NAME=apiserver.demo
    echo "${MASTER_IP} ${APISERVER_NAME}" >> /etc/hosts
    # Replace with the output of the earlier kubeadm token create --print-join-command
    kubeadm join apiserver.demo:6443 --token mpfjma.4vjjg8flqihor4vt --discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303
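
A join command that was copied with a flag cut off or a line broken in the wrong place fails only once it is run on the worker. The sketch below is one way to sanity-check the string first. `check_join_command` is a hypothetical helper. It relies on the bootstrap token format `[a-z0-9]{6}.[a-z0-9]{16}` and on the CA hash being `sha256:` followed by 64 hex characters.

```bash
#!/bin/bash
# check_join_command is a hypothetical helper (an assumption, not from the
# original). It returns 0 only when the given string contains both a
# well-formed --token and a well-formed --discovery-token-ca-cert-hash.
check_join_command() {
  local cmd="$1"
  # Bootstrap tokens look like abcdef.0123456789abcdef
  echo "$cmd" | grep -qE -e '--token [a-z0-9]{6}\.[a-z0-9]{16}( |$)' || return 1
  # The CA certificate hash is "sha256:" plus 64 lowercase hex characters
  echo "$cmd" | grep -qE -e '--discovery-token-ca-cert-hash sha256:[0-9a-f]{64}( |$)' || return 1
  return 0
}
```

For example, `check_join_command "$JOIN_CMD" && eval "$JOIN_CMD"`, where `JOIN_CMD` is an assumed variable holding the pasted command.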

Remove a worker node

    # Run on the worker that is being removed
    kubeadm reset

    # Run on a master
    kubectl delete node demo-worker-x-x
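
The removal sequence can be written down as a small plan printer, so that the node name is typed only once. This is a sketch only: `print_remove_worker_plan` is a hypothetical helper that prints commands without running them, and the `kubectl drain` step is an assumption added here (it is not in the original steps) to evict pods before the node is reset.

```bash
#!/bin/bash
# print_remove_worker_plan is a hypothetical helper (an assumption, not from
# the original). It only prints the commands; nothing is executed.
print_remove_worker_plan() {
  local node="$1"
  # Assumed extra step, run on a master: evict pods before removing the node.
  echo "kubectl drain ${node} --ignore-daemonsets"
  # From the original steps, run on the worker being removed.
  echo "kubeadm reset"
  # From the original steps, run on a master.
  echo "kubectl delete node ${node}"
}
```

Calling `print_remove_worker_plan demo-worker-x-x` lists the three commands in order, and each one is then run on the machine named in its comment.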

Install ingress-nginx

    kubectl apply -f https://kuboard.cn/install-script/v1.16.2/nginx-ingress.yaml
