PyTorch 2.1 Basics: A Crash Course

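Tensors

In TensorFlow, the core unit of data is the Tensor. In PyTorch, the Tensor likewise stores the basic data, and PyTorch provides a rich set of functions and methods for working with it. A PyTorch Tensor is very similar to a NumPy array. In addition, the Tensor data types defined in PyTorch can run computations on GPUs, and this only requires a simple conversion of the variable.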
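As a minimal sketch of the "simple conversion" mentioned above, the snippet below moves a tensor to a GPU when one is available and falls back to the CPU otherwise. The variable names are illustrative, and `.to(device)` is only one common way to do the conversion.

```python
import torch

# Pick the GPU when CUDA is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.tensor([1.0, 2.0, 3.0])   # created on the CPU by default
y = x.to(device)                    # same values, now on the chosen device
z = (y * 2).cpu()                   # compute there, then bring the result back

print(y.device, z)
```

The same script runs unchanged on machines with and without a GPU, which is why the device is chosen once at the top.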

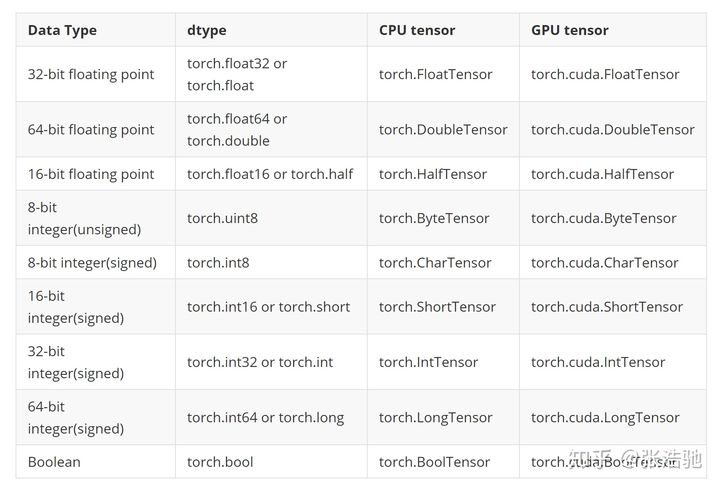

First, you need to know how to define tensors of different data types. The commonly used ways are as follows:

▷▷▷▷Creating Tensors▷▷▷

Creating a Tensor

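torch.tensor(data, # Purpose: create a tensor from data. data: the data, e.g. a list or an ndarray

dtype=None, # dtype: data type

device=None, # device: the device the tensor lives on, cuda/cpu

requires_grad=False, # requires_grad: whether to compute gradients

pin_memory=False) # pin_memory: whether to store it in pinned (page-locked) memory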
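The signature above can be exercised with a short sketch like the following. The variable names are made up for illustration; the printed dtypes assume PyTorch's default settings.

```python
import numpy as np
import torch

# From a Python list; integer data gives an integer tensor.
t_int = torch.tensor([1, 2, 3])

# Explicit dtype, with gradient tracking (only floating-point tensors can track gradients).
t_float = torch.tensor([1, 2, 3], dtype=torch.float32, requires_grad=True)

# From a NumPy array; torch.tensor copies the data and keeps the float64 dtype.
t_np = torch.tensor(np.array([[1.5, 2.5], [3.5, 4.5]]))

print(t_int.dtype, t_float.dtype, t_np.dtype)
```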

Tensor type conversion

import torch, numpy as np

# Type conversion

a = np.array([1,2,3,4,5])

before = id(a)

b = torch.from_numpy(a)

b[2] = 999

a,b,type(a),type(b)

>>>[ 1 2 999 4 5] tensor([ 1, 2, 999, 4, 5], dtype=torch.int32) <class 'numpy.ndarray'> <class 'torch.Tensor'>

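When you use torch.from_numpy(), the array passed in and the tensor returned share the same memory, so modifying b also modifies a. However, a and b are still two distinct Python objects, so id(a) and id(b) differ.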
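To make the sharing behaviour concrete, here is a small sketch that contrasts `torch.from_numpy()` with `torch.tensor()`, which copies the data. The names `shared` and `copied` are illustrative only.

```python
import numpy as np
import torch

a = np.array([1, 2, 3, 4, 5])

shared = torch.from_numpy(a)   # shares a's underlying buffer
copied = torch.tensor(a)       # makes an independent copy

shared[2] = 999                # visible through a, because the memory is shared
copied[0] = -1                 # does not touch a

print(a)                       # a[2] is now 999, a[0] is still 1
print(id(a) == id(shared))     # False: two different Python objects
```

If the NumPy array must stay untouched, copying with `torch.tensor(a)` is the safer choice.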

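Creating tensors filled with 0s or 1s

# Create tensors filled with 0s or 1s

torch.zeros(size, # size: shape of the tensor, e.g. (3, 3) or (3, 224, 224)

out=None, # out: the output tensor; the result is written into out, so both share the same memory

dtype=None, # dtype: data type

layout=torch.strided, # layout: memory layout, e.g. strided, or sparse_coo (used for sparse tensors)

device=None, # device: the device the tensor lives on, cuda/cpu

requires_grad=False) # requires_grad: whether gradients need to be computed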

#torch.zeros_like()

torch.zeros_like(input, # input: create an all-zeros tensor with the same shape as input

dtype=None,

layout=None,

device=None,

requires_grad=False)

#torch.ones()

torch.ones(size,

out=None,

dtype=None,

layout=torch.strided,

device=None,

requires_grad=False)

# torch.ones_like()

torch.ones_like(input,

dtype=None,

layout=None,

device=None,

requires_grad=False)
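A short sketch of the four functions above. The shapes and dtypes are arbitrary choices for illustration, and the float32 result assumes the default dtype has not been changed.

```python
import torch

z = torch.zeros((2, 3))                     # 2x3 zeros, default dtype float32
o = torch.ones((2, 3), dtype=torch.int64)   # 2x3 integer ones

# The *_like variants take shape, dtype and device from an existing tensor.
z_like = torch.zeros_like(o)                # int64 zeros, shape (2, 3)
o_like = torch.ones_like(z)                 # float32 ones, shape (2, 3)

print(z_like.dtype, o_like.dtype)
```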

Creating tensors filled with other values

# torch.full()

torch.full(size,

fill_value, # fill_value: the value used to fill the tensor

out=None,

dtype=None,

layout=torch.strided,

device=None,

requires_grad=False)

# torch.full_like()

torch.full_like(input,

fill_value,

dtype=None,

layout=None,

device=None,

requires_grad=False)

e = torch.full((3,4), fill_value=3)

e

>>>tensor([[3, 3, 3, 3],

[3, 3, 3, 3],

[3, 3, 3, 3]])
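The sketch below extends the example above to show how the fill value affects the dtype, and how `torch.full_like()` reuses an existing tensor's shape and dtype. It assumes a reasonably recent PyTorch release, where the dtype is inferred from `fill_value`; the variable names are illustrative.

```python
import torch

e_int = torch.full((3, 4), fill_value=3)      # integer fill value gives torch.int64
e_float = torch.full((3, 4), fill_value=3.0)  # float fill value gives the default float dtype

# full_like reuses the shape and dtype of an existing tensor.
sevens = torch.full_like(e_int, 7)

print(e_int.dtype, e_float.dtype, sevens.dtype)
```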

Creating an identity matrix

# torch.eye()

'''torch.eye(n,

m=None,

out=None,

dtype=None,

layout=torch.strided,

device=None,

requires_grad=False)'''

f = torch.eye(n=3,m=4) # Purpose: create an n-by-m identity matrix (ones on the main diagonal, zeros elsewhere). Note: when m is omitted, m=n

f

>>>tensor([[1., 0., 0., 0.],

[0., 1., 0., 0.],

[0., 0., 1., 0.]])

Using torch.empty()

# torch.empty()

g = torch.empty(2,3)

'''torch.empty(sizes,

out=None,

dtype=None,

layout=torch.strided,

device=None,

requires_grad=False)'''

# torch.empty_like(): creates an uninitialized Tensor of size input.size(); its values are arbitrary and are not guaranteed to be 0

'''torch.empty_like(input,

dtype=None,

layout=None,

device=None,

requires_grad=False)'''

g

>>>tensor([[ 1.6167e-30, 3.0816e-41, -4.8385e+02],

[ 4.5758e-41, 8.9683e-44, 0.0000e+00]])
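As the output above shows, `torch.empty()` returns whatever happens to be in memory. A minimal sketch of the usual pattern, allocating first and then writing known values before reading (the values are arbitrary):

```python
import torch

g = torch.empty(2, 3)        # allocated but not initialized; contents are arbitrary
g.fill_(0.5)                 # write known values before reading

h = torch.empty_like(g)      # same shape and dtype as g, again uninitialized
h.copy_(g * 2)               # overwrite every element in place

print(g.shape, h)
```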

Creating sequences

# torch.arange(): create a 1-D tensor from start (inclusive) to end (exclusive) with the given step

'''torch.arange(start=0,

end,

step=1,

out=None,

dtype=None,

layout=torch.strided,

device=None,

requires_grad=False)'''

# torch.range(): 1-D arithmetic sequence similar to torch.arange, but end is included; it is deprecated in favor of torch.arange

'''torch.range(start=0,

end,

step=1,

out=None,

dtype=None,

layout=torch.strided,

device=None,

requires_grad=False)'''

h = torch.arange(start=0,end=12,step=2)

h

>>>tensor([ 0, 2, 4, 6, 8, 10])
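A short sketch of `torch.arange()` showing that `end` is excluded and that the result dtype follows the arguments. The second call is an added illustration, not from the original post.

```python
import torch

h = torch.arange(start=0, end=12, step=2)   # 0, 2, ..., 10; end=12 is excluded
print(h)

# Integer arguments give an integer tensor; a float step gives a float tensor.
f = torch.arange(0, 1, 0.25)                # 0.00, 0.25, 0.50, 0.75
print(f)
```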

torch.linspace()

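# torch.linspace(): 1-D tensor of evenly spaced values from start to end (both included), with spacing (end-start)/(steps-1)

'''torch.linspace(start,

end,

steps=100, # Note: steps is not the same as the earlier step; it is the total number of points to generate (100 here). Newer PyTorch releases require passing it explicitly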

out=None,

dtype=None,

layout=torch.strided,

device=None,

requires_grad=False)'''

i = torch.linspace(start=0,end=11,steps=100)

i

>>>tensor([ 0.0000, 0.1111, 0.2222, 0.3333, 0.4444, 0.5556, 0.6667, 0.7778,

0.8889, 1.0000, 1.1111, 1.2222, 1.3333, 1.4444, 1.5556, 1.6667,

1.7778, 1.8889, 2.0000, 2.1111, 2.2222, 2.3333, 2.4444, 2.5556,

2.6667, 2.7778, 2.8889, 3.0000, 3.1111, 3.2222, 3.3333, 3.4444,

3.5556, 3.6667, 3.7778, 3.8889, 4.0000, 4.1111, 4.2222, 4.3333,

4.4444, 4.5556, 4.6667, 4.7778, 4.8889, 5.0000, 5.1111, 5.2222,

5.3333, 5.4444, 5.5556, 5.6667, 5.7778, 5.8889, 6.0000, 6.1111,

6.2222, 6.3333, 6.4444, 6.5556, 6.6667, 6.7778, 6.8889, 7.0000,

7.1111, 7.2222, 7.3333, 7.4444, 7.5556, 7.6667, 7.7778, 7.8889,

8.0000, 8.1111, 8.2222, 8.3333, 8.4444, 8.5556, 8.6667, 8.7778,

8.8889, 9.0000, 9.1111, 9.2222, 9.3333, 9.4444, 9.5556, 9.6667,

9.7778, 9.8889, 10.0000, 10.1111, 10.2222, 10.3333, 10.4444, 10.5556,

10.6667, 10.7778, 10.8889, 11.0000])
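The spacing in the output above is 11/99 ≈ 0.1111, because 100 points leave 99 gaps. A small sketch that checks this; the tolerance is an arbitrary choice to absorb float32 rounding.

```python
import torch

i = torch.linspace(start=0, end=11, steps=100)   # 100 points, both ends included

step = (11 - 0) / (100 - 1)                      # spacing between neighbouring points
diffs = i[1:] - i[:-1]                           # the 99 gaps between the 100 points

print(i.shape, step)
print(torch.allclose(diffs, torch.full((99,), step), atol=1e-4))
```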

torch.logspace()

#torch.logspace()

'''torch.logspace(start,

end,

steps=100,

out=None,

dtype=None,

layout=torch.strided,

device=None,

requires_grad=False)'''

j = torch.logspace(0,11,steps=100)

j

>>>tensor([1.0000e+00, 1.2915e+00, 1.6681e+00, 2.1544e+00, 2.7826e+00, 3.5938e+00,

4.6416e+00, 5.9948e+00, 7.7426e+00, 1.0000e+01, 1.2915e+01, 1.6681e+01,

2.1544e+01, 2.7826e+01, 3.5938e+01, 4.6416e+01, 5.9948e+01, 7.7426e+01,

1.0000e+02, 1.2915e+02, 1.6681e+02, 2.1544e+02, 2.7826e+02, 3.5938e+02,

4.6416e+02, 5.9948e+02, 7.7426e+02, 1.0000e+03, 1.2915e+03, 1.6681e+03,

2.1544e+03, 2.7826e+03, 3.5938e+03, 4.6416e+03, 5.9948e+03, 7.7426e+03,

1.0000e+04, 1.2915e+04, 1.6681e+04, 2.1544e+04, 2.7826e+04, 3.5938e+04,

4.6416e+04, 5.9948e+04, 7.7426e+04, 1.0000e+05, 1.2915e+05, 1.6681e+05,

2.1544e+05, 2.7826e+05, 3.5938e+05, 4.6416e+05, 5.9948e+05, 7.7426e+05,

1.0000e+06, 1.2915e+06, 1.6681e+06, 2.1544e+06, 2.7826e+06, 3.5938e+06,

4.6416e+06, 5.9948e+06, 7.7426e+06, 1.0000e+07, 1.2915e+07, 1.6681e+07,

2.1544e+07, 2.7826e+07, 3.5938e+07, 4.6416e+07, 5.9948e+07, 7.7426e+07,

1.0000e+08, 1.2915e+08, 1.6681e+08, 2.1544e+08, 2.7826e+08, 3.5938e+08,

4.6416e+08, 5.9948e+08, 7.7426e+08, 1.0000e+09, 1.2915e+09, 1.6681e+09,

2.1544e+09, 2.7826e+09, 3.5938e+09, 4.6416e+09, 5.9948e+09, 7.7426e+09,

1.0000e+10, 1.2915e+10, 1.6681e+10, 2.1544e+10, 2.7826e+10, 3.5938e+10,

4.6416e+10, 5.9948e+10, 7.7426e+10, 1.0000e+11])
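With the default base of 10, `torch.logspace(start, end, steps)` yields values from 10^start to 10^end whose exponents are evenly spaced. The sketch below checks this against a hand-built version; `by_hand` is an illustrative name and the tolerance is a loose, arbitrary choice.

```python
import torch

j = torch.logspace(start=0, end=11, steps=100)              # base defaults to 10
by_hand = 10 ** torch.linspace(start=0, end=11, steps=100)  # same values, built manually

print(j[0].item(), j[-1].item())
print(torch.allclose(j, by_hand, rtol=1e-3))
```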

▷▷▷▷Reshaping Tensors▷▷▷

Concatenating tensors

#torch.cat()

'''torch.cat(seq,

dim=0,

out=None)'''

k = torch.arange(9).reshape(3,3)

l = torch.arange(9).reshape(3,3)

torch.cat((k,l),dim=0),torch.cat((k,l),dim=1) # dim=0: column count unchanged, stacked top to bottom; dim=1: row count unchanged, joined left to right

>>>(tensor([[0, 1, 2],

[3, 4, 5],

[6, 7, 8],

[0, 1, 2],

[3, 4, 5],

[6, 7, 8]]),

tensor([[0, 1, 2, 0, 1, 2],

[3, 4, 5, 3, 4, 5],

[6, 7, 8, 6, 7, 8]]))
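Looking at the result shapes is a quick way to see which dimension grows. A minimal sketch using the same inputs as above (`rows` and `cols` are illustrative names):

```python
import torch

k = torch.arange(9).reshape(3, 3)
l = torch.arange(9).reshape(3, 3)

rows = torch.cat((k, l), dim=0)   # stacked top to bottom
cols = torch.cat((k, l), dim=1)   # joined left to right

print(rows.shape)   # torch.Size([6, 3])
print(cols.shape)   # torch.Size([3, 6])
```

Only the size along `dim` changes; all other dimensions must already match.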

torch.squeeze(): removes dimensions of size 1

#torch.squeeze()

'''torch.squeeze(input,

dim=None,

out=None)'''
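A short sketch of `torch.squeeze()` with and without `dim`; the input shape is an arbitrary example.

```python
import torch

x = torch.zeros(1, 3, 1, 2)

print(torch.squeeze(x).shape)          # torch.Size([3, 2])
print(torch.squeeze(x, dim=0).shape)   # torch.Size([3, 1, 2])
print(torch.squeeze(x, dim=1).shape)   # torch.Size([1, 3, 1, 2]); dim 1 has size 3, so nothing changes
```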

torch.unbind(): removes a dimension and returns a tuple of the slices along it

#torch.unbind()

'''torch.unbind(tensor,

dim=0)'''
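A minimal sketch of `torch.unbind()` on a small 2x3 tensor, chosen only for illustration:

```python
import torch

t = torch.arange(6).reshape(2, 3)   # [[0, 1, 2], [3, 4, 5]]

rows = torch.unbind(t, dim=0)       # tuple of 2 tensors, each of shape (3,)
cols = torch.unbind(t, dim=1)       # tuple of 3 tensors, each of shape (2,)

print(rows)
print(cols[0])                      # tensor([0, 3])
```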

torch.unsqueeze(): inserts a dimension of size 1 at the given position

'''torch.unsqueeze(input,

dim,

out=None) '''
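A short sketch of `torch.unsqueeze()` on a 1-D tensor; the values are arbitrary.

```python
import torch

v = torch.tensor([1, 2, 3])               # shape (3,)

print(torch.unsqueeze(v, dim=0).shape)    # torch.Size([1, 3])
print(torch.unsqueeze(v, dim=1).shape)    # torch.Size([3, 1])
print(torch.unsqueeze(v, dim=-1).shape)   # torch.Size([3, 1])
```

Applying `torch.squeeze()` to any of these results gives back the original shape `(3,)`.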

Posted on 2021-12-10 08:47 by YangShusen'