Creating a Kubernetes RC fails

1. Environment: the node has joined the master successfully, and each of the two machines has a static route to the other machine's docker0 subnet.

2. Create the RC with the following content:

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: blog-controller
spec:
  replicas: 2
  selector:
    name: blog
  template:
    metadata:
      labels:
        name: blog
    spec:
      containers:
      - name: blog
        image: 172.16.20.215:5000/blognew4:v4
        ports:
        - containerPort: 80
```

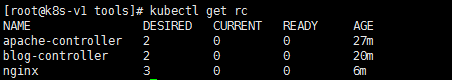

Result: `kubectl get rc` shows:

3. Running `kubectl describe rc blog` gives:

```
[root@k8s-v1 tools]# kubectl describe rc blog
Name:         blog-controller
Namespace:    default
Selector:     name=blog
Labels:       name=blog
Annotations:  <none>
Replicas:     0 current / 2 desired
Pods Status:  0 Running / 0 Waiting / 0 Succeeded / 0 Failed
Pod Template:
  Labels:  name=blog
  Containers:
   blog:
    Image:        172.16.20.215:5000/blognew4:v4
    Port:         80/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type            Status  Reason
  ----            ------  ------
  ReplicaFailure  True    FailedCreate
Events:
  Type     Reason        Age                From                    Message
  ----     ------        ----               ----                    -------
  Warning  FailedCreate  6m (x18 over 19m)  replication-controller  Error creating: No API token found for service account "default", retry after the token is automatically created and added to the service account
```

Fix: remove `ServiceAccount` from `KUBE_ADMISSION_CONTROL` in /etc/kubernetes/apiserver, then restart kube-apiserver so the change takes effect.

I am not yet sure what this ServiceAccount is for.
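Some context on the question above: the `ServiceAccount` admission plugin mounts an API token for the pod's service account (here `default`) into every pod, and that token secret is generated by kube-controller-manager only when it has a signing key (`--service-account-private-key-file`). Without a key the token never appears, so every pod creation is rejected with the error shown. As a narrower alternative to disabling the plugin cluster-wide, a pod template can opt out of the token mount. This is a sketch assuming Kubernetes 1.6 or later (where `automountServiceAccountToken` exists) and that the blog containers never need to call the API server:

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: blog-controller
spec:
  replicas: 2
  selector:
    name: blog
  template:
    metadata:
      labels:
        name: blog
    spec:
      # Assumption: these pods never talk to the API server.
      # With the mount disabled, the ServiceAccount admission plugin
      # no longer requires the "default" token secret to exist.
      automountServiceAccountToken: false
      containers:
      - name: blog
        image: 172.16.20.215:5000/blognew4:v4
        ports:
        - containerPort: 80
```

This keeps the admission plugin active for everything else. The more complete fix is to generate a key pair and pass the private key to kube-controller-manager (`--service-account-private-key-file`) and the public key to kube-apiserver (`--service-account-key-file`), so tokens are actually created.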

4. Create the RC again and check it: its status is now normal.

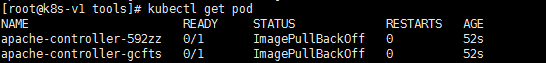

5. Check the pod status:

To see the detailed error, run `kubectl describe pod apache-controller-592zz`:

```
[root@k8s-v1 tools]# kubectl describe pod apache-controller-592zz
Name:           apache-controller-592zz
Namespace:      default
Node:           172.16.252.209/172.16.252.209
Start Time:     Mon, 05 Mar 2018 16:47:10 +0800
Labels:         name=apache
Annotations:    kubernetes.io/created-by={"kind":"SerializedReference","apiVersion":"v1","reference":{"kind":"ReplicationController","namespace":"default","name":"apache-controller","uid":"cb0c03eb-2051-11e8-872d-000...
Status:         Pending
IP:             192.168.130.2
Created By:     ReplicationController/apache-controller
Controlled By:  ReplicationController/apache-controller
Containers:
  apache:
    Container ID:
    Image:          172.16.20.215:5000/httpd
    Image ID:
    Port:           80/TCP
    State:          Waiting
      Reason:       ImagePullBackOff
    Ready:          False
    Restart Count:  0
    Environment:    <none>
    Mounts:         <none>
Conditions:
  Type           Status
  Initialized    True
  Ready          False
  PodScheduled   True
Volumes:         <none>
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     <none>
Events:
  Type     Reason      Age               From                     Message
  ----     ------      ----              ----                     -------
  Normal   Scheduled   6m                default-scheduler        Successfully assigned apache-controller-592zz to 172.16.252.209
  Normal   Pulling     4m (x4 over 6m)   kubelet, 172.16.252.209  pulling image "172.16.20.215:5000/httpd"
  Warning  Failed      4m (x4 over 6m)   kubelet, 172.16.252.209  Failed to pull image "172.16.20.215:5000/httpd": rpc error: code = Unknown desc = Error: image httpd:latest not found
  Normal   BackOff     4m (x6 over 6m)   kubelet, 172.16.252.209  Back-off pulling image "172.16.20.215:5000/httpd"
  Warning  FailedSync  1m (x26 over 6m)  kubelet, 172.16.252.209  Error syncing pod
```

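Fix: the image I pushed has a tag, and an image reference without a tag defaults to `:latest`, which does not exist in the registry (hence `image httpd:latest not found`). So the tag must be included in the image name. After deleting the old RC and creating it again with the tagged image, it worked.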
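The `apache-controller` manifest itself is not shown above. A sketch of what the corrected version might look like, reconstructed only from the `describe` output (RC name, label `name=apache`, container `apache`, port 80); the replica count and `TAG` are hypothetical placeholders, and `TAG` must be replaced with the tag that was actually pushed:

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: apache-controller
spec:
  replicas: 2            # assumed; the original replica count is not shown
  selector:
    name: apache
  template:
    metadata:
      labels:
        name: apache
    spec:
      containers:
      - name: apache
        # Without an explicit tag the kubelet pulls ":latest", which this
        # registry does not have. TAG is a placeholder, not a real tag.
        image: 172.16.20.215:5000/httpd:TAG
        ports:
        - containerPort: 80
```

Assuming the registry at 172.16.20.215:5000 speaks the Docker Registry HTTP API V2, the tags it holds for this image can be listed with `GET /v2/httpd/tags/list`.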

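6. The current YAML file configures no pod affinity, so when I shut down one node, all the pods moved to the other node.

After the machine I shut down came back, the pods did not move back automatically.

How can I make those pods return automatically?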
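Kubernetes does not rebalance running pods when a node returns: the scheduler only places newly created pods, so replicas that landed on the surviving node stay there. One way to get the behaviour asked about, sketched under the assumption of Kubernetes 1.6 or later (where `affinity` is a pod-spec field), is a hard pod anti-affinity rule keyed on the node hostname. Each node may then run at most one `name=blog` pod, so while a node is down the replacement replica stays `Pending`, and it is scheduled onto that node once the node is back:

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: blog-controller
spec:
  replicas: 2
  selector:
    name: blog
  template:
    metadata:
      labels:
        name: blog
    spec:
      affinity:
        podAntiAffinity:
          # Hard rule: never place two name=blog pods on the same node.
          # If no node qualifies, the pod stays Pending until one does.
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:
                name: blog
            topologyKey: kubernetes.io/hostname
      containers:
      - name: blog
        image: 172.16.20.215:5000/blognew4:v4
        ports:
        - containerPort: 80
```

The trade-off: with 2 replicas on 2 nodes, only one replica runs while a node is down. Using `preferredDuringSchedulingIgnoredDuringExecution` instead keeps both replicas running, but then they will not move back on their own. Changing an RC's template does not affect pods that already exist, so the RC has to be recreated (or its pods deleted) for the rule to apply.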

Press fearlessly on up the tiger's mountain; part the clouds and mist to see the light.