SMO Algorithm for SVM

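A support vector machine (SVM) is a binary classification model. It looks for a hyperplane that separates the samples, following the principle of margin maximization, and the problem ultimately reduces to a convex quadratic programming problem.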

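The models fall into the following categories:

- When the training samples are linearly separable, a linearly separable SVM is learned by hard-margin maximization;
- When the training samples are approximately linearly separable, a linear SVM is learned by soft-margin maximization;
- When the training samples are not linearly separable, a nonlinear SVM is learned using the kernel trick and soft-margin maximization;
- Sequential minimal optimization algorithm ($SMO$);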

Before reading this post, you may want to read the first $3$ posts listed above.

The $SMO$ algorithm solves the following convex quadratic programming problem (where $C$ is the penalty parameter):

$$\min_{\alpha } \; \frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n}\alpha_{i}\alpha_{j}y_{i}y_{j}K(x_{i},x_{j}) -\sum_{i=1}^{n}\alpha_{i} \\
s.t. \;\;\; \sum_{i=1}^{n}\alpha_{i}y_{i} = 0 \\
0 \leq \alpha_{i} \leq C, \; i = 1,2,\cdots,n$$
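To make the problem concrete, here is a minimal Python sketch that evaluates the dual objective above and checks its two constraints for a candidate $\alpha$. It assumes a linear kernel $K(x_{i},x_{j}) = x_{i}^{T}x_{j}$ and a floating-point tolerance `tol`; the names `linear_kernel`, `dual_objective`, and `is_feasible` are illustrative, not taken from any library.

```python
from typing import Callable, Sequence

Vector = Sequence[float]


def linear_kernel(a: Vector, b: Vector) -> float:
    """Assumed kernel: K(x_i, x_j) = x_i^T x_j."""
    return sum(p * q for p, q in zip(a, b))


def dual_objective(alpha: Sequence[float], X: Sequence[Vector], y: Sequence[int],
                   kernel: Callable[[Vector, Vector], float] = linear_kernel) -> float:
    """1/2 * sum_i sum_j a_i a_j y_i y_j K(x_i, x_j) - sum_i a_i."""
    n = len(alpha)
    quad = sum(alpha[i] * alpha[j] * y[i] * y[j] * kernel(X[i], X[j])
               for i in range(n) for j in range(n))
    return 0.5 * quad - sum(alpha)


def is_feasible(alpha: Sequence[float], y: Sequence[int], C: float, tol: float = 1e-9) -> bool:
    """Check sum_i a_i y_i = 0 and 0 <= a_i <= C, both up to tol."""
    equality_ok = abs(sum(a * yi for a, yi in zip(alpha, y))) <= tol
    box_ok = all(-tol <= a <= C + tol for a in alpha)
    return equality_ok and box_ok


# Toy data: one point per class.
X = [[1.0, 1.0], [-1.0, -1.0]]
y = [1, -1]
alpha = [0.25, 0.25]
print(dual_objective(alpha, X, y))   # -0.25
print(is_feasible(alpha, y, C=1.0))  # True
```

The double loop costs $O(n^{2})$ kernel evaluations, which is exactly why SMO avoids re-evaluating the full objective and works on two variables at a time.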

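Suppose we have found the optimal solution $\alpha = (\alpha_{1},\alpha_{2},...,\alpha_{n})$ of this problem. Note that $\alpha$ has one component per sample, so its dimension equals the number of samples. The optimal solution of the original SVM problem can then be obtained from the following KKT conditions.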

$$\left\{\begin{matrix}
L_{w} = w - \sum_{i=1}^{n}\alpha_{i}y_{i}x_{i} = 0 \\
L_{b} = -\sum_{i=1}^{n}\alpha_{i}y_{i} = 0 \\
L_{\xi_{i}} = C - \alpha_{i} - \mu_{i} = 0, \; i = 1,2,\cdots,n \\
y_{i}\left ( w^{T}x_{i} + b\right ) - 1 + \xi_{i} \geq 0, \; i = 1,2,\cdots,n \\
\xi_{i} \geq 0, \; i = 1,2,\cdots,n \\
\alpha_{i} \left [ y_{i}\left ( w^{T}x_{i} + b\right ) - 1 + \xi_{i} \right ] = 0, \; i = 1,2,\cdots,n \\
\mu_{i}\xi_{i} = 0, \; i = 1,2,\cdots,n \\
\alpha_{i} \geq 0,\; i = 1,2,\cdots,n \\
\mu_{i} \geq 0,\; i = 1,2,\cdots,n
\end{matrix}\right. \; \Rightarrow \;
\left\{\begin{matrix}
w = \sum_{i=1}^{n}\alpha_{i}y_{i}x_{i} \\
\sum_{i=1}^{n}\alpha_{i}y_{i} = 0 \\
C - \alpha_{i} - \mu_{i} = 0, \; i = 1,2,\cdots,n \\
b = y_{j}(1 - \xi_{j}) - w^{T}x_{j} = y_{j} - \sum_{i=1}^{n}\alpha_{i}y_{i}x_{i}^{T}x_{j}, \;(0 < \alpha_{j} < C)
\end{matrix}\right.$$
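The right-hand system gives $w$ and $b$ directly once $\alpha$ is known. A minimal sketch, assuming the linear kernel (so that $w$ is explicit in the input space) and a hypothetical tolerance `eps` for deciding whether $0 < \alpha_{j} < C$:

```python
from typing import List, Sequence, Tuple


def recover_w_b(alpha: Sequence[float], X: Sequence[Sequence[float]], y: Sequence[int],
                C: float, eps: float = 1e-8) -> Tuple[List[float], float]:
    """w = sum_i a_i y_i x_i;  b = y_j - w^T x_j for some j with 0 < a_j < C."""
    d = len(X[0])
    w = [0.0] * d
    for a, yi, xi in zip(alpha, y, X):
        for k in range(d):
            w[k] += a * yi * xi[k]
    for j, a in enumerate(alpha):
        if eps < a < C - eps:  # free support vector: xi_j = 0, so y_j (w^T x_j + b) = 1
            b = y[j] - sum(wk * xk for wk, xk in zip(w, X[j]))
            return w, b
    raise ValueError("no alpha_j strictly between 0 and C")


w, b = recover_w_b([0.25, 0.25], [[1.0, 1.0], [-1.0, -1.0]], [1, -1], C=1.0)
print(w, b)  # [0.5, 0.5] 0.0
```

This sketch takes the first free support vector it finds; averaging $b$ over all free support vectors is a common, numerically steadier alternative.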

This gives the model corresponding to the separating hyperplane:

$$g(x_{i}) = w \cdot x_{i} + b = \sum_{j=1}^{n}\alpha_{j}y_{j}x_{j} \cdot x_{i} + b$$

Now consider the KKT conditions case by case:

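1) $\alpha_{i} = 0$: then $\mu_{i} = C - \alpha_{i} = C > 0$, and since $\mu_{i}\xi_{i} = 0$, we get $\xi_{i} = 0$ (the sample is not penalized, so it is correctly classified). Combined with $y_{i}\left (w^{T}x_{i} + b \right ) \geq 1 - \xi_{i}$, this gives $y_{i}g(x_{i}) \geq 1$.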

In this case the sample $x_{i}$ lies either on the margin boundary or beyond it, on the correct side.

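2) $0 < \alpha_{i} < C$: then $\mu_{i} = C - \alpha_{i} > 0$, and since $\mu_{i}\xi_{i} = 0$, we get $\xi_{i} = 0$. Because $\alpha_{i} > 0$, complementary slackness $\alpha_{i} \left [ y_{i}\left ( w^{T}x_{i} + b\right ) - 1 + \xi_{i} \right ] = 0$ then gives $y_{i}g(x_{i}) = 1$, so $x_{i}$ is a support vector lying on the margin boundary.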

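3) $\alpha_{i} = C$: then $\mu_{i} = 0$, so $\xi_{i}$ may be positive. Because $\alpha_{i} = C > 0$, complementary slackness gives $y_{i}\left (w^{T}x_{i} + b \right ) = 1 - \xi_{i}$, and since $\xi_{i} \geq 0$, $y_{i}\left (w^{T}x_{i} + b \right ) \leq 1$.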

In summary,

$$y_{i}g(x_{i}) = \left\{\begin{matrix}
\geq 1, & \alpha_{i} = 0 \\
= 1, & 0 < \alpha_{i} < C \\
\leq 1, & \alpha_{i} = C
\end{matrix}\right.$$

Let us call this the target condition. In other words, the optimal solution $\alpha = (\alpha_{1},\alpha_{2},...,\alpha_{n})$ of the convex QP also satisfies this target condition.
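The target condition can be checked one sample at a time. The sketch below takes $\alpha_{i}$, $y_{i}$, and the current value $g(x_{i})$ and reports whether sample $i$ violates the condition. The tolerances are assumptions: `eps` decides whether $\alpha_{i}$ sits at $0$ or $C$, and `tol` is the slack allowed on $y_{i}g(x_{i})$, since exact equality is never reached in floating point.

```python
def violates_target(alpha_i: float, y_i: int, g_i: float, C: float,
                    tol: float = 1e-3, eps: float = 1e-8) -> bool:
    """True if sample i breaks the target condition on y_i * g(x_i)."""
    r = y_i * g_i
    if alpha_i <= eps:        # alpha_i = 0      -> need y_i g(x_i) >= 1
        return r < 1 - tol
    if alpha_i >= C - eps:    # alpha_i = C      -> need y_i g(x_i) <= 1
        return r > 1 + tol
    return abs(r - 1) > tol   # 0 < alpha_i < C  -> need y_i g(x_i) = 1


# With alpha = 0 and b = 0, g(x_i) = 0 for every sample, so every sample violates the condition.
print(violates_target(0.0, 1, 0.0, C=1.0))   # True
print(violates_target(0.25, 1, 1.0, C=1.0))  # False
```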

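We now want to exploit this property, namely that the optimal solution satisfies the target condition, to find the optimal solution.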

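First choose an initial value of $\alpha$ that satisfies both constraints of the dual problem; the zero vector clearly does. In general this initial vector is not optimal, so it does not satisfy the target condition. How can we use the target condition to adjust it?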

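Plug each component $\alpha_{i}$ into the target condition: some components will satisfy it and others will not. We cannot optimize a single component on its own while keeping the dual constraints, because once all other components are fixed, the equality constraint $\sum_{i=1}^{n}\alpha_{i}y_{i} = 0$ determines $\alpha_{i}$ uniquely. We therefore select two components to optimize: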

1) Choosing the first component

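Choose the component that violates the target condition most severely. The components of $\alpha$ fall into three groups: those equal to $0$, those equal to $C$, and those strictly between $0$ and $C$. Search the components strictly between $0$ and $C$ first; only if none of them can be optimized, pick from the other two groups.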
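A sketch of this first-choice rule, under the assumption that the severity of a violation is measured by how far $y_{i}g(x_{i})$ lies from the side required by the target condition. The helper names `violation` and `select_first` and both tolerances are hypothetical.

```python
from typing import Optional, Sequence


def violation(alpha_i: float, y_i: int, g_i: float, C: float, eps: float = 1e-8) -> float:
    """Distance of y_i g(x_i) from what the target condition requires (0 means satisfied)."""
    r = y_i * g_i
    if alpha_i <= eps:
        return max(0.0, 1 - r)
    if alpha_i >= C - eps:
        return max(0.0, r - 1)
    return abs(r - 1)


def select_first(alpha: Sequence[float], y: Sequence[int], g: Sequence[float], C: float,
                 tol: float = 1e-3, eps: float = 1e-8) -> Optional[int]:
    """Search free components (0 < alpha_i < C) first, then those at 0 or C."""
    def worst(indices):
        scored = [(violation(alpha[i], y[i], g[i], C, eps), i) for i in indices]
        scored = [(v, i) for v, i in scored if v > tol]
        return max(scored)[1] if scored else None

    free = [i for i, a in enumerate(alpha) if eps < a < C - eps]
    at_bound = [i for i, a in enumerate(alpha) if not (eps < a < C - eps)]
    i = worst(free)
    return i if i is not None else worst(at_bound)


alpha = [0.0, 0.5, 0.5, 1.0]
y = [1, 1, -1, 1]
g = [0.0, 0.8, -0.5, 2.0]
print(select_first(alpha, y, g, C=1.0))  # 2
```

Index 2 is returned even though indices 0 and 3 violate the condition more, because the free components are searched first; `None` means every sample satisfies the condition within `tol`.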

2) Choosing the second component

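The first component is chosen because it violates the target condition the most. For the second one, another important consideration is that a single optimization step should change the two components as much as possible, so that fewer iterations are needed to reach the target condition. We therefore want the second component to make $\alpha_{1},\alpha_{2}$ change as much as possible during the update.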

For each component, compute the quantity $E$, defined as

$$E_{i} = g(x_{i}) - y_{i}$$

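The larger $|E_{1}-E_{2}|$ is, the more $\alpha_{1}, \alpha_{2}$ change in one update (the update formula derived later shows that the change in $\alpha_{2}$ is proportional to $E_{1}-E_{2}$). The second component is therefore chosen to maximize $|E_{1}-E_{2}|$.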
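A sketch of the second-choice heuristic, assuming the values $g(x_{i})$ have already been computed from the current $\alpha$ and $b$. It simply maximizes $|E_{1}-E_{2}|$ over all other indices; Platt's original SMO adds fallback searches when this choice makes no progress, which are omitted here. The function names are illustrative.

```python
from typing import List, Sequence


def errors(g: Sequence[float], y: Sequence[int]) -> List[float]:
    """E_i = g(x_i) - y_i for every sample."""
    return [gi - yi for gi, yi in zip(g, y)]


def select_second(i: int, E: Sequence[float]) -> int:
    """Index j != i that maximizes |E_i - E_j| (first one wins on ties; -1 if none)."""
    best_j, best_gap = -1, -1.0
    for j, e_j in enumerate(E):
        if j == i:
            continue
        gap = abs(E[i] - e_j)
        if gap > best_gap:
            best_j, best_gap = j, gap
    return best_j


E = errors([0.5, -0.5, 1.0], [1, -1, 1])
print(E)                    # [-0.5, 0.5, 0.0]
print(select_second(0, E))  # 1
```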

Having selected $\alpha_{1},\alpha_{2}$, how do we make sure that after the update the corresponding samples satisfy the target condition, or at least violate it less?

Consider the objective function

$$L(\alpha) = \frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n}\alpha_{i}\alpha_{j}y_{i}y_{j}K(x_{i},x_{j}) -\sum_{i=1}^{n}\alpha_{i}$$

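Naturally, we can require the update to decrease the value of $L$. Because the problem is convex, its minimizers are exactly the points that satisfy the target condition, so decreasing the objective is a step in the right direction, toward reducing the violation of the target condition.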

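Treat $\alpha_{1}$ and $\alpha_{2}$ as variables and fix $\alpha_{3}, \alpha_{4},..., \alpha_{n}$ (treat them as constants). Writing $K_{ij} = K(x_{i},x_{j})$ and using $y_{i}^{2} = 1$, the objective can be written as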

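$$L(\alpha_{1},\alpha_{2}) = \frac{1}{2}\alpha_{1}^{2}K_{11} + \frac{1}{2}\alpha_{2}^{2}K_{22} + y_{1}y_{2}\alpha_{1}\alpha _{2}K_{12} + \alpha_{1}y_{1}\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i1} + \alpha_{2}y_{2}\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i2}- \alpha_{1} - \alpha_{2} + \text{const}$$

where $\text{const} = \frac{1}{2}\sum_{i=3}^{n}\sum_{j=3}^{n}\alpha_{i}\alpha_{j}y_{i}y_{j}K_{ij} - \sum_{i=3}^{n}\alpha_{i}$ does not depend on $\alpha_{1},\alpha_{2}$.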

From the equality constraint,

$$\alpha_{1}y_{1} + \alpha_{2}y_{2} = -\sum_{i=3}^{n}\alpha_{i}y_{i}$$

The right-hand side is a constant; call it $M$. Then

$$\alpha_{1}y_{1} + \alpha_{2}y_{2} = M \\
\Rightarrow \alpha_{1} = y_{1}\left (M - \alpha_{2}y_{2} \right )$$

Substituting this into the objective (and dropping the constant term) turns it into a single-variable optimization problem:

$$L(\alpha_{2}) = \frac{1}{2}\left ( M - \alpha_{2}y_{2} \right )^{2}K_{11} + \frac{1}{2}\alpha_{2}^{2}K_{22} + y_{2}\left (M - \alpha_{2}y_{2} \right )\alpha _{2}K_{12} + \left (M - \alpha_{2}y_{2} \right )\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i1} + \alpha_{2}y_{2}\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i2} - y_{1}\left (M - \alpha_{2}y_{2} \right ) - \alpha_{2} $$

Differentiating with respect to $\alpha_{2}$ (using $y_{2}^{2} = 1$) gives

$$\frac{\partial L}{\partial \alpha_{2}} = -y_{2}\left ( M - \alpha_{2}y_{2} \right )K_{11} + \alpha_{2}K_{22} + My_{2}K_{12} - 2\alpha_{2}K_{12} - y_{2}\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i1} + y_{2}\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i2} + y_{1}y_{2} - 1 \\
= -My_{2}K_{11} + \underline{\alpha_{2}K_{11}} + \underline{\alpha_{2}K_{22}} + My_{2}K_{12} - \underline{2\alpha_{2}K_{12}} - y_{2}\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i1} + y_{2}\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i2} + y_{1}y_{2} - 1 \\
= \left ( K_{11} + K_{22} - 2K_{12} \right )\alpha_{2} - My_{2}K_{11} + My_{2}K_{12} - y_{2}\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i1} + y_{2}\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i2} + y_{1}y_{2} - 1$$

Setting this to $0$ gives

$$\left ( K_{11} + K_{22} - 2K_{12} \right )\alpha_{2}^{new} = 1 - y_{1}y_{2} + My_{2}K_{11} - My_{2}K_{12} + y_{2}\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i1} - y_{2}\sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i2} \\
= y_{2}\left ( y_{2} - y_{1} + MK_{11} - MK_{12} + \sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i1} - \sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i2} \right )$$

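Let $\alpha_{2}^{new}$ denote the solution obtained in the current iteration and $\alpha_{2}^{old}$ the feasible solution from the previous iteration. Because $\alpha_{3},\dots,\alpha_{n}$ are held fixed, the results of both iterations satisfy the equality constraint with the same $M$: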

$$\alpha_{1}^{new}y_{1} + \alpha_{2}^{new}y_{2} = M \\
\alpha_{1}^{old}y_{1} + \alpha_{2}^{old}y_{2} = M$$

Substituting gives

$$\left ( K_{11} + K_{22} - 2K_{12} \right )\alpha_{2}^{new} = y_{2}\left ( y_{2} - y_{1} + \left (\alpha_{1}^{old}y_{1} + \alpha_{2}^{old}y_{2} \right )K_{11} - \left (\alpha_{1}^{old}y_{1} + \alpha_{2}^{old}y_{2} \right )K_{12} + \sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i1} - \sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i2} \right ) \\
= y_{2}\left ( y_{2} - y_{1} + \alpha_{1}^{old}y_{1}K_{11} + \alpha_{2}^{old}y_{2}K_{11} - \alpha_{1}^{old}y_{1}K_{12} - \alpha_{2}^{old}y_{2}K_{12} + \sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i1} - \sum_{i = 3}^{n}\alpha_{i}y_{i}K_{i2} \right )$$

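Since $\alpha$ starts as the zero vector and every later iteration starts from the previous iteration's values, all quantities on the right-hand side are known, so $\alpha_{2}^{new}$ can be solved from this equation.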

This update expression is rather cumbersome, so let us simplify it using some known equalities. The model function is

$$g(x_{i}) = w \cdot x_{i} + b = \sum_{j=1}^{n}\alpha_{j}y_{j}x_{j} \cdot x_{i} + b$$

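Evaluated with the current values of $\alpha$ and $b$, which are not yet optimal, this function is not yet the final model, but it can still be computed. Substituting $x_{1}$ and $x_{2}$ gives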

$$g(x_{1}) = \sum_{i=1}^{n}\alpha_{i}y_{i}x_{i}^{T}x_{1} + b \\
= \alpha_{1}y_{1}x_{1}^{T}x_{1} + \alpha_{2}y_{2}x_{2}^{T}x_{1} + \sum_{i=3}^{n}\alpha_{i}y_{i}x_{i}^{T}x_{1} + b \\
= \alpha_{1}y_{1}K_{11} + \alpha_{2}y_{2}K_{12} + \sum_{i=3}^{n}\alpha_{i}y_{i}K_{i1} + b \\
\Rightarrow \;\; \sum_{i=3}^{n}\alpha_{i}y_{i}K_{i1} = g(x_{1}) - \alpha_{1}y_{1}K_{11} - \alpha_{2}y_{2}K_{12} - b$$

$$g(x_{2}) = \sum_{i=1}^{n}\alpha_{i}y_{i}x_{i}^{T}x_{2} + b \\
= \alpha_{1}y_{1}x_{1}^{T}x_{2} + \alpha_{2}y_{2}x_{2}^{T}x_{2} + \sum_{i=3}^{n}\alpha_{i}y_{i}x_{i}^{T}x_{2} + b \\
= \alpha_{1}y_{1}K_{12} + \alpha_{2}y_{2}K_{22} + \sum_{i=3}^{n}\alpha_{i}y_{i}K_{i2} + b \\
\Rightarrow \;\; \sum_{i=3}^{n}\alpha_{i}y_{i}K_{i2} = g(x_{2}) - \alpha_{1}y_{1}K_{12} - \alpha_{2}y_{2}K_{22} - b$$

Substituting these two expressions into the update equation obtained earlier (terms that cancel are marked) gives

$$\left ( K_{11} + K_{22} - 2K_{12} \right )\alpha_{2}^{new} = y_{2}\left ( y_{2} - y_{1} + \underline{\alpha_{1}^{old}y_{1}K_{11}} + \alpha_{2}^{old}y_{2}K_{11} - \underbrace{\alpha_{1}^{old}y_{1}K_{12}} - \alpha_{2}^{old}y_{2}K_{12}
+ g(x_{1}) - \underline{\alpha_{1}^{old}y_{1}K_{11}} - \alpha_{2}^{old}y_{2}K_{12} - b
- g(x_{2}) + \underbrace{\alpha_{1}^{old}y_{1}K_{12}} + \alpha_{2}^{old}y_{2}K_{22} + b \right ) \\
= y_{2} \bigg( g(x_{1}) - y_{1} - \left [g(x_{2}) - y_{2} \right ] + \alpha_{2}^{old}y_{2}(K_{11} - 2K_{12} + K_{22})\bigg) \\
= y_{2} \left [ E_{1} - E_{2} + \alpha_{2}^{old}y_{2}(K_{11} - 2K_{12} + K_{22}) \right ]$$

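So the update finally takes the following form. The division requires $K_{11}+ K_{22} - 2K_{12} > 0$; for a linear kernel this quantity equals $\left \| x_{1} - x_{2} \right \|^{2}$, which is positive whenever $x_{1} \neq x_{2}$.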

$$\alpha_{2}^{new} = \alpha_{2}^{old} +\frac{y_{2}\left ( E_{1} - E_{2} \right )}{K_{11}+ K_{22} - 2K_{12}}$$
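As a numerical check of the simplification, the sketch below computes the unclipped $\alpha_{2}^{new}$ twice: once from the long expression before $g(x_{1}), g(x_{2})$ were substituted, and once from $\alpha_{2}^{old} + y_{2}(E_{1}-E_{2})/(K_{11}+K_{22}-2K_{12})$. It assumes a linear kernel and made-up toy data; the two results should agree, and the value should not depend on $b$, since $b$ cancels in $E_{1}-E_{2}$.

```python
from typing import Sequence

Vector = Sequence[float]


def linear_kernel(a: Vector, b: Vector) -> float:
    """Assumed kernel: K(x_i, x_j) = x_i^T x_j."""
    return sum(p * q for p, q in zip(a, b))


def g_value(alpha: Vector, X: Sequence[Vector], y: Sequence[int], b: float, x: Vector) -> float:
    """g(x) = sum_j alpha_j y_j K(x_j, x) + b."""
    return sum(a * yj * linear_kernel(xj, x) for a, yj, xj in zip(alpha, y, X)) + b


def alpha2_expanded(alpha: Vector, X: Sequence[Vector], y: Sequence[int], i1: int, i2: int) -> float:
    """Long form: right-hand side in terms of alpha_old and the sums over the other i, divided by eta."""
    def K(i: int, j: int) -> float:
        return linear_kernel(X[i], X[j])

    y1, y2, a1, a2 = y[i1], y[i2], alpha[i1], alpha[i2]
    rest = [k for k in range(len(alpha)) if k not in (i1, i2)]
    s1 = sum(alpha[k] * y[k] * K(k, i1) for k in rest)
    s2 = sum(alpha[k] * y[k] * K(k, i2) for k in rest)
    K11, K22, K12 = K(i1, i1), K(i2, i2), K(i1, i2)
    rhs = y2 * (y2 - y1 + a1 * y1 * K11 + a2 * y2 * K11
                - a1 * y1 * K12 - a2 * y2 * K12 + s1 - s2)
    return rhs / (K11 + K22 - 2 * K12)


def alpha2_simplified(alpha: Vector, X: Sequence[Vector], y: Sequence[int], b: float,
                      i1: int, i2: int) -> float:
    """alpha2_old + y2 (E1 - E2) / eta, with E_i = g(x_i) - y_i."""
    K11 = linear_kernel(X[i1], X[i1])
    K22 = linear_kernel(X[i2], X[i2])
    K12 = linear_kernel(X[i1], X[i2])
    eta = K11 + K22 - 2 * K12
    if eta <= 0:
        raise ValueError("eta must be positive for this update")
    E1 = g_value(alpha, X, y, b, X[i1]) - y[i1]
    E2 = g_value(alpha, X, y, b, X[i2]) - y[i2]
    return alpha[i2] + y[i2] * (E1 - E2) / eta


X = [[1.0, 2.0], [2.0, 0.5], [-1.0, -1.0], [0.0, -2.0]]
y = [1, 1, -1, -1]
alpha = [0.1, 0.3, 0.2, 0.2]  # satisfies sum(alpha_i * y_i) = 0
long_form = alpha2_expanded(alpha, X, y, 0, 1)
short_form = alpha2_simplified(alpha, X, y, 0.3, 0, 1)
print(abs(long_form - short_form) < 1e-12)  # True
```

The result here is the unclipped value; it still has to be clipped to the feasible box before use.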

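After each iteration produces $\alpha_{2}^{new}$, it must also be clipped so that the inequality constraints $0 \leq \alpha_{i} \leq C$ hold. Starting from the constraint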

$$\alpha_{1}^{new}y_{1} + \alpha_{2}^{new}y_{2} = M$$

1) $y_{1} = y_{2}$: the variables must satisfy all of the following constraints

$$\alpha_{1}^{new} + \alpha_{2}^{new} = \varepsilon, \; (\varepsilon = \pm M)\\
0 \leq \alpha_{1}^{new} \leq C \\
0 \leq \alpha_{2}^{new} \leq C$$

that is,

$$0 \leq \varepsilon - \alpha_{2}^{new} \leq C \\
0 \leq \alpha_{2}^{new} \leq C$$

Hence

$$\varepsilon - C \leq \alpha_{2}^{new} \leq \varepsilon \\
0 \leq \alpha_{2}^{new} \leq C$$

Finally,

$$\max\left \{ 0, \varepsilon - C \right \} \leq \alpha_{2}^{new} \leq \min\left \{ \varepsilon,C \right \}$$

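In other words, if the computed $\alpha_{2}^{new}$ falls below the lower bound, it is set to the lower bound; if it exceeds the upper bound, it is set to the upper bound.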

2) $y_{1} \neq y_{2}$: the variables must satisfy all of the following constraints

$$\alpha_{1}^{new} - \alpha_{2}^{new} = \varepsilon, \; (\varepsilon = \pm M)\\
0 \leq \alpha_{1}^{new} \leq C \\
0 \leq \alpha_{2}^{new} \leq C$$

that is,

$$0 \leq \varepsilon + \alpha_{2}^{new} \leq C \\
0 \leq \alpha_{2}^{new} \leq C$$

Hence

$$-\varepsilon \leq \alpha_{2}^{new} \leq C - \varepsilon \\
0 \leq \alpha_{2}^{new} \leq C$$

Finally,

$$\max \left \{0, -\varepsilon \right \} \leq \alpha_{2}^{new} \leq \min \left \{ C, C - \varepsilon \right \}$$

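Combining 1) and 2): if $\alpha_{2}^{new}$ already lies within these bounds, it is kept as is; otherwise it is clipped to the nearest bound. In either case $\alpha_{1}^{new}$ then follows from the equality constraint $\alpha_{1}^{new}y_{1} + \alpha_{2}^{new}y_{2} = \alpha_{1}^{old}y_{1} + \alpha_{2}^{old}y_{2}$, i.e. $\alpha_{1}^{new} = \alpha_{1}^{old} + y_{1}y_{2}\left ( \alpha_{2}^{old} - \alpha_{2}^{new} \right )$, which completes one iteration.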

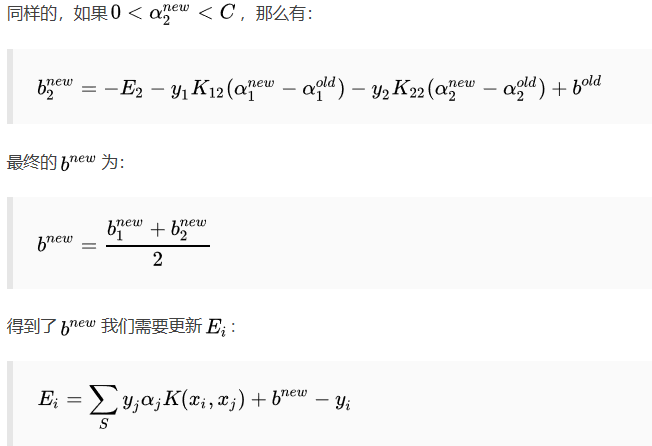
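The clipping rules of cases 1) and 2), followed by the update of $\alpha_{1}$, can be sketched as below. Here $\varepsilon$ is computed from the old values ($\varepsilon = \alpha_{1}^{old} + \alpha_{2}^{old}$ when $y_{1} = y_{2}$, and $\varepsilon = \alpha_{1}^{old} - \alpha_{2}^{old}$ otherwise), which is valid because the old pair already satisfies the equality constraint. The function names are illustrative.

```python
from typing import Tuple


def clip_bounds(a1_old: float, a2_old: float, y1: int, y2: int, C: float) -> Tuple[float, float]:
    """Return (L, H) such that L <= alpha2_new <= H keeps both alphas in [0, C]."""
    if y1 == y2:
        epsilon = a1_old + a2_old        # alpha1 + alpha2 = epsilon
        return max(0.0, epsilon - C), min(C, epsilon)
    epsilon = a1_old - a2_old            # alpha1 - alpha2 = epsilon
    return max(0.0, -epsilon), min(C, C - epsilon)


def clip_and_update(a1_old: float, a2_old: float, a2_unclipped: float,
                    y1: int, y2: int, C: float) -> Tuple[float, float]:
    """Clip alpha2 to [L, H], then restore alpha1 y1 + alpha2 y2 = M."""
    low, high = clip_bounds(a1_old, a2_old, y1, y2, C)
    a2_new = min(max(a2_unclipped, low), high)
    a1_new = a1_old + y1 * y2 * (a2_old - a2_new)
    return a1_new, a2_new


print(clip_and_update(0.25, 0.5, 1.2, 1, 1, C=1.0))   # (0.0, 0.75)
print(clip_and_update(0.25, 0.5, 0.0, 1, -1, C=1.0))  # (0.0, 0.25)
```

In both calls the unclipped value lies outside $[L, H]$, so it is clipped, and $\alpha_{1}$ moves by the opposite (or equal) amount so that $\alpha_{1}y_{1} + \alpha_{2}y_{2}$ stays unchanged.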

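After this series of computations we have the two optimized variables $\alpha_{1},\alpha_{2}$. Every time a pair of variables is updated, the threshold $b$ and the errors $E_{i}$ must be updated as well.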

When $0 < \alpha_{1}^{new} < C$, the sample $x_{1}$ satisfies $y_{1}g(x_{1}) = 1$, i.e. $g(x_{1}) = y_{1}$, so

$$b = y_{1} - \sum_{i=1}^{n}\alpha_{i}y_{i}K_{i1} \\
\Rightarrow b_{1}^{new} = y_{1} - \alpha_{1}^{new}y_{1}K_{11} - \alpha_{2}^{new}y_{2}K_{21} - \sum_{i=3}^{n}\alpha_{i}y_{i}K_{i1}$$

The value of $E_{1}$ from the previous iteration (already computed and stored in memory) is

$$E_{1} = g(x_{1}) - y_{1} = \alpha_{1}^{old}y_{1}K_{11} + \alpha_{2}^{old}y_{2}K_{12} + \sum_{i=3}^{n}\alpha_{i}y_{i}K_{i1} + b^{old} - y_{1}$$

To save computation, use this expression to eliminate $\sum_{i=3}^{n}\alpha_{i}y_{i}K_{i1}$ from $b_{1}^{new}$:

$$b_{1}^{new} = -E_{1} + \underline{\alpha_{1}^{old}y_{1}K_{11}} + \underbrace{\alpha_{2}^{old}y_{2}K_{12}} - \underline{\alpha_{1}^{new}y_{1}K_{11}} - \underbrace{\alpha_{2}^{new}y_{2}K_{21}} + b^{old} \\
= -E_{1} - y_{1}K_{11}\left ( \alpha_{1}^{new} - \alpha_{1}^{old} \right ) - y_{2}K_{21}\left ( \alpha_{2}^{new} - \alpha_{2}^{old} \right ) + b^{old}$$
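The incremental formula for $b_{1}^{new}$ can be checked against the direct definition $b = y_{1} - \sum_{i=1}^{n}\alpha_{i}y_{i}K_{i1}$ evaluated at the new $\alpha$. The sketch below assumes a linear kernel (so $K_{21} = K_{12}$) and made-up data, and covers only $b_{1}^{new}$ as derived above; the function names are illustrative.

```python
from typing import Sequence

Vector = Sequence[float]


def linear_kernel(a: Vector, b: Vector) -> float:
    """Assumed kernel: K(x_i, x_j) = x_i^T x_j."""
    return sum(p * q for p, q in zip(a, b))


def g_value(alpha: Vector, X: Sequence[Vector], y: Sequence[int], b: float, x: Vector) -> float:
    """g(x) = sum_j alpha_j y_j K(x_j, x) + b."""
    return sum(a * yj * linear_kernel(xj, x) for a, yj, xj in zip(alpha, y, X)) + b


def b1_incremental(E1: float, y1: int, y2: int, K11: float, K21: float,
                   a1_old: float, a1_new: float, a2_old: float, a2_new: float,
                   b_old: float) -> float:
    """b1_new = -E1 - y1 K11 (a1_new - a1_old) - y2 K21 (a2_new - a2_old) + b_old."""
    return -E1 - y1 * K11 * (a1_new - a1_old) - y2 * K21 * (a2_new - a2_old) + b_old


def b1_direct(alpha_new: Vector, X: Sequence[Vector], y: Sequence[int], i1: int) -> float:
    """b = y1 - sum_i alpha_i y_i K_i1, valid when 0 < alpha1_new < C."""
    return y[i1] - sum(a * yi * linear_kernel(xi, X[i1]) for a, yi, xi in zip(alpha_new, y, X))


X = [[1.0, 2.0], [2.0, 0.5], [-1.0, -1.0]]
y = [1, 1, -1]
a_old, b_old = [0.1, 0.3, 0.4], 0.2
a_new = [0.15, 0.25, 0.4]  # only alpha_1, alpha_2 changed; alpha_1 + alpha_2 preserved since y1 = y2
E1 = g_value(a_old, X, y, b_old, X[0]) - y[0]  # cached error from the previous iteration
inc = b1_incremental(E1, y[0], y[1], linear_kernel(X[0], X[0]), linear_kernel(X[1], X[0]),
                     a_old[0], a_new[0], a_old[1], a_new[1], b_old)
print(abs(inc - b1_direct(a_new, X, y, 0)) < 1e-12)  # True
```

The incremental form needs only the cached $E_{1}$ and two kernel values, instead of a sum over all $n$ samples.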