caffe lstm

Official link for this article: https://www.cnblogs.com/yanghailin/p/15603194.html. Do not repost without permission.

Understanding LSTM Networks (Chinese translation) https://www.jianshu.com/p/9dc9f41f0b29

Understanding LSTM Networks https://colah.github.io/posts/2015-08-Understanding-LSTMs/

pytorch https://pytorch.org/docs/1.0.1/nn.html?highlight=lstm#torch.nn.LSTM

https://blog.csdn.net/nfzhlk/article/details/86507635

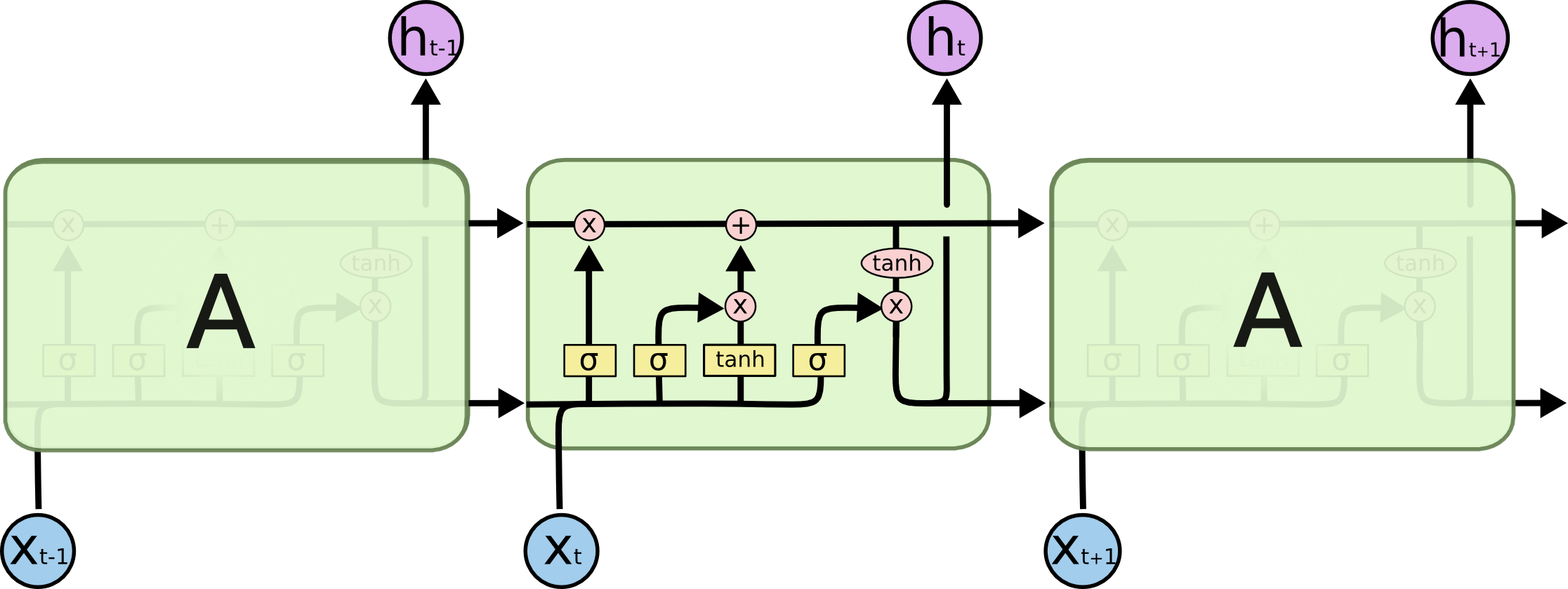

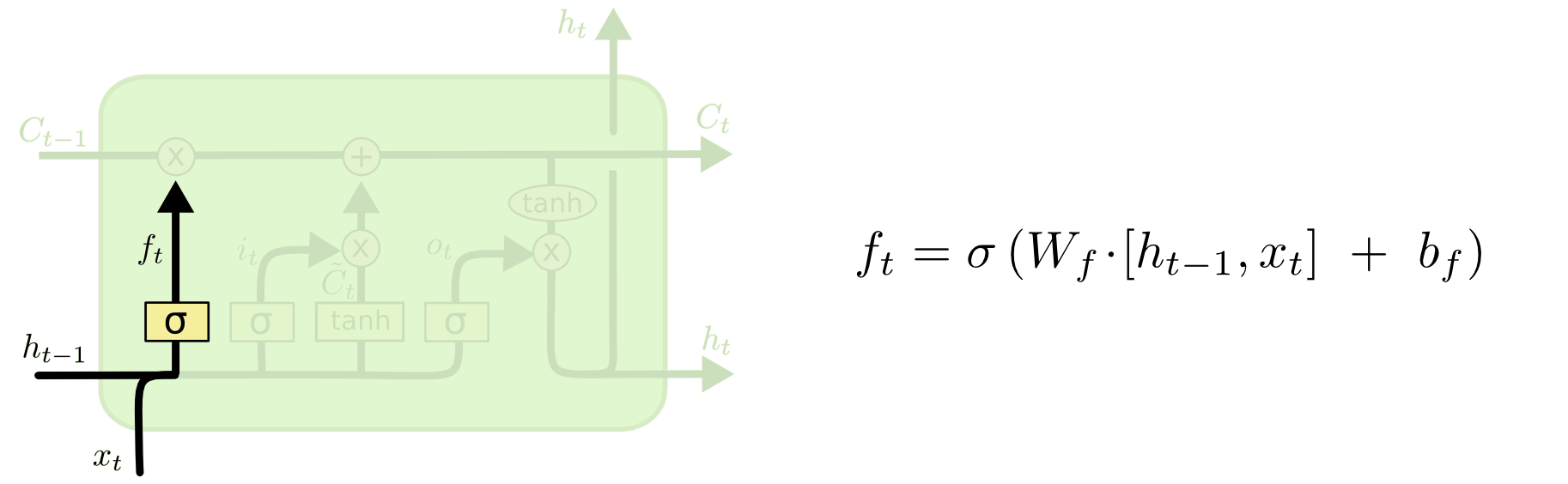

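Forget gate:

Acts on: the cell state

Purpose: selectively forget information held in the cell state.

Let's return to the language-model example, which predicts the next word from the words seen so far. In this problem, the cell state may contain the category of the current subject, so that the correct pronoun can be chosen. When we see a new subject, we want to forget the old one.

For example, take the sentence "He is busy today, so I ...". When the network reaches "I", it selectively forgets the earlier "he", or at least reduces that word's influence on the words that follow.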

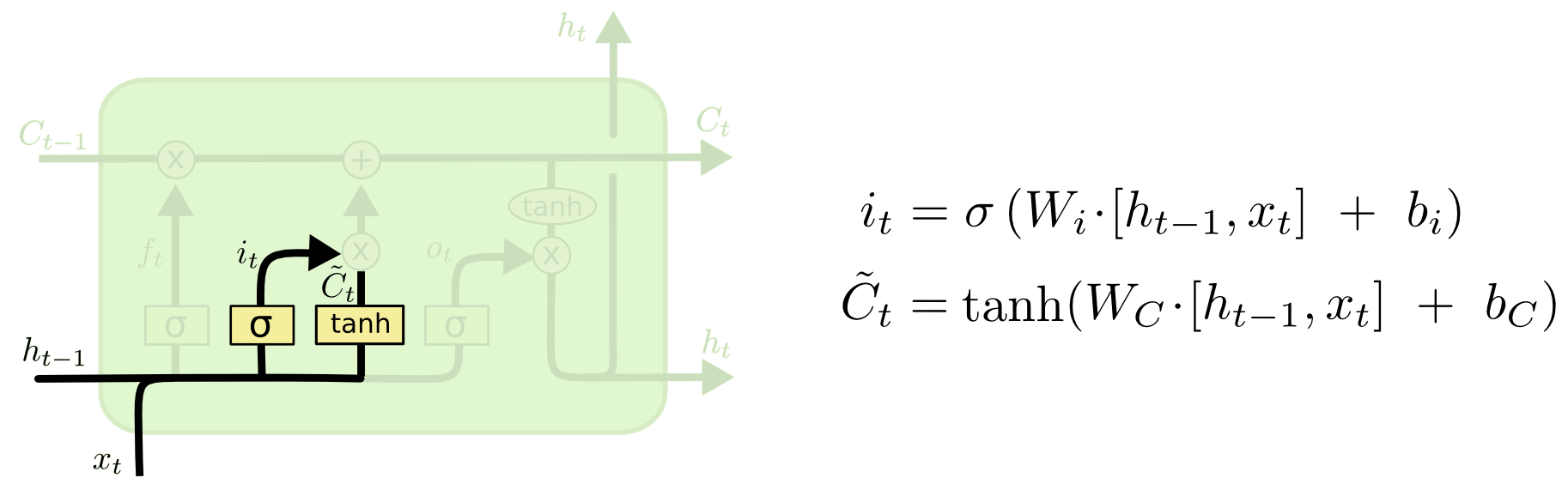

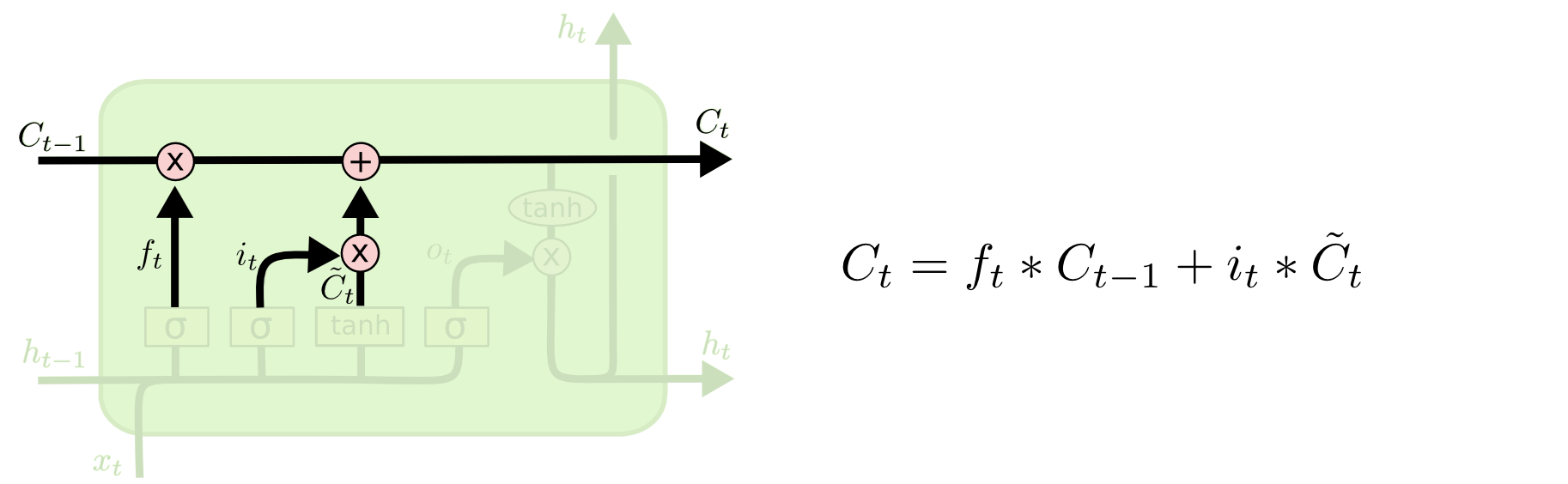

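Input gate:

Acts on: the cell state

Purpose: selectively write new information into the cell state.

In our language-model example, we want to add the category of the new subject to the cell state, replacing the old subject that should be forgotten.

For example, in "He is busy today, so I ...", when the network reaches "I" it updates the cell state with the new subject "I".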

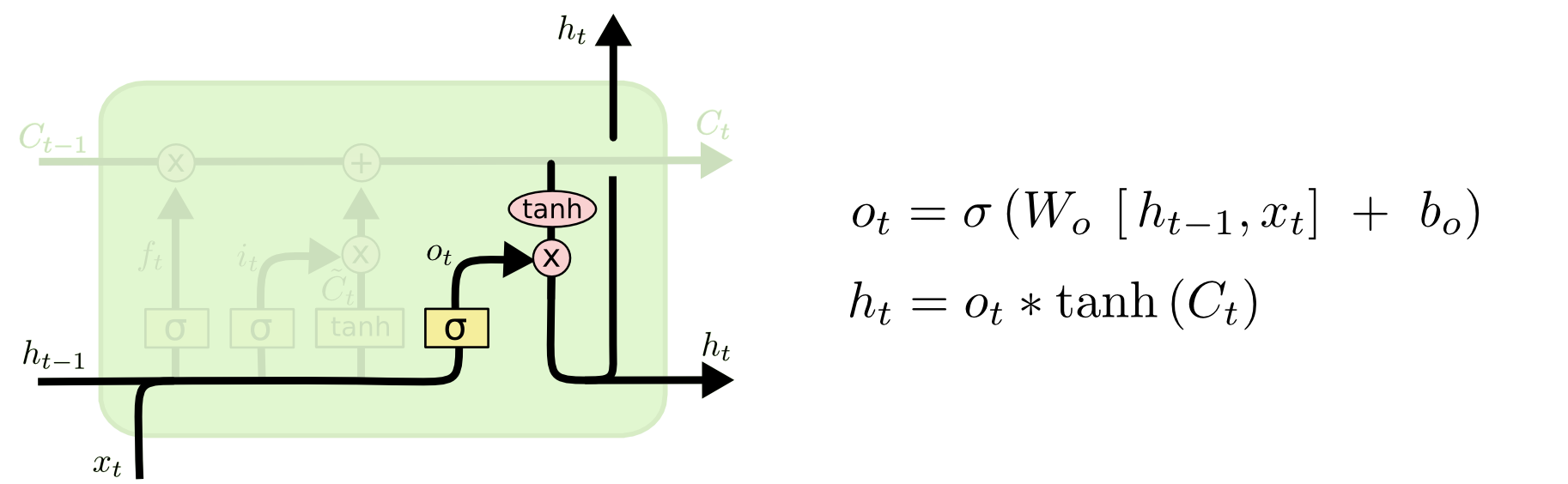

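Output gate:

Acts on: the hidden state h_t

In the language-model example, because the network has just seen a pronoun, it may need to output information relevant to a verb. For example, it might output whether the pronoun is singular or plural, so that if a verb comes next we know which form it should take.

For example, in the sentence above, when the network reaches "I" it can predict that the next word is probably a verb, and that the verb should be in the first person.

This earlier information is stored in the hidden state.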

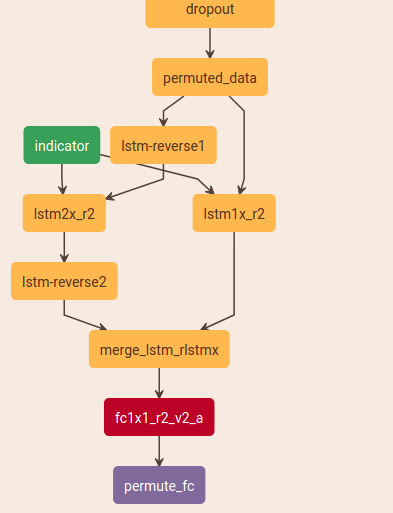

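Caffe's LSTM implementation is rather complicated. The LSTM layer inherits from RecurrentLayer and then builds its own net inside the layer. In other words, a single LSTM layer is itself a whole network internally!

The figure above shows a bidirectional LSTM; we take lstm1x_r2 on the right as the example.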

    layer {
      name: "lstm1x_r2"
      type: "LSTM"
      bottom: "permuted_data"
      bottom: "indicator"
      top: "lstm1x"
      recurrent_param {
        num_output: 100
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
          value: 0
        }
      }
    }

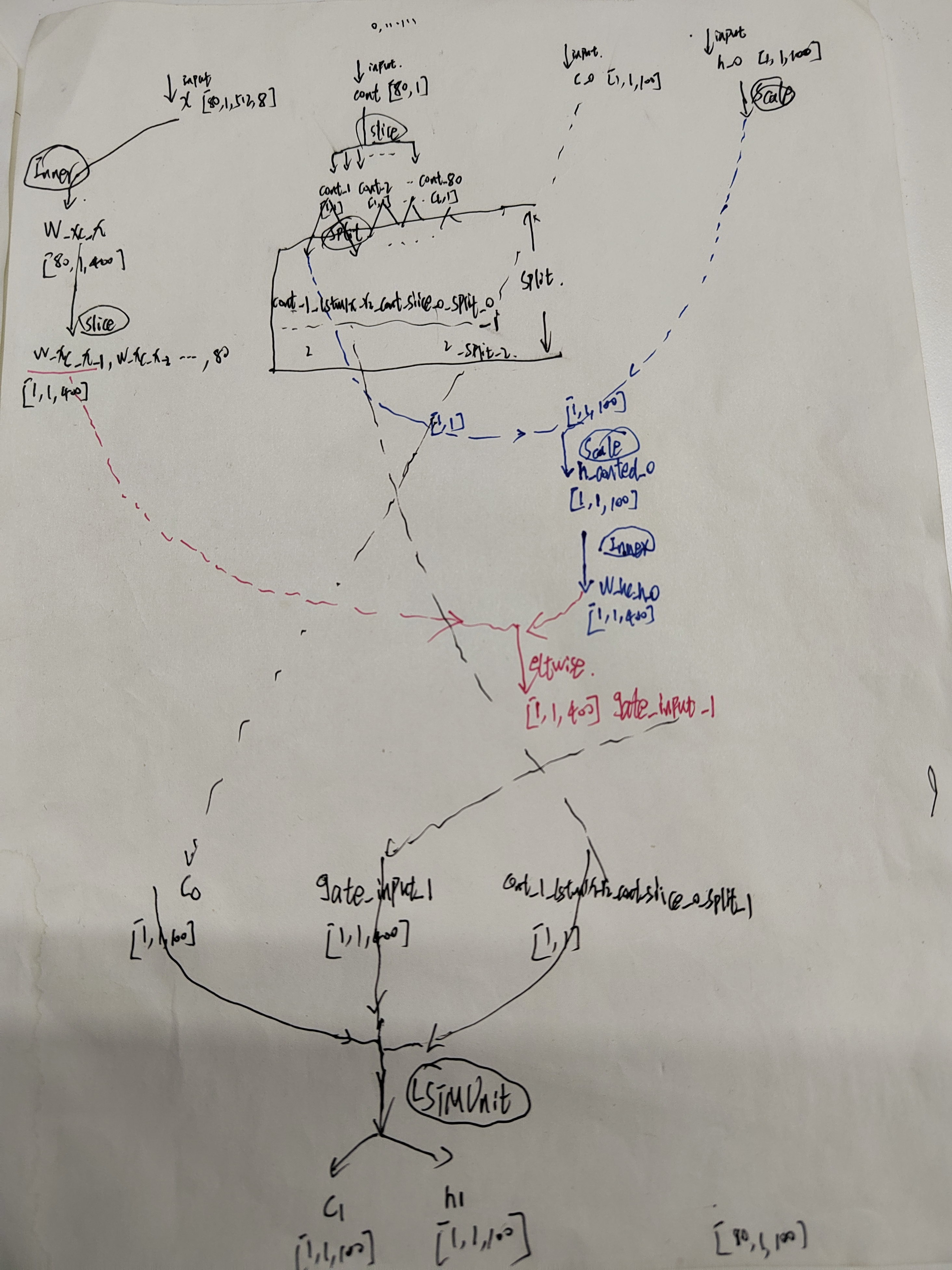

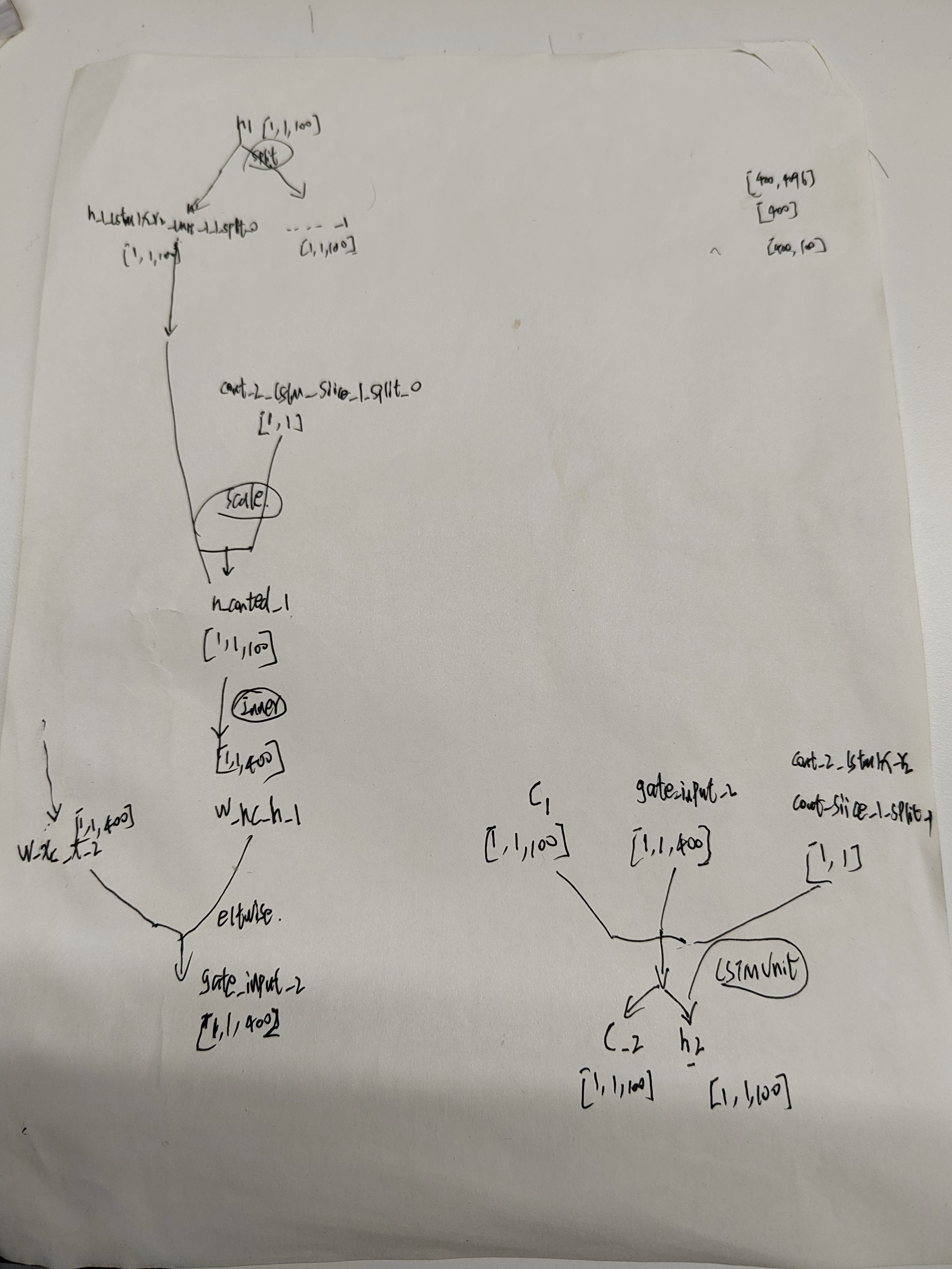

The input [80,1,512,8] goes through an InnerProduct layer.

Here the bottom blob permuted_data has shape [80,1,512,8] and the indicator blob has shape [80,1]. Of the 80 indicator values, the first is 0 and the rest are 1.

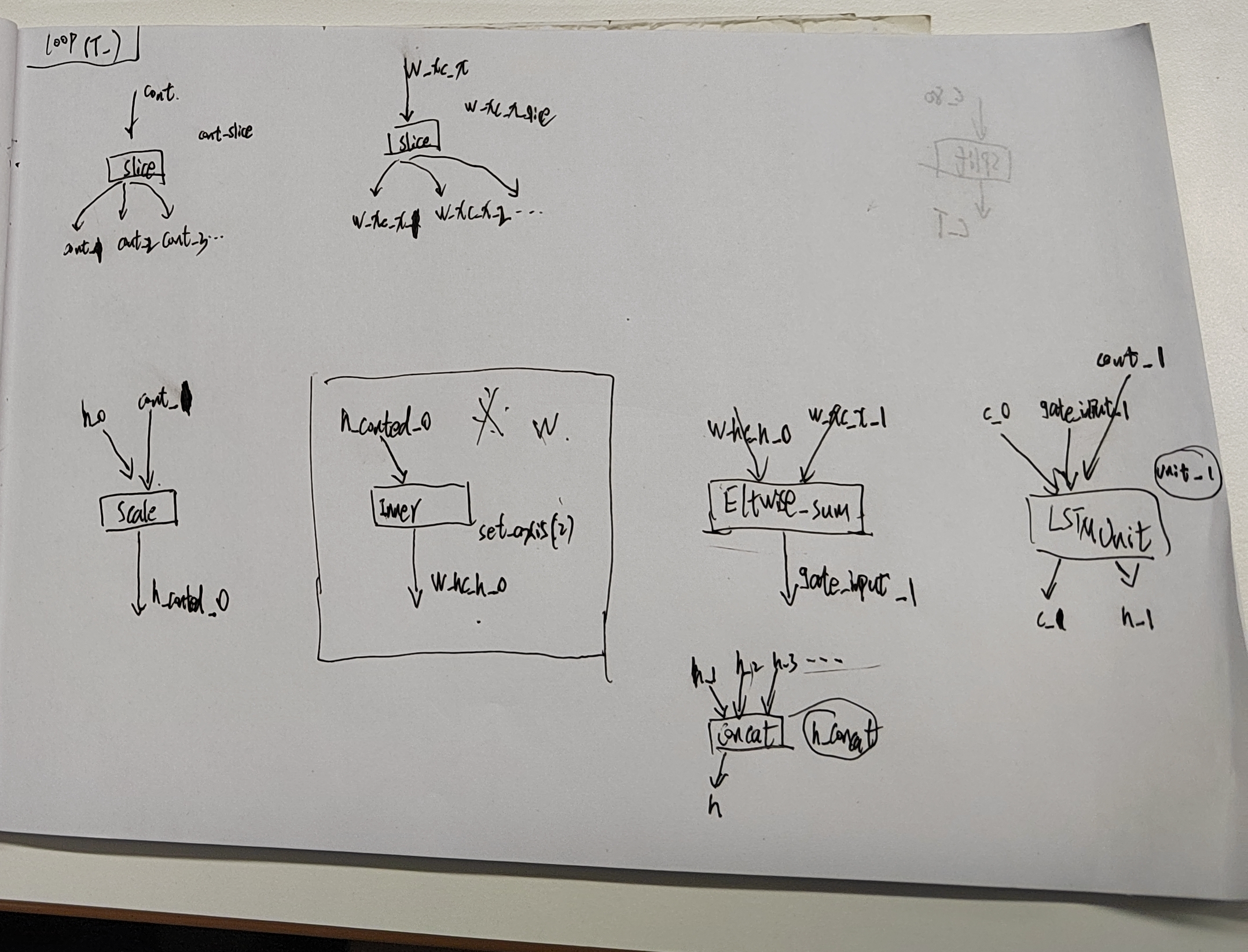

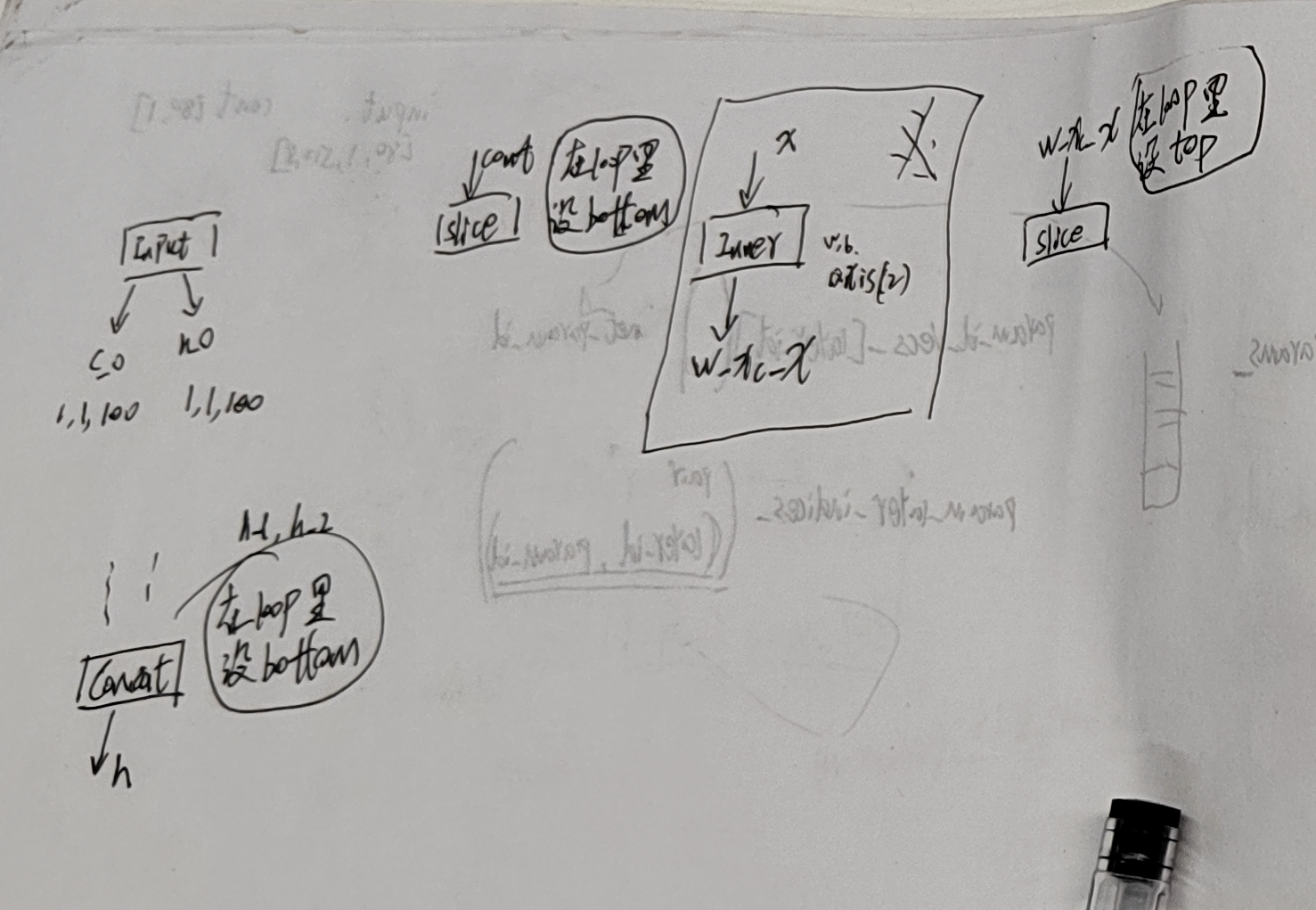

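The [80,1,512,8] input is called x inside the LSTM layer, and its layer type is Input. A fully connected (InnerProduct) layer is applied to it, producing W_xc_x with shape [80,1,400]. A Slice layer then cuts this into 80 pieces: W_xc_x_1 [1,1,400], W_xc_x_2 [1,1,400], ..., W_xc_x_80 [1,1,400].

Note that this is a fully connected layer, so it has two parameter blobs, w and b. w has shape [400,4096] and b has shape [400] (512×8=4096).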
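The shape arithmetic above can be checked with a small sketch. It assumes the InnerProduct layer flattens every axis from `axis` onward into one input vector (here `axis: 2`, as in the generated net). `InnerProductShapes` and `inner_product_shapes` are hypothetical helper names for illustration, not part of Caffe.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not a Caffe API): shapes produced by an InnerProduct
// layer that flattens all axes from `axis` onward into one input vector.
struct InnerProductShapes {
  std::vector<int> top;     // output blob shape
  std::vector<int> weight;  // weight blob shape: [num_output, K]
  int bias;                 // bias length
};

InnerProductShapes inner_product_shapes(const std::vector<int>& bottom,
                                        int axis, int num_output) {
  int k = 1;  // flattened input dimension K
  for (std::size_t i = static_cast<std::size_t>(axis); i < bottom.size(); ++i) {
    k *= bottom[i];
  }
  InnerProductShapes s;
  // The leading axes are kept; the flattened axes are replaced by num_output.
  s.top.assign(bottom.begin(), bottom.begin() + axis);
  s.top.push_back(num_output);
  s.weight = {num_output, k};
  s.bias = num_output;
  return s;
}
```

Calling `inner_product_shapes({80, 1, 512, 8}, 2, 400)` gives a top shape of [80,1,400], a weight shape of [400,4096] and a bias of length 400, matching the numbers in the text.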

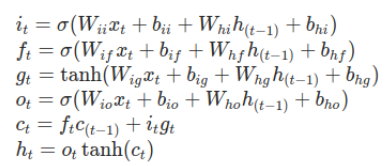

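Matched against the formulas, this fully connected layer is the w·x term. The LSTM was configured with recurrent_param num_output: 100, and the fully connected layer is created with num_output×4=400 outputs, so its output size is 400.

Why the factor of 4?

Look closely at the formulas and you will find four separate products of a w with x: the forget gate f, the input gate i, the candidate cell state g (c~) and the output gate o each multiply x by a weight matrix. The same x is multiplied by a different w each time; that is, every gate has its own learnable weights w.

w has shape [400,4096]: the hidden size was set to 100, and each of those 100 units has four sets of weights, hence 400.

W_xc_x_1, W_xc_x_2, ..., W_xc_x_80 each have shape [1,1,400]. Each one is later added to the transformed h_{t-1} and, inside the loop, passed one after another as gate_input to the LSTMUnitLayer for the gate computations.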

c(t) h(t)

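Next, c_0 and h_0: both initial states are set to 0, and both have shape [1,1,100].

According to the formulas, h also has to go through a fully connected layer: h [1,1,100] goes through an InnerProduct layer and becomes [1,1,400]. This adds one more weight blob w of shape [400,100]. In Caffe this layer has no bias, so the Caffe LSTM has three learnable parameter blobs in total: the one here, plus the two (w and b) from the fully connected layer on x above.

Also note that these parameters are shared, because every h is transformed by the same fully connected weights.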
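As a rough check of the three parameter blobs, the sketch below counts their elements for the sizes used in this article (input dimension 4096, hidden size 100). The struct and function names are hypothetical; only the blob names W_xc, b_c and W_hc come from the generated net.

```cpp
#include <cassert>

// Hypothetical summary of the three learnable blobs of the Caffe LSTM layer.
struct LstmParamCount {
  long w_xc;  // input-to-gates weight, shape [4*hidden, input_dim]
  long b_c;   // gate bias, shape [4*hidden]
  long w_hc;  // hidden-to-gates weight, shape [4*hidden, hidden], no bias
  long total() const { return w_xc + b_c + w_hc; }
};

LstmParamCount count_lstm_params(long input_dim, long hidden) {
  const long gates = 4 * hidden;  // one slice each for i, f, o, g
  return {gates * input_dim, gates, gates * hidden};
}
```

With `count_lstm_params(4096, 100)` the three blobs hold 1,638,400, 400 and 40,000 values. Because the weights are shared across timesteps, this count does not depend on the sequence length of 80.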

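h_0 is an all-zero [1,1,100] blob. The loop then runs once per timestep (80 times), producing h_1, h_2, ..., h_80.

This corresponds to the w×h term in the formulas. Here too, 100 values produce 400, because i, f, o and g each have their own weights. So 100 becomes 400.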

gate_input

The outputs of these two fully connected transforms are then added together, giving gate_input_1 with shape [1,1,400].

W_xc_x_t [1,1,400]

h(t-1)' [1,1,400]

These are the following terms in the formulas above:

wf[h(t-1),xt]

wi[h(t-1),xt]

wc[h(t-1),xt]

wo[h(t-1),xt]

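Keep in mind that there are really 100 groups of 4 values here, one value each for i, f, o and g (in memory they are stored as four contiguous blocks of 100, in the order i, f, o, g).

The formulas from the PyTorch documentation:

gate_input corresponds to the sums inside the parentheses of the first four formulas.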
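For reference, the LSTM formulas from the `torch.nn.LSTM` documentation linked at the top can be restated as below. Note one difference: PyTorch keeps two bias vectors per gate, whereas the Caffe net in this article has a single bias b_c, which comes from the fully connected layer on x.

```latex
\begin{aligned}
i_t &= \sigma(W_{ii} x_t + b_{ii} + W_{hi} h_{t-1} + b_{hi}) \\
f_t &= \sigma(W_{if} x_t + b_{if} + W_{hf} h_{t-1} + b_{hf}) \\
g_t &= \tanh(W_{ig} x_t + b_{ig} + W_{hg} h_{t-1} + b_{hg}) \\
o_t &= \sigma(W_{io} x_t + b_{io} + W_{ho} h_{t-1} + b_{ho}) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

In Caffe's terms, the argument of each of the first four activations is one 100-wide slice of gate_input_t = W_hc · h_{t-1} + W_xc · x_t + b_c.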

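A brief note on indicator in the Caffe source: inside the LSTM layer it is an input blob named cont. It holds 80 values, the first 0 and the rest 1, and it is multiplied with h.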

LSTMUnit

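Three inputs are then fed into the LSTMUnit layer, which is the layer that performs the gate computations: c(t-1) with shape [1,1,100], gate_input_t with shape [1,1,400], and cont with shape [1,1].

The LSTMUnit layer outputs c(t) [1,1,100] and h(t) [1,1,100].

The code for this computation is: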

    template <typename Dtype>
    void LSTMUnitLayer<Dtype>::Forward_cpu(const vector<Blob<Dtype>*>& bottom,
        const vector<Blob<Dtype>*>& top) {
      const int num = bottom[0]->shape(1);  // 1
      const int x_dim = hidden_dim_ * 4;
      const Dtype* C_prev = bottom[0]->cpu_data();
      const Dtype* X = bottom[1]->cpu_data();
      const Dtype* cont = bottom[2]->cpu_data();
      Dtype* C = top[0]->mutable_cpu_data();
      Dtype* H = top[1]->mutable_cpu_data();
      for (int n = 0; n < num; ++n) {  // 1
        for (int d = 0; d < hidden_dim_; ++d) {  // 100
          const Dtype i = sigmoid(X[d]);
          const Dtype f = (*cont == 0) ? 0 :
              (*cont * sigmoid(X[1 * hidden_dim_ + d]));
          const Dtype o = sigmoid(X[2 * hidden_dim_ + d]);
          const Dtype g = tanh(X[3 * hidden_dim_ + d]);
          const Dtype c_prev = C_prev[d];
          const Dtype c = f * c_prev + i * g;
          C[d] = c;
          const Dtype tanh_c = tanh(c);
          H[d] = o * tanh_c;
        }
        C_prev += hidden_dim_;
        X += x_dim;
        C += hidden_dim_;
        H += hidden_dim_;
        ++cont;
      }
    }

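The code reads X[d], X[1 * hidden_dim_ + d], X[2 * hidden_dim_ + d] and X[3 * hidden_dim_ + d]. The main loop runs over hidden_dim_ (100), and each iteration picks out the i, f, o and g values for one hidden unit and combines them.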
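To experiment with this computation outside Caffe, here is a minimal standalone sketch of one timestep for a single sequence. It follows the same [i | f | o | g] layout and the same handling of cont as the Forward_cpu code above, but `LstmState`, `lstm_unit_step` and the use of `std::vector<float>` are assumptions made for illustration, not Caffe's API.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical container for the two outputs of one LSTM unit step.
struct LstmState {
  std::vector<float> c;  // cell state c_t
  std::vector<float> h;  // hidden state h_t
};

inline float sigmoid(float x) { return 1.0f / (1.0f + std::exp(-x)); }

// One timestep for a single sequence (batch size 1).
// gate_input holds 4*hidden values laid out as [i | f | o | g].
LstmState lstm_unit_step(const std::vector<float>& c_prev,
                         const std::vector<float>& gate_input, float cont) {
  const std::size_t hidden = c_prev.size();
  assert(gate_input.size() == 4 * hidden);
  LstmState out{std::vector<float>(hidden), std::vector<float>(hidden)};
  for (std::size_t d = 0; d < hidden; ++d) {
    const float i = sigmoid(gate_input[d]);
    // cont == 0 marks the start of a sequence: the old cell state is dropped.
    const float f =
        (cont == 0.0f) ? 0.0f : cont * sigmoid(gate_input[hidden + d]);
    const float o = sigmoid(gate_input[2 * hidden + d]);
    const float g = std::tanh(gate_input[3 * hidden + d]);
    out.c[d] = f * c_prev[d] + i * g;
    out.h[d] = o * std::tanh(out.c[d]);
  }
  return out;
}
```

With all gate inputs at zero, every sigmoid gate is 0.5 and g is 0, so the new cell state is half of the previous one when cont is 1 and exactly zero when cont is 0.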

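That is one unit computation. It is performed once per timestep; each time, the three inputs, c(t-1) with shape [1,1,100], gate_input_t with shape [1,1,400] and cont with shape [1,1], are computed first and then fed to the LSTMUnitLayer.

c(t-1) is the output of the previous step, and gate_input_t combines the current x with the h output by the previous step.

Finally, all the h outputs are concatenated into a single blob of shape [80,1,100]. Each individual h is [1,1,100].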
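The whole recurrence can be sketched as a plain loop. This is a simplified illustration for one sequence, not Caffe's implementation: it assumes the input transform W_xc · x_t + b_c has already been computed for every timestep, and the names `Vec`, `Mat`, `matvec`, `sigmoid_f` and `lstm_forward` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<float>;
using Mat = std::vector<Vec>;  // row-major: Mat[row][col]

inline float sigmoid_f(float x) { return 1.0f / (1.0f + std::exp(-x)); }

// y = W * x, with W of shape [rows, x.size()].
Vec matvec(const Mat& w, const Vec& x) {
  Vec y(w.size(), 0.0f);
  for (std::size_t r = 0; r < w.size(); ++r)
    for (std::size_t k = 0; k < x.size(); ++k) y[r] += w[r][k] * x[k];
  return y;
}

// Runs one sequence and returns h_1 ... h_T (index 0 holds h_1).
// w_xc_x[t] is the pre-computed W_xc * x_t + b_c (4*hidden values).
std::vector<Vec> lstm_forward(const std::vector<Vec>& w_xc_x, const Mat& w_hc,
                              const Vec& cont, std::size_t hidden) {
  Vec c(hidden, 0.0f), h(hidden, 0.0f);  // c_0 and h_0 are all zeros
  std::vector<Vec> outputs;
  for (std::size_t t = 0; t < w_xc_x.size(); ++t) {
    // Scale step: h_conted = cont_t * h_{t-1}
    Vec h_conted(hidden);
    for (std::size_t d = 0; d < hidden; ++d) h_conted[d] = cont[t] * h[d];
    // InnerProduct + Eltwise SUM: gate_input = W_hc * h_conted + W_xc_x_t
    Vec gate = matvec(w_hc, h_conted);
    for (std::size_t j = 0; j < gate.size(); ++j) gate[j] += w_xc_x[t][j];
    // LSTMUnit step, with gate laid out as [i | f | o | g]
    for (std::size_t d = 0; d < hidden; ++d) {
      const float i = sigmoid_f(gate[d]);
      const float f =
          (cont[t] == 0.0f) ? 0.0f : cont[t] * sigmoid_f(gate[hidden + d]);
      const float o = sigmoid_f(gate[2 * hidden + d]);
      const float g = std::tanh(gate[3 * hidden + d]);
      c[d] = f * c[d] + i * g;
      h[d] = o * std::tanh(c[d]);
    }
    outputs.push_back(h);  // Concat step: collect every h_t
  }
  return outputs;
}
```

For the sizes in this article, `w_xc_x` would hold 80 vectors of length 400, `w_hc` would be 400×100, and the result would be 80 vectors of length 100, that is, the [80,1,100] output blob.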

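The full computation graph

That roughly covers the whole flow. The implementation, however, is quite complicated, because all of these computation steps are realized as a network.

A rough hand-drawn flow chart:

The network is first built in code.

The code that builds the network lives in lstm_layer.cpp and recurrent_layer.cpp. Part of it is shown below: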

    template <typename Dtype>
    void LSTMLayer<Dtype>::FillUnrolledNet(NetParameter* net_param) const {
      const int num_output = this->layer_param_.recurrent_param().num_output();
      CHECK_GT(num_output, 0) << "num_output must be positive";
      const FillerParameter& weight_filler =
          this->layer_param_.recurrent_param().weight_filler();
      const FillerParameter& bias_filler =
          this->layer_param_.recurrent_param().bias_filler();
      // Add generic LayerParameter's (without bottoms/tops) of layer types we'll
      // use to save redundant code.
      LayerParameter hidden_param;
      hidden_param.set_type("InnerProduct");
      hidden_param.mutable_inner_product_param()->set_num_output(num_output * 4);
      hidden_param.mutable_inner_product_param()->set_bias_term(false);
      hidden_param.mutable_inner_product_param()->set_axis(2);
      hidden_param.mutable_inner_product_param()->
          mutable_weight_filler()->CopyFrom(weight_filler);
      LayerParameter biased_hidden_param(hidden_param);
      biased_hidden_param.mutable_inner_product_param()->set_bias_term(true);
      biased_hidden_param.mutable_inner_product_param()->
          mutable_bias_filler()->CopyFrom(bias_filler);
      LayerParameter sum_param;
      sum_param.set_type("Eltwise");
      sum_param.mutable_eltwise_param()->set_operation(
          EltwiseParameter_EltwiseOp_SUM);
      LayerParameter scale_param;
      scale_param.set_type("Scale");
      scale_param.mutable_scale_param()->set_axis(0);
      LayerParameter slice_param;
      slice_param.set_type("Slice");
      slice_param.mutable_slice_param()->set_axis(0);
      LayerParameter split_param;
      split_param.set_type("Split");
      vector<BlobShape> input_shapes;
      RecurrentInputShapes(&input_shapes);  // 1 1 100 1 1 100
      CHECK_EQ(2, input_shapes.size());
      LayerParameter* input_layer_param = net_param->add_layer();
      input_layer_param->set_type("Input");
      InputParameter* input_param = input_layer_param->mutable_input_param();
      input_layer_param->add_top("c_0");
      input_param->add_shape()->CopyFrom(input_shapes[0]);
      input_layer_param->add_top("h_0");
      input_param->add_shape()->CopyFrom(input_shapes[1]);
      LayerParameter* cont_slice_param = net_param->add_layer();
      cont_slice_param->CopyFrom(slice_param);
      cont_slice_param->set_name("cont_slice");
      cont_slice_param->add_bottom("cont");
      cont_slice_param->mutable_slice_param()->set_axis(0);
      // Add layer to transform all timesteps of x to the hidden state dimension.
      //     W_xc_x = W_xc * x + b_c
      {
        LayerParameter* x_transform_param = net_param->add_layer();
        x_transform_param->CopyFrom(biased_hidden_param);
        x_transform_param->set_name("x_transform");
        x_transform_param->add_param()->set_name("W_xc");
        x_transform_param->add_param()->set_name("b_c");
        x_transform_param->add_bottom("x");
        x_transform_param->add_top("W_xc_x");
        x_transform_param->add_propagate_down(true);
      }
      if (this->static_input_) {
        // Add layer to transform x_static to the gate dimension.
        //     W_xc_x_static = W_xc_static * x_static
        LayerParameter* x_static_transform_param = net_param->add_layer();
        x_static_transform_param->CopyFrom(hidden_param);
        x_static_transform_param->mutable_inner_product_param()->set_axis(1);
        x_static_transform_param->set_name("W_xc_x_static");
        x_static_transform_param->add_param()->set_name("W_xc_static");
        x_static_transform_param->add_bottom("x_static");
        x_static_transform_param->add_top("W_xc_x_static_preshape");
        x_static_transform_param->add_propagate_down(true);
        LayerParameter* reshape_param = net_param->add_layer();
        reshape_param->set_type("Reshape");
        BlobShape* new_shape =
            reshape_param->mutable_reshape_param()->mutable_shape();
        new_shape->add_dim(1);  // One timestep.
        // Should infer this->N as the dimension so we can reshape on batch size.
        new_shape->add_dim(-1);
        new_shape->add_dim(
            x_static_transform_param->inner_product_param().num_output());
        reshape_param->set_name("W_xc_x_static_reshape");
        reshape_param->add_bottom("W_xc_x_static_preshape");
        reshape_param->add_top("W_xc_x_static");
      }
      LayerParameter* x_slice_param = net_param->add_layer();
      x_slice_param->CopyFrom(slice_param);
      x_slice_param->add_bottom("W_xc_x");
      x_slice_param->set_name("W_xc_x_slice");
      LayerParameter output_concat_layer;
      output_concat_layer.set_name("h_concat");
      output_concat_layer.set_type("Concat");
      output_concat_layer.add_top("h");
      output_concat_layer.mutable_concat_param()->set_axis(0);
      // int TTT = this->T_; //80
      for (int t = 1; t <= this->T_; ++t) {
        string tm1s = format_int(t - 1);
        string ts = format_int(t);
        cont_slice_param->add_top("cont_" + ts);
        x_slice_param->add_top("W_xc_x_" + ts);
        // Add layers to flush the hidden state when beginning a new
        // sequence, as indicated by cont_t.
        //     h_conted_{t-1} := cont_t * h_{t-1}
        //
        // Normally, cont_t is binary (i.e., 0 or 1), so:
        //     h_conted_{t-1} := h_{t-1} if cont_t == 1
        //                       0   otherwise
        {
          LayerParameter* cont_h_param = net_param->add_layer();
          cont_h_param->CopyFrom(scale_param);
          cont_h_param->set_name("h_conted_" + tm1s);
          cont_h_param->add_bottom("h_" + tm1s);
          cont_h_param->add_bottom("cont_" + ts);
          cont_h_param->add_top("h_conted_" + tm1s);
        }
        // Add layer to compute
        //     W_hc_h_{t-1} := W_hc * h_conted_{t-1}
        {
          LayerParameter* w_param = net_param->add_layer();
          w_param->CopyFrom(hidden_param);
          w_param->set_name("transform_" + ts);
          w_param->add_param()->set_name("W_hc");
          w_param->add_bottom("h_conted_" + tm1s);
          w_param->add_top("W_hc_h_" + tm1s);
          w_param->mutable_inner_product_param()->set_axis(2);
        }
        // Add the outputs of the linear transformations to compute the gate input.
        //     gate_input_t := W_hc * h_conted_{t-1} + W_xc * x_t + b_c
        //                   = W_hc_h_{t-1} + W_xc_x_t + b_c
        {
          LayerParameter* input_sum_layer = net_param->add_layer();
          input_sum_layer->CopyFrom(sum_param);
          input_sum_layer->set_name("gate_input_" + ts);
          input_sum_layer->add_bottom("W_hc_h_" + tm1s);
          input_sum_layer->add_bottom("W_xc_x_" + ts);
          if (this->static_input_) {
            input_sum_layer->add_bottom("W_xc_x_static");
          }
          input_sum_layer->add_top("gate_input_" + ts);
        }
        // Add LSTMUnit layer to compute the cell & hidden vectors c_t and h_t.
        //     Inputs: c_{t-1}, gate_input_t = (i_t, f_t, o_t, g_t), cont_t
        //     Outputs: c_t, h_t
        //         [ i_t' ]
        //         [ f_t' ] := gate_input_t
        //         [ o_t' ]
        //         [ g_t' ]
        //             i_t := \sigmoid[i_t']
        //             f_t := \sigmoid[f_t']
        //             o_t := \sigmoid[o_t']
        //             g_t := \tanh[g_t']
        //             c_t := cont_t * (f_t .* c_{t-1}) + (i_t .* g_t)
        //             h_t := o_t .* \tanh[c_t]
        {
          LayerParameter* lstm_unit_param = net_param->add_layer();
          lstm_unit_param->set_type("LSTMUnit");
          lstm_unit_param->add_bottom("c_" + tm1s);
          lstm_unit_param->add_bottom("gate_input_" + ts);
          lstm_unit_param->add_bottom("cont_" + ts);
          lstm_unit_param->add_top("c_" + ts);
          lstm_unit_param->add_top("h_" + ts);
          lstm_unit_param->set_name("unit_" + ts);
        }
        output_concat_layer.add_bottom("h_" + ts);
      }  // for (int t = 1; t <= this->T_; ++t)
      {
        LayerParameter* c_T_copy_param = net_param->add_layer();
        c_T_copy_param->CopyFrom(split_param);
        c_T_copy_param->add_bottom("c_" + format_int(this->T_));
        c_T_copy_param->add_top("c_T");
      }
      net_param->add_layer()->CopyFrom(output_concat_layer);
    }

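Hand-drawn diagram of the layers that the code builds in its loop:

The prototxt generated by the code

Here is the prototxt produced by the network-building code, with timestep = 80. Loaded into Netscope, the network looks like a spider web. It is far too complex to read.

It is actually not that bad: it is just the process above repeated 80 times. We only need to understand one iteration; the repetition can be left to the computer.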
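To make the repetition concrete, the sketch below lists the four layers that each timestep adds to the generated prototxt. The naming pattern is taken from the prototxt in this article (prefix `lstm2x_r2_`); `StepLayers` and `unrolled_layer_names` are hypothetical names used only for this illustration.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical record of the four layers added for one timestep t (1-based).
struct StepLayers {
  std::string scale;      // h_conted_{t-1} = cont_t * h_{t-1}
  std::string transform;  // W_hc_h_{t-1} = W_hc * h_conted_{t-1}
  std::string sum;        // gate_input_t = W_hc_h_{t-1} + W_xc_x_t
  std::string unit;       // (c_t, h_t) = LSTMUnit(c_{t-1}, gate_input_t, cont_t)
};

std::vector<StepLayers> unrolled_layer_names(const std::string& prefix, int T) {
  std::vector<StepLayers> steps;
  for (int t = 1; t <= T; ++t) {
    const std::string ts = std::to_string(t);
    const std::string tm1s = std::to_string(t - 1);
    steps.push_back({prefix + "h_conted_" + tm1s, prefix + "transform_" + ts,
                     prefix + "gate_input_" + ts, prefix + "unit_" + ts});
  }
  return steps;
}
```

For `unrolled_layer_names("lstm2x_r2_", 80)` the first entry names `lstm2x_r2_h_conted_0`, `lstm2x_r2_transform_1`, `lstm2x_r2_gate_input_1` and `lstm2x_r2_unit_1`, which are the first four per-timestep layers in the listing that follows. The remaining 79 entries differ only in their indices.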

# Enter your network definition here.

# Use Shift+Enter to update the visualization.

layer {

name: "lstm2x_r2_"

type: "Input"

top: "x"

top: "cont"

input_param {

shape {

dim: 80

dim: 1

dim: 512

dim: 8

}

shape {

dim: 80

dim: 1

}

}

}

layer {

name: "lstm2x_r2_"

type: "Input"

top: "c_0"

top: "h_0"

input_param {

shape {

dim: 1

dim: 1

dim: 100

}

shape {

dim: 1

dim: 1

dim: 100

}

}

}

layer {

name: "lstm2x_r2_cont_slice"

type: "Slice"

bottom: "cont"

top: "cont_1"

top: "cont_2"

top: "cont_3"

top: "cont_4"

top: "cont_5"

top: "cont_6"

top: "cont_7"

top: "cont_8"

top: "cont_9"

top: "cont_10"

top: "cont_11"

top: "cont_12"

top: "cont_13"

top: "cont_14"

top: "cont_15"

top: "cont_16"

top: "cont_17"

top: "cont_18"

top: "cont_19"

top: "cont_20"

top: "cont_21"

top: "cont_22"

top: "cont_23"

top: "cont_24"

top: "cont_25"

top: "cont_26"

top: "cont_27"

top: "cont_28"

top: "cont_29"

top: "cont_30"

top: "cont_31"

top: "cont_32"

top: "cont_33"

top: "cont_34"

top: "cont_35"

top: "cont_36"

top: "cont_37"

top: "cont_38"

top: "cont_39"

top: "cont_40"

top: "cont_41"

top: "cont_42"

top: "cont_43"

top: "cont_44"

top: "cont_45"

top: "cont_46"

top: "cont_47"

top: "cont_48"

top: "cont_49"

top: "cont_50"

top: "cont_51"

top: "cont_52"

top: "cont_53"

top: "cont_54"

top: "cont_55"

top: "cont_56"

top: "cont_57"

top: "cont_58"

top: "cont_59"

top: "cont_60"

top: "cont_61"

top: "cont_62"

top: "cont_63"

top: "cont_64"

top: "cont_65"

top: "cont_66"

top: "cont_67"

top: "cont_68"

top: "cont_69"

top: "cont_70"

top: "cont_71"

top: "cont_72"

top: "cont_73"

top: "cont_74"

top: "cont_75"

top: "cont_76"

top: "cont_77"

top: "cont_78"

top: "cont_79"

top: "cont_80"

slice_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_x_transform"

type: "InnerProduct"

bottom: "x"

top: "W_xc_x"

param {

name: "W_xc"

}

param {

name: "b_c"

}

propagate_down: true

inner_product_param {

num_output: 400

bias_term: true

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

axis: 2

}

}

layer {

name: "lstm2x_r2_W_xc_x_slice"

type: "Slice"

bottom: "W_xc_x"

top: "W_xc_x_1"

top: "W_xc_x_2"

top: "W_xc_x_3"

top: "W_xc_x_4"

top: "W_xc_x_5"

top: "W_xc_x_6"

top: "W_xc_x_7"

top: "W_xc_x_8"

top: "W_xc_x_9"

top: "W_xc_x_10"

top: "W_xc_x_11"

top: "W_xc_x_12"

top: "W_xc_x_13"

top: "W_xc_x_14"

top: "W_xc_x_15"

top: "W_xc_x_16"

top: "W_xc_x_17"

top: "W_xc_x_18"

top: "W_xc_x_19"

top: "W_xc_x_20"

top: "W_xc_x_21"

top: "W_xc_x_22"

top: "W_xc_x_23"

top: "W_xc_x_24"

top: "W_xc_x_25"

top: "W_xc_x_26"

top: "W_xc_x_27"

top: "W_xc_x_28"

top: "W_xc_x_29"

top: "W_xc_x_30"

top: "W_xc_x_31"

top: "W_xc_x_32"

top: "W_xc_x_33"

top: "W_xc_x_34"

top: "W_xc_x_35"

top: "W_xc_x_36"

top: "W_xc_x_37"

top: "W_xc_x_38"

top: "W_xc_x_39"

top: "W_xc_x_40"

top: "W_xc_x_41"

top: "W_xc_x_42"

top: "W_xc_x_43"

top: "W_xc_x_44"

top: "W_xc_x_45"

top: "W_xc_x_46"

top: "W_xc_x_47"

top: "W_xc_x_48"

top: "W_xc_x_49"

top: "W_xc_x_50"

top: "W_xc_x_51"

top: "W_xc_x_52"

top: "W_xc_x_53"

top: "W_xc_x_54"

top: "W_xc_x_55"

top: "W_xc_x_56"

top: "W_xc_x_57"

top: "W_xc_x_58"

top: "W_xc_x_59"

top: "W_xc_x_60"

top: "W_xc_x_61"

top: "W_xc_x_62"

top: "W_xc_x_63"

top: "W_xc_x_64"

top: "W_xc_x_65"

top: "W_xc_x_66"

top: "W_xc_x_67"

top: "W_xc_x_68"

top: "W_xc_x_69"

top: "W_xc_x_70"

top: "W_xc_x_71"

top: "W_xc_x_72"

top: "W_xc_x_73"

top: "W_xc_x_74"

top: "W_xc_x_75"

top: "W_xc_x_76"

top: "W_xc_x_77"

top: "W_xc_x_78"

top: "W_xc_x_79"

top: "W_xc_x_80"

slice_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_h_conted_0"

type: "Scale"

bottom: "h_0"

bottom: "cont_1"

top: "h_conted_0"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_1"

type: "InnerProduct"

bottom: "h_conted_0"

top: "W_hc_h_0"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_1"

type: "Eltwise"

bottom: "W_hc_h_0"

bottom: "W_xc_x_1"

top: "gate_input_1"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_1"

type: "LSTMUnit"

bottom: "c_0"

bottom: "gate_input_1"

bottom: "cont_1"

top: "c_1"

top: "h_1"

}

layer {

name: "lstm2x_r2_h_conted_1"

type: "Scale"

bottom: "h_1"

bottom: "cont_2"

top: "h_conted_1"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_2"

type: "InnerProduct"

bottom: "h_conted_1"

top: "W_hc_h_1"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_2"

type: "Eltwise"

bottom: "W_hc_h_1"

bottom: "W_xc_x_2"

top: "gate_input_2"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_2"

type: "LSTMUnit"

bottom: "c_1"

bottom: "gate_input_2"

bottom: "cont_2"

top: "c_2"

top: "h_2"

}

layer {

name: "lstm2x_r2_h_conted_2"

type: "Scale"

bottom: "h_2"

bottom: "cont_3"

top: "h_conted_2"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_3"

type: "InnerProduct"

bottom: "h_conted_2"

top: "W_hc_h_2"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_3"

type: "Eltwise"

bottom: "W_hc_h_2"

bottom: "W_xc_x_3"

top: "gate_input_3"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_3"

type: "LSTMUnit"

bottom: "c_2"

bottom: "gate_input_3"

bottom: "cont_3"

top: "c_3"

top: "h_3"

}

layer {

name: "lstm2x_r2_h_conted_3"

type: "Scale"

bottom: "h_3"

bottom: "cont_4"

top: "h_conted_3"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_4"

type: "InnerProduct"

bottom: "h_conted_3"

top: "W_hc_h_3"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_4"

type: "Eltwise"

bottom: "W_hc_h_3"

bottom: "W_xc_x_4"

top: "gate_input_4"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_4"

type: "LSTMUnit"

bottom: "c_3"

bottom: "gate_input_4"

bottom: "cont_4"

top: "c_4"

top: "h_4"

}

layer {

name: "lstm2x_r2_h_conted_4"

type: "Scale"

bottom: "h_4"

bottom: "cont_5"

top: "h_conted_4"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_5"

type: "InnerProduct"

bottom: "h_conted_4"

top: "W_hc_h_4"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_5"

type: "Eltwise"

bottom: "W_hc_h_4"

bottom: "W_xc_x_5"

top: "gate_input_5"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_5"

type: "LSTMUnit"

bottom: "c_4"

bottom: "gate_input_5"

bottom: "cont_5"

top: "c_5"

top: "h_5"

}

layer {

name: "lstm2x_r2_h_conted_5"

type: "Scale"

bottom: "h_5"

bottom: "cont_6"

top: "h_conted_5"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_6"

type: "InnerProduct"

bottom: "h_conted_5"

top: "W_hc_h_5"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_6"

type: "Eltwise"

bottom: "W_hc_h_5"

bottom: "W_xc_x_6"

top: "gate_input_6"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_6"

type: "LSTMUnit"

bottom: "c_5"

bottom: "gate_input_6"

bottom: "cont_6"

top: "c_6"

top: "h_6"

}

layer {

name: "lstm2x_r2_h_conted_6"

type: "Scale"

bottom: "h_6"

bottom: "cont_7"

top: "h_conted_6"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_7"

type: "InnerProduct"

bottom: "h_conted_6"

top: "W_hc_h_6"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_7"

type: "Eltwise"

bottom: "W_hc_h_6"

bottom: "W_xc_x_7"

top: "gate_input_7"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_7"

type: "LSTMUnit"

bottom: "c_6"

bottom: "gate_input_7"

bottom: "cont_7"

top: "c_7"

top: "h_7"

}

layer {

name: "lstm2x_r2_h_conted_7"

type: "Scale"

bottom: "h_7"

bottom: "cont_8"

top: "h_conted_7"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_8"

type: "InnerProduct"

bottom: "h_conted_7"

top: "W_hc_h_7"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_8"

type: "Eltwise"

bottom: "W_hc_h_7"

bottom: "W_xc_x_8"

top: "gate_input_8"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_8"

type: "LSTMUnit"

bottom: "c_7"

bottom: "gate_input_8"

bottom: "cont_8"

top: "c_8"

top: "h_8"

}

layer {

name: "lstm2x_r2_h_conted_8"

type: "Scale"

bottom: "h_8"

bottom: "cont_9"

top: "h_conted_8"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_9"

type: "InnerProduct"

bottom: "h_conted_8"

top: "W_hc_h_8"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_9"

type: "Eltwise"

bottom: "W_hc_h_8"

bottom: "W_xc_x_9"

top: "gate_input_9"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_9"

type: "LSTMUnit"

bottom: "c_8"

bottom: "gate_input_9"

bottom: "cont_9"

top: "c_9"

top: "h_9"

}

layer {

name: "lstm2x_r2_h_conted_9"

type: "Scale"

bottom: "h_9"

bottom: "cont_10"

top: "h_conted_9"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_10"

type: "InnerProduct"

bottom: "h_conted_9"

top: "W_hc_h_9"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_10"

type: "Eltwise"

bottom: "W_hc_h_9"

bottom: "W_xc_x_10"

top: "gate_input_10"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_10"

type: "LSTMUnit"

bottom: "c_9"

bottom: "gate_input_10"

bottom: "cont_10"

top: "c_10"

top: "h_10"

}

layer {

name: "lstm2x_r2_h_conted_10"

type: "Scale"

bottom: "h_10"

bottom: "cont_11"

top: "h_conted_10"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_11"

type: "InnerProduct"

bottom: "h_conted_10"

top: "W_hc_h_10"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_11"

type: "Eltwise"

bottom: "W_hc_h_10"

bottom: "W_xc_x_11"

top: "gate_input_11"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_11"

type: "LSTMUnit"

bottom: "c_10"

bottom: "gate_input_11"

bottom: "cont_11"

top: "c_11"

top: "h_11"

}

layer {

name: "lstm2x_r2_h_conted_11"

type: "Scale"

bottom: "h_11"

bottom: "cont_12"

top: "h_conted_11"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_12"

type: "InnerProduct"

bottom: "h_conted_11"

top: "W_hc_h_11"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_12"

type: "Eltwise"

bottom: "W_hc_h_11"

bottom: "W_xc_x_12"

top: "gate_input_12"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_12"

type: "LSTMUnit"

bottom: "c_11"

bottom: "gate_input_12"

bottom: "cont_12"

top: "c_12"

top: "h_12"

}

layer {

name: "lstm2x_r2_h_conted_12"

type: "Scale"

bottom: "h_12"

bottom: "cont_13"

top: "h_conted_12"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_13"

type: "InnerProduct"

bottom: "h_conted_12"

top: "W_hc_h_12"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_13"

type: "Eltwise"

bottom: "W_hc_h_12"

bottom: "W_xc_x_13"

top: "gate_input_13"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_13"

type: "LSTMUnit"

bottom: "c_12"

bottom: "gate_input_13"

bottom: "cont_13"

top: "c_13"

top: "h_13"

}

layer {

name: "lstm2x_r2_h_conted_13"

type: "Scale"

bottom: "h_13"

bottom: "cont_14"

top: "h_conted_13"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_14"

type: "InnerProduct"

bottom: "h_conted_13"

top: "W_hc_h_13"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_14"

type: "Eltwise"

bottom: "W_hc_h_13"

bottom: "W_xc_x_14"

top: "gate_input_14"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_14"

type: "LSTMUnit"

bottom: "c_13"

bottom: "gate_input_14"

bottom: "cont_14"

top: "c_14"

top: "h_14"

}

layer {

name: "lstm2x_r2_h_conted_14"

type: "Scale"

bottom: "h_14"

bottom: "cont_15"

top: "h_conted_14"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_15"

type: "InnerProduct"

bottom: "h_conted_14"

top: "W_hc_h_14"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_15"

type: "Eltwise"

bottom: "W_hc_h_14"

bottom: "W_xc_x_15"

top: "gate_input_15"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_15"

type: "LSTMUnit"

bottom: "c_14"

bottom: "gate_input_15"

bottom: "cont_15"

top: "c_15"

top: "h_15"

}

layer {

name: "lstm2x_r2_h_conted_15"

type: "Scale"

bottom: "h_15"

bottom: "cont_16"

top: "h_conted_15"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_16"

type: "InnerProduct"

bottom: "h_conted_15"

top: "W_hc_h_15"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_16"

type: "Eltwise"

bottom: "W_hc_h_15"

bottom: "W_xc_x_16"

top: "gate_input_16"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_16"

type: "LSTMUnit"

bottom: "c_15"

bottom: "gate_input_16"

bottom: "cont_16"

top: "c_16"

top: "h_16"

}

layer {

name: "lstm2x_r2_h_conted_16"

type: "Scale"

bottom: "h_16"

bottom: "cont_17"

top: "h_conted_16"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_17"

type: "InnerProduct"

bottom: "h_conted_16"

top: "W_hc_h_16"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_17"

type: "Eltwise"

bottom: "W_hc_h_16"

bottom: "W_xc_x_17"

top: "gate_input_17"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_17"

type: "LSTMUnit"

bottom: "c_16"

bottom: "gate_input_17"

bottom: "cont_17"

top: "c_17"

top: "h_17"

}

layer {

name: "lstm2x_r2_h_conted_17"

type: "Scale"

bottom: "h_17"

bottom: "cont_18"

top: "h_conted_17"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_18"

type: "InnerProduct"

bottom: "h_conted_17"

top: "W_hc_h_17"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_18"

type: "Eltwise"

bottom: "W_hc_h_17"

bottom: "W_xc_x_18"

top: "gate_input_18"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_18"

type: "LSTMUnit"

bottom: "c_17"

bottom: "gate_input_18"

bottom: "cont_18"

top: "c_18"

top: "h_18"

}

layer {

name: "lstm2x_r2_h_conted_18"

type: "Scale"

bottom: "h_18"

bottom: "cont_19"

top: "h_conted_18"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_19"

type: "InnerProduct"

bottom: "h_conted_18"

top: "W_hc_h_18"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_19"

type: "Eltwise"

bottom: "W_hc_h_18"

bottom: "W_xc_x_19"

top: "gate_input_19"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_19"

type: "LSTMUnit"

bottom: "c_18"

bottom: "gate_input_19"

bottom: "cont_19"

top: "c_19"

top: "h_19"

}

layer {

name: "lstm2x_r2_h_conted_19"

type: "Scale"

bottom: "h_19"

bottom: "cont_20"

top: "h_conted_19"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_20"

type: "InnerProduct"

bottom: "h_conted_19"

top: "W_hc_h_19"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_20"

type: "Eltwise"

bottom: "W_hc_h_19"

bottom: "W_xc_x_20"

top: "gate_input_20"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_20"

type: "LSTMUnit"

bottom: "c_19"

bottom: "gate_input_20"

bottom: "cont_20"

top: "c_20"

top: "h_20"

}

layer {

name: "lstm2x_r2_h_conted_20"

type: "Scale"

bottom: "h_20"

bottom: "cont_21"

top: "h_conted_20"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_21"

type: "InnerProduct"

bottom: "h_conted_20"

top: "W_hc_h_20"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_21"

type: "Eltwise"

bottom: "W_hc_h_20"

bottom: "W_xc_x_21"

top: "gate_input_21"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_21"

type: "LSTMUnit"

bottom: "c_20"

bottom: "gate_input_21"

bottom: "cont_21"

top: "c_21"

top: "h_21"

}

layer {

name: "lstm2x_r2_h_conted_21"

type: "Scale"

bottom: "h_21"

bottom: "cont_22"

top: "h_conted_21"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_22"

type: "InnerProduct"

bottom: "h_conted_21"

top: "W_hc_h_21"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_22"

type: "Eltwise"

bottom: "W_hc_h_21"

bottom: "W_xc_x_22"

top: "gate_input_22"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_22"

type: "LSTMUnit"

bottom: "c_21"

bottom: "gate_input_22"

bottom: "cont_22"

top: "c_22"

top: "h_22"

}

layer {

name: "lstm2x_r2_h_conted_22"

type: "Scale"

bottom: "h_22"

bottom: "cont_23"

top: "h_conted_22"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_23"

type: "InnerProduct"

bottom: "h_conted_22"

top: "W_hc_h_22"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_23"

type: "Eltwise"

bottom: "W_hc_h_22"

bottom: "W_xc_x_23"

top: "gate_input_23"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_23"

type: "LSTMUnit"

bottom: "c_22"

bottom: "gate_input_23"

bottom: "cont_23"

top: "c_23"

top: "h_23"

}

layer {

name: "lstm2x_r2_h_conted_23"

type: "Scale"

bottom: "h_23"

bottom: "cont_24"

top: "h_conted_23"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_24"

type: "InnerProduct"

bottom: "h_conted_23"

top: "W_hc_h_23"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_24"

type: "Eltwise"

bottom: "W_hc_h_23"

bottom: "W_xc_x_24"

top: "gate_input_24"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_24"

type: "LSTMUnit"

bottom: "c_23"

bottom: "gate_input_24"

bottom: "cont_24"

top: "c_24"

top: "h_24"

}

layer {

name: "lstm2x_r2_h_conted_24"

type: "Scale"

bottom: "h_24"

bottom: "cont_25"

top: "h_conted_24"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_25"

type: "InnerProduct"

bottom: "h_conted_24"

top: "W_hc_h_24"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_25"

type: "Eltwise"

bottom: "W_hc_h_24"

bottom: "W_xc_x_25"

top: "gate_input_25"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_25"

type: "LSTMUnit"

bottom: "c_24"

bottom: "gate_input_25"

bottom: "cont_25"

top: "c_25"

top: "h_25"

}

layer {

name: "lstm2x_r2_h_conted_25"

type: "Scale"

bottom: "h_25"

bottom: "cont_26"

top: "h_conted_25"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_26"

type: "InnerProduct"

bottom: "h_conted_25"

top: "W_hc_h_25"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_26"

type: "Eltwise"

bottom: "W_hc_h_25"

bottom: "W_xc_x_26"

top: "gate_input_26"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_26"

type: "LSTMUnit"

bottom: "c_25"

bottom: "gate_input_26"

bottom: "cont_26"

top: "c_26"

top: "h_26"

}

layer {

name: "lstm2x_r2_h_conted_26"

type: "Scale"

bottom: "h_26"

bottom: "cont_27"

top: "h_conted_26"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_27"

type: "InnerProduct"

bottom: "h_conted_26"

top: "W_hc_h_26"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_27"

type: "Eltwise"

bottom: "W_hc_h_26"

bottom: "W_xc_x_27"

top: "gate_input_27"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_27"

type: "LSTMUnit"

bottom: "c_26"

bottom: "gate_input_27"

bottom: "cont_27"

top: "c_27"

top: "h_27"

}

layer {

name: "lstm2x_r2_h_conted_27"

type: "Scale"

bottom: "h_27"

bottom: "cont_28"

top: "h_conted_27"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_28"

type: "InnerProduct"

bottom: "h_conted_27"

top: "W_hc_h_27"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_28"

type: "Eltwise"

bottom: "W_hc_h_27"

bottom: "W_xc_x_28"

top: "gate_input_28"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_28"

type: "LSTMUnit"

bottom: "c_27"

bottom: "gate_input_28"

bottom: "cont_28"

top: "c_28"

top: "h_28"

}

layer {

name: "lstm2x_r2_h_conted_28"

type: "Scale"

bottom: "h_28"

bottom: "cont_29"

top: "h_conted_28"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_29"

type: "InnerProduct"

bottom: "h_conted_28"

top: "W_hc_h_28"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_29"

type: "Eltwise"

bottom: "W_hc_h_28"

bottom: "W_xc_x_29"

top: "gate_input_29"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_29"

type: "LSTMUnit"

bottom: "c_28"

bottom: "gate_input_29"

bottom: "cont_29"

top: "c_29"

top: "h_29"

}

layer {

name: "lstm2x_r2_h_conted_29"

type: "Scale"

bottom: "h_29"

bottom: "cont_30"

top: "h_conted_29"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_30"

type: "InnerProduct"

bottom: "h_conted_29"

top: "W_hc_h_29"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_30"

type: "Eltwise"

bottom: "W_hc_h_29"

bottom: "W_xc_x_30"

top: "gate_input_30"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_30"

type: "LSTMUnit"

bottom: "c_29"

bottom: "gate_input_30"

bottom: "cont_30"

top: "c_30"

top: "h_30"

}

layer {

name: "lstm2x_r2_h_conted_30"

type: "Scale"

bottom: "h_30"

bottom: "cont_31"

top: "h_conted_30"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_31"

type: "InnerProduct"

bottom: "h_conted_30"

top: "W_hc_h_30"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_31"

type: "Eltwise"

bottom: "W_hc_h_30"

bottom: "W_xc_x_31"

top: "gate_input_31"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_31"

type: "LSTMUnit"

bottom: "c_30"

bottom: "gate_input_31"

bottom: "cont_31"

top: "c_31"

top: "h_31"

}

layer {

name: "lstm2x_r2_h_conted_31"

type: "Scale"

bottom: "h_31"

bottom: "cont_32"

top: "h_conted_31"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_32"

type: "InnerProduct"

bottom: "h_conted_31"

top: "W_hc_h_31"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_32"

type: "Eltwise"

bottom: "W_hc_h_31"

bottom: "W_xc_x_32"

top: "gate_input_32"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_32"

type: "LSTMUnit"

bottom: "c_31"

bottom: "gate_input_32"

bottom: "cont_32"

top: "c_32"

top: "h_32"

}

layer {

name: "lstm2x_r2_h_conted_32"

type: "Scale"

bottom: "h_32"

bottom: "cont_33"

top: "h_conted_32"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_33"

type: "InnerProduct"

bottom: "h_conted_32"

top: "W_hc_h_32"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_33"

type: "Eltwise"

bottom: "W_hc_h_32"

bottom: "W_xc_x_33"

top: "gate_input_33"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_33"

type: "LSTMUnit"

bottom: "c_32"

bottom: "gate_input_33"

bottom: "cont_33"

top: "c_33"

top: "h_33"

}

layer {

name: "lstm2x_r2_h_conted_33"

type: "Scale"

bottom: "h_33"

bottom: "cont_34"

top: "h_conted_33"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_34"

type: "InnerProduct"

bottom: "h_conted_33"

top: "W_hc_h_33"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_34"

type: "Eltwise"

bottom: "W_hc_h_33"

bottom: "W_xc_x_34"

top: "gate_input_34"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_34"

type: "LSTMUnit"

bottom: "c_33"

bottom: "gate_input_34"

bottom: "cont_34"

top: "c_34"

top: "h_34"

}

layer {

name: "lstm2x_r2_h_conted_34"

type: "Scale"

bottom: "h_34"

bottom: "cont_35"

top: "h_conted_34"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_35"

type: "InnerProduct"

bottom: "h_conted_34"

top: "W_hc_h_34"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_35"

type: "Eltwise"

bottom: "W_hc_h_34"

bottom: "W_xc_x_35"

top: "gate_input_35"

eltwise_param {

operation: SUM

}

}

layer {

name: "lstm2x_r2_unit_35"

type: "LSTMUnit"

bottom: "c_34"

bottom: "gate_input_35"

bottom: "cont_35"

top: "c_35"

top: "h_35"

}

layer {

name: "lstm2x_r2_h_conted_35"

type: "Scale"

bottom: "h_35"

bottom: "cont_36"

top: "h_conted_35"

scale_param {

axis: 0

}

}

layer {

name: "lstm2x_r2_transform_36"

type: "InnerProduct"

bottom: "h_conted_35"

top: "W_hc_h_35"

param {

name: "W_hc"

}

inner_product_param {

num_output: 400

bias_term: false

weight_filler {

type: "xavier"

}

axis: 2

}

}

layer {

name: "lstm2x_r2_gate_input_36"

type: "Eltwise"

bottom: "W_hc_h_35"

bottom: "W_xc_x_36"

top: "gate_input_36"

eltwise_param {

operation: SUM

}

}