CUDA Programming, Part 1

References for this post:

A reading guide to the "CUDA C Programming Guide": https://zhuanlan.zhihu.com/p/53773183?from_voters_page=true

```cpp
/* main.cu */
#include <cstdio>
#include <iostream>
#include <time.h>
#include "opencv2/highgui.hpp"
#include "opencv2/opencv.hpp"

using namespace cv;
using namespace std;

// Kernel function: one thread per pixel.
// imread() returns pixels in BGR order, so x = B, y = G, z = R.
__global__ void rgb2grayincuda(uchar3 * const d_in, unsigned char * const d_out,
                               uint imgheight, uint imgwidth)
{
    const unsigned int idx = blockIdx.x * blockDim.x + threadIdx.x;
    const unsigned int idy = blockIdx.y * blockDim.y + threadIdx.y;

    if (idx < imgwidth && idy < imgheight)  // some threads land outside the image; they simply do nothing
    {
        uchar3 bgr = d_in[idy * imgwidth + idx];
        d_out[idy * imgwidth + idx] = 0.114f * bgr.x + 0.587f * bgr.y + 0.299f * bgr.z;
    }
}

// Serial CPU version, for comparison (same BGR layout: [0] = B, [1] = G, [2] = R)
void rgb2grayincpu(unsigned char * const d_in, unsigned char * const d_out,
                   uint imgheight, uint imgwidth)
{
    for (uint i = 0; i < imgheight; i++)
    {
        for (uint j = 0; j < imgwidth; j++)
        {
            d_out[i * imgwidth + j] = 0.114f * d_in[(i * imgwidth + j) * 3]
                                    + 0.587f * d_in[(i * imgwidth + j) * 3 + 1]
                                    + 0.299f * d_in[(i * imgwidth + j) * 3 + 2];
        }
    }
}

int main(void)
{
    Mat srcImage = imread("/data_2/dog2.jpg");
    if (srcImage.empty())
    {
        cerr << "could not read /data_2/dog2.jpg" << endl;
        return -1;
    }
    imshow("srcImage", srcImage);
    waitKey(0);

    const uint imgheight = srcImage.rows;
    const uint imgwidth = srcImage.cols;
    Mat grayImage(imgheight, imgwidth, CV_8UC1, Scalar(0));

    uchar3 *d_in;          // vector type: 3 uchars per pixel
    unsigned char *d_out;

    // First allocate memory on the GPU
    cudaMalloc((void**)&d_in, imgheight * imgwidth * sizeof(uchar3));
    cudaMalloc((void**)&d_out, imgheight * imgwidth * sizeof(unsigned char));

    // Copy the host data to the GPU (a Mat freshly returned by imread() is continuous)
    cudaMemcpy(d_in, srcImage.data, imgheight * imgwidth * sizeof(uchar3), cudaMemcpyHostToDevice);

    // One thread per pixel; the grid is rounded up so partial blocks cover the edges
    dim3 threadsPerBlock(32, 32);
    dim3 blocksPerGrid((imgwidth + threadsPerBlock.x - 1) / threadsPerBlock.x,
                       (imgheight + threadsPerBlock.y - 1) / threadsPerBlock.y);

    clock_t start, end;
    start = clock();

#if 0 // cuda (set this to 1 and the cpu block to 0 to time the GPU path)
    // Launch the kernel
    rgb2grayincuda<<<blocksPerGrid, threadsPerBlock>>>(d_in, d_out, imgheight, imgwidth);
    // Kernel launches are asynchronous, so synchronize before reading the clock
    cudaDeviceSynchronize();
    end = clock();
    printf("cuda exec time is %.8f\n", (double)(end - start) / CLOCKS_PER_SEC);

    // Copy the result back
    cudaMemcpy(grayImage.data, d_out, imgheight * imgwidth * sizeof(unsigned char), cudaMemcpyDeviceToHost);
#endif

#if 1 // cpu
    rgb2grayincpu(srcImage.data, grayImage.data, imgheight, imgwidth);
    end = clock();
    printf("cpu exec time is %.8f\n", (double)(end - start) / CLOCKS_PER_SEC);
#endif

    // Free device memory outside the #if blocks, so the CPU path does not leak it
    cudaFree(d_in);
    cudaFree(d_out);

    imshow("grayImage", grayImage);
    waitKey(0);
    return 0;
}
```
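blocksPerGrid is rounded up, so when the image width or height is not a multiple of 32 the grid contains threads whose (idx, idy) falls outside the image; the bounds check in rgb2grayincuda is what keeps them from writing out of range. The host-only sketch below replays that index arithmetic on the CPU to count how many threads actually do work. It launches nothing on the GPU, and divUp and countInBoundsThreads are hypothetical helper names, not part of CUDA.

```cuda
// Host-only sketch: replays rgb2grayincuda's index arithmetic on the CPU.
// divUp and countInBoundsThreads are hypothetical helper names.
#include <cassert>

// Ceiling division, same as (imgwidth + threadsPerBlock.x - 1) / threadsPerBlock.x
unsigned divUp(unsigned n, unsigned block)
{
    return (n + block - 1) / block;
}

// Visits every (block, thread) pair a 2D launch would create and counts the
// threads that pass the kernel's `idx < imgwidth && idy < imgheight` test.
unsigned long long countInBoundsThreads(unsigned imgwidth, unsigned imgheight,
                                        unsigned bx, unsigned by)
{
    const unsigned gx = divUp(imgwidth, bx);
    const unsigned gy = divUp(imgheight, by);
    unsigned long long inBounds = 0;
    for (unsigned blockY = 0; blockY < gy; ++blockY)
        for (unsigned blockX = 0; blockX < gx; ++blockX)
            for (unsigned ty = 0; ty < by; ++ty)
                for (unsigned tx = 0; tx < bx; ++tx)
                {
                    const unsigned idx = blockX * bx + tx;  // blockIdx.x * blockDim.x + threadIdx.x
                    const unsigned idy = blockY * by + ty;  // blockIdx.y * blockDim.y + threadIdx.y
                    if (idx < imgwidth && idy < imgheight)
                        ++inBounds;
                }
    return inBounds;
}
```

For an arbitrary 500 × 375 image and 32 × 32 blocks, divUp gives a 16 × 12 grid, i.e. 192 blocks and 196,608 threads, of which exactly 187,500 (one per pixel) pass the bounds check. On the GPU these threads run in parallel and in no guaranteed order; the CPU loop only reproduces which pixel each one maps to.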

```cmake
# CMakeLists.txt
cmake_minimum_required(VERSION 2.8)
project(testcuda)

find_package(CUDA REQUIRED)
find_package(OpenCV REQUIRED)

include_directories(${OpenCV_INCLUDE_DIRS})  # set by find_package(OpenCV)

cuda_add_executable(testcuda main.cu)
target_link_libraries(testcuda ${OpenCV_LIBS})
```

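Measured times, in seconds (the timed region covers only the conversion itself, not the cudaMemcpy transfers):

CUDA run: cuda exec time is 0.00005800

CPU run: cpu exec time is 0.00115700

In this run the kernel was roughly 20× faster than the serial CPU loop.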
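The figures above come from clock(), which reports processor time used by the host process rather than elapsed GPU time, and each is a single run. CUDA events record timestamps on the GPU stream itself, which is the usual way to time a kernel. A minimal sketch, assuming the same BGR input layout as main.cu; gray_kernel and timeGrayKernelMs are hypothetical names.

```cuda
// Sketch: timing one kernel launch with CUDA events instead of clock().
// gray_kernel mirrors rgb2grayincuda; both function names are hypothetical.
#include <cassert>
#include <cuda_runtime.h>

__global__ void gray_kernel(const uchar3* in, unsigned char* out,
                            unsigned height, unsigned width)
{
    const unsigned x = blockIdx.x * blockDim.x + threadIdx.x;
    const unsigned y = blockIdx.y * blockDim.y + threadIdx.y;
    if (x < width && y < height)
    {
        const uchar3 p = in[y * width + x];  // p.x = B, p.y = G, p.z = R
        out[y * width + x] = 0.114f * p.x + 0.587f * p.y + 0.299f * p.z;
    }
}

// Returns the GPU time of one launch in milliseconds,
// or -1 if the launch (or an earlier call) left an error.
float timeGrayKernelMs(const uchar3* d_in, unsigned char* d_out,
                       unsigned height, unsigned width)
{
    dim3 block(32, 32);
    dim3 grid((width + block.x - 1) / block.x, (height + block.y - 1) / block.y);

    cudaEvent_t start, stop;
    cudaEventCreate(&start);
    cudaEventCreate(&stop);

    cudaEventRecord(start);                  // recorded on the default stream
    gray_kernel<<<grid, block>>>(d_in, d_out, height, width);
    cudaEventRecord(stop);
    cudaEventSynchronize(stop);              // block until the kernel and `stop` complete

    float ms = -1.0f;
    if (cudaGetLastError() == cudaSuccess)
        cudaEventElapsedTime(&ms, start, stop);

    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    return ms;
}
```

In main.cu this would take the place of the clock() pair around the launch. Because the first launch can include one-time setup cost, running the kernel once before measuring and averaging several launches tends to give steadier numbers.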

Example 2: querying GPU device properties

Reference:

https://zhuanlan.zhihu.com/p/34587739

```cpp
#include <iostream>
#include <cuda_runtime.h>

int main(void)
{
    int dev = 0;
    cudaDeviceProp devProp;

    cudaError_t err = cudaGetDeviceProperties(&devProp, dev);
    if (err != cudaSuccess)
    {
        std::cerr << "cudaGetDeviceProperties failed: " << cudaGetErrorString(err) << std::endl;
        return 1;
    }

    std::cout << "Using GPU device " << dev << ": " << devProp.name << std::endl;
    std::cout << "Number of SMs: " << devProp.multiProcessorCount << std::endl;
    std::cout << "Shared memory per block: " << devProp.sharedMemPerBlock / 1024.0 << " KB" << std::endl;
    std::cout << "Max threads per block: " << devProp.maxThreadsPerBlock << std::endl;
    std::cout << "Max threads per SM: " << devProp.maxThreadsPerMultiProcessor << std::endl;
    std::cout << "Max warps per SM: " << devProp.maxThreadsPerMultiProcessor / devProp.warpSize << std::endl;
    return 0;
}
```

Output:

```
Using GPU device 0: GeForce GTX 1080
Number of SMs: 20
Shared memory per block: 48 KB
Max threads per block: 1024
Max threads per SM: 2048
Max warps per SM: 64
```
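These limits bound how many of the first example's 32 × 32 blocks (1024 threads each) can be resident on one SM at a time: by thread count alone, 2048 / 1024 = 2 blocks, i.e. 64 warps. Register and shared-memory usage can lower that further, and the runtime function cudaOccupancyMaxActiveBlocksPerMultiprocessor takes them into account. A sketch, assuming a trivial placeholder kernel; blocksPerSmByThreads, kernelBlocksPerSm and dummy_kernel are hypothetical names.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// Upper bound from thread counts only; ignores registers, shared memory,
// and the per-SM limit on resident blocks. (Hypothetical helper.)
int blocksPerSmByThreads(int maxThreadsPerSM, int threadsPerBlock)
{
    return maxThreadsPerSM / threadsPerBlock;
}

// Placeholder kernel, used only for the occupancy query.
__global__ void dummy_kernel(float* p)
{
    if (p) p[threadIdx.x] = 0.0f;
}

// Asks the runtime, which also accounts for the kernel's register and
// shared-memory needs. Returns -1 on error. (Hypothetical helper.)
int kernelBlocksPerSm(int threadsPerBlock)
{
    int numBlocks = 0;
    if (cudaOccupancyMaxActiveBlocksPerMultiprocessor(&numBlocks, dummy_kernel,
                                                      threadsPerBlock, 0) != cudaSuccess)
        return -1;
    return numBlocks;
}
```

With the GTX 1080 values above, blocksPerSmByThreads(2048, 32 * 32) is 2. A real kernel that uses many registers per thread could get fewer, which is one reason 16 × 16 (256-thread) blocks are also a common choice.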

CUDA programming, a 10-post blog series: "深入浅出谈CUDA" (an accessible, in-depth introduction to CUDA)

https://blog.csdn.net/sunmc1204953974/category_6156113.html

-------------------------------------------------------CUDA by Example (Chinese edition: GPU高性能编程CUDA实战)---------------------------------

=start=chapter03=====

//CMakeLists.txt

```cmake
CMAKE_MINIMUM_REQUIRED(VERSION 3.1)

find_package(CUDA)
find_package(OpenCV REQUIRED)

include_directories(${OpenCV_INCLUDE_DIRS})  # set by find_package(OpenCV)
link_directories(${OpenCV_LIBRARIES_DIRS})

CUDA_ADD_EXECUTABLE(enum_gpu enum_gpu.cu)
SET_PROPERTY(TARGET enum_gpu PROPERTY FOLDER chapter03)

CUDA_ADD_EXECUTABLE(hello_world hello_world.cu)
SET_PROPERTY(TARGET hello_world PROPERTY FOLDER chapter03)

CUDA_ADD_EXECUTABLE(set_gpu set_gpu.cu)
SET_PROPERTY(TARGET set_gpu PROPERTY FOLDER chapter03)

CUDA_ADD_EXECUTABLE(simple_device_call simple_device_call.cu)
SET_PROPERTY(TARGET simple_device_call PROPERTY FOLDER chapter03)

CUDA_ADD_EXECUTABLE(simple_kernel_params simple_kernel_params.cu)
SET_PROPERTY(TARGET simple_kernel_params PROPERTY FOLDER chapter03)

CUDA_ADD_EXECUTABLE(simple_kernel simple_kernel.cu)
SET_PROPERTY(TARGET simple_kernel PROPERTY FOLDER chapter03)
```

//hello_world.cu

```cpp
/*
 * Copyright 1993-2010 NVIDIA Corporation.  All rights reserved.
 *
 * NVIDIA Corporation and its licensors retain all intellectual property and
 * proprietary rights in and to this software and related documentation.
 * Any use, reproduction, disclosure, or distribution of this software
 * and related documentation without an express license agreement from
 * NVIDIA Corporation is strictly prohibited.
 *
 * Please refer to the applicable NVIDIA end user license agreement (EULA)
 * associated with this source code for terms and conditions that govern
 * your use of this NVIDIA software.
 *
 */

#include "../common/book.h"

int main( void ) {
    printf( "Hello, World!\n" );
    return 0;
}
```

//simple_kernel.cu


```cpp
#include "../common/book.h"

__global__ void kernel( void ) {
    printf( "77Hello, World!\n" );
}

int main( void ) {
    kernel<<<1,1>>>();
    printf( "Hello, World!\n" );
    return 0;
}
```

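```
/data_2/tmp_paper/cuda/cuda_by_example-master/chapter03/cmake-build-debug/simple_kernel
Hello, World!

Process finished with exit code 0
```

Only the host's "Hello, World!" appears. The kernel launch is asynchronous, and device-side printf output is only flushed to the host at synchronization points such as cudaDeviceSynchronize(); main() returns before reaching any of them, so "77Hello, World!" is never printed.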
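A minimal sketch of the fix: wait for the kernel before returning. launchHelloAndWait is a hypothetical helper name; calling it from main() before the host printf should make both lines appear.

```cuda
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

__global__ void kernel(void)
{
    printf("77Hello, World!\n");  // device-side printf, buffered on the GPU
}

// Hypothetical helper: launches the kernel and waits for it to finish,
// which also flushes the device printf buffer. Returns the first error seen.
cudaError_t launchHelloAndWait(void)
{
    kernel<<<1, 1>>>();
    cudaError_t err = cudaGetLastError();  // errors in the launch itself
    if (err != cudaSuccess)
        return err;
    return cudaDeviceSynchronize();        // errors raised while the kernel ran
}
```

Returning the cudaError_t instead of ignoring it lets the caller tell a missing or misconfigured device apart from a kernel that simply printed nothing.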

//simple_kernel_params.cu


```cpp
#include "../common/book.h"

__global__ void add( int a, int b, int *c ) {
    *c = a + b;
}

int main( void ) {
    int c;
    int *dev_c;
    HANDLE_ERROR( cudaMalloc( (void**)&dev_c, sizeof(int) ) );

    add<<<1,1>>>( 2, 7, dev_c );

    HANDLE_ERROR( cudaMemcpy( &c, dev_c, sizeof(int),
                              cudaMemcpyDeviceToHost ) );
    printf( "2 + 7 = %d\n", c );
    HANDLE_ERROR( cudaFree( dev_c ) );

    return 0;
}
```

```
/data_2/tmp_paper/cuda/cuda_by_example-master/chapter03/cmake-build-debug/simple_kernel_params
2 + 7 = 9

Process finished with exit code 0
```
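HANDLE_ERROR is defined in ../common/book.h, which is not reproduced here. Below is a stand-in sketch with the same intent under the hypothetical name CHECK_CUDA (addOnDevice is also hypothetical). It additionally checks the kernel launch, which HANDLE_ERROR cannot wrap directly because a <<<>>> launch returns no error code; cudaGetLastError() reports launch errors instead.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical stand-in for the book's HANDLE_ERROR:
// print the error string with file and line, then exit.
#define CHECK_CUDA(call)                                              \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess) {                                    \
            fprintf(stderr, "%s in %s at line %d\n",                  \
                    cudaGetErrorString(err_), __FILE__, __LINE__);    \
            exit(EXIT_FAILURE);                                       \
        }                                                             \
    } while (0)

__global__ void add(int a, int b, int* c)
{
    *c = a + b;
}

// Same flow as simple_kernel_params.cu, with the launch checked as well.
int addOnDevice(int a, int b)
{
    int c = 0;
    int* dev_c = nullptr;
    CHECK_CUDA(cudaMalloc((void**)&dev_c, sizeof(int)));
    add<<<1, 1>>>(a, b, dev_c);
    CHECK_CUDA(cudaGetLastError());  // launch-configuration errors
    CHECK_CUDA(cudaMemcpy(&c, dev_c, sizeof(int), cudaMemcpyDeviceToHost));  // also waits for the kernel
    CHECK_CUDA(cudaFree(dev_c));
    return c;
}
```

The do { ... } while (0) wrapper makes the macro behave like a single statement, so it is safe inside an unbraced if/else.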

//simple_device_call.cu


```cpp
#include "../common/book.h"

__device__ int addem( int a, int b ) {
    return a + b;
}

__global__ void add( int a, int b, int *c ) {
    *c = addem( a, b );
}

int main( void ) {
    int c;
    int *dev_c;
    HANDLE_ERROR( cudaMalloc( (void**)&dev_c, sizeof(int) ) );

    add<<<1,1>>>( 2, 7, dev_c );

    HANDLE_ERROR( cudaMemcpy( &c, dev_c, sizeof(int),
                              cudaMemcpyDeviceToHost ) );
    printf( "2 + 7 = %d\n", c );
    HANDLE_ERROR( cudaFree( dev_c ) );

    return 0;
}
```

```
/data_2/tmp_paper/cuda/cuda_by_example-master/chapter03/cmake-build-debug/simple_device_call
2 + 7 = 9

Process finished with exit code 0
```
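In simple_device_call.cu, addem is marked __device__ only, so it can be called from kernels but not from host code. If the same helper is needed on both sides, it can be marked __host__ __device__ and nvcc compiles it for both. A minimal sketch; addOnHost is a hypothetical name.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// __host__ __device__: compiled for both the CPU and the GPU.
__host__ __device__ int addem(int a, int b)
{
    return a + b;
}

// Device-side caller, as in simple_device_call.cu.
__global__ void add(int a, int b, int* c)
{
    *c = addem(a, b);
}

// Host-side caller (hypothetical): runs on the CPU, no launch or device memory.
int addOnHost(int a, int b)
{
    return addem(a, b);
}
```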

//enum_gpu.cu


#include "../common/book.h"

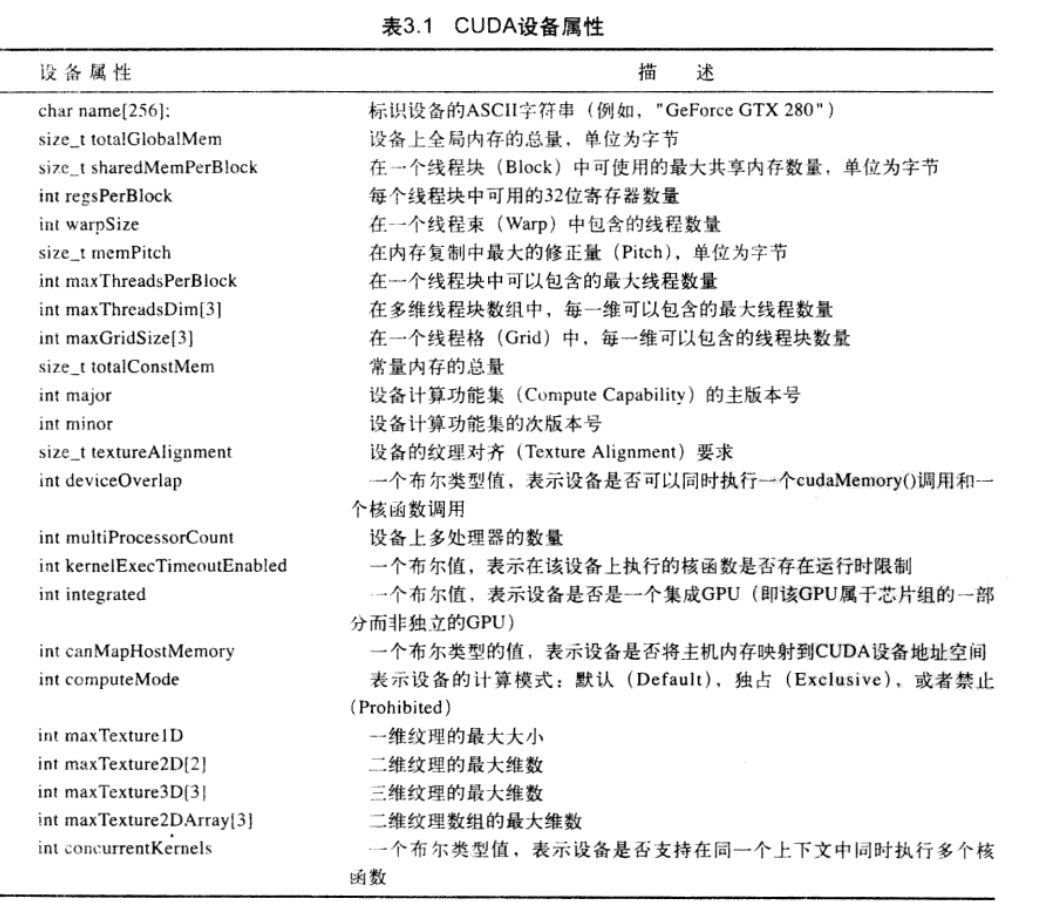

int main( void ) {

cudaDeviceProp prop;

int count;

HANDLE_ERROR( cudaGetDeviceCount( &count ) );

for (int i=0; i< count; i++) {

HANDLE_ERROR( cudaGetDeviceProperties( &prop, i ) );

printf( " --- General Information for device %d ---\n", i );

printf( "Name: %s\n", prop.name );

printf( "Compute capability: %d.%d\n", prop.major, prop.minor );

printf( "Clock rate: %d\n", prop.clockRate );

printf( "Device copy overlap: " );

if (prop.deviceOverlap)

printf( "Enabled\n" );

else

printf( "Disabled\n");

printf( "Kernel execution timeout : " );

if (prop.kernelExecTimeoutEnabled)

printf( "Enabled\n" );

else

printf( "Disabled\n" );

printf( " --- Memory Information for device %d ---\n", i );

printf( "Total global mem: %ld\n", prop.totalGlobalMem );

printf( "Total constant Mem: %ld\n", prop.totalConstMem );

printf( "Max mem pitch: %ld\n", prop.memPitch );

printf( "Texture Alignment: %ld\n", prop.textureAlignment );

printf( " --- MP Information for device %d ---\n", i );

printf( "Multiprocessor count: %d\n",

prop.multiProcessorCount );

printf( "Shared mem per mp: %ld\n", prop.sharedMemPerBlock );

printf( "Registers per mp: %d\n", prop.regsPerBlock );

printf( "Threads in warp: %d\n", prop.warpSize );

printf( "Max threads per block: %d\n",

prop.maxThreadsPerBlock );

printf( "Max thread dimensions: (%d, %d, %d)\n",

prop.maxThreadsDim[0], prop.maxThreadsDim[1],

prop.maxThreadsDim[2] );

printf( "Max grid dimensions: (%d, %d, %d)\n",

prop.maxGridSize[0], prop.maxGridSize[1],

prop.maxGridSize[2] );

printf( "\n" );

}

}

```
 --- General Information for device 0 ---
Name: GeForce GTX 1080
Compute capability: 6.1
Clock rate: 1809500
Device copy overlap: Enabled
Kernel execution timeout : Enabled
 --- Memory Information for device 0 ---
Total global mem: 8510701568
Total constant Mem: 65536
Max mem pitch: 2147483647
Texture Alignment: 512
 --- MP Information for device 0 ---
Multiprocessor count: 20
Shared mem per mp: 49152
Registers per mp: 65536
Threads in warp: 32
Max threads per block: 1024
Max thread dimensions: (1024, 1024, 64)
Max grid dimensions: (2147483647, 65535, 65535)

Process finished with exit code 0
```
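The raw values above are in the units cudaDeviceProp uses: totalGlobalMem is in bytes and clockRate is in kilohertz. So 8510701568 bytes is about 7.93 GiB, and 1809500 kHz is about 1.81 GHz. A small conversion sketch; bytesToGiB, kHzToGHz and printGlobalMem are hypothetical helper names.

```cuda
#include <cassert>
#include <cstddef>
#include <cstdio>

// totalGlobalMem is reported in bytes, clockRate in kilohertz.
double bytesToGiB(size_t bytes) { return bytes / (1024.0 * 1024.0 * 1024.0); }
double kHzToGHz(int kHz)        { return kHz / 1.0e6; }

// %zu is the portable printf conversion for size_t; %ld only matches
// on platforms where size_t happens to have the same width as long.
void printGlobalMem(size_t bytes)
{
    printf("Total global mem: %zu bytes (%.2f GiB)\n", bytes, bytesToGiB(bytes));
}
```

Calling printGlobalMem(prop.totalGlobalMem) inside the enum_gpu loop prints the byte count and the GiB value on one line.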

//set_gpu.cu


```cpp
#include "../common/book.h"

int main( void ) {
    cudaDeviceProp prop;
    int dev;

    HANDLE_ERROR( cudaGetDevice( &dev ) );
    printf( "ID of current CUDA device: %d\n", dev );

    memset( &prop, 0, sizeof( cudaDeviceProp ) );
    prop.major = 1;
    prop.minor = 3;
    HANDLE_ERROR( cudaChooseDevice( &dev, &prop ) );
    printf( "ID of CUDA device closest to revision 1.3: %d\n", dev );

    HANDLE_ERROR( cudaSetDevice( dev ) );
}
```

```
/data_2/tmp_paper/cuda/cuda_by_example-master/chapter03/cmake-build-debug/set_gpu
ID of current CUDA device: 0
ID of CUDA device closest to revision 1.3: 0

Process finished with exit code 0
```
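cudaChooseDevice returns the device whose properties best match the fields set in prop; the machine above reports a single device, so the answer can only be 0. When a program wants a specific criterion instead, it can enumerate the devices itself. The sketch below picks the device with the most SMs; that criterion and the name pickDeviceWithMostSMs are illustrative assumptions, not what cudaChooseDevice does.

```cuda
#include <cassert>
#include <cuda_runtime.h>

// Hypothetical helper: returns the index of the device with the most SMs,
// or -1 if no CUDA device is available.
int pickDeviceWithMostSMs(void)
{
    int count = 0;
    if (cudaGetDeviceCount(&count) != cudaSuccess || count == 0)
        return -1;

    int best = -1, bestSMs = -1;
    for (int i = 0; i < count; ++i)
    {
        cudaDeviceProp prop;
        if (cudaGetDeviceProperties(&prop, i) != cudaSuccess)
            continue;
        if (prop.multiProcessorCount > bestSMs)
        {
            bestSMs = prop.multiProcessorCount;
            best = i;
        }
    }
    return best;
}
```

Usage: `int dev = pickDeviceWithMostSMs(); if (dev >= 0) cudaSetDevice(dev);` selects that device for the calls that follow on this host thread.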

=end=chapter03=====